Real-time Visualization of Abstract Relationships

Between Devices

by

Arjun Ramegowda Narayanswamy

Submitted to the Department of Electrical Engineering and Computer

Science

in partial fulfillment of the requirements for the degree of

Master of Engineering in Computer Science and Engineering

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2003

© Arjun Ramegowda Narayanswamy, MMIII. All rights reserved.

The author hereby grants to MIT permission to reproduce and

distribute publicly paper and electronic copies of this thesis document

in whole or in part.


Author
Department of Electrical Engineering and Computer Science
June 20, 2003

Certified by
Lawrence Rudolph
Principal Scientist
Thesis Supervisor

Accepted by
Arthur C. Smith
Chairman, Department Committee on Graduate Students


Real-time Visualization of Abstract Relationships Between

Devices

by

Arjun Ramegowda Narayanswamy

Submitted to the Department of Electrical Engineering and Computer Science

on June 20, 2003, in partial fulfillment of the

requirements for the degree of

Master of Engineering in Computer Science and Engineering

Abstract

In the near future, the living space of the user will be populated by many intercommunicating devices. We are motivated by the high-level vision of exposing the relationships between these devices to the user so that they may be inspected and modified in real time. We have identified three sub-problems of this vision that we tackle in this thesis. These are (1) the design of a directional device identification system, (2) the design and implementation of a rest-detection system, and (3) an evaluation of the tilt-meters of the Mercury backpack. We have established five criteria for evaluating directional identification systems. We propose a new tag system, based on the principles of directional query, broadcast response and photo-triggered, powered tags, that meets these criteria. We have also designed and implemented a more robust rest-detection system by augmenting traditional tilt-meter readings with video data. The system is implemented as a library and an API in Linux to facilitate easy integration into applications. A sample application of rest-detection to Cricket beacon estimates is presented. Finally, we conclude our empirical analysis of the Mercury backpack tilt-meters with the discovery of a set of anomalies that can direct further development and debugging of these devices.

Thesis Supervisor: Lawrence Rudolph

Title: Principal Scientist


Acknowledgments

I would like to thank Larry Rudolph - for support and for freedom. Larry has been

kind enough to stand back this year and give me the opportunity to shape and define

my thesis on my own. I could walk in to his office at any time and argue any point

of any argument with him. If this thesis seems at all to make any sense, it's because

Larry has extensively revised and clarified my thinking. All flaws of argument are

mine, all clarity of thought is his.

On the 6th floor of L.C.S., I'd like to thank Kenneth Steele, Eugene Weinstein

and Jason Waterman. Thanks to Eugene and Jason for helping me get up to speed

on iPaqs, ipackages and Familiar Linux issues.

Ken has been a marvel for his

unflagging patience with flagging hardware. I'm especially grateful to Ken for his

generous support in my tilts at tilt-meters. I've made extensive use of his time and

his patience.

Back in NE43-218, thanks to my lab-buddies for all their support. Todd Amicon, Jonathan 'Mr Numbers' Brunsman, Glenn 'The Weasel' Eguchi, Sonia 'One-eye'

Garg, Hana 'Miss Demeanour' Kim, Kan 'Hammerhead' Liu and Jorge Ortiz. Lab

has never been as fun before, and lab will never be as fun afterwards. I would also

like to thank Shelly Mae O'Gilve and Debbie Wan for their efforts to get me playing

with solder, bread-boards and photoresistors.

To my friends, especially Vikram Aditya Siddharth - thank you for standing by

me at this time. You were there when I worked, and you were there when I didn't

want to work. Thanks to Jennifer Huang, Buddhika Kottahachchi, Judy Y Chen,

Nora Szasz, and my long-suffering room-mate Shastri Sandy. To the BC Crew, the

Bangaloreans, the Indians, the Caribbeans and the Assorted- this has been a great

year of my life and thanks for helping it be that way.

To my family; father, sister and my inspirational mother Aruna. Not a single step

of the long, long road that's led me here could have been taken without your love,

your support and your blessings. A Master's degree from MIT - who would have

believed it? And how many long-distance phone calls has it been? Thank you so


much, once again, for always, always being with me.

Finally, to God, to whom I owe everything including the love and friendship of all

the people above.


Contents

1 Introduction
    1.1 Overview

2 Directional Device Identification
    2.1 Introduction
        2.1.1 Speech and Pointing
    2.2 Chapter Overview
    2.3 Existing Device Identification Technologies
    2.4 Evaluation of Directional Identification Systems
        2.4.1 Aesthetic Impact
        2.4.2 Cost of Tags
        2.4.3 Differentiating between Identification Systems
    2.5 The "Right" Technology
    2.6 A 'Point-and-Shout' System
    2.7 Conclusion and Future Work

3 Video-Enhanced Multi-modal Rest Detection
    3.1 Introduction
    3.2 Chapter Overview
    3.3 Previous Work
    3.4 Challenges
        3.4.1 Complexity and Cost
        3.4.2 Tilt-Meters
    3.5 A Video-Enhanced Approach
        3.5.1 Statistical Measures
        3.5.2 Experiments
        3.5.3 Observations
    3.6 Integration of Video and Tilt
        3.6.1 Case: ΔIMAGE low, ΔTILT high
        3.6.2 Case: ΔIMAGE high, ΔTILT low
        3.6.3 Algorithm
    3.7 Implementation
        3.7.1 Goals
        3.7.2 Software Architecture
    3.8 Results
        3.8.1 Case Study: Crickets and CricketNav
        3.8.2 A Simple Rest-Aware Cricket Distance Estimator
    3.9 Conclusion and Future Work

4 Static Analysis of Mercury Backpack Tilt-meter
    4.1 Motivation
    4.2 Tilt-meter Description
        4.2.1 Composition
        4.2.2 Layout
        4.2.3 Interpretation
    4.3 Static Experiments
    4.4 Results
        4.4.1 Extreme Measurements
        4.4.2 Normality
        4.4.3 Correlation
        4.4.4 Serial autocorrelation
    4.5 Inferences

5 Conclusion and Future Work

Chapter 1

Introduction

The past decade has seen a steady growth in the number of devices owned by a

single user. If the PC revolution of the 80's can be seen as the 'one person, one

computer' revolution, then pervasive computing can be described as the 'one person,

n computers' vision. We envisage a world where the living space of a user is populated

by many devices and are engaged in the development of infrastructure and interface

technologies that enable a user to manage these devices. Intuitive interface technologies

that allow a user to manipulate devices in human terms are an important part of

this vision. In particular, we are interested in techniques that allow a naive user to

visualize relationships between devices communicating wirelessly in a room.

Although our devices communicate wirelessly, wires remain a familiar paradigm for expressing relationships between devices. Users of all levels, from the naive to the expert, understand that "wiring" two devices together means that their behavior is somehow connected. Similarly, seeing which devices are connected to which other devices helps us understand if a particular device is "wired right". Therefore, exposing abstract relationships between devices as a form of "virtual wire" promises to provide a simple interface for the visualization and manipulation of device configurations. This is

particularly important now because:

* Computing will be used by users with all levels of technical sophistication

* The relationships between devices will change dynamically

* There will exist no visible physical link between intercommunicating devices

Figure 1-1: The Stargazer Vision: A possible screen-shot

This thesis has been motivated by the open-ended vision of creating a Stargazer: an application that can sense and display abstract relationships between devices as a real-time superposition on a live video feed. The fundamental problem to be faced is the challenge of looking at the video feed and assigning device IDs to regions of pixels in real time. We are interested in exploring the challenges faced during the design of such a system.

For instance, a directional device identification scheme is useful for this task. However, no clear scheme exists for directional device identification, and the problem has only recently become of interest to augmented reality and pervasive computing researchers. Secondly, accurate position and heading information is necessary, but existing location systems exhibit too much error. Mobile devices do not even have a reliable way of detecting when they are at rest; hence the problem of rest-detection is important in its own right. Finally, applications are being constructed today using the Mercury backpack¹. However, the statistical nature of tilt-meter readings from this backpack is poorly understood. Therefore this thesis aims not to develop a Stargazer application, but to address the three problems described above.

1.1 Overview

Chapter 2 talks about directional device identification. A number of systems have

been developed that allow the user to indicate interest in a device by pointing at

it. We evaluate existing approaches using the criteria of directional query, ease of

sensing, infrastructural demands, tag power usage and detection range. Currently,

glyph recognition, IR transceivers and contact tags are promising technologies, but

none fully meets our evaluation criteria. We argue for an improved system based on

the principles of directional query, broadcast response and photo-triggered, powered

tags. We call this system a 'point-and-shout' system.

Chapter 3 addresses the problem of rest-detection. Device rest-detection is important for gesture recognition, power conservation, position triangulation and heading drift cancellation. Currently, rest is principally detected using static tilt-meters. However, tilt-meters are fundamentally insensitive to horizontal translation. A better rest-detection algorithm may be developed by augmenting tilt-meter readings with video data. This thesis combines video optical-flow readings with traditional tilt-meter readings to obtain a better estimator of device rest conditions. The algorithm is implemented as a library and API in Familiar Linux for easy integration into applications. Stand-alone rest-detection results are presented. A simple application of rest-detection to Cricket beacon distance estimates is also presented.

Chapter 4 provides the results of an empirical investigation of the performance of the Mercury backpack tilt-meters. These experiments were initially performed in the lead-up to tackling rest-detection, but we found that the results are of interest in their own right.

¹ A prototype hand-held sleeve that gives an off-the-shelf iPaq access to tilt-meters, a video camera and two PCMCIA Type II slots. See the Project Mercury page[20] for more information.

This thesis can either be read in toto, or each chapter can be read as a stand-alone

component. The conclusions of each individual chapter are presented in full at the

end of that chapter. They are also amalgamated and summarized in Chapter 5.

The key contributions of this thesis are:

* The argument for a 'Point-and-Shout' approach to directional device identification

* The development of a multi-modal rest-detection system

* The identification of anomalies in the tilt-meter readings of the Mercury backpack

Chapter 2

Directional Device Identification

2.1 Introduction

The past decade has seen a steady growth in the number of devices owned by a

single user. If the PC revolution of the 80's can be seen as the 'one person, one

computer' revolution, then pervasive computing can be described as the 'one person,

n computers' vision. We envisage a world where the living space of a user is populated

by many devices and are engaged in the development of infrastructure and interface

technologies that enable a user to manage and manipulate these devices. Intuitive

interface technologies that allow a user to manipulate devices in human terms are an

important part of this vision.

2.1.1 Speech and Pointing

Consider a fictional user walking into a room full of devices. The user is engaged in

a simple task. He has spotted a device of interest in the upper-far-right corner of the

room, and he wishes to reach out to the device and indicate an interest in it. How

might he go about this task?

Speech, writing and physical gestures are common ways in which users interact

with their environment.

Of these, speech and gestures are particularly useful as

sources of deictic (pointing) information. To indicate his interest, our user is likely


to say "The brown device in the upper, far, right corner of this room". Or he is likely

to point at the device and say "That device!".

Speech-recognition has evolved to the point where there exist systems that can understand our user's spoken command. However, there are still two challenges for using

speech as pointing information. These are complexity and restricted expressiveness.

First, speech-based deixis is more complex than pointing[17].

We can see this

already in our fictional example- the structure of the gesture is simpler than the

speech-only command. Understanding speech means that we not only have to understand what words people say, but we also have to understand all the different ways in

which they can express the same idea. This is a complicated task; physical gestures

are simpler and less ambiguous.

Second, speech-based deixis works well when the user already has a way of describing the object of interest. This is not always going to be the case, especially if we

have an unfamiliar object or a novice user. No matter how good a speech system is, it

will always have problems understanding what the user means when he (at a loss for

words) says the word "thingamajig". For pointing, physical gestures are expressive

in a way speech is not.

In pervasive computing, it is not likely that we will restrict ourselves to one form

of input. However, recognizing that speech is not a substitute for physical pointing

is important to our study of existing directional device identification technologies.

2.2 Chapter Overview

In Section 2.3 we will survey the literature to identify device identification technologies

that can be used for directional device identification.

In Section 2.4, we develop

five criteria to evaluate these technologies. We use these criteria to identify "best

practices" for directional identification and pick three promising existing technologies

(Section 2.5). However none of these technologies are fully satisfactory. Therefore,

in Section 2.6, we design a new directional device identification system that we call a

'Point-and-Shout' system.


Figure 2-1: Scenario: A user wishes to interact with a particular device in the room.

The user is labeled 'U', the devices in the room are labeled 'D' and the pervasive

computing infrastructure is labeled 'I'.

2.3 Existing Device Identification Technologies

Let us recast our initial example. Our scenario now consists of three elements: a

user, a set of devices, and a pervasive computing infrastructure (Figure 2-1). The

user may or may not carry a pointing device of some sort; the devices in the room

may or may not be tagged with an identifier of some sort. A number of systems have

been developed that either directly address, or can be modified to tackle, the problem

of directional device identification.

Video-based natural gesture recognition is an example of a general purpose interface in which neither the user nor the devices are instrumented. Cameras are mounted

in a room to record the physical gestures of the user. The user communicates with the

pervasive environment using natural gestures. To achieve directional device identification, the user points to a device to indicate his interest in it. The video feed is then

sent to a processor which computes the location and orientation of the user. This

information can be used in conjunction with a device-location database to discover

the identity of the device being pointed at.

Cricket[18] and Cricket Compass[19] are indoor systems that were developed to

provide mobile devices with location and orientation information. In these systems,

the ceiling space of the user is instrumented with a number of carefully positioned

RF/Ultrasound beacons. The user carries a listener which is a combination of an

RF receiver and an array of ultrasound detectors. By measuring the time of flight

of ultrasound pulses from the beacons, the user is able to compute 3-D location and

orientation. Given this information, one can achieve directional device identification

by looking up a comprehensive device-location database to discover the identity of

what we are pointing at.
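The ranging step behind such a listener can be sketched as follows. This is a minimal illustration under stated assumptions, not the Cricket implementation: it assumes the listener timestamps the arrival of a beacon's RF message and of the matching ultrasound pulse, treats the RF propagation delay as negligible, and uses a nominal speed of sound. The function name and the constant are hypothetical.

```python
# Assumed nominal speed of sound in air near room temperature (not from the thesis).
SPEED_OF_SOUND_M_PER_S = 343.0


def distance_from_time_of_flight(rf_arrival_s: float,
                                 ultrasound_arrival_s: float,
                                 speed_m_per_s: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Estimate beacon-to-listener distance in metres.

    The RF message arrives almost instantly, so the lag of the ultrasound
    pulse behind it approximates the ultrasound time of flight.
    """
    time_of_flight_s = ultrasound_arrival_s - rf_arrival_s
    if time_of_flight_s < 0:
        raise ValueError("ultrasound cannot arrive before the RF message")
    return speed_m_per_s * time_of_flight_s


if __name__ == "__main__":
    # A 10 ms lag corresponds to a beacon a few metres away.
    print(distance_from_time_of_flight(0.000, 0.010))
```

Distances to three or more beacons at known positions would then feed a triangulation step to recover the 3-D location; that step is omitted here.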

A number of approaches similar to Cricket and CricketCompass (1) compute a 3-D pointing vector and then (2) look up a device-location database to identify the device being pointed at. The pointing vector may be discovered using only one system (e.g. infra-red[32] or RF/ultrasound[19]) or by combining location systems (e.g. infra-red[30], RF-only[2] or RF/ultrasound[31, 18]) with orientation systems (e.g. inertial gyroscopes, digital compasses, tilt-meters).
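The two-step scheme (pointing vector, then database lookup) might be sketched as below. This is an illustrative sketch only: the device-location database is modelled as a plain dictionary, the angular tolerance is an arbitrary assumption, and all names are hypothetical rather than taken from any of the cited systems.

```python
import math
from typing import Dict, Optional, Sequence


def identify_pointed_device(position: Sequence[float],
                            direction: Sequence[float],
                            device_locations: Dict[str, Sequence[float]],
                            max_angle_deg: float = 10.0) -> Optional[str]:
    """Return the ID of the device closest to the pointing ray, or None.

    position, direction: the user's 3-D location and pointing vector.
    device_locations: hypothetical device-location database (ID -> 3-D point).
    max_angle_deg: assumed tolerance between the ray and the device bearing.
    """
    direction_norm = math.sqrt(sum(c * c for c in direction))
    if direction_norm == 0:
        raise ValueError("pointing vector must be non-zero")

    best_id, best_angle = None, max_angle_deg
    for device_id, location in device_locations.items():
        to_device = [l - p for l, p in zip(location, position)]
        distance = math.sqrt(sum(c * c for c in to_device))
        if distance == 0:
            continue  # the device coincides with the user; bearing undefined
        cos_angle = sum(a * b for a, b in zip(direction, to_device)) / (direction_norm * distance)
        # Clamp to guard against rounding slightly outside [-1, 1].
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
        if angle <= best_angle:
            best_id, best_angle = device_id, angle
    return best_id


if __name__ == "__main__":
    database = {"lamp": (2.0, 0.0, 1.0), "printer": (0.0, 3.0, 1.0)}
    print(identify_pointed_device((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), database))
```

The sketch makes the dependency explicit: any error in the location or orientation estimate translates directly into an angular error, so the tolerance has to be wide enough to absorb it without covering neighbouring devices.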

Systems that do not use natural gestures or pointing vectors to identify devices

resort to labeling the devices in the room with an ID-tag of some sort. The tags may

be standard bar-codes[14, 25], visual glyphs[22] or radio-frequency tags[7, 23].

Rekimoto et al.[22] describe CyberCode, a comprehensive device identification scheme based on 2-D visual glyph recognition. CyberCode was developed to directly address the problem of deictic device identification. Video is processed using a low-resolution CCD/CMOS camera coupled to a workstation processor. Each tag encodes 24-48 bits, and is constructed so that distance and orientation information may be extracted by processing the visual image. The tags may either be printed on paper (and affixed to a device) or they may be digitized (and displayed on a CRT screen). Rekimoto describes some applications of this technology to augmented reality, direct manipulation and gyroscope-enhanced mobile indoor navigation.

Bluetooth[7] is a cheap, low-power, short-range wireless technology that operates in

the unlicensed 2.4 GHz ISM band. Bluetooth is designed to replace wired connections

between devices. The Bluetooth Service Discovery Protocol[4] describes a method for

identifying devices and services in the vicinity of a Bluetooth device. Every service

available on a device is described by a 128-bit universally unique identifier (UUID).

Nearby services are discovered by making broadcast inquiries and pages. Bluetooth

is an example of an active radio tag system.
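To illustrate what a 128-bit service identifier looks like in practice, the sketch below expands a 16-bit short identifier into a full UUID using the Bluetooth Base UUID. The base UUID and the Serial Port identifier 0x1101 come from the Bluetooth specification rather than from this thesis; the helper name is hypothetical.

```python
import uuid

# Bluetooth Base UUID from the Bluetooth specification (not from the thesis).
BLUETOOTH_BASE_UUID = uuid.UUID("00000000-0000-1000-8000-00805F9B34FB")


def expand_short_uuid(short_id: int) -> uuid.UUID:
    """Expand a 16-bit short service identifier to its 128-bit UUID.

    The short value is shifted into the top 32 bits of the base UUID.
    """
    if not 0 <= short_id <= 0xFFFF:
        raise ValueError("short identifier must fit in 16 bits")
    return uuid.UUID(int=BLUETOOTH_BASE_UUID.int + (short_id << 96))


if __name__ == "__main__":
    serial_port = expand_short_uuid(0x1101)  # Serial Port service class
    print(serial_port, serial_port.int.bit_length() <= 128)
```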

Radio Frequency Identification (RF-id) [23] is another wireless device identification

technology. An RF-id system typically consists of a reader (composed of an antenna

and a transceiver) and a set of tags (transponders). RF-id tags are cheap, and can be

engineered to be very small in size. In addition, RF-id tags may either be passive or

active. Passive RF-id tags are unpowered, and draw energy from the antenna of the

reader. The lifetime of such a tag is practically unlimited. Passive tags are typically read-only,

carry about 32-128 bits of information, and can be read from short distances. They

constitute a new class of tagging systems which we will call passive radio tag systems.

Active RF-id tags are coupled with a power-source. They can carry up to 1MB of

read-write data and can be accessed from longer distances by a lower-powered reader.

Want et al.[29] describe a system for tagging real-world devices with passive RF-id tags. Inspired by the DigitalDesk[33], passive RF-id tags are incorporated into

books and documents. The idea of a physical icon is implemented by instrumenting

different sides of a cube with different RF-id tags. The authors found that the tags

could be read 10-15cm away by using an RF-id reader connected to the serial port of

the computer.

The Cooltown initiative[12] tightly couples URLs with devices for detection, configuration and operation. Users can "e-Squirt" URLs at Web-enabled devices using a short-range wireless link such as infra-red or Bluetooth. The project developed tiny IR transceivers to transfer URLs using the IrDA protocol. Information about a museum exhibit can be obtained by directing a browser to the URL that a neighbouring beacon broadcasts. Squirting a URL at a printer or projector directs that device to retrieve and output content stored at the transferred URL.

An iButton[11] is a chip housed in a stainless steel enclosure. The logical functions provided by the chip range from a simple serial number to 64 Kbits and beyond of non-volatile ROM and EPROM. Every iButton has a globally unique registration number, which is etched on the inside of the case. The information stored in an iButton can be read by touching it with a special iButton probe. The energy needed for information exchange may be "stolen" from the data line of the probe. Since tag and reader are in actual physical contact, the energy cost of data transfer is small. Information can be read or written to the iButton in a bit-sequential, half-duplex manner. An iButton is an example of a contact tag.

Figure 2-2: An iButton.

2.4 Evaluation of Directional Identification Systems

The literature on augmented reality and directional device identification discusses desirable attributes of device identification systems. Chief amongst these are: aesthetic

impact, cost of tags, directional query, ease of sensing, infrastructural demands, tag

power usage, security and detection range.

2.4.1 Aesthetic Impact

Device identification researchers often worry about the aesthetic impact of their systems. This is a particular concern for those researchers constructing tagged device

identification schemes, because they would like their tags to be as unobtrusive as

possible. However, deictic device identification systems may find it useful to present

the user with a target to point at, particularly if there are multiple devices clustered

close together or if the user intends to interact with the target device from a distance.

Therefore, we do not necessarily want our tagging systems to be invisible.

Additionally, we can expect that the aesthetics of the target will improve during the process of commercialization of the technology. Radio device tags may be

constructed to be more unobtrusive; visual tags may be written in UV reflective ink

to render them as imperceptible to the human eye as required. Deictic device identification technologies have not evolved to the point where aesthetics are a driving

argument behind system design. Therefore we do not believe that existing technologies should be differentiated on aesthetic impact.

2.4.2 Cost of Tags

All the device identification systems presented in Section 2.3 have been engineered so

that the monetary cost of the device tags is minimal. Natural gesture and pointing

vector systems require no tags, and hence incur no tagging costs. Visual glyphs may

be printed or displayed, and are extremely cheap. RF-id tags (passive and active) are

projected to cost under 10 cents a tag. iButtons currently cost approximately $1.00

apiece. By far the most expensive tagging systems we have described are Bluetooth

and Infra-red, and both of these systems are already deployed on millions of devices

world-wide. We do not believe that the cost of tags is a significant impediment to

the widespread deployment of any of these technologies.

2.4.3 Differentiating between Identification Systems

Therefore, the attributes we use for differentiating one identification technology from

another are directional query, ease of sensing, infrastructural demands, tag power

usage and security/detection range.

* Directional Query

Directionality is important for device query so that we may accurately specify

the object of interest to us. Natural gesture and pointing vector systems are

inherently directional. Similarly, glyph recognition and IR transceiver systems

use line-of-sight visible light and IR for communication. Contact tags tightly

bound the mobility of the tag-reader and hence may be considered directional.

In contrast, the radio ID broadcasts used in Bluetooth and RF-ID for device

identification are omni-directional.

The length of antenna required to construct a directional antenna for an electromagnetic emission is directly proportional to the wavelength of the emission.

Radio transmissions have a much larger wavelength than visible or infra-red

light. A directional antenna for a 2.4 GHz radio transmission is typically 9-12

inches in length. The focussed output of a directional radio antenna is also a potential health hazard. Therefore radio is inherently unsuited to making short-range

directional queries.

* Ease of Sensing

Identification technologies differ from each other in the amount of computation

that must be done during the actual sensing of a device ID. Natural gesture

systems require the application of gesture detection and pose estimation algorithms every time a user points at a device.

Pointing vector systems require

that beacon readings be processed by a position triangulation algorithm before

a 3-D pointing vector is determined.

A device-location lookup is also necessary. Visual glyph recognition requires that we process the video feed before we

can identify the ID of the device that we are pointing at. All of these systems

require computationally expensive sensing. In a related concern, passive radio

tag readers require a powerful antenna in order to obtain a response from RF-id

tags. The power consumption of this sensing technique is high.

In contrast, active radio tags, infra-red transceivers and contact tags

can be read simply and without significant computation or

power consumption. Therefore we can use ease of sensing to differentiate device

identification technologies from one another.

* Infrastructural Demands

Technologies such as natural gesture recognition and CricketCompass require the environment of the user to be instrumented significantly. Natural gesture recognition requires that every room contain one or more video cameras networked to an image processor. CricketCompass requires that a number of Cricket beacons be carefully positioned throughout the user's building. The complexity and maintenance cost of these systems is high. In contrast, tagged

systems do not require any infrastructural outlay.

* Tag Power Usage

Unlike in monetary cost, the different identification techniques vary significantly in the power

consumption of the device tags. Untagged schemes have zero tag power consumption. Passive tags such as visual glyphs and passive RF-id also do not

require any power source. iButtons do not use any energy for transmitting information and hence have long lifetimes. Active tags such as IR transceivers

and Bluetooth actively broadcast their IDs. Therefore these systems have a

lifetime that is significantly shorter than the other identification schemes.

* Security/Detection Range

For wireless communication systems, security and detection range are interrelated concerns, which is why we choose to address them jointly. The broadcast response of a radio tag means that nearby systems can snoop on the device ID transmissions without necessarily having to be in the same room as the user. These identification systems offer greater detection range but lower security. In contrast, line-of-sight communication systems are less susceptible to sniffing. The greatest security is provided by contact communication systems: iButtons, for example, offer high-security but low-mobility communication.

The minimum security level required is ultimately a function of the particular

pervasive application being developed. However, for the purpose of evaluating


these different systems, we will assume that we can tolerate the security risk

of a short-range radio broadcast and will instead focus on the mobility and

detection range of the systems.
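The wavelength argument made under Directional Query above can be checked with a short calculation. The sketch below computes the free-space wavelength of a 2.4 GHz carrier and compares it with a near-infra-red emitter; the 940 nm figure is an assumed, typical value for an IR LED and does not come from the thesis, and the function name is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
METERS_PER_INCH = 0.0254

# Assumed typical near-infra-red LED wavelength (not from the thesis).
INFRARED_WAVELENGTH_M = 940e-9


def wavelength_m(frequency_hz: float) -> float:
    """Free-space wavelength (metres) of an emission at the given frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz


if __name__ == "__main__":
    radio_m = wavelength_m(2.4e9)
    print(f"2.4 GHz wavelength: {radio_m:.4f} m ({radio_m / METERS_PER_INCH:.1f} in)")
    print(f"radio / infra-red wavelength ratio: {radio_m / INFRARED_WAVELENGTH_M:.0f}")
```

The 2.4 GHz wavelength comes out at roughly 12.5 cm, about five orders of magnitude longer than the assumed infra-red wavelength, which is why focusing optics for light can be tiny while a directional radio antenna cannot.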

2.5 The "Right" Technology

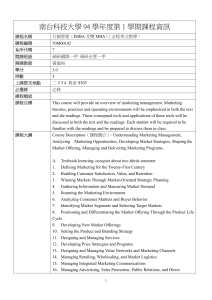

Table 2.1: Comparison of existing directional device identification systems.

Rows (technologies): Natural Gestures, Pointing Vectors, Glyph Recognition, Active Radio Tags, Passive Radio Tags, IR Transceivers, Contact Tags.

Columns (criteria): Directional Query, Ease of Sensing, Low Infrastructural Demands, Low Tag Power Usage, Detection Range.

Table 2.1 summarizes the performance of various device identification systems

using the criteria in Section 2.4.3.

From this table, we can gather the following

insights for the design of a deictic device identification technology:

* Radio is not the right medium for directional query

Radio tags, both passive and active, suffer from the problem of not being directional in query. The omni-directionality of RF-id tags is a benefit for a number of tracking and inventory systems; however, this same feature makes them ill-suited to the problem of directional device identification. In contrast, light- or infra-red-based systems are line-of-sight. Therefore, light or infra-red is the preferred physical medium with which to initiate a device query.

* Device IDs can be read cheaply if they are communicated using modulated

electromagnetic signals

The computational complexity of the video processing done by natural gesture

systems indicates that the sensing of device IDs via signal modulation is an

attractive approach. Such a system is also capable of holding more than the

36-128 bits provided by CyberCode without running into tag size constraints.

* A complex infrastructural outlay is unnecessary

Natural gesture recognition was developed to provide a generalized human-computer interface, not to directly address the problem of directional device identification. Similarly, pointing vector systems were designed to serve as indoor location and orientation systems. Therefore, the design rationale of these systems includes complex environmental modification. As a number of successful tagged identification systems have demonstrated, we do not need extensive

infrastructural modification to address the problem of directional device identification.

* Tags that constantly poll the environment are energy-inefficient

Bluetooth and IR transceiver tags are built around the notion of automatic device discovery. The designers of these systems incorporated polling mechanisms into their systems because they wanted the user to do no more than come within range of a reader; device identification was meant to be handled as seamlessly and invisibly as possible. In contrast, directional device identification inherently requires human mediation. Chances are, the device tags and readers will always be within the same room and hence always within detection range of each other. However, we only want to initiate identification when the user points at a device; we would like our identification system to stay dormant otherwise.

Therefore, device tags that constantly poll the environment are a detriment: this feature adds no benefit but instead reduces the lifetime of a tag. In the absence of user query, we would like our tags to conserve energy and be as passive as possible.


* Our system should allow for a large detection range

The physical contact requirement of iButtons restricts the kind of pervasive

applications that can be developed using this technology. Security concerns

permitting, there is a powerful argument for being able to sense IDs at a distance.

Therefore, of the seven technologies described in this chapter, three are particularly promising approaches to directional device identification. These are

1. Glyph Recognition

As demonstrated by CyberCode, this approach is directional, requires no infrastructural outlay, uses tags that consume no power, and can be queried from a distance. The greatest disadvantage of this technique is that the user must carry around a powerful image processor as a pointing device. The cost and power consumption of such a processor are significant.

2. IR Transceivers

This technology is directional, requires no infrastructural outlay, and transmits

IDs that can be sensed without requiring expensive processing. The downside

of this technology is that tags lose power by polling their environment and

transmitting information. Therefore the lifetime of this system is bound by the

battery life of the tags.

3. Contact Tags

iButtons implement ID transmission through parasitic power; hence these tags have a long lifetime. Requiring the user to be physically in contact with the tag means that this approach is the most secure of the ones we describe. However, the requirement that one be in physical contact with the tag also severely restricts the mobility of the user.

Depending on the particular requirements of the situation, one or the other of

these technologies may be a good fit.


2.6

A 'Point-and-Shout' System

Table 2.2: Comparison of Point-and-Shout with existing directional device identification systems.

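Rows (technologies): Glyph Recognition, IR Transceivers, Contact Tags, Point-and-Shout.

Columns (criteria): Directional Query, Ease of Sensing, Low Infrastructural Demands, Low Tag Power Usage, Detection Range.

[Each cell of the table holds a check or cross mark; the per-cell marks are not recoverable from the extracted text.]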

We propose a new system that meets all the criteria raised in Section 2.4.3. Our system is a tagged system: each tag consists of a battery, a radio transmitter, and a photo-sensitive switch. The tag reader consists of a radio receiver coupled with a directional IR transmitter. The key components of this system are (1) directional query, (2) broadcast reply and (3) photo-triggered powered tags.

We envisage our reader sending out bursts of directional IR when the user intends to query a tag. Upon detecting this burst of radiation via a passive photo-sensitive switch, the tag switches on and broadcasts its ID via radio for a few seconds. The broadcast ID is sensed by an RF receiver on the reader. We call this system a 'Point-and-Shout' system.
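The query and response cycle described above can be sketched as a small simulation. This is only an illustration under stated assumptions: the class `Tag`, the function `query`, the two-second broadcast length and the tag names are all hypothetical, and the IR beam is modeled simply as "hits exactly the tag being pointed at".

```python
from dataclasses import dataclass


@dataclass
class Tag:
    """Hypothetical model of a photo-triggered tag (names are illustrative)."""
    tag_id: str
    shout_seconds: float = 2.0   # assumed length of the radio broadcast
    awake_until: float = -1.0    # time at which the tag goes dormant again

    def on_ir_burst(self, now: float) -> None:
        # The photo-sensitive switch wakes the tag when a query burst arrives.
        self.awake_until = now + self.shout_seconds

    def radio_output(self, now: float):
        # The tag broadcasts its ID only while awake; otherwise it is silent.
        return self.tag_id if now < self.awake_until else None


def query(tags, pointed_at: str, now: float):
    """Reader side: send a directional IR burst, then listen on radio."""
    for tag in tags:
        if tag.tag_id == pointed_at:      # only the tag in the IR beam is hit
            tag.on_ir_burst(now)
    # The radio receiver is omni-directional: it hears every awake tag.
    return [tag.radio_output(now) for tag in tags if tag.radio_output(now)]


tags = [Tag("lamp"), Tag("printer")]
heard = query(tags, pointed_at="lamp", now=0.0)
```

In this sketch the untouched tag never transmits, which is the property the design relies on: directionality is paid for only in the query, and the dormant tags spend no energy on polling.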

The point-and-shout system has the advantage of using directionality only when necessary. Consider that the energy cost of a directional transmission is composed of two parts: the cost of aligning transmitter with receiver and the energy cost of generating radiation. During a 'point-and-shout' query, the user aligns the reader with the target; thus the reader only pays the energy cost of generating radiation. During a directional response, however, it would be the responsibility of the tag to align its transmitter with the reader's receiver. The cost and complexity of doing this automatically is very high. Therefore, we abandon the idea of directional response and instead use a broadcast mechanism for response.

The tag response is intended to be short-range and limited in time. The broadcast


medium could be IR or RF. However, we would like to keep query/trigger wavelengths

and response wavelengths distinct and non-overlapping to avoid (1) collision of response with query and (2) triggering of false responses by nearby tags. Therefore we

propose that response be made using radio transmissions.

The point-and-shout system does not require the installation of a complex infrastructure. Responses of the point-and-shout tags are as easy to read as Bluetooth

broadcasts or Cricket beacon broadcasts. The length of the tag response is not subject

to space constraints (as in CyberCode). A reader may be built out of cheap, readily available components, and it does not require connection to a powerful processor.

Device tags may either be integrated with a device and draw power from it, or they

may be supplemented with a small battery and be stand-alone. Since we anticipate

that device tags will not be queried constantly, a single small battery can power tag

responses for a long time. Additionally, providing a tag with a power source means

that we do not impose a large energy drain on the reader. Therefore, tag responses

can now be read from a distance. This allows for the development of applications not

possible with iButtons.

Table 2.2 compares point-and-shout with glyph recognition, IR transceivers and

contact tags.

2.7

Conclusion and Future Work

In this chapter we have traced the arguments for a point-and-shout system, beginning

with the survey and evaluation of current device identification systems for the task

of directional device identification. We are currently looking to actually design and

construct these tags. The task of developing APIs and applications to manage this

system is also the object of future work in this area.


Chapter 3

Video-Enhanced Multi-modal Rest Detection

3.1

Introduction

The position and orientation of a device in a 3-dimensional world can be modeled by a 5-tuple $\langle x, y, z, \theta, \phi \rangle$ where $\langle x, y, z \rangle$ gives the location of an object in 3-D space and $\langle \theta, \phi \rangle$ gives the orientation of the device. When the device is in 'motion' in this three-dimensional world, one or more of $x$, $y$, $z$, $\theta$ and $\phi$ changes appreciably$^1$ with time; otherwise the device is at 'rest'.

We explore different techniques of robust rest-detection and construct a system

that exposes rest-information simply and cheaply to applications. Rest-detection can

be made robust by combining traditional tilt-meters with video-based optical flow

techniques. Our system is useful for applications in the domains of power conservation, context detection, position triangulation and drift cancellation.

Consider a power-constrained device running a deictic (i.e. pointing) application of some sort. In this case, detecting that the device is at rest may imply that the user intends to query or use the object at which the device is pointing. If the process of identifying and querying the pointed-at-object is expensive, then it is good to delay such operations until we are reasonably sure that the device is at rest.

$^1$ The word 'appreciably' has been chosen judiciously. We will see in Section 3.7.1 that the notion of 'rest' must be application-defined.

To continue this point, power conservation is a particularly important challenge for

small mobile devices; it is often the single hardest constraint in application design.

Also, most query/lookup mechanisms involve an implicit exchange of data with a

networked information source- and the first hop of this exchange is made over a

wireless network. Wireless exchange of data is an extremely power-hungry operation,

especially for small resource-constrained mobile computers. We can use motion cues

to reduce our use of power-hungry operations.

Rest-detection is also helpful in identifying several gestures (user contexts). For

example, placing a device on the table or picking it up may have meaning. Fundamentally, context-detection applications are translating physical motion of a device into

high-level user intentions. Therefore, knowing when a device is at rest is important

for these applications.

Not surprisingly, being able to detect rest has applications in the field of location

and heading detection. A standard approach to location estimation is to assume

that the device is at rest, collect distance-estimates from multiple beacons and then

triangulate the position of the device in 3-D space. Popular systems that implement

such triangulation algorithms are Active Bat[31] and Cricket[18]. However, there is

a nagging epistemological concern with this approach. It can be illustrated by the

following conundrum:

The 'Assumption of Rest' Conundrum

1. To estimate your position you need to know you are at rest.

2. To estimate your rest-condition you need to know your position.

In other words, there currently exists no reliable way of knowing when a device is

actually at rest. In current implementations, the user manually signals to the position

triangulation mechanism that she intends to be stationary for the next few seconds.

She does this in order to give the triangulation algorithm time to collect the distance

readings it needs. The algorithm has no way of ensuring that this contract is met,

and therefore cannot decide if the readings it collects are corrupted by user motion.


Additionally, the distance estimates collected by the device exhibit error. To

mitigate the overall positioning error, positioning algorithms can either resort to

collecting multiple samples from each beacon, or to collecting individual samples

from multiple beacons. The latter approach involves designing our system with a

greater beacon density; since this is technically and economically challenging, we

would like to avoid this approach if possible. However, the cost of using approaches

that collect multiple readings from each beacon is application latency- we have to

wait longer to collect the needed readings. Since latency determines the end usability

of a positioning algorithm, location support systems today are designed to bound

latency and only then minimize cost.
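The trade-off between error and latency can be made concrete with a short worked relation. The assumptions here are ours and not stated in the source: the errors in successive distance readings from one beacon are independent with zero mean and standard deviation $\sigma$, readings arrive at a fixed rate $f$, and the $k$ readings in a window of duration $T$ are simply averaged.

```latex
\sigma_{\bar{d}} = \frac{\sigma}{\sqrt{k}}, \qquad T = \frac{k}{f}
\quad\Longrightarrow\quad
\sigma_{\bar{d}} = \frac{\sigma}{\sqrt{f\,T}}
```

Under these assumptions, halving the error of the averaged distance estimate requires four times as many readings and hence four times the latency, which is why a window that can grow while the device remains at rest is attractive.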

Continuing, the standard approach to bounding latency is to a priori choose a

triangulation window of a fixed, static size so that worst-case latency is bounded.

However, this approach is suboptimal because it ignores the observation that users of pervasive devices are at rest often, and for varying periods of time. Using a fixed

window size means that we impose high-level behavioral restrictions on the user. We

also lose access to optimizations that we might have been able to perform had we

chosen a larger triangulation window. Both of these are expensive failings.

A different argument applies in the domain of heading detection. Many heading detection systems fundamentally obtain heading information by integrating some

measure of change in heading. A consequence of this integration is that error is introduced at every instance of integration and the overall estimate of heading drifts with

time. Knowing when the device is stationary may enable us to attempt out-of-band

methods of drift cancellation.
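A minimal sketch of why integration drifts, and of one way a rest signal could help. The scenario is assumed for illustration: a rate-of-turn sensor with a constant bias of 0.5 degrees per second sampled at 10 Hz, and a hypothetical `at_rest` flag per sample. Zeroing the rate while at rest is only one possible cancellation method and is not taken from the source.

```python
def integrate_heading(rate_readings, dt, at_rest=None):
    """Estimate heading by summing rate-of-turn readings (degrees/second).

    at_rest is an optional list of booleans (hypothetical rest-detector
    output); when a sample is flagged as rest, its rate is treated as zero.
    """
    heading = 0.0
    history = []
    for i, rate in enumerate(rate_readings):
        if at_rest is not None and at_rest[i]:
            rate = 0.0            # device known to be still: ignore the reading
        heading += rate * dt      # any error in `rate` accumulates here
        history.append(heading)
    return history


# Assumed scenario: the device is truly at rest for 60 seconds, but the
# sensor reports a constant bias instead of zero.
bias = 0.5                        # degrees/second (illustrative value)
dt = 0.1                          # 10 Hz sampling
readings = [bias] * 600

drift = integrate_heading(readings, dt)[-1]
corrected = integrate_heading(readings, dt, at_rest=[True] * 600)[-1]
```

Without the rest flag the estimate wanders by about 30 degrees in one minute even though the device never moved; with it, the estimate stays at zero.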

3.2

Chapter Overview

In Section 3.3, we will survey the literature to find previous work that is relevant to

the problem of rest-detection. Section 3.4 will describe the concerns with previous

research that lead us to look for an improved solution. In Section 3.5, we argue for

a multi-modal rest-detection algorithm that incorporates video and tilt data. Section 3.6 explores the integration of these inputs in more detail. Section 3.7 describes the design and implementation of our rest-detector, culminating with stand-alone rest-detection results presented in Section 3.8. Finally, Section 3.8.1 illustrates the application of this research to a current problem in the domain of position triangulation.

3.3

Previous Work

Three different research communities have previously addressed the problem of rest-detection. These are the mobile robotics, object tracking and human-computer interface research communities.

Determining a robot's location and heading in real-time has been called the global

positioning problem. A number of researchers have focussed on using video sensor data to enable a robot to detect its location and orientation. The major techniques in this area are landmark-recognition techniques[27, 13], camera-configuration

techniques[28], model-based approaches[6], and feature-based visual-map building

systems[15]. The last approach is remarkable because it does not require any a priori

map of the world. Chapter 9 of Borenstein et al.[5] provides an excellent overview of

visual positioning systems in mobile robotics.

In the area of object-tracking, a large number of algorithms and systems have

been developed to accurately track a moving object via video data. For our purposes,

we may divide these systems into on-line or off-line systems. Off-line systems are

not useful to our goal of making rest-detection an application utility. An interesting

real-time, video-based position location system is Project HiBall[32]. Project HiBall instrumented the ceiling space of the user with a dense array of LEDs, and the position of the user was detected via a complex 'ball' that contains six infrared sensors.

Human-computer interface researchers have long appreciated the importance of determining when a device is at rest. The problem of detecting the motion and rotation of the device in 3-D space came to be called one of context detection. Rekimoto[21] attached tilt-meters to a Palm device. Harrison et al.[8], Small & Ishii[26] and Bartlett[3] explored using tilt-meters to navigate. Hinckley et al.[9] used tilt-meters coupled with a touch sensor and infrared proximity detector to identify when a hand-held device is being used as a voice recorder. These applications require significant reliability from the tilt-meter readings: in one case requiring a very specific relationship between detected x and y angles, and in another identifying tilt angles to within ± 3 degrees. Hinckley et al.[10] also describe the Video-Mouse, a six degree of freedom pointing device that consists of a video-enhanced mouse and a special mouse-pad with a special grid. This device implements video-only rest and motion detection by processing the image of the grid received by the mouse-camera. The TEA project[24] extends the idea of multi-modal context detection both theoretically and practically. They develop and use a customized hardware board fusing data from 8 sensors (photo-diode, two accelerometers, IR proximity detector, temperature, pressure, CO2 gas, and sound). They offer an insightful distinction between physical and logical sensors and use Kohonen maps to automatically detect contexts as regions of points in 8-dimensional physical sensor data space. Their device was able to use this approach to distinguish between rest, walking and placement in a briefcase.

3.4

Challenges

To the best of our knowledge, this is the first attempt to directly address the problem of rest-detection. Previous work in this area has touched upon rest-detection only as a related issue to a different problem, be it robot positioning, real-time tracking or gesture recognition. However, the importance of rest-detection to a number of varied fields suggests that this is a problem that merits study in its own right. Therefore, we feel that previous approaches to rest-detection can be improved on the following grounds: (1) complexity, (2) cost and (3) rectifying flaws in tilt-meter usage.

3.4.1

Complexity and Cost

An approach based on extensive analysis means that the technique cannot be performed in real-time, or locally on a hand-held device. This automatically eliminates a


number of mobile robotics mapping-based algorithms. Additionally, while the problem of object-tracking via video remains an area of extensive research, these systems

are clearly overly complicated for our purposes. Our motivation in rest-detection is

not to track objects but merely to decide if we are at rest or not. Also, systems

such as HiBall that require expensive hardware or extensive instrumentation/tagging

of the user's work environment are not attractive simply because of the amount of

environmental modification they require.

3.4.2

Tilt-Meters

While tilt-meters have been powerful in certain areas of context detection, there exist

two fundamental problems with their use for the problem of rest-detection. Tilt-meter

approaches are (i) not sensitive to certain kinds of motion and (ii) not designed to be an accurate indicator of rest.

Static tilt-meters work by measuring their attitude relative to the Earth's gravity field at a given point (gravity vector for short). If the location and orientation of a device in 3-D space can be described by the 5-tuple $\langle x, y, z, \theta, \phi \rangle$, then static tilt-meters are most sensitive to change in $\phi$ and $z$. This is because change in these parameters directly affects the relationship of a tilt-meter to the gravity vector at a given point. Therefore, there are changes in $x$, $y$ and $\theta$ that can be made that do not strongly affect readings from a static tilt-meter. The issue will be explored in greater detail in Section 3.5.2.
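The insensitivity to heading and horizontal position can be illustrated with a small geometric sketch. The conventions are assumptions made for this example only: the world z axis points up, the first orientation angle is treated as heading (rotation about the vertical axis), the second as pitch (rotation about the device's y axis), and the sensor is idealized as reporting the direction of gravity in the device frame while ignoring any acceleration due to motion.

```python
import math


def static_reading(heading, pitch):
    """Direction of gravity seen in the device frame (unit vector).

    heading: rotation about the vertical axis, in radians (assumed convention)
    pitch:   rotation about the device's y axis, in radians (assumed convention)
    Position (x, y) is not a parameter: in this idealized model it cannot
    affect the reading.
    """
    gx, gy, gz = 0.0, 0.0, -1.0                  # gravity in the world frame
    # Undo the heading rotation about the vertical (z) axis.
    c, s = math.cos(heading), math.sin(heading)
    gx, gy = c * gx + s * gy, -s * gx + c * gy
    # Undo the pitch rotation about the y axis.
    c, s = math.cos(pitch), math.sin(pitch)
    gx, gz = c * gx - s * gz, s * gx + c * gz
    return (gx, gy, gz)


same_pitch_a = static_reading(heading=0.0, pitch=0.3)
same_pitch_b = static_reading(heading=1.2, pitch=0.3)   # turned in place
new_pitch = static_reading(heading=0.0, pitch=0.8)      # tilted upward
```

In this model, turning in place leaves the reading unchanged while a change in pitch alters it, which is the sense in which a static tilt-meter is blind to some kinds of motion.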

Static tilt-meters today are designed to provide pervasive applications with tilt information. They are constructed so that they can quickly tell an application its general orientation with respect to the gravity vector. The fundamental question they attempt to answer is "Is the device closer to horizontal or vertical?" rather than "Has the device orientation changed in the last few seconds?". Increasing the range of orientations over which quick and accurate comparisons may be made means that tilt-meters cannot be excessively sensitive to small changes from a recent state. Such a sensitivity would distort the ability of the tilt-meter to make macro tilt comparisons. This is a rather subtle issue with the design of tilt-meters and it will be explored

[Figure 3-1 plot. Legend: optical flow readings; tilt-meter readings; 1.5σ noise band for tilt-meter. Horizontal axis: Time (seconds).]

Figure 3-1: A 50-second trace of the output from sensors running on a Mercury

backpack. Both sensors are attempting to measure horizontal drift, albeit by different

techniques. The optical flow technique corresponds to a simple optical flow algorithm.

The tilt-meter readings correspond to readings from /dev/backpaq/accel. All readings

taken at 15Hz.

in greater depth in Chapter 4 where a thorough empirical investigation has been

conducted into the static error of the tilt-meters used in the Mercury backpack.

However, the consequences of this design trade-off can be seen in Figure 3-1, which

shows the results of a 50-second trace of two sensors running on a Mercury backpack

held in the hand of a user. The tilt-meter readings exhibit a lot of variation while at

rest.


3.5

A Video-Enhanced Approach

Combining video data with tilt-meter data is an approach that promises to meet the

constraints raised in Section 3.4. In this section, we will outline the pros and cons

of incorporating video data for rest-detection and then justify the construction of a

combined video and tilt approach.

Mobile-robotics research has already shown that video techniques can be used to

make position and heading estimations. However, much of this work has not been

widely used because it has not been cheap or simple to develop a mobile, sensor-rich,

video-capable platform for pervasive computing. Fortunately, this is no longer the

case: commercial cell-phones produced by Samsung and Nokia now come with built-in

video cameras, and video-capable PDAs are becoming widely available. For example,

the research presented in this paper was conducted using a Mercury backpack[20] at

the M.I.T. Lab for Computer Science. This device gives a regular off-the-shelf iPaq

access to tilt-meters, video and PCMCIA extension slots. It illustrates how video

data can now be considered an integral part of the sensor universe of a small, mobile

computer.

Video data is high-quality, digital and gravity independent.

It is sensitive to

horizontal translation motions in a way static accelerometers are not. And it offers

the choice of using detection and sensing algorithms that are not handicapped by

prior hardware-based design decisions. All of these are attractive arguments for a

video-based approach.

On the flip side, the use of video images means that a video-based rest detection

algorithm will always be susceptible to background motion. It is not reassuring for

our rest-decisions to be solely dependent on who walks across our camera at any given

time. Additionally, video-based techniques are notoriously computationally intensive, which makes them hard to implement on small, mobile, resource-constrained devices.

The arguments for and against a pure video-based rest-detection system are summarized in Table 3.1. Given this discussion, we can see that a more robust approach is to combine information from both video and tilt sensors to produce a better rest-detection system.

Table 3.1: Pros and Cons of Video-Based Approaches to Two-Dimensional Rest Detection.

Pros

1. Sensitive to image change
2. Improvable via software
3. Independent of Earth's gravity field

Cons

1. Susceptible to background motion
2. Potentially computationally expensive

3.5.1

Statistical Measures

The video component is based on an Optical Flow algorithm which is simple and computationally inexpensive, provides a good measure of overall image drift, and is resilient to variations in image color, brightness and minor movement.

Consider a given frame $P$. Let $\mathrm{shift}(P, h, v)$ be the same frame except with each pixel displaced horizontally by $h$ columns and vertically by $v$ rows. Let $D$ be a binary difference measure that operates across frames. Then, for any two consecutive frames $P_n$ and $P_{n-1}$, we define:

$$\text{optical-flow} = \langle h, v \rangle \quad \text{where } D(P_{n-1}, \mathrm{shift}(P_n, h, v)) \text{ is minimum.}$$
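The definition above can be sketched as an exhaustive search over small shifts. The source does not specify the difference measure $D$ or the search range, so both are assumptions here: $D$ is taken to be the mean absolute pixel difference over the region where the shifted frame overlaps the previous one, and shifts are limited to a hypothetical `max_shift` of 2 pixels. Frames are plain lists of rows of grey levels.

```python
def difference(prev, curr, h, v):
    """Assumed D: mean absolute difference between prev and shift(curr, h, v).

    Only pixels where the shifted frame overlaps prev are compared.
    """
    rows, cols = len(prev), len(prev[0])
    total, count = 0, 0
    for r in range(rows):
        for c in range(cols):
            sr, sc = r - v, c - h     # pixel of curr that lands on (r, c)
            if 0 <= sr < rows and 0 <= sc < cols:
                total += abs(prev[r][c] - curr[sr][sc])
                count += 1
    return total / count if count else float("inf")


def optical_flow(prev, curr, max_shift=2):
    """Return the (h, v) within +/- max_shift that minimizes D."""
    candidates = [(h, v)
                  for h in range(-max_shift, max_shift + 1)
                  for v in range(-max_shift, max_shift + 1)]
    return min(candidates, key=lambda hv: difference(prev, curr, *hv))


# Toy frames: a single bright pixel that moves one column to the right.
prev_frame = [[0] * 5 for _ in range(5)]
prev_frame[2][2] = 255
curr_frame = [[0] * 5 for _ in range(5)]
curr_frame[2][3] = 255
flow = optical_flow(prev_frame, curr_frame)
```

With the sign convention of the definition, the result is the shift that maps the current frame back onto the previous one, so content that moved one column to the right yields a horizontal component of -1.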

For the purposes of rest-detection, the vertical-flow component v is more of a

liability than a benefit. The information it provides is easily corrupted by scan lines

seen in standard video feeds. Additionally the information it provides is redundant

with pitch and tilt information obtainable from a tilt-meter. Therefore we choose to

ignore the vertical flow component and instead define AIMAGE where:

$$\mathrm{AIMAGE} = \mathrm{AVERAGE}(h_1, h_2, \ldots, h_k)$$

where $(h_1, h_2, \ldots, h_k)$ is a window of horizontal flow readings from an optical-flow algorithm.

For the tilt-meter component, we use a window of tilt-readings from a tilt-meter.

Unlike the optical-flow measure, the mean of the window is not informative in this

case because all it tells us is the current tilt of the device. We are not interested in

the current tilt, but in the change in tilt. Therefore we define ATILT, where:


$$\mathrm{ATILT} = \mathrm{STD\ DEV}(r_1, r_2, \ldots, r_k)$$

where $(r_1, r_2, \ldots, r_k)$ is a window of consecutive tilt readings from a tilt-meter.

We hope to build a system where we receive new video and tilt-readings at approximately 10Hz. Therefore we arbitrarily choose a window size of 1 second so that our

AIMAGE and ATILT measures are obtained from at least 10 readings. Readings

from the two measures are never compared to each other numerically, hence we have

no need to normalize the two types of readings.
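The two windowed measures can be sketched as follows. The class name `WindowedMeasures` is hypothetical, the default window of 10 readings corresponds to 1 second at the hoped-for 10 Hz, and the use of the population standard deviation is an assumption, since the source does not say which form of standard deviation is meant.

```python
from collections import deque
from statistics import mean, pstdev


class WindowedMeasures:
    """Keeps the most recent `size` flow and tilt readings."""

    def __init__(self, size=10):
        self.flows = deque(maxlen=size)   # horizontal optical-flow readings h_i
        self.tilts = deque(maxlen=size)   # raw tilt-meter readings r_i

    def update(self, h, r):
        # Appending to a full deque silently drops the oldest reading.
        self.flows.append(h)
        self.tilts.append(r)

    def aimage(self):
        # AIMAGE: average of the horizontal flow readings in the window.
        return mean(self.flows)

    def atilt(self):
        # ATILT: standard deviation of the tilt readings in the window.
        return pstdev(self.tilts)


window = WindowedMeasures(size=4)
for h, r in [(1, 10), (1, 10), (3, 12), (3, 12)]:
    window.update(h, r)
image_measure = window.aimage()
tilt_measure = window.atilt()
```

Because the two measures are computed and thresholded separately, nothing in this sketch needs them to share a unit or scale.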

To guide the design of a video-based rest detector, we performed three experiments

with the hand held device (1) at rest in the palm of a user, (2) translating horizontally

across a room and (3) staying in place but pointing to different objects in the room.

3.5.2

Experiments

1. Hand-held Rest

The device is at rest in the hands of the user. The user holds the device steady,

as if attempting to use it as a pointing device. Over the course of this experiment,

the user made tapping motions on the iPaq, as if attempting to select portions of

the screen or input data. This models the device being used as a pointing device.

2. Horizontal Translation

In this experiment the user carries the device in the palm of his hand and walks

back and forth across a room. Over the course of a 50-second trial, the user walked

a distance of 30 meters. This experiment models the common case when a user is

using the device, but is not stationary.

3. Pitching and Rolling

In this experiment, the device was kept in one place but randomly pointed at

different objects in the room. This models the case when the device exhibits a lot

of motion.

[Figure 3-2 plot. Legend: Pitching and Rolling; Horizontal Translation; Hand-held Rest. Horizontal axis: Time (seconds).]

Figure 3-2: A 50-second trace of AIMAGE with a sliding window size of 1 second

and a sampling rate of 15Hz. A clear difference can be seen between readings at rest

and in motion.

[Figure 3-3 plot. Legend: Pitching and Rolling; Horizontal Translation; Hand-held Rest. Horizontal axis: Time (seconds).]

Figure 3-3: A 50-second trace of the output from ATILT with a sliding window size of 1 second and a sampling rate of 15Hz. It is not easy to differentiate

between rest and horizontal translation.


Table 3.2: Performance of AIMAGE and ATILT in different rest conditions. The

sliding window size is 1 second, and the sampling frequency is 15Hz.

| AIMAGE | Average Reading | Standard Deviation |
|---|---|---|
| hand-held rest | 0.19 | 0.18 |
| horizontal translation | 9.87 | 8.30 |
| pitching and rolling | 30.06 | 6.86 |

| ATILT | Average Reading | Standard Deviation |
|---|---|---|
| hand-held rest | 7.57 | 1.38 |
| horizontal translation | 7.74 | 1.70 |
| pitching and rolling | 52.68 | 9.01 |

3.5.3

Observations

Figure 3-2 and Figure 3-3 compare the performance of vision and tilt techniques in

each of these situations. From the graphs, we can make the following observations:

I. The AIMAGE technique makes a sharp distinction between cases at rest and

in motion.

The readings for AIMAGE when the device is in motion are almost always

higher than the maximum values attained for AIMAGE when the device is at

hand-held rest. For horizontal translation, AIMAGE exhibits momentary low

readings that are caused by natural moments of rest in the gait of the user.

This suggests that the usage of an initial wait-period of a short duration will be

required to filter out conditions of momentary rest. Table 3.2 provides additional

statistics on these experiments.

II. ATILT cannot detect horizontal translation

From Figure 3-3 and Table 3.2, we see that it is difficult to differentiate between

horizontal motion and rest based on tilt-meter readings alone. This means that

tilt-meters are not useful in identifying rest when the user is using the device

and walking around. However, ATILT is effective in robustly detecting gross

changes in device orientation.


3.6

Integration of Video and Tilt

Given our preliminary experiments, it is possible for us to define a two-sided range

LOW for readings from both AIMAGE and ATILT that corresponds to readings

when the device is at hand-held rest. Given our definition of LOW, we can define a

one-sided range called HIGH (more accurately, NOT-LOW) that contains all values

greater than the LOW range. Therefore, at all times our algorithm is given video

and tilt readings that are from $\{low, high\} \times \{low, high\}$ and asked to decide if it is

at instantaneous rest or instantaneous motion. The algorithm believes itself to be at

overall rest after a prolonged period of instantaneous rest.

The cases where video and tilt data corroborate each other are easy to label. If both AIMAGE and ATILT readings are in the low range, then it is likely that we are in a period of instantaneous rest; if both readings are in the high range, then we are in a period of instantaneous motion. But what happens when video and tilt readings do not agree with each other?

3.6.1

Case: AIMAGE low, ATILT high

Consider the case where AIMAGE is low, but ATILT is high. Initially, we imagined

that such a situation could be produced by 'hoodwinking' the video by presenting

it with a dark or featureless surface. However, we found that the design of the optical flow algorithm is such that the output of AIMAGE in this case is not zero but

random. Intuitively this means that the algorithm cannot reliably pick one value of

flow over another, and hence becomes sensitive to very minute differences in image

quality. Therefore, switching off the lights or blocking the camera lens does not produce low values for AIMAGE. Similarly, we found that even apparently featureless

surfaces such as blank white-boards contained enough variation in pixel values for the

algorithm to detect motion.

In fact, the only way we were able to fool AIMAGE into making a false positive

error for rest detection was to affix a small highly-detailed image (in our case, a circuit

board) a short distance in front of the camera lens. The effort required to fool the


camera in this way suggests that this situation is unlikely to occur often. However,

if we did face the case AIMAGE low, ATILT high, are we to trust the video data

and assume rest, or are we to trust the tilt data and assume motion?

We observe that, unlike a camera, it is hard to artificially force the tilt-meter to

generate high readings without actually moving the device. While a low reading

from ATILT may be ambiguous, a high reading from ATILT generally means that

the device is undergoing acceleration (and hence motion). Hence the correct decision

in this situation is to trust the tilt-meters and assume that we are in instantaneous

motion.

3.6.2

Case: AIMAGE high, ATILT low

What happens when AIMAGE readings are high, but ATILT readings are low?

Are we at rest, or are we in motion?

Horizontal translation is an example of an instantaneous motion situation where

ATILT readings are low but AIMAGE readings are high. Since horizontal motion

models the case where the user is walking and using the device, we cannot assume

that this is an unlikely situation. Therefore this set of readings may mean that we

are in motion.

However, AIMAGE readings may also go high due to momentary occlusions by

parts of the user's body; or by large moving portions of the background. One may

attempt to filter out background motion by using more complicated techniques than

optical flow, but at the most fundamental level video alone cannot decide whether

the device or the scene is moving. Video is also sensitive to background noise. Therefore this set of readings

may also mean that we are at rest.

So unlike the AIMAGE low, ATILT high case, we cannot argue for trusting one

device over another. How do we resolve this situation? Essentially, our system can

either decide that the device is at rest (and run the risk of making a false positive

error) or decide that the device is in motion (and thereby risk a false negative error).

Looking at the examples given in Section 3.1, it can be seen that the cost associated

with a false positive error is greater. Erroneously deciding that the device is at rest could lead to unnecessary usage of expensive resources, wildly inaccurate position estimations, and failed calibrations. In contrast, assuming that we are in motion means only that higher-level applications are forced to make looser assumptions about their context. It is therefore preferable to err on the side of detecting too much motion rather than too much rest, and the system in this situation should decide that it is in instantaneous motion.[2]

Table 3.3: Integration of Video and Tilt Readings. Given tilt (ATILT) and video (AIMAGE) readings, this table tells us whether our algorithm treats the situation as instantaneous rest or instantaneous motion. The algorithm transitions into overall rest if and only if it is in instantaneous rest for an application-specified period of time.

                | AIMAGE low                            | AIMAGE high
    ------------+---------------------------------------+--------------------------------------
    ATILT low   | REST                                  | MOTION (Conservative rest detection)
    ATILT high  | MOTION (Video is being 'hoodwinked')  | MOTION

The complete integration of video AIMAGE and tilt-meter ATILT readings is

therefore as given in Table 3.3.

The REST and MOTION entries tell us whether our algorithm

interprets the event as instantaneous rest or instantaneous motion.
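The decision rule of Table 3.3 can be sketched in C as follows. This is a minimal illustration rather than the code of our system: the `thresholds` structure, the function name `classify_reading`, and the convention that a reading at or above its threshold counts as "high" are all assumptions made for the example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Instantaneous state derived from a single <m, t> reading pair. */
enum instantaneous_state { INSTANTANEOUS_REST, INSTANTANEOUS_MOTION };

/* Hypothetical thresholds: a reading at or above its threshold is "high". */
struct thresholds {
    double image_high;  /* threshold for the AIMAGE (video) metric */
    double tilt_high;   /* threshold for the ATILT (tilt-meter) metric */
};

/*
 * Table 3.3 as code: the device is in instantaneous rest only when BOTH
 * metrics are low. A high tilt reading is always trusted, and a high video
 * reading is conservatively treated as motion.
 */
enum instantaneous_state classify_reading(double m, double t,
                                          const struct thresholds *th)
{
    assert(th != NULL);

    bool image_is_high = m >= th->image_high;
    bool tilt_is_high = t >= th->tilt_high;

    if (image_is_high || tilt_is_high)
        return INSTANTANEOUS_MOTION;
    return INSTANTANEOUS_REST;
}
```

Because three of the four cells map to motion, the table reduces to a single logical OR. The asymmetry between the two sensors lies in the justification for each cell, not in the rule itself.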

3.6.3 Algorithm

Let <m, t> be instantaneous readings of the AIMAGE and ATILT metrics.

Given these, we can at every instant of time determine if our instantaneous-state is

instantaneous-rest or instantaneous-motion. Our rest-detection algorithm is a simple

state machine that exists in one of three states: MOTION, WAIT and REST. We

assume that the algorithm is initially in the MOTION state, but transitions to the

REST state after being in instantaneous rest for an application-specified period of

time. The algorithm exits the REST state when it encounters an instance of instantaneous

motion. We maintain a state variable called rest-counter that counts the

number of at-rest intervals the device has experienced since rebooting. Applications

that poll this system can use the current state of the rest-algorithm and the value of

the rest-counter to decide if they have been at rest since the last poll.

[2] Our system could also potentially ignore this conflict between video and tilt and do nothing at all. However, the argument for conservative rest detection means that we are safer believing ourselves to be in motion.

The algorithm is outlined in Figure 3-4.

Figure 3-4: Algorithm

    rest-counter = 0

    SWITCH instantaneous-state
        CASE instantaneous-motion:
            state = MOTION
        CASE instantaneous-rest:
            SWITCH state
                CASE MOTION:
                    state = WAIT
                CASE WAIT:
                    IF waited < init. wait period
                        state = WAIT
                    ELSE
                        state = REST
                        rest-counter++
                    ENDIF
                CASE REST:
                    state = REST
            END
    END
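The state machine of Figure 3-4 might be written in C roughly as follows. This is a sketch under stated assumptions, not the code of our daemon: the names `rest_machine`, `rest_machine_step` and `rested_since_last_poll` are hypothetical, and the wait period is measured in samples rather than seconds to keep the example self-contained.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

enum overall_state { MOTION, WAIT, REST };

struct rest_machine {
    enum overall_state state;
    unsigned waited;        /* consecutive instantaneous-rest samples so far */
    unsigned wait_samples;  /* application-specified wait period, in samples */
    unsigned rest_counter;  /* number of at-rest intervals entered so far */
};

void rest_machine_init(struct rest_machine *rm, unsigned wait_samples)
{
    assert(rm != NULL);
    rm->state = MOTION;     /* the algorithm starts in the MOTION state */
    rm->waited = 0;
    rm->wait_samples = wait_samples;
    rm->rest_counter = 0;
}

/* Feed one instantaneous-state sample into the machine. */
void rest_machine_step(struct rest_machine *rm, bool instantaneous_rest)
{
    if (!instantaneous_rest) {          /* any motion resets the machine */
        rm->state = MOTION;
        rm->waited = 0;
        return;
    }
    switch (rm->state) {
    case MOTION:
        rm->state = WAIT;
        rm->waited = 1;
        break;
    case WAIT:
        if (rm->waited < rm->wait_samples) {
            rm->waited++;               /* keep waiting */
        } else {
            rm->state = REST;           /* wait period has elapsed */
            rm->rest_counter++;
        }
        break;
    case REST:
        break;                          /* remain at rest */
    }
}

/*
 * Polling helper: true if the device is at rest now, or entered a rest
 * interval since the caller last polled. Updates *last_counter.
 */
bool rested_since_last_poll(const struct rest_machine *rm,
                            unsigned *last_counter)
{
    bool rested = rm->state == REST || rm->rest_counter != *last_counter;
    *last_counter = rm->rest_counter;
    return rested;
}
```

With `wait_samples` set to 3, the machine enters REST on the fourth consecutive instantaneous-rest sample. An application that polls infrequently can still learn of a short rest interval it missed, because the rest-counter will have advanced even if the state has already returned to MOTION.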

3.7 Implementation

We intend for the implementation of our rest-detection system to meet the following

high-level goals:

3.7.1 Goals

* Application Programmability

  The notion of 'rest' varies from application to application. An energy conservation application would consider itself at rest when unused for a few seconds. A screensaver application may consider itself at rest only if unused for a few minutes. Hence it is important that applications be able to define what 'rest' means to them.

* Ease of Development

  Our aim is that the notions of rest and rest-detection be simply and clearly defined, and that applications be written easily against a rest-detection mechanism. Hence it is important that the rest-detection mechanism be simple to integrate into applications.

* Local Code

  On today's hand-held computers, power, CPU and memory are scarce resources. Many visual algorithms are resource hungry. Extensive computation also cannot simply be shipped to a remote server, because wireless network access is a power-hungry operation. Hence it is important that our visual rest-detection system be small, local and computationally inexpensive.

* Push/Pull Mechanism

  In certain applications, it may suffice to query a system variable to determine if the device is at rest. Other applications may require notification when a device comes to rest. Therefore our rest-detection mechanism should support both polling and call-back mechanisms.

The rest-detection system is written in C, with the video devices being accessed

via a Video4Linux API. The test hardware platform is an iPaq 3650 running Familiar

Linux over an ARM processor. The system defines a single object file with a simple

header file rest.h that defines the API. The API consists of a struct called a RestDetector and simple functions that allow an application to control sampling rates, define

rest-detection parameters and utilise the callbacks and thread-safe polling mechanisms provided by the system. The principal component of the system is a daemon

that reads video and tilt data from the underlying Linux file-system and executes the

algorithms discussed earlier in this section. We have been able to run this daemon at

speeds of up to 15 Hz on our hardware platform.

A simple rest-aware application may therefore be written as shown in Table 3.4.

3.7.2 Software Architecture

The software components of the system are:

Device Layer

This layer comprises the video and tilt-meter devices. The tilt-device is built from an Analog Devices

ADXL202E two-axis static accelerometer. The video device is composed of a CCD

device controlled by an FPGA. These devices are part of the Mercury backpack and

are presented in Linux as files in the /dev/ filesystem. They provide device-level file

I/O capabilities.

Data Presentation Layer

For the purposes of developing and experimenting with different rest-detection mechanisms, we found it useful to abstract over the low-level Linux file descriptors with

a more meaningful API. The API exposes and maintains useful sensor information.

The API provides higher-level layers the ability to change the frequency with which

the low-level devices are sampled. As seen in Chapter 4, this changes the fundamental

statistical nature of the readings from the devices.

For video, the API defines callbacks which can be used as hooks for higher-level

layers. A higher-layer application may thus be notified every time a new frame is

received. To control resource usage the higher layer can also control the frequency

with which the device is polled, and the maximum frequency at which callbacks are

made.
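One way to cap the callback frequency is to record the time of the last callback and suppress any frame that arrives too soon after it. The sketch below illustrates that idea only. The `frame_notifier` structure, the callback signature and the caller-supplied timestamp are assumptions for the example and are not taken from our API.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical callback type: receives the new frame and a user pointer. */
typedef void (*frame_callback)(const unsigned char *frame, size_t len,
                               void *user);

struct frame_notifier {
    frame_callback cb;
    void *user;
    double min_interval_s;  /* 1 / maximum callback frequency */
    double last_call_s;     /* time of the most recent callback */
    int has_called;         /* 0 until the first callback has been made */
};

void frame_notifier_init(struct frame_notifier *n, frame_callback cb,
                         void *user, double max_hz)
{
    assert(n != NULL && cb != NULL && max_hz > 0.0);
    n->cb = cb;
    n->user = user;
    n->min_interval_s = 1.0 / max_hz;
    n->last_call_s = 0.0;
    n->has_called = 0;
}

/*
 * Called by the sampling loop for every frame read from the device.
 * Returns 1 if the callback was invoked, 0 if the frame was suppressed
 * because the previous callback was too recent.
 */
int frame_notifier_on_frame(struct frame_notifier *n,
                            const unsigned char *frame, size_t len,
                            double now_s)
{
    if (n->has_called && now_s - n->last_call_s < n->min_interval_s)
        return 0;
    n->last_call_s = now_s;
    n->has_called = 1;
    n->cb(frame, len, n->user);
    return 1;
}

/* Example callback: counts delivered frames through the user pointer. */
void count_frames(const unsigned char *frame, size_t len, void *user)
{
    (void)frame;
    (void)len;
    ++*(int *)user;
}
```

Passing the timestamp in as a parameter keeps the throttle independent of any particular clock source, which also makes it easy to exercise without hardware.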

Table 3.4: A Sample Rest-Aware Application

    #include <stdio.h>
    #include "rest.h"

    // Statically allocated detector (C99 compound literal)
    struct RestDetector *rd = &(struct RestDetector){0};

    int main(int argc, char **argv) {
        // The application decides on the sampling
        // frequency and initial wait period
        // for the rest-detector
        restinit(rd, 15, 1.0);            // 15Hz sampling, 1.0 second wait

        // The application specifies the function
        // that will be called at changes in state
        // (callbackfn is defined by the application; its definition is omitted here)
        restsetcallback(rd, callbackfn);  // callbackfn will be called at rest
        reststart(rd);                    // start the system

        // The rest detector may also be polled
        if (rd->RESTSTATE == REST) {
            // the device is currently at rest
        }

        reststop(rd);                     // stop the system
        return 0;
    }

Figure 3-5: Software Architecture of the Rest-Detection System

Statistics Package

In the absence of a small, light statistics library that was compiled for the ARM

processor, we wrote a pared-down statistics package that implements a sliding-window

data structure (akin to a ring-buffer). The package provides utility functions that may

be used to determine the mean, median and standard deviation of the sliding window.

The size of the sliding window may be dynamically adjusted. The statistics package

has proved useful for both video and tilt data.
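A sliding window of this kind can be sketched in C as a fixed-capacity ring buffer from which the most recent samples are selected. The code below is an illustration only, not our statistics package: the capacity of 64, the `window_*` function names and the choice to return 0.0 for an empty window are assumptions. It computes the variance and leaves the square root to the caller, so that the example does not depend on the math library. The standard deviation is the square root of the returned value.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define WINDOW_CAPACITY 64  /* hypothetical upper bound on the window size */

struct sliding_window {
    double samples[WINDOW_CAPACITY];  /* ring buffer of recent samples */
    size_t head;    /* index at which the next sample will be written */
    size_t stored;  /* number of valid samples in the ring */
    size_t size;    /* current window length, 1..WINDOW_CAPACITY */
};

void window_resize(struct sliding_window *w, size_t size)
{
    assert(size >= 1 && size <= WINDOW_CAPACITY);
    w->size = size;  /* older samples stay in the ring, so growing is cheap */
}

void window_init(struct sliding_window *w, size_t size)
{
    memset(w, 0, sizeof *w);
    window_resize(w, size);
}

void window_push(struct sliding_window *w, double x)
{
    w->samples[w->head] = x;
    w->head = (w->head + 1) % WINDOW_CAPACITY;
    if (w->stored < WINDOW_CAPACITY)
        w->stored++;
}

/* Copy the samples inside the window, newest first; return their count. */
static size_t window_copy(const struct sliding_window *w, double *out)
{
    size_t n = w->stored < w->size ? w->stored : w->size;
    for (size_t i = 0; i < n; i++)
        out[i] = w->samples[(w->head + WINDOW_CAPACITY - 1 - i) % WINDOW_CAPACITY];
    return n;
}

double window_mean(const struct sliding_window *w)
{
    double buf[WINDOW_CAPACITY], sum = 0.0;
    size_t n = window_copy(w, buf);
    for (size_t i = 0; i < n; i++)
        sum += buf[i];
    return n ? sum / (double)n : 0.0;
}

/* Population variance of the window. */
double window_variance(const struct sliding_window *w)
{
    double buf[WINDOW_CAPACITY], mean = window_mean(w), ss = 0.0;
    size_t n = window_copy(w, buf);
    for (size_t i = 0; i < n; i++)
        ss += (buf[i] - mean) * (buf[i] - mean);
    return n ? ss / (double)n : 0.0;
}

static int compare_doubles(const void *a, const void *b)
{
    double x = *(const double *)a, y = *(const double *)b;
    return (x > y) - (x < y);
}

double window_median(const struct sliding_window *w)
{
    double buf[WINDOW_CAPACITY];
    size_t n = window_copy(w, buf);
    if (n == 0)
        return 0.0;
    qsort(buf, n, sizeof buf[0], compare_doubles);
    return n % 2 ? buf[n / 2] : 0.5 * (buf[n / 2 - 1] + buf[n / 2]);
}
```

Keeping the full ring regardless of the current window length means that enlarging the window immediately exposes older samples, at the cost of a fixed memory footprint.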

Rest-Detector

This is the layer that implements the algorithms discussed earlier in this section. At

its lower boundary it is a consumer of video and tilt information from the data presentation layer. At its higher boundary it presents a simple interface to applications

that allows for control of sampling rates, definition of rest-detection parameters and