CMPS 5433 – Parallel Algorithms Dr. Ranette Halverson Chapter 1


CMPS 5433 – Parallel Algorithms

Dr. Ranette Halverson

TEXT: Structured Parallel Programming: Patterns for Efficient Computation

M. McCool, A. Robison, J. Reinders

Chapter 1

1

Introduction

• Parallel? Sequential?

• In general, what does Sequential mean? Parallel?

• Is Parallel always better than Sequential?

• Examples??

• Parallel resources?

• How Efficient is Parallel?

• How can you measure Efficiency?

2

Parallel Computing Features

• Vector instructions

• Multithreaded cores

• Multicore processors

• Multiple processors

• Graphics engines

• Parallel co-processors

• Pipelining

• Multi-tasking

Automatic Parallelization vs. Explicit Parallel Programming

3

All Modern Computers are Parallel

• All programmers must be able to program for parallel computers!!

• Design & Implement

• Efficient, Reliable, Maintainable

• Scalable

• Will try to avoid hardware issues…

4

Patterns for Efficient Computation

• Patterns: valuable algorithmic structures commonly seen in efficient parallel programs

• AKA: algorithm skeletons

• Examples of Patterns in Sequential Programming?

• Linear search

• Recursion ~~ Divide-and-Conquer

• Greedy Algorithm

5

Goals of This Book

• Capture Algorithmic Intent

• Avoid mapping algorithms to particular hardware

• Focus on

• Performance

• Low-overhead implementation

• Achieving efficiency & scalability

• Think Parallel

6

Scalable

• Scalable: ability to efficiently apply an algorithm to ‘any’ number of processors

• Considerations:

• Bottleneck: situation in which productive work is delayed due to limited resources relative to the amount of work; congestion occurring when work arrives more quickly than it can be processed

• Overhead: cost of implementing a (parallel) algorithm that is not part of the solution itself – often due to the distribution or collection of data & results

7

Serialization (1.1)

• Act of putting set of operations into a specific order

• Serial Semantics & Serial Illusion

• But were computers really serial?

• Have we become overly dependent on sequential strategy?

• Pipelining, Multi-tasking

8

Can we ignore parallel strategies?

• Improved Performance

• The Past

• The Future

• Why is my new faster computer not faster??

• Deterministic algorithms

• End result vs. Intermediate results

• Timing issues – Relative vs. Absolute

9

Approach of THIS Book

• Structured Patterns for parallelism

• Eliminate non-determinism as much as possible

10

Serial Traps

• Programmers think serial (sequential)

• List of instructions, executed in serial order

• Standard programming training

• Serial Trap: Assumption of serial ordering

Consider:

A = B + 7

Read Z

C = X * 2

Does order matter??

A = B + 7

Read A

B = X * 2

11

Example:

Search web for a particular search phrase

What does parallel_for imply? Are both correct? Will the results differ? What about time?

for (i = 0; i < num_web_sites; ++i)
    search(search_phrase, website[i]);

parallel_for (i = 0; i < num_web_sites; ++i)
    search(search_phrase, website[i]);
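A minimal C++ sketch of what parallel_for implies, assuming a hypothetical parallel_for helper built on std::thread and a hypothetical search() that only checks whether a page's text contains the phrase. Because each iteration writes only its own result slot, the serial and parallel loops produce the same results; only the timing differs.

```cpp
#include <algorithm>
#include <cstddef>
#include <functional>
#include <string>
#include <thread>
#include <vector>

// Hypothetical helper: runs body(i) for every i in [0, n) by splitting the
// range into contiguous blocks, one std::thread per block. It is only correct
// when the iterations are independent of each other.
void parallel_for(std::size_t n, const std::function<void(std::size_t)>& body,
                  std::size_t num_threads = 4) {
    if (n == 0) return;
    if (num_threads == 0) num_threads = 1;
    std::size_t block = (n + num_threads - 1) / num_threads;  // ceiling division
    std::vector<std::thread> workers;
    for (std::size_t start = 0; start < n; start += block) {
        std::size_t end = std::min(start + block, n);
        workers.emplace_back([start, end, &body] {
            for (std::size_t i = start; i < end; ++i) body(i);
        });
    }
    for (auto& t : workers) t.join();  // wait for all blocks to finish
}

// Hypothetical search(): does the page text contain the phrase?
bool search(const std::string& phrase, const std::string& page) {
    return page.find(phrase) != std::string::npos;
}

// Each iteration writes only found[i], so the answer matches the serial loop
// regardless of how the threads are scheduled.
std::vector<bool> search_all(const std::string& phrase,
                             const std::vector<std::string>& pages) {
    std::vector<char> found(pages.size(), 0);  // char, not bool (see note below)
    parallel_for(pages.size(), [&](std::size_t i) {
        found[i] = search(phrase, pages[i]) ? 1 : 0;
    });
    return std::vector<bool>(found.begin(), found.end());
}
```

The results are collected in a std::vector<char> while the threads run because elements of std::vector<bool> share storage bits, so concurrent writes to different elements are not safe.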

12

Can we apply “parallel” to the previous example?? Results?

parallel_do

{

A = B + 7

Read Z

C = X * 2

}

parallel_do

{

A = B + 7

Read A

B = X * 2

}

13

Performance (1.2)

Complexity of a Sequential Algorithm

• Time complexity

• Big Oh

• What do we mean?

• Why do we measure performance?

Complexity of a Parallel Algorithm

• Time

• How can we compare?

• Work

• How do we split problems across processors?

14

Time Complexity

• Doesn’t really mean time

• Assumptions about measuring “time”

• All instructions take same amount of time

• Computers are same speed

• Are these assumptions true?

• So, what is Time Complexity, really?

15

Example: Payroll Checks

Suppose we want to calculate & print 1000 payroll checks.

Each employee’s pay is independent of others.

parallel_for (i = 0; i < num_employees; ++i)

Calc_Pay(empl[i]);

Any problems with this?

16

Payroll Checks (cont’d)

• What if all employee data is stored in an array? Can it be accessed in parallel?

• What about printing the checks?

• What if computer has 2000 processors?

• Is this application a good candidate for a parallel program?

• How much faster can we do the job?

17

Example: Sum 1000 Integers

• How can you possibly sum a single list of integers in parallel?

• What is the optimal number of processors to use?

• Consider number of operations.
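One common answer, sketched in C++ under the assumption that std::async tasks stand in for processors: give each of p tasks a contiguous chunk, sum the chunks concurrently, then combine the p partial sums. With n numbers, each task does about n/p additions and combining the partial sums serially adds about p more, so n/p + p is smallest near p ≈ √n (about 32 for n = 1000), before counting any task-startup overhead. The name parallel_sum is illustrative.

```cpp
#include <algorithm>
#include <cstddef>
#include <future>
#include <numeric>
#include <vector>

// Splits v into `parts` contiguous chunks, sums each chunk in its own
// std::async task, then combines the partial sums serially.
long long parallel_sum(const std::vector<int>& v, std::size_t parts = 4) {
    if (v.empty()) return 0;
    if (parts == 0) parts = 1;
    std::size_t chunk = (v.size() + parts - 1) / parts;  // ceiling division
    std::vector<std::future<long long>> partials;
    for (std::size_t start = 0; start < v.size(); start += chunk) {
        std::size_t end = std::min(start + chunk, v.size());
        partials.push_back(std::async(std::launch::async, [&v, start, end] {
            return std::accumulate(v.begin() + start, v.begin() + end, 0LL);
        }));
    }
    long long total = 0;
    for (auto& f : partials) total += f.get();  // serial combine step
    return total;
}
```

Combining the partial sums with a tree instead of a serial loop (see Reduction) lowers the combine cost from about p to about log p steps.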

18

Actual Time vs. Number of Instructions

Considerations of parallel processing

• Communication

• How is data distributed? Results compiled?

• Shared memory vs. Local memory

• One computer vs. Network (e.g. SETI Project)

• Work

19

SPAN = Critical Path

• The time required to perform the longest chain of tasks that must be performed sequentially

• SPAN limits the speedup that can be achieved via parallelism

• See Figures 1.1 & 1.2

• Provides a basis for optimization
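To make span concrete, here is a small C++ sketch that computes work (total cost of all tasks) and span (longest dependency chain) for a task graph. The representation is an assumption for illustration: tasks are numbered in topological order, cost[t] is task t's time, and deps[t] lists the tasks that t must wait for.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

struct WorkSpan {
    long long work;  // T1: total time of all tasks (one processor)
    long long span;  // T_inf: length of the critical path (unlimited processors)
};

// Assumes every entry of deps[t] is smaller than t (topological numbering).
WorkSpan work_and_span(const std::vector<long long>& cost,
                       const std::vector<std::vector<std::size_t>>& deps) {
    std::vector<long long> finish(cost.size(), 0);  // earliest finish time per task
    WorkSpan ws{0, 0};
    for (std::size_t t = 0; t < cost.size(); ++t) {
        long long start = 0;  // a task starts when its last dependency finishes
        for (std::size_t d : deps[t]) start = std::max(start, finish[d]);
        finish[t] = start + cost[t];
        ws.work += cost[t];
        ws.span = std::max(ws.span, finish[t]);
    }
    return ws;
}
```

For four tasks where task 0 (cost 1) precedes tasks 1 (cost 3) and 2 (cost 2), both of which precede task 3 (cost 1), work = 7 and span = 1 + 3 + 1 = 5: no schedule can finish in fewer than 5 time units, so the speedup is at most 7/5 = 1.4.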

20

Shared Memory & Locality (of reference)

• Shared memory model – book assumption

• Communication is simplified

• Causes bottlenecks ~~ Why? Locality

• Locality

• Memory (data) accesses tend to be close together

• Accesses near each other (time & space) tend to be cheaper

• Communication – best when none

• More processors is not always better

21

Amdahl’s Law (Argument)

• Proposed in 1967

• Provides an upper bound on the speedup attainable via parallel processing

• Sequential vs. Parallel parts of a program

• Sequential portion limits parallelism
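Amdahl's Law is usually written with f as the fraction of the serial running time that can be parallelized: speedup S(p) = 1 / ((1 - f) + f / p) on p processors. A small C++ helper (the name amdahl_speedup is only illustrative) makes the bound easy to explore.

```cpp
#include <stdexcept>

// Amdahl's Law: f = parallelizable fraction of the serial running time,
// p = number of processors. The sequential part (1 - f) is not sped up,
// so the speedup can never exceed 1 / (1 - f), however large p gets.
double amdahl_speedup(double f, double p) {
    if (f < 0.0 || f > 1.0 || p < 1.0)
        throw std::invalid_argument("need 0 <= f <= 1 and p >= 1");
    return 1.0 / ((1.0 - f) + f / p);
}
```

For example, with f = 0.9, 10 processors give 1 / (0.1 + 0.09) ≈ 5.3, and even unlimited processors cannot exceed 1 / 0.1 = 10.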

22

Work-Span Model

3 components to consider when parallelizing

• Total Work

• Span

• Communication

Example: Consider a program with 1000 operations

• Are ops independent?

• OFTEN, parallelizing adds to the total number of operations to be performed
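The work-span model turns these components into bounds on running time. Writing T_1 for the total work (time on one processor), T_∞ for the span, and T_P for the time on P processors, the standard bounds are as follows (communication cost is ignored here):

```latex
T_P \ge \frac{T_1}{P} \quad (\text{work law}), \qquad T_P \ge T_\infty \quad (\text{span law})

\text{speedup} = \frac{T_1}{T_P} \le \min\!\left(P,\ \frac{T_1}{T_\infty}\right)

T_P \le \frac{T_1 - T_\infty}{P} + T_\infty \quad (\text{Brent's lemma, greedy scheduling})
```

For 1000 completely independent operations, T_1 = 1000 and T_∞ = 1, so the speedup can grow up to min(P, 1000). If parallelizing adds operations, T_1 grows and so does the T_1/P term in every bound.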

23

Reduction

• Communication strategy for “reducing” information from n workers to 1

• Takes O(log p) steps, where p is the number of workers/processors

• Example: 16 processors hold 1 integer each & the integers need to be added together.

• How can we effectively do this?
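One effective answer is a tree (pairwise) reduction: in each round, pairs of processors add their values, halving the number of partial sums, so 16 values need log2(16) = 4 rounds instead of 15 sequential additions (the total number of additions is still 15). The C++ below only simulates the rounds serially; the additions inside one round are the ones that could run in parallel. The name tree_reduce is illustrative.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Simulates a tree (pairwise) reduction. In the round with a given stride,
// worker i adds in the value held by worker i + stride; the additions within
// one round touch disjoint elements, so they could all run at the same time.
// Returns {sum, number of rounds}.
std::pair<long long, int> tree_reduce(std::vector<long long> vals) {
    int rounds = 0;
    for (std::size_t stride = 1; stride < vals.size(); stride *= 2) {
        for (std::size_t i = 0; i + stride < vals.size(); i += 2 * stride)
            vals[i] += vals[i + stride];
        ++rounds;
    }
    return {vals.empty() ? 0LL : vals[0], rounds};
}
```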

24

Broadcast

• The “opposite” of Reduction

• Communication strategy for distributing data from 1 worker to n workers

• Example: 1 processor holds 1 integer & the integer needs to be distributed to the 15 others

• How can we effectively do this?
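The mirror image of the tree reduction is a recursive-doubling broadcast: each round, every processor that already has the value sends it to one that does not, so the number of informed processors doubles (1, 2, 4, 8, 16) and all 16 are reached in 4 rounds rather than 15 sequential sends. The C++ sketch simulates the rounds serially; worker 0 is assumed to be the source, and broadcast is a hypothetical name.

```cpp
#include <cstddef>
#include <optional>
#include <vector>

// Simulates a recursive-doubling broadcast from worker 0. Returns the number
// of rounds; the sends within one round are independent of each other.
int broadcast(std::vector<std::optional<int>>& workers, int value) {
    if (workers.empty()) return 0;
    workers[0] = value;
    int rounds = 0;
    for (std::size_t have = 1; have < workers.size(); have *= 2) {
        // Workers 0 .. have-1 each send to worker i + have (if it exists).
        for (std::size_t i = 0; i < have && i + have < workers.size(); ++i)
            workers[i + have] = workers[i];
        ++rounds;
    }
    return rounds;
}
```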

25

Load Balancing

• A process that ensures all processors have approximately the same amount of work/instructions

• To ensure processors are not idle
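A simple static form of load balancing, assuming every work item costs the same: divide n items among p workers so that block sizes differ by at most one. The helper balanced_blocks is a hypothetical illustration; when item costs vary, dynamic schemes such as shared work queues or work stealing are typically used instead.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Static load balancing for n equal-cost items on p workers: block sizes
// differ by at most one item. Returns one [begin, end) range per worker.
std::vector<std::pair<std::size_t, std::size_t>>
balanced_blocks(std::size_t n, std::size_t p) {
    std::vector<std::pair<std::size_t, std::size_t>> blocks;
    if (p == 0) return blocks;                // no workers, no blocks
    std::size_t base = n / p, extra = n % p;  // first `extra` workers get one more
    std::size_t begin = 0;
    for (std::size_t w = 0; w < p; ++w) {
        std::size_t size = base + (w < extra ? 1 : 0);
        blocks.emplace_back(begin, begin + size);
        begin += size;
    }
    return blocks;
}
```

For example, balanced_blocks(10, 3) yields [0, 4), [4, 7), [7, 10): blocks of 4, 3, and 3 items, so no worker waits on another for more than one item's worth of time.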

26

Granularity

• A measure of the degree to which a problem is broken down into smaller parts, particularly for assigning each part to a separate processor in a parallel computer

• Coarse granularity: Few parts, each relatively large

• Fine granularity: Many parts, each relatively small

27

Pervasive Parallelism & Hardware Trends (1.3.1)

Moore’s Law – 1965, Gordon Moore (co-founder of Intel)

• Number of transistors on an integrated circuit (chip) doubles every 2 years (approximately)

• Until 2004 – faster transistor switching speeds allowed higher clock rates, which increased performance

• Note Figures 1.3 & 1.4 in text

• BUT around 2003, clock rates stopped increasing (plateau near 3 GHz)
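As a rough worked example of the doubling rule (taking the 2-year period above at face value, with N_0 the starting transistor count and t in years):

```latex
N(t) \approx N_0 \cdot 2^{t/2}, \qquad N(10) \approx N_0 \cdot 2^{5} = 32\,N_0
```

So a decade brings roughly 32 times as many transistors, even after clock rates stopped rising; the extra transistors now go mostly into more cores and wider vector units rather than a faster single core.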

28

Walls limiting single processor performance

• Power wall: power consumption grows nonlinearly with clock speed

• Instruction-level parallelism wall: little new (automatic) low-level parallelism left to exploit – only constant-factor increases

• Superscalar instructions

• Very Large Instruction Word (by compiler)

• Pipelining – max 10 stages

• Memory wall: difference between processor & memory speeds

• Latency

• Bandwidth

29

Historical Trends (1.3.2)

• Early Parallelism – WW2

• Newer HW features

  • Larger word size

  • Superscalar

  • Vector

  • Multithreading

  • Parallel ALUs

  • Pipelines

  • GPUs

  • Virtual memory

  • Prefetching

  • Cache

• Benchmarks

  • Standard programs used to compare performance on different computers or to demonstrate a computer’s capabilities

30

Explicit Parallel Programming (1.3.3)

• Serial Traps: unnecessary assumptions deriving from serial programming & execution

• Programmer assumes serial execution, so gives no consideration to possible parallelism

• Later, it may not be possible to parallelize the code

• Automatic Parallelism ~ Different strategies

• Fully automatic – no help from the programmer – done by the compiler

• Parallel constructs – programmer-supplied, “optional” parallelism

31

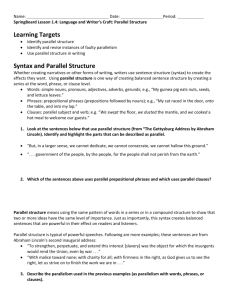

Examples of Automatic Parallelism

Consider the following simple program:

1. A = B + C

2. D = 5 * X + Y

3. E = P + Z / 7

4. F = B + X

5. G = A * 10

Rule 1: Parallelize a block of sequential instructions with no repeated variables.

Rule 2: Parallelize any block of sequential instructions if the repeated variables don’t change (never appear on the left side of an assignment)

What about instruction 5? What can we do?
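Rules 1 and 2 are informal versions of what are commonly called Bernstein's conditions: two statements can run in parallel if neither writes a variable that the other reads or writes (sharing read-only variables is fine). The C++ sketch below encodes each statement by hand as its read and write sets; the Stmt type and the function names are illustrative, and a real compiler would have to derive these sets itself.

```cpp
#include <set>
#include <string>

// A statement summarized by the variables it reads and writes,
// e.g. "A = B + C" reads {B, C} and writes {A}.
struct Stmt {
    std::set<std::string> reads;
    std::set<std::string> writes;
};

// True if the two sets share at least one variable.
bool overlaps(const std::set<std::string>& x, const std::set<std::string>& y) {
    for (const auto& v : x)
        if (y.count(v)) return true;
    return false;
}

// Bernstein's conditions: no write/read, read/write, or write/write conflict.
bool can_run_in_parallel(const Stmt& s1, const Stmt& s2) {
    return !overlaps(s1.writes, s2.reads) &&
           !overlaps(s1.reads, s2.writes) &&
           !overlaps(s1.writes, s2.writes);
}
```

Statements 1 (A = B + C) and 4 (F = B + X) share only B, which neither writes, so they can run in parallel. Statement 5 (G = A * 10) reads A, which statement 1 writes, so 5 must wait for 1; it can still run in parallel with statements 2, 3, and 4.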

32

Parallelization Problems

• Pointers allow a data structure to be distributed across memory

• Parallel analysis is very difficult

• Loops can accommodate or restrict or hide possible parallelization

void addme(int n, double a[n], double b[n], double c[n])
{
    int i;
    for (i = 0; i < n; ++i)
        a[i] = b[i] + c[i];   /* independent iterations, unless a, b, c overlap */
}

33

Examples

void addme(int n, double a[n], double b[n], double c[n])
{
    int i;
    for (i = 0; i < n; ++i)
        a[i] = b[i] + c[i];
}

double a[10];
a[0] = 1;
addme(9, a + 1, a, a);   /* overlap: iteration i reads the element iteration i-1 wrote */
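To see why the call addme(9, a+1, a, a) defeats automatic parallelization, here is a C++ rendering (pointer parameters replace the C99 array-parameter syntax, and aliased_example is a hypothetical wrapper). Because the arrays overlap, iteration i reads the element that iteration i - 1 just wrote, so run serially the loop produces powers of two; running the iterations in parallel could read stale values. A compiler that cannot prove the arrays do not overlap must assume they might; in C, the restrict qualifier is one way for the programmer to promise that they do not.

```cpp
// Same loop as addme() on the slide, with pointer parameters.
void addme(int n, double* a, const double* b, const double* c) {
    for (int i = 0; i < n; ++i)
        a[i] = b[i] + c[i];
}

// The slide's call: a is shifted one element past b and c, which are the same
// array. Iteration i computes x[i+1] = x[i] + x[i], reading the value written
// by iteration i-1: a loop-carried dependence.
void aliased_example(double (&x)[10]) {
    x[0] = 1.0;
    addme(9, x + 1, x, x);  // serially: x[k] == 2^k for k = 0..9
}
```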

34

Examples

void callme()
{
    foo();
    bar();
}

void callme()
{
    cilk_spawn foo();   // foo() may run in parallel with bar()
    bar();
}                       // implicit cilk_sync at the end of the function

Mandatory Parallelism vs. Optional Parallelism

“Explicit parallel programming constructs allow algorithms to be expressed without specifying unintended & unnecessary serial constraints”
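Cilk Plus support has since been removed from mainstream GCC, so as a hedged illustration of optional parallelism, here is a rough analogue in standard C++ using std::async; foo, bar, and the calls counter are placeholders. Passing std::launch::async instead of the default policy would require a new thread, removing the runtime's freedom to run foo serially.

```cpp
#include <atomic>
#include <future>

std::atomic<int> calls{0};  // illustrative shared state recording which tasks ran

void foo() { calls += 1; }   // hypothetical task
void bar() { calls += 10; }  // hypothetical task

// Rough standard-C++ analogue of "cilk_spawn foo(); bar();".
void callme() {
    // The default policy (std::launch::async | std::launch::deferred) lets the
    // runtime either start foo on another thread or defer it until get():
    // the parallelism is optional, much like cilk_spawn.
    std::future<void> f = std::async(foo);
    bar();    // the caller continues with bar() meanwhile
    f.get();  // like cilk_sync: wait for foo() to finish
}
```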

35

Structured Pattern-based Programming (1.4)

• Patterns: commonly recurring strategies for dealing with particular problems

• Tools for Parallel problems

• Goals: parallel scalability, good data locality, reduced overhead

• Common in CS: OOP, natural language processing, data structures, SW engineering

36

Patterns

• Abstractions: strategies or approaches which help to hide certain details & simplify problem solving

• Implementation Patterns – low-level, system specific

• Design Patterns – high-level abstraction

• Algorithm Strategy Patterns – Algorithm Skeletons

• Semantics – pattern = building block, task arrangement, data dependencies, abstract

• Implementation – for real machine, granularity, cache

• Ideally, treat separately, but not always possible

37

Figure 1.1 – Overview of Parallel Patterns

38

Patterns – 3 most common

• Nesting

  • Composability

• Map

  • Regular parallelism, embarrassing parallelism

• Fork-Join

  • Divide & Conquer

39

Parallel Programming Models (1.5)

Desired Properties (1.5.1)

• Contemporary popular languages – not designed for parallelism

• Need transition to parallel

• Desired Properties of Parallel Language (p. 21)

• Performance – achievable, scalable, predictable, tunable

• Productivity – expressive, composable, debuggable, maintainable

• Portability – functionality & performance across systems (compilers & OS)

40

Programming – C, C++

Textbook – Focus on

• C++ & parallel support

• Intel support

• Intel Threading Building Blocks (TBB) – Appendix C

• C++ Template Library, open source & commercial

• Intel Cilk Plus – Appendix B

• Compiler extensions for C & C++, open source & commercial

• Other products available – Figure 1.2

41

Abstractions vs. Mechanisms (1.5.2)

• Avoid HW Mechanisms

• Particularly vectors & threads

• Focus on

• TASKS – opportunities for parallelism

• DECOMPOSITION – breaking problem down

• DESIGN of ALGORITHM – overall strategy

42

Abstractions, not Mechanisms

“(Parallel) programming should focus on decomposition of problem & design of algorithm rather than specific mechanisms by which it will be parallelized.” p. 23

Reasons to avoid HW specifics (mechanisms)

• Reduced portability

• Difficult to manage nested parallelism

• Mechanisms vary by machine

43

Regular Data Parallelism (1.5.3)

• Key to scalability – Data Parallelism

• Divide up DATA not CODE!

• Data Parallelism: any form of parallelism in which the amount of work grows with the size of the problem

• Regular Data Parallelism: subcategory of D.P. which maps efficiently to vector instructions

• Parallel languages contain constructs for D.P.

44

Composability (1.5.4)

The ability to use a feature in a program without regard to other features being used elsewhere

• Issues:

• Incompatibility

• Inability to support hierarchical composition (nesting)

• Oversubscription: situation in nested parallelism in which a very large number of threads are created (far more than the hardware can run at once)

• Can lead to failure, inefficiency, inconsistency

45

Thread (p. 387)

• Smallest sequence of program instructions that can be managed independently by a scheduler

• Program → Processes → Threads

• “Cheap” Context Switch

• Multiple Processors

[Diagram: one Process whose Data is shared by threads Thr1, Thr2, Thr3; each thread has its own Stack]
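A small C++ sketch of the picture above, using std::thread (the names worker and run_threads are illustrative): every thread updates one shared variable that lives in the process's data, while each thread's local variable lives on its own stack. The shared variable is a std::atomic so that concurrent updates do not race.

```cpp
#include <atomic>
#include <thread>
#include <vector>

std::atomic<int> shared_total{0};  // process data: one copy, seen by all threads

void worker(int id) {
    int local = id * 10;    // local variable: lives on this thread's own stack
    shared_total += local;  // atomic add, so concurrent updates do not race
}

int run_threads(int n) {
    shared_total = 0;
    std::vector<std::thread> threads;
    for (int id = 1; id <= n; ++id)
        threads.emplace_back(worker, id);  // each thread gets its own stack
    for (auto& t : threads) t.join();      // wait for all threads to finish
    return shared_total.load();
}
```

For example, run_threads(3) returns 10 + 20 + 30 = 60 no matter which order the threads run in.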

46

Portability & Performance (1.5.5 & 1.5.6)

• Portable: the ability to run on a variety of HW with little adjustment

• Very desirable; C, C++, Java are portable languages

• Performance Portability: the ability to maintain performance levels when run on a variety of HW

• Trade-offs: General/Portable vs. Specific/Performance

47

Issues (1.5.7)

• Determinism vs. Non-determinism

• Safety – ensuring only correct orderings occur

• Serially Consistent

• Maintainability
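Floating-point arithmetic gives a compact illustration of why determinism and serial consistency matter. The C++ sketch below (function names are illustrative) adds the same three numbers in two groupings, as a serial loop and a differently ordered parallel reduction might; assuming standard IEEE double arithmetic without fast-math reassociation, the two answers differ.

```cpp
// Floating-point addition is not associative. A serial loop always adds in
// one fixed order; a parallel reduction may group the same additions
// differently, and if the grouping depends on timing, the result can change
// from run to run (non-determinism).
double left_to_right() {
    double big = 1e20;
    return (big + -big) + 1.0;  // 0.0 + 1.0 == 1.0
}

double regrouped() {
    double big = 1e20;
    return big + (-big + 1.0);  // 1.0 is lost: -1e20 + 1.0 rounds to -1e20
}
```

A parallel reduction that always combines partial results in the same fixed order is deterministic even when it does not match the serial loop bit for bit.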

48