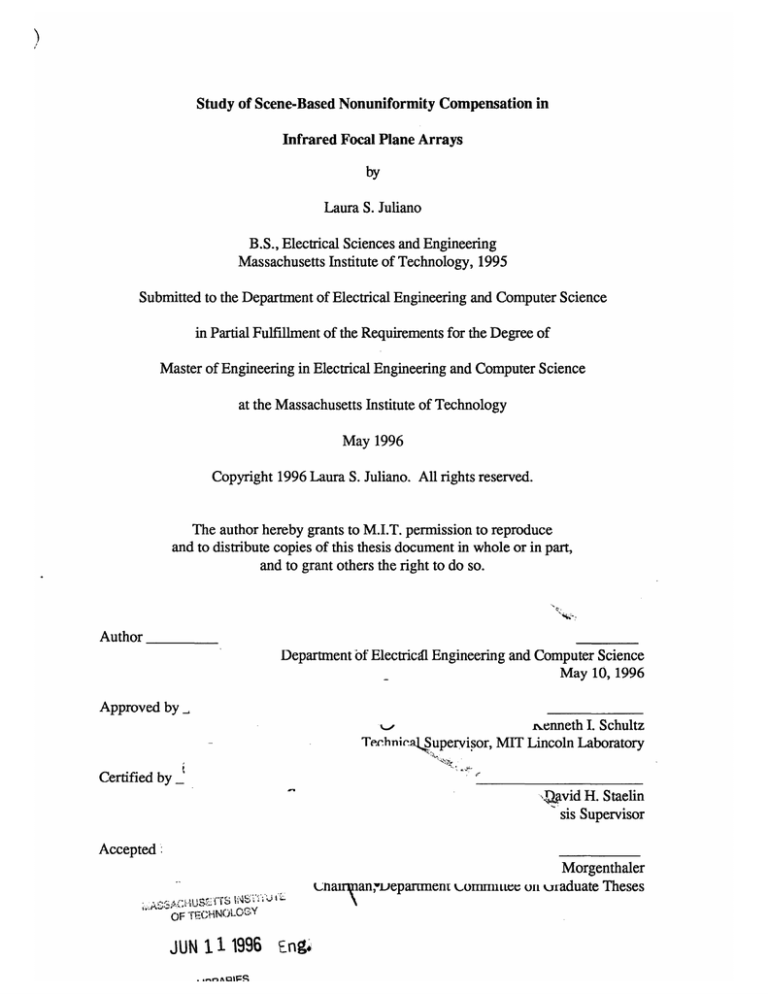

Study of Scene-Based Nonuniformity Compensation in

Infrared Focal Plane Arrays

by

Laura S. Juliano

B.S., Electrical Sciences and Engineering

Massachusetts Institute of Technology, 1995

Submitted to the Department of Electrical Engineering and Computer Science

in Partial Fulfillment of the Requirements for the Degree of

Master of Engineering in Electrical Engineering and Computer Science

at the Massachusetts Institute of Technology

May 1996

Copyright 1996 Laura S. Juliano. All rights reserved.

The author hereby grants to M.I.T. permission to reproduce

and to distribute copies of this thesis document in whole or in part,

and to grant others the right to do so.

Author

Department of Electrical Engineering and Computer Science

May 10, 1996

Approved by

Kenneth I. Schultz

Technical Supervisor, MIT Lincoln Laboratory

Certified by

David H. Staelin

Thesis Supervisor

Accepted by

Morgenthaler

Chairman, Department Committee on Graduate Theses

Study of Scene-Based Nonuniformity Compensation in

Infrared Focal Plane Arrays

by

Laura S. Juliano

Submitted to the Department of Electrical Engineering and Computer Science

May 10, 1996

In Partial Fulfillment of the Requirements for the Degree of

Master of Engineering in Electrical Engineering and Computer Science.

Abstract

Infrared Focal Plane Arrays (FPAs) are used in a wide variety of military and

industrial applications which require the collection of low noise, high resolution images.

In many of these applications, the dominant noise results from array response

nonuniformities. For low noise applications (e.g., detection of low contrast, unresolved

targets), correction for the nonuniform array response is critical for achieving the

maximum sensitivity of these arrays. Two classes of techniques for reducing the effects of

nonuniformity are source-based compensation, which employs the use of one or more

uniform calibrated sources, and scene-based compensation, which uses scene data to

estimate nonuniformity.

This thesis evaluates the performance of both source-based and scene-based

compensation techniques using experimental sensor data. Array nonuniformities were first

characterized and then used to predict the performance of the different compensation

techniques. Two-point source-based nonuniformity compensation techniques, which use

uniform calibration sources at two temperatures, were applied to blackbody images over a

wide range of temperatures and shown to reduce spatial variations to an average of 0.82

sensor counts (0.03°C). One-point compensation techniques performed as well only near

the calibration temperature; over the wide temperature range, variations averaged 14.7

sensor counts (0.5°C). Scene-based algorithms were evaluated using measured IR images

and were found to decrease spatial variation below that of the two-point compensation

technique, from 43 counts to as low as 17 counts, as a result of the algorithms' clutter

rejection capabilities. The effects of scene-based techniques on target suppression were

not evaluated as a part of this thesis.

VI-A Company: MIT Lincoln Laboratory

Thesis Supervisor: David H. Staelin

Title: Assistant Director, Lincoln Laboratory

Acknowledgments

I would like to thank MIT Lincoln Laboratory for all the support they have given

me over the last 3 years. I am especially grateful to Ken Schultz in Group 106, Systems

and Analysis for all his help and guidance. I would also like to thank everyone in Group

105 - Air Defense Systems who helped with the experimental work. Finally, special

thanks to Dad and Tom for all their love and support.

Table of Contents

1 Introduction

1.1 Problem Statement

1.2 Thesis Organization

2 Background

2.1 Overview

2.2 Infrared Imaging Applications

2.3 Generic FPA Imaging System

2.4 Introduction to Focal Plane Arrays

2.4.1 FPA Detector Materials

2.5 Nonuniformity in Focal Plane Arrays

2.6 General Algorithms for Nonuniformity Compensation

3 Nonuniformity

3.1 Experimental Setup

3.2 General Nonuniformity Characteristics

3.2.1 Sensor Response as a Function of Scene Radiance

3.2.2 Nonuniformity as a Function of Scene Radiance

3.3 Spatial Characteristics of Nonuniformity

3.4 Temporal Characteristics of Nonuniformity

3.5 Summary

4 Source-Based Nonuniformity Compensation

4.1 Implementation Issues

4.2 Performance

4.2.1 One-Point Compensation

4.2.2 Two-Point Compensation

4.2.3 Temporal Noise Consideration

4.2.4 Investigation of Gain and Offset Terms

4.3 Summary

5 Scene-Based Nonuniformity Compensation

5.1 Implementation Issues

5.2 Performance

5.2.1 Offset Comparison with Source-Based Techniques

5.2.2 Applying Algorithm to Synthesized Scene Data

5.2.3 Applying Algorithm to Skyball Data

6 Conclusion and Summary

7 Bibliography

List of Figures

Figure 2-1: Image Processing Block Diagram

Figure 2-2: Infrared image from an HgCdTe sensor a) before nonuniformity compensation and b) after nonuniformity compensation

Figure 3-1: Laboratory setup for CE camera

Figure 3-2: Histogram of InSb response to 25°C blackbody

Figure 3-3: Mean of InSb array response as a function of blackbody temperature

Figure 3-4: Relationship between blackbody radiance and sensor counts

Figure 3-5: Standard deviation of InSb array response as a function of blackbody temperature

Figure 3-6: Horizontal spatial correlation of nonuniformity

Figure 3-7: Horizontal spatial correlation of nonuniformity

Figure 3-8: Vertical spatial correlation of nonuniformity

Figure 3-9: Vertical spatial correlation of nonuniformity

Figure 3-10: Relative drift of mean blackbody response of InSb array (the initial mean value of 1065.6 counts was subtracted from all mean values)

Figure 3-11: Drift of standard deviation of blackbody response of InSb array (the initial standard deviation of 73.7 counts was subtracted from all standard deviation values)

Figure 4-1: Compensation block diagram

Figure 4-2: One-point NUC using 25°C as calibration temperature

Figure 4-3: Comparison of original array nonuniformity and error after one-point NUC

Figure 4-4: Two-point NUC using 25°C and 40°C as calibration temperatures

Figure 4-5: Comparison of original nonuniformity with one-point and two-point NUC

Figure 4-6: Standard deviation of single frames after applying offset correction to 200 consecutive frames (frame rate = 51.44 Hz)

Figure 4-7: Temporal variation of one pixel after offset compensation (minus mean pixel value of 1223.2 counts)

Figure 4-8: Histogram of Offset Coefficients in 2pt NUC

Figure 4-9: Histogram of Gain Coefficients in 2pt NUC

Figure 5-1: Performance of scene-based temporal high-pass filter applied to 200 frames of blackbody data at 25°C

Figure 5-2: Performance of scene-based temporal high-pass filter applied to 200 frames of blackbody data at 25°C

Figure 5-3: Comparison of temporal high-pass filter, running average, and one-point compensation applied to 200 frames from CE camera of blackbody at 25°C

Figure 5-4: Section of IRMS image of Harvard, MA used to test temporal high-pass filter

Figure 5-5: Horizontal spatial correlation of IRMS image (calculated in 100x100-pixel blocks)

Figure 5-6: Block diagram of algorithm testing

Figure 5-7: Autocorrelation of high-pass filtered IRMS image

Figure 5-8: Performance of temporal high-pass filter (rate=20)

Figure 5-9: Steady state value of error between compensated and original images after spatial high-pass filter

Figure 5-10: Comparison of Odiff, yf(m), and Gop as performance measure (standard deviation of uniform sequence before spatial high-pass filter = 6.97 counts)

Figure 5-11: Uncompensated image from Skyball sensor

Figure 5-12: Compensated Skyball image after (a) Temporal high-pass filter (m=10), (b) 1-point method (35°C calibration temperature), and (c) 2-point method (20°C and 35°C calibration temperatures)

Figure 5-13: Block diagram of performance evaluation

Figure 5-14: Comparison of one-point and two-point compensation for Skyball data

Figure 5-15: Standard deviation of compensated image after temporal and spatial high-pass filters

Figure 5-16: Comparison of scene-based compensation and two-point compensation after spatial high-pass filter

1 Introduction

1.1 Problem Statement

Nonuniformity in infrared focal plane arrays (FPAs) results in fixed pattern noise

which limits infrared system performance. The methods which are commonly used to

reduce fixed pattern noise can be separated into two main categories: source-based and

scene-based nonuniformity compensation techniques.

There have been a number of studies of source-based compensation techniques,

but there has been considerably less work in evaluating and analyzing scene-based

techniques. This thesis will investigate scene-based nonuniformity compensation methods;

specifically, scene-based compensation techniques are analyzed to determine the

conditions for which a specific level of spatial uniformity can be achieved. This

information can be used to determine reasonable conditions for data collecting and

subsequent processing.

To this end, nonuniformity characteristics are examined using experimental sensor

data. A laboratory setup consisting of an infrared imaging system, blackbody source, and

simple targets was used to obtain test data. The data are used to highlight aspects of

nonuniformity and provide motivation for compensation techniques.

Source-based methods are developed and applied to experimental data. These

performance results serve as a baseline for comparison for the scene-based techniques.

The capabilities of one specific scene averaging technique are examined for a variety of

backgrounds as a function of the spatial frequency and contrast of the background scene.

In addition, data collected by one or more operational sensors are used as another test of

the various compensation methods. The combination of theoretical and experimental

work provides an analysis of scene-based compensation techniques.

1.2 Thesis Organization

This thesis consists of six chapters which develop nonuniformity characteristics

and an analysis of nonuniformity compensation.

The first chapter provides a brief

introduction to the goals and organization of this thesis.

The second chapter gives an overview of infrared focal plane arrays and introduces

the problems of nonuniformity. This chapter also introduces the concepts of scene-based

and source-based nonuniformity compensation.

Chapter 3 consists of an analysis of the spatial and temporal characteristics of focal

plane array nonuniformity.

It also addresses nonuniformity measurement issues and

describes the experimental setup used to obtain sensor data.

Chapter 4 concentrates on the

source-based nonuniformity compensation

techniques. This serves as the basis of comparison for the evaluation of the scene-based

compensation techniques.

Chapter 5 introduces scene-based algorithms and discusses algorithm

implementation and performance. The results are compared to the performance of the

source-based compensation techniques.

The final chapter presents a summary of the thesis and discusses potential future

work.

2 Background

The goal of this chapter is to introduce the reader to focal plane array technology

and to provide a context for the problem of nonuniformity compensation.

First, an

overview of infrared imaging applications is presented. Nonuniformity and its relation to

detector performance is then discussed.

In addition, motivation for nonuniformity

compensation is developed. The chapter concludes with a general overview of the source-based and scene-based compensation techniques.

2.1 Overview

Infrared systems have long been of interest in military applications and, more recently, in

commercial applications. This interest is based on the desire to detect, recognize, classify,

or identify objects by their emission characteristics. Objects at or near room temperature

(295 K) have a peak emission at 9.8 µm, as determined by the Wien Displacement Law for

peak radiation. Hot objects, such as the plume from a jet aircraft at approximately 650 K

(Hudson, 1969), have a peak emission at 4.5 µm. These two classes of objects have led to

the interest in infrared technology, more specifically in the mid-wave (3-5 µm) and long-wave

(8-12 µm) spectral bands. These bands are even more significant when one observes the

atmospheric windows associated with these two infrared spectral bands. The atmosphere

has very high transmission for both the mid-wave and long-wave bands, except for the CO2

absorption band from 4.2 to 4.5 µm and the H2O absorption band from 9.5 to 10.2 µm.
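The peak-emission figures quoted above can be checked with a short calculation. The sketch below assumes the usual form of the Wien displacement law, peak wavelength = b / T with b of about 2897.8 µm·K. The function name and constant name are illustrative and do not come from the thesis.

```python
# Wien displacement constant in micrometre-kelvin (b is about 2897.8 um*K).
WIEN_B_UM_K = 2897.8


def peak_wavelength_um(temperature_k: float) -> float:
    """Wavelength of peak blackbody emission, in micrometres."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive")
    return WIEN_B_UM_K / temperature_k


# The two object classes discussed in the text.
for label, temperature in (("room-temperature object", 295.0), ("jet plume", 650.0)):
    print(f"{label}: {temperature:.0f} K -> {peak_wavelength_um(temperature):.1f} um")
```

Rounded to one decimal place, the two results agree with the 9.8 µm and 4.5 µm values cited in the text, which fall in the long-wave and mid-wave bands respectively.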

Initially, infrared imaging technology consisted of a small number of detectors

which were scanned across an image.

Since these scanned linear arrays had a small

number of detectors, it was relatively easy to fabricate detectors with matching

characteristics. There are drawbacks associated with these scanning systems; additional

hardware and optics are required to implement the scanning mechanism. For each frame

of data, the detector array must scan across the image. This limits the pixel integration

time, which reduces system sensitivity.

As the fabrication technology advanced, staring focal plane arrays with large

numbers of detector elements were developed. As these arrays do not require scanning,

they provide for significantly increased integration times, and therefore increased

sensitivity. Since there are a large number of detector elements, the quality of fabrication

techniques is crucial to the development of large, uniform arrays; nonuniform production

techniques can result in nonuniform array response. Such array nonuniformities may in

fact limit sensitivity; spatial variations in the array response can obscure the target

signature, which reduces system detection performance.

The problem is further

complicated, as the spatial variations in array response can vary with time. Any technique

to compensate for nonuniform array response must consider how the response varies

during the time of interest.

Since the development and performance of FPA-based infrared systems have been

limited by array nonuniformity, nonuniformity compensation techniques are generally

required to achieve the full potential of staring focal plane arrays. These techniques are

classified as either source-based or scene-based techniques.

Source-based techniques

employ the use of one or more uniform radiation sources to calculate compensation

coefficients. Such techniques can be complex to implement since they require uniform

sources and additional hardware to make the compensation measurements. If the source

radiation or temperature characteristics are known, source-based techniques have the

potential to provide calibrated image data; the sensor output can be mapped from counts

to absolute temperature or radiance values. Scene-based compensation techniques use

image data to calculate compensation coefficients. Consequently, scene-based techniques

have a potential to provide a simple alternative to source-based techniques. For many

applications of practical interest, such compensated relative measurements are sufficient

(e.g., target detection).
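As an illustration of how source-based coefficients might be computed, the following sketch assumes a noise-free linear pixel model (counts = gain x radiance + offset) and maps every pixel onto the array-mean response. This is a common textbook formulation, not necessarily the one developed in Chapter 4. All names (`one_point_coefficients`, `two_point_coefficients`, `sensor`) and all numerical values are hypothetical.

```python
import numpy as np


def one_point_coefficients(cal_frame):
    """Per-pixel offset that flattens the array at one calibration level."""
    return cal_frame.mean() - cal_frame


def two_point_coefficients(cal_low, cal_high):
    """Per-pixel gain and offset mapping each pixel onto the array-mean response."""
    gain = (cal_high.mean() - cal_low.mean()) / (cal_high - cal_low)
    offset = cal_low.mean() - gain * cal_low
    return gain, offset


# Synthetic array (assumed model): counts = g * radiance + o, no temporal noise.
rng = np.random.default_rng(0)
shape = (64, 64)
g = 1.0 + 0.05 * rng.standard_normal(shape)   # per-pixel gain
o = 20.0 * rng.standard_normal(shape)         # per-pixel offset, in counts


def sensor(radiance):
    """Response of the synthetic array to a uniform source."""
    return g * radiance + o


cal_low, cal_high = sensor(1000.0), sensor(1500.0)   # two uniform calibration sources
test_frame = sensor(1250.0)                          # uniform scene between them

one_pt = test_frame + one_point_coefficients(cal_low)
gain, offset = two_point_coefficients(cal_low, cal_high)
two_pt = gain * test_frame + offset

print("uncorrected spatial std:", test_frame.std())
print("one-point spatial std:  ", one_pt.std())
print("two-point spatial std:  ", two_pt.std())
```

Under this idealized model the two-point correction removes the spatial variation at every source level, while the one-point correction leaves a residual that grows with distance from the calibration level. Real arrays add temporal noise, drift, and nonlinearity, so the residuals reported later in the thesis are not zero.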

2.2 Infrared Imaging Applications

Infrared focal plane arrays, both scanning and staring two-dimensional arrays, have

a wide variety of applications. The specifics of the system application affect the choice of

the FPA, and influence the choice of the nonuniformity compensation approach. Various

applications of interest are described below.

In infrared search and tracking (IRST) applications, the system is generally used

for long-range surveillance, early target detection, and tracking. The normal system mode

of operation is characterized as "track-while-scan". The detector array is scanned across

an inertially stabilized volume in space. Single or multiple targets are detected and tracked

in subsequent frames while the system continues to scan the prescribed inertial volume.

Tracking is maintained through frame to frame correlation of detections. Due to the long

range operational requirement, the targets of interest are unresolved, typically less than the

size of a pixel (detector element). Therefore, high sensitivity and resolution are required

in IRST applications.

Infrared missile warning systems (IMWS) have the same unresolved target, track-while-scan functionality as IRST systems. In the case of aircraft-based missile threat

warning systems, a wide-field-of-view sensor with a two-dimensional staring array is used

to detect the unresolved missile targets (Kruer, Scribner, and Killiany, 1987). The goal of

the system is to achieve rapid target declaration, with a low false alarm rate, in order to

either avoid the missile or employ countermeasures to defeat the missile.

In the case of an infrared missile seeker application, the detector is mounted within

a gimbal in the nose of the missile. The objective is to acquire and intercept a single

target. Generally, the infrared seeker is cued to a target area and must rapidly acquire the

initially unresolved target, since engagement times can be on the order of seconds. Missile

seekers typically employ single target detection and tracking, as opposed to multiple target

tracking.

Forward looking infrared (FLIR) sensors are traditionally used for air-to-ground

target recognition and tracking for weapon delivery. In this case, the target is centered in

the field-of-view of the sensor, and the system tracks a single target. FLIR systems are

often equipped with boresighted laser range finders for weapon guidance. Unlike the

missile seeker applications, the targets are highly resolved and the output signal is intended

to be viewed and interpreted by a human operator. Advanced systems couple the output

signal to automatic target recognition functions which automate the weapon delivery

process.

While each of these applications has its own set of requirements for infrared sensor

performance, the subsequent system characteristics affect the implementation of

nonuniformity compensation algorithms. Although all applications can potentially benefit

from nonuniformity compensation, the efficacy of the compensation algorithms varies with

the application. With IRST applications, for example, compensation may be applied after

several frames of data. Therefore, a compensation algorithm that requires multiple frames

of data may not adversely impact sensor performance. However, in seeker applications

where the time of engagement is very short and access to an infrared reference source

is difficult to implement, compensation needs to occur after just a few frames without the

benefit of a calibrated reference. In the case of FLIR systems, where a human observer is

involved in the infrared scene interpretation, precise nonuniformity compensation is less

important. Compensation cannot be considered without regard to sensor application.

2.3 Generic FPA Imaging System

IRST and IMWS represent an emerging class of infrared systems where the

objective is to detect unresolved targets. Figure 2-1 shows the general image processing

steps used in these infrared system applications.

The FPA sensor images either a

background scene, which may contain potential targets, or a uniform calibrated reference

source. Following image acquisition, a high-pass filter can be used to accentuate high

spatial frequency components (as associated with an unresolved target) relative to a low

spatial frequency background (e.g., scene clutter). A threshold test is then applied to the

high-pass filtered image to test for the presence of targets. This filtering method selects

pixels that do not match the underlying correlation of the background scene; the target is

assumed to have high spatial frequency relative to the background. Nonuniformity tends

to have high spatial frequency components; in this respect, nonuniformity characteristics

are very similar to target characteristics and therefore will not be removed by high-pass

filtering. If the nonuniformity is not corrected by the use of compensation algorithms

prior to target detection, the detection capabilities of a system will be greatly diminished,

as the nonuniformity may cause false alarms.

[Block diagram inputs: target, background scene, uniform calibrated source]

Figure 2-1: Image Processing Block Diagram
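The filter-and-threshold chain described in this section can be sketched on synthetic data to show why uncorrected nonuniformity produces false alarms. The 3x3 local-mean high-pass filter, the scene, the target amplitude, the fixed-pattern level, and the threshold are all assumptions chosen for illustration. The thesis does not specify them here.

```python
import numpy as np


def spatial_high_pass(image):
    """Subtract the 3x3 local mean (edge-replicated) from every pixel."""
    padded = np.pad(image, 1, mode="edge")
    rows, cols = image.shape
    local_sum = np.zeros(image.shape, dtype=float)
    for dr in range(3):
        for dc in range(3):
            local_sum += padded[dr:dr + rows, dc:dc + cols]
    return image - local_sum / 9.0


def detect(image, threshold):
    """Boolean map of pixels whose high-pass response exceeds the threshold."""
    return spatial_high_pass(image) > threshold


rng = np.random.default_rng(1)
n = 64
yy, xx = np.mgrid[0:n, 0:n]
scene = 1000.0 + 0.5 * xx + 0.3 * yy      # smooth, low-spatial-frequency background
scene[32, 32] += 40.0                     # unresolved single-pixel target

fixed_pattern = 15.0 * rng.standard_normal((n, n))   # uncorrected pixel offsets

threshold = 30.0
clean_hits = detect(scene, threshold)
noisy_hits = detect(scene + fixed_pattern, threshold)

print("detections without nonuniformity:", int(clean_hits.sum()))
print("detections with nonuniformity:   ", int(noisy_hits.sum()))
```

With a uniform array, the smooth background is rejected and only the target pixel crosses the threshold. With the fixed-pattern offsets added, many background pixels cross it as well, because pixel-to-pixel nonuniformity passes through the high-pass filter just as the target does.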

2.4 Introduction to Focal Plane Arrays

Originally, infrared detectors consisted of either a single detector element or a

small linear array of detector elements that were scanned across a scene to obtain an

infrared representation of the scene. Scanning sensors using these detector configurations

were limited in both resolution and sensitivity, since they typically had a limited dwell time

and large detector size. As detector technology matured, large numbers of detector

elements could be fabricated as part of a two-dimensional FPA. The array of detectors is

coupled to a multiplexer/preamplifier readout circuit.

Two-dimensional staring FPAs, consisting of thousands of detectors, have a

number of potential advantages over systems with scanned linear arrays. The staring array

design allows economical high-density packing of detectors on the substrate, and allows

for future, advanced signal processing on the focal plane. In addition, infrared systems

employing staring FPAs have increased sensitivity due to longer integration times, higher

frame rate capabilities and simpler optics.
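The integration-time advantage can be made concrete with a rough upper-bound calculation. The sketch assumes that a staring pixel may integrate for up to one full frame period, whereas a scanned linear array must divide the frame period among the scan positions it visits. Scan efficiency, readout overhead, and detector well capacity are ignored, and the frame rate and image width are hypothetical.

```python
def max_integration_time_s(frame_rate_hz, scan_positions=1):
    """Upper bound on per-pixel dwell time within one frame.

    scan_positions=1 models a staring array; a scanned linear array shares
    the frame period among the positions it must visit.
    """
    return 1.0 / (frame_rate_hz * scan_positions)


frame_rate_hz = 30.0   # hypothetical frame rate
columns = 256          # hypothetical image width, one scan position per column

staring = max_integration_time_s(frame_rate_hz)
scanned = max_integration_time_s(frame_rate_hz, scan_positions=columns)

print(f"staring array:  {staring * 1e3:.1f} ms per frame")
print(f"scanned column: {scanned * 1e6:.0f} us per frame")
print(f"ratio:          {staring / scanned:.0f}x")
```

For these numbers, the bound on dwell time differs by a factor equal to the number of scan positions. In practice the achievable integration time of a staring array is often limited by other factors, so this ratio should be read as a best case.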

There are fabrication issues which affect the performance characteristics of FPAs.

The nonuniformity of the detector arrays is often the limiting factor in system

performance. Noise can also be introduced in the readout circuitry, which transfers the

detector signals off the focal plane. Charge transfer efficiency variations across the array

are also a source of nonuniformity. Another source of noise is cross talk, where signals

from one detector affect the readout circuitry for nearby detectors.

2.4.1 FPA Detector Materials

There are a number of issues to consider when selecting infrared detector

materials. The detector material must operate in the desired spectral band, for example,

either mid-wave infrared (MWIR) or long-wave infrared (LWIR). The detector material

must have acceptable electronic properties over an operationally feasible temperature

regime. The fabrication technology must be mature enough to produce uniform arrays;

processing which is silicon-based is more developed and controllable than processing

which involves less common materials.

Typical applications for infrared FPAs require detectors that operate in either the

MWIR or LWIR. The MWIR consists of the spectral band ranging from 3-5 µm. The

LWIR includes wavelengths in the 8-12 µm spectral band. The operating region has a

direct relation to the required bandgap of the detector material. The relationship between

bandgap energy and spectral operating region is given by,

$$E = \frac{1.24}{\lambda}\ \text{[eV]},$$

where $E$ is the bandgap energy and $\lambda$ is the maximum wavelength in the spectral band, in micrometers.

This relation requires the detector material to have a bandgap energy of approximately 0.1

eV for LWIR detectors and 0.25 eV for MWIR detectors.
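The bandgap values quoted above follow from E = 1.24 / λ, with λ in micrometers and E in electron-volts. A minimal check, taking the upper edge of each band (5 µm for MWIR, 12 µm for LWIR) as the cutoff wavelength, is sketched below. The function and constant names are illustrative.

```python
HC_EV_UM = 1.24  # h*c in eV*um, rounded to the value used in the text


def bandgap_ev(cutoff_wavelength_um):
    """Bandgap energy (eV) for a given maximum detectable wavelength (um)."""
    if cutoff_wavelength_um <= 0:
        raise ValueError("wavelength must be positive")
    return HC_EV_UM / cutoff_wavelength_um


for band, cutoff_um in (("MWIR", 5.0), ("LWIR", 12.0)):
    print(f"{band}: cutoff {cutoff_um:.0f} um -> {bandgap_ev(cutoff_um):.2f} eV")
```

Rounded to two decimal places, the results are 0.25 eV for the mid-wave band and 0.10 eV for the long-wave band, in agreement with the approximate values stated in the text.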

In order to reduce dark current in the detector, the FPA is operated at a low

temperature. The temperature of operation depends on the allowable array noise, but is

typically 77 K for infrared sensors.

Over the detector spectral band, the quantum

efficiency of a detector is a measure of how well the material converts incident radiation to

electric charge.

High quantum efficiency detectors (e.g., InSb and HgCdTe) have

quantum efficiency values over 60%, while low quantum efficiency detectors (e.g., PtSi,

IrSi) have quantum efficiencies less than 1% (Cantella, 1992).

Producibility is also a key issue in focal plane array development. In order to

fabricate a uniform array, the process must be well controlled. The process uniformity

places a practical limit on the size of the focal plane array.

Three main detector materials, which satisfy the requirements for good infrared

detection, are platinum silicide (PtSi), indium antimonide (InSb), and mercury cadmium

telluride (HgCdTe). Platinum silicide and indium antimonide are limited to mid-wave

detection. While platinum silicide is advantageous because it is silicon-based, and the

technology is very mature, this material has low quantum efficiency and must be operated

at very low temperatures. Indium antimonide has a high quantum efficiency and advances

in InSb processing technology have resulted in high quality FPAs. Mercury cadmium

telluride, with its variable bandgap, can be operated in either the mid-wave or long-wave

infrared bands. The bandgap of HgCdTe is a function of composition and therefore any

nonuniformity in composition creates nonuniformity in spectral response. HgCdTe has a

significantly more complex fabrication procedure, as compared to PtSi or InSb, particularly

in long-wave applications, which results in reduced uniformity. For many applications,

this drawback is outweighed by its high quantum efficiency and low noise operation. The

data used in this investigation were obtained from both InSb and HgCdTe arrays.

2.5 Nonuniformity in Focal Plane Arrays

In many cases, nonuniformity is the limiting factor in the detection performance of

infrared focal plane arrays. To understand why this is the case, we must examine the

sources and characteristics of array nonuniformity. The goal of this section is to give the

reader a general sense of the importance of nonuniformity compensation. More specific

characteristics of nonuniformity are analyzed in Chapter 3.

Nonuniformity in FPAs arises from a number of sources.

Differences in the

dimensions and geometry of the individual detectors cause differing responses from one

detector to another. Variations in material composition affect detector quantum efficiency

and spectral response. In HgCdTe arrays, for example, changes in composition of the

detector material result in detectors with varying bandgap. This causes a variation in the

maximum cutoff wavelength, which results in nonuniformity in spectral response.

Nonuniformity also occurs when there is a variation in the coupling of the detector to the

readout mechanism. Finally, temperature variations across the array, which come from

uneven cooling of the focal plane array, affect the detector response. It is important to

understand that the sources of nonuniformity have different spatial and temporal

characteristics. Because the array response drifts over time, periodic nonuniformity compensation

is generally required to maximize array uniformity. This drift in focal plane array response

presents special problems for nonuniformity compensation.

The images in Figure 2-2 demonstrate some of the important

characteristics of sensor nonuniformity. The image on the left is a raw image taken with a

HgCdTe sensor, where the background is a desert scene. The image on the right is the

same image, after nonuniformity correction. The scene detail is greatly increased after

compensation.


Figure 2-2: Infrared image from an HgCdTe sensor a) before nonuniformity compensation

and b) after nonuniformity compensation

As is evident in the uncompensated image, the nonuniformity completely obscures

the scene data. In this example, the scene information consists of small perturbations

about the much larger nonuniformity.

This tends to be a problem in infrared imaging,

because infrared scenes typically have low contrast.

Although the magnitude of the nonuniformity poses a serious problem, it is the

spatial frequency characteristics of the nonuniformity that present the greatest problems

with respect to performance. Simple high-pass filtering will not remove the nonuniformity

present in the above images, since the nonuniformity has considerable high-frequency

components.

2.6 General Algorithms for Nonuniformity Compensation

The two main classes of nonuniformity compensation algorithms are source-based

and scene-based compensation. An overview of these techniques is presented here and the

algorithms are explored in greater depth in Chapters 4 and 5. See Table 1 for a qualitative

overview of source-based and scene-based methods.

| Technique | Advantages | Disadvantages |
|---|---|---|
| Source-Based | Absolute calibration; good performance near calibration temperature | Recalibration required; poor performance away from calibration temperature |
| Scene-Based | No blackbody required; adaptive algorithm | Relative temperature calibration; generally corrects offset only |

Table 1: Relative Merits of Compensation Techniques

Source-based nonuniformity compensation techniques commonly employ one or

more uniform blackbody sources. These blackbody sources serve as calibration references

from which compensation coefficients are derived.

The most common source-based

technique is a two-point correction scheme, which compensates for offset and gain

variations from pixel to pixel. In this technique, the sources are operated at temperatures

which span the scene temperatures anticipated in normal system operations.

Source-based nonuniformity compensation must be embedded in the system to

accurately address drift in FPA response; an initial factory calibration is not sufficient.

Therefore, periodic recalibration within the infrared system is required for accurate

compensation. The implementation of the blackbody reference within the system can be

impractical or costly in many applications; the system needs more complicated optics in

order to view the blackbody and a place in the system to locate the reference sources.

Scene-based compensation uses scene data to approximate observations of a

uniform temperature source, thus simplifying the imaging system.

Compensation

coefficients are generally calculated from some form of spatial averaging over diverse

scenes. This can be accomplished in a variety of ways, including spatial or temporal

filtering, angle dither, and image defocus. Unlike source-based calibration, scene-based

compensation is a relative calibration. This is not, however, a problem in IRST or IMWS

applications where the temperature difference between background scene and target

objects, as opposed to the absolute temperature, is the quantity of interest.

3 Nonuniformity

The goal of this chapter is to gain an understanding of the sources of

nonuniformity and the spatial and temporal characteristics of nonuniformity. This will be

important later in evaluating nonuniformity compensation algorithms. This chapter will

investigate some of the general characteristics of nonuniformity in focal plane arrays.

Experimental data will be used to illustrate some of the key features of sensor

nonuniformity.

3.1 Experimental Setup

The majority of the data analyzed in the next two chapters were obtained with the

Cincinnati Electronics IRC-160ST imaging system, which has a 160x120 InSb array. The

IRC-160ST has a standard 50mm EFL (Effective Focal Length) lens that provides a total

field-of-view of 9.1° in azimuth by 6.8° in elevation and a nominal pixel resolution of 1

milliradian. An additional 200mm EFL lens can be attached, providing a 2.3° x 1.7° field-of-view and a 0.25 mrad pixel resolution. The detector/multiplexer array in the camera is

cooled to 78K with a mechanical Stirling Cycle microcooler (Cincinnati Electronics 1993).

The laboratory setup for the camera is shown in Figure 3-1. The camera was

arranged to view a target that was projected at the focus of a collimator. The target that

was primarily used was a flat target that transmitted the radiation of the 12 inch blackbody

that was behind it. The blackbody temperature ranged from 10°C to 60°C.

The digital data from the camera were transferred to a Sun SPARC workstation

via a digital video interface board (frame grabber). The frames of data can be displayed as

well as written to a file. The images were obtained and processed using the Interactive

Data Language (IDL). Some of the code written for processing images in this thesis was

code developed in the Air Defense Systems group at Lincoln Laboratory for the Airborne

Infrared Imager (AIRI).

[Figure 3-1 diagram; labeled components: 12 inch blackbody, target, mirror]

Figure 3-1: Laboratory setup for CE camera

3.2 General Nonuniformity Characteristics

In Chapter 2, the image from a HgCdTe sensor before nonuniformity

compensation helped to illustrate some characteristics of nonuniformity in focal plane

arrays. The nonuniformity was significant compared to the background image and it had

high spatial frequency components. In this chapter, these characteristics will be quantified.

The data used in this section are primarily imaged blackbody data. Since the blackbody

provides uniform radiance, all variations in the output images will be caused by

nonuniformity. As an example, Figure 3-2 is a histogram of the responses of the individual

detector elements in the CE InSb array to a 25°C blackbody.

[Figure 3-2 plot; axis label: Counts]

Figure 3-2: Histogram of InSb response to 25°C blackbody

Nonuniformity in focal plane arrays can be described as a combination of an

additive or offset term, a multiplicative or gain term, and higher order terms. The output

of each detector, x(t), including the nonuniformity terms, can be written as a function of

the scene radiance, r(t), as follows:

x(t) = a(t) + b(t)r(t) + f(r, t) ,

where x(t) is the output of the detector, a(t) is the offset term, b(t) is the gain term, and

f(r,t) represents the higher order terms. The time dependence of the nonuniformity terms

is examined later in the chapter. In this thesis, the high order nonuniformity terms are

neglected.
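As a rough illustration of this model, the following Python sketch builds a small synthetic array with per-pixel offset and gain terms and measures the spread of its output for a uniform scene. The array size, the Gaussian spreads, and all function names are illustrative assumptions rather than values or code from this thesis; the higher order term f(r, t) is neglected, as in the text.

```python
import random
import statistics

def make_detector_terms(rows, cols, offset_sigma, gain_sigma, seed=0):
    """Draw hypothetical per-pixel offset (a) and gain (b) terms (assumed Gaussian)."""
    rng = random.Random(seed)
    offsets = [[rng.gauss(0.0, offset_sigma) for _ in range(cols)] for _ in range(rows)]
    gains = [[rng.gauss(1.0, gain_sigma) for _ in range(cols)] for _ in range(rows)]
    return offsets, gains

def detector_output(offsets, gains, radiance):
    """x = a + b * r for a uniform scene radiance r (higher order terms neglected)."""
    return [[a + b * radiance for a, b in zip(a_row, b_row)]
            for a_row, b_row in zip(offsets, gains)]

def array_std(frame):
    """Standard deviation over all pixels, used here as the nonuniformity measure."""
    return statistics.pstdev([v for row in frame for v in row])

# Assumed spreads: 30 units of offset and 5% of gain (illustrative only).
offsets, gains = make_detector_terms(30, 40, offset_sigma=30.0, gain_sigma=0.05)
for radiance in (100.0, 1000.0, 2000.0):
    spread = array_std(detector_output(offsets, gains, radiance))
    print(f"radiance={radiance:7.1f}  array std={spread:.1f}")
```

With a nonzero gain spread the array standard deviation grows with the scene level, while with gain_sigma set to zero it stays constant, which is the qualitative behavior the offset and gain terms are meant to capture.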

The additive nonuniformity component a(t), which is not a function of scene

radiance, comes from variations in dark current, charge-transfer efficiency, and variations

in the readout mechanism. This offset term dominates at very low radiance values.

The multiplicative nonuniformity term, b(t)r(t), is the term which is a function of

exposure.

This results from variations in the responsivity from detector element to

detector element and from photon noise, which is proportional to the square root of

exposure. The effects of the gain and higher order terms increase as background radiance

increases. The relative importance of correcting for gain and offset variations is an issue

that will be addressed when evaluating compensation algorithms.

3.2.1 Sensor Response as a Function of Scene Radiance

One important measurement of an array is how the response of the array varies

with scene radiance.

Figure 3-3 demonstrates the mapping between background

temperature and the output of the InSb detector array, measured in counts. The array was

used to image a blackbody over a range of temperatures. This gives an indication of the

relationship between scene temperature and output counts of the detector system. This

plot can be used as calibration data, that is, it provides the information necessary to

convert sensor counts to temperature. This will be useful in converting the variance of the

response in counts to an apparent temperature variance.

[Figure 3-3 plot; horizontal axis: Blackbody Temperature (degrees C)]

Figure 3-3: Mean of InSb array response as a function of blackbody temperature

To verify that the above response as a function of temperature is as expected, we

use Planck's law, which gives the spectral distribution of the blackbody radiance as a

function of temperature,

$$R(\lambda) = \frac{37413}{\lambda^{5}\left(\exp\left(\frac{14388}{\lambda T}\right) - 1\right)} \quad [\mathrm{W\,cm^{-2}\,\mu m^{-1}}].$$

Integrating the above equation over the spectral band in which the CE camera operates,

2.2 µm to 4.7 µm, will give the relationship between the radiance and the temperature of the

source. Table 2 lists the results of the integration for the temperatures of interest. Using

these data, we can plot the sensor output (in counts) against the radiance of the blackbody

source. Figure 3-4 indicates that the sensor output is a linear function of input radiance.
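The band integration described above can be sketched numerically as follows. This is a minimal sketch, assuming wavelength in µm and temperature in kelvin, with 37413 and 14388 taken as the usual radiation constants in W cm^-2 µm^4 and µm K; the function names and the trapezoidal step count are illustrative choices. Table 2 may include additional factors (for example, a conversion between exitance and radiance) that the text does not spell out, so the sketch makes no claim to reproduce those values exactly.

```python
import math

C1 = 37413.0  # first radiation constant, W cm^-2 um^4 (wavelength in um)
C2 = 14388.0  # second radiation constant, um K

def planck_spectral(wavelength_um, temp_k):
    """Planck spectral distribution at one wavelength, in W cm^-2 um^-1."""
    return C1 / (wavelength_um ** 5 * (math.exp(C2 / (wavelength_um * temp_k)) - 1.0))

def in_band_integral(temp_c, lo_um=2.2, hi_um=4.7, steps=2000):
    """Trapezoidal integral of the Planck formula over the camera band."""
    temp_k = temp_c + 273.15
    h = (hi_um - lo_um) / steps
    total = 0.5 * (planck_spectral(lo_um, temp_k) + planck_spectral(hi_um, temp_k))
    for k in range(1, steps):
        total += planck_spectral(lo_um + k * h, temp_k)
    return total * h

for temp_c in (10, 15, 20, 25, 30, 35, 40, 45):
    print(f"{temp_c:2d} C  {in_band_integral(temp_c):.3e}")
```

The in-band value rises steeply with temperature over this range, which is why the mapping from temperature to counts is nonlinear even when the sensor output is linear in radiance.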

| Temperature (°C) | Radiance (W cm^-2 µm^-1) |
|---|---|
| 10 | 6.03E-05 |
| 15 | 7.44E-05 |
| 20 | 9.13E-05 |
| 25 | 1.11E-04 |
| 30 | 1.35E-04 |
| 35 | 1.62E-04 |
| 40 | 1.94E-04 |
| 45 | 2.31E-04 |

Table 2: Conversion between temperature and radiance for CE camera

[Figure 3-4 plot; horizontal axis: Radiance (W cm^-2 micron^-1)]

Figure 3-4: Relationship between blackbody radiance and sensor counts

3.2.2 Nonuniformity as a Function of Scene Radiance

While the previous graph shows the average relationship between array input and

output, it gives no sense of the array nonuniformity. The presence of multiplicative terms

in the nonuniformity will result in nonuniformity that is a function of scene radiance.

Figure 3-5 shows a measure of nonuniformity as a function of the blackbody temperature.

The nonuniformity is measured as the standard deviation of the response over the array as

a function of the temperature of the imaged blackbody. Using the information in Figure 3-3, we can map the count axis to a temperature axis. For example, a standard deviation of

80 counts corresponds to a standard deviation across the array of approximately 3°C.

[Figure 3-5 plot; horizontal axis: Blackbody Temperature (degrees C)]

Figure 3-5: Standard deviation of InSb array response as a function of blackbody temperature

3.3 Spatial Characteristics of Nonuniformity

The spatial characteristics of interest are not only nonuniformity magnitude, but

the spatial frequency of the nonuniformity.

If the nonuniformity has a low spatial

correlation (high spatial frequency) then it has many of the same characteristics as a

potential unresolved target. If the nonuniformity were to have a low spatial frequency,

that is, if the nonuniformity varied slowly across the array, then it would very likely pose

little or no problem to subsequent target detection, as scenes are typically processed with

a high-pass filter prior to detection. In Chapter 2, we saw an example of an uncorrected

infrared image, which appeared to have low spatial correlation. In this section we will

quantify those results.

The graphs in Figure 3-6 through Figure 3-9 plot the horizontal and vertical spatial

autocorrelation of an image of a blackbody source.

Since the background image is

constant, any variation in the image is due to nonuniformity. By making an estimate of the

correlation between pixels in the array, we are estimating the correlation of the

nonuniformity. The figures show that the correlation falls off significantly for a difference

of one pixel, which indicates the presence of high spatial frequencies in the sensor

nonuniformity.
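One common way to estimate such a correlation is a normalized sample autocorrelation along rows (horizontal) or columns (vertical) of a blackbody frame. The sketch below is an assumption about the estimator, since the exact formula used for the figures is not given in the text, and the function names are hypothetical.

```python
def horizontal_autocorrelation(frame, max_lag):
    """Normalized autocorrelation of a 2-D frame for horizontal pixel separations 0..max_lag."""
    rows, cols = len(frame), len(frame[0])
    count = rows * cols
    mean = sum(sum(row) for row in frame) / count
    variance = sum((v - mean) ** 2 for row in frame for v in row) / count
    result = []
    for lag in range(max_lag + 1):
        acc, pairs = 0.0, 0
        for row in frame:
            for j in range(cols - lag):
                acc += (row[j] - mean) * (row[j + lag] - mean)
                pairs += 1
        # Divide by the variance so that a separation of zero gives exactly 1.
        result.append(acc / (pairs * variance))
    return result

def vertical_autocorrelation(frame, max_lag):
    """Same estimate along columns, obtained by transposing the frame."""
    transposed = [list(col) for col in zip(*frame)]
    return horizontal_autocorrelation(transposed, max_lag)
```

For a frame whose pixel-to-pixel variations are uncorrelated, the estimate drops from 1 at zero separation to near 0 at a separation of one pixel; a slowly varying pattern would instead stay close to 1 over several pixels.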

[Figure 3-6 plot; horizontal axis: Pixel Separation]

Figure 3-6: Horizontal spatial correlation of nonuniformity

[Figure 3-7 plot; horizontal axis: Pixel Separation]

Figure 3-7: Horizontal spatial correlation of nonuniformity

[Figure 3-8 plot; horizontal axis: Pixel Separation]

Figure 3-8: Vertical spatial correlation of nonuniformity

[Figure 3-9 plot; horizontal axis: Pixel Separation]

Figure 3-9: Vertical spatial correlation of nonuniformity

3.4 Temporal Characteristics of Nonuniformity

If the nonuniformity were constant in time, then we could perform compensation

just once, and we would expect the performance of the compensation algorithm to remain

high. However, drift in FPA parameters and differences in operating conditions cause a

drift in the nonuniformity. This requires that compensation be performed periodically, in

order to keep the nonuniformity compensation as accurate as possible.

To get an indication of the magnitude of the drift, Figure 3-10 shows the response

of an InSb array to a 25°C blackbody over a period of 6 hours. The units of counts are

not very revealing about the magnitude of the drift. However, the temperature

scale on the right axis, which was calculated from the data in Figure 3-3, indicates that

over the viewing period the array response would drift almost 0.5°C. That is, a blackbody

of 25°C would appear to warm up to almost 25.5°C after a number of hours. These data

indicate that the mapping between sensor counts and temperature changes with time, that

is, that recalibration is necessary to get an accurate measurement of temperature.

However, it is not clear from the mean response of the array how the nonuniformity

changes with time.

[Figure 3-10 plot; horizontal axis: Time (hours)]

Figure 3-10: Relative drift of mean blackbody response of InSb array (the initial mean

value of 1065.6 counts was subtracted from all mean values)

The standard deviation of the response of the array to a blackbody is a measure of

the magnitude of the nonuniformity. In addition to the appearance of temperature drift

over time, the magnitude of nonuniformity appears to increase over a period of time.

Figure 3-11 shows the drift in the standard deviation of the response to the 25°C

blackbody. The drift in the standard deviation of the FPA response, although very small

over the 6 hour time period, indicates that the performance of any nonadaptive

nonuniformity algorithm will degrade with time.

[Figure 3-11 plot; horizontal axis: Time (hours)]

Figure 3-11: Drift of standard deviation of blackbody response of InSb array (the initial

standard deviation of 73.7 counts was subtracted from all standard deviation values)

3.5 Summary

The measurements and analysis of blackbody data in this chapter illustrate the

important features of nonuniformity.

The high spatial frequency and relatively large

magnitude prove the need to compensate for nonuniformity prior to target detection. The

drift in array response indicates that successful compensation algorithms will need to be

adaptive.

Finally, the relative importance of gain and offset correction needs to be

examined when evaluating compensation algorithms.

4 Source-Based Nonuniformity Compensation

In this chapter source-based nonuniformity compensation algorithms will be

examined.

This evaluation will provide a basis of comparison for the scene-based

nonuniformity compensation algorithms addressed in Chapter 5. After a discussion of

implementation, the performance of these algorithms will be presented using data from the

CE camera used in Chapter 3.

In source-based compensation techniques, one or more uniform sources are used

to estimate the nonuniformity compensation coefficients. The number of compensation

coefficients depends on the number of temperatures imaged, as illustrated in Figure 4-1.

If we take a blackbody measurement at one temperature, we can obtain an offset

correction term for each detector element in the array. If we take a second temperature

measurement, then we can also compensate for gain variations in each pixel.

Any

additional temperature measurements can be used to compensate for higher order terms or

to do piecewise-linear compensation between adjacent temperature values. The specific

calculations of the coefficients will be covered in the performance examples.

In addition to using blackbody measurements to perform nonuniformity

compensation, these blackbody measurements can be used to perform calibration. One

advantage of source-based techniques is that they can be used to calibrate the sensor as

calibrated blackbody frames are measured.

Figure 4-1: Compensation block diagram

4.1 Implementation Issues

The two-or-more-point nonuniformity compensation techniques are not

computationally complicated to implement; however, taking the calibration frames

presents various problems. Inserting the blackbody into the field of view of the sensor

requires additional hardware and optics; in some systems, the array is mechanically rotated

in its housing to view the blackbody reference which is located behind the array. Another

potential implementation problem is that the blackbody temperature must be accurately

controlled, which requires a precision cooling and heating system.

In addition to hardware requirements for source-based nonuniformity

compensation, measurements of the uniform calibrated source must be obtained. The time

required to make these measurements is time that cannot be spent imaging scene

information.

Finally, the change in nonuniformity with time requires that compensation

coefficients be recalculated periodically, based on the FPA characteristics. The time frame

over which recalibration must occur depends on the performance requirements for the

imaging system.

4.2 Performance

The performance of the source-based algorithms depends on the number of

uniform temperature sources used. It is expected that the more temperatures that are used

in the compensation, the better the performance, assuming that the temperatures of the

blackbodies span the range of expected scene temperatures. However, there is a tradeoff

between the accuracy of the compensation and the amount of time and storage that is

associated with calibrating at multiple temperatures. Most applications use two-point

compensation. If more than two temperature points are used, it is generally for the

purpose of performing piecewise-linear compensation.

The experimental data used to evaluate the performance of the one-point and two-point nonuniformity compensation (NUC) techniques are the blackbody data obtained

from measurements using the CE camera to image blackbodies of varying temperatures.

There are two types of noise in the sensor: nonuniformity, or fixed pattern noise,

and temporal noise, which consists of photon noise and additive readout noise. The

photon noise is a function of the exposure and will vary from frame to frame. As exposure

levels increase, the photon noise becomes less significant relative to the signal, since it

grows only as the square root of the exposure. To minimize the effects of temporal noise in the compensation, multiple

frames of data are averaged together before calculating correction coefficients. In the

measurements in this section, the coefficients are applied to averaged frames, rather than

individual frames. This reduces temporal noise variations that will contribute to the

compensation error. The temporal noise is investigated as a separate issue.
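The benefit of frame averaging can be sketched numerically. The example below assumes zero-mean Gaussian temporal noise that is independent from frame to frame, an idealization under which averaging N frames reduces the temporal noise standard deviation by roughly the square root of N. The frame size, noise level, and function names are illustrative assumptions.

```python
import random
import statistics

def noisy_frames(pattern, n_frames, temporal_sigma, seed=0):
    """Generate frames equal to a fixed pattern plus independent Gaussian temporal noise."""
    rng = random.Random(seed)
    return [[[v + rng.gauss(0.0, temporal_sigma) for v in row] for row in pattern]
            for _ in range(n_frames)]

def average_frames(frames):
    """Pixel-by-pixel mean of a list of equally sized frames."""
    n = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(f[i][j] for f in frames) / n for j in range(cols)] for i in range(rows)]

def array_std(frame):
    """Standard deviation over all pixels of a frame."""
    return statistics.pstdev([v for row in frame for v in row])

# A perfectly uniform 20x20 pattern, so any spread is temporal noise only.
flat = [[1000.0] * 20 for _ in range(20)]
frames = noisy_frames(flat, n_frames=200, temporal_sigma=1.3)
print("single frame std:", array_std(frames[0]))
print("200-frame average std:", array_std(average_frames(frames)))
```

Under these assumptions, the residual noise in the averaged frame is small enough that the fixed pattern noise can be examined largely in isolation.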

4.2.1 One-Point Compensation

In one-point compensation, data at one temperature are required and only an offset

correction term is calculated. The calculation for the offset correction term for each pixel,

o(i, j) is as follows:

$$o(i,j) = \mu_L - c_L(i,j)$$

where $c_L$ is the low temperature calibration frame. The offset term will correct each pixel

in the calibration frame to be the mean value of the array response, $\mu_L$.

In the following example, a 25°C blackbody was used as the compensation

temperature. To minimize the effects of temporal noise, the offset coefficients were

calculated from a 200-frame average. The derived offset compensation coefficients were

then added to the 200-frame averaged images of the blackbody sources over a range of

temperatures, thereby isolating the fixed pattern noise from the temporal noise. Since we

are imaging a uniform source, the standard deviation of the compensated images is the

residual compensation error.
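The one-point procedure described above can be sketched in a few lines of Python. This is a minimal sketch, assuming frames are stored as lists of rows; the function names are hypothetical and the code is not the IDL processing used for the measurements.

```python
def frame_mean(frame):
    """Mean value over all pixels of a frame."""
    return sum(sum(row) for row in frame) / (len(frame) * len(frame[0]))

def one_point_offsets(cal_frame):
    """Offset for each pixel: array mean of the calibration frame minus the pixel value."""
    mu = frame_mean(cal_frame)
    return [[mu - v for v in row] for row in cal_frame]

def apply_offsets(frame, offsets):
    """Add the stored per-pixel offsets to a frame."""
    return [[v + o for v, o in zip(f_row, o_row)] for f_row, o_row in zip(frame, offsets)]

# Hypothetical 2x3 averaged calibration frame, in counts.
calibration = [[1100.0, 1085.0, 1123.0],
               [1091.0, 1140.0, 1097.0]]
offsets = one_point_offsets(calibration)
corrected = apply_offsets(calibration, offsets)
```

Applying the offsets back to the calibration frame makes every pixel equal to the array mean, which is why the residual error is zero at the compensation temperature. Frames taken at other temperatures keep whatever gain-related spread the offsets cannot remove.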

Figure 4-2 illustrates the standard deviation of the corrected images. At 25°C, the

compensation temperature, the error is zero. This was forced to be the case because this

compensation technique calculates the offsets by eliminating the variance at the

compensation temperature.

However, the error increases the further the blackbody

temperature is from the compensation temperature. The left axis is the standard deviation

in sensor counts, while the right axis indicates the equivalent standard deviation in

temperature (using the data from Figure 3-3).

[Figure 4-2 plot; horizontal axis: Temperature (degrees C)]

Figure 4-2: One-point NUC using 25°C as calibration temperature

This implies that the offset correction is optimized for images whose scene

temperature is close to that of the blackbody used for compensation. Figure 4-3 plots the

standard deviation of the original images as well as the error after one-point

compensation. It is apparent that the error after compensation is significantly less than

the original nonuniformity, even at temperatures away from the offset temperature.

[Figure 4-3 plot; horizontal axis: Temperature (degrees C)]

Figure 4-3: Comparison of original array nonuniformity and error after one-point NUC

4.2.2 Two-Point Compensation

In two-point compensation, data from two temperatures are required to calculate

offset and gain coefficients. Since the result of the one-point compensation indicated that

the best performance occurred close to the compensation temperature, the compensation

temperatures should span the expected range of scene temperatures.

The offset and gain coefficients, o(i, j) and g(i, j), can be calculated as follows:

$$\mu_L = \frac{1}{N}\sum_i \sum_j c_L(i,j), \qquad \mu_H = \frac{1}{N}\sum_i \sum_j c_H(i,j)$$

$$g(i,j) = \frac{\mu_H - \mu_L}{c_H(i,j) - c_L(i,j)}$$

$$o(i,j) = \mu_L - g(i,j)\,c_L(i,j) = \mu_L - \frac{\mu_H - \mu_L}{c_H(i,j) - c_L(i,j)}\,c_L(i,j)$$

where $c_L$ and $c_H$ are the low and high temperature calibration frames and $N$ is the number of pixels in the array. As is the case with

the one-point compensation, the gain and offset coefficients correct each pixel in the

compensation frames to their respective means, $\mu_L$ and $\mu_H$.

In the next example, the compensation temperatures were 25°C and 40°C. Again,

200-frame averages were constructed in order to calculate the offset and gain coefficients.

These coefficients were applied to the same set of data as the one-point compensation

algorithm. The standard deviation of the corrected images is a measure of the residual

compensation error.
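A minimal Python sketch of the two-point calculation is given below. It assumes the correction is applied to each pixel as gain times pixel value plus offset, which is the form that maps both calibration frames to their array means; the function names and the small synthetic detector are hypothetical.

```python
def frame_mean(frame):
    """Mean value over all pixels of a frame."""
    return sum(sum(row) for row in frame) / (len(frame) * len(frame[0]))

def two_point_coefficients(cal_low, cal_high):
    """Per-pixel gain and offset from low and high temperature calibration frames."""
    mu_l, mu_h = frame_mean(cal_low), frame_mean(cal_high)
    # Assumes no dead pixels, i.e. cal_high differs from cal_low at every pixel.
    gains = [[(mu_h - mu_l) / (ch - cl) for cl, ch in zip(rl, rh)]
             for rl, rh in zip(cal_low, cal_high)]
    offsets = [[mu_l - g * cl for g, cl in zip(rg, rl)]
               for rg, rl in zip(gains, cal_low)]
    return gains, offsets

def apply_two_point(frame, gains, offsets):
    """Corrected pixel = gain * pixel + offset (assumed form of the correction)."""
    return [[g * x + o for x, g, o in zip(rx, rg, ro)]
            for rx, rg, ro in zip(frame, gains, offsets)]

# Hypothetical detector with purely linear nonuniformity: x = a + b * r.
a = [[5.0, -3.0, 0.0], [2.0, -8.0, 4.0]]
b = [[1.00, 0.95, 1.05], [1.10, 0.90, 1.00]]

def respond(r):
    return [[ai + bi * r for ai, bi in zip(ra, rb)] for ra, rb in zip(a, b)]

cal_low, cal_high = respond(100.0), respond(200.0)
gains, offsets = two_point_coefficients(cal_low, cal_high)
corrected = apply_two_point(respond(150.0), gains, offsets)
spread = max(max(row) for row in corrected) - min(min(row) for row in corrected)
```

For a detector that is exactly linear, the corrected frame is uniform at any radiance, not only at the two calibration points. Residual error in measured data therefore reflects higher order terms, drift, and temporal noise rather than the offset and gain terms themselves.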

Figure 4-4 is the graph of the compensation error as a function of the blackbody

temperature. As with the one-point compensation, the minimum error is achieved at the

compensation points.

[Figure 4-4 plot; horizontal axis: Temperature (degrees C)]

Figure 4-4: Two-point NUC using 25°C and 40°C as calibration temperatures

The performance of the two-point nonuniformity compensation algorithm was

considerably better than that of the one-point algorithm.

Figure 4-5 compares the

performance of the two-point technique with the one-point technique and the original

image nonuniformity.

[Figure 4-5 plot; horizontal axis: Temperature (degrees C)]

Figure 4-5: Comparison of original nonuniformity with one-point and two-point NUC

4.2.3 Temporal Noise Consideration

In the previous treatment of one-point and two-point nonuniformity compensation,

the techniques were applied to averaged frames of data, in order to lessen the effects of

temporal noise. To estimate the effects of the temporal noise on the compensated image,

we can apply the correction coefficients to individual frames of data, rather than the 200-frame averages as in the previous sections. Figure 4-6 illustrates the variation in the

individual frames of data after one-point correction. These variations are significantly less

than the compensation error that is present in Figure 4-2, and therefore are not the dominant

source of error in the system, except at or very near the calibration temperature.

[Figure 4-6 plot; horizontal axis: Frame number; annotation: Mean RMS Error 1.22 counts (0.044 degrees C)]

Figure 4-6: Standard deviation of single frames after applying offset correction to 200

consecutive frames (frame rate = 51.44 Hz)

To observe the magnitude of the temporal noise, we examine the response of a

single pixel after compensation. The average response of the individual pixel over the 200

frames is identical to the average corrected value over the array, and the fluctuations,

shown in Figure 4-7, are a result of the temporal noise. The variation in response is

approximately 1.3 counts (0.046°C). Comparing this value with the one-point and two-point compensation performance in Figure 4-2 and Figure 4-4, it is clear that this temporal

noise dominates for the entire range of temperatures in the two-point nonuniformity

compensation and at the calibration temperature in the case of one-point nonuniformity

compensation.

[Figure 4-7 plot; horizontal axis: Frame number]

Figure 4-7: Temporal variation of one pixel after offset compensation (minus mean pixel

value of 1223.2 counts)

4.2.4 Investigation of Gain and Offset Terms

Once we have the gain and offset terms for a calibration frame, we can return to

the issue of the relative importance of the gain and the offset portions of the

nonuniformity. Histograms of the offset and gain coefficients for the previous two-point

nonuniformity compensation algorithm are presented in Figure 4-8 and Figure 4-9

respectively. From these coefficients we can determine the effects of the gain and offset

terms of the nonuniformity. The contribution of the offset term is its standard deviation,

31.3 counts.

The contribution of the gain term at each temperature is the standard

deviation of the gain coefficients, 0.047, multiplied by the output in counts at that

temperature. For the 25°C blackbody, the average output was 1107 counts, which means

the standard deviation of the gain component was 52.0 counts.

This indicates that

compensation for the gain term is indeed important for removing nonuniformity.

[Figure 4-8 plot; horizontal axes: Offset (counts) and Offset (degrees C)]

Figure 4-8: Histogram of Offset Coefficients in 2pt NUC

[Figure 4-9 plot; horizontal axis: Gain]

Figure 4-9: Histogram of Gain Coefficients in 2pt NUC

From these coefficients, we can make an estimate of the nonuniformity in the

array.

In the previous chapter we assumed that the multiplicative and additive

components dominate the nonuniformity. These components are calculated from the gain

and offset coefficients as follows, where a is the additive component and b is the

multiplicative component,

$$b(t) = \frac{1}{g(t)}, \qquad a(t) = -\frac{o(t)}{g(t)}.$$

This relationship will be used in the next chapter, to create nonuniformities in data for the

purposes of testing scene-based algorithm performance.
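One way to see where this relationship comes from is sketched below. It assumes that the two-point correction is applied as $y = g\,x + o$ (the form implied by the coefficient definitions in Section 4.2.2, though not written out there) and that the corrected output $y$ is identified with the scene term $r$. The time dependence is suppressed for brevity, and the sign of $a$ depends on this assumed convention for the offset.

```latex
\begin{align*}
x &= a + b\,r
  &&\Rightarrow\quad r = \frac{x - a}{b} = \frac{1}{b}\,x - \frac{a}{b},\\
y &= g\,x + o \quad\text{with } y = r
  &&\Rightarrow\quad g = \frac{1}{b},\qquad o = -\frac{a}{b},\\
& &&\Rightarrow\quad b = \frac{1}{g},\qquad a = -\frac{o}{g}.
\end{align*}
```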

4.3 Summary

Source-based techniques work very well near the compensation temperatures. The

resulting compensation error across the wide range of temperatures was far lower than

the original scene nonuniformity. Two-point compensation techniques outperform the

one-point techniques across the 10°C - 45°C temperature range, on average, by a factor of

18 to 1.

5 Scene-Based Nonuniformity Compensation

In this chapter the characteristics of scene-based nonuniformity compensation

algorithms are examined. The performance of one of these algorithms will be evaluated

and compared to the source-based compensation techniques using both blackbody

measurements and terrain scene data collected using an airborne IR sensor. A method for

determining the parameters for optimal performance, as a function of the temporal

correlation of the data, is developed and tested.

Scene-based nonuniformity compensation techniques use scene data, instead of

blackbody calibration data, to estimate and compensate for array nonuniformities. One

advantage of these techniques is that they are adaptive and can potentially compensate for

drift in FPA response. Another advantage of scene-based techniques is that they do not

require blackbody data, which can simplify the imaging system.

There are, however, potential drawbacks to scene-based techniques. First, the

technique computes estimates of the compensation parameters and may thus result in

additional errors relative to source-based techniques. Also, the scene-based techniques

are only compensation techniques; they do not provide the absolute temperature reference

that is found with source-based methods. Finally, there is an added computational cost

relative to source-based techniques.

The simplest implementation of a scene-based algorithm constructs an estimate of

the offset nonuniformity using some form of weighted averaging over diverse scenes. This

is similar to the one-point source-based compensation. However, as illustrated in Chapter

4, the offset correction only performs well at or near the calibration temperature. In

effect, the scene-based algorithm performs a one-point compensation, but because it is

adaptive it behaves like one-point compensation with a blackbody

source whose temperature is always the average scene temperature. While this is an

advantage, the performance will depend on the characteristics of the background scene, as

will be discussed later in the chapter.

5.1 Implementation Issues

One implementation is to use a temporal high-pass filter to correct each pixel

x(i,j,n) individually, where n is the frame number and (i,j) denotes the location of the pixel

in the array. In this case, an average of the previous pixel values, f(i,j,n), is constructed,

giving more weight to the more recent pixels, and then subtracted from x(i,j,n) to yield the

compensated pixel value, y(i,j,n). This can be accomplished with the use of a recursively

computable low-pass filter, as follows,

$$y(i,j,n) = x(i,j,n) - f(i,j,n)$$

$$f(i,j,n) = \frac{1}{m}\,x(i,j,n) + \frac{m-1}{m}\,f(i,j,n-1),$$

where m is used to shape the impulse response of the temporal filter. The optimal value

for m depends on the characteristics of the background scene.
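The recursion can be sketched in Python as follows. The text does not say how the running average is initialized, so this sketch assumes it starts at the first frame, which makes the first compensated frame zero. The function name and the test sequence are hypothetical.

```python
def temporal_highpass(frames, m):
    """Return compensated frames y(n) = x(n) - f(n), with
    f(n) = x(n) / m + (m - 1) / m * f(n - 1) computed independently for each pixel."""
    running = None
    outputs = []
    for x in frames:
        if running is None:
            # Assumption: initialize the running average with the first frame.
            running = [row[:] for row in x]
        else:
            running = [[xv / m + (m - 1) / m * fv for xv, fv in zip(x_row, f_row)]
                       for x_row, f_row in zip(x, running)]
        outputs.append([[xv - fv for xv, fv in zip(x_row, f_row)]
                        for x_row, f_row in zip(x, running)])
    return outputs

# Two pixels with different fixed levels; the first pixel steps up by 10 counts
# in the last frame while the second pixel stays constant.
sequence = [[[10.0, 50.0]]] * 5 + [[[20.0, 50.0]]]
compensated = temporal_highpass(sequence, m=4)
```

In this sequence the static difference between the two pixels is removed entirely, while the sudden change in the first pixel passes through scaled by (m - 1)/m. A larger m therefore preserves more of a transient but adapts more slowly to drift, which is the tradeoff behind choosing m from the temporal correlation of the scene.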

Since each pixel is corrected independently of the other pixels in the array, there is

a constraint on the background scene. The assumption is that the computed offset, which

is an estimate of the nonuniformity, is the sum of the average value of the nonuniformity

and the average value of the scene at that pixel. In order to make an accurate estimate of

the nonuniformity, we need to guarantee that the average scene radiance that is imaged by

each pixel is the same across the array. If this is not the case, variations in the scene from

pixel to pixel may not be preserved. This is a trivial constraint when applying the scene-based techniques to blackbody data, since the scene radiance is, by definition, constant

across the array. However, this is an issue when we apply the filter to scenes that have

spatial variations.
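The constraint can be made explicit with a short worked derivation. As an assumption for illustration only, suppose each pixel observes a scene term s(i,j,n) plus a fixed offset nonuniformity o(i,j), and let \bar{s}(i,j) denote the time average of the scene at that pixel. Because the low-pass filter has unit gain for constant inputs, its output settles near the time average of its input:

```latex
\begin{aligned}
x(i,j,n) &= s(i,j,n) + o(i,j), \\
f(i,j,n) &\approx \bar{s}(i,j) + o(i,j), \\
y(i,j,n) &= x(i,j,n) - f(i,j,n) \approx s(i,j,n) - \bar{s}(i,j).
\end{aligned}
```

Under this model the offset o(i,j) cancels, but so does the per-pixel scene average. If \bar{s}(i,j) is the same for every pixel, only a constant level is lost; if it differs from pixel to pixel, that spatial structure is removed together with the nonuniformity.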

5.2 Performance

The initial performance measures of this scene-based technique are made by

applying the temporal high-pass filter to the blackbody data from the CE camera. The

performance can be compared directly to that of the source-based offset compensation

technique. This comparison is not a complete evaluation of the technique since scene

characteristics will affect the performance of the algorithm, but it provides a starting point

for the analysis.

After applying the temporal high-pass filter to blackbody data, we will examine the

performance on synthesized images of a given temporal correlation and imposed

nonuniformity. These images were created by segmenting a large single image obtained

from the Infrared Measurements Sensor (IRMS). The IRMS is a dual waveband (MWIR

and LWIR) scanning system, which can image 20° in azimuth and 2.25° in elevation, and

has an instantaneous field of view (IFOV) of 100 µrad.

A metric for determining the

optimal value of m, based on the temporal correlation of the scenes, is developed using

these controlled scenes.

After evaluating the performance on the simulated data, the algorithms are applied

to scene data obtained from the Skyball airborne IR sensor. The Skyball system uses

128x128 HgCdTe FPAs for the MWIR (3.4 - 4.9 µm) and LWIR (7.6 - 9.4 µm)

wavebands; in this thesis we use the MWIR waveband data.

The system has a

1.74° x 1.74° FOV and an IFOV of 238 µrad. There are calibration frames preceding

and/or following the scenes, which allow for a comparison between source-based and

scene-based techniques.

5.2.1 Offset Comparison with Source-Based Techniques

We have already examined offset correction for one-point source-based

compensation. To compare the performance of the scene-based temporal high-pass filter

with that of the one-point compensation, we apply the algorithms to the CE camera

images of a 25 °C blackbody, as used in the temporal noise measurements in Chapter 4.

Since the scene is constant, we can examine the steady state performance of the temporal

high-pass filter for various values of the filter parameter m.

The results of applying the temporal high-pass filter are shown in Figure 5-1. The

residual error after compensation is illustrated for three different values of m. As evident

in the graph, the number of frames it takes for the algorithm to reach steady state

performance is proportional to m. As the value of m increases, more frames of data are

required for the filter to adapt to sudden changes in the scene.
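The proportionality to m follows from the recursion itself: for a constant input and a zero initial state (an assumption, as the initialization is not stated), the residual shrinks by a factor of (m-1)/m with every frame. The sketch below counts the frames needed for that transient to fall below a chosen tolerance; the function name, the 1% tolerance, and the values of m are illustrative only.

```python
import math


def frames_to_settle(m, tolerance=0.01):
    """Frames needed for the start-up transient to fall below `tolerance`.

    With f initialised to zero and a constant input, the residual after
    k processed frames is ((m - 1) / m) ** k times the input, so we solve
    ((m - 1) / m) ** k <= tolerance for the smallest integer k.
    """
    if m <= 1:
        return 1  # m = 1 gives f = x, so the output is zero immediately
    return math.ceil(math.log(tolerance) / math.log((m - 1) / m))


for m in (2, 5, 10):  # illustrative values only
    # Second number: the large-m approximation m * ln(1 / tolerance).
    print(m, frames_to_settle(m), round(m * math.log(100), 1))
```

For large m, ln(m/(m-1)) is approximately 1/m, so the settling time approaches m ln(1/tolerance), which is linear in m.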

[Figure 5-1 plot: residual error versus Frame Number (0 to 200) for three values of m]

Figure 5-1: Performance of scene-based temporal high-pass filter applied to 200 frames of

blackbody data at 25 °C

To get a better indication of the performance of the temporal high-pass filter,

Figure 5-2 shows the steady state performance (frames 100-200) for the three values of m

used in Figure 5-1. The general behavior is similar for the three values of m. However,

the lower the value of m, the lower the average steady state error. This is a result of the

filter implementation; for low values of m, more of the current frame is used in the

estimate of the nonuniformity, therefore more of the variations in an individual frame will

be removed. This will have an impact on target detection, since targets may be suppressed

along with the background.
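The dependence of the steady state error on m can be estimated analytically. The sketch below assumes the input noise at each pixel is temporally white (uncorrelated from frame to frame), which is an assumption of this example rather than a measured property of the CE camera data. Under that assumption, the ratio of output to input noise variance is the sum of the squared impulse response of the high-pass filter, which has the closed form 2(m-1)^2 / (m(2m-1)).

```python
def residual_noise_ratio(m, taps=10_000):
    """Output/input noise variance ratio for temporally white input noise.

    The high-pass output y = x - f has impulse response
        h[0] = 1 - 1/m,   h[k] = -(1/m) * ((m - 1)/m) ** k  for k >= 1,
    and for white noise the variance ratio is the sum of h[k] ** 2.
    """
    a = (m - 1) / m
    total = (1 - 1 / m) ** 2
    for k in range(1, taps):
        total += ((1 / m) * a ** k) ** 2
    return total


def residual_noise_ratio_closed_form(m):
    """Closed form of the same sum: 2 (m - 1)^2 / (m (2m - 1))."""
    return 2 * (m - 1) ** 2 / (m * (2 * m - 1))


for m in (2, 5, 10):  # illustrative values only
    print(m, residual_noise_ratio(m), residual_noise_ratio_closed_form(m))
```

The ratio grows with m and approaches 1 as m becomes large, which agrees with the observation that lower values of m give a lower steady state error. The same mechanism attenuates any signal that appears in a single frame, including a target.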

[Figure 5-2 plot: steady state residual error versus Frame Number (100 to 200), with curves labeled by m (legible labels: m=2, m=10)]

Figure 5-2: Performance of scene-based temporal high-pass filter applied to 200 frames of

blackbody data at 25 °C

In addition to comparing the temporal high-pass filter for different values of m, we

would like to examine the performance relative to the performance of the one-point

compensation discussed in Chapter 4. In Figure 5-3, the results of the temporal high-pass

filter (for m=5) are compared to the results after one-point compensation. The offset for

the one-point compensation is derived from an average of the 200 frames and then applied

to each of the frames individually, as in Figure 4-6.

The one-point compensation

performance provided a measurement of the temporal noise limited performance. In the

case of the temporal high-pass filter, the residual compensation error is lower than that

obtained by the one-point compensation. This results from the use of a fraction of the

current scene in the estimation of the nonuniformity, thus reducing some of the temporal

noise; when we image actual scenes, we expect that this algorithm will also

reduce clutter.

In order to verify that the higher residual error in the one-point compensation is

not due to sensor drift, we can also apply an offset correction which simply subtracts a

5-frame local average from each input frame. This is indicated in Figure 5-3 by the dotted

line. If there were significant drift, we would see that the local average would perform

better than the offset calculated by averaging all 200 frames. Since this is not the case,

background suppression, not drift in the sensor, is the reason for the decrease in residual

error in the temporal high-pass filter.
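The two reference corrections used in this comparison can be sketched as follows. Several details are assumptions made for illustration: the local average is taken over the k frames preceding the current one (the text does not say how the window is aligned), the error metric is the per-frame RMS across the array, and the test data are simulated rather than taken from the CE camera.

```python
import numpy as np


def one_point_offset(frames):
    """Subtract the per-pixel mean over all frames from every frame."""
    frames = np.asarray(frames, dtype=float)
    return frames - frames.mean(axis=0)


def local_average_offset(frames, k=5):
    """Subtract the average of the k preceding frames from each frame.

    Assumption: the window excludes the current frame, so the first k
    frames have no output and the result has N - k frames.
    """
    frames = np.asarray(frames, dtype=float)
    out = np.empty_like(frames[k:])
    for n in range(k, len(frames)):
        out[n - k] = frames[n] - frames[n - k:n].mean(axis=0)
    return out


def spatial_rms(residual):
    """Per-frame RMS of the residual across the array (one possible metric)."""
    return np.sqrt((residual ** 2).mean(axis=(1, 2)))


# Simulated blackbody data: fixed offsets plus white temporal noise, no drift.
rng = np.random.default_rng(1)
pattern = rng.normal(0.0, 5.0, size=(32, 32))
noisy = pattern + rng.normal(0.0, 1.0, size=(200, 32, 32))
print(spatial_rms(one_point_offset(noisy)).mean())
print(spatial_rms(local_average_offset(noisy)).mean())
```

With no drift in the simulated data, the local average leaves the slightly larger residual because it averages fewer frames. If a slow drift is added, the ordering reverses, which is the behavior the drift check relies on.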

[Figure 5-3 plot: residual error versus Frame Number (0 to 200) for the temporal high-pass filter, the running average (dotted line), and one-point compensation]

Figure 5-3: Comparison of temporal high-pass filter, running average, and one-point

compensation applied to 200 frames from CE camera of blackbody at 25 °C

5.2.2 Applying Algorithm to Synthesized Scene Data

By applying the scene-based algorithms to images of known background statistics

and nonuniformity, a relationship between the temporal correlation of the scenes and the

m factor in the temporal high-pass filter that yields optimal performance can be

constructed. A rural scene recorded in Harvard, Massachusetts was used in this study; a

portion of the image is shown in Figure 5-4. The mean temperature of the image is 9.1 °C

(1671.2 counts) and the standard deviation is 0.6 °C (15.1 counts). This IRMS image was

divided into a sequence of frames, where the apparent rate of motion of the frames is

measured as the horizontal pixel separation between frames. By changing the apparent

rate of motion of the scene, we can create scenes with different temporal correlation

lengths; the temporal correlation function is simply a scaled version of the spatial

correlation function. The rate term is the ratio of the spatial correlation length and the

temporal correlation length,

$$\text{rate} = \frac{L_s}{L_t}$$
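The segmentation described above can be sketched as a window that slides horizontally across the large image by a fixed number of pixels per frame. The function names, the integer-valued rate, and the frame width are assumptions made for this example; the actual frame size cut from the IRMS image is not given here.

```python
import numpy as np


def segment_frames(image, frame_width, rate, n_frames):
    """Cut a wide image into frames that slide horizontally.

    image       : 2-D array (rows, cols), e.g. one large scanned image
    frame_width : number of columns in each frame
    rate        : horizontal shift between consecutive frames, in pixels
    n_frames    : number of frames to extract
    """
    image = np.asarray(image)
    last_col = (n_frames - 1) * rate + frame_width
    if last_col > image.shape[1]:
        raise ValueError("image is too narrow for the requested sequence")
    return np.stack([image[:, k * rate:k * rate + frame_width]
                     for k in range(n_frames)])


def temporal_correlation_length(spatial_length, rate):
    """Temporal correlation length in frames, from rate = L_s / L_t."""
    return spatial_length / rate


# Example with a synthetic 3 x 20 "image" and a shift of 2 pixels per frame.
image = np.arange(60).reshape(3, 20)
frames = segment_frames(image, frame_width=8, rate=2, n_frames=5)
print(frames.shape)
print(temporal_correlation_length(2.0, rate=2))
```

A larger rate moves the scene faster across each pixel, so the same spatial correlation length corresponds to a shorter temporal correlation length.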

Figure 5-4: Section of IRMS image of Harvard, MA used to test temporal high-pass filter

Figure 5-5 shows the spatial correlation of the portion of the original IRMS image

that was used to create the multiple frames of data. To estimate the spatial correlation

length, we can fit the correlation data, R[n], to an exponential of the form,

$$R[n] = a\,e^{-n/L_s} + b,$$

where L_s is the spatial correlation length, and the a and b terms are scaling factors. As is

apparent in Figure 5-5, the horizontal correlation of the image is high. The exponential fit

to the correlation data is also shown in Figure 5-5 and the estimated correlation length is

approximately 2 pixels.
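One way to carry out such a fit is sketched below. It assumes the model R[n] = a exp(-n/L) + b with L the spatial correlation length, and uses a simple search over candidate lengths with a linear least-squares solve for a and b at each candidate. This is an illustrative procedure, not necessarily the fitting method used for Figure 5-5, and the candidate grid and the synthetic test data are assumptions.

```python
import numpy as np


def fit_exponential_correlation(R, lengths=None):
    """Fit R[n] ~ a * exp(-n / L) + b and return (a, b, L).

    For each candidate L the model is linear in a and b, so those two are
    solved by least squares and the L with the smallest error is kept.
    """
    R = np.asarray(R, dtype=float)
    n = np.arange(len(R))
    if lengths is None:
        lengths = np.linspace(0.1, 50.0, 5000)  # candidate L values (assumption)
    best = None
    for L in lengths:
        A = np.column_stack([np.exp(-n / L), np.ones(len(R))])
        coeffs, *_ = np.linalg.lstsq(A, R, rcond=None)
        err = np.sum((A @ coeffs - R) ** 2)
        if best is None or err < best[0]:
            best = (err, coeffs[0], coeffs[1], L)
    _, a, b, L_fit = best
    return a, b, L_fit


# Synthetic correlation data with a known correlation length of 2 pixels.
sep = np.arange(51)
R_true = 0.7 * np.exp(-sep / 2.0) + 0.3
a, b, L_fit = fit_exponential_correlation(R_true)
print(a, b, L_fit)
```

Separating the linear parameters from the nonlinear one keeps the search one-dimensional, at the cost of a resolution limited by the spacing of the candidate grid.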

[Figure 5-5 plot: horizontal spatial correlation (0 to 1) versus Pixel Separation (0 to 50), with exponential fit]

Figure 5-5: Horizontal spatial correlation of IRMS image (calculated in 100x100-pixel

blocks).

The processing steps that were used to evaluate the performance of the temporal

high-pass filter are shown in Figure 5-6.

After segmenting the original images,

nonuniformity is imposed. As discussed in Chapter 4, the gain and offset coefficients for a

particular array can be used to estimate the multiplicative and additive factors that

corrupted the original scene.

The gains and offsets used in these cases were the

coefficients that were calculated in Section 4.2.2 for the InSb array. These factors were

applied to the sequence of images in order to create a nonuniformity; this nonuniformity

would be compensated exactly by applying the gain and offset coefficients as previously

calculated.

It is important to note that there is no temporal drift in the imposed

nonuniformity. The advantage of imposing a known nonuniformity on the images is that