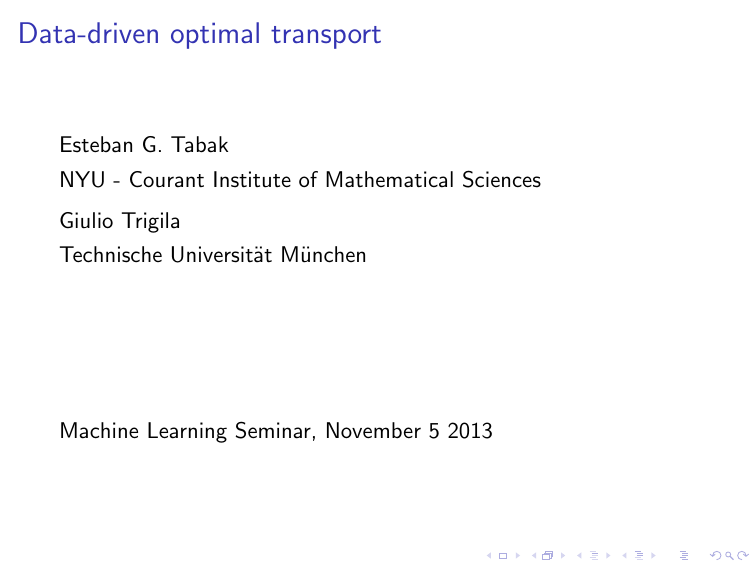

Data-driven optimal transport

Esteban G. Tabak

NYU - Courant Institute of Mathematical Sciences

Giulio Trigila

Technische Universität München

Machine Learning Seminar, November 5 2013

The story in a picture

[Figure: the density ρ(x) on X, the density µ(y) on Y, the plan π(x, y) coupling them, and sample points x_i and y_j.]

Optimal transport (Monge formulation)

Find a plan to transport material from one location to another that minimizes the total transportation cost. In terms of the probability densities $\rho(x)$ and $\mu(y)$ with $x, y \in \mathbb{R}^n$,

$$\min_{y(x)} M(y) = \int_{\mathbb{R}^n} c(x, y(x))\, \rho(x)\, dx$$

subject to

$$\int_{y^{-1}(\Omega)} \rho(x)\, dx = \int_{\Omega} \mu(y)\, dy$$

for all measurable sets $\Omega$. If $y$ is smooth and one to one, this is equivalent to the point-wise relation

$$\rho(x) = \mu(y(x))\, J_y(x),$$

where $J_y(x)$ is the Jacobian determinant of the map $y$.
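As a minimal illustration of Monge's problem, the sketch below assumes equally weighted sample points on the real line, as many sources as targets, and the quadratic cost $c(x, y) = (x - y)^2$; none of these choices comes from the slides. In one dimension with a convex cost, the optimal map is known to be the monotone rearrangement, which sends the k-th smallest source to the k-th smallest target. The helper names `monotone_map`, `total_cost` and `brute_force_cost` are hypothetical.

```python
from itertools import permutations


def cost(x, y):
    """Quadratic transport cost c(x, y) = (x - y)^2 (an assumed choice)."""
    return (x - y) ** 2


def monotone_map(xs, ys):
    """Send the k-th smallest source point to the k-th smallest target point."""
    order_x = sorted(range(len(xs)), key=lambda i: xs[i])
    sorted_y = sorted(ys)
    images = [None] * len(xs)
    for rank, i in enumerate(order_x):
        images[i] = sorted_y[rank]
    return images


def total_cost(xs, images):
    """Empirical version of M(y): the mean of c(x_i, y(x_i))."""
    return sum(cost(x, y) for x, y in zip(xs, images)) / len(xs)


def brute_force_cost(xs, ys):
    """Smallest cost over all one-to-one assignments (small inputs only)."""
    return min(total_cost(xs, perm) for perm in permutations(ys))


xs = [0.3, -1.2, 2.0, 0.9]
ys = [1.5, 4.0, 0.1, 2.2]
y_of_x = monotone_map(xs, ys)
monge_cost = total_cost(xs, y_of_x)
print(y_of_x, monge_cost, brute_force_cost(xs, ys))
```

The brute-force search is only there to confirm the sorted pairing on a tiny input; it scales factorially and is not a practical solver.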

Kantorovich formulation

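$$\min_{\pi(x,y)} K(\pi) = \int c(x, y)\, \pi(x, y)\, dx\, dy$$

subject to

$$\rho(x) = \int \pi(x, y)\, dy, \qquad \mu(y) = \int \pi(x, y)\, dx.$$

If $c(x, y)$ is convex,

$$\min M(y) = \min K(\pi)$$

and

$$\pi(S) = \rho(\{x : (x, y(x)) \in S\}),$$

that is, the optimal plan is concentrated on the graph of the optimal map $y(x)$.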

Dual formulation

Maximize

$$D(u, v) = \int u(x)\, \rho(x)\, dx + \int v(y)\, \mu(y)\, dy$$

over all continuous functions $u$ and $v$ satisfying

$$u(x) + v(y) \le c(x, y).$$

The standard example: $c(x, y) = \|y - x\|^2$

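Expanding the square gives $\int \|y - x\|^2 \pi\, dx\, dy = \int \|x\|^2 \rho\, dx + \int \|y\|^2 \mu\, dy - 2 \int x \cdot y\, \pi\, dx\, dy$, and the first two terms are fixed by the marginals, so

$$\min_{\pi} \int c(x, y)\, \pi(x, y)\, dx\, dy \;\longrightarrow\; \max_{\pi} \int x \cdot y\, \pi(x, y)\, dx\, dy,$$

with dual

$$\min_{u, v} \int u(x)\, \rho(x)\, dx + \int v(y)\, \mu(y)\, dy, \qquad u(x) + v(y) \ge x \cdot y.$$

At the optimum, the two potentials are Legendre transforms of each other:

$$u(x) = \max_{y}\, (x \cdot y - v(y)) \equiv v^*(x), \qquad v(y) = \max_{x}\, (x \cdot y - u(x)) \equiv u^*(y),$$

and the optimal map for Monge's problem is given by

$$y(x) = \nabla u(x),$$

with potential $u(x)$ satisfying the Monge-Ampère equation

$$\rho(x) = \mu(\nabla u(x))\, \det(D^2 u)(x).$$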
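As a worked check of the last two formulas, consider the one-dimensional Gaussian case $\rho = N(a, \sigma^2)$, $\mu = N(b, \tau^2)$. This example is an illustrative assumption and does not appear in the slides. Take the convex potential

$$u(x) = b\,x + \frac{\tau}{2\sigma}(x - a)^2, \qquad y(x) = u'(x) = b + \frac{\tau}{\sigma}(x - a), \qquad u''(x) = \frac{\tau}{\sigma} > 0.$$

Since $(y(x) - b)^2 / \tau^2 = (x - a)^2 / \sigma^2$, the right-hand side of the Monge-Ampère equation becomes

$$\mu(u'(x))\, u''(x) = \frac{1}{\sqrt{2\pi}\,\tau} \exp\!\left(-\frac{(x - a)^2}{2\sigma^2}\right) \frac{\tau}{\sigma} = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\!\left(-\frac{(x - a)^2}{2\sigma^2}\right) = \rho(x),$$

so the increasing affine map $y(x)$ pushes $\rho$ forward to $\mu$ and is the gradient of a convex function, as required.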

A data-based formulation

In Kantorovich's dual formulation, the objective function to maximize is a sum of two expected values:

$$D(u, v) = \int u(x)\, \rho(x)\, dx + \int v(y)\, \mu(y)\, dy.$$

Hence, if $\rho(x)$ and $\mu(y)$ are known only through samples, it is natural to pose the problem in terms of empirical means:

Maximize

$$D(u, v) = \frac{1}{m} \sum_{i=1}^{m} u(x_i) + \frac{1}{n} \sum_{j=1}^{n} v(y_j)$$

over functions $u$ and $v$ satisfying

$$u(x) + v(y) \le c(x, y).$$

A purely discrete reduction

Maximize

$$D(u, v) = \frac{1}{m} \sum_{i=1}^{m} u_i + \frac{1}{n} \sum_{j=1}^{n} v_j \tag{1}$$

over vectors $u$ and $v$ satisfying

$$u_i + v_j \le c_{ij}. \tag{2}$$

This is dual to the uncapacitated transportation problem:

Minimize

$$C(\pi) = \sum_{i,j} c_{ij}\, \pi_{ij} \tag{3}$$

subject to

$$\sum_{j} \pi_{ij} = n, \tag{4}$$

$$\sum_{i} \pi_{ij} = m. \tag{5}$$
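The relation between (1)-(2) and (3)-(5) can be checked numerically on a tiny instance. The sketch below is an illustration under stated assumptions, not the authors' solver: it takes one-dimensional points, the quadratic cost, $m = n$ and $\pi_{ij} \ge 0$, builds a feasible dual pair by alternating minimizations (a standard construction, named here as an assumption), and compares it with the primal optimum found by brute force. Under the normalization (4)-(5), any feasible pair satisfies $C(\pi) \ge m\,n\,D(u, v)$, because $\sum_{i,j} (u_i + v_j)\pi_{ij} = n \sum_i u_i + m \sum_j v_j$.

```python
from itertools import permutations

xs = [0.0, 1.0, 3.0]
ys = [0.5, 2.0, 2.5]
m, n = len(xs), len(ys)
c = [[(x - y) ** 2 for y in ys] for x in xs]  # c_ij, quadratic cost (assumed)


def dual_objective(u, v):
    """D(u, v) from equation (1)."""
    return sum(u) / m + sum(v) / n


def is_feasible(u, v, tol=1e-12):
    """Check constraint (2): u_i + v_j <= c_ij for every pair (i, j)."""
    return all(u[i] + v[j] <= c[i][j] + tol for i in range(m) for j in range(n))


# Start from u = 0 and raise each potential as far as constraint (2) allows.
u = [0.0] * m
v = [min(c[i][j] - u[i] for i in range(m)) for j in range(n)]
d_first = dual_objective(u, v)
u = [min(c[i][j] - v[j] for j in range(n)) for i in range(m)]
d_second = dual_objective(u, v)

# Primal optimum for m == n: with row sums n and column sums m, the minimum
# is attained at n times a permutation matrix, so brute force is enough here.
primal_min = min(
    n * sum(c[i][s[i]] for i in range(n)) for s in permutations(range(n))
)

print(d_first, d_second, m * n * d_second, primal_min)
```

For these particular points the bound is attained, so the dual pair is optimal; in general the alternating construction only guarantees a lower bound.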

Fully constrained scenario

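$$D(u, v) = \frac{1}{m} \sum_{i=1}^{m} u(x_i) + \frac{1}{n} \sum_{j=1}^{n} v(y_j) = \int u(x)\, \rho(x)\, dx + \int v(y)\, \mu(y)\, dy$$

with

$$\rho(x) = \frac{1}{m} \sum_{i=1}^{m} \delta(x - x_i), \qquad \mu(y) = \frac{1}{n} \sum_{j=1}^{n} \delta(y - y_j),$$

which brings us back to the purely discrete case.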

The Lagrangian

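$$L(\pi, u, v) = \int c(x, y)\, \pi(x, y)\, dx\, dy - \int \left[ \rho(x) - \frac{1}{m} \sum_{i=1}^{m} \delta(x - x_i) \right] u(x)\, dx - \int \left[ \mu(y) - \frac{1}{n} \sum_{j=1}^{n} \delta(y - y_j) \right] v(y)\, dy,$$

where $\rho(x)$ and $\mu(y)$ are shorthand for

$$\rho(x) = \int \pi(x, y)\, dy \qquad \text{and} \qquad \mu(y) = \int \pi(x, y)\, dx.$$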

In terms of the Lagrangian, the problem can be formulated as a game:

$$d: \max_{u(x),\, v(y)}\ \min_{\pi(x,y) \ge 0} L(\pi, u, v),$$

with dual

$$p: \min_{\pi(x,y) \ge 0}\ \max_{u(x),\, v(y)} L(\pi, u, v).$$

If the functions $u(x)$ and $v(y)$ are unrestricted, the problem $p$ becomes

$$p: \min_{\pi(x,y) \ge 0} \int c(x, y)\, \pi(x, y)\, dx\, dy$$

subject to

$$\rho(x) = \frac{1}{m} \sum_{i=1}^{m} \delta(x - x_i), \qquad \mu(y) = \frac{1}{n} \sum_{j=1}^{n} \delta(y - y_j),$$

as before.

A suitable relaxation

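Instead, restrict the space of functions $F$ from which $u$ and $v$ can be selected. If $F$ is invariant under dilations,

$$u \in F,\ \lambda \in \mathbb{R} \;\Rightarrow\; \lambda u \in F,$$

then the inner maximum over $u$ and $v$ is finite only when the terms of the Lagrangian that are linear in $u$ and $v$ vanish, and the problem $p$ becomes

$$p: \min_{\pi(x,y) \ge 0} \int c(x, y)\, \pi(x, y)\, dx\, dy$$

subject to

$$\int \left[ \rho(x) - \frac{1}{m} \sum_{i=1}^{m} \delta(x - x_i) \right] u(x)\, dx = 0, \qquad \int \left[ \mu(y) - \frac{1}{n} \sum_{j=1}^{n} \delta(y - y_j) \right] v(y)\, dy = 0$$

for all $u(x), v(y) \in F$, a weak formulation of the constraints.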

Some possible choices for F

1. Constants: The constraints just guarantee that ρ and µ integrate to one.

2. Linear functions: The solution is a rigid displacement that moves the mean of {x_i} into the mean of {y_j}.

3. Quadratic functions: A linear transformation mapping the empirical mean and covariance matrix of {x_i} into those of {y_j}.

4. Smooth functions with appropriate local bandwidth, chosen to neither over- nor under-resolve ρ(x) and µ(y).
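Choices 2 and 3 can be made concrete in one dimension, where the covariance matrix reduces to a variance. The sketch below is an illustration under that assumption, with hypothetical helper names `shift_map` and `affine_map`: the first matches only the means, the second matches mean and variance with an increasing affine map. In higher dimensions the scalar scale would be replaced by a symmetric positive-definite matrix.

```python
from statistics import mean, pstdev


def shift_map(xs, ys):
    """Linear test functions: rigid displacement matching the means."""
    shift = mean(ys) - mean(xs)
    return lambda x: x + shift


def affine_map(xs, ys):
    """Quadratic test functions (1D): match mean and variance."""
    mean_x, mean_y = mean(xs), mean(ys)
    scale = pstdev(ys) / pstdev(xs)  # positive, so the map is increasing
    return lambda x: mean_y + scale * (x - mean_x)


xs = [-1.0, 0.0, 0.5, 2.5]
ys = [3.0, 4.0, 7.0, 5.0, 6.0]

shift = shift_map(xs, ys)
affine = affine_map(xs, ys)
shifted = [shift(x) for x in xs]
mapped = [affine(x) for x in xs]

print(mean(shifted), mean(ys))
print(mean(mapped), pstdev(mapped), mean(ys), pstdev(ys))
```

Note that the two samples need not have the same size, since only their empirical moments enter the map.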

A flow-based, primal-dual approach

$$c = \frac{1}{2} \|x - y\|^2, \qquad y(x) = \nabla u(x).$$

We think of the map $y(x)$ as the endpoint of a time-dependent flow $z(x, t)$, such that

$$z(x, 0) = x \qquad \text{and} \qquad z(x, \infty) = y(x).$$

$z(x, t)$ follows the gradient flow

$$\dot{z} = -\nabla_z \left[ \frac{\delta \tilde{D}_t}{\delta u} \right]_{u = \frac{1}{2}\|x\|^2}, \qquad z(x, 0) = x,$$

where

$$\tilde{D}_t = \int u(z)\, \rho_t(z)\, dz + \int u^*(y)\, \mu(y)\, dy$$

and $\rho_t$ is the evolving probability distribution underlying the points $z(x, t)$.

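The variational derivative of $\tilde{D}_t$ adopts the form

$$\frac{\delta \tilde{D}_t}{\delta u} = \rho_t - \det(D^2 u)\, \mu(\nabla u),$$

which, applied at $u = \frac{1}{2}\|x\|^2$ (the potential corresponding to the identity map), yields the simpler expression

$$\frac{\delta \tilde{D}_t}{\delta u}(z) = \rho_t(z) - \mu(z),$$

so

$$\dot{z} = -\nabla_z \left[ \rho_t(z) - \mu(z) \right], \qquad z(x, 0) = x.$$

The probability density $\rho_t$ satisfies the continuity equation

$$\frac{\partial \rho_t(z)}{\partial t} + \nabla_z \cdot \left[ \rho_t(z)\, \dot{z} \right] = 0,$$

yielding

$$\frac{\partial \rho_t(z)}{\partial t} - \nabla_z \cdot \left[ \rho_t(z)\, \nabla_z \left( \rho_t(z) - \mu(z) \right) \right] = 0,$$

a nonlinear heat equation that converges to $\rho_{t=\infty}(z) = \mu(z)$.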
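To see the nonlinear heat equation at work, the sketch below integrates it on a one-dimensional grid. Everything about the discretization is an assumption made for illustration (the slides work with samples, not grids): an explicit finite-volume scheme with upwind fluxes, zero flux at the boundaries, Gaussian choices for the initial density and for µ, and hand-picked values of `h` and `dt`.

```python
import math


def gaussian(z, mean, std):
    return math.exp(-0.5 * ((z - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


h = 0.1                                             # grid spacing (assumed)
grid = [-6.0 + h * (k + 0.5) for k in range(120)]   # cell centers on [-6, 6]
mu = [gaussian(z, 1.0, 1.0) for z in grid]          # target density (assumed)
rho = [gaussian(z, -1.0, 0.8) for z in grid]        # initial density (assumed)


def l2_distance(a, b):
    return math.sqrt(h * sum((p - q) ** 2 for p, q in zip(a, b)))


def step(rho, dt):
    """One explicit step of rho_t + d/dz [rho * v] = 0, v = -d/dz (rho - mu)."""
    w = [r - t for r, t in zip(rho, mu)]
    # flux[k] lives on the face between cells k-1 and k; boundary fluxes are 0.
    flux = [0.0] * (len(rho) + 1)
    for k in range(1, len(rho)):
        velocity = -(w[k] - w[k - 1]) / h
        upwind = rho[k - 1] if velocity > 0 else rho[k]
        flux[k] = upwind * velocity
    return [r - dt * (flux[k + 1] - flux[k]) / h for k, r in enumerate(rho)]


mass_start = h * sum(rho)
dist_start = l2_distance(rho, mu)
for _ in range(3000):
    rho = step(rho, dt=0.002)
mass_end = h * sum(rho)
dist_end = l2_distance(rho, mu)

print(mass_start, mass_end)
print(dist_start, dist_end)
```

The flux form conserves total mass up to round-off, and the distance to µ shrinks as the density flows from regions where $\rho_t > \mu$ toward regions where $\rho_t < \mu$.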

In terms of samples,

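1. Objective function:

$$\tilde{D}_t = \int u(z)\, \rho_t(z)\, dz + \int u^*(y)\, \mu(y)\, dy \;\rightarrow\; \frac{1}{m} \sum_{i} u(z_i) + \frac{1}{n} \sum_{j} u^*(y_j)$$

2. Test functions: General $u(z) \in F$ → localized $u(z, \beta)$, with $\beta$ a finite-dimensional parameter and appropriate bandwidth.

3. Gradient descent:

$$-\dot{u} = \frac{\delta \tilde{D}_t}{\delta u}(z) = \rho_t(z) - \mu(z) \;\rightarrow\; -\dot{\beta} = \nabla_\beta \tilde{D}_t = \frac{1}{m} \sum_{i} \nabla_\beta u(z_i) - \frac{1}{n} \sum_{j} \nabla_\beta u(y_j)$$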
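The three steps above can be sketched as a small particle flow. This is a minimal illustration under loud assumptions, not the authors' algorithm: points are one-dimensional, the test functions are Gaussian bumps with fixed centers `CENTERS` and a constant bandwidth `ALPHA` (rather than a locally adapted one), the step size `eps` is hand-picked, and nothing enforces optimality of the composed map. At each step the gradient in β is evaluated at β = 0, where $u = \frac{1}{2} z^2$ is the identity potential, and each point is moved to $\nabla_z u(z, \beta)$.

```python
import math

ALPHA = 1.0                                     # constant bandwidth (assumed)
CENTERS = [-2.0 + 0.5 * k for k in range(15)]   # fixed bump centers (assumed)


def bump(z, center):
    """Test function F_k(z): a Gaussian bump around one center."""
    return math.exp(-0.5 * ((z - center) / ALPHA) ** 2)


def bump_grad(z, center):
    """Derivative of F_k with respect to z."""
    return -(z - center) / ALPHA ** 2 * bump(z, center)


def beta_gradient(zs, ys):
    """G_k = (1/m) sum_i F_k(z_i) - (1/n) sum_j F_k(y_j), evaluated at beta = 0."""
    return [
        sum(bump(z, c) for z in zs) / len(zs) - sum(bump(y, c) for y in ys) / len(ys)
        for c in CENTERS
    ]


def flow_step(zs, ys, eps):
    """Descend in beta, then move each point to grad_z u(z, beta)."""
    beta = [-eps * g for g in beta_gradient(zs, ys)]
    # u(z, beta) = z^2 / 2 + sum_k beta_k F_k(z), so grad_z u = z + sum_k beta_k F_k'(z)
    return [z + sum(b * bump_grad(z, c) for b, c in zip(beta, CENTERS)) for z in zs]


def discrepancy(zs, ys):
    """Squared norm of the beta-gradient; zero when all test means agree."""
    return sum(g ** 2 for g in beta_gradient(zs, ys))


zs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [2.0, 2.5, 3.0, 3.5, 4.0, 3.0]
start = discrepancy(zs, ys)
for _ in range(500):
    zs = flow_step(zs, ys, eps=0.05)
end = discrepancy(zs, ys)

print(start, end)
print(zs)
```

With fixed centers each step is a gradient-descent step on the discrepancy, so a small enough `eps` makes it decrease; the two samples may have different sizes.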

Left to discuss:

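1. Form of $u(z, \beta)$, determination of bandwidth:

$$u(z, \beta) = \frac{1}{2} \|z\|^2 + \sum_{k} \beta_k F_k\!\left( \frac{\|z - z_k\|}{\alpha_k} \right), \qquad \alpha_k \propto \left( \frac{1}{\rho_t(z_k)} \right)^{1/d}$$

2. Enforcement of the map's optimality.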

Some applications and extensions

1. Maps: fluid flow from tracers, effects of a medical treatment, resource allocation, ...

2. Density estimation: $\rho(x)$

3. Classification and clustering: $\rho_k(x)$

4. Regression: $\pi(x, y)$, $\dim(x) \ne \dim(y)$,

$$c(x, y) = \sum_{k} w_k\, \frac{\|y - y_k\|^2}{\alpha_k^2 + \|x - x_k\|^2}$$

5. Determination of worst scenario under given marginals: $\pi(x_1, x_2, \ldots, x_n)$

Thanks!