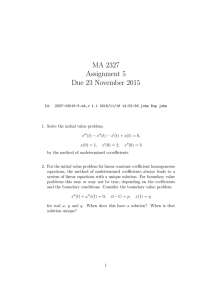

Lecture 10: Forward and Backward equations for SDEs


Miranda Holmes-Cerfon

Applied Stochastic Analysis, Spring 2015


Readings

Recommended:

• Pavliotis [2014] 2.2-2.6, 3.4, 4.1-4.2

• Gardiner [2009] 5.1-5.3

Other sections are recommended too; this is a nice book to read (and own), and you are strongly encouraged to start looking through it.

Optional:

• Oksendal [2005] 7.3, 8.1

• Koralov and Sinai [2010] 21.3, 21.4

Consider the SDE

$$dX_t = b(X_t,t)\,dt + \sigma(X_t,t)\,dW_t, \qquad X_t \in \mathbb{R}^d. \tag{1}$$

We have looked at how to solve for the solution trajectories themselves. For the next few lectures we will take another approach and study properties of the solutions via partial differential equations.

Recall (from Lecture 8) that the solutions to (1) are Markov, given sufficient smoothness and growth conditions on the coefficients. Therefore we expect to be able to describe them via their transition densities

$$p(x,t\,|\,y,s)\,dx = P\big(X_t \in [x, x+dx)\,\big|\,X_s = y\big). \tag{2}$$

This has exactly the same interpretation as the transition density for a continuous-time Markov chain, but now the state space is continuous, not discrete. In Lecture 3, we showed we could describe the evolution of the transition probabilities, as functions of (x,t) or of (y,s), by linear equations: the Kolmogorov forward and backward equations. From these we can obtain equations for the evolution of the probability density ρ(x), or for the evolution of observables $E^x f(X_t)$. Our goal will be to find such equations for the solution to the SDE above.

10.1 Forward and backward equations

The forward and backward equations come nearly directly from Itô's formula. Consider a function $f \in C_c^\infty(\mathbb{R}^d)$. Itô's formula gives

$$df(X_t) = \Big(b\cdot\nabla f + \tfrac12\,\sigma\sigma^T : \nabla^2 f\Big)\,dt + \nabla f\cdot\sigma\cdot dW_t.$$

Integrate from s to t:

$$f(X_t) - f(X_s) = \int_s^t \Big(b\cdot\nabla f + \tfrac12\,\sigma\sigma^T : \nabla^2 f\Big)(X_\tau,\tau)\,d\tau + \int_s^t \nabla f\cdot\sigma(X_\tau,\tau)\,dW_\tau.$$

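Take the expectation, conditional on $X_s = y$. The Itô integral has mean zero, so

$$E^{y,s} f(X_t) - f(y) = E^{y,s}\int_s^t \mathcal{L} f(X_\tau,\tau)\,d\tau, \tag{3}$$

where $\mathcal{L} f = b(x,t)\cdot\nabla f(x) + a(x,t) : \nabla^2 f(x)$ is a linear operator, $E^{y,s} f(X_t) \equiv E[f(X_t)\,|\,X_s = y]$, and $a = \tfrac12\sigma\sigma^T$ is the diffusion matrix.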
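A quick numerical check of (3) is sketched below, assuming a one-dimensional Ornstein-Uhlenbeck process with $b(x) = -\alpha x$ and constant σ (so $a = \sigma^2/2$), and the test function $f(x) = \cos x$, which is smooth and bounded but not compactly supported. Both sides of (3) are estimated from the same Euler-Maruyama paths; the function name `dynkin_check` and all parameter values are illustrative choices, not part of the notes.

```python
import numpy as np


def dynkin_check(x0=1.0, alpha=1.0, sigma=0.5, t=0.5, n_steps=500,
                 n_paths=20_000, seed=0):
    """Monte Carlo estimates of both sides of (3) for dX = -alpha X dt + sigma dW, f = cos."""
    rng = np.random.default_rng(seed)
    dt = t / n_steps
    a = 0.5 * sigma**2                       # diffusion coefficient a = sigma^2 / 2

    def Lf(x):
        # L f = b f' + a f'' with b = -alpha x, f' = -sin x, f'' = -cos x
        return alpha * x * np.sin(x) - a * np.cos(x)

    x = np.full(n_paths, x0)
    integral = np.zeros(n_paths)             # running value of int_0^t L f(X_tau) dtau
    for _ in range(n_steps):
        integral += Lf(x) * dt               # left-endpoint Riemann sum
        x = x - alpha * x * dt + sigma * np.sqrt(dt) * rng.standard_normal(n_paths)
    lhs = np.cos(x).mean() - np.cos(x0)      # E f(X_t) - f(x0)
    rhs = integral.mean()                    # E int_0^t L f(X_tau) dtau
    return lhs, rhs


lhs, rhs = dynkin_check()
print(lhs, rhs)   # should agree up to Monte Carlo and time-step error
```

For this linear SDE $X_t$ is Gaussian, so the left-hand side can also be computed exactly as $\cos(m)e^{-v/2} - \cos x_0$ with $m = x_0 e^{-\alpha t}$ and $v = \frac{\sigma^2}{2\alpha}(1 - e^{-2\alpha t})$. The two Monte Carlo numbers differ only by the sample mean of the Itô integral plus a small time-step error.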

From this we can immediately calculate the generator of a time-homogeneous diffusion process. Let $E^x f(X_t) = E[f(X_t)\,|\,X_0 = x]$. We have that

$$\lim_{t\to0^+}\frac{E^x f(X_t) - f(x)}{t} = \lim_{t\to0^+}\frac1t\,E^x\int_0^t \mathcal{L} f(X_\tau)\,d\tau = \mathcal{L} f(x).$$

The last step follows from the Dominated Convergence Theorem, since f and all its derivatives are bounded (see Koralov and Sinai [2010], p. 323).

Definition. The generator of a time-homogeneous diffusion process (1) is $\mathcal{L} f = b(x)\cdot\nabla f(x) + a(x) : \nabla^2 f(x)$. Indeed, from (3) we have

$$\mathcal{A} f(x) = \lim_{t\to0^+}\frac{E^x f(X_t) - f(x)}{t} = \mathcal{L} f(x) \tag{4}$$

when $f \in C_c^2$.

The domain of the generator is $D(\mathcal{A}) = \{f \in C_0(\mathbb{R}^d) : \text{the limit in (4) exists}\}$. In general, this can include functions not in $C_c^2$, in which case the expression $\mathcal{L} f$ would not apply directly.

The coefficients of the operator $\mathcal{L}$ can also be obtained from the law of the process, without considering the SDE it satisfies. We showed on HW5 that

$$b_i(x) = \lim_{t\to0}\frac{E^x\big[(X_t)_i - x_i\big]}{t}, \qquad a_{ij}(x) = \frac12\lim_{t\to0}\frac{E^x\big[((X_t)_i - x_i)((X_t)_j - x_j)\big]}{t}.$$

This is an approach that is often followed in physics textbooks, such as Gardiner [2009].
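The limits above suggest a simple numerical estimator: simulate many short paths started from x and divide the sample moments of the increment by t. The sketch below assumes the one-dimensional Ornstein-Uhlenbeck process as a test case (so $b(x) = -\alpha x$ and $a = \sigma^2/2$) and generates the short paths with a few Euler-Maruyama substeps; `estimate_coefficients` and its parameters are hypothetical names chosen for illustration.

```python
import numpy as np


def estimate_coefficients(x, t=0.01, n_sub=20, n_paths=200_000,
                          alpha=1.0, sigma=0.5, seed=1):
    """Estimate b(x) and a(x) from short-time moments of simulated OU increments."""
    rng = np.random.default_rng(seed)
    dt = t / n_sub
    X = np.full(n_paths, float(x))
    for _ in range(n_sub):                      # Euler-Maruyama on [0, t]
        X = X - alpha * X * dt + sigma * np.sqrt(dt) * rng.standard_normal(n_paths)
    dX = X - x
    b_hat = dX.mean() / t                       # approximates E^x[X_t - x] / t
    a_hat = 0.5 * np.mean(dX**2) / t            # approximates (1/2) E^x[(X_t - x)^2] / t
    return b_hat, a_hat


b_hat, a_hat = estimate_coefficients(1.0)
print(b_hat, a_hat)   # exact values for this test case: b(1) = -1.0, a = 0.125
```

Both estimates carry an O(t) bias, and the Monte Carlo noise in $\hat b$ is of order $\sigma/\sqrt{t\,n_{\text{paths}}}$, so t cannot be made very small without increasing the number of paths.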

Now we can write down the forward and backward equations.

Backward Kolmogorov Equation. (Version 1) Let $X_t$ solve a time-homogeneous SDE (1). Let $u(x,t) = E^x f(X_t) = E[f(X_t)\,|\,X_0 = x]$, where $f \in C_c^2(\mathbb{R}^d)$. Then

$$\partial_t u = \mathcal{L} u, \qquad u(x,0) = f(x), \qquad t > 0. \tag{5}$$

(Version 2) Let $X_t$ solve a (possibly time-inhomogeneous) SDE (1). Let $u(y,s) = E^{y,s} f(X_t)$, where $f \in C_c^2(\mathbb{R}^d)$. Then

$$\partial_s u(y,s) + \mathcal{L} u(y,s) = 0, \qquad u(y,t) = f(y), \qquad s < t. \tag{6}$$

(Version 3) Let $p(x,t\,|\,y,s)$ be the transition density of $X_t$ solving the (time-inhomogeneous) SDE (1). Then

$$\partial_s p + \mathcal{L}_y p = 0, \qquad p(x,t\,|\,y,t) = \delta(x-y), \qquad s < t. \tag{7}$$

Notes

• Equation (6) should be solved backward in time, subject to the terminal condition $u(y,t) = f(y)$. This is the origin of the name “backward equation.” Equation (7) should also be solved backward in time, subject to the terminal condition $p(x,t\,|\,y,t) = \delta(x-y)$.

• Version 1 can also be derived from Version 2 for a time-homogeneous process, by relabelling time as $t' = t - s$ and considering the function $u(x,t')$.
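For the Ornstein-Uhlenbeck process $dX_t = -\alpha X_t\,dt + \sigma\,dW_t$, the function $u(x,t) = E^x X_t^2$ is known in closed form, $u = x^2e^{-2\alpha t} + \frac{\sigma^2}{2\alpha}(1 - e^{-2\alpha t})$, so (5) can be checked directly. Since $f(x) = x^2$ is not compactly supported, this is only a formal illustration; the sketch below assumes $\mathcal{L} u = -\alpha x\,\partial_x u + \tfrac12\sigma^2\partial_x^2 u$ and evaluates the residual $\partial_t u - \mathcal{L} u$ with central finite differences (the helper names are hypothetical).

```python
import numpy as np

alpha, sigma = 1.0, 0.5          # illustrative OU parameters


def u(x, t):
    """Closed form of u(x, t) = E^x[X_t^2] for dX = -alpha X dt + sigma dW."""
    decay = np.exp(-2.0 * alpha * t)
    return x**2 * decay + sigma**2 / (2.0 * alpha) * (1.0 - decay)


def backward_residual(x, t, hx=1e-3, ht=1e-4):
    """Central-difference estimate of d_t u - L u, with L u = -alpha x u_x + (sigma^2/2) u_xx."""
    u_t = (u(x, t + ht) - u(x, t - ht)) / (2.0 * ht)
    u_x = (u(x + hx, t) - u(x - hx, t)) / (2.0 * hx)
    u_xx = (u(x + hx, t) - 2.0 * u(x, t) + u(x - hx, t)) / hx**2
    return u_t - (-alpha * x * u_x + 0.5 * sigma**2 * u_xx)


xs = np.linspace(-2.0, 2.0, 9)
print(np.max(np.abs(backward_residual(xs, 0.7))))   # tiny: only finite-difference error remains
```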

Proof, Version 1. This follows formally from (3), if we assume that $E^x$ and $\mathcal{L}$ commute (they do by the Leibniz integral rule if f has continuous second derivatives; see also the other arguments below). Formally, we have

$$\begin{aligned}
\partial_t u(x,t) &= \lim_{h\to0}\frac{E^x f(X_{t+h}) - E^x f(X_t)}{h} = \lim_{h\to0}\frac1h\,E^x\int_t^{t+h}\mathcal{L} f(X_\tau)\,d\tau\\
&= \lim_{h\to0}\frac1h\int_t^{t+h}\mathcal{L}\,E^x f(X_\tau)\,d\tau = \mathcal{L} u(x,t).
\end{aligned}$$

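Here is a proof (from Oksendal [2005], section 8.1) that uses our previous calculation of the generator and doesn't require formally interchanging $E$ and $\mathcal{L}$. Let's calculate:

$$\begin{aligned}
\partial_t u\big|_{(x,t)} &= \lim_{s\to0^+}\frac1s\big(u(x,t+s) - u(x,t)\big)\\
&= \lim_{s\to0^+}\frac1s\big(E^x f(X_{t+s}) - E^x f(X_t)\big)\\
&= \lim_{s\to0^+}\frac1s\big(E^x E^{X_s} f(X_t) - E^x f(X_t)\big) &&\text{(Markov property)}\\
&= \lim_{s\to0^+}\frac1s\big(E^x u(X_s,t) - u(x,t)\big)\\
&= \mathcal{L} u &&\text{(by (4), applied to } u(\cdot,t)\text{)}.
\end{aligned}$$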

Another way to write Oksendal's proof is as follows. Define the operator $T_t f(x) \equiv E^x f(X_t)$, which acts on the set of bounded, measurable functions. The Chapman-Kolmogorov equations¹ imply that $T_s(T_t f) = T_{s+t} f$. Then, for a $C^2$ function $f \in D(\mathcal{A})$, the time derivative of $u = T_t f$ is

$$\partial_t u = \lim_{h\to0}\frac{T_{t+h} f - T_t f}{h} = \lim_{h\to0}\frac{T_h(T_t f) - T_0(T_t f)}{h} = \mathcal{L}\,T_t f. \tag{8}$$

To see formally why $E$ and $\mathcal{L}$ commute, we could have factored the other way in (8), and found (assuming that we can interchange lim and $T_t$) that

$$\partial_t u = \lim_{h\to0}\frac{T_t(T_h f) - T_t(T_0 f)}{h} = T_t\,\mathcal{L} f.$$

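Proof, Version 2. (from E et al. [2014]) From Itô's formula, we have

$$du(X_\tau,\tau) = (\partial_\tau u + \mathcal{L} u)(X_\tau,\tau)\,d\tau + \nabla u\cdot\sigma\cdot dW_\tau.$$

Integrate from s to s′, where s < s′ ≤ t, and take the expectation conditional on $X_s = y$ (the Itô integral has mean zero) to get

$$\lim_{s'\to s}\frac{1}{s'-s}\big(E^{y,s}u(X_{s'},s') - u(y,s)\big) = \lim_{s'\to s}\frac{1}{s'-s}\int_s^{s'} E^{y,s}(\partial_\tau u + \mathcal{L} u)(X_\tau,\tau)\,d\tau = \partial_s u(y,s) + \mathcal{L} u(y,s).$$

However, by the Markov property we also have that

$$E^{y,s}u(X_{s'},s') = E^{y,s}\big[E^{X_{s'},s'} f(X_t)\big] = E^{y,s} f(X_t) = u(y,s),$$

so the LHS is zero. Therefore (6) holds.

¹ $P(B,s\,|\,x,t) = \int_{\mathbb{R}} P(B,s\,|\,y,u)\,P(dy,u\,|\,x,t)$, for $t \le u \le s$. See Pavliotis [2014], section 2.2.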

Proof, Version 3. We can write $u(y,s) = \int f(x)\,p(x,t\,|\,y,s)\,dx$. Apply the operator $\partial_s + \mathcal{L}$ to $u(y,s)$, and use Version 2. Assuming we can interchange derivatives and integral,² we have

$$0 = \partial_s u(y,s) + \mathcal{L} u(y,s) = \int f(x)\big(\partial_s p(x,t\,|\,y,s) + \mathcal{L}_y p(x,t\,|\,y,s)\big)\,dx.$$

This holds for all test functions f, so the equation also holds for p.

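Forward Kolmogorov Equation. (Version 1) The transition probability density $p(x,t\,|\,y,s)$ solves

$$\partial_t p = \mathcal{L}_x^* p, \qquad p(x,s\,|\,y,s) = \delta(x-y). \tag{9}$$

Here $\mathcal{L}^* f = -\nabla_x\cdot\big(b(x,t) f(x)\big) + \nabla_x^2 : \big(a(x,t) f(x)\big)$ is the formal adjoint of $\mathcal{L}$, i.e. it is the operator that satisfies $\langle \mathcal{L} f, g\rangle_{L^2} = \langle f, \mathcal{L}^* g\rangle_{L^2}$ for all smooth, compactly supported f and g.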

(Version 2) Let $\rho_0(x)$ be the initial probability density, and $\rho(x,t)$ the density at time t. Then ρ solves

$$\partial_t\rho = \mathcal{L}^*\rho, \qquad \rho(x,0) = \rho_0(x). \tag{10}$$

This is also called the Fokker-Planck equation.
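The defining property $\langle \mathcal{L} f, g\rangle = \langle f, \mathcal{L}^* g\rangle$ can be checked numerically in one dimension, where $\mathcal{L} f = b f' + a f''$ and $\mathcal{L}^* g = -(bg)' + (ag)''$. This sketch assumes illustrative coefficients $b(x) = \sin x$ and $a(x) = 1 + x^2/2$ and Gaussian test functions that are negligible at the ends of the grid [−10, 10], so boundary terms from integration by parts can be ignored; derivatives are taken with `numpy.gradient`.

```python
import numpy as np

x = np.linspace(-10.0, 10.0, 4001)
h = x[1] - x[0]


def d(v):
    """First derivative on the grid (central differences in the interior)."""
    return np.gradient(v, h)


b = np.sin(x)                               # illustrative drift b(x)
a = 1.0 + 0.5 * x**2                        # illustrative diffusion coefficient a(x) > 0
f = np.exp(-(x - 1.0)**2)                   # test function acted on by L
g = np.exp(-0.5 * (x + 0.5)**2)             # test function acted on by L*

Lf = b * d(f) + a * d(d(f))                 # L f  = b f' + a f''
Lstar_g = -d(b * g) + d(d(a * g))           # L* g = -(b g)' + (a g)''

lhs = np.sum(Lf * g) * h                    # <L f, g>  (integrands vanish at the ends)
rhs = np.sum(f * Lstar_g) * h               # <f, L* g>
print(lhs, rhs)                             # agree closely
```

With central differences the discrete derivative matrix is antisymmetric away from the endpoints, so the discrete identity holds essentially to round-off; the only requirement on f and g is that they be negligible near ±10.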

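Proof, Version 1. Write (3) as

$$\int_{\mathbb{R}^d} f(x)\,p(x,t\,|\,y,s)\,dx - f(y) = \int_s^t\int_{\mathbb{R}^d} \mathcal{L} f(x,\tau)\,p(x,\tau\,|\,y,s)\,dx\,d\tau.$$

Take $\partial_t$ to find

$$\int_{\mathbb{R}^d} f(x)\,\partial_t p\,dx = \int_{\mathbb{R}^d} (\mathcal{L} f)\,p\,dx.$$

This holds for all test functions f, so p is a weak solution to (9).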

Proof, Version 2. We have $\rho(x,t) = \int p(x,t\,|\,y,0)\,\rho_0(y)\,dy$. Multiply both sides of (9) (with s = 0) by $\rho_0(y)$ and integrate over y to get (10).

Remark. We see that the forward equation is weaker than the backward equation: in general, it is only expected to hold in a weak sense. Indeed, the forward equation requires taking derivatives of b and σ, but these are not required to be $C^2$ in general, so the derivatives may only exist in a weak sense. Another example where it holds weakly but not strongly is when the probability density contains singular measures, such as delta functions; we will see an example of this in section 10.4 below.

Remark. The Fokker-Planck equation for a Stratonovich SDE has a nice form:

$$dX_t = \sigma\circ dW_t \quad\Longrightarrow\quad \partial_t\rho = \frac12\sum_{i,j,k}\partial_i\Big(\sigma_{ik}\,\partial_j\big(\sigma_{jk}\,\rho\big)\Big).$$

² If not, e.g. at t = 0 when derivatives of p blow up, then use the trick from the Lecture 5 notes of writing $p(\cdot\,|\,y,s) = \int \partial_s p(\cdot\,|\,y,u)\,du$, and working with integrals instead.

10.2 Probability flux

It is useful to think about the Fokker-Planck equation (10) using concepts of flux, borrowing ideas from fluid dynamics and mechanics. Write (10) as

$$\partial_t\rho = \nabla\cdot\big(-b\rho + \nabla\cdot(a\rho)\big) \quad\Longleftrightarrow\quad \partial_t\rho + \nabla\cdot\mathbf{j} = 0, \qquad \mathbf{j} = b\rho - \nabla\cdot(a\rho).$$

• This is a conservation law / continuity equation for ρ.

• $\mathbf{j}$ is the probability current, or probability flux. The integral $\int_S \mathbf{j}\cdot\hat n$ gives the net probability that crosses a surface S per unit time, in the direction of the normal $\hat n$.

• To see why: consider a closed surface S bounding a domain Ω. The change in total probability in Ω per unit time is $\int_\Omega \partial_t\rho = \int_\Omega -\nabla\cdot\mathbf{j} = \int_S -\mathbf{j}\cdot\hat n$, where $\hat n$ is the unit outward normal, by the Divergence Theorem.

• There is a strong connection to fluid dynamics. Suppose

ρ = concentration of something (tracer, heat, ...)

b = velocity of fluid

a = diffusion tensor (or twice the diffusion tensor)

Then the FP equation says that the tracer is advected with velocity b, and diffuses with diffusion tensor a. Probability behaves exactly like a passive tracer in a fluid! (Note that the more familiar equation for a passive tracer is $\partial_t c + u\cdot\nabla c = [\text{diffusion}]$, because in many cases $\nabla\cdot u = 0$.) In many cases diffusion is constant and isotropic, in which case the diffusion term has the form $A\Delta\rho$ for some constant A; this has the same form as the familiar viscosity term in the fluid equations.

• The total probability changes as $\frac{d}{dt}\int_D \rho = -\int_D \nabla\cdot\mathbf{j} = \int_{\partial D} -\mathbf{j}\cdot\hat n$, where D is our domain (bounded or not). This will be conserved if $\mathbf{j}\cdot\hat n\big|_{\partial D} = 0$.
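The flux form $\partial_t\rho + \nabla\cdot\mathbf{j} = 0$ is also a natural starting point for a conservative numerical scheme: store cell averages of ρ, compute $\mathbf{j}$ at cell faces, and update each cell by the net flux through its faces. The one-dimensional finite-volume sketch below assumes the Ornstein-Uhlenbeck coefficients $b(x) = -\alpha x$ and constant $a = \sigma^2/2$ on a truncated domain [−L, L] with zero flux at both ends; the function name, grid and time step are illustrative choices.

```python
import numpy as np


def fokker_planck_fv(alpha=1.0, sigma=0.5, L=3.0, n=300, t_end=2.0):
    """Explicit finite-volume scheme for d_t rho + d_x j = 0 with j = b rho - d_x(a rho)."""
    edges = np.linspace(-L, L, n + 1)
    x = 0.5 * (edges[:-1] + edges[1:])          # cell centres
    h = edges[1] - edges[0]
    a = 0.5 * sigma**2                          # constant diffusion coefficient a = sigma^2 / 2
    b_face = -alpha * edges[1:-1]               # OU drift b(x) = -alpha x at interior faces
    dt = 0.4 * h**2 / a                         # explicit (diffusive) stability restriction
    rho = np.exp(-(x - 1.0)**2 / 0.02)          # initial density: narrow bump centred at x = 1
    rho /= rho.sum() * h                        # normalise so that sum(rho) * h = 1
    t = 0.0
    while t < t_end:
        step = min(dt, t_end - t)
        rho_face = 0.5 * (rho[:-1] + rho[1:])
        j = b_face * rho_face - a * (rho[1:] - rho[:-1]) / h   # flux through interior faces
        j = np.concatenate(([0.0], j, [0.0]))                  # reflecting: j = 0 at x = -L, L
        rho = rho - step / h * (j[1:] - j[:-1])                # net outflow from each cell
        t += step
    return x, h, rho


x, h, rho = fokker_planck_fv()
print(rho.sum() * h)          # total probability: 1 up to round-off
print(np.sum(x * rho) * h)    # mean, close to exp(-2) for the OU process started at 1
```

Every interior face flux leaves one cell and enters its neighbour, so the total probability changes only through the two boundary fluxes, which are set to zero here. The central flux is adequate for these parameters because the cell Péclet number $|b|h/(2a)$ stays below 1; larger drifts would call for an upwind flux.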

10.3 Boundary conditions for the forward and backward equations

10.3.1 Boundary conditions for the Fokker-Planck equation

The Fokker-Planck equation is a PDE, so it needs to come with boundary conditions to be well-posed. What should these be? This depends on what happens to the process when it hits the boundary.

Let the process live in domain D, with boundary ∂D. Here are some possible boundary conditions. (If D is unbounded, these become decay conditions at ∞.)

• Reflecting boundary

$$\mathbf{j}\cdot\hat n = 0 \quad\text{on } \partial D.$$

This corresponds to trajectories being reflected at the boundary. There is no net flux of probability across the boundary, so the total probability is conserved.

• Absorbing boundary

$$\rho = 0 \quad\text{on } \partial D.$$

This corresponds to trajectories being absorbed at the boundary and taken out of the system immediately. The total probability is not conserved.

• Periodic boundary (on an interval $[x_L, x_R]$)

$$\mathbf{j}\big|_{x_R^-} = \mathbf{j}\big|_{x_L^+}, \qquad \rho\big|_{x_R^-} = \rho\big|_{x_L^+}.$$

Trajectories that leave one side immediately re-enter on the other. Total probability is conserved.

• Sticky boundary

$$\mathbf{j}\cdot\hat n = \kappa\,\mathcal{L}^*\rho \quad\text{on } \partial D.$$

This corresponds to trajectories that can “stick,” or spend finite time, at the boundary. The total probability is conserved, provided it includes a singular part on the boundary.

Here κ is a constant that measures how “sticky” the boundary is. Notice that when κ → 0 we recover the reflecting boundary condition, and when κ → ∞ we have $\mathcal{L}^*\rho = 0 \Rightarrow \rho_t = 0 \Rightarrow \rho = 0$, the absorbing boundary condition.

• At a discontinuity. What happens if the coefficients b(x,t), a(x,t) are discontinuous on some surface S, but particles can still cross it? Then

$$\mathbf{j}\cdot\hat n\big|_{S^+} = \mathbf{j}\cdot\hat n\big|_{S^-}, \qquad \rho\big|_{S^+} = \rho\big|_{S^-}.$$

Both the probability and the normal component of the probability current are continuous across S. Note that the derivatives of ρ may not be continuous. (Continuity of ρ is the right condition when only b jumps across S; if a jumps as well, the smoothing argument described next, applied to (10), gives continuity of aρ rather than of ρ in one dimension, so ρ itself jumps.)

This also conserves total probability. One way to derive the condition is to smooth the coefficients near the discontinuity, e.g. with an appropriate mollifier, and then consider the limiting equation as the smoothed equation approaches the discontinuous one.

Note also that when the coefficients are discontinuous, we really have separate equations, one on each part of the domain where the coefficients are continuous, so this is really a “matching” condition that says how these different solutions are related.
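The particle picture behind the first two boundary conditions can be seen by simulating Brownian paths on [0, 1] and either reflecting them or removing them when they cross x = 0. This is a minimal Euler sketch, assuming standard Brownian motion (a = 1/2), a reflecting wall at x = 1 in both cases, and reflection implemented by folding the position back into the interval; `surviving_fraction` and the step sizes are illustrative. The surviving fraction plays the role of the total probability $\int_D\rho$.

```python
import numpy as np


def surviving_fraction(bc_at_zero, n_paths=5_000, t_end=0.5, dt=1e-3, seed=2):
    """Fraction of Brownian paths on [0, 1], started at 0.5, still in the system at t_end.

    bc_at_zero is "reflecting" or "absorbing"; x = 1 is reflecting in both cases.
    """
    rng = np.random.default_rng(seed)
    x = np.full(n_paths, 0.5)
    alive = np.ones(n_paths, dtype=bool)
    for _ in range(int(round(t_end / dt))):
        x = x + np.sqrt(dt) * rng.standard_normal(n_paths)   # dX = dW, so a = 1/2
        x = np.where(x > 1.0, 2.0 - x, x)                     # fold back: reflect at x = 1
        if bc_at_zero == "reflecting":
            x = np.abs(x)                                     # fold back: reflect at x = 0
        elif bc_at_zero == "absorbing":
            alive &= x > 0.0                                  # paths that cross 0 are removed
        else:
            raise ValueError("bc_at_zero must be 'reflecting' or 'absorbing'")
    return alive.mean()


print(surviving_fraction("reflecting"))   # 1.0: total probability is conserved
print(surviving_fraction("absorbing"))    # below 1: probability leaks out at x = 0
```

Checking for absorption only at the discrete times misses some excursions below 0 between steps, so the absorbing estimate is slightly biased upward; the bias shrinks as dt decreases.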

10.3.2 Boundary conditions for the backward equation

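These can be derived from the boundary conditions for the FP equation through integration by parts:

$$\begin{aligned}
\langle \mathcal{L}^*\rho, f\rangle &= -\int_D f\,\nabla\cdot\mathbf{j} = -\int_D \big(\nabla\cdot(f\,\mathbf{j}) - \mathbf{j}\cdot\nabla f\big)\\
&= \int_D \big(b\rho - \nabla\cdot(a\rho)\big)\cdot\nabla f - \int_{\partial D} f\,\mathbf{j}\cdot\hat n\\
&= \int_D \big(\rho\, b\cdot\nabla f - \nabla\cdot(\rho\, a\cdot\nabla f) + \rho\, a:\nabla^2 f\big) - \int_{\partial D} f\,\mathbf{j}\cdot\hat n\\
&= \int_D \rho\,\underbrace{\big(b\cdot\nabla f + a:\nabla^2 f\big)}_{A} - \int_{\partial D}\underbrace{\big(f\,\mathbf{j}\cdot\hat n + (\rho\, a\cdot\nabla f)\cdot\hat n\big)}_{B}\\
&= \langle \rho, \mathcal{L} f\rangle - \int_{\partial D} B.
\end{aligned}$$

Term A is $\mathcal{L} f$. Term B tells us the boundary conditions. We need this term to vanish, so that $\langle \mathcal{L}^*\rho, f\rangle = \langle \rho, \mathcal{L} f\rangle$. Given boundary conditions on ρ (for $\mathcal{L}^*$), we should choose boundary conditions on f (for $\mathcal{L}$) that make B = 0 everywhere on ∂D.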

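Here are some examples:

• Reflecting boundary

$$(a\cdot\nabla f)\cdot\hat n = 0 \quad\text{on } \partial D.$$

In components, this is $\sum_{i,j} n_i a_{ij}\,\partial_j f = 0$. Together with $\mathbf{j}\cdot\hat n = 0$, both terms in B vanish.

• Absorbing boundary

$$f = 0 \quad\text{on } \partial D.$$

Together with ρ = 0, both terms in B vanish.

The others are left as an exercise (ELFS). See also papers by Feller, e.g. Feller [1952], which discusses the most general class of boundary conditions for a second-order parabolic equation.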
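A finite-volume discretization makes the pairing between the two sets of boundary conditions concrete. In the sketch below, M is the matrix of a conservative scheme $d\rho/dt = M\rho$ for $\mathcal{L}^*$ with zero flux at both ends, assuming the Ornstein-Uhlenbeck coefficients $b(x) = -\alpha x$, constant $a = \sigma^2/2$, and an illustrative grid; `fv_matrix` is a hypothetical helper. Treating the grid as a continuous-time Markov chain (as in Lecture 3), the backward operator is the transpose $M^T$, and the discrete pairing $\langle M\rho, f\rangle = \langle \rho, M^T f\rangle$ has no boundary term. Zero flux shows up as $\mathbf{1}^T M = 0$ (probability is conserved), which is the same statement as $M^T\mathbf{1} = 0$: constants, which satisfy the reflecting condition $(a\cdot\nabla f)\cdot\hat n = 0$, are annihilated by the backward operator. This is a discrete analogue, not a proof.

```python
import numpy as np


def fv_matrix(alpha=1.0, sigma=0.5, L=3.0, n=200):
    """Matrix M of a conservative finite-volume scheme d rho/dt = M rho for L* rho,
    with zero flux (reflecting) at x = -L and x = L; OU drift b(x) = -alpha x."""
    edges = np.linspace(-L, L, n + 1)
    h = edges[1] - edges[0]
    a = 0.5 * sigma**2
    M = np.zeros((n, n))
    for i, x_face in enumerate(edges[1:-1]):    # face between cells i and i + 1
        b = -alpha * x_face
        w_left = (0.5 * b + a / h) / h          # coefficient of rho_i in j / h at this face
        w_right = (0.5 * b - a / h) / h         # coefficient of rho_{i+1} in j / h
        M[i, i] -= w_left                       # the flux leaves cell i ...
        M[i, i + 1] -= w_right
        M[i + 1, i] += w_left                   # ... and enters cell i + 1
        M[i + 1, i + 1] += w_right
    x = 0.5 * (edges[:-1] + edges[1:])
    return x, h, M                              # boundary faces contribute nothing: j = 0 there


x, h, M = fv_matrix()
ones = np.ones(len(x))
print(np.abs(ones @ M).max())   # ~0: columns sum to zero, so M.T @ ones = 0 as well

# Stationary density: solve M rho = 0 together with the normalisation sum(rho) * h = 1.
A = M.copy()
A[-1, :] = h
rhs = np.zeros(len(x))
rhs[-1] = 1.0
rho_s = np.linalg.solve(A, rhs)
```

The computed `rho_s` approximates the Gaussian with variance $\sigma^2/(2\alpha)$ derived in the next section. Replacing one row of M by the normalisation works because M has a one-dimensional null space, spanned by the discrete stationary density.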

10.4 Stationary distribution

Suppose the SDE (1) is time-homogeneous: b = b(x), σ = σ(x). Is there a probability density that doesn't change with time? If so, it must satisfy $\partial_t\rho_s = 0$, which implies

$$\mathcal{L}^*\rho_s = 0 \quad\Longleftrightarrow\quad \nabla\cdot\mathbf{j}_s = 0 \quad\Longleftrightarrow\quad \nabla\cdot\big(b\rho_s - \nabla\cdot(a\rho_s)\big) = 0. \tag{11}$$

Therefore, in order to understand whether a stationary solution exists, we must understand whether (11) has a solution. If so, is it unique? And does an arbitrary solution to (10) approach it at long times?

These are the same questions we asked for Markov chains, only now they have turned into questions about an elliptic PDE. There are many different results. A particularly tractable case is when a is bounded and uniformly elliptic. To be uniformly elliptic means that

$$c_1|\xi|^2 \le \sum_{i,j} a_{ij}(x)\,\xi_i\xi_j \le c_2|\xi|^2 \qquad \forall\,\xi, x,$$

where $c_1, c_2 > 0$ are constants. Another way of saying this is that a is uniformly positive-definite, or that the eigenvalues of a are uniformly bounded away from 0 (the upper bound $c_2$ expresses that a is bounded).
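For a given σ(x), the constants $c_1, c_2$ can be probed numerically by computing the extreme eigenvalues of $a(x) = \tfrac12\sigma\sigma^T$ over sample points. This only gives evidence on the sampled set, not a proof of uniform ellipticity. The sketch assumes a hypothetical 2×2 noise matrix $\sigma(x) = \begin{pmatrix}1 & 0\\ \sin x_1 & 1\end{pmatrix}$, chosen because it is invertible with bounded entries.

```python
import numpy as np


def sigma(x):
    """Illustrative 2x2 noise matrix sigma(x) (hypothetical example)."""
    return np.array([[1.0, 0.0], [np.sin(x[0]), 1.0]])


rng = np.random.default_rng(3)
samples = rng.uniform(-10.0, 10.0, size=(5000, 2))
# eigvalsh returns the (real, ascending) eigenvalues of the symmetric matrix a(x)
eigs = np.array([np.linalg.eigvalsh(0.5 * sigma(x) @ sigma(x).T) for x in samples])
c1, c2 = eigs.min(), eigs.max()
print(c1, c2)   # sampled lower and upper bounds on the eigenvalues of a(x)
```

For this particular σ one can check by hand that the eigenvalues of a(x) lie in $[(3-\sqrt5)/4,\ (3+\sqrt5)/4] \approx [0.19, 1.31]$, with the extremes attained where $\sin^2 x_1 = 1$, so the sampled values should approach these bounds.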

Theorem. If a is bounded and uniformly elliptic, then in a bounded domain with periodic or reflecting boundary conditions, (11) has a unique solution that is a probability density.

Remark. If the domain is unbounded, then one also has uniqueness results for the Fokker-Planck equation (stationary or time-dependent), provided the coefficients of the SDE satisfy certain smoothness conditions (Lipschitz continuity, growth conditions). In this case, one looks for a solution that satisfies certain growth conditions at ∞; one option is $\|\rho(x,t)\|_{L^\infty(0,T)} \le c\,e^{\alpha\|x\|^2}$. See Pavliotis [2014], section 4.1 for a statement of one such result.

Remark. Results under weaker assumptions are possible: for example, if the operator is only semi-elliptic but is hypoelliptic, then there is a unique solution to (11).

In most applications you will work with (11) as a definition for a stationary distribution. However, in general a stationary distribution need not have a density (be absolutely continuous) with respect to the Lebesgue measure, and even if it does, it need not be in $C^2$. In these cases, we will not be able to find the stationary distribution by solving (11), but rather we will have to work with its weak formulation. Here is a more general definition of a stationary distribution, often called an invariant measure.

Definition. An invariant measure is a measure µ such that

$$P_t^*\mu = \mu \quad\text{for all } t \ge 0, \tag{12}$$

where $P_t^*$ is the semigroup acting on probability measures as $(P_t^*\mu)(C) = \int P(X_t \in C\,|\,X_0 = x)\,d\mu(x)$.

Remark. Notice that $T_t$ and $P_t^*$ are adjoints of each other: $\int T_t f(x)\,d\mu(x) = \int f(x)\,d(P_t^*\mu)(x)$. See Koralov and Sinai [2010], p. 333.

One can show that (12) is equivalent to

$$\int \mathcal{L} f\,d\mu = 0 \tag{13}$$

for all test functions f. This simply says that µ solves (11) in the weak sense.

To see why (13) is true, notice that $\int (T_t f - f)\,d\mu(x) = \int f\,d(P_t^*\mu - \mu)(x) = 0$, so

$$\int \mathcal{L} f(x)\,d\mu(x) = \int \lim_{t\to0}\frac{(T_t f - f)(x)}{t}\,d\mu(x) = \lim_{t\to0}\int \frac{(T_t f - f)(x)}{t}\,d\mu(x) = 0.$$

We can interchange the limit and the integral by the Dominated Convergence Theorem, since $(T_t f - f)/t$ is uniformly bounded for t > 0 provided the derivatives of f decay quickly enough at infinity. This will be true if f is in the Schwartz space $S(\mathbb{R}^d)$, for example (Koralov and Sinai [2010], p. 333). The converse is also true: (13) implies (12).
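Condition (13) is easy to test numerically when µ has a density. The sketch below assumes the Ornstein-Uhlenbeck process $dX_t = -\alpha X_t\,dt + \sigma\,dW_t$ (treated as an example later in this section), candidate measures $N(0, \text{var})$, and the bounded test function $f(x) = \cos x$ with its derivatives written by hand; `weak_residual` is a hypothetical helper that approximates $\int \mathcal{L} f\,d\mu$ by a Riemann sum.

```python
import numpy as np

alpha, sigma = 1.0, 0.5
a = 0.5 * sigma**2                                 # a = sigma^2 / 2


def weak_residual(var, f, df, d2f):
    """Approximate the integral of L f against the N(0, var) density."""
    x = np.linspace(-12.0, 12.0, 24001)
    dx = x[1] - x[0]
    rho = np.exp(-x**2 / (2 * var)) / np.sqrt(2 * np.pi * var)
    Lf = -alpha * x * df(x) + a * d2f(x)           # generator of the OU process
    return np.sum(Lf * rho) * dx                   # integrand is negligible at the ends


f, df, d2f = np.cos, (lambda x: -np.sin(x)), (lambda x: -np.cos(x))
print(weak_residual(sigma**2 / (2 * alpha), f, df, d2f))  # ~0: invariant Gaussian
print(weak_residual(sigma**2 / alpha, f, df, d2f))        # clearly nonzero: not invariant
```

For $X \sim N(0, w)$ one has $E[X\sin X] = w\,e^{-w/2}$ and $E[\cos X] = e^{-w/2}$, so the exact value of the integral is $(\alpha w - \sigma^2/2)\,e^{-w/2}$, which vanishes exactly when $w = \sigma^2/(2\alpha)$.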

Definition. Informally, a Markov process is ergodic if 0 is a simple eigenvalue of $\mathcal{L}$, or equivalently if the equation $\mathcal{L} u = 0$ has only constant solutions.

Remark. If a process is ergodic, then there is a unique invariant measure µ, i.e. a unique measure preserved by the semigroup: $P_t^*\mu = \mu$. If µ has a density ρ with respect to Lebesgue measure, then this implies (11), $\mathcal{L}^*\rho = 0$. Therefore another way of defining ergodicity (informally) is that there is a unique probability density satisfying the stationary Fokker-Planck equation (11).

Remark. One can show that for an ergodic process, $\lim_{t\to\infty} P_t^*\mu_0 = \mu$ for any initial distribution $\mu_0$. In addition, the long-time average of an observable f converges to its average under µ: $\lim_{T\to\infty}\frac1T\int_0^T f(X_t)\,dt = E_\mu f(X)$. This is the physics way of defining ergodicity: the phase-space average with respect to the unique invariant measure equals the long-time average. See Pavliotis [2014], 2.4 for a summary of these ideas.

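Example (Ornstein-Uhlenbeck process). Consider $dX_t = -\alpha X_t\,dt + \sigma\,dW_t$. The equation to solve for the stationary distribution is

$$\alpha\frac{\partial}{\partial x}(x\rho) + \frac12\sigma^2\frac{\partial^2\rho}{\partial x^2} = 0.$$

Let's look for a solution with $\mathbf{j}_s = 0$. Then we must solve

$$\alpha x\rho + \frac12\sigma^2\rho_x = 0 \quad\Rightarrow\quad \frac{\rho_x}{\rho} = -\frac{2\alpha x}{\sigma^2} \quad\Rightarrow\quad \ln\rho = -\frac{\alpha}{\sigma^2}x^2 + C,$$

where C is a constant. The stationary distribution is

$$\rho_s = \frac{1}{\sqrt{2\pi\sigma^2/(2\alpha)}}\,\exp\!\Big(-\frac12\,\frac{x^2}{\sigma^2/(2\alpha)}\Big).$$

This shows the stationary distribution is Gaussian, with mean 0 and variance $\sigma^2/(2\alpha)$.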
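A short simulation illustrates both the stationary variance and the ergodic property from the remarks above: the time average of $X_t^2$ along a single long trajectory should approach $\sigma^2/(2\alpha)$. This is a minimal Euler-Maruyama sketch with illustrative parameters and a hypothetical function name; the scheme has a small O(Δt) bias, since its own stationary variance is $\sigma^2/(\alpha(2 - \alpha\Delta t))$ rather than $\sigma^2/(2\alpha)$.

```python
import numpy as np


def ou_time_average(alpha=1.0, sigma=0.5, dt=0.01, n_steps=200_000, seed=4):
    """Time average of X^2 along a single Euler-Maruyama path of dX = -alpha X dt + sigma dW."""
    rng = np.random.default_rng(seed)
    kicks = sigma * np.sqrt(dt) * rng.standard_normal(n_steps)   # pre-drawn noise increments
    x, total = 0.0, 0.0
    for dw in kicks.tolist():
        x += -alpha * x * dt + dw
        total += x * x
    return total / n_steps


print(ou_time_average(), 0.5**2 / (2 * 1.0))   # time average vs sigma^2 / (2 alpha) = 0.125
```

A single trajectory is used deliberately: averaging over many independent paths at one fixed time would test the stationary distribution but not the time-average statement.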

Example (Brownian dynamics). The equation for a system of particles interacting with potential energy U(x) and forced with white noise is, when the masses of the particles are small enough that inertia can be neglected:

$$dX_t = -\frac{\nabla U(X_t)}{\gamma}\,dt + \sqrt{\frac{2\beta^{-1}}{\gamma}}\,dW_t,$$

where γ is the damping parameter, and $\beta = (k_B T)^{-1}$ is the inverse temperature. Here $X_t, W_t \in \mathbb{R}^d$. This is also known as the overdamped Langevin equation.

The corresponding FPE is

$$\partial_t\rho = \nabla\cdot\Big(\frac1\gamma\nabla U(x)\,\rho\Big) + \frac{\beta^{-1}}{\gamma}\Delta\rho.$$

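To find the stationary distribution, let's look for a solution with $\mathbf{j}_s = 0$:

$$\frac1\gamma\nabla U(x)\,\rho + \frac{\beta^{-1}}{\gamma}\nabla\rho = 0 \quad\Rightarrow\quad \frac{\nabla\rho}{\rho} = -\beta\nabla U \quad\Rightarrow\quad \ln\rho = -\beta U(x) + C.$$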

The stationary distribution is therefore

$$\rho_s = Z^{-1}e^{-\beta U(x)}, \qquad Z = \int_{\mathbb{R}^d} e^{-\beta U(x)}\,dx,$$

provided Z < ∞. This is the Boltzmann distribution, or Gibbs measure.
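The Boltzmann distribution can be compared with a simulation. The sketch below assumes a one-dimensional double-well potential $U(x) = (x^2 - 1)^2$, illustrative values γ = 1 and β = 2, and an ensemble of independent Euler-Maruyama paths run long enough to equilibrate; it compares the simulated $E[X^2]$ with the value computed from $\rho_s = Z^{-1}e^{-\beta U}$ by quadrature. The potential and all parameters are assumptions chosen for illustration.

```python
import numpy as np

gamma, beta = 1.0, 2.0


def U(x):
    return (x**2 - 1.0)**2                   # illustrative double-well potential


def dU(x):
    return 4.0 * x * (x**2 - 1.0)            # U'(x)


# Gibbs prediction for E[X^2]; the grid spacing and Z cancel in the ratio.
grid = np.linspace(-4.0, 4.0, 8001)
weights = np.exp(-beta * U(grid))
gibbs_x2 = np.sum(grid**2 * weights) / np.sum(weights)

# Independent overdamped Langevin paths: dX = -U'(X)/gamma dt + sqrt(2/(beta gamma)) dW.
rng = np.random.default_rng(5)
dt, n_steps, n_paths = 2e-3, 6_000, 2_000
X = rng.uniform(-1.5, 1.5, n_paths)
noise_scale = np.sqrt(2.0 * dt / (beta * gamma))
for _ in range(n_steps):
    X = X - dU(X) / gamma * dt + noise_scale * rng.standard_normal(n_paths)

print(np.mean(X**2), gibbs_x2)   # agree up to sampling and time-step error
```

The symmetric initial condition already has equal weight in the two wells, so only the fast relaxation within each well has to happen during the run; an asymmetric start would require waiting for the much slower barrier crossings.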

Notes

– In these examples we looked for a solution with $\mathbf{j}_s = 0$. This doesn't always have to be the case, but since we found a solution that satisfies this extra condition, and the stationary solution is unique, we are done. We will see in a couple of classes that we can tell, from the coefficients of the SDE, when such a solution will be possible.

– Equations where this is the case are physically very important. We will see they correspond to “equilibrium” systems, with no flux in the steady state. This will be a version of detailed balance for an SDE.

Example (Sticky Brownian Motion). Consider a Brownian motion on [0, 1], with a sticky boundary at x = 0 and a reflecting boundary at x = 1. Constructing the trajectories explicitly requires more mathematical tools, such as the concept of local time; see e.g. Ikeda and Watanabe [1981]. However, we can understand how the transition probabilities behave from the corresponding forward and backward equations. The stationary FP equation is

$$\tfrac12\Delta\rho = 0, \qquad \rho_x(0) = \kappa\,\rho_{xx}(0), \qquad \rho_x(1) = 0.$$

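Let's show the stationary distribution is $\rho_s(x) = \frac{1}{1+\kappa}\big(1 + \kappa\,\delta(x)\big)$, which puts mass $\kappa/(1+\kappa)$ at the sticky point x = 0. Notice that we can't compute $\mathcal{L}^*\rho_s$ directly, since $\rho_s$ contains a delta function. Therefore we must use the weak formulation of the FP equation, and show that $\langle \mathcal{L} f, \rho_s\rangle = 0$ for all test functions f satisfying the backward boundary conditions $f_x(0) = \kappa f_{xx}(0)$ and $f_x(1) = 0$.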

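We compute (leaving out the normalization factor $1/(1+\kappa)$ and the factor ½ in $\mathcal{L} = \tfrac12\partial_{xx}$):

$$\begin{aligned}
\langle \mathcal{L} f, \rho_s\rangle &= \int_0^1 f_{xx}\,\big(1 + \kappa\,\delta(x)\big)\,dx\\
&= f_x\big|_0^1 + \kappa f_{xx}(0)\\
&= 0 - f_x(0) + \kappa f_{xx}(0) &&\text{(boundary condition at 1)}\\
&= 0. &&\text{(boundary condition at 0)}
\end{aligned}$$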

References

W. E, T. Li, and E. Vanden-Eijnden. Applied Stochastic Analysis. In preparation, 2014.

W. Feller. The parabolic differential equations and the associated semi-groups of transformations. The Annals of Mathematics, 55:468–519, 1952.

C. Gardiner. Stochastic methods: A handbook for the natural sciences. Springer, 4th edition, 2009.

N. Ikeda and S. Watanabe. Stochastic Differential Equations and Diffusion Processes. Elsevier, 1981.

L. B. Koralov and Y. G. Sinai. Theory of Probability and Random Processes. Springer, 2010.

B. Oksendal. Stochastic Differential Equations. Springer, 6th edition, 2005.

G. A. Pavliotis. Stochastic Processes and Applications. Springer, 2014.