Principle of Maximum Likelihood; Linear Least Squares Fitting (Session 5, Physics 2BL)

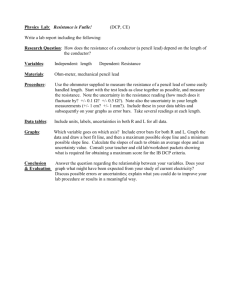

Physics 2BL, Session 5, Summer 2009

Outline
• Review of Gaussian distributions
• Principle of maximum likelihood
– weighted averages
• Least squares fitting

Principle of Maximum Likelihood
• Best estimates of $X$ and $\sigma$ from $N$ measurements ($x_1, \dots, x_N$) are those for which $\mathrm{Prob}_{X,\sigma}(x_i)$ is a maximum

Weighted averages (Chapter 7)
• Best estimate is the weighted mean:

$$x_{\mathrm{wav}} = \frac{\sum_i w_i x_i}{\sum_i w_i}, \qquad w_i = \frac{1}{\sigma_i^2}$$

Linear Relationships: y = A + Bx
• Data would lie on a straight line, except for errors
• What is the ‘best’ line through the points?
• What is the uncertainty in the constants?
• How well does the relationship describe the data?
[Figure: Y value vs. X value, data with a fitted line, slope = 1.01]

A Rough Cut
• Best means ‘line close to all points’
• Draw various lines that pass through the data points
• Estimate the error in the constants from the range of values
• Good fit if the points are within error bars of the line
[Figure: Y value vs. X value, trial lines with slope = 1.06 and slope = 0.91, giving slope = 1.01 ± 0.07]

More Analytical
• Best means ‘minimize the square of the deviations between line and points’
• Can use error analysis to find the constants and their errors
[Figure: Y value vs. X value, fitted line with slope = 1.01 ± 0.01]

The Details of How to Do This (Chapter 8)
• Want to find the $A$, $B$ in $y = A + Bx$ that minimize the difference between data and line
• The deviation of each point $y_i$ from the line is

$$y_i - y = y_i - A - Bx_i$$

• Since the line is above some data and below other data, minimize the sum of the squares of the deviations:

$$\sum_{i=1}^{N} (y_i - A - Bx_i)^2$$

• Find the $A$, $B$ that minimize this sum by setting its derivatives with respect to $A$ and $B$ to zero:

$$\frac{\partial}{\partial A}: \quad \sum y_i - AN - B\sum x_i = 0$$

$$\frac{\partial}{\partial B}: \quad \sum x_i y_i - A\sum x_i - B\sum x_i^2 = 0$$

Finding A and B
• After minimization, solve the two equations for $A$ and $B$
• Looks nasty, not so bad…
• See Taylor, example 8.1

$$A = \frac{\sum x_i^2 \sum y_i - \sum x_i \sum x_i y_i}{\Delta}, \qquad B = \frac{N\sum x_i y_i - \sum x_i \sum y_i}{\Delta}$$

$$\Delta = N\sum x_i^2 - \left(\sum x_i\right)^2$$

Uncertainty in Measurements of y
• Before, we measured several times and took the standard deviation as the error:

$$\sigma_x = \sqrt{\frac{1}{N}\sum_{i=1}^{N} (x_i - \bar{x})^2}$$

• Can't do that now, since the $y_i$ are different quantities
• Instead, find the standard deviation of the deviations from the line:

$$\sigma_y = \sqrt{\frac{1}{N-2}\sum_{i=1}^{N} (y_i - A - Bx_i)^2}$$

Uncertainty in A and B
• $A$, $B$ are calculated from $x_i$, $y_i$
• We know the error in $x_i$, $y_i$; use error propagation to find the error in $A$, $B$
• A distant extrapolation will be subject to large uncertainty

$$\sigma_A = \sigma_y \sqrt{\frac{\sum x_i^2}{\Delta}}, \qquad \sigma_B = \sigma_y \sqrt{\frac{N}{\Delta}}, \qquad \Delta = N\sum x_i^2 - \left(\sum x_i\right)^2$$

Uncertainty in x
• So far, we assumed negligible uncertainty in $x$
• If the uncertainty is in $x$, not $y$, just switch them
• If there is uncertainty in both, convert the error in $x$ to an equivalent error in $y$, then add the errors in quadrature
[Figure: actual error in x and the equivalent error in y, related by $\Delta y = B\,\Delta x$]

$$\sigma_y(\mathrm{equiv}) = B\sigma_x \quad \text{(error in } x \text{ only)}$$

$$\sigma_y(\mathrm{equiv}) = \sqrt{\sigma_y^2 + (B\sigma_x)^2} \quad \text{(errors in both } x \text{ and } y\text{)}$$

Other Functions
• Convert to linear:

$$y = Ae^{Bx} \quad\Rightarrow\quad \ln y = \ln A + Bx$$

• Can now use least squares fitting to get $\ln A$ and $B$

How to minimize uncertainty
• Can always estimate uncertainty, but equally important is to minimize it
• How can you reduce uncertainty in measurements?
– Use better components
– Have both partners read the value and demand consistency
– Measure multiple times

Remember
• Finish Lab Writeup for Exp. 2
• Read next week’s lab description, prepare
• Homework: Taylor #6.4, 7.2, 8.6, 8.10
• Read Taylor through Chapter 9
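
The least squares formulas for $A$, $B$, $\sigma_y$, $\sigma_A$ and $\sigma_B$ from the slides above can be checked numerically. The following is a minimal Python sketch, not part of the original lecture. The function name `linear_fit` and the sample data are hypothetical, and it assumes equal, unknown uncertainties in $y$ and negligible uncertainty in $x$.

```python
import math


def linear_fit(xs, ys):
    """Unweighted least-squares fit of y = A + B*x.

    Hypothetical helper (not from the lecture). Assumes all y values share
    the same uncertainty and that the uncertainty in x is negligible.
    Returns (A, B, sigma_y, sigma_A, sigma_B).
    """
    n = len(xs)
    if n != len(ys):
        raise ValueError("xs and ys must have the same length")
    if n < 3:
        raise ValueError("need at least 3 points, since sigma_y divides by N - 2")

    # Sums that appear in the closed-form solution.
    sum_x = sum(xs)
    sum_y = sum(ys)
    sum_xx = sum(x * x for x in xs)
    sum_xy = sum(x * y for x, y in zip(xs, ys))

    # Delta = N * sum(x^2) - (sum(x))^2
    delta = n * sum_xx - sum_x ** 2
    if delta == 0:
        raise ValueError("all x values are identical; slope is undefined")

    a = (sum_xx * sum_y - sum_x * sum_xy) / delta  # intercept A
    b = (n * sum_xy - sum_x * sum_y) / delta       # slope B

    # Standard deviation of the deviations from the fitted line.
    sum_sq_dev = sum((y - a - b * x) ** 2 for x, y in zip(xs, ys))
    sigma_y = math.sqrt(sum_sq_dev / (n - 2))

    # Uncertainties in A and B from error propagation.
    sigma_a = sigma_y * math.sqrt(sum_xx / delta)
    sigma_b = sigma_y * math.sqrt(n / delta)
    return a, b, sigma_y, sigma_a, sigma_b


if __name__ == "__main__":
    # Made-up illustrative data, roughly along y = x.
    x_data = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
    y_data = [1.2, 1.9, 3.1, 4.2, 4.8, 6.1, 7.0]
    A, B, s_y, s_A, s_B = linear_fit(x_data, y_data)
    print(f"A = {A:.3f} +/- {s_A:.3f}")
    print(f"B = {B:.3f} +/- {s_B:.3f}")
    print(f"sigma_y = {s_y:.3f}")
```

For an exponential relationship $y = Ae^{Bx}$, the same function could be called with `[math.log(y) for y in ys]` in place of `ys`; the returned intercept is then $\ln A$ rather than $A$.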