Lecture 2: Stability and Reasoning Hannes Leitgeb October 2014 LMU Munich


My main question is:

What do a perfectly rational agent’s beliefs and degrees of belief have to be like in order for them to cohere with each other?

And the answer suggested in Lecture 1 was:

The Humean thesis: Belief corresponds to stably high degree of belief.

(Where this meant ‘stably high under certain conditionalizations’.)

Plan: Explain what the Humean thesis tells us about theoretical rationality.

Recover the Humean thesis from epistemic starting points.

1. A Representation Theorem for the Humean Thesis
2. Logical Closure and the ‘←’-direction of the Lockean Thesis
3. Belief Revision and the ‘→’-direction of the Lockean Thesis
4. Epistemic Decision Theory and the Humean Thesis
5. An Example: Theory Choice

Once again I will focus on (inferentially) perfectly rational agents only.

A Representation Theorem for the Humean Thesis

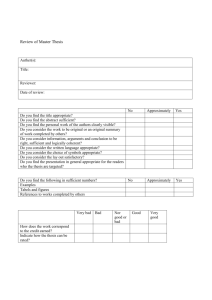

Representation theorem

The following two statements are equivalent:

I. P is a probability measure, and P and Bel satisfy the Humean thesis HT^r_Poss.

II. P is a probability measure, and there is a (uniquely determined) proposition X such that

– X is a non-empty P-stable^r proposition,

– if P(X) = 1, then X is the least proposition with probability 1; and:

For all Y:

Bel(Y) if and only if Y ⊇ X

(and hence B_W = X).

Def.: X is P-stable^r iff for all Y with Y ∩ X ≠ ∅ and P(Y) > 0: P(X|Y) > r.
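The definition can be checked by brute force on a finite set of worlds. The following Python sketch is only an illustration: it assumes worlds are strings and P is a dict of exact `Fraction` values (taken from the running example), and the helper names `subsets`, `prob` and `is_p_stable` are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def subsets(worlds):
    """Yield every subset of a finite collection of worlds as a frozenset."""
    ws = sorted(worlds)
    for k in range(len(ws) + 1):
        for combo in combinations(ws, k):
            yield frozenset(combo)


def prob(P, A):
    """P(A): the sum of the probabilities of the worlds in A."""
    return sum((P[w] for w in A), Fraction(0))


def is_p_stable(X, P, r):
    """True iff P(X|Y) > r for every Y with Y ∩ X ≠ ∅ and P(Y) > 0."""
    X = frozenset(X)
    for Y in subsets(P):
        p_y = prob(P, Y)
        if Y & X and p_y > 0 and prob(P, X & Y) / p_y <= r:
            return False
    return True


# The running example, with exact probabilities.
P = {w: Fraction(p) for w, p in [
    ("w1", "0.54"), ("w2", "0.342"), ("w3", "0.058"), ("w4", "0.03994"),
    ("w5", "0.018"), ("w6", "0.002"), ("w7", "0.00006")]}

half = Fraction(1, 2)
print(is_p_stable({"w1", "w2"}, P, half))        # True
print(is_p_stable({"w1", "w2", "w3"}, P, half))  # False
```

Here {w1, w2, w3} fails because Y = {w3, w4, w5, w6, w7} gives P(X|Y) = 0.058/0.118 < 1/2.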

Example:

(“Ranks”)

6. P({w7}) = 0.00006

5. P({w6}) = 0.002

4. P({w5}) = 0.018

3. P({w3}) = 0.058, P({w4}) = 0.03994

2. P({w2}) = 0.342

1. P({w1}) = 0.54

This yields the following P-stable^{1/2} sets (B_W):

(“Spheres”)

{w1, w2, w3, w4, w5, w6, w7}

{w1, w2, w3, w4, w5, w6}

{w1, w2, w3, w4, w5}

{w1, w2, w3, w4}

{w1, w2}

{w1}

One can prove: the class of P-stable^r propositions X with P(X) < 1 is well-ordered with respect to the subset relation (it is a “sphere system”).


Almost all P allow for a P-stable^{1/2} set X with P(X) < 1!

One can also prove that there is a simple algorithm which generates all P-stable^r sets (i.e., all possible ways of satisfying the Humean thesis).
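The algorithm itself is not spelled out on this slide. One simple way to generate the sets, sketched below in Python as an assumption rather than as the lecture's own procedure, uses a consequence of the definition for finite W: X with P(X) < 1 is P-stable^r iff every w ∈ X satisfies P({w}) > r/(1 − r) · P(W ∖ X), the worst case being Y = {w} ∪ (W ∖ X). For r ≥ 1/2 this forces X to consist of the most probable worlds, so it suffices to scan the initial segments of the worlds sorted by decreasing probability. The function name `stable_spheres` is hypothetical.

```python
from fractions import Fraction


def stable_spheres(P, r):
    """All non-empty P-stable^r sets X with P(X) < 1, plus the least set of
    probability 1, from innermost to outermost.

    Assumes finitely many worlds, P summing to 1, and 1/2 <= r < 1.
    """
    factor = r / (1 - r)
    # Worlds of positive probability, most probable first.
    ranked = sorted((w for w in P if P[w] > 0), key=lambda w: P[w], reverse=True)
    spheres, inside = [], Fraction(0)
    for k in range(1, len(ranked)):
        inside += P[ranked[k - 1]]
        weakest = P[ranked[k - 1]]  # least probable world in ranked[:k]
        if weakest > factor * (1 - inside):  # 1 - inside = P(W \ X)
            spheres.append(frozenset(ranked[:k]))
    spheres.append(frozenset(ranked))  # the least proposition with probability 1
    return spheres


P = {w: Fraction(p) for w, p in [
    ("w1", "0.54"), ("w2", "0.342"), ("w3", "0.058"), ("w4", "0.03994"),
    ("w5", "0.018"), ("w6", "0.002"), ("w7", "0.00006")]}

for S in stable_spheres(P, Fraction(1, 2)):
    print(sorted(S))
```

With r = 1/2 this prints the six spheres of the running example; with r = 3/4 only {w1, ..., w5}, {w1, ..., w6} and W remain, so a higher r leaves fewer and more cautious options.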

Logical Closure and the ‘←’-direction of the Lockean Thesis

“most of the Propositions we think, reason, discourse, nay act upon, are such, as we cannot have undoubted Knowledge of their Truth: yet some of them border so near upon Certainty, that we make no doubt at all about them; but assent to them firmly, and act, according to that Assent, as resolutely, as if they were infallibly demonstrated. . .” (Locke, Book IV, Essay)


Explication (cf. Foley 1993):

The Lockean thesis: Bel(X) iff P(X) ≥ r > 1/2.


In our next approach we will combine:

– LT←^{≥r>1/2}: Bel(X) if P(X) ≥ r > 1/2,

– the axioms of probability for P, and

– the logic of belief for Bel.

Representation theorem

The following two statements are equivalent:

I. P is a probability measure, Bel satisfies the logic of belief, not Bel(∅), and the right-to-left direction of the Lockean thesis, LT←^{≥P(B_W)>1/2}, holds.

II. P is a probability measure, and there is a (uniquely determined) X such that

– X is a non-empty P-stable^{1/2} proposition,

– if P(X) = 1, then X is the least proposition with probability 1; and:

For all Y:

Bel(Y) if and only if Y ⊇ X

(and hence B_W = X).

And either side implies the full LT↔^{≥P(B_W)>1/2}: Bel(X) iff P(X) ≥ P(B_W) > 1/2.
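For the running example, the claim that either side yields LT↔ with threshold P(B_W) can be checked exhaustively over all 128 propositions. A minimal Python sketch, assuming the probabilities and the P-stable^{1/2} sets of the running example; `lockean_matches` is a hypothetical helper name.

```python
from fractions import Fraction
from itertools import combinations

# The running example, with exact probabilities.
P = {w: Fraction(p) for w, p in [
    ("w1", "0.54"), ("w2", "0.342"), ("w3", "0.058"), ("w4", "0.03994"),
    ("w5", "0.018"), ("w6", "0.002"), ("w7", "0.00006")]}
W = sorted(P)


def prob(A):
    return sum((P[w] for w in A), Fraction(0))


def lockean_matches(BW):
    """True iff, for every Y ⊆ W: Y ⊇ BW exactly when P(Y) >= P(BW)."""
    BW = frozenset(BW)
    threshold = prob(BW)
    for k in range(len(W) + 1):
        for Y in map(frozenset, combinations(W, k)):
            if (Y >= BW) != (prob(Y) >= threshold):
                return False
    return True


spheres = [["w1"], ["w1", "w2"], ["w1", "w2", "w3", "w4"],
           ["w1", "w2", "w3", "w4", "w5"],
           ["w1", "w2", "w3", "w4", "w5", "w6"], W]
for S in spheres:
    print(S, float(prob(S)), lockean_matches(S))
```

Every sphere passes. By contrast, `lockean_matches(["w1", "w2", "w3"])` returns False: Y = W ∖ {w3} has P(Y) = 0.942 ≥ 0.94 = P({w1, w2, w3}) without including {w1, w2, w3}.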

Back to the example from before: this yields the following P-stable^{1/2} sets (B_W), each with its Lockean threshold P(B_W):

(“Spheres”)

{w1, w2, w3, w4, w5, w6, w7} (≥ 1.0)

{w1, w2, w3, w4, w5, w6} (≥ 0.99994)

{w1, w2, w3, w4, w5} (≥ 0.99794)

{w1, w2, w3, w4} (≥ 0.97994)

{w1, w2} (≥ 0.882)

{w1} (≥ 0.54)

What does this mean for the Lottery paradox (cf. Kyburg 1961)?

If one is interested in which ticket will be drawn:

P({w1}) = 1/1000000, P({w2}) = 1/1000000, . . . , P({w1000000}) = 1/1000000.

The Humean thesis HT^r_Poss is only satisfied if the strongest believed proposition B_W is W (that is, by a Lockean threshold of 1): the agent believes that some ticket will be drawn.


If one is interested in whether ticket #i will be drawn (cf. Levi 1967):

P({w_i}) = 1/1000000, P({w′}) = 999999/1000000.

Now there is another candidate for the strongest believed proposition B_W by which HT^r_Poss is satisfied: {w′} (with a Lockean threshold of 999999/1000000); the agent believes that ticket #i will not be drawn.
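Both descriptions of the lottery can be fed to the same sort-and-cut search over stable sets. A minimal Python sketch, assuming r = 1/2 and the finite-W characterization (X with P(X) < 1 is P-stable^r iff each w ∈ X has P({w}) > r/(1 − r) · P(W ∖ X)); the function name and the world labels `w_i`, `w_prime` are hypothetical, and a 1000-ticket lottery stands in for the million-ticket one to keep the run short. With n equiprobable tickets and r = 1/2, a proper subset of k tickets would need 1/n > (n − k)/n, which fails for every k < n, so the result is the same for n = 1000000.

```python
from fractions import Fraction


def stable_spheres(P, r):
    """Non-empty P-stable^r sets with P(X) < 1, plus the least set of
    probability 1 (assumes finitely many worlds and 1/2 <= r < 1)."""
    factor = r / (1 - r)
    ranked = sorted((w for w in P if P[w] > 0), key=lambda w: P[w], reverse=True)
    spheres, inside = [], Fraction(0)
    for k in range(1, len(ranked)):
        inside += P[ranked[k - 1]]
        if P[ranked[k - 1]] > factor * (1 - inside):
            spheres.append(frozenset(ranked[:k]))
    spheres.append(frozenset(ranked))
    return spheres


half = Fraction(1, 2)

# Partition 1: which ticket will be drawn? (n equiprobable worlds)
n = 1000
lottery = {f"w{i}": Fraction(1, n) for i in range(1, n + 1)}
print(len(stable_spheres(lottery, half)))  # 1 (only W itself)

# Partition 2: will ticket #i be drawn? (w_i: yes; w_prime: no)
N = 1000000
coarse = {"w_i": Fraction(1, N), "w_prime": Fraction(N - 1, N)}
for S in stable_spheres(coarse, half):
    print(sorted(S), sum(coarse[w] for w in S))
```

The second loop prints {w_prime} with threshold 999999/1000000 and then W with threshold 1, the two permissible choices of B_W for that partition.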

While this takes care of the Lottery Paradox, it also shows that the theory comes with a price:

First of all, only particular thresholds s [= P(B_W)] are permissible in the Lockean thesis.

Which thresholds one is permitted to choose depends on P and Bel.

In particular: the subject’s degree-of-belief function P must be counted as belonging to the very context that determines s.


Secondly, belief is partition-dependent (but there is some kind of partition-invariance, too):

[Figure: two panels, labelled Bel(A) and ¬Bel(A).]

And the smaller the partition cells are in terms of probability, the greater the threshold needs to be for the Lockean thesis.

On the other hand:

Why shouldn’t B_W be required to be a “natural proposition” and the corresponding threshold s [= P(B_W)] a “natural threshold”?

That the permissibility of a threshold co-depends on P is not surprising from the viewpoint of decision theory either.


Is it really plausible to assume that, whenever we have beliefs, we take into account every maximally fine-grained possibility whatsoever?

Instead, we might reason categorically relative to some contextually determined partition into a few salient and sufficiently likely alternatives, say,

A ∧ B ∧ C, A ∧ B ∧ ¬C, A ∧ ¬B ∧ C, . . . , ¬A ∧ ¬B ∧ ¬C,

only taking into account the propositions that can be built from them.

(cf. Levi on acceptance, Skyrms on chance; Windschitl and Wells 1998)

Results by Lin & Kelly (2012b) show that the partition-dependence of belief is unavoidable given the logical closure of belief.

If this theory is right, then very fine-grained partitions will make a rational agent very cautious concerning her all-or-nothing beliefs.

But that is similar to what epistemologists today say about knowledge: rational belief is simply “elusive”, too (cf. Lewis 1996).

(My preferred take is not contextualism about rational belief but something like sensitive moderate invariantism: see, e.g., Hawthorne 2004.)

Moral: The Humean thesis can be recovered from the logical closure of belief plus one direction of the Lockean thesis.

The price for this is a strong sensitivity of rational all-or-nothing belief to the context of reasoning, which includes the agent’s partitioning of possibilities, the permissible Lockean thresholds, and her degrees of belief.

But that price might well be affordable.

Belief Revision and the ‘→’-direction of the Lockean Thesis

In the third approach we start from:

Conditional belief Bel(·|·) (the qualitative counterpart of conditional probability).

Read: Bel(Y|X) iff the agent believes Y on the supposition of X (iff the agent is disposed to believe Y upon learning X).

Bel(Y) iff Bel(Y|W) iff the agent believes Y (unconditionally).


Accordingly, logical closure of Bel(·|·) needs to be redefined:

AGM belief revision ≈ Lewisian conditional logic ≈ nonmonotonic rational consequence ≈ . . .


Plus the ‘→’-direction of the Lockean thesis (with the threshold r independent of P).

Representation theorem

The following two statements are equivalent:

I. P is a probability measure, Bel satisfies logical closure (AGM belief revision ≈ Lewisian conditional logic ≈ . . .), not Bel(∅|W), and LT→^{>r} holds.

II. P is a probability measure, and there is a (uniquely determined) chain 𝒳 of non-empty P-stable^r propositions such that Bel(·|·) is given by 𝒳 in a Lewisian sphere-system-like manner.

LT→^{>r} (“→” of the Lockean thesis): For all Y s.t. P(Y) > 0:

For all Z, if Bel(Z|Y), then P(Z|Y) > r.

And either side implies a version of the full Lockean thesis again!
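On the sphere-system reading assumed here (the standard Lewisian one), Bel(Z|Y) holds iff Z ⊇ Y ∩ S_Y, where S_Y is the innermost sphere meeting Y. Every Z with Bel(Z|Y) includes Y ∩ S_Y, so LT→^{>r} only needs checking for that set. A minimal Python sketch on the running example, with hypothetical helper names and a deliberately non-stable chain for contrast:

```python
from fractions import Fraction
from itertools import combinations

P = {w: Fraction(p) for w, p in [
    ("w1", "0.54"), ("w2", "0.342"), ("w3", "0.058"), ("w4", "0.03994"),
    ("w5", "0.018"), ("w6", "0.002"), ("w7", "0.00006")]}
W = sorted(P)


def prob(A):
    return sum((P[w] for w in A), Fraction(0))


def strongest_conditional_belief(Y, spheres):
    """Y ∩ S_Y for the innermost sphere S_Y meeting Y (spheres: inner to outer)."""
    for S in spheres:
        if S & Y:
            return S & Y
    return frozenset()


def satisfies_lt_forward(spheres, r):
    """LT→^{>r}: for all Y with P(Y) > 0 and all Z with Bel(Z|Y): P(Z|Y) > r."""
    for k in range(1, len(W) + 1):
        for Y in map(frozenset, combinations(W, k)):
            if prob(Y) > 0:
                B = strongest_conditional_belief(Y, spheres)
                if prob(B) / prob(Y) <= r:  # B ⊆ Y, so P(B|Y) = P(B)/P(Y)
                    return False
    return True


def chain(*sets):
    return [frozenset(s) for s in sets]


stable = chain({"w1"}, {"w1", "w2"}, {"w1", "w2", "w3", "w4"},
               {"w1", "w2", "w3", "w4", "w5"},
               {"w1", "w2", "w3", "w4", "w5", "w6"}, W)
unstable = chain({"w1"}, {"w1", "w2", "w3"}, W)
print(satisfies_lt_forward(stable, Fraction(1, 2)))    # True
print(satisfies_lt_forward(unstable, Fraction(1, 2)))  # False
```

The non-stable chain fails at Y = {w3, w4, w5, w6, w7}: its strongest conditional belief is {w3}, with P({w3}|Y) = 0.058/0.118 < 1/2.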

Example: Let P be again as in our initial example.

Then if Bel(·|·) obeys AGM, and if P and Bel(·|·) jointly satisfy LT→^{>1/2}, then Bel(·|·) must be given by some coarse-graining of the ranking of that example.

Choosing the maximal (most fine-grained) Bel(·|·) yields the following:

Bel(A ∧ B | A)   (A → A ∧ B)

Bel(A ∧ B | B)   (B → A ∧ B)

Bel(A ∧ B | A ∨ B)   (A ∨ B → A ∧ B)

Bel(A | C)   (C → A)

¬Bel(B | C)   (C ↛ B)

Bel(A | C ∧ ¬B)   (C ∧ ¬B → A)


For three worlds (and r = 1/2), the maximal Bel(·|·) given P and r is given by these rankings (or sphere systems):

[Figure: rankings (sphere systems) over three worlds.]

For a stream of evidence E1, E2, E3, . . . such that E1 ∩ E2 ∩ E3 ∩ . . . ≠ ∅,

P ↦ P_E1 ↦ [P_E1]_E2 ↦ [[P_E1]_E2]_E3 ↦ · · ·

Bel ↦ Bel ∗ E1 ↦ [Bel ∗ E1] ∗ E2 ↦ [[Bel ∗ E1] ∗ E2] ∗ E3 ↦ · · ·

each pair ⟨P_..., Bel ∗ . . .⟩ satisfies the Humean thesis if the initial pair does.
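A small numerical check of the iterated case on the running example. The sketch assumes one particular way of iterating Bel ∗ E, namely restricting every sphere to E and discarding empty ones (the slide does not fix the iteration policy); it conditionalizes P on each piece of evidence and then verifies P-stability^{1/2} of every revised sphere directly from the definition. The evidence stream E1 = W ∖ {w1}, E2 = W ∖ {w2} and all function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def prob(P, A):
    """P(A), ignoring worlds outside P's current domain (they have probability 0)."""
    return sum((P[w] for w in A if w in P), Fraction(0))


def conditionalize(P, E):
    """P_E: renormalize P on the worlds of E (assumes P(E) > 0)."""
    p_e = prob(P, E)
    return {w: P[w] / p_e for w in P if w in E}


def revise(spheres, E):
    """Hypothetical iteration policy: restrict each sphere to E, drop empties."""
    out = []
    for S in spheres:
        if S & E and (S & E) not in out:
            out.append(S & E)
    return out


def is_p_stable(X, P, r):
    worlds = sorted(P)
    for k in range(1, len(worlds) + 1):
        for Y in map(frozenset, combinations(worlds, k)):
            p_y = prob(P, Y)
            if Y & X and p_y > 0 and prob(P, X & Y) / p_y <= r:
                return False
    return True


P = {w: Fraction(p) for w, p in [
    ("w1", "0.54"), ("w2", "0.342"), ("w3", "0.058"), ("w4", "0.03994"),
    ("w5", "0.018"), ("w6", "0.002"), ("w7", "0.00006")]}
W = frozenset(P)
spheres = [frozenset(s) for s in (
    {"w1"}, {"w1", "w2"}, {"w1", "w2", "w3", "w4"},
    {"w1", "w2", "w3", "w4", "w5"}, {"w1", "w2", "w3", "w4", "w5", "w6"}, W)]

half = Fraction(1, 2)
for E in (W - {"w1"}, W - {"w2"}):
    P, spheres = conditionalize(P, E), revise(spheres, E)
    print(sorted(spheres[0]), all(is_p_stable(S, P, half) for S in spheres))
```

After E1 the strongest belief is {w2}, after E2 it is {w3, w4}, and in both steps every revised sphere is stable for the conditionalized measure.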

Moral: The conditional version of the Humean thesis is equivalent to belief revision theory plus the “other” direction of the Lockean thesis.

One gets new insights into belief revision theory in this way:

Preservation (“if ¬A ∉ K, then K ∗ A = K + A”) yields stability.

For Kevin: If your total relevant belief is Nogot-or-Havit, each of the two options should be sufficiently more likely than Nobody (where the meaning of “sufficiently likely” is given by the context).

But then if Nogot is eliminated, you should believe Havit.

What are the fallback positions after receiving recalcitrant evidence?

Positions that are presently permissible but more cautious!

And, by the Humean thesis, all-or-nothing update and probabilistic update cohere with each other.

Epistemic Decision Theory and the Humean Thesis

(This relates to Ted & Branden’s paper from yesterday.)

Theorem

If P is a probability measure, if Bel satisfies the Humean thesis HT^r_Poss, and if not Bel(∅), then:

for all pieces X of evidence with P(X) > 0 and Poss(X), the expected epistemic utility of belief generated by B_W (given evidence X) is positive:

∑_{w∈W} P({w}|X) · u_w(B_W) > 0,

where the epistemic utility u_w(Y), at world w, of belief generated by Y is defined by

u_w(Y) = a, with a ≥ 1 − r, in case w ∈ Y;
u_w(Y) = b, with −r ≤ b < 0, in case w ∉ Y.
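Taking the least favourable admissible utilities, a = 1 − r and b = −r, the sum reduces to P(B_W|X)(1 − r) − (1 − P(B_W|X)) r = P(B_W|X) − r, so positivity for these values amounts to P(B_W|X) > r; larger a or smaller |b| only raise the sum. The Python sketch below is a check under these assumptions on the running example, with hypothetical helper names; it reads Poss(X) as X ∩ B_W ≠ ∅ and evaluates the sum for every admissible X.

```python
from fractions import Fraction
from itertools import combinations

P = {w: Fraction(p) for w, p in [
    ("w1", "0.54"), ("w2", "0.342"), ("w3", "0.058"), ("w4", "0.03994"),
    ("w5", "0.018"), ("w6", "0.002"), ("w7", "0.00006")]}
W = sorted(P)


def expected_epistemic_utility(BW, X, r):
    """Σ_w P({w}|X)·u_w(BW) with the extreme values a = 1 − r, b = −r."""
    a, b = 1 - r, -r
    p_x = sum(P[w] for w in X)
    # P({w}|X) = 0 outside X, so only worlds in X contribute.
    return sum(P[w] / p_x * (a if w in BW else b) for w in X)


def positive_for_all_evidence(BW, r):
    BW = frozenset(BW)
    for k in range(1, len(W) + 1):
        for X in map(frozenset, combinations(W, k)):
            if sum(P[w] for w in X) > 0 and X & BW:  # P(X) > 0 and Poss(X)
                if expected_epistemic_utility(BW, X, r) <= 0:
                    return False
    return True


half = Fraction(1, 2)
print(positive_for_all_evidence({"w1", "w2"}, half))        # True
print(positive_for_all_evidence({"w1", "w2", "w3"}, half))  # False
```

The non-stable candidate {w1, w2, w3} fails for X = {w3, ..., w7}, where P(B_W|X) = 0.058/0.118 < 1/2 makes the sum negative.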

Or in a more complex version that allows for coarse-graining by partitions:

Theorem

If P is a probability measure, if Bel satisfies the Humean thesis HT^r_Poss, and if not Bel(∅), then:

for all partitions π of W, for all pieces X of evidence with P(X) > 0 and Poss(X), the expected epistemic utility of belief generated by B_W (given evidence X, in context π) is positive:

∑_{p∈π} P(p|X) · u_p(B_W) > 0,

where the epistemic utility u_p(Y), at the “coarse-grained” world p, of belief generated by Y is defined by

u_p(Y) = a, with a ≥ 1 − r, in case p ∩ Y ≠ ∅;
u_p(Y) = b, with −r ≤ b < 0, in case p ∩ Y = ∅.
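The coarse-grained sum can be sketched in the same way. With the same extreme utilities a = 1 − r and b = −r it equals P(⋃{p ∈ π : p ∩ B_W ≠ ∅} | X) − r, which is at least P(B_W|X) − r, so coarse-graining cannot turn a positive value negative. The three-cell partition below is a hypothetical choice on the running example, and the function name is illustrative.

```python
from fractions import Fraction
from itertools import combinations

P = {w: Fraction(p) for w, p in [
    ("w1", "0.54"), ("w2", "0.342"), ("w3", "0.058"), ("w4", "0.03994"),
    ("w5", "0.018"), ("w6", "0.002"), ("w7", "0.00006")]}
W = sorted(P)
# A hypothetical partition of W into three cells.
partition = [frozenset({"w1"}), frozenset({"w2", "w3"}),
             frozenset({"w4", "w5", "w6", "w7"})]


def coarse_expected_utility(BW, X, r):
    """Σ_{p∈π} P(p|X)·u_p(BW), with u_p = 1 − r if p ∩ BW ≠ ∅ and −r otherwise."""
    p_x = sum(P[w] for w in X)
    total = Fraction(0)
    for cell in partition:
        p_cell_given_x = sum(P[w] for w in cell & X) / p_x
        total += p_cell_given_x * ((1 - r) if cell & BW else -r)
    return total


BW, half = frozenset({"w1", "w2"}), Fraction(1, 2)
values = [coarse_expected_utility(BW, X, half)
          for k in range(1, len(W) + 1)
          for X in map(frozenset, combinations(W, k))
          if X & BW]  # Poss(X); every world has positive probability here
print(min(values) > 0)  # True
```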

Moral: If the Humean thesis holds, then rational belief is epistemically useful (“aims at the truth”).

One can also prove a converse (and thus gets a representation theorem again): if Bel is logically closed, not Bel(∅), and the then-part of the last theorem holds, then the Humean thesis follows.

Besides the above, there is also a different kind of “accuracy” argument for the Humean thesis, which is based on belief aiming at degrees of belief.

(If we have time and you feel like doing it:)

De Finetti and others have emphasized the importance of the qualitative probability order ≼_P over propositions that is determined by P:

A ≼_P B iff P(A) ≤ P(B).


In nonmonotonic reasoning / belief revision theory one generates preferences over propositions from total pre-orders over worlds (cf. Halpern 2005):

max_≼(A) = {w ∈ A | ∀w′ ∈ A, w′ ≼ w}

A ≼ B iff ∀w ∈ max_≼(A), ∃w′ ∈ max_≼(B): w ≼ w′.

(And from max_≼(·) one can determine unconditional & conditional belief again.)
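The two definitions can be implemented literally once a total pre-order on worlds is given. The Python sketch below assumes the pre-order is encoded by a plausibility level (w ≼ w′ iff level(w) ≤ level(w′), so higher means more plausible), derives the levels of the running example by counting how many spheres contain each world, and reads off conditional belief as Bel(Y|X) iff max_≼(X) ⊆ Y, the usual construction in this literature (stated here as an assumption). All names are hypothetical.

```python
spheres = [{"w1"}, {"w1", "w2"}, {"w1", "w2", "w3", "w4"},
           {"w1", "w2", "w3", "w4", "w5"}, {"w1", "w2", "w3", "w4", "w5", "w6"},
           {"w1", "w2", "w3", "w4", "w5", "w6", "w7"}]
# level(w) = number of spheres containing w; higher = more plausible.
level = {w: sum(w in S for S in spheres) for w in spheres[-1]}


def leq(w, v):
    """w ≼ v on worlds: v is at least as plausible as w."""
    return level[w] <= level[v]


def max_pre(A):
    """max_≼(A) = {w ∈ A | for all w′ ∈ A: w′ ≼ w}."""
    return {w for w in A if all(leq(v, w) for v in A)}


def leq_prop(A, B):
    """A ≼ B iff for all w ∈ max_≼(A) there is w′ ∈ max_≼(B) with w ≼ w′."""
    return all(any(leq(w, v) for v in max_pre(B)) for w in max_pre(A))


def bel(Y, X=None):
    """Bel(Y|X) iff max_≼(X) ⊆ Y; unconditional belief takes X = W."""
    X = spheres[-1] if X is None else X
    return max_pre(X) <= set(Y)


print(max_pre(spheres[-1]))                                 # {'w1'}
print(leq_prop({"w5"}, {"w3"}), leq_prop({"w3"}, {"w5"}))   # True False
print(bel({"w3", "w4"}, {"w3", "w4", "w5"}))                # True
```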


Question: To what extent can ≼ approximate ≼_P?

Equally importantly: What exactly do we mean by approximation here?

When aiming to approximate the truth by means of belief, and assuming that a rational agent’s set of beliefs is consistent, there are just two kinds of errors:

The Soundness Error: Believing A when A is false.

The Completeness “Error”: Neither believing A nor ¬A (when one is true).


Replacing suspension by indifference leads us to the corresponding two kinds of errors when aiming to approximate ≼_P by ≼:

The Soundness Error: A ≺ B when it is not the case that A ≺_P B.

The Completeness “Error”: A ≈ B when either A ≺_P B or B ≺_P A is true.

Hence we define:

Definition

For all total pre-orders ≼, ≼′ over W (from which total pre-orders ≼, ≼′ over propositions can be determined), and for all probability measures P:

≼ is more accurate than ≼′ (when approximating ≼_P) iff

1. the set of soundness errors of ≼ is a proper subset of the set of soundness errors of ≼′;

2. or: their sets of soundness errors are equal, but the set of completeness “errors” of ≼ is a proper subset of the set of completeness “errors” of ≼′.

Theorem

For all probability measures P (defined on all subsets of W):

– For all total pre-orders ≼ on W: ≼ is not subject to any soundness errors (relative to ≼_P) iff ≼ is determined by a sphere system of P-stable^{1/2} sets.

– For all total pre-orders ≼, ≼′ on W that are not affected by any soundness errors (relative to ≼_P): ≼ is more accurate than ≼′ (when approximating ≼_P) iff ≼ is more fine-grained than ≼′.
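Both error notions can be computed exhaustively for small W. The Python sketch below assumes, as in a level encoding of total pre-orders, that a sphere system induces plausibility levels on worlds and that propositions are compared by the level of their most plausible worlds (A ≺ B iff the top level in A is below the top level in B, with ∅ at the bottom), which is what the max_≼ construction yields for total pre-orders. It checks the theorem on the running example against one coarser stable system and one non-stable system; those alternative sphere systems and all names are hypothetical choices for illustration.

```python
from fractions import Fraction
from itertools import combinations

P = {w: Fraction(p) for w, p in [
    ("w1", "0.54"), ("w2", "0.342"), ("w3", "0.058"), ("w4", "0.03994"),
    ("w5", "0.018"), ("w6", "0.002"), ("w7", "0.00006")]}
W = sorted(P)
PROPS = [frozenset(c) for k in range(len(W) + 1) for c in combinations(W, k)]
PROB = {A: sum((P[w] for w in A), Fraction(0)) for A in PROPS}


def errors(spheres):
    """(soundness errors, completeness "errors") of the pre-order induced by
    the nested `spheres`, relative to the qualitative probability order."""
    level = {w: sum(w in S for S in spheres) for w in W}
    top = {A: max((level[w] for w in A), default=0) for A in PROPS}
    sound, complete = set(), set()
    for A in PROPS:
        for B in PROPS:
            if top[A] < top[B] and not PROB[A] < PROB[B]:
                sound.add((A, B))     # A ≺ B, but not A ≺_P B
            if top[A] == top[B] and PROB[A] != PROB[B]:
                complete.add((A, B))  # A ≈ B, but A ≺_P B or B ≺_P A
    return sound, complete


fine = [{"w1"}, {"w1", "w2"}, {"w1", "w2", "w3", "w4"},
        {"w1", "w2", "w3", "w4", "w5"}, {"w1", "w2", "w3", "w4", "w5", "w6"}, set(W)]
coarse = [{"w1"}, set(W)]                        # also P-stable^{1/2}, but coarser
unstable = [{"w1"}, {"w1", "w2", "w3"}, set(W)]  # {w1, w2, w3} is not stable

s_fine, c_fine = errors(fine)
s_coarse, c_coarse = errors(coarse)
s_unstable, _ = errors(unstable)
print(len(s_fine), len(s_coarse), len(s_unstable) > 0)  # 0 0 True
print(c_fine < c_coarse)                                # True
```

The non-stable system ranks {w3} strictly above {w4, w5, w6, w7} although the latter is more probable (0.06 > 0.058), which is a soundness error; the finer stable system has a proper subset of the coarser one's completeness “errors”.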

In our example, the most accurate ≼ is again given by (the reverse of):

{w1, w2, w3, w4, w5, w6, w7}

{w1, w2, w3, w4, w5, w6}

{w1, w2, w3, w4, w5}

{w1, w2, w3, w4}

{w1, w2}

{w1}

Moral: the conditional (ordering) version of the Humean thesis is equivalent to rational belief aiming accurately at qualitative probability.

An Example: Theory Choice

Our example P is from Bayesian Philosophy of Science: J. Dorling (1979)


E′: Observational result for the secular acceleration of the moon.

T: Relevant part of Newtonian mechanics.

H: Auxiliary hypothesis that tidal friction is negligible.

P(T|E′) = 0.8976, P(H|E′) = 0.003.

“. . . while I will insert definite numbers so as to simplify the mathematical working, nothing in my final qualitative interpretation . . . will depend on the precise numbers . . .”


Bel(T|E′), Bel(¬H|E′) (with r = 3/4).
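As a quick arithmetic check, using only the two conditional probabilities stated above, both conditional beliefs respect the ‘→’-direction of the Lockean thesis with r = 3/4, as LT→^{>r} requires:

```latex
P(T \mid E') = 0.8976 > \tfrac{3}{4},
\qquad
P(\neg H \mid E') = 1 - P(H \mid E') = 1 - 0.003 = 0.997 > \tfrac{3}{4}.
```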


“. . . scientists always conducted their serious scientific debates in terms of finite qualitative subjective probability assignments to scientific hypotheses” (Dorling 1979). ✓

Conclusion:

The stability conception of belief can be justified on various independent grounds that all have to do with theoretical rationality.

In Lecture 3 we will see that the same is true for practical rationality.

References:

“The Stability Theory of Belief”, The Philosophical Review 123/2 (2014), 131–171.

“Reducing Belief Simpliciter to Degrees of Belief”, Annals of Pure and Applied Logic 164 (2013), 1338–1389.

I used parts of the monograph on The Stability of Belief that I am writing.

Soon a draft will appear at

https://lmu-munich.academia.edu/HannesLeitgeb