Attachment 3

This document provides a summary of:

I. mCLASS®:Reading 3D™ – purpose, assessment goals, and cut-point background

II. Concurrent validity (mCLASS:Reading 3D and the EOG)

III. Text complexity and mCLASS:Reading 3D

IV. Alignment of mCLASS:Reading 3D to the Common Core State Standards for ELA


Executive Summary

I. mCLASS:Reading 3D – purpose, assessment goals, and cut-point background

NCDPI selected mCLASS:Reading 3D to support the legislative requirement to implement a developmentally appropriate diagnostic assessment that would enable teachers to determine student learning needs and individualize instruction.

mCLASS:Reading 3D is an early literacy formative assessment that combines the Dynamic Indicators of Basic Early Literacy Skills (DIBELS Next®, developed by Dynamic Measurement Group [DMG]) with the Text Reading and Comprehension (TRC) assessment.

DIBELS Next and TRC are formative assessments intended to guide instruction and are not intended or designed to be summative assessments. The cut points are reflective of expectations for students at the respective times of the year (e.g., cut points for third grade beginning of year reflect what third grade students should be capable of at the beginning of third grade).

DIBELS Next scores are compared to research-derived benchmarks that are predictive of reading success. These cut points for risk are defined by the DIBELS authors as an indication of a level of skill below which a student is unlikely to achieve reading goals at subsequent benchmark administration periods.

The TRC assessment allows teachers to use a reading record to analyze a student’s ability to read grade-level texts accurately and comprehend them. In 2012-2013, TRC cut points were revised, and made more rigorous, using the Item-Descriptor (ID) Matching method (Ferrara, Perie, & Johnson, 2002; 2008). Two members of the expert panel were from NC and understood the unique needs of NC educators.

II. Concurrent validity (mCLASS:Reading 3D and the EOG)

Multiple analyses provide strong evidence for the concurrent validity of mCLASS:Reading 3D: students perform similarly on mCLASS:Reading 3D and on the EOG, which indicates that the two assessments measure similar skills and abilities.

Students who were classified as both Benchmark on DIBELS Next and Proficient or above on TRC were most likely to also be Proficient or above on the EOG, while students who were both below Benchmark on DIBELS Next and below Proficient on TRC were most frequently non-Proficient on the EOG.

Strong, positive correlations link the EOG with DIBELS Next (r = 0.74) and with TRC (r = 0.71), indicating that high performance on each mCLASS:Reading 3D measure corresponds well to high performance on the EOG.

A statistical model estimating EOG proficiency from the combination of DIBELS Next and TRC was 79% accurate when compared with observed student performance.

III. Text complexity and mCLASS:Reading 3D

Reading passages administered in DIBELS Next were designed and selected according to rigorous content considerations, experimental design, and statistical analysis. Materials were developed to meet the demands of the Passage Difficulty Index developed by DMG and were further evaluated against numerous text complexity indices (e.g., Lexile, Spache, Flesch). The resulting passages are in line with CCSS grade-level expectations.


The Rigby books used in the TRC assessment were developed by Harcourt Publishing. Lexile ranges are available, and official Lexile measures for each of the Rigby books are in progress with MetaMetrics. In addition, Amplify has performed qualitative and quantitative analyses of the books to evaluate how complexity increases across levels.

IV. Alignment of mCLASS:Reading 3D to the Common Core State Standards for ELA

The assessments align with the intention of the CCSS to ensure students develop foundational skills and the ability to read and comprehend texts of the appropriate complexity, both informational and literary.

Alignment is presented within this report for the strands and substrands also aligned with the NC Reading Portfolio.

mCLASS®:Reading 3D™ – purpose, assessment goals, cut-point background


In the Appropriation Act of 2009 (S.B. 202, Section 7.18(b)), the legislature required that the State Board of Education “investigate and pilot a developmentally appropriate diagnostic assessment [that] will ...

I. Enable teachers to determine student learning needs and individualize instruction

II. Ensure that students are adequately prepared for the next level of coursework as set out by the North Carolina Standard Course of Study”

In 2009-2010, the NC Department of Public Instruction (NCDPI) conducted a pilot of diagnostic assessments, and the 2010 Budget Act provided funding to implement a diagnostic reading system in more schools. To this end, NCDPI released RFP#40 – Reading Diagnostic Assessments. mCLASS:Reading 3D was piloted and chosen through the RFP based on its fit with the requirements stated above. mCLASS:Reading 3D is an early literacy formative assessment for students in grades K-5 that combines the latest version of the Dynamic Indicators of Basic Early Literacy Skills (DIBELS Next®, developed by DMG) with the reading accuracy and comprehension measures of our Text Reading and Comprehension (TRC) assessment.

The DIBELS Next measures were designed to identify children who are having difficulty acquiring basic early literacy skills, so that support can be provided early and later reading difficulties can be prevented. DIBELS Next is a set of procedures and measures for assessing the acquisition of early literacy skills from kindergarten through sixth grade. The measures are short (one-minute) indicators used to regularly monitor the development of early literacy and early reading skills. The seven DIBELS Next measures function as indicators of phonemic awareness, alphabetic principle, accuracy and fluency with connected text, reading comprehension, and vocabulary.

DIBELS Next scores are compared to research-derived benchmarks that are predictive of reading success. The DIBELS authors define these cut points for risk as a level of skill below which a student is unlikely to achieve reading goals at subsequent benchmark administration periods. Students in the at-risk categories are likely to need more intensive instructional support (see https://dibels.org/papers/DIBELSNextBenchmarkGoals.pdf). For further information, please see the DIBELS Next Technical Manual (provided along with this summary and also available at www.dibels.org).

The TRC assessment, which is based on an approach developed by Marie Clay, author of An Observation Survey of Early Literacy Achievement, allows educators to use a reading record to analyze a student’s oral reading performance. The measure provides insight into students’ word-level reading skills and their ability to read fluently and comprehend grade-level texts. TRC cut points were revised in 2012-2013 according to the Item-Descriptor (ID) Matching method (Ferrara, Perie, & Johnson, 2002; 2008), a standard-setting procedure appropriate for performance-based assessments, such as TRC, that yield categorical results (e.g., Below Proficient, Proficient). Full technical documentation of the cut-point revision procedure is available at https://www.mclasshome.com/support_center/mCLASS_Reading3D_TRC_CutPoints_R.pdf. Two members of the expert panel were from NC and understood the unique needs of NC educators.

Both DIBELS Next and TRC are intended to be administered three times per school year: at the beginning, middle, and end of the year. By using this combination of measures, educators and administrators gain a balanced view of student performance, allowing them to tailor instruction to student needs. Further, DIBELS Next and TRC are formative assessments intended to guide instruction and are not intended or designed to be summative assessments; the cut points on these assessments reflect the expected early literacy skills of students at the respective times of the year. For example, the cut points for both assessments for the Grade 3 Beginning-of-Year period reflect what third grade students should be capable of at the beginning of third grade. Amplify understands that this design differs from NCDPI’s BOG exam, which measures students at the beginning of the year against end-of-year performance expectations. Therefore, given the different purposes and goals of the two assessments, we would expect that some students may have “failed” the BOG while being at or above Benchmark on mCLASS:Reading 3D.
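The idea that a score is judged against the cut points for the period in which it was collected can be shown with a minimal Python sketch. The cut-point values and the `GRADE3_CUTS` table are hypothetical placeholders, not published DIBELS Next or TRC benchmarks, and the three status labels are modeled on DIBELS Next benchmark status levels for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CutPoints:
    """Hypothetical cut points for one grade and one benchmark period."""
    benchmark: int  # scores at or above this are "At or Above Benchmark"
    risk: int       # scores below this are "Well Below Benchmark"


# HYPOTHETICAL values for illustration only; real cut points differ by
# measure, grade, and period (beginning, middle, and end of year).
GRADE3_CUTS = {
    "BOY": CutPoints(benchmark=60, risk=40),
    "MOY": CutPoints(benchmark=75, risk=55),
    "EOY": CutPoints(benchmark=90, risk=70),
}


def classify(score: int, period: str) -> str:
    """Compare a score with the cut points for the period in which it was
    collected, rather than with end-of-year expectations."""
    cuts = GRADE3_CUTS[period]
    if score >= cuts.benchmark:
        return "At or Above Benchmark"
    if score >= cuts.risk:
        return "Below Benchmark"
    return "Well Below Benchmark"


# The same score earns a different status depending on the period used.
print(classify(75, "BOY"))  # At or Above Benchmark
print(classify(75, "EOY"))  # Below Benchmark
```

With these made-up values, a score of 75 is at or above benchmark against beginning-of-year expectations but below benchmark against end-of-year expectations, which mirrors why a student could be at Benchmark on mCLASS:Reading 3D yet not meet an end-of-year standard applied at the start of the year.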

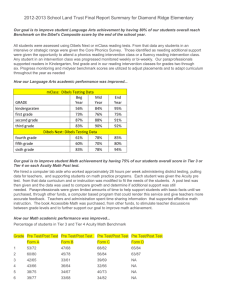

Concurrent validity (mCLASS:Reading 3D and NC EOG)

In prior concurrent validity studies, we found close correspondence between NC EOG and mCLASS:Reading 3D results. Students who were proficient on both DIBELS Next and TRC were most often (68%) proficient (or above) on the NC EOG, while students who were non-proficient on both DIBELS Next and TRC were most frequently (96%) also non-proficient on the NC EOG. Statistical analysis suggests that mCLASS:Reading 3D provides adequate concurrent validity evidence: DIBELS Next correlated with the NC EOG at r = 0.74, and TRC correlated with the NC EOG at r = 0.71. Lastly, a logistic regression model was used to estimate NC EOG proficiency from mCLASS:Reading 3D performance, allowing interpretation to be extended beyond the current administration period and population of students. This analysis demonstrated that the combination of DIBELS Next and TRC has an overall accuracy of 79% in estimating proficiency on the Grade 3 NC EOG reading comprehension assessment.
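The two statistics in this paragraph, a Pearson correlation and the overall accuracy of a logistic regression classifier, can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the records, the numeric coding of TRC levels, the EOG scale scores, and the coefficients are invented for illustration and are not the data or the fitted model from the NC study. Overall accuracy here means the share of students whose predicted status (probability of proficiency at or above 0.5) matches their observed EOG status.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def predicted_probability(dibels, trc, b0, b1, b2):
    """Logistic model: P(proficient) = 1 / (1 + exp(-(b0 + b1*dibels + b2*trc)))."""
    z = b0 + b1 * dibels + b2 * trc
    return 1.0 / (1.0 + math.exp(-z))


def overall_accuracy(records, coefs, threshold=0.5):
    """Share of students whose predicted EOG status matches observed status."""
    correct = 0
    for dibels, trc, proficient in records:
        p = predicted_probability(dibels, trc, *coefs)
        correct += (p >= threshold) == proficient
    return correct / len(records)


# HYPOTHETICAL records: (DIBELS Next composite, TRC level coded as a number,
# observed EOG proficiency). Not data from the NC study.
records = [
    (300, 10, True), (250, 8, False), (350, 12, True), (200, 6, False),
    (290, 9, False), (240, 10, True), (320, 11, True), (220, 7, False),
]
# HYPOTHETICAL coefficients (b0, b1, b2), not a fitted study model.
coefs = (-12.0, 0.03, 0.4)
print(overall_accuracy(records, coefs))  # 0.75 (6 of 8 classified correctly)

# HYPOTHETICAL EOG scale scores for the same eight students.
eog_scores = [452, 440, 458, 431, 445, 447, 454, 436]
print(round(pearson_r([r[0] for r in records], eog_scores), 2))
```

In practice the coefficients would be estimated from student data (for example, by maximum likelihood), and accuracy would be checked against students not used for fitting; the sketch only shows how the reported quantities are defined.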

Text Complexity

Reading passages employed in the Oral Reading Fluency (ORF) measure of DIBELS Next were designed and selected according to rigorous content considerations, experimental design, and statistical analysis (Powell-Smith, Good, & Atkins, 2010). Primarily, the materials were developed to meet the demands of the text complexity, or readability, index developed by DMG: the Passage Difficulty Index (Cummings, Wallin, Good, & Kaminski, 2007). Each passage was additionally evaluated against numerous text complexity, or readability, indices (e.g., Lexile, Spache, Flesch) to further validate its appropriateness by grade level and time of year. The full report is available at http://dibels.org/papers/DIBELSNext_ReadabilityTechReport_2011-08-22.pdf.
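Flesch is one of the readability indices named above. To show concretely what such an index computes, here is a minimal Python sketch of the standard Flesch Reading Ease and Flesch-Kincaid Grade Level formulas, working from word, sentence, and syllable counts. The passage counts are hypothetical, syllable counting is left to the caller because automated syllable counters vary, and this is not the DMG Passage Difficulty Index, whose formula is documented separately (Cummings, Wallin, Good, & Kaminski, 2007).

```python
def _check_counts(words: int, sentences: int) -> None:
    """Both ratios in the formulas need positive word and sentence counts."""
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")


def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease (higher scores mean easier text):
    206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)
    """
    _check_counts(words, sentences)
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level (approximate U.S. school grade):
    0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59
    """
    _check_counts(words, sentences)
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


# HYPOTHETICAL counts for a short passage.
print(round(flesch_reading_ease(words=100, sentences=10, syllables=130), 1))  # 86.7
print(round(flesch_kincaid_grade(words=100, sentences=10, syllables=130), 2))
```

Because each index weights sentence length and word length differently, evaluating passages against several indices, as described above, gives a more robust picture of grade-level appropriateness than any single formula.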


The Rigby books used by NC during benchmark administration of the TRC assessment were developed by Harcourt Publishing. After publication, Amplify performed various qualitative and quantitative analyses of the books to evaluate how complexity increases across levels. Lexile ranges are provided within the assessment materials (https://www.mclasshome.com/support_center/mCLASS_Reading3D_RigbyUltra_Assessment_Materials, pages 1-3). Official Lexile measures for each of the Rigby books are in progress with MetaMetrics, and the results of Amplify’s further analyses can be made available upon request. Spache ratings for the Rigby materials have not yet been determined, but Amplify would be happy to conduct that analysis.

Both the reading behaviors expected during TRC administration and the text complexity of the Rigby materials were considered during the 2012-2013 cut-point revision described above. As such, the cut points reflect the increased expectations of the CCSS for ELA and account for both the qualitative and quantitative text complexity characteristics of the TRC assessment materials.

It should also be noted that Amplify, MetaMetrics, and Dynamic Measurement Group are currently conducting a research study to link DIBELS Next measures with the Lexile Framework in grades 1-6. By linking Lexile measures with DIBELS Next, educators will be able to use this information to more accurately tailor instruction to individual student reading levels.

Alignment of mCLASS:Reading 3D, North Carolina’s Grade 3 Reading Portfolio, and the CCSS for ELA

The assessments align with the intention of the CCSS to ensure students develop foundational skills and the ability to read and comprehend texts of the appropriate complexity, both informational and literary.

Comprehension questions focus on whether students can comprehend the text they have read, rather than on any background knowledge they may already have of the subject.

Alignment of mCLASS:Reading 3D with the CCSS for ELA is presented below for the strands and substrands also aligned with the NC Reading Portfolio.

The key skills assessed by DIBELS Next and TRC are those identified by a body of research as being predictive of achieving important and meaningful reading outcomes (NRP, 2000). As such, these assessments are indicators of broader skill areas signaling adequate progress in mastering the CCSS for ELA and are not intended as indicators of standards-level mastery; therefore, alignment is presented broadly at the substrand level only.

It should be noted that mCLASS:Reading 3D also aligns with CCSS substrands beyond those addressed by the NC Reading Portfolio. Full DIBELS Next alignment information is available at https://dibels.org/DIBELS_Next_Common_Core_Alignment.pdf; full TRC alignment information is available from Amplify upon request.


CCSS for ELA / mCLASS:Reading 3D Substrand Alignment

| Strand | Substrand | NC Reading Portfolio (CCSS for ELA code)* | mCLASS:Reading 3D TRC | DIBELS Next |
|---|---|---|---|---|
| Literature | Key Ideas and Details | RL.3.1-RL.3.3 | ** | ** |
| Literature | Craft and Structure | RL.3.4 | ** | ** |
| Informational Text | Key Ideas and Details | RI.3.1-RI.3.3 | ** | ** |
| Informational Text | Craft and Structure | RI.3.4 | ** | ** |
| Informational Text | Integration of Knowledge and Ideas | RI.3.7-RI.3.8 | ** | ** |
| Language | Vocabulary Acquisition and Use | L.3.4a-L.3.5a | ** | ** |

* CCSS for ELA code.

** Substrand alignment.


References

Cummings, K. D., Wallin, J., Good, R. H. III, & Kaminski, R. A. (2007). The DMG Passage Difficulty Index [Formula and computer software]. Eugene, OR: Dynamic Measurement Group.

Ferrara, S., Perie, M., & Johnson, E. (2002). Matching the judgmental task with standard setting panelist expertise: The Item-Descriptor (ID) Matching procedure. Presented to the National Research Council’s Board of Testing and Assessment (BOTA), Washington, DC.

Ferrara, S., Perie, M., & Johnson, E. (2008). Matching the judgmental task with standard setting panelist expertise: The Item-Descriptor (ID) Matching method. Journal of Applied Testing Technology, 9(1), 1-22.

National Reading Panel. (2000). Teaching children to read: An evidence-based assessment of the scientific research literature on reading and its implications for reading instruction [on-line]. Available: http://www.nichd.nih.gov/publications/nrp/report.cfm

Powell-Smith, K. A., Good, R. H., & Atkins, T. (2010). DIBELS Next Oral Reading Fluency Readability Study (Technical Report No. 7). Eugene, OR: Dynamic Measurement Group. Available at http://dibels.org/papers/DIBELSNext_ReadabilityTechReport_2011-08-22.pdf