Research Article the Consistent Matrix Equations ,

advertisement

Hindawi Publishing Corporation

Journal of Applied Mathematics

Volume 2013, Article ID 125687, 6 pages

http://dx.doi.org/10.1155/2013/125687

Research Article

A New Method for the Bisymmetric Minimum Norm Solution of

the Consistent Matrix Equations 𝐴 1𝑋𝐵1 = 𝐶1, 𝐴 2𝑋𝐵2 = 𝐶2

Aijing Liu,1,2 Guoliang Chen,1 and Xiangyun Zhang1

1

2

Department of Mathematics, East China Normal University, Shanghai 200241, China

School of Mathematical Sciences, Qufu Normal University, Shandong 273165, China

Correspondence should be addressed to Guoliang Chen; glchen@math.ecnu.edu.cn

Received 18 October 2012; Accepted 16 January 2013

Academic Editor: Alberto Cabada

Copyright © 2013 Aijing Liu et al. This is an open access article distributed under the Creative Commons Attribution License,

which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

We propose a new iterative method to find the bisymmetric minimum norm solution of a pair of consistent matrix equations

𝐴 1 𝑋𝐵1 = 𝐶1 , 𝐴 2 𝑋𝐵2 = 𝐶2 . The algorithm can obtain the bisymmetric solution with minimum Frobenius norm in finite iteration

steps in the absence of round-off errors. Our algorithm is faster and more stable than Algorithm 2.1 by Cai et al. (2010).

1. Introduction

Let R𝑚×𝑛 denote the set of 𝑚 × 𝑛 real matrices. A matrix

𝑋 = (𝑥𝑖𝑗 ) ∈ R𝑛×𝑛 is said to be bisymmetric if 𝑥𝑖𝑗 = 𝑥𝑗𝑖 =

𝑥𝑛−𝑖+1,𝑛−𝑗+1 for all 1 ≤ 𝑖, 𝑗 ≤ 𝑛. Let BSR𝑛×𝑛 denote 𝑛 × 𝑛 real

bisymmetric matrices. For any 𝑋 ∈ R𝑚×𝑛 , 𝑋𝑇 , tr(𝑋), ‖𝑋‖,

and ‖𝑋‖2 represent the transpose, trace, Frobenius norm,

and Euclidean norm of 𝑋, respectively. The symbol vec(⋅)

stands for the vec operator; that is, for 𝑋 = (𝑥1 , 𝑥2 , . . . , 𝑥𝑛 ) ∈

R𝑚×𝑛 , where 𝑥𝑖 (𝑖 = 1, 2, . . . , 𝑛) denotes the 𝑖th column of 𝑋,

vec(𝑋) = (𝑥1𝑇 , 𝑥2𝑇 , . . . , 𝑥𝑛𝑇 )𝑇 . Let mat(⋅) represent the inverse

operation of vec operator. In the vector space R𝑚×𝑛 , we define

the inner product as ⟨𝑋, 𝑌⟩ = tr(𝑌𝑇𝑋) for all 𝑋, 𝑌 ∈ R𝑚×𝑛 .

Two matrices 𝑋 and 𝑌 are said to be orthogonal if ⟨𝑋, 𝑌⟩ = 0.

Let 𝑆𝑛 = (𝑒𝑛 , 𝑒𝑛−1 , . . . , 𝑒1 ) denote the 𝑛 × 𝑛 reverse unit matrix

where 𝑒𝑖 (𝑖 = 1, 2, . . . , 𝑛) is the 𝑖th column of 𝑛 × 𝑛 unit matrix

𝐼𝑛 ; then 𝑆𝑛𝑇 = 𝑆𝑛 , 𝑆𝑛2 = 𝐼𝑛 .

In this paper, we discuss the following consistent matrix

equations:

𝐴 1 𝑋𝐵1 = 𝐶1 ,

𝐴 2 𝑋𝐵2 = 𝐶2 ,

𝑋 ∈ BSR𝑛×𝑛 ,

(1)

where 𝐴 1 ∈ R𝑝1 ×𝑛 , 𝐵1 ∈ R𝑛×𝑞1 , 𝐶1 ∈ R𝑝1 ×𝑞1 , 𝐴 2 ∈ R𝑝2 ×𝑛 , 𝐵2 ∈

R𝑛×𝑞2 , and 𝐶2 ∈ R𝑝2 ×𝑞2 are given matrices, and 𝑋 ∈ BSR𝑛×𝑛 is

unknown bisymmetric matrix to be found.

Research on solving a pair of matrix equations 𝐴 1 𝑋𝐵1 =

𝐶1 , 𝐴 2 𝑋𝐵2 = 𝐶2 has been actively ongoing for the past 30 or

more years (see details in [1–6]). Besides the works on finding

the common solutions to the matrix equations 𝐴 1 𝑋𝐵1 = 𝐶1 ,

𝐴 2 𝑋𝐵2 = 𝐶2 , there are some valuable efforts on solving a

pair of the matrix equations with certain linear constraints

on solution. For instance, Khatri and Mitra [7] derived the

Hermitian solution of the consistent matrix equations 𝐴𝑋 =

𝐶, 𝑋𝐵 = 𝐷. Deng et al. [8] studied the consistent conditions

and the general expressions about the Hermitian solutions of

the matrix equations (𝐴𝑋, 𝑋𝐵) = (𝐶, 𝐷) and designed an

iterative method for its Hermitian minimum norm solutions.

Peng et al. [9] presented an iterative method to obtain the least

squares reflexive solutions of the matrix equations 𝐴 1 𝑋𝐵1 =

𝐶1 , 𝐴 2 𝑋𝐵2 = 𝐶2 . Cai et al. [10, 11] proposed iterative methods

to solve the bisymmetric solutions of the matrix equations

𝐴 1 𝑋𝐵1 = 𝐶1 , 𝐴 2 𝑋𝐵2 = 𝐶2 .

In this paper, we propose a new iterative algorithm to

solve the bisymmetric solution with the minimum Frobenius

norm of the consistent matrix equations 𝐴 1 𝑋𝐵1 = 𝐶1 ,

𝐴 2 𝑋𝐵2 = 𝐶2 , which is faster and more stable than Cai’s algorithm (Algorithm 2.1) in [10].

The rest of the paper is organized as follows. In Section 2,

we propose an iterative algorithm to obtain the bisymmetric

minimum Frobenius norm solution of (1) and present some

basic properties of the algorithm. Some numerical examples

are given in Section 3 to show the efficiency of the proposed

iterative method.

2

Journal of Applied Mathematics

2. A New Iterative Algorithm

(ii) Iteration. For 𝑖 = 1, 2, . . ., until {𝑥𝑖 } convergence, do

Firstly, we give the following lemmas.

(a) 𝜉𝑖 = −𝜉𝑖−1 𝛽𝑖 /𝛼𝑖 ; 𝑧𝑖 = 𝑧𝑖−1 + 𝜉𝑖 𝑣𝑖 ;

Lemma 1 (see [12]). There is a unique matrix 𝑃(𝑚, 𝑛) ∈

R𝑚𝑛×𝑚𝑛 such that vec(𝑋𝑇 ) = 𝑃(𝑚, 𝑛) vec (𝑋) for all 𝑋 ∈ R𝑚×𝑛 .

This matrix 𝑃(𝑚, 𝑛) depends only on the dimensions 𝑚 and

𝑛. Moreover, 𝑃(𝑚, 𝑛) is a permutation matrix and 𝑃(𝑛, 𝑚) =

𝑃(𝑚, 𝑛)𝑇 = 𝑃(𝑚, 𝑛)−1 .

(b) 𝜃𝑖 = (𝜏𝑖−1 − 𝛽𝑖 𝜃𝑖−1 )/𝛼𝑖 ; 𝑤𝑖 = 𝑤𝑖−1 + 𝜃𝑖 𝑣𝑖 ;

Lemma 2. If 𝑦0 , 𝑦1 , 𝑦2 , . . . ∈ R𝑚 are orthogonal to each other,

then there exists a positive integer ̂𝑙 ≤ 𝑚 such that 𝑦̂𝑙 = 0.

Proof. If there exists a positive integer ̂𝑙 ≤ 𝑚 − 1 such that

𝑦̂𝑙 = 0, then Lemma 2 is proved.

Otherwise, we have 𝑦𝑖 ≠ 0, 𝑖 = 0, 1, 2, . . . , 𝑚 − 1, and

𝑦0 , 𝑦1 , . . . , 𝑦𝑚−1 are orthogonal to each other in the 𝑚dimension vector space of R𝑚 . So 𝑦0 , 𝑦1 , . . . , 𝑦𝑚−1 form a set

of orthogonal basis of R𝑚 .

Hence 𝑦𝑚 can be expressed by the linear combination of

𝑦0 , 𝑦1 , . . . , 𝑦𝑚−1 . Denote

𝑦𝑚 = 𝑎0 𝑦0 + 𝑎1 𝑦1 + ⋅ ⋅ ⋅ + 𝑎𝑚−1 𝑦𝑚−1

(2)

in which 𝑎𝑖 ∈ R, 𝑖 = 0, 1, 2 . . . , 𝑚 − 1. Then

(c) 𝛽𝑖+1 𝑢𝑖+1 = 𝑀𝑣𝑖 − 𝛼𝑖 𝑢𝑖 ;

(d) 𝜏𝑖 = −𝜏𝑖−1 𝛼𝑖 /𝛽𝑖+1 ;

(e) 𝛼𝑖+1 𝑣𝑖+1 = 𝑀𝑇 𝑢𝑖+1 − 𝛽𝑖+1 𝑣𝑖 ;

(f) 𝛾𝑖 = 𝛽𝑖+1 𝜉𝑖 /(𝛽𝑖+1 𝜃𝑖 − 𝜏𝑖 );

(g) 𝑥𝑖 = 𝑧𝑖 − 𝛾𝑖 𝑤𝑖 .

It is well known that if the consistent system of linear

equations 𝑀𝑥 = 𝑓 has a solution 𝑥∗ ∈ 𝑅(𝑀𝑇 ), then 𝑥∗ is

the unique minimum Euclidean norm solution of 𝑀𝑥 = 𝑓. It

is obvious that 𝑥𝑖 generated by Algorithm 5 belongs to 𝑅(𝑀𝑇 )

and this leads to the following result.

Theorem 6. The solution generated by Algorithm 5 is the

minimum Euclidean norm solution of (5).

If 𝑢1 , 𝑢2 , . . . and 𝑣1 , 𝑣2 , . . . are generated by Algorithm 5,

then 𝑢𝑖𝑇 𝑢𝑗 = 𝛿𝑖𝑗 , 𝑣𝑖𝑇 𝑣𝑗 = 𝛿𝑖𝑗 (see details in [13]), in which

1,

𝛿𝑖𝑗 = {

0,

⟨𝑦𝑖 , 𝑦𝑚 ⟩ = 𝑎0 ⟨𝑦𝑖 , 𝑦0 ⟩ + 𝑎1 ⟨𝑦𝑖 , 𝑦1 ⟩

+ ⋅ ⋅ ⋅ + 𝑎𝑚−1 ⟨𝑦𝑖 , 𝑦𝑚−1 ⟩

= 𝑎𝑖 ⟨𝑦𝑖 , 𝑦𝑖 ⟩ + ∑ 𝑎𝑗 ⟨𝑦𝑖 , 𝑦𝑗 ⟩

(3)

𝑟𝑖 = 𝑓 − 𝑀𝑥𝑖 ,

𝑗=1

𝑗 ≠ 𝑖

𝑖 = 0, 1, 2, . . . , 𝑚 − 1.

From ⟨𝑦𝑖 , 𝑦𝑚 ⟩ = 0 and ⟨𝑦𝑖 , 𝑦𝑖 ⟩ ≠ 0, 𝑖 = 0, 1, 2, . . . , 𝑚 − 1, we

have 𝑎𝑖 = 0, 𝑖 = 0, 1, 2, . . . , 𝑚 − 1; that is,

𝑦𝑚 = 0.

(4)

This completes the proof.

Lemma 3. A matrix 𝑋 ∈ BSR𝑛×𝑛 if and only if 𝑋 = 𝑋𝑇 =

𝑆𝑛 𝑋𝑆𝑛 .

Lemma 4. If 𝑌 ∈ R𝑛×𝑛 , then 𝑌 + 𝑌𝑇 + 𝑆𝑛 (𝑌 + 𝑌𝑇)𝑆𝑛 ∈ BSR𝑛×𝑛 .

Next, we review the algorithm proposed by Paige [13] for

solving the following consistent problem:

𝑀𝑥 = 𝑓,

(5)

𝑧0 = 0;

𝑤0 = 0;

𝜉0 = −1;

𝛽1 𝑢1 = 𝑓;

𝑟𝑖𝑇 𝑟𝑗 = ℎ𝑖𝑗 𝛿𝑖𝑗

𝜃0 = 0;

𝛼1 𝑣1 = 𝑀𝑇 𝑢1 .

(6)

(9)

in which ℎ𝑖𝑗 = 𝛽𝑖+1 𝛽𝑗+1 𝜉𝑖 𝜉𝑗 .

Now we derive our new algorithm, which is based on

Paige algorithm.

Noting that 𝑋 is the bisymmetric solution of (1) if and

only if 𝑋 is the bisymmetric solution of the following linear

equations:

𝐴 1 𝑋𝐵1 = 𝐶1 ,

𝐴 1 𝑆𝑛 𝑋𝑆𝑛 𝐵1 = 𝐶1 ,

𝐴 2 𝑆𝑛 𝑋𝑆𝑛 𝐵2 = 𝐶2 ,

Algorithm 5 (Paige algorithm). (i) Initialization

(8)

where 𝑥𝑖 is the approximation solution obtained by Algorithm 5

after the 𝑖th iteration, it follows that 𝑟𝑖 = −𝛽𝑖+1 𝜉𝑖 𝑢𝑖+1 (see details

in [13]). So we have

𝐴 2 𝑋𝐵2 = 𝐶2 ,

with given 𝑀 ∈ R𝑠×𝑡 , 𝑓 ∈ R𝑠 .

𝜏0 = 1;

(7)

If we denote

𝑚−1

= 𝑎𝑖 ⟨𝑦𝑖 , 𝑦𝑖 ⟩ ,

𝑖 = 𝑗,

𝑖 ≠ 𝑗.

𝐵1𝑇 𝑋𝐴𝑇1 = 𝐶1𝑇 ,

𝐵1𝑇 𝑆𝑛 𝑋𝑆𝑛 𝐴𝑇1 = 𝐶1𝑇 ,

𝐵2𝑇 𝑋𝐴𝑇2 = 𝐶2𝑇 ,

(10)

𝐵2𝑇 𝑆𝑛 𝑋𝑆𝑛 𝐴𝑇2 = 𝐶2𝑇 .

Furthermore, suppose (10) is consistent; let 𝑌 be a solution of

(10). If 𝑌 is a bisymmetric matrix, then 𝑌 is a bisymmetric

solution of (1); otherwise we can obtain a bisymmetric

solution of (10) by 𝑋 = (𝑌 + 𝑌𝑇 + 𝑆𝑛 (𝑌 + 𝑌𝑇 )𝑆𝑛 )/4.

Journal of Applied Mathematics

3

The system of (10) can be transformed into (5) with

coefficient matrix 𝑀 and vector 𝑓 as

vec (𝐶1 )

vec (𝐶1𝑇 )

( vec (𝐶1 ) )

(vec (𝐶𝑇 ))

(

1 )

𝑓=(

).

( vec (𝐶2 ) )

(

𝑇 )

vec (𝐶2 )

vec (𝐶2 )

𝑇

(vec (𝐶2 ))

(11)

𝐵1𝑇 ⊗ 𝐴 1

𝐴 1 ⊗ 𝐵1𝑇

𝑇

(𝐵1 𝑆𝑛 ⊗ 𝐴 1 𝑆𝑛 )

(

𝑇 )

(𝐴 𝑆 ⊗ 𝐵1 𝑆𝑛 )

𝑀 = ( 1 𝑇𝑛

),

( 𝐵2 ⊗ 𝐴 2 )

(

𝑇 )

𝐴 2 ⊗ 𝐵2

𝐵2𝑇 𝑆𝑛 ⊗ 𝐴 2 𝑆𝑛

𝑇

(𝐴 2 𝑆𝑛 ⊗ 𝐵2 𝑆𝑛 )

Therefore, 𝛽1 𝑢1 = 𝑓, 𝛼1 𝑣1 = 𝑀𝑇 𝑢1 , 𝛽𝑖+1 𝑢𝑖+1 = 𝑀𝑣𝑖 − 𝛼𝑖 𝑢𝑖 ,

and 𝛼𝑖+1 𝑣𝑖+1 = 𝑀𝑇 𝑢𝑖+1 − 𝛽𝑖+1 𝑣𝑖 can be written as

𝛼𝑖+1 𝑣𝑖+1

where 𝑈𝑖1 ∈ R𝑝1 ×𝑞1 , 𝑈𝑖2 ∈ R𝑝2 ×𝑞2 , 𝑉𝑖 ∈ R𝑛×𝑛 , and 𝑉𝑖 is a bisymmetric matrix.

And so, the vector form of 𝛽1 𝑢1 = 𝑓, 𝛼1 𝑣1 = 𝑀𝑇 𝑢1 ,

𝛽𝑖+1 𝑢𝑖+1 = 𝑀𝑣𝑖 − 𝛼𝑖 𝑢𝑖 , and 𝛼𝑖+1 𝑣𝑖+1 = 𝑀𝑇 𝑢𝑖+1 − 𝛽𝑖+1 𝑣𝑖 in

Algorithm 5 can be rewritten as matrix form. Then we now

propose the following matrix-form algorithm.

𝑇1 = 𝐴𝑇1 𝑈11 𝐵1𝑇 + 𝐴𝑇2 𝑈12 𝐵2𝑇 ; 𝑉1 = 𝑇1 + 𝑇1𝑇 + 𝑆𝑛 (𝑇1 +

𝑇1𝑇 )𝑆𝑛 ; 𝛼1 = ‖𝑉1 ‖; 𝑉1 = 𝑉1 /𝛼1 .

(ii) Iteration. For 𝑖 = 1, 2, . . ., until {𝑋𝑖 } convergence, do

(a) 𝜉𝑖 = −𝜉𝑖−1 𝛽𝑖 /𝛼𝑖 ; 𝑍𝑖 = 𝑍𝑖−1 + 𝜉𝑖 𝑉𝑖 ;

(b) 𝜃𝑖 = (𝜏𝑖−1 − 𝛽𝑖 𝜃𝑖−1 )/𝛼𝑖 ; 𝑊𝑖 = 𝑊𝑖−1 + 𝜃𝑖 𝑉𝑖 ;

(c) 𝑈𝑖+1,𝑗 = 𝐴 𝑗 𝑉𝑖 𝐵𝑗 − 𝛼𝑖 𝑈𝑖𝑗 , 𝑗 = 1, 2;

2

2

𝛽𝑖+1 = 2√‖𝑈𝑖+1,1 ‖ + ‖𝑈𝑖+1,2 ‖ ;

𝑈𝑖+1,𝑗 = 𝑈𝑖+1,𝑗 /𝛽𝑖+1 , 𝑗 = 1, 2;

(d) 𝜏𝑖 = −𝜏𝑖−1 𝛼𝑖 /𝛽𝑖+1 ;

(e) 𝑇𝑖+1 = 𝐴𝑇1 𝑈𝑖+1,1 𝐵1𝑇 + 𝐴𝑇2 𝑈𝑖+1,2 𝐵2𝑇 ;

𝑇

𝑇

𝑉𝑖+1 = 𝑇𝑖+1 + 𝑇𝑖+1

+ 𝑆𝑛 (𝑇𝑖+1 + 𝑇𝑖+1

)𝑆𝑛 − 𝛽𝑖+1 𝑉𝑖 ;

𝛼𝑖+1 = ‖𝑉𝑖+1 ‖; 𝑉𝑖+1 = 𝑉𝑖+1 /𝛼𝑖+1 ;

𝑖 = 1, 2, . . . ,

(f) 𝛾𝑖 = 𝛽𝑖+1 𝜉𝑖 /(𝛽𝑖+1 𝜃𝑖 − 𝜏𝑖 );

(g) 𝑋𝑖 = 𝑍𝑖 − 𝛾𝑖 𝑊𝑖 .

Remark 8. The stopping criteria on Algorithm 7 can be used

as

𝐶1 − 𝐴 1 𝑋𝑖 𝐵1 + 𝐶2 − 𝐴 2 𝑋𝑖 𝐵2 ≤ 𝜖,

(14)

𝜉𝑖 ≤ 𝜖 or 𝑋𝑖 − 𝑋𝑖−1 ≤ 𝜖,

𝑇

𝐵1𝑇 ⊗ 𝐴 1

𝐴 1 ⊗ 𝐵1𝑇

𝑇

(𝐵1 𝑆𝑛 ⊗ 𝐴 1 𝑆𝑛 )

(

𝑇 )

(𝐴 𝑆 ⊗ 𝐵1 𝑆𝑛 )

= ( 1 𝑇𝑛

) 𝑢 − 𝛽𝑖+1 𝑣𝑖 ,

( 𝐵2 ⊗ 𝐴 2 ) 𝑖+1

(

𝑇 )

𝐴 2 ⊗ 𝐵2

𝑇

𝐵2 𝑆𝑛 ⊗ 𝐴 2 𝑆𝑛

𝑇

(𝐴 2 𝑆𝑛 ⊗ 𝐵2 𝑆𝑛 )

(13)

𝛽1 = 2√‖𝐶1 ‖2 + ‖𝐶2 ‖2 ; 𝑈1𝑗 = 𝐶𝑗 /𝛽1 , 𝑗 = 1, 2;

⊗ 𝐴1

𝐴 1 ⊗ 𝐵1𝑇

𝑇

(𝐵1 𝑆𝑛 ⊗ 𝐴 1 𝑆𝑛 )

(

𝑇 )

(𝐴 𝑆 ⊗ 𝐵1 𝑆𝑛 )

𝛼1 𝑣1 = ( 1 𝑇𝑛

) 𝑢,

( 𝐵2 ⊗ 𝐴 2 ) 1

(

𝑇 )

𝐴 2 ⊗ 𝐵2

𝐵2𝑇 𝑆𝑛 ⊗ 𝐴 2 𝑆𝑛

𝑇

(𝐴 2 𝑆𝑛 ⊗ 𝐵2 𝑆𝑛 )

𝛽𝑖+1 𝑢𝑖+1

𝑣𝑖 = vec (𝑉𝑖 ) ,

𝜏0 = 1; 𝜉0 = −1; 𝜃0 = 0; 𝑍0 = 0 (∈ R𝑛×𝑛 ); 𝑊0 = 𝑍0 ;

𝑇

𝐵1𝑇 ⊗ 𝐴 1

𝐴 1 ⊗ 𝐵1𝑇

𝑇

(𝐵1 𝑆𝑛 ⊗ 𝐴 1 𝑆𝑛 )

(

𝑇 )

(𝐴 𝑆 ⊗ 𝐵1 𝑆𝑛 )

= ( 1 𝑇𝑛

) 𝑣 − 𝛼𝑖 𝑢𝑖 ,

( 𝐵2 ⊗ 𝐴 2 ) 𝑖

(

)

𝐴 2 ⊗ 𝐵2𝑇

𝐵2𝑇 𝑆𝑛 ⊗ 𝐴 2 𝑆𝑛

𝑇

(𝐴 2 𝑆𝑛 ⊗ 𝐵2 𝑆𝑛 )

vec (𝑈𝑖1 )

vec (𝑈𝑖1𝑇 )

( vec (𝑈𝑖1 ) )

(vec (𝑈𝑇 ))

(

𝑖1 )

𝑢𝑖 = (

),

( vec (𝑈𝑖2 ) )

(

)

vec (𝑈𝑖2𝑇 )

vec (𝑈𝑖2 )

𝑇

vec

( (𝑈𝑖2 ))

Algorithm 7. (i) Initialization

vec (𝐶1 )

vec (𝐶1𝑇 )

( vec (𝐶1 ) )

(vec (𝐶𝑇 ))

(

1 )

𝛽1 𝑢1 = (

),

( vec (𝐶2 ) )

(

𝑇 )

vec (𝐶2 )

vec (𝐶2 )

𝑇

(vec (𝐶2 ))

𝐵1𝑇

From (12), we have

where 𝜖 is a small tolerance.

𝑖 = 1, 2, . . . .

(12)

Remark 9. As 𝑉𝑖 , 𝑍𝑖 , and 𝑊𝑖 in Algorithm 7 are bisymmetric

matrices, we can see that 𝑋𝑖 obtained by Algorithm 7 are also

bisymmetric matrices.

Some basic properties of Algorithm 7 are listed in the

following theorems.

4

Journal of Applied Mathematics

Theorem 10. The solution generated by Algorithm 7 is the

bisymmetric minimum Frobenius norm solution of (1).

Theorem 11. The iteration of Algorithm 7 will be terminated

in at most 𝑝1 𝑞1 + 𝑝2 𝑞2 steps in the absence of round-off errors.

Proof. By (8) and (11), we have by simple calculation that

vec (𝑅𝑖1 )

𝑇

vec (𝑅𝑖1

)

( vec (𝑅𝑖1 ) )

(

)

(vec (𝑅𝑇 ))

(

𝑖1 )

𝑟𝑖 = (

),

( vec (𝑅𝑖2 ) )

(

)

(vec (𝑅𝑇 ))

(15)

in which 𝑅𝑖1 = 𝐶1 −𝐴 1 𝑋𝑖 𝐵1 , and 𝑅𝑖2 = 𝐶2 −𝐴 2 𝑋𝑖 𝐵2 , where 𝑋𝑖

is the approximation solution obtained by Algorithm 7 after

the 𝑖th iteration.

By Lemma 1, we have that

= 𝑃 (𝑝2 , 𝑞2 ) vec (𝑅𝑖2 ) ,

(16)

where 𝑃(𝑝1 , 𝑞1 ) ∈ R𝑝1 𝑞1 ×𝑝1 𝑞1 and 𝑃(𝑝2 , 𝑞2 ) ∈ R𝑝2 𝑞2 ×𝑝2 𝑞2

are permutation matrices. For simplicity, we denote 𝑃1 =

𝑃(𝑝1 , 𝑞1 ), 𝑃2 = 𝑃(𝑝2 , 𝑞2 ). Then 𝑃1𝑇 𝑃1 = 𝐼𝑝1 𝑞1 , 𝑃2𝑇 𝑃2 = 𝐼𝑝2 𝑞2 .

Hence

vec (𝑅𝑖1 )

𝑇

)

vec (𝑅𝑖1

( vec (𝑅𝑖1 ) )

(

)

(vec (𝑅𝑇 ))

(

𝑇

𝑖1 )

𝑟𝑖 𝑟𝑗 = (

)

( vec (𝑅𝑖2 ) )

(

)

(vec (𝑅𝑇 ))

𝑖2

vec (𝑅𝑖2 )

𝑇

(vec (𝑅𝑖2 ))

𝑇

vec (𝑅𝑗1 )

𝑇

vec (𝑅𝑗1

)

(vec (𝑅 ))

𝑗1 )

(

(

𝑇 )

(vec (𝑅𝑗1

))

(

)

(vec (𝑅 ))

(

𝑗2 )

(

𝑇 )

(vec (𝑅𝑗2

))

vec (𝑅𝑗2 )

𝑇

(vec (𝑅𝑗2 ))

𝑇

vec (𝑅𝑖1 )

𝑃1 vec (𝑅𝑖1 )

( vec (𝑅𝑖1 ) )

)

(

(𝑃1 vec (𝑅𝑖1 ))

)

=(

( vec (𝑅 ) )

𝑖2 )

(

(𝑃 vec (𝑅 ))

2

𝑖2

vec (𝑅𝑖2 )

(𝑃2 vec (𝑅𝑖2 ))

vec (𝑅𝑗1 )

𝑃1 vec (𝑅𝑗1 )

( vec (𝑅 ) )

𝑗1 )

(

(

)

(𝑃1 vec (𝑅𝑗1 ))

(

)

( vec (𝑅 ) )

(

𝑗2 )

(

)

(𝑃2 vec (𝑅𝑗2 ))

vec (𝑅𝑗2 )

(𝑃2 vec (𝑅𝑗2 ))

𝑇

+ 2(vec (𝑅𝑖2 )) vec (𝑅𝑗2 )

𝑇

+ 2(vec (𝑅𝑖2 )) 𝑃2𝑇 𝑃2 vec (𝑅𝑗2 )

𝑇

𝑇

= 4 ((vec (𝑅𝑖1 )) vec (𝑅𝑗1 ) + (vec (𝑅𝑖2 )) vec (𝑅𝑗2 ))

(17)

𝑖2

𝑇

)

vec (𝑅𝑖2

𝑇

𝑇

vec (𝑅𝑗1 )

vec (𝑅𝑖1 )

) (

= 4(

).

vec (𝑅𝑖2 )

vec (𝑅𝑗2 )

vec (𝑅𝑖2 )

𝑇

vec

( (𝑅𝑖2 ))

𝑇

) = 𝑃 (𝑝1 , 𝑞1 ) vec (𝑅𝑖1 ) ,

vec (𝑅𝑖1

𝑇

= 2(vec (𝑅𝑖1 )) vec (𝑅𝑗1 ) + 2(vec (𝑅𝑖1 )) 𝑃1𝑇 𝑃1 vec (𝑅𝑗1 )

𝑝1 𝑞1 +𝑝2 𝑞2

𝑖1 )

If we let 𝑡𝑖 = ( vec(𝑅

, then we have by (9)

vec(𝑅𝑖2 ) ) ∈ R

that 𝑡0 , 𝑡1 , 𝑡2 , . . . are orthogonal to each other in R𝑝1 𝑞1 +𝑝2 𝑞2 . By

Lemma 2, there exists a positive integer ̂𝑙 ≤ (𝑝1 𝑞1 +𝑝2 𝑞2 ) such

that 𝑡̂𝑙 = 0. Hence

𝑅̂𝑙1 = 𝑅̂𝑙2 = 0,

(18)

that is, the iteration of Algorithm 7 will be terminated in at

most 𝑝1 𝑞1 +𝑝2 𝑞2 steps in the absence of round-off errors.

3. Numerical Examples

In this section, we use some numerical examples to illustrate

the efficiency of our algorithm. The computations are carried

out at PC computer, with software MATLAB 7.0. The machine

precision is around 10−16 .

We stop the iteration when ‖𝑅𝑖1 ‖ + ‖𝑅𝑖2 ‖ ≤ 10−12 .

Example 12. Given matrices 𝐴 1 , 𝐵1 , 𝐶1 , 𝐴 2 , 𝐵2 , and 𝐶2 as

follows:

1

3

4

(

𝐴1 =

2

−1

(−3

−4

1

−3

5

4

−1

−3

2

−1

(

𝐵1 = (

(0

1

3

(0

−19

−55

−74

𝐶1 = (

−36

19

( 55

−2

−1

−3

1

2

1

2

−3

1

1

2

−3

−1

30

47

77

17

−30

−47

−1

3

2

4

1

−3

0

−1

−1

−1

0

1

−1

−1

0

1

3

0

−1

3

−2

1

0

−1

−3

0

11

−8

3

−19

−11

8

19

55

74

36

−19

−55

1

−2

−1

−3

−1

2

−2

3

−1

−1

−2

3

1

−3

1

−2)

,

4

3

−1)

1

−4

1)

2)

),

5

−3

−2)

−30

−47

−77

−17

30

47

41

39

80 )

,

−2

−41

−39)

Journal of Applied Mathematics

5

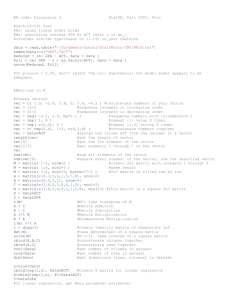

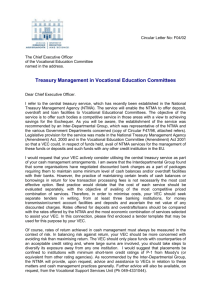

4

2

log10 (∣∣𝑅𝑖1 ∣∣ + ∣∣𝑅𝑖2 ∣∣)

0

−2

−4

−6

−8

−10

−12

−14

2

0

4

6

8

Iterative steps

10

12

14

Cai’s algorithm

Algorithm 7

Figure 1: Convergence curves of log10 (‖𝑅𝑖1 ‖ + ‖𝑅𝑖2 ‖).

3

0

𝐴 2 = (−2

0

1

−2

−3

−4

3

−6

−1

1

1

−1

0

2

−3

(1

𝐵2 = (

(0

−2

1

−1

(

33

17

𝐶2 = ( 27

−17

60

1

−3

−3

3

−2

−4

2

0

−2

−4

1

−1

2

4

0

−5

−2

3

−4

3

4

−2

−4

−3

107

−34

−29

34

78

140

−17

−2

17

138

0

3

3

−3

3

−1

1

1 ),

−1

0

then (1) is consistent, for one can easily verify that it has a

bisymmetric solution:

−2

3

−1)

0)

),

2

−1

1)

1

−1

(1

̂=(2

𝑋

(

1

−1

(1

−33

−17

−27)

17

−60

(19)

𝑋13

0.4755

−0.6822

( 0.6274

=(

( 1.4586

0.2774

−1.2112

(−0.1053

−0.6822

2.6628

0.4046

0.0716

1.0133

0.4001

−1.2112

0.6274

0.4046

−1.0215

−2.2128

−1.6176

1.0133

0.2774

with ‖𝑅13,1 ‖ + ‖𝑅13,2 ‖ = 6.2303𝑒 − 013.

Figure 1 illustrates the performance of our algorithm and

Cai’s algorithm [10]. From Figure 1, we see that our algorithm

is faster than Cai’s algorithm.

−1

3

1

1

1

1

−1

1

1

0

−2

−1

1

1

2

1

−2

1

−2

1

2

1

1

−1

−2

0

1

1

−1

1

1

1

1

3

−1

1

−1

1)

2)

).

1

−1

1)

(20)

We choose the initial matrix 𝑋0 = 0, then using Algorithm 7

and iterating 13 steps, we have the unique bisymmetric

minimum Frobenius norm solution of (1) as follows:

1.4586

0.0716

−2.2128

−1.1548

−2.2128

0.0716

1.4586

0.2774

1.0133

−1.6176

−2.2128

−1.0215

0.4046

0.6274

−1.2112

0.4001

1.0133

0.0716

0.4046

2.6628

−0.6822

−0.1053

−1.2112

0.2774 )

1.4586 )

),

0.6274

−0.6822

0.4755 )

(21)

Example 13. Let

𝐴 1 = hilb (7) ,

𝐴 2 = rand (7, 7) ,

𝐵1 = pascal (7) ,

𝐵2 = rand (7, 7) ,

(22)

6

Journal of Applied Mathematics

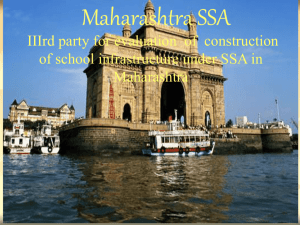

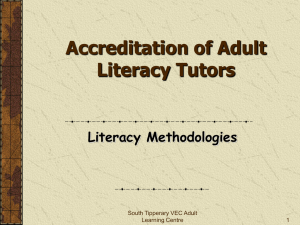

4

2

log10 (∣∣𝑅𝑖1 ∣∣ + ∣∣𝑅𝑖2 ∣∣)

0

−2

−4

−6

−8

−10

−12

−14

20

0

40

60

80

100

Iterative steps

Cai’s algorithm

Algorithm 7

Figure 2: Convergence curves of log10 (‖𝑅𝑖1 ‖ + ‖𝑅𝑖2 ‖).

with hilb, pascal, and rand being functions in Matlab. And

̂ 1 , 𝐶2 = 𝐴 2 𝑋𝐵

̂ 2 , in which 𝑋

̂ is defined in

we let 𝐶1 = 𝐴 1 𝑋𝐵

Example 12. Hence (1) is consistent.

We choose the initial matrix 𝑋0 = 0; Figure 2 illustrates

the performance of our algorithm and Cai’s algorithm [10].

From Figure 2, we see that our algorithm is faster and more

stable than Cai’s algorithm.

Acknowledgments

This research was supported by the Natural Science Foundation of China (nos. 10901056, 11071079, and 11001167),

the Shanghai Science and Technology Commission “Venus”

Project (no. 11QA1402200), and the Natural Science Foundation of Zhejiang Province (no. Y6110043).

References

[1] X. P. Sheng and G. L. Chen, “A finite iterative method for solving

a pair of linear matrix equations (𝐴𝑋𝐵, 𝐶𝑋𝐷) = (𝐸, 𝐹),” Applied

Mathematics and Computation, vol. 189, no. 2, pp. 1350–1358,

2007.

[2] Y. X. Yuan, “Least squares solutions of matrix equation 𝐴𝑋𝐵 =

𝐸; 𝐶𝑋𝐷 = 𝐹,” Journal of East China Shipbuilding Institute, vol.

18, no. 3, pp. 29–31, 2004.

[3] A. Navarra, P. L. Odell, and D. M. Young, “A representation

of the general common solution to the matrix equations

𝐴 1 𝑋𝐵1 = 𝐶1 and 𝐴 2 𝑋𝐵2 = 𝐶2 with applications,” Computers

& Mathematics with Applications, vol. 41, no. 7-8, pp. 929–935,

2001.

[4] S. K. Mitra, “A pair of simultaneous linear matrix equations

and a matrix programming problem,” Linear Algebra and its

Applications, vol. 131, pp. 107–123, 1990.

[5] S. K. Mitra, “The matrix equations 𝐴𝑋 = 𝐶,𝑋𝐵 = 𝐷,” Linear

Algebra and its Applications, vol. 59, pp. 171–181, 1984.

[6] P. Bhimasankaram, “Common solutions to the linear matrix

equations 𝐴𝑋 = 𝐶,𝑋𝐵 = 𝐷 and 𝐹𝑋𝐺 = 𝐻,” Sankhyā A, vol.

38, no. 4, pp. 404–409, 1976.

[7] C. G. Khatri and S. K. Mitra, “Hermitian and nonnegative

definite solutions of linear matrix equations,” SIAM Journal on

Applied Mathematics, vol. 31, no. 4, pp. 579–585, 1976.

[8] Y.-B. Deng, Z.-Z. Bai, and Y.-H. Gao, “Iterative orthogonal

direction methods for Hermitian minimum norm solutions of

two consistent matrix equations,” Numerical Linear Algebra with

Applications, vol. 13, no. 10, pp. 801–823, 2006.

[9] Z.-H. Peng, X.-Y. Hu, and L. Zhang, “An efficient algorithm

for the least-squares reflexive solution of the matrix equation

𝐴 1 𝑋𝐵1 = 𝐶1 ,” Applied Mathematics and Computation, vol. 181,

no. 2, pp. 988–999, 2006.

[10] J. Cai, G. L. Chen, and Q. B. Liu, “An iterative method for

the bisymmetric solutions of the consistent matrix equations

𝐴 1 𝑋𝐵1 = 𝐶1 , 𝐴 2 𝑋𝐵2 = 𝐶2 ,” International Journal of Computer

Mathematics, vol. 87, no. 12, pp. 2706–2715, 2010.

[11] J. Cai and G. L. Chen, “An iterative algorithm for the

least squares bisymmetric solutions of the matrix equations

𝐴 1 𝑋𝐵1 = 𝐶1 ,” Mathematical and Computer Modelling, vol. 50,

no. 7-8, pp. 1237–1244, 2009.

[12] R. A. Horn and C. R. Johnson, Topics in Matrix Analysis,

Cambridge University Press, Cambridge, UK, 1991.

[13] C. C. Paige, “Bidiagonalization of matrices and solutions of the

linear equations,” SIAM Journal on Numerical Analysis, vol. 11,

pp. 197–209, 1974.

Advances in

Operations Research

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Advances in

Decision Sciences

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Mathematical Problems

in Engineering

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Journal of

Algebra

Hindawi Publishing Corporation

http://www.hindawi.com

Probability and Statistics

Volume 2014

The Scientific

World Journal

Hindawi Publishing Corporation

http://www.hindawi.com

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

International Journal of

Differential Equations

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Volume 2014

Submit your manuscripts at

http://www.hindawi.com

International Journal of

Advances in

Combinatorics

Hindawi Publishing Corporation

http://www.hindawi.com

Mathematical Physics

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Journal of

Complex Analysis

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

International

Journal of

Mathematics and

Mathematical

Sciences

Journal of

Hindawi Publishing Corporation

http://www.hindawi.com

Stochastic Analysis

Abstract and

Applied Analysis

Hindawi Publishing Corporation

http://www.hindawi.com

Hindawi Publishing Corporation

http://www.hindawi.com

International Journal of

Mathematics

Volume 2014

Volume 2014

Discrete Dynamics in

Nature and Society

Volume 2014

Volume 2014

Journal of

Journal of

Discrete Mathematics

Journal of

Volume 2014

Hindawi Publishing Corporation

http://www.hindawi.com

Applied Mathematics

Journal of

Function Spaces

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Optimization

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014