

Coupon Conspiracy

Heather Parsons

Dickens Nyabuti

Ben Speidel

Megan Silberhorn

Mark Osegard

Nick Thull

Introduction

Today we are going to explain two coupon-collecting examples that incorporate important statistical elements. We will begin with a well-known example of a coupon-collecting game.

We are going to use the McDonald’s Monopoly Best Chance Game to connect a real-world example with our coupon-collecting examples.

McDonald’s Scam


List of Prizes Allegedly Won

By Fraudulent Means

Dodge Viper

1996 (1) $100,000 Prize, (2) $1,000,000 Prizes

1997 (1) $1,000,000 Prize

1998 $200,000

1999 (3) $1,000,000 Prizes

2000 (3) $1,000,000 Prizes

2001 (3) $1,000,000 Prizes

McDonald’s Monopoly


The Odds of Winning

Instant Win • 1 in 18,197

Collect & Win • 1 in 2,475,041

Best Buy Gift Cards • 1 in 55,000

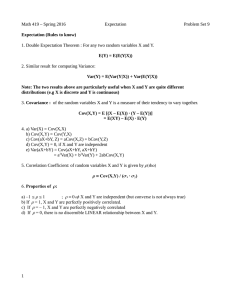

Important Statistical Elements

• Expected Value – gives the average behavior of the random variable X

Denoted as: $E[X] = \sum_i x_i \, P\{X = x_i\}$

• Variance – measures spread, variability, and dispersion of X

Denoted as: $\mathrm{Var}(X) = E[(X - E[X])^2]$

We will use these concepts in more detail in our fair coupon-collecting examples later in our discussion.

Review

• Sample space – the set of all possible outcomes of a random experiment (S)

• Event – any subset of the sample space

• Probability – a set function defined on the power set of S

So if $A \subset S$, then $P(A)$ = probability of A

Review Continued

Bernoulli Random Variable

• Toggles between 0 and 1 values

• Where 1 represents a success and 0 a failure

• Success probability = p

• Probability of Failure = (1-p)

Bernoulli Trials

• Experiments having two possible outcomes

• Independent sequences of Bernoulli RV’s with the same success probability

E[X] = p

Var(X) = p(1-p)

Review Continued

Geometric Random Variables

Performing Bernoulli trials:

p = success probability

X = # of trials until 1st success

Assume $0 < p \le 1$

$E[X] = \frac{1}{p}$

$\mathrm{Var}(X) = \frac{1 - p}{p^2}$
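As a quick numerical check of the two geometric formulas above, the sketch below sums the probability mass function $P\{X = k\} = (1-p)^{k-1} p$ directly. The function name `geometric_moments`, the truncation at 10,000 terms, and the choice p = 0.25 are illustrative assumptions, not part of the original slides.

```python
def geometric_moments(p, terms=10_000):
    """Approximate E[X] and Var(X) for a geometric RV by truncating the series.

    Uses P{X = k} = (1 - p)**(k - 1) * p for k = 1, 2, ..., terms.
    """
    mean = 0.0
    second_moment = 0.0
    for k in range(1, terms + 1):
        prob = (1 - p) ** (k - 1) * p
        mean += k * prob
        second_moment += k * k * prob
    # Var(X) = E[X^2] - (E[X])^2
    return mean, second_moment - mean ** 2


p = 0.25  # hypothetical success probability
geo_mean, geo_var = geometric_moments(p)
print(geo_mean, 1 / p)            # series value next to 1/p
print(geo_var, (1 - p) / p ** 2)  # series value next to (1 - p)/p^2
```

For p = 0.25 the truncated sums should agree with 1/p = 4 and (1 − p)/p² = 12 to within floating-point error.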

Expectations of Sums of Random

Variables

• For single-valued R.V.’s:

$E[X] = \sum_i x_i \, P\{X = x_i\}$

• Suppose $g : \mathbb{R}^2 \to \mathbb{R}$ (i.e. $g = g(x, y)$)

For multiple R.V.’s:

$E[g(X, Y)] = \sum_x \sum_y g(x, y) \, P\{X = x, Y = y\}$

Properties of Sums of Random

Variables

– Expectations of random variables can be summed.

– Recall:

$E[g(X, Y)] = \sum_x \sum_y g(x, y) \, P\{X = x, Y = y\}$

– Corollary:

$E[X + Y] = E[X] + E[Y]$

– More generally:

$E\left[\sum_{i=1}^{n} X_i\right] = \sum_{i=1}^{n} E[X_i]$

Sums of Random Variables

- A sum of random variables can be kept in its own random variable, i.e.

$Y = \sum_{i=0}^{\infty} X_i$

where Y is a R.V. and $X_i$ is the i-th instance of the random variable X

Variance & Covariance of

Random Variables

• Variance: a measure of spread and variability

– $\mathrm{var}(X) = E[(X - E[X])^2]$

– $\mathrm{var}(X + Y) = \mathrm{var}(X) + \mathrm{var}(Y) + 2\,\mathrm{cov}(X, Y)$

• Facts:

i) $\mathrm{var}(X) = E[X^2] - (E[X])^2$

ii) $\mathrm{var}(aX + b) = a^2\,\mathrm{var}(X)$

• Covariance

– Measure of association between two r.v.’s

$\mathrm{cov}(X, Y) = E[(X - E[X])(Y - E[Y])]$

Properties of Covariance

Where X, Y, Z are random variables and c is a constant:

cov (X,X) = var (X)

cov (X,Y) = E[XY] – E [X]E[Y]

cov (X,Y) = cov (Y,X)

cov (cX,Y) = c * cov (X,Y)

cov (X,Y + Z) = cov (X,Y) + cov (X,Z)
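The covariance identities listed above can be checked exactly on a small example. The sketch below uses a made-up joint distribution for (X, Y); the table `pmf` and the helper names `expect` and `cov` are assumptions chosen only for illustration.

```python
from fractions import Fraction

# Hypothetical joint pmf of (X, Y); the four probabilities sum to 1.
pmf = {
    (0, 0): Fraction(1, 4),
    (0, 1): Fraction(1, 4),
    (1, 0): Fraction(1, 8),
    (1, 1): Fraction(3, 8),
}


def expect(g):
    """E[g(X, Y)] = sum over (x, y) of g(x, y) * P{X = x, Y = y}."""
    return sum(g(x, y) * p for (x, y), p in pmf.items())


def cov(f, g):
    """cov(f, g) = E[(f - E[f]) * (g - E[g])], straight from the definition."""
    ef, eg = expect(f), expect(g)
    return expect(lambda x, y: (f(x, y) - ef) * (g(x, y) - eg))


X = lambda x, y: x
Y = lambda x, y: y
S = lambda x, y: x + y  # the sum X + Y

var_x, var_y, cov_xy = cov(X, X), cov(Y, Y), cov(X, Y)

# cov(X, Y) = E[XY] - E[X]E[Y]
print(cov_xy == expect(lambda x, y: x * y) - expect(X) * expect(Y))
# cov(X, Y) = cov(Y, X)
print(cov_xy == cov(Y, X))
# var(X + Y) = var(X) + var(Y) + 2 cov(X, Y)
print(cov(S, S) == var_x + var_y + 2 * cov_xy)
```

Using `Fraction` keeps the arithmetic exact, so each comparison tests the identity itself rather than a floating-point approximation of it.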

McDonald’s

Coupon Conspiracy I


Suppose there are m different types of coupons, each equally likely.

Let X = number of coupons one needs to collect in order to get the entire set of coupons.

Problem:

Find the expected value and variance of X, i.e. $E[X]$ and $\mathrm{Var}(X)$.

Solution:

Idea: Break X up into a sum of simpler random variables

Let $X_i$ = number of coupons needed after i distinct types have been collected until a new type has been obtained.

Note:

$X = \sum_{i=0}^{m-1} X_i$

The $X_i$ are independent, so

$E[X] = \sum_{i=0}^{m-1} E[X_i]$

$\mathrm{Var}(X) = \sum_{i=0}^{m-1} \mathrm{Var}(X_i)$

Observe:

If we already have i distinct types of coupons, then

$P(\text{next is new}) = \frac{m - i}{m}$

Regarding each coupon selection as a trial,

$X_i$ = number of trials until the next success,

so $X_i$ is a geometric random variable with success probability $p = \frac{m - i}{m}$.

We know that

$E[X_i] = \frac{1}{p} = \frac{m}{m - i}$

$\mathrm{Var}(X_i) = \frac{1 - p}{p^2} = \frac{i/m}{(m - i)^2 / m^2} = \frac{mi}{(m - i)^2}$

so

$E[X] = \sum_{i=0}^{m-1} E[X_i] = \sum_{i=0}^{m-1} \frac{m}{m - i}$

$\mathrm{Var}(X) = \sum_{i=0}^{m-1} \mathrm{Var}(X_i) = \sum_{i=0}^{m-1} \frac{mi}{(m - i)^2}$

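so

$E[X] = \sum_{i=0}^{m-1} \frac{m}{m - i} = m \sum_{i=0}^{m-1} \frac{1}{m - i}$

$= m \left( \frac{1}{m} + \frac{1}{m - 1} + \frac{1}{m - 2} + \cdots + \frac{1}{2} + \frac{1}{1} \right)$

reversing the expression above,

$= m \left( 1 + \frac{1}{2} + \frac{1}{3} + \cdots + \frac{1}{m} \right)$

$= m \sum_{i=1}^{m} \frac{1}{i}$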

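Euler’s Constant

$\gamma = \lim_{m \to \infty} \left( \sum_{i=1}^{m} \frac{1}{i} - \log m \right)$

so, for large m,

$\sum_{i=1}^{m} \frac{1}{i} \approx \log m$

$\therefore \; E[X] = m \sum_{i=1}^{m} \frac{1}{i} \approx m \log m$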
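To see how the exact sum compares with the $m \log m$ approximation, the sketch below evaluates $m \sum_{i=1}^{m} 1/i$ with exact fractions. The function name `expected_draws` and the sample values of m are illustrative assumptions; the constant 0.5772 is Euler's constant rounded to four places.

```python
from fractions import Fraction
import math


def expected_draws(m):
    """Exact E[X] = m * (1 + 1/2 + ... + 1/m) for m equally likely coupon types."""
    return m * sum(Fraction(1, i) for i in range(1, m + 1))


for m in (10, 100, 1000):
    exact = float(expected_draws(m))
    leading = m * math.log(m)                 # the m log m approximation
    with_gamma = m * (math.log(m) + 0.5772)   # keeping Euler's constant as well
    print(m, round(exact, 2), round(leading, 2), round(with_gamma, 2))
```

For m = 10 the exact value is 7381/252, about 29.29, while $m \log m$ is about 23.03. The gap is roughly $\gamma m$, which is why the approximation is best read as a statement about growth for large m rather than a precise estimate for small m.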

So,

$\mathrm{Var}(X) = \sum_{i=0}^{m-1} \frac{mi}{(m - i)^2} = \sum_{i=1}^{m-1} \frac{mi}{(m - i)^2} = m \sum_{i=1}^{m-1} \frac{i}{(m - i)^2}$

(the i = 0 term is zero). To simplify this, we will use a trick in the next slide.

Trick: add m to and subtract m from the numerator of the summand.

$\frac{i}{(m - i)^2} = \frac{i - m + m}{(m - i)^2} = \frac{m - (m - i)}{(m - i)^2} = \frac{m}{(m - i)^2} - \frac{m - i}{(m - i)^2}$

$\therefore \; \frac{i}{(m - i)^2} = \frac{m}{(m - i)^2} - \frac{1}{m - i}$

Applying the trick from the previous slide to

$m \sum_{i=1}^{m-1} \frac{i}{(m - i)^2}$

we get

$m \sum_{i=1}^{m-1} \frac{m}{(m - i)^2} - m \sum_{i=1}^{m-1} \frac{1}{m - i}$

pulling out the m in the first sum,

$= m^2 \sum_{i=1}^{m-1} \frac{1}{(m - i)^2} - m \sum_{i=1}^{m-1} \frac{1}{m - i}$

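Expanding the sums from the previous slide,

$= m^2 \left( \frac{1}{(m-1)^2} + \frac{1}{(m-2)^2} + \cdots + \frac{1}{1^2} \right) - m \left( \frac{1}{m-1} + \frac{1}{m-2} + \cdots + \frac{1}{1} \right)$

reversing the order of the sums above,

$= m^2 \left( \frac{1}{1^2} + \frac{1}{2^2} + \cdots + \frac{1}{(m-1)^2} \right) - m \left( \frac{1}{1} + \frac{1}{2} + \cdots + \frac{1}{m-1} \right)$

$= m^2 \sum_{i=1}^{m-1} \frac{1}{i^2} - m \sum_{i=1}^{m-1} \frac{1}{i}$

Using the Basel series, we will simplify this in the next slide.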

Explaining the Basel Series

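Basel Series

$\frac{1}{1^2} + \frac{1}{2^2} + \frac{1}{3^2} + \cdots = \frac{\pi^2}{6}$

For large m, the partial sums of the Basel series converge to $\frac{\pi^2}{6}$, so

$\mathrm{Var}(X) \approx \frac{\pi^2}{6} m^2 - m \log m \approx \frac{\pi^2}{6} m^2$

That is, the variance grows on the order of $m^2$.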
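The algebra in this derivation can be checked by computing the variance two ways: directly from $\sum_{i=0}^{m-1} \frac{mi}{(m-i)^2}$ and from the rearranged form $m^2 \sum_{i=1}^{m-1} \frac{1}{i^2} - m \sum_{i=1}^{m-1} \frac{1}{i}$. The function names below are illustrative assumptions.

```python
from fractions import Fraction
import math


def variance_direct(m):
    """Var(X) as the sum of the geometric variances m*i / (m - i)^2, i = 0..m-1."""
    return sum(Fraction(m * i, (m - i) ** 2) for i in range(m))


def variance_rearranged(m):
    """Var(X) after the add-and-subtract-m trick and reversing the sums."""
    sum_sq = sum(Fraction(1, i * i) for i in range(1, m))
    sum_lin = sum(Fraction(1, i) for i in range(1, m))
    return m * m * sum_sq - m * sum_lin


for m in (10, 100):
    exact = variance_direct(m)
    print(m, exact == variance_rearranged(m),
          round(float(exact), 2), round(math.pi ** 2 / 6 * m * m, 2))
```

For m = 10 both forms give about 125.69, compared with $\frac{\pi^2}{6} m^2 \approx 164.49$; the large-m approximation gets the order of growth right but overshoots noticeably for small m.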

In Conclusion

So the variance is:

$\mathrm{Var}(X) \approx \frac{\pi^2}{6} m^2$ (on the order of $m^2$)

and the expected value is:

$E[X] \approx m \log m$

These approximations hold when m, the number of different types of coupons, is large.

McDonald’s

Coupon Conspiracy II


The 2nd Coupon Conspiracy

Given:

Sample of n coupons

m possible types

X := number of distinct types of coupons

Find:

E[X] (expected value of X)

Var(X) (variance of X)

Redefining X

Decompose X into a sum of Bernoulli indicators

Let $X_i = 1$ if type i is present, $0$ otherwise, for $1 \le i \le m$

Note: $X = \sum_{i=1}^{m} X_i$

Note: $E[X] = \sum_{i=1}^{m} E[X_i]$

Remark: $X_1, X_2, \ldots, X_m$ are dependent (knowing one coupon type occurred lowers the opportunity for the other coupon types to occur)

Redefining Var(X)

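Recall: $\mathrm{Var}(X) = \mathrm{Cov}(X, X)$

$\mathrm{Var}(X) = \mathrm{Var}\left( \sum_{i=1}^{m} X_i \right) = \mathrm{Cov}\left( \sum_{i=1}^{m} X_i, \; \sum_{j=1}^{m} X_j \right)$

$= \sum_{i=1}^{m} \mathrm{Cov}\left( X_i, \; \sum_{j=1}^{m} X_j \right)$

$= \sum_{i=1}^{m} \sum_{j=1}^{m} \mathrm{Cov}(X_i, X_j)$ (by covariance bilinearity)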

Summary thus far

$E[X] = \sum_{i=1}^{m} E[X_i]$

$\mathrm{Var}(X) = \sum_{i=1}^{m} \sum_{j=1}^{m} \mathrm{Cov}(X_i, X_j)$

Success Probability

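Recall: $X_i$ is Bernoulli, with success probability p

$p = P\{X_i = 1\} = 1 - P\{X_i = 0\}$

Note: $P\{X_i = 0\} = \prod_{j=1}^{n} P\{\text{type } i \text{ does not occur on the } j\text{th trial}\} = \left( \frac{m-1}{m} \right)^n$

$p = 1 - \prod_{j=1}^{n} P\{\text{type } i \text{ does not occur on the } j\text{th trial}\} = 1 - \left( \frac{m-1}{m} \right)^n$

Thus

$E[X_i] = 1 - \left( \frac{m-1}{m} \right)^n$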

Recap

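$E[X_i] = 1 - \left( \frac{m-1}{m} \right)^n$

$\mathrm{Var}[X_i] = \left( \frac{m-1}{m} \right)^n \left[ 1 - \left( \frac{m-1}{m} \right)^n \right]$

So,

$E[X] = \sum_{i=1}^{m} E[X_i] = \sum_{i=1}^{m} \left[ 1 - \left( \frac{m-1}{m} \right)^n \right] = m \left[ 1 - \left( \frac{m-1}{m} \right)^n \right]$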
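For small m and n, the closed form $E[X] = m \left[ 1 - \left( \frac{m-1}{m} \right)^n \right]$ can be verified by enumerating every possible sample of n coupons. The sketch below does this; the function names and the test sizes m = 3, n = 4 are illustrative assumptions.

```python
from fractions import Fraction
from itertools import product


def expected_distinct(m, n):
    """Closed form: E[X] = m * (1 - ((m - 1)/m)^n)."""
    return m * (1 - Fraction(m - 1, m) ** n)


def expected_distinct_bruteforce(m, n):
    """Average number of distinct types over all m^n equally likely samples."""
    total = sum(len(set(sample)) for sample in product(range(m), repeat=n))
    return Fraction(total, m ** n)


print(expected_distinct(3, 4), expected_distinct_bruteforce(3, 4))  # both 65/27
```

The enumeration grows as $m^n$, so it is only practical as a sanity check on small cases.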

Var(X)

To calculate $\mathrm{Var}(X)$, we need to find $\mathrm{Cov}(X_i, X_j)$:

$\mathrm{Cov}(X_i, X_j) = E[X_i X_j] - E[X_i] E[X_j]$

In particular when $i \ne j$

E[X_i X_j]

Let $A_k$ = event that type k is present

$E[X_i X_j] = P\{X_i X_j = 1\} = P(A_i \cap A_j) = 1 - P\left( (A_i \cap A_j)^c \right)$

By De Morgan, $(A_i \cap A_j)^c = A_i^c \cup A_j^c$, so

$= 1 - P(A_i^c \cup A_j^c)$

Using the inclusion/exclusion rule $\{ P(A \cup B) = P(A) + P(B) - P(A \cap B) \}$:

$= 1 - \left[ P(A_i^c) + P(A_j^c) - P(A_i^c \cap A_j^c) \right]$

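E[X_i X_j] cont.

Recall:

$P(A_i^c) = \left( \frac{m-1}{m} \right)^n, \quad P(A_j^c) = \left( \frac{m-1}{m} \right)^n, \quad P(A_i^c \cap A_j^c) = \left( \frac{m-2}{m} \right)^n$

$E[X_i X_j] = 1 - \left[ P(A_i^c) + P(A_j^c) - P(A_i^c \cap A_j^c) \right]$

$= 1 - \left[ \left( \frac{m-1}{m} \right)^n + \left( \frac{m-1}{m} \right)^n - \left( \frac{m-2}{m} \right)^n \right]$

$= 1 - 2 \left( \frac{m-1}{m} \right)^n + \left( \frac{m-2}{m} \right)^n$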

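Cov(X_i, X_j)

Recall:

$E[X_i] = E[X_j] = 1 - \left( \frac{m-1}{m} \right)^n$

$E[X_i X_j] = 1 - 2 \left( \frac{m-1}{m} \right)^n + \left( \frac{m-2}{m} \right)^n$

$\mathrm{Cov}(X_i, X_j) = 1 - 2 \left( \frac{m-1}{m} \right)^n + \left( \frac{m-2}{m} \right)^n - \left[ 1 - \left( \frac{m-1}{m} \right)^n \right]^2$

$= 1 - 2 \left( \frac{m-1}{m} \right)^n + \left( \frac{m-2}{m} \right)^n - 1 + 2 \left( \frac{m-1}{m} \right)^n - \left( \frac{m-1}{m} \right)^{2n}$

$= \left( \frac{m-2}{m} \right)^n - \left( \frac{m-1}{m} \right)^{2n}$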

Back to the Var(X)

Recall: $\mathrm{Var}(X_i) = \mathrm{Cov}(X_i, X_i)$

$\mathrm{Var}(X) = \sum_{i=1}^{m} \sum_{j=1}^{m} \mathrm{Cov}(X_i, X_j)$

Cov(X1, X1),

Cov(X1, X2), Cov(X2, X2),

Cov(X1, X3), Cov(X2, X3), Cov(X3, X3),

Cov(X1, X4), Cov(X2, X4), Cov(X3, X4), Cov(X4, X4),

...

Cov(X1, Xm), Cov(X2, Xm), Cov(X3, Xm), Cov(X4, Xm), ..., Cov(Xm, Xm)

$= \sum_{i=1}^{m} \mathrm{Cov}(X_i, X_i) + 2 \sum_{i=1}^{m} \sum_{j < i} \mathrm{Cov}(X_i, X_j)$

Var(X) in terms of Covariance

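$\mathrm{Var}(X) = \sum_{i=1}^{m} \mathrm{Cov}(X_i, X_i) + 2 \sum_{i=1}^{m} \sum_{j < i} \mathrm{Cov}(X_i, X_j)$

Simplifying the summation: there are $\frac{m(m-1)}{2}$ pairs with $j < i$, and every such pair has the same covariance, so we get:

$= \sum_{i=1}^{m} \mathrm{Cov}(X_i, X_i) + m(m-1) \, \mathrm{Cov}(X_i, X_j), \quad i \ne j$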

Var(X) equals

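$\mathrm{Var}(X) = \sum_{i=1}^{m} \mathrm{Cov}(X_i, X_i) + m(m-1) \, \mathrm{Cov}(X_i, X_j), \quad i \ne j$

Recall: $\mathrm{Var}(X_i) = \left( \frac{m-1}{m} \right)^n \left[ 1 - \left( \frac{m-1}{m} \right)^n \right]$

$= m \left( \frac{m-1}{m} \right)^n \left[ 1 - \left( \frac{m-1}{m} \right)^n \right] + m(m-1) \left[ \left( \frac{m-2}{m} \right)^n - \left( \frac{m-1}{m} \right)^{2n} \right]$

Simplified,

$\mathrm{Var}(X) = m \left( \frac{m-1}{m} \right)^n + m(m-1) \left( \frac{m-2}{m} \right)^n - m^2 \left( \frac{m-1}{m} \right)^{2n}$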
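The simplified variance formula can be checked against a brute-force enumeration in the same spirit as the expected value. In the sketch below the function names and the sizes m = 3, n = 4 are illustrative assumptions.

```python
from fractions import Fraction
from itertools import product


def variance_distinct(m, n):
    """Closed form: m*a + m*(m-1)*b - m^2*a^2, with a = ((m-1)/m)^n, b = ((m-2)/m)^n."""
    a = Fraction(m - 1, m) ** n
    b = Fraction(m - 2, m) ** n
    return m * a + m * (m - 1) * b - m * m * a ** 2


def variance_distinct_bruteforce(m, n):
    """Var(X) = E[X^2] - (E[X])^2 over all m^n equally likely samples."""
    counts = [len(set(sample)) for sample in product(range(m), repeat=n)]
    total = m ** n
    mean = Fraction(sum(counts), total)
    second_moment = Fraction(sum(c * c for c in counts), total)
    return second_moment - mean ** 2


print(variance_distinct(3, 4), variance_distinct_bruteforce(3, 4))  # both 230/729
```

Agreement on small cases supports the covariance term entering with weight $m(m-1)$, which is what the pair count $\frac{m(m-1)}{2}$ doubled gives.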

In Case you Forgot:

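Independent Case:

$E[X] \approx m \log m$

$\mathrm{Var}(X) \approx \frac{\pi^2}{6} m^2$ (on the order of $m^2$)

Dependent Case:

$E[X] = m \left[ 1 - \left( \frac{m-1}{m} \right)^n \right]$

$\mathrm{Var}(X) = m \left( \frac{m-1}{m} \right)^n + m(m-1) \left( \frac{m-2}{m} \right)^n - m^2 \left( \frac{m-1}{m} \right)^{2n}$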

Analytic vs. Simulation

Approaches

For a simulation we will consider a fair set of the McDonald’s coupons from Problem I.

We will analytically find E[X] and Var(X) and compare these two values with the results from our computer simulation.

Analytic Approach

Given m = 10, compute

$E[X] = m \sum_{i=1}^{m} \frac{1}{i} \approx m \log m$

$\mathrm{Var}(X) \approx \frac{\pi^2}{6} m^2 - m \log m \approx \frac{\pi^2}{6} m^2$
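A simulation of Problem I with m = 10 might look like the sketch below. It is written in Python as an assumption; the slides do not show the original simulation code, and the function names, the seed, and the number of trials are all illustrative choices.

```python
import random
import statistics


def draws_to_complete(m, rng):
    """Draw equally likely coupon types until all m types have been seen."""
    seen = set()
    draws = 0
    while len(seen) < m:
        seen.add(rng.randrange(m))  # each of the m types is equally likely
        draws += 1
    return draws


def simulate(m, trials, seed=0):
    """Return the sample mean and sample variance of the number of draws."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    samples = [draws_to_complete(m, rng) for _ in range(trials)]
    return statistics.mean(samples), statistics.variance(samples)


sim_mean, sim_var = simulate(10, 20_000)
print(sim_mean, sim_var)
```

For comparison, the exact analytic values for m = 10 are $E[X] = 10 \sum_{i=1}^{10} \frac{1}{i} \approx 29.29$ and $\mathrm{Var}(X) = \sum_{i=0}^{9} \frac{10\,i}{(10 - i)^2} \approx 125.69$; the simulated figures should land close to these, with the variance estimate fluctuating more than the mean.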

• References

Sheldon Ross, “Probability Models for Computer Science”, Academic Press, 2002

2-3 pages from C++ books

McDonald’s

Dr. Deckelman


Any Questions???