Serverless Network File Systems


THOMAS E. ANDERSON, MICHAEL D. DAHLIN, JEANNA M. NEEFE, DAVID A. PATTERSON, DREW S. ROSELLI, and RANDOLPH Y. WANG

University of California at Berkeley

We propose a new paradigm for network file system design: serverless network file systems. While traditional network file systems rely on a central server machine, a serverless system utilizes workstations cooperating as peers to provide all file system services. Any machine in the system can store, cache, or control any block of data. Our approach uses this location independence, in combination with fast local area networks, to provide better performance and scalability than traditional file systems. Furthermore, because any machine in the system can assume the responsibilities of a failed component, our serverless design also provides high availability via redundant data storage. To demonstrate our approach, we have implemented a prototype serverless network file system called xFS. Preliminary performance measurements suggest that our architecture achieves its goal of scalability. For instance, in a 32-node xFS system with 32 active clients, each client receives nearly as much read or write throughput as it would see if it were the only active client.

Additional Key Words and Phrases: Log-based striping, log cleaning, logging, log structured, RAID, redundant data storage, scalable performance

This work is supported in part by the Advanced Research Projects Agency (N00600-93-C-2481, F30602-95-C-0014), the National Science Foundation (CDA 0401156), California MICRO, the AT&T Foundation, Digital Equipment Corporation, Exabyte, Hewlett-Packard, IBM, Siemens Corporation, Sun Microsystems, and Xerox Corporation. Anderson was also supported by a National Science Foundation Presidential Faculty Fellowship, Neefe by a National Science Foundation Graduate Research Fellowship, and Roselli by a Department of Education GAANN fellowship.

Authors’ address: Computer Science Division, University of California at Berkeley, 387 Soda Hall, Berkeley, CA 94720-1776; email: {tea; dahlin; neefe; patterson; drew; rywang}@cs.berkeley.edu. Additional information about xFS can be found at http://now.CS.Berkeley.edu/Xfs/xfs.html.

Permission to make digital/hard copy of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage, the copyright notice, the title of the publication, and its date appear, and notice is given that copying is by permission of ACM, Inc. To copy otherwise, to republish, to post on servers, or to redistribute to lists, requires prior specific permission and/or a fee.

© 1996 ACM 0734-2071/96/0200-0041 $03.50


1. INTRODUCTION

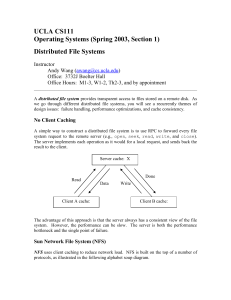

A serverless network file system distributes storage, cache, and control over cooperating workstations. This approach contrasts with traditional file systems such as Netware [Major et al. 1994], NFS [Sandberg et al. 1985], Andrew [Howard et al. 1988], and Sprite [Nelson et al. 1988] where a central server machine stores all data and satisfies all client cache misses. Such a central server is both a performance and reliability bottleneck. A serverless system, on the other hand, distributes control processing and data storage to achieve scalable high performance, migrates the responsibilities of failed components to the remaining machines to provide high availability, and scales gracefully to simplify system management.

Three factors motivate our work on serverless network file systems: the opportunity provided by fast switched LANs, the expanding demands of users, and the fundamental limitations of central server systems.

The recent introduction of switched local area networks such as ATM or Myrinet [Boden et al. 1995] enables serverlessness by providing aggregate bandwidth that scales with the number of machines on the network. In contrast, shared-media networks such as Ethernet or FDDI allow only one client or server to transmit at a time. In addition, the move toward low-latency network interfaces [Basu et al. 1995; von Eicken 1992] enables closer cooperation between machines than has been possible in the past. The result is that a LAN can be used as an I/O backplane, harnessing physically distributed processors, memory, and disks into a single system.

Next-generation networks not only enable serverlessness; they require it by allowing applications to place increasing demands on the file system. The I/O demands of traditional applications have been increasing over time [Baker et al. 1991]; new applications enabled by fast networks, such as multimedia, process migration, and parallel processing, will further pressure file systems to provide increased performance. For instance, continuous-media workloads will increase file system demands; even a few workstations simultaneously running video applications would swamp a traditional central server [Rashid 1994]. Coordinated Networks of Workstations (NOWs) allow users to migrate jobs among many machines and permit networked workstations to run parallel jobs [Anderson et al. 1995; Douglas and Ousterhout 1991; Litzkow and Solomon 1992]. By increasing the peak processing power available to users, NOWs increase peak demands on the file system [Cypher et al. 1993].

Unfortunately, current centralized file system designs fundamentally limit performance and availability since all read misses and all disk writes go through the central server. To address such performance limitations, users resort to costly schemes to try to scale these fundamentally unscalable file systems. Some installations rely on specialized server machines configured with multiple processors, I/O channels, and I/O processors. Alas, such machines cost significantly more than desktop workstations for a given amount of computing or I/O capacity. Many installations also attempt to achieve scalability by distributing a file system among multiple servers by partitioning the directory tree across multiple mount points. This approach only moderately improves scalability because its coarse distribution often results in hot spots when the partitioning allocates heavily used files and directory trees to a single server [Wolf 1989]. It is also expensive, since it requires the (human) system manager to effectively become part of the file system, moving users, volumes, and disks among servers to balance load. Finally, Andrew [Howard et al. 1988] attempts to improve scalability by caching data on client disks. Although this made sense on an Ethernet, on today’s fast LANs fetching data from local disk can be an order of magnitude slower than from server memory or remote striped disk.

ACM Transactions on Computer Systems, Vol. 14, No. 1, February 1996.

Similarly, a central server represents a single point of failure, requiring server replication [Birrell et al. 1993; Kazar 1989; Kistler and Satyanarayanan 1992; Liskov et al. 1991; Popek et al. 1990; Walker et al. 1983] for high availability. Replication increases the cost and complexity of central servers and can increase latency on writes since the system must replicate data at multiple servers.

In contrast to central server designs, our objective is to build a truly distributed network file system, one with no central bottleneck. We have designed and implemented xFS, a prototype serverless network file system, to investigate this goal. xFS illustrates serverless design principles in three ways. First, xFS dynamically distributes control processing across the system on a per-file granularity by utilizing a new serverless management scheme. Second, xFS distributes its data storage across storage server disks by implementing a software RAID [Chen et al. 1994; Patterson et al. 1988] using log-based network striping similar to Zebra’s [Hartman and Ousterhout 1995]. Finally, xFS eliminates central server caching by taking advantage of cooperative caching [Dahlin et al. 1994b; Leff et al. 1991] to harvest portions of client memory as a large, global file cache.

This article makes two sets of contributions. First, xFS synthesizes a number of recent innovations that, taken together, provide a basis for serverless file system design. xFS relies on previous work in areas such as scalable cache consistency (DASH [Lenoski et al. 1990] and Alewife [Chaiken et al. 1991]), cooperative caching, disk striping (RAID and Zebra), and log-structured file systems (Sprite LFS [Rosenblum and Ousterhout 1992] and BSD LFS [Seltzer et al. 1993]). Second, in addition to borrowing techniques developed in other projects, we have refined them to work well in our serverless system. For instance, we have transformed DASH’s scalable cache consistency approach into a more general, distributed control system that is also fault tolerant. We have also improved upon Zebra to eliminate bottlenecks in its design by using distributed management, parallel cleaning, and subsets of storage servers called stripe groups. Finally, we have actually implemented cooperative caching, building on prior simulation results.

The primary limitation of our serverless approach is that it is only appropriate in a restricted environment: among machines that communicate over a fast network and that trust one another’s kernels to enforce security. However, we expect such environments to be common in the future. For instance, NOW systems already provide high-speed networking and trust to run parallel and distributed jobs. Similarly, xFS could be used within a group or department where fast LANs connect machines and where uniform system administration and physical building security allow machines to trust one another. A file system based on serverless principles would also be appropriate for “scalable server” architectures currently being researched [Kubiatowicz and Agarwal 1993; Kuskin et al. 1994]. Untrusted clients can also benefit from the scalable, reliable, and cost-effective file service provided by a core of xFS machines via a more restrictive protocol such as NFS.

We have built a prototype that demonstrates most of xFS’ key features, including distributed management, cooperative caching, and network disk striping with parity and multiple groups. As Section 7 details, however, several pieces of implementation remain to be done; most notably, we must still implement the cleaner and much of the recovery and dynamic reconfiguration code. The results in this article should thus be viewed as evidence that the serverless approach is promising, not as “proof” that it will succeed. We present both simulation results of the xFS design and a few preliminary measurements of the prototype. Because the prototype is largely untuned, a single xFS client’s performance is slightly worse than that of a single NFS client; we are currently working to improve single-client performance to allow one xFS client to significantly outperform one NFS client by reading from or writing to the network-striped disks at its full network bandwidth. Nonetheless, the prototype does demonstrate remarkable scalability. For instance, in a 32-node xFS system with 32 clients, each client receives nearly as much read or write bandwidth as it would see if it were the only active client.

The rest of this article discusses these issues in more detail. Section 2 provides an overview of recent research results exploited in the xFS design. Section 3 explains how xFS distributes its data, metadata, and control. Section 4 describes xFS’ distributed log cleaner. Section 5 outlines xFS’ approach to high availability, and Section 6 addresses the issue of security and describes how xFS could be used in a mixed security environment. We describe our prototype in Section 7, including initial performance measurements. Section 8 describes related work, and Section 9 summarizes our conclusions.

2. BACKGROUND

xFS builds upon several recent and ongoing research efforts to achieve our goal of distributing all aspects of file service across the network. xFS’ network disk storage exploits the high performance and availability of Redundant Arrays of Inexpensive Disks (RAIDs). We organize this storage in a log structure as in the Sprite and BSD Log-structured File Systems (LFS), largely because Zebra demonstrated how to exploit the synergy between RAID and LFS to provide high-performance, reliable writes to disks that are distributed across a network. To distribute control across the network, xFS draws inspiration from several multiprocessor cache consistency designs. Finally, since xFS has evolved from our initial proposal [Wang and Anderson 1993], we describe the relationship of the design presented here to previous versions of the xFS design.

2.1 RAID

xFS exploits RAID-style disk striping to provide high performance and highly available disk storage [Chen et al. 1994; Patterson et al. 1988]. A RAID partitions a stripe of data into N – 1 data blocks and a parity block (the exclusive-OR of the corresponding bits of the data blocks). It stores each data and parity block on a different disk. The parallelism of a RAID’s multiple disks provides high bandwidth, while its parity provides fault tolerance: it can reconstruct the contents of a failed disk by taking the exclusive-OR of the remaining data blocks and the parity block. xFS uses single-parity disk striping to achieve the same benefits; in the future we plan to cope with multiple workstation or disk failures using multiple-parity blocks [Blaum et al. 1994].
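The parity arithmetic described above can be illustrated with a minimal Python sketch. It is not taken from xFS; the function names `xor_blocks`, `make_stripe`, and `reconstruct` are hypothetical, and blocks are modeled as equal-length byte strings rather than disk sectors.

```python
from functools import reduce


def xor_blocks(blocks):
    """Return the byte-wise exclusive-OR of equally sized blocks."""
    if not blocks:
        raise ValueError("need at least one block")
    size = len(blocks[0])
    if any(len(b) != size for b in blocks):
        raise ValueError("all blocks must have the same length")
    # zip(*blocks) yields one tuple of byte values per byte position.
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks):
    """Build a stripe: the N - 1 data blocks followed by one parity block."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def reconstruct(stripe, failed_index):
    """Rebuild the block at failed_index by XOR-ing all surviving blocks."""
    survivors = [b for i, b in enumerate(stripe) if i != failed_index]
    return xor_blocks(survivors)


stripe = make_stripe([b"abcd", b"efgh", b"ijkl"])
lost = 1                                  # pretend the disk holding block 1 failed
recovered = reconstruct(stripe, lost)     # equals the original block b"efgh"
```

Because exclusive-OR is its own inverse, the same routine recovers a lost data block or a lost parity block, which is why a single parity block tolerates exactly one failure per stripe.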

RAIDs suffer from two limitations. First, the overhead of parity management can hurt performance for small writes; if the system does not simultaneously overwrite all N – 1 blocks of a stripe, it must first read the old parity and some of the old data from the disks to compute the new parity. Unfortunately, small writes are common in many environments [Baker et al. 1991], and larger caches increase the percentage of writes in disk workload mixes over time. We expect cooperative caching (using workstation memory as a global cache) to further this workload trend. A second drawback of commercially available hardware RAID systems is that they are significantly more expensive than non-RAID commodity disks because the commercial RAIDs add special-purpose hardware to compute parity.

2.2 LFS

xFS implements log-structured storage based on the Sprite and BSD LFS prototypes [Rosenblum and Ousterhout 1992; Seltzer et al. 1993] because this approach provides high-performance writes, simple recovery, and a flexible method to locate file data stored on disk. LFS addresses the RAID small-write problem by buffering writes in memory and then committing them to disk in large, contiguous, fixed-sized groups called log segments; it threads these segments on disk to create a logical append-only log of file system modifications. When used with a RAID, each segment of the log spans a RAID stripe and is committed as a unit to avoid the need to recompute parity. LFS also simplifies failure recovery because all recent modifications are located near the end of the log.

Although log-based storage simplifies writes, it potentially complicates reads because any block could be located anywhere in the log, depending on when it was written. LFS’ solution to this problem provides a general mechanism to handle location-independent data storage. LFS uses per-file inodes, similar to those of the Fast File System (FFS) [McKusick et al. 1984], to store pointers to the system’s data blocks. However, where FFS’ inodes reside in fixed locations, LFS’ inodes move to the end of the log each time they are modified. When LFS writes a file’s data block, moving it to the end of the log, it updates the file’s inode to point to the new location of the data block; it then writes the modified inode to the end of the log as well. LFS locates the mobile inodes by adding a level of indirection, called an imap. The imap contains the current log pointers to the system’s inodes; LFS stores the imap in memory and periodically checkpoints it to disk.
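The indirection through inodes and the imap can be made concrete with a toy model. The sketch below is an illustration under simplifying assumptions, not LFS code: the class name `TinyLFS` is hypothetical, the log is an in-memory Python list, a log address is simply a list position, and segments and checkpoints are omitted.

```python
class TinyLFS:
    """Toy append-only log with per-file inodes and an in-memory imap."""

    def __init__(self):
        self.log = []    # records: ("data", bytes) or ("inode", {block_no: log_address})
        self.imap = {}   # file number -> log address of that file's newest inode

    def _append(self, record):
        self.log.append(record)
        return len(self.log) - 1          # log address = position in the log

    def write_block(self, file_no, block_no, data):
        # Copy the file's current inode pointers, if the file already exists.
        pointers = {}
        if file_no in self.imap:
            pointers = dict(self.log[self.imap[file_no]][1])
        # The data block goes to the end of the log ...
        pointers[block_no] = self._append(("data", data))
        # ... followed by the modified inode; the imap then tracks the new inode.
        self.imap[file_no] = self._append(("inode", pointers))

    def read_block(self, file_no, block_no):
        inode = self.log[self.imap[file_no]][1]   # imap -> inode
        return self.log[inode[block_no]][1]       # inode -> data block


fs = TinyLFS()
fs.write_block(7, 0, b"old")
fs.write_block(7, 1, b"b1")
fs.write_block(7, 0, b"new")   # the overwrite leaves the old block as dead space in the log
```

Nothing is ever updated in place: each write appends a data record and a fresh inode record, and only the imap entry changes to point at the newest inode.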

These checkpoints form a basis for LFS’ efficient recovery procedure. After a crash, LFS reads the last checkpoint in the log and then rolls forward, reading the later segments in the log to find the new location of inodes that were written since the last checkpoint. When recovery completes, the imap contains pointers to all of the system’s inodes, and the inodes contain pointers to all of the data blocks.

Another important aspect of LFS is its log cleaner that creates free disk

space for new log segments using a form of generational garbage collection.

When the system overwrites a block, it adds the new version of the block to

the newest log segment, creating a “hole” in the segment where the data used

to reside. The cleaner coalesces old, partially empty segments into a smaller

number of full segments to create contiguous space in which to store new

segments.
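The coalescing step can be sketched in a few lines of Python. This is a deliberately simplified illustration, not the LFS cleaner: the function name `clean` is hypothetical, segments are modeled as lists of block IDs with `None` marking a hole, and the policy questions a real cleaner faces (which segments to clean, and when) are ignored.

```python
def clean(segments, capacity):
    """Coalesce live blocks from partially empty segments into full ones.

    segments: list of lists of block IDs; None marks a hole left behind
              when a block was overwritten.
    capacity: number of blocks that fit in one segment.
    Returns a new, shorter list of segments holding only live blocks.
    """
    live = [blk for seg in segments for blk in seg if blk is not None]
    return [live[i:i + capacity] for i in range(0, len(live), capacity)]


old_segments = [
    ["a", None, "b", None],
    [None, "c", None, None],
    ["d", "e", None, "f"],
]
new_segments = clean(old_segments, capacity=4)
# Three partially empty segments shrink to two, freeing one segment for new writes.
```

In a real system the cleaner would also have to update the inodes that point at every block it moves, which is part of why cleaning overhead matters.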

The overhead associated with log cleaning is the primary drawback of LFS. Although Rosenblum’s original measurements found relatively low cleaner overheads, even a small overhead can make the cleaner a bottleneck in a distributed environment. Furthermore, some workloads, such as transaction processing, incur larger cleaning overheads [Seltzer et al. 1993; 1995].

2.3 Zebra

Zebra [Hartman and Ousterhout 1995] provides a way to combine LFS and RAID so that both work well in a distributed environment. Zebra uses a software RAID on commodity hardware (workstation, disks, and networks) to address RAID’s cost disadvantage, and LFS’ batched writes provide efficient access to a network RAID. Furthermore, the reliability of both LFS and RAID makes it feasible to distribute data storage across a network.

LFS’ solution to the small-write problem is particularly important for Zebra’s network striping since reading old data to recalculate RAID parity would be a network operation for Zebra. As Figure 1 illustrates, each Zebra client coalesces its writes into a private per-client log. It commits the log to the disks using fixed-sized log segments, each made up of several log fragments that it sends to different storage server disks over the LAN. Log-based striping allows clients to efficiently calculate parity fragments entirely as a local operation and then store them on an additional storage server to provide high data availability.

Zebra’s log-structured architecture significantly simplifies its failure recovery. Like LFS, Zebra provides efficient recovery using checkpoint and roll forward. To roll the log forward, Zebra relies on deltas stored in the log. Each delta describes a modification to a file system block, including the ID of the modified block and pointers to the old and new versions of the block, to allow the system to replay the modification during recovery. Deltas greatly simplify recovery by providing an atomic commit for actions that modify state located on multiple machines: each delta encapsulates a set of changes to file system state that must occur as a unit.

[Figure 1: client memories, each holding one client’s write log divided into log segments, connected over the network to the storage server disks.]

Fig. 1. Log-based striping used by Zebra and xFS. Each client writes its new file data into a private append-only log and stripes this log across the storage servers. Clients compute parity for segments, not for individual files.

Although Zebra points the way toward serverlessness, several factors limit Zebra’s scalability. First, a single file manager tracks where clients store data blocks in the log; the manager also handles cache consistency operations. Second, Zebra, like LFS, relies on a single cleaner to create empty segments. Finally, Zebra stripes each segment to all of the system’s storage servers, limiting the maximum number of storage servers that Zebra can use efficiently.

2.4 Multiprocessor Cache Consistency

Network file systems resemble multiprocessors in that both provide a uniform view of storage across the system, requiring both to track where blocks are cached. This information allows them to maintain cache consistency by invalidating stale cached copies. Multiprocessors such as DASH [Lenoski et al. 1990] and Alewife [Chaiken et al. 1991] scalably distribute this task by dividing the system’s physical memory evenly among processors; each processor manages the cache consistency state for its own physical memory locations.¹

Unfortunately, the fixed mapping from physical memory addresses to consistency managers makes this approach unsuitable for file systems. Our goal is graceful recovery and load rebalancing whenever the number of machines in xFS changes; such reconfiguration occurs when a machine crashes or when a new machine joins xFS. Furthermore, as we show in Section 3.2.4, by directly controlling which machines manage which data, we can improve locality and reduce network communication.

¹ In the context of scalable multiprocessor consistency, this state is referred to as a directory. We avoid this terminology to prevent confusion with file system directories that provide a hierarchical organization of file names.

2.5 Previous xFS Work

The design of xFS has evolved considerably since our original proposal [Dahlin et al. 1994a; Wang and Anderson 1993]. The original design stored all system data in client disk caches and managed cache consistency using a hierarchy of metadata servers rooted at a central server. Our new implementation eliminates client disk caching in favor of network striping to take advantage of high-speed, switched LANs. We still believe that the aggressive caching of the earlier design would work well under different technology assumptions; in particular, its efficient use of the network makes it well suited for both wireless and wide-area network use. Moreover, our new design eliminates the central management server in favor of a distributed metadata manager to provide better scalability, locality, and availability.

We have also previously examined cooperative caching (using client memory as a global file cache) via simulation [Dahlin et al. 1994b] and therefore focus only on the issues raised by integrating cooperative caching with the rest of the serverless system.

3. SERVERLESS FILE SERVICE

The RAID, LFS, Zebra, and multiprocessor cache consistency work discussed in the previous section leaves three basic problems unsolved. First, we need scalable, distributed metadata and cache consistency management, along with enough flexibility to reconfigure responsibilities dynamically after failures. Second, the system must provide a scalable way to subset storage servers into groups to provide efficient storage. Finally, a log-based system must provide scalable log cleaning.

This section describes the xFS design as it relates to the first two problems. Section 3.1 provides an overview of how xFS distributes its key data structures. Section 3.2 then provides examples of how the system as a whole functions for several important operations. This entire section disregards several important details necessary to make the design practical; in particular, we defer discussion of log cleaning, recovery from failures, and security until Sections 4 through 6.

3.1 Metadata and Data Distribution

The xFS design philosophy can be summed up with the phrase, “anything, anywhere.” All data, metadata, and control can be located anywhere in the system and can be dynamically migrated during operation. We exploit this location independence to improve performance by taking advantage of all of the system’s resources (CPUs, DRAM, and disks) to distribute load and increase locality. Furthermore, we use location independence to provide high availability by allowing any machine to take over the responsibilities of a failed component after recovering its state from the redundant log-structured storage system.

In a typical centralized system, the central server has four main tasks:

(1) The server stores all of the system’s data blocks on its local disks.

(2) The server manages disk location metadata that indicates where on disk the system has stored each data block.

(3) The server maintains a central cache of data blocks in its memory to satisfy some client misses without accessing its disks.

(4) The server manages cache consistency metadata that lists which clients in the system are caching each block. It uses this metadata to invalidate stale data in client caches.²

The xFS system performs the same tasks, but it builds on the ideas discussed in Section 2 to distribute this work over all of the machines in the system. To provide scalable control of disk metadata and cache consistency state, xFS splits management among metadata managers similar to multiprocessor consistency managers. Unlike multiprocessor managers, xFS managers can alter the mapping from files to managers. Similarly, to provide scalable disk storage, xFS uses log-based network striping inspired by Zebra, but it dynamically clusters disks into stripe groups to allow the system to scale to large numbers of storage servers. Finally, xFS replaces the server cache with cooperative caching that forwards data among client caches under the control of the managers. In xFS, four types of entities (the clients, storage servers, and managers already mentioned, and the cleaners discussed in Section 4) cooperate to provide file service as Figure 2 illustrates.

The key challenge for xFS is locating data and metadata in this dynamically changing, completely distributed system. The rest of this subsection examines four key maps used for this purpose: the manager map, the imap, file directories, and the stripe group map. The manager map allows clients to determine which manager to contact for a file, and the imap allows each manager to locate where its files are stored in the on-disk log. File directories serve the same purpose in xFS as in a standard UNIX file system, providing a mapping from a human-readable name to a metadata locator called an index number. Finally, the stripe group map provides mappings from segment identifiers embedded in disk log addresses to the set of physical machines storing the segments. The rest of this subsection discusses these four data structures before giving an example of their use in file reads and writes. For reference, Table I provides a summary of these and other key xFS data structures. Figure 3 in Section 3.2.1 illustrates how these components work together.

² Note that the NFS server does not keep caches consistent. Instead NFS relies on clients to verify that a block is current before using it. We rejected that approach because it sometimes allows clients to observe stale data when a client tries to read what another client recently wrote.

Fig. 2. Two simple xFS installations. In the first, each machine acts as a client, storage server, cleaner, and manager, while in the second each node only performs some of those roles. The freedom to configure the system is not complete; managers and cleaners access storage using the client interface, so all machines acting as managers or cleaners must also be clients.
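As a rough illustration of how the four maps chain together, the following Python sketch walks from a file name to the storage servers holding that file’s index node. It is a sketch under stated assumptions, not the xFS implementation: every name, machine label, and value is hypothetical, the maps are plain in-memory dictionaries and lists, the manager map is indexed by the low-order bits of the index number, and the stripe group map is keyed by the stripe group identifier carried in the log address.

```python
from collections import namedtuple

# Hypothetical layout of a disk log address (stripe group, segment ID, offset).
LogAddress = namedtuple("LogAddress", "stripe_group segment_id offset")

directory = {"/home/paper.tex": 0x2A}                  # file name -> index number
manager_map = ["mgr-A", "mgr-B", "mgr-A", "mgr-C"]      # map entry -> managing machine
imaps = {                                               # each manager's share of the imap
    "mgr-A": {0x2A: LogAddress(stripe_group=1, segment_id=17, offset=4096)},
    "mgr-B": {},
    "mgr-C": {},
}
stripe_group_map = {1: ["ss-0", "ss-1", "ss-2", "ss-3"]}  # stripe group -> storage servers


def manager_for(index_number):
    # Assumption: some low-order bits of the index number select the map entry.
    return manager_map[index_number % len(manager_map)]


def locate_index_node(path):
    """Follow directory -> manager map -> imap -> stripe group map."""
    index_number = directory[path]
    manager = manager_for(index_number)
    address = imaps[manager][index_number]
    servers = stripe_group_map[address.stripe_group]
    return manager, address, servers


manager, address, servers = locate_index_node("/home/paper.tex")
```

In the real system each of these steps may involve a different machine; the sketch collapses them into one process only to show the order of the lookups.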

3.1.1 The Manager Map. xFS distributes management responsibilities according to a globally replicated manager map. A client uses this mapping to locate a file’s manager from the file’s index number by extracting some of the index number’s bits and using them as an index into the manager map. The map itself is simply a table that indicates which physical machines manage which groups of index numbers at any given time.

This indirection allows xFS to adapt when managers enter or leave the system; the map can also act as a coarse-grained load-balancing mechanism to split the work of overloaded managers. Where distributed multiprocessor cache consistency relies on a fixed mapping from physical addresses to managers, xFS can change the mapping from index number to manager by changing the manager map.

To support reconfiguration, the manager map should have at least an order of magnitude more entries than there are managers. This rule of thumb allows the system to balance load by assigning roughly equal portions of the map to each manager. When a new machine joins the system, xFS can modify the manager map to assign some of the index number space to the new manager by having the original managers send the corresponding parts of their manager state to the new manager. Section 5 describes how the system reconfigures manager maps. Note that the prototype has not yet implemented this dynamic reconfiguration of manager maps.
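One way such a reassignment could work is sketched below in Python. This is an assumption-laden illustration, not the xFS reconfiguration protocol (which the text notes the prototype had not yet implemented): the function name `add_manager` is hypothetical, the policy of taking entries from the most heavily loaded owners is our own choice, and the transfer of manager state that must accompany each moved entry is omitted.

```python
def add_manager(manager_map, new_manager):
    """Return a new map in which new_manager owns a roughly fair share of entries.

    manager_map: list whose i-th element names the machine managing entry i.
    new_manager: a machine name assumed not to appear in manager_map yet.
    Only entries taken from managers holding more than the fair share move.
    """
    managers = sorted(set(manager_map)) + [new_manager]
    fair_share = len(manager_map) // len(managers)
    counts = {m: manager_map.count(m) for m in managers}
    new_map = list(manager_map)
    for entry, owner in enumerate(manager_map):
        if counts[new_manager] >= fair_share:
            break                          # the new manager has its share
        if counts[owner] > fair_share:
            new_map[entry] = new_manager   # this entry's state would be shipped over
            counts[owner] -= 1
            counts[new_manager] += 1
    return new_map


old_map = ["A", "B"] * 10                  # 20 entries for 2 managers
new_map = add_manager(old_map, "C")
moved = [i for i, (o, n) in enumerate(zip(old_map, new_map)) if o != n]
```

Having many more entries than managers is what makes this fine-grained rebalancing possible: with 20 entries, the new manager can take 6 without leaving any original manager with fewer than its share.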

xFS globally replicates the manager map to all of the managers and all of the clients in the system. This replication allows managers to know their responsibilities, and it allows clients to contact the correct manager directly, with the same number of network hops as a system with a centralized manager. We feel it is reasonable to distribute the manager map globally because it is relatively small (even with hundreds of machines, the map would be only tens of kilobytes in size) and because it changes only to correct a load imbalance or when a machine enters or leaves the system.

Table I. Summary of Key xFS Data Structures

| Data Structure | Purpose | Location | Section |
|---|---|---|---|
| Manager Map | Maps file’s index number → manager. | Globally replicated. | 3.1.1 |
| Imap | Maps file’s index number → disk log address of file’s index node. | Split among managers. | 3.1.2 |
| Index Node | Maps file offset → disk log address of data block. | In on-disk log at storage servers. | 3.1.2 |
| Index Number | Key used to locate metadata for a file. | File directory. | 3.1.3 |
| File Directory | Maps file’s name → file’s index number. | In on-disk log at storage servers. | 3.1.3 |
| Disk Log Address | Key used to locate blocks on storage server disks. Includes a stripe group identifier, segment ID, and offset within segment. | Index nodes and the imap. | 3.1.4 |
| Stripe Group Map | Maps disk log address → list of storage servers. | Globally replicated. | 3.1.4 |
| Cache Consistency State | Lists clients caching or holding the write token of each block. | Split among managers. | 3.2.1, 3.2.3 |
| Segment Utilization State | Utilization, modification time of segments. | Split among clients. | 4 |
| S-Files | On-disk cleaner state for cleaner communication and recovery. | In on-disk log at storage servers. | 4 |
| I-File | On-disk copy of imap used for recovery. | In on-disk log at storage servers. | 5 |
| Deltas | Log modifications for recovery roll-forward. | In on-disk log at storage servers. | 5 |
| Manager Checkpoints | Record manager state for recovery. | In on-disk log at storage servers. | 5 |

This table summarizes the purpose of the key xFS data structures. The Location column indicates where these structures are located in xFS, and the Section column indicates where in this article the structure is described.

The manager of a file controls two sets of information about it: cache
consistency state and disk location metadata. Together, these structures
allow the manager to locate all copies of the file’s blocks. The manager can
thus forward client read requests to where the block is stored, and it can
invalidate stale data when clients write a block. For each block, the cache
consistency state lists the clients caching the block or the client that has
write ownership of it. The next subsection describes the disk metadata.

3.1.2 The Imap.

Managers track not only where file blocks are cached but also where in the
on-disk log they are stored. xFS uses the LFS imap to encapsulate disk
location metadata; each file’s index number has an entry in the imap that
points to that file’s disk metadata in the log. To make LFS’ imap scale, xFS
distributes the imap among managers according to the manager map so that
managers handle the imap entries and cache consistency state of the same
files.

The disk storage for each file can be thought of as a tree whose root is the
imap entry for the file’s index number and whose leaves are the data blocks.
A file’s imap entry contains the log address of the file’s index node. xFS index
nodes, like those of LFS and FFS, contain the disk addresses of the file’s data
blocks; for large files the index node can also contain log addresses of
indirect blocks that contain more data block addresses, double indirect
blocks that contain addresses of indirect blocks, and so on.
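The tree described above can be sketched as a pair of lookups. This is a minimal Python sketch under stated assumptions, not the xFS implementation: the names `IndexNode`, `block_address`, `BLOCK_SIZE`, and `DIRECT_SLOTS`, the in-memory `log` dictionary standing in for the on-disk log, and the limit of one level of indirection are all illustrative choices.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

BLOCK_SIZE = 8192    # assumed block size, for illustration only
DIRECT_SLOTS = 4     # assumed number of direct pointers in an index node

# A disk log address: (stripe group ID, segment ID, offset within segment).
LogAddress = Tuple[int, int, int]


@dataclass
class IndexNode:
    direct: List[LogAddress] = field(default_factory=list)
    indirect: Optional[LogAddress] = None   # log address of a block of addresses


def block_address(imap, log, index_number, file_offset):
    """Walk imap entry -> index node -> (indirect block) -> data block address."""
    inode = log[imap[index_number]]          # the imap entry holds the index node's address
    block_no = file_offset // BLOCK_SIZE
    if block_no < DIRECT_SLOTS:
        return inode.direct[block_no]
    indirect_block = log[inode.indirect]     # a list of further data block addresses
    return indirect_block[block_no - DIRECT_SLOTS]


# Hypothetical contents: file 42 has four direct blocks and two indirect ones.
log = {
    (0, 7, 0): IndexNode(direct=[(0, 1, 0), (0, 1, 1), (0, 2, 0), (0, 2, 1)],
                         indirect=(0, 7, 1)),
    (0, 7, 1): [(1, 3, 0), (1, 3, 1)],
}
imap = {42: (0, 7, 0)}
first_block = block_address(imap, log, 42, 0)
sixth_block = block_address(imap, log, 42, 5 * BLOCK_SIZE)
```

Each returned address still has to be resolved to storage servers through the stripe group map; that step is omitted here.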

3.1.3 File Directories and Index Numbers.

xFS uses the data structures described above to locate a file’s manager
given the file’s index number. To determine the file’s index number, xFS,
like FFS and LFS, uses file directories that contain mappings from file
names to index numbers. xFS stores directories in regular files, allowing a
client to learn an index number by reading a directory.

In xFS, the index number listed in a directory determines

a file’s manager.

When a file is created, we choose its index number so that the file’s manager

is on the same machine

as the client that creates the file. Section

3.2.4

describes

simulation

results of the effectiveness

of this policy in reducing

network communication.
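A minimal sketch of how an index number could be chosen at file creation so that the file's manager is the creating client's own machine. The assumptions here are loud ones: the manager map is modeled as a list indexed by the index number modulo the list length, and `manager_for`, `choose_index_number`, and the `in_use` set are hypothetical names, not part of xFS.

```python
from itertools import count


def manager_for(manager_map, index_number):
    """Look up the manager responsible for an index number (assumed modulo indexing)."""
    return manager_map[index_number % len(manager_map)]


def choose_index_number(manager_map, in_use, local_machine):
    """Pick an unused index number whose map entry names the creating machine."""
    if local_machine not in manager_map:
        raise ValueError("no manager map entry is assigned to this machine")
    for candidate in count():
        if candidate not in in_use and manager_for(manager_map, candidate) == local_machine:
            return candidate


# Hypothetical four-entry map over three machines; numbers 0..2 are already taken.
manager_map = ["A", "B", "C", "A"]
in_use = {0, 1, 2}
new_for_a = choose_index_number(manager_map, in_use, "A")
new_for_c = choose_index_number(manager_map, in_use, "C")
```

Because the directory records the chosen index number, later lookups by any client reach the same manager through the replicated map.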

3.1.4 The Stripe Group Map.

Like Zebra, xFS bases its storage subsystem

on simple storage servers to which clients write log fragments. To improve

performance and availability when using large numbers of storage servers,

rather than stripe each segment over all storage servers in the system, xFS

implements stripe groups as have been proposed for large RAIDs [Chen et al.

1994]. Each stripe group includes a separate subset of the system’s storage

servers, and clients write each segment across a stripe group rather than

across all of the system’s storage servers. xFS uses a globally replicated stripe

group map to direct reads and writes to the appropriate storage servers as

the system configuration

changes. Like the manager map, xFS globally

replicates the stripe group map because it is small and seldom changes. The

current version of the prototype implements reads and writes from multiple

stripe groups, but it does not dynamically modify the group map.


Stripe groups are essential to support large numbers of storage servers for

at least four reasons. First, without stripe groups, clients would stripe each of

their segments over all of the disks in the system. This organization would

require clients to send small, inefficient fragments to each of the many

storage servers or to buffer enormous amounts of data per segment so that

they could write large fragments to each storage server. Second, stripe groups

match the aggregate bandwidth of the groups’ disks to the network bandwidth of a client, using both resources efficiently; while one client writes at

its full network bandwidth to one stripe group, another client can do the

same with a different group. Third, by limiting segment size, stripe groups

make cleaning more efficient. This efficiency arises because when cleaners

extract segments’ live data, they can skip completely empty segments, but

they must read partially full segments in their entirety; large segments

linger in the partially full state longer than small segments, significantly

increasing cleaning costs. Finally, stripe groups greatly improve availability.

Because each group stores its own parity, the system can survive multiple

server failures if they happen to strike different groups; in a large system

with random failures this is the most likely case. The cost for this improved

availability is a marginal reduction in disk storage and effective bandwidth

because the system dedicates one parity server per group rather than one for

the entire system.

The stripe group map provides several pieces of information about each

group: the group’s ID, the members of the group, and whether the group is

current or obsolete; we describe the distinction between current and obsolete

groups below. When a client writes a segment to a group, it includes the

stripe group’s ID in the segment’s identifier and uses the map’s list of storage

servers to send the data to the correct machines. Later, when it or another

client wants to read that segment, it uses the identifier and the stripe group

map to locate the storage servers to contact for the data or parity.

xFS distinguishes between current and obsolete groups to support reconfiguration. When a storage server enters or leaves the system, xFS changes the

map so that each active storage server belongs to exactly one current stripe

group. If this reconfiguration

changes the membership of a particular group,

xFS does not delete the group’s old map entry. Instead, it marks that entry as

“obsolete.” Clients write only to current stripe groups, but they may read

from either current or obsolete stripe groups. By leaving the obsolete entries

in the map, xFS allows clients to read data previously written to the groups

without first transferring the data from obsolete groups to current groups.

Over time, the cleaner will move data from obsolete groups to current groups

[Hartman and Ousterhout 1995]. When the cleaner removes the last block of

live data from an obsolete group, xFS deletes its entry from the stripe group

map.
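The current/obsolete distinction can be sketched as a small map class. This is an illustrative Python sketch, assuming hypothetical names (`StripeGroup`, `StripeGroupMap`, `reconfigure`, `retire`) and a simple counter for group IDs; the article does not specify how IDs are allocated.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class StripeGroup:
    group_id: int
    servers: List[str]
    current: bool = True


class StripeGroupMap:
    def __init__(self):
        self.groups: Dict[int, StripeGroup] = {}
        self._next_id = 0            # assumed ID allocation scheme

    def add_current(self, servers):
        gid = self._next_id
        self._next_id += 1
        self.groups[gid] = StripeGroup(gid, list(servers))
        return gid

    def reconfigure(self, old_id, new_servers):
        """Membership changed: keep the old entry readable, write to a new one."""
        self.groups[old_id].current = False
        return self.add_current(new_servers)

    def servers_for_read(self, group_id):
        # Reads may target current or obsolete groups.
        return self.groups[group_id].servers

    def servers_for_write(self, group_id):
        group = self.groups[group_id]
        if not group.current:
            raise ValueError("clients write only to current stripe groups")
        return group.servers

    def retire(self, group_id):
        """Drop an obsolete entry once the cleaner has moved out its last live block."""
        if self.groups[group_id].current:
            raise ValueError("only obsolete groups can be retired")
        del self.groups[group_id]
```

A segment identifier carries the group ID, so a reader needs only the identifier and this map to find the servers holding the data or parity.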

3.2 System Operation

This section describes how xFS uses the various maps we described in the
previous section. We first describe how reads, writes, and cache consistency
work and then present simulation results examining the issue of locality in
the assignment of files to managers.

3.2.1 Reads and Caching.

Figure 3 illustrates how xFS reads a block

given a file name and an offset within that file. Although the figure is

complex, the complexity in the architecture

is designed to provide good

performance with fast LANs. On today’s fast LANs, fetching a block out of

local memory is much faster than fetching it from remote memory, which, in

turn, is much faster than fetching it from disk.

To open a file, the client first reads the file’s parent directory (labeled 1 in

the diagram) to determine its index number. Note that the parent directory

is, itself, a data file that must be read using the procedure described here. As

with FFS, xFS breaks this recursion at the root; the kernel learns the index

number of the root when it mounts the file system.

As the top left path in the figure indicates, the client first checks its local

UNIX block cache for the block (2a); if the block is present, the request is

done. Otherwise it follows the lower path to fetch the data over the network.

xFS first uses the manager map to locate the correct manager for the index

number (2b) and then sends the request to the manager. If the manager is

not colocated with the client, this message requires a network hop.

The manager then tries to satisfy the request by fetching the data from

some other client’s cache. The manager checks its cache consistency state

(3a), and, if possible, forwards the request to a client caching the data. That

client reads the block from its UNIX block cache and forwards the data

directly to the client that originated the request. The manager also adds the

new client to its list of clients caching the block.

If no other client can supply the data from memory, the manager routes the

read request to disk by first examining the imap to locate the block’s index

node (3b). The manager may find the index node in its local cache (4a), or it

may have to read the index node from disk. If the manager has to read the

index node from disk, it uses the index node’s disk log address and the stripe

group map (4b) to determine which storage server to contact. The manager

then requests the index block from the storage server, which then reads the

block from its disk and sends it back to the manager (5). The manager then

uses the index node (6) to identify the log address of the data block. (We have

not shown a detail: if the file is large, the manager may have to read several

levels of indirect blocks to find the data block’s address; the manager follows

the same procedure in reading indirect blocks as in reading the index node.)

The manager uses the data block’s log address and the stripe group map

(7) to send the request to the storage server keeping the block. The storage

server reads the data from its disk (8) and sends the data directly to the

client that originally asked for it.

[Figure 3 (diagram not reproduced): the procedure xFS uses to read a block given a file name and an offset; the numbered steps 1 through 8 are referenced in the text. Legend: access to a local data structure; possible network hop; data or metadata block (or cache); globally replicated data; local portion of global data.]

One important design decision was to cache index nodes at managers but
not at clients. Although caching index nodes at clients would allow them to
read many blocks from storage servers without sending a request through the

manager for each block, doing so has three significant drawbacks. First, by

reading blocks from disk without first contacting the manager, clients would

lose the opportunity to use cooperative caching to avoid disk accesses. Second,

although clients could sometimes read a data block directly, they would still

need to notify the manager of the fact that they now cache the block so that

the manager knows to invalidate the block if it is modified. Finally, our

approach simplifies the design by eliminating client caching and cache consistency for index nodes—only the manager handling an index number directly

accesses its index node.

3.2.2 Writes.

Clients buffer writes in their local memory until committed

to a stripe group of storage servers. Because xFS uses a log-based file system,

every write changes the disk address of the modified block. Therefore, after a

client commits a segment to a storage server, the client notifies the modified

blocks’ managers; the managers then update their index nodes and imaps

and periodically log these changes to stable storage. As with Zebra, xFS does

not need to commit both index nodes and their data blocks “simultaneously”

because the client’s log includes deltas that allow reconstruction

of the

manager’s data structures in the event of a client or manager crash. We

discuss deltas in more detail in Section 5.1.

As in BSD LFS [Seltzer et al. 1993], each manager caches its portion of the

imap in memory and stores it on disk in a special file called the ifile. The

system treats the ifile like any other file with one exception: the ifile has no

index nodes. Instead, the system locates the blocks of the ifile using manager

checkpoints described in Section 5.1.

3.2.3 Cache Consistency.

xFS utilizes a token-based cache consistency scheme similar to Sprite
[Nelson et al. 1988] and Andrew [Howard et al. 1988] except that xFS
manages consistency on a per-block rather than per-file basis. Before a
client modifies a block, it must acquire write ownership of that block. The
client sends a message to the block’s manager. The

manager then invalidates any other cached copies of the block, updates its

cache consistency information to indicate the new owner, and replies to the

client, giving permission to write. Once a client owns a block, the client may

write the block repeatedly without having to ask the manager for ownership

each time. The client maintains write ownership until some other client reads

or writes the data, at which point the manager revokes ownership, forcing the

client to stop writing the block, flush any changes to stable storage, and

forward the data to the new client.

xFS managers use the same state for both cache consistency and cooperative caching. The list of clients caching each block allows managers to

invalidate stale cached copies in the first case and to forward read requests to

clients with valid cached copies in the second.
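The shared per-block state can be sketched as follows. This is a simplified Python model, not the xFS protocol: `BlockManager`, `cachers`, and `owner` are hypothetical names, messages are reduced to return values, and the sketch assumes that a client whose write ownership is revoked by a read keeps its cached copy as a reader (the article does not say whether it does).

```python
class BlockManager:
    """Per-block consistency state kept by one manager (illustrative model)."""

    def __init__(self):
        self.cachers = {}   # block id -> set of clients holding a cached copy
        self.owner = {}     # block id -> client holding write ownership, if any

    def acquire_write(self, block, client):
        """Grant write ownership; return the clients whose copies are invalidated."""
        invalidated = self.cachers.get(block, set()) - {client}
        self.cachers[block] = {client}
        self.owner[block] = client
        return invalidated

    def read(self, block, client):
        """Record a read; return a client that can supply the block, or None for disk."""
        holders = self.cachers.setdefault(block, set())
        if self.owner.get(block) not in (None, client):
            # Another client reads the block: revoke the writer's ownership.
            # Assumption: the former owner keeps a read-only cached copy.
            del self.owner[block]
        supplier = next(iter(holders - {client}), None)
        holders.add(client)
        return supplier
```

The same `cachers` set serves both purposes named in the text: `acquire_write` uses it to find copies to invalidate, and `read` uses it to find a cooperative-caching supplier.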

3.2.4 Management Distribution Policies.

xFS tries to assign files used by a client to a manager colocated on that
machine. This section presents a simulation study that examines policies for
assigning files to managers. We show that colocating a file’s management
with the client that creates that file can significantly improve locality,
reducing the number of network hops needed to satisfy client requests by
over 40% compared to a centralized manager.

The xFS prototype uses a policy we call First Writer. When a client creates

a file, xFS chooses an index number that assigns the file’s management to the

manager colocated with that client. For comparison, we also simulated a

Centralized policy that uses a single, centralized manager that is not colocated with any of the clients.

We examined management policies by simulating xFS’ behavior under a

seven-day trace of 236 clients’ NFS accesses to an Auspex file server in the

Berkeley Computer Science Division [Dahlin et al. 1994a]. We warmed the

simulated caches through the first day of the trace and gathered statistics

through the rest. Since we would expect other workloads to yield different

results, evaluating a wider range of workloads remains important work.

The simulator counts the network messages necessary to satisfy client

requests, assuming that each client has 16MB of local cache and that there is

a manager colocated with each client, but that storage servers are always

remote.

Two artifacts of the trace affect the simulation. First, because the trace was

gathered by snooping the network, it does not include reads that resulted in

local cache hits. By omitting requests that resulted in local hits, the trace

inflates the average number of network hops needed to satisfy a read request.

Because we simulate larger caches than those of the traced system, this

factor does not alter the total number of network requests for each policy

[Smith 1977], which is the relative metric we use for comparing policies.

The second limitation of the trace is that its finite length does not allow us

to determine a file’s “First Writer” with certainty for references to files

created before the beginning of the trace. For files that are read or deleted in

the trace before being written, we assign management to random managers

at the start of the trace; when and if such a file is written for the first time in

the trace, we move its management to the first writer. Because write sharing

is rare (96% of all block overwrites or deletes are by the block’s previous
writer), we believe this heuristic will yield results close to a true “First

Writer” policy for writes, although it will give pessimistic locality results for

“cold-start” read misses that it assigns to random managers.

Figure 4 shows the impact of the policies on locality. The First Writer

policy reduces the total number of network hops needed to satisfy client

requests by 43%. Most of the difference comes from improving write locality;

the algorithm does little to improve locality for reads, and deletes account for

only a small fraction of the system’s network traffic.

Figure 5 illustrates the average number of network messages to satisfy a
read block request, write block request, or delete file request. The
communication for a read block request includes all of the network hops
indicated in Figure 3. Despite the large number of network hops that can be
incurred by some requests, the average per request is quite low.
Seventy-five percent of read requests in the trace were satisfied by the
local cache; as noted earlier, the local hit rate would be even higher if the
trace included local hits in the traced system. An average local read miss
costs 2.9 hops under the First Writer policy; a local miss normally requires
three hops (the client asks the manager; the manager forwards the request;
and the storage server or client supplies the data), but 12% of the time it
can avoid one hop because the manager is colocated with the client making
the request or the client supplying the data. Under both the Centralized and
First Writer policies, a read miss will occasionally incur a few additional
hops to read an index node or indirect block from a storage server.

[Fig. 4 (bar chart not reproduced). Comparison of locality as measured by network traffic for the Centralized and First Writer management policies. Horizontal axis: Management Policy (Centralized, First Writer); vertical axis ticks run from 0 to 6,000,000.]

[Fig. 5 (bar chart not reproduced). Average number of network messages needed to satisfy a read block, write block, or delete file request under the Centralized and First Writer policies. The Hops Per Write column does not include a charge for writing the segment containing block writes to disk because the segment write is asynchronous to the block write request and because the large segment amortizes the per-block write cost. Note that the number of hops per read would be even lower if the trace included all local hits in the traced system.]
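As a consistency check on the figures quoted above, the average cost of a local read miss can be recomputed from the three-hop baseline and the 12% of misses that save one hop. This is a back-of-the-envelope sketch, not the simulator; `expected_miss_hops` is a hypothetical helper, and it ignores the occasional extra hops for index node or indirect block reads.

```python
def expected_miss_hops(base_hops, p_saved, hops_saved=1):
    """Average hops when a fraction p_saved of misses avoids hops_saved hops."""
    return base_hops - p_saved * hops_saved


# 3 hops normally, one hop avoided 12% of the time (figures from the text).
miss_hops = expected_miss_hops(3, 0.12)
```

The result, about 2.88, is in line with the reported average of 2.9 hops per local read miss.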

Writes benefit more dramatically from locality. Of the 55% of write requests
that required the client to contact the manager to establish write
ownership, the manager was colocated with the client 90% of the time. When
a manager had to invalidate stale cached data, the cache being invalidated
was local one-third of the time. Finally, when clients flushed data to disk,
they informed the manager of the data’s new storage location, a local
operation 90% of the time.

Deletes, though rare, also benefit from locality: 68% of file delete requests

went to a local manager,

and 89% of the clients notified

to stop caching

deleted files were local to the manager.

In the future, we plan to examine other policies for assigning managers.
For instance, we plan to investigate modifying directories to permit xFS to
dynamically change a file’s index number and thus its manager after it has
been created. This capability would allow fine-grained load balancing on a
per-file rather than a per-manager map entry basis, and it would permit xFS
to improve locality by switching managers when a different machine
repeatedly accesses a file.

Another optimization

that we plan to investigate is to assign multiple

managers to different portions of the same file to balance load and provide

locality for parallel workloads.

4. CLEANING

When a log-structured

file system such as xFS writes data by appending

complete segments to its logs, it often invalidates blocks in old segments,

leaving “holes” that contain no data. LFS uses a log cleaner to coalesce live

data from old segments into a smaller number of new segments, creating

completely empty segments that can be used for future full segment writes.

Since the cleaner must create empty segments at least as quickly as the

system writes new segments, a single, sequential cleaner would be a bottleneck in a distributed system such as xFS. Our design therefore provides a

distributed cleaner.

An LFS cleaner, whether centralized or distributed, has three main tasks.

First, the system must keep utilization status about old segments (how
many “holes” they contain and how recently these holes appeared) to make

wise decisions about which segments to clean [Rosenblum and Ousterhout

1992]. Second, the system must examine this bookkeeping

information to

select segments to clean. Third, the cleaner reads the live blocks from old log

segments and writes those blocks to new segments.

The rest of this section describes how xFS distributes cleaning. We first

describe how xFS tracks segment utilizations, then how we identify subsets

of segments to examine and clean, and finally, how we coordinate the parallel

cleaners to keep the file system consistent. Because the prototype does not

yet implement the distributed cleaner, this section includes the key simulation results motivating our design.

4.1 Distributing Utilization Status

xFS assigns the burden of maintaining each segment’s utilization status to

the client that wrote the segment. This approach provides parallelism by

distributing the bookkeeping,

and it provides good locality; because clients

seldom write-share data [Baker et al. 1991; Blaze 1993; Kistler and Satyanarayanan 1992] a client’s writes usually affect only local segments’ utilization status.

We simulated this policy to examine how well it reduced the overhead of

maintaining utilization information. For input to the simulator, we used the

Auspex trace described in Section 3.2.4, but since caching is not an issue, we

gather statistics for the full seven-day trace (rather than using some of that

time to warm caches).

Figure 6 shows the results of the simulation. The bars summarize the

network communication

necessary to monitor segment state under three

policies: Centralized

Pessimistic,

Centralized

Optimistic, and Distributed.

Under the Centralized Pessimistic policy, clients notify a centralized, remote

cleaner every time they modify an existing block. The Centralized Optimistic

policy also uses a cleaner that is remote from the clients, but clients do not

have to send messages when they modify blocks that are still in their local

write buffers. The results for this policy are optimistic because the simulator

assumes that blocks survive in clients’ write buffers for 30 seconds or until

overwritten, whichever is sooner; this assumption allows the simulated system to avoid communication

more often than a real system since it does not

account for segments that are written to disk early due to syncs [Baker et al.

1992]. (Unfortunately,

syncs are not visible in our Auspex traces.) Finally,

under the Distributed policy, each client tracks the status of blocks that it

writes so that it needs no network messages when modifying a block for

which it was the last writer.

During the seven days of the trace, of the one million blocks written by

clients and then later overwritten or deleted, 33% were modified within 30

seconds by the same client and therefore required no network communication

under the Centralized Optimistic policy. However, the Distributed scheme

does much better, reducing communication by a factor of 18 for this workload

compared to even the Centralized Optimistic policy.
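The three policies differ only in which block modifications require a message. A minimal Python sketch of that rule follows; it is not the authors' simulator. The function name, the policy strings, and the `(same_client, age_seconds)` event encoding are assumptions, and the 30-second window is treated as inclusive.

```python
WRITE_BUFFER_SECONDS = 30   # write-buffer lifetime assumed by the optimistic policy


def messages_needed(modifications, policy):
    """Count network messages for a list of (same_client, age_seconds) events.

    same_client: True if the modifier also wrote the previous version.
    age_seconds: time since that previous write.
    """
    total = 0
    for same_client, age_seconds in modifications:
        if policy == "centralized_pessimistic":
            needs_message = True
        elif policy == "centralized_optimistic":
            needs_message = not (same_client and age_seconds <= WRITE_BUFFER_SECONDS)
        elif policy == "distributed":
            needs_message = not same_client
        else:
            raise ValueError(f"unknown policy: {policy}")
        total += needs_message
    return total


# Hypothetical events: two by the previous writer, two by a different client.
sample = [(True, 5), (True, 120), (False, 5), (False, 300)]
counts = {p: messages_needed(sample, p)
          for p in ("centralized_pessimistic", "centralized_optimistic", "distributed")}
```

On real traces the gap between the last two policies depends on how often a client overwrites its own blocks after the buffer window has passed.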

4.2 Distributing Cleaning

Clients store their segment utilization information in s-files. We implement

s-files as normal xFS files to facilitate recovery and sharing of s-files by

different machines in the system.

Each s-file contains segment utilization information for segments written

by one client to one stripe group: clients write their s-files into per-client

directories, and they write separate s-files in their directories for segments

stored to different stripe groups.

A leader in each stripe group initiates cleaning when the number of free
segments in that group falls below a low-water mark or when the group is
idle. The group leader decides which cleaners should clean the stripe group’s
segments. It sends each of those cleaners part of the list of s-files that contain
utilization information for the group. By giving each cleaner a different
subset of the s-files, xFS specifies subsets of segments that can be cleaned in
parallel.

[Fig. 6 (bar chart not reproduced). Simulated network communication between clients and cleaner. Each bar shows the fraction of all blocks modified or deleted in the trace, based on the time and client that modified the block. Blocks can be modified by a different client than originally wrote the data, by the same client within 30 seconds of the previous write, or by the same client after more than 30 seconds have passed. The Centralized Pessimistic policy assumes every modification requires network traffic. The Centralized Optimistic scheme avoids network communication when the same client modifies a block it wrote within the previous 30 seconds, while the Distributed scheme avoids communication whenever a block is modified by its previous writer. Vertical axis: 0% to 100%.]

A simple policy would be to assign each client to clean its own segments. An

attractive alternative is to assign cleaning responsibilities

to idle machines.

xFS would do this by assigning s-files from active machines to the cleaners

running on idle ones.
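One way a group leader could hand out disjoint subsets of s-files is a round-robin split. The article does not specify the partitioning rule, so the round-robin choice, the function name `assign_sfiles`, and the path-like s-file names below are assumptions for illustration.

```python
def assign_sfiles(sfiles, cleaners):
    """Split s-files among cleaners so that no s-file goes to two cleaners."""
    if not cleaners:
        raise ValueError("at least one cleaner is required")
    assignment = {cleaner: [] for cleaner in cleaners}
    for i, sfile in enumerate(sorted(sfiles)):
        assignment[cleaners[i % len(cleaners)]].append(sfile)
    return assignment


# Hypothetical s-files from three active clients, cleaned by two idle machines.
plan = assign_sfiles(["client1/group0", "client2/group0", "client3/group0"],
                     ["idleA", "idleB"])
```

Because each s-file covers the segments one client wrote to one stripe group, disjoint s-file subsets imply disjoint segment subsets, which is what lets the cleaners run in parallel.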

4.3 Coordinating Cleaners

Like BSD LFS and Zebra, xFS uses optimistic concurrency control to resolve

conflicts between cleaner updates and normal file system writes. Cleaners do

not lock files that are being cleaned, nor do they invoke cache consistency

actions. Instead, cleaners just copy the blocks from the blocks’ old segments

to their new segments, optimistically assuming that the blocks are not in the

process of being updated somewhere else. If there is a conflict because a client

is writing a block as it is cleaned, the manager will ensure that the client

update takes precedence over the cleaner’s update. Although our algorithm

for distributing cleaning responsibilities

never simultaneously

asks multiple

cleaners to clean the same segment, the same mechanism could be used to

allow less strict (e.g., probabilistic)

divisions of labor by resolving conflicts

between cleaners.

5. RECOVERY AND RECONFIGURATION

Availability is a key challenge for a distributed system such as xFS. Because

xFS distributes the file system across many machines, it must be able to

continue operation when some of the machines fail. To meet this challenge,

xFS builds on Zebra’s recovery mechanisms, the keystone of which is redundant, log-structured

storage. The redundancy provided by a software RAID

makes the system’s logs highly available, and the log-structured

storage

allows the system to quickly recover a consistent view of its data and

metadata through LFS checkpoint recovery and roll-forward.

LFS provides fast recovery by scanning the end of its log to first read a

checkpoint and then roll forward, and Zebra demonstrates how to extend LFS

recovery to a distributed system in which multiple clients are logging data

concurrently. xFS addresses two additional problems. First, xFS regenerates

the manager map and stripe group map using a distributed

consensus

algorithm. Second, xFS recovers manager metadata from multiple managers’

logs, a process that xFS makes scalable by distributing checkpoint writes and

recovery to the managers and by distributing roll-forward to the clients.

The prototype implements only a limited subset of xFS recovery functionality—storage servers recover their local state after a crash; they automatically

reconstruct data from parity when one storage server in a group fails; and

clients write deltas into their logs to support manager recovery. However, we

have not implemented manager checkpoint writes, checkpoint recovery reads,

or delta reads for roll-forward. The current prototype also fails to recover

cleaner state and cache consistency state, and it does not yet implement the

consensus algorithm needed to dynamically reconfigure manager maps and

stripe group maps. This section outlines our recovery design and explains

why we expect it to provide scalable recovery for the system. However, given

the complexity of the recovery problem and the early state of our implementation, continued research will be needed to fully understand scalable recovery.

5.1 Data Structure Recovery

Table II lists the data structures that storage servers, managers, and cleaners recover after a crash. For a systemwide reboot or widespread crash,

recovery proceeds from storage servers, to managers, and then to cleaners

because later levels depend on earlier ones. Because recovery depends on the

logs stored on the storage servers, xFS will be unable to continue if multiple

storage servers from a single stripe group are unreachable due to machine or

network failures. We plan to investigate using multiple parity fragments to

allow recovery when there are multiple failures within a stripe group [Blaum

et al. 1994]. Less widespread changes to xFS membership (such as when an
authorized machine asks to join the system, when a machine notifies the
system that it is withdrawing, or when a machine cannot be contacted
because of a crash or network failure) trigger similar reconfiguration steps.

For instance, if a single manager crashes, the system skips the steps to recover the storage servers, going directly to generate a new manager map that assigns the failed manager's duties to a new manager; the new manager then recovers the failed manager's disk metadata from the storage server logs using checkpoint and roll-forward, and it recovers its cache consistency state by polling clients.

ACM Transactions on Computer Systems, Vol. 14, No. 1, February 1996

Table II. Data Structures Restored During Recovery

                  Data Structure                Recovered From
Storage Server    Log Segments                  Local Data Structures
                  Stripe Group Map              Consensus
Manager           Manager Map                   Consensus
                  Disk Location Metadata        Checkpoint and Roll-Forward
                  Cache Consistency Metadata    Poll Clients
Cleaner           Segment Utilization           S-Files

Recovery occurs in the order listed from top to bottom because lower data structures depend on higher ones.
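The ordering rule above can be illustrated with a minimal sketch. The names `Phase`, `FULL_ORDER`, and `recovery_plan` are hypothetical and do not come from the xFS prototype. The sketch also assumes that every phase after the earliest affected one is rerun, which the text states only for the single-manager example.

```python
from enum import Enum


class Phase(Enum):
    STORAGE_SERVERS = 1
    MANAGERS = 2
    CLEANERS = 3


# Full recovery order: later phases depend on state restored by earlier ones.
FULL_ORDER = [Phase.STORAGE_SERVERS, Phase.MANAGERS, Phase.CLEANERS]


def recovery_plan(failed_roles):
    """Return the phases to run, starting at the earliest affected phase.

    failed_roles: set of Phase values naming the roles that crashed.
    Assumption of this sketch: all later phases are rerun as well.
    """
    if not failed_roles:
        return []
    first = min(failed_roles, key=lambda phase: phase.value)
    return [phase for phase in FULL_ORDER if phase.value >= first.value]
```

Under these assumptions, a lone manager crash yields a plan that skips `Phase.STORAGE_SERVERS`, mirroring the example in the text.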

5.1.1 Storage Server Recovery.

The segments stored on storage server

disks contain the logs needed to recover the rest of xFS’ data structures, so

the storage servers initiate recovery by restoring their internal data structures. When a storage server recovers, it regenerates its mapping of xFS

fragment IDs to the fragments’ physical disk addresses, rebuilds its map of

its local free disk space, and verifies checksums for fragments that it stored

near the time of the crash. Each storage server recovers this information

independently from a private checkpoint, so this stage can proceed in parallel

across all storage servers.
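A minimal sketch of this local step follows, under stated assumptions: the checkpoint layout, the `disk` dictionary, and the use of CRC-32 are all hypothetical stand-ins, since the text does not specify the on-disk format or the checksum algorithm.

```python
import zlib


def recover_storage_server(checkpoint, disk, recent_fragment_ids):
    """Rebuild one storage server's private state from its own checkpoint.

    checkpoint: dict with 'fragment_map' (fragment ID -> disk address) and
                'disk_size' (number of addressable slots); hypothetical layout.
    disk: dict disk address -> (payload bytes, stored checksum).
    recent_fragment_ids: fragments written near the time of the crash.
    """
    # Regenerate the mapping from fragment IDs to physical disk addresses.
    fragment_map = dict(checkpoint["fragment_map"])

    # Rebuild the free-space map as every address not used by a fragment.
    used = set(fragment_map.values())
    free_space = [addr for addr in range(checkpoint["disk_size"]) if addr not in used]

    # Verify checksums only for recently written fragments (CRC-32 is assumed).
    corrupt = []
    for fragment_id in recent_fragment_ids:
        payload, stored_checksum = disk[fragment_map[fragment_id]]
        if zlib.crc32(payload) != stored_checksum:
            corrupt.append(fragment_id)
    return fragment_map, free_space, corrupt
```

Because the function reads only the server's own checkpoint and disk, many servers could run it at the same time without coordination.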

Storage servers next regenerate their stripe group map. First, the storage

servers use a distributed consensus algorithm [Cristian 1991; Ricciardi and

Birman 1991; Schroeder et al. 1991] to determine group membership and to

elect a group leader. Each storage server then sends the leader a list of stripe

groups for which it stores segments, and the leader combines these lists to

form a list of groups where fragments are already stored (the obsolete stripe

groups). The leader then assigns each active storage server to a current stripe

group and distributes the resulting stripe group map to the storage servers.
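The leader's part of this procedure might look like the following sketch. It assumes that membership and leader election have already completed, that stripe group IDs are integers, and that servers are grouped in sorted order into groups of `group_size`; the function name and all three assumptions are illustrative rather than taken from the prototype.

```python
def rebuild_stripe_group_map(reports, active_servers, group_size):
    """Leader-side step, run after the consensus algorithm has finished.

    reports: dict server ID -> iterable of stripe group IDs for which that
             server stores segments.
    active_servers: list of server IDs in the agreed membership.
    group_size: servers per current stripe group (hypothetical parameter).
    Returns (obsolete_groups, current_groups).
    """
    # Combine the per-server lists into the set of obsolete stripe groups.
    obsolete = set()
    for groups in reports.values():
        obsolete.update(groups)

    # Assign each active server to exactly one current stripe group,
    # numbering new groups after the largest obsolete ID.
    next_id = max(obsolete, default=-1) + 1
    ordered = sorted(active_servers)
    current = {}
    for start in range(0, len(ordered), group_size):
        current[next_id] = ordered[start:start + group_size]
        next_id += 1
    return sorted(obsolete), current
```

In this sketch the last group may hold fewer than `group_size` servers when the membership does not divide evenly; a real policy would need to decide how to handle that case.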

5.1.2 Manager Recovery.

Once the storage servers have recovered, the

managers can recover their manager map, disk location metadata, and cache

consistency metadata. Manager map recovery uses a consensus algorithm as

described above for stripe group recovery. Cache consistency recovery relies

on server-driven polling [Baker 1994; Nelson et al. 1988]: a recovering

manager contacts the clients, and each client returns a list of the blocks that

it is caching or for which it has write ownership from that manager’s portion

of the index number space.
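As a rough illustration of server-driven polling, the sketch below rebuilds one manager's cache consistency state from client replies. The report format, the helper `manager_for`, and the modulo lookup into the manager map are assumptions made for the example; they stand in for whatever lookup the real manager map performs.

```python
def manager_for(index_number, manager_map):
    """Hypothetical lookup: the map is a list indexed by index number modulo its length."""
    return manager_map[index_number % len(manager_map)]


def rebuild_cache_state(manager_id, manager_map, client_reports):
    """Rebuild one manager's cache consistency state from polled clients.

    client_reports: dict client ID -> list of (index_number, block, owns_write)
                    tuples describing blocks the client caches or owns.
    Returns (cachers, owners) restricted to this manager's portion of the
    index number space.
    """
    cachers = {}  # (index_number, block) -> set of client IDs holding the block
    owners = {}   # (index_number, block) -> client ID with write ownership
    for client_id, blocks in client_reports.items():
        for index_number, block, owns_write in blocks:
            if manager_for(index_number, manager_map) != manager_id:
                continue  # this block belongs to another manager's portion
            key = (index_number, block)
            cachers.setdefault(key, set()).add(client_id)
            if owns_write:
                owners[key] = client_id
    return cachers, owners
```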

The remainder of this subsection describes how managers and clients work

together to recover the managers' disk location metadata: the distributed

imap and index nodes that provide pointers to the data blocks on disk. Like

LFS and Zebra, xFS recovers this data using a checkpoint and roll-forward mechanism. xFS distributes this disk metadata recovery to managers and clients so that each manager and client log written before the crash is assigned to one manager or client to read during recovery. Each manager reads the log containing the checkpoint for its portion of the index number space, and where possible, clients read the same logs that they wrote before the crash. This delegation occurs as part of the consensus process that generates the manager map.

The goal of manager checkpoints is to help managers recover their imaps from the logs. As Section 3.2.2 described, managers store copies of their imaps in files called ifiles. To help recover the imaps from the ifiles, managers periodically write checkpoints that contain lists of pointers to the disk storage locations of the ifiles' blocks. Because each checkpoint corresponds to the state of the ifile when the checkpoint is written, it also includes the positions of the clients' logs reflected by the checkpoint. Thus, once a manager reads a checkpoint during recovery, it knows the storage locations of the blocks of the ifile as they existed at the time of the checkpoint, and it knows where in the client logs to start reading to learn about more recent modifications. The main difference between xFS and Zebra or BSD LFS is that xFS has multiple managers, so each xFS manager writes its own checkpoint for its part of the index number space.

During recovery, managers read their checkpoints independently and in parallel. Each manager locates its checkpoint by first querying storage servers to locate the newest segment written to its log before the crash and then reading backward in the log until it finds the segment with the most recent checkpoint. Next, managers use this checkpoint to recover their portions of the imap. Although the managers' checkpoints were written at different times and therefore do not reflect a globally consistent view of the file system, the next phase of recovery, roll-forward, brings all of the managers' disk location metadata to a consistent state corresponding to the end of the clients' logs.
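The backward scan for a checkpoint can be sketched as follows. The in-memory log representation and the checkpoint fields `ifile_blocks` and `client_log_positions` are hypothetical names chosen to match the description above, not the prototype's actual format.

```python
def find_latest_checkpoint(log_segments):
    """Scan a manager's log from its newest segment backward.

    log_segments: list of dicts ordered oldest to newest; a segment that
                  holds a checkpoint stores it under the 'checkpoint' key.
    Returns (position, checkpoint), or None if no checkpoint exists.
    """
    for position in range(len(log_segments) - 1, -1, -1):
        checkpoint = log_segments[position].get("checkpoint")
        if checkpoint is not None:
            return position, checkpoint
    return None


def recover_imap_portion(log_segments):
    """Return the ifile block pointers and the client log positions to replay from."""
    found = find_latest_checkpoint(log_segments)
    if found is None:
        return None
    _, checkpoint = found
    return {
        "ifile_blocks": list(checkpoint["ifile_blocks"]),
        "replay_from": dict(checkpoint["client_log_positions"]),
    }
```

The `replay_from` positions are what a manager would hand to clients to begin the roll-forward phase.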

To account for changes that had not reached the managers' checkpoints, the system uses roll-forward, where clients use the deltas stored in their logs to replay actions that occurred later than the checkpoints. To initiate roll-forward, the managers use the log position information from their checkpoints to advise the clients of the earliest segments to scan. Each client locates the tail of its log by querying storage servers, and then it reads the log backward to locate the earliest segment needed by any manager. Each client then reads forward in its log, using the manager map to send the deltas to the appropriate managers. Managers use the deltas to update their imaps and index nodes as they do during normal operation; version numbers in the deltas allow managers to chronologically order different clients' modifications to the same files [Hartman and Ousterhout 1995].
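A simplified sketch of roll-forward appears below. It collapses the imap and index nodes into one dictionary per manager, routes deltas with a modulo lookup into the manager map, and invents the `Delta` record and its fields; all of these are assumptions for illustration only.

```python
from collections import namedtuple

# Hypothetical delta record: which block changed, its version, and its new location.
Delta = namedtuple("Delta", "index_number block version disk_address")


def roll_forward(manager_map, imaps, client_logs, start_positions):
    """Replay client deltas into the managers' disk location metadata.

    manager_map: list of manager IDs, indexed by index number modulo length.
    imaps: dict manager ID -> dict (index_number, block) -> (version, disk_address),
           already loaded from each manager's checkpoint.
    client_logs: dict client ID -> list of segments, each a list of Delta.
    start_positions: dict client ID -> earliest segment needed by any manager.
    """
    for client_id, log in client_logs.items():
        # Each client reads forward from the earliest segment any manager needs.
        for segment in log[start_positions.get(client_id, 0):]:
            for delta in segment:
                manager = manager_map[delta.index_number % len(manager_map)]
                key = (delta.index_number, delta.block)
                current = imaps[manager].get(key)
                # Version numbers order different clients' writes to the same
                # block, so replay order across clients does not matter.
                if current is None or delta.version > current[0]:
                    imaps[manager][key] = (delta.version, delta.disk_address)
    return imaps
```

Comparing version numbers rather than arrival order is what lets clients replay their logs in parallel in this sketch.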

5.1.3 Cleaner Recovery.

Clients checkpoint the segment utilization information needed by cleaners in standard xFS files, called s-files. Because these checkpoints are stored in standard files, they are automatically recovered by the storage server and manager phases of recovery. However, the s-files may not reflect the most recent changes to segment utilizations at the time of the crash, so s-file recovery also includes a roll-forward phase. Each client rolls forward the utilization state of the segments tracked in its s-files by asking the other clients for summaries of their modifications to those segments that are more recent than the s-file checkpoint. To avoid scanning their logs twice, clients gather this segment utilization summary information during the roll-forward phase for manager metadata.

5.2 Scalability of Recovery

Even with the parallelism provided by xFS’ approach to manager recovery,

future work will be needed to evaluate its scalability. Our design is based on

the observation that, while the procedures described above can require O(N^2) communications steps (where N refers to the number of clients, managers, or storage servers), each phase can proceed in parallel across N machines.
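The arithmetic behind this observation can be made concrete with a small sketch. The function name and the model, in which every sender contacts every receiver exactly once, are assumptions used only to show how the total and the per-machine counts differ.

```python
def communication_cost(num_senders, num_receivers):
    """Count messages when every sender contacts every receiver once.

    Returns (total_messages, messages_per_sender). When both counts grow
    with N, the total grows as N * N, but each sender issues only N
    messages, and all senders can work at the same time.
    """
    total_messages = num_senders * num_receivers
    messages_per_sender = num_receivers
    return total_messages, messages_per_sender
```

For example, `communication_cost(32, 32)` gives 1024 messages in total but only 32 per sender.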

For instance, to locate the tails of the system's logs, each manager and client queries each storage server group to locate the end of its log. While this can require a total of O(N^2) messages, each manager or client only needs to contact N storage server groups, and all of the managers and clients can proceed in parallel, provided that they take steps to avoid having many machines simultaneously contact the same storage server [Baker 1994]; we

plan to use randomization