EM-ONE: An Architecture for Reflective Commonsense ... Push Singh

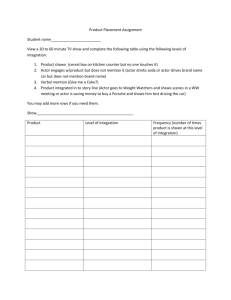
