Hierarchical Decomposition of Multi-agent


Markov Decision Processes with Application

to Health Aware Planning

by


Yu Fan Chen


B.A.Sc., Aerospace Engineering

University of Toronto (2012)


Submitted to the Department of Aeronautics and Astronautics

in partial fulfillment of the requirements for the degree of

Master of Science in Aerospace Engineering

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

September 2014

© Massachusetts Institute of Technology 2014. All rights reserved.

Author: Signature redacted
Department of Aeronautics and Astronautics
August 21, 2014

Certified by: Signature redacted
Jonathan P. How
Richard C. Maclaurin Professor of Aeronautics and Astronautics
Thesis Supervisor

Accepted by: Signature redacted
Paulo C. Lozano
Associate Professor of Aeronautics and Astronautics
Chair, Graduate Program Committee


Hierarchical Decomposition of Multi-agent

Markov Decision Processes with Application

to Health Aware Planning

by

Yu Fan Chen

Submitted to the Department of Aeronautics and Astronautics

on August 21, 2014, in partial fulfillment of the

requirements for the degree of

Master of Science in Aerospace Engineering

Abstract

Multi-agent robotic systems have attracted the interest of both researchers and practitioners because they provide more capabilities and afford greater flexibility than single-agent systems. Coordinating individual agents within large teams is often challenging because of the combinatorial nature of such problems. In particular, the number of possible joint configurations is the product of the numbers of configurations of the individual agents. Further, real-world applications often involve various sources of uncertainty. This thesis investigates techniques to address the scalability issue of multi-agent planning under uncertainty.

This thesis develops a novel hierarchical decomposition approach (HD-MMDP) for solving Multi-agent Markov Decision Processes (MMDPs), which provide a natural framework for formulating stochastic sequential decision-making problems. In particular, the HD-MMDP algorithm builds a decomposition structure by exploiting coupling relationships in the reward function. A number of smaller subproblems are formed and solved individually. The planning space of each subproblem is much smaller than that of the original problem, which improves computational efficiency, and the solutions to the subproblems can be combined to form a solution (policy) to the original problem. The HD-MMDP algorithm is applied to a ten-agent persistent search and track (PST) mission and shows more than 35% improvement over an existing algorithm developed specifically for this domain. This thesis also contributes to the development of the software infrastructure that enables hardware experiments involving multiple robots. In particular, the thesis presents a novel optimization-based multi-agent path planning algorithm, which was tested in simulation and in hardware (quadrotor) experiments. The HD-MMDP algorithm is also used to solve a multi-agent intruder monitoring mission implemented using real robots.

Thesis Supervisor: Jonathan P. How

Title: Richard C. Maclaurin Professor of Aeronautics and Astronautics


Acknowledgments

First and foremost, I would like to thank my advisor, Professor Jonathan How, for his

guidance and support over the past two years. Jon has the ability to accurately convey

arcane concepts through simple diagrams, and always tries to draw connections to

the big picture. Also, Jon is always adamant about making good slides, which helped

me profoundly to improve the quality of my talks.

I would also like to thank all members of the Aerospace Control Lab, whose

support is indispensable to my research. In particular, I am grateful to Mark Cutler for bearing with me as I flew quadrotors into the walls over and over again. He is a master of hardware and ROS, and is always willing to lend a hand. I would also like to thank Kemal Ure, with whom I worked closely on this project. He was always there to answer questions and provide feedback; his input has been essential to this

work. In addition, thanks to Jack Quindlen, Rob Grande, Sarah Ferguson, Chris

Lowe, and Bobby Klein, with whom I shared memories of many late night problem

sets and quals practice sessions. Also, special thanks to Beipeng Mu, for sharing the

cultural heritage; to Luke Johnson, for helping me in Portland; to Trevor Campbell,

for sharing the UofT spirit; and to Ali Agha, for his patience and generosity.

Finally, I would like to thank my wonderful parents. Thank you mom and dad,

for your unconditional support throughout the years of college and graduate school.

Also, I am grateful to my twin brother, whose presence always managed to confuse

and amuse my lab mates. To all my friends from UofT and MIT, thanks for being

there through the ups and downs.

This research has been funded by the Boeing Company. The author acknowledges

Boeing Research & Technology for support of the indoor flight facilities.


Contents

1 Introduction
1.1 Background and Motivation
1.2 Related Work
1.2.1 Dynamic Programming Approaches
1.2.2 Structural Decomposition Approaches
1.2.3 Optimization Based Approaches
1.3 Outline and Summary of Contributions
2 Preliminaries
2.1 Markov Decision Processes
2.2 Multi-agent Markov Decision Processes
2.3 Dynamic Programming Algorithms
2.4 Extensions to Markov Decision Processes
2.4.1 Partially Observable Markov Decision Processes
2.4.2 Decentralized Markov Decision Process (dec-MMDP)
2.5 Summary
3 Hierarchical Decomposition of MMDP
3.1 Structure of Reward Functions
3.1.1 Construction of Reward Functions
3.1.2 Factored Reward Function
3.1.3 Useful Functional Forms
3.2 Hierarchically Decomposed MMDP
3.2.1 Decomposition
3.2.2 Solving Leaf Tasks
3.2.3 Ranking of Leaf Tasks
3.2.4 Agent Assignment
3.2.5 Summary
3.3 Run Time Complexity Analysis
3.4 Summary
4 Application to Health Aware Planning
4.1 Persistent Search and Track Mission (PST)
4.1.1 Mission Description
4.1.2 Problem Formulation
4.1.3 Structural Decomposition
4.1.4 Empirical Analysis
4.1.5 Simulation Result
4.2 Intruder Monitoring Mission
4.2.1 Mission Description
4.2.2 Problem Formulation
4.2.3 Extension to HD-MMDP
4.2.4 Simulation Results
4.3 Summary
5 Experimental Setup
5.1 RAVEN Testbed
5.2 Autonomous Vehicles
5.3 Software Architecture
5.4 Path Planner
5.4.1 Potential Field
5.4.2 Sequential Convex Program
5.4.3 Incremental Sequential Convex Program
5.5 Intruder Monitoring Mission
5.6 Summary
6 Conclusions
6.1 Summary
6.2 Future Work
6.2.1 Optimization on Task Tree Structure
6.2.2 Formulation of Leaf Tasks
References

List of Figures

1-1 Applications of Multi-agent Systems
3-1 Construction of Multi-agent Reward Function
3-2 Two Agent Consensus Problem
3-3 Summation Reward Function
3-4 Multiplicative Reward Function
3-5 Example of Task Tree Structure
3-6 Solution to Leaf Tasks
3-7 Relative Importance of Leaf Tasks
3-8 Ranking of Leaf Tasks
3-9 Computation of Minimum Accepted Expected Reward
3-10 Assignment of Agents to Leaf Tasks
4-1 Persistent Search and Track Mission
4-2 Task Tree of Persistent Search and Track Mission
4-3 Three Agent PST Mission Simulation Result
4-4 Effect of Adopting Decomposition Structure
4-5 Pruning Solution Space
4-6 Simulation of Ten Agent PST Mission
4-7 Intruder Monitor Domain
4-8 Leaf Tasks in Intruder Monitoring Domain
4-9 Action Space of Intruder Monitoring Mission
4-10 State Transition Model of Intruder Monitoring Mission
4-11 Task Tree of Intruder Monitoring Mission
4-12 Simulation of Intruder Monitoring Mission
5-1 Robotic Vehicles and Experiment Environment
5-2 Schematic of RAVEN Testbed
5-3 Image Projection Capability
5-4 Autonomous Robotic Agents
5-5 Software Architecture
5-6 A Live Lock Configuration for Potential Field Algorithm
5-7 Typical Convergence Pattern of SCP
5-8 Modeling Corner Constraint
5-9 Convergence Pattern Around a Corner
5-10 Convergence Pattern for Multi-agent System
5-11 Multi-agent Path Planning Test Cases
5-12 Hardware Demonstration of iSCP Algorithm
5-13 Hardware Demonstration of Intruder Monitoring Mission

List of Tables

3.1 Local Optimal Policies can be Jointly Suboptimal
3.2 Run Time Complexity of HD-MMDP
4.1 Computational Time of Value Iteration on PST Mission

Chapter 1

Introduction

1.1 Background and Motivation

Multi-agent systems arise in many applications of interest. In the manufacturing domain, efficient resource allocation [17, 58] among a group of robotic agents is crucial for maximizing production output. Similarly, in military applications, intelligent organization of multiple unmanned vehicles [46, 18, 55] is critical for surveillance and reconnaissance missions. Also, in the network management domain, smooth coordination of multiple servers [12, 51] is essential for maximizing network throughput. In each of these scenarios, the objective is to determine an action for each agent that optimizes a joint performance metric for the team.

In addition, uncertainty arises naturally from various sources in such planning problems. In the manufacturing domain, process and sensor noise affect the robots' performance. In surveillance and reconnaissance missions, the behavior patterns of targets are often uncertain and can only be modeled probabilistically. In network management, servers may fail due to internal flaws (software bugs) or malicious attacks from external sources, both of which can be modeled as stochastic events. Thus, these applications can be framed as multi-agent stochastic sequential decision problems, for which a major challenge is hedging against future uncertainty.

Markov Decision Processes (MDP) is a mathematical framework for stochastic sequential decision problems. In particular, given a probabilistic transition model, the

13

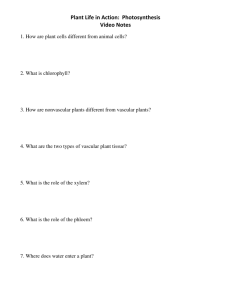

Figure 1-1: Applications of Multi-agent Systems. Left sub-figure shows multiple

robotic agents in a manufacturing setting; middle sub-figure shows a multi-vehicle

surveillance mission; right sub-figure shows a computer network.

goal is to find a policy that maximizes long term reward. A policy is a framework for

actions, which selects an appropriate action for each possible state of the system. A

naive approach is to develop a policy for each agent independently. However, explicit

coordination is desired when (i) a task is inherently multi-agent, such as transportation of a large object with combined effort, and (ii) a task is highly stochastic, such

that each agent should plan for contingencies in case of degraded capabilities of its

teammates. Another approach that explicitly account for coordination among a group

of agents is to plan in the joint spaces. However, given the combinatorial nature of

a multi-agent system, the joint planning space scales exponentially in the number

of agents. In a simple surveillance domain, if the physical space is partitioned into

100 regions, each representing a possible state of a vehicle, a team comprised of ten

vehicles would have 10010 joint states. It is computationally prohibitive to enumerate

and iterate over such enormous planning spaces.
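The arithmetic above can be checked with a short Python sketch. It adopts the assumption of the surveillance example: each vehicle independently occupies one of 100 regions, so the joint state space is a Cartesian product. The function name `joint_state_count` is illustrative only.

```python
def joint_state_count(states_per_agent: int, n_agents: int) -> int:
    """Joint states when every agent independently occupies one of
    `states_per_agent` states, i.e. the size of a Cartesian product."""
    return states_per_agent ** n_agents


if __name__ == "__main__":
    # 100 regions per vehicle, as in the surveillance example
    for n in (1, 2, 5, 10):
        print(f"{n:2d} vehicle(s): {joint_state_count(100, n):.1e} joint states")
```

At one byte per state, a table over the $10^{20}$ joint states of ten vehicles would already occupy about 100 exabytes. This rules out plain tabular enumeration.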

In short, multi-agent planning problems have great practical value but are technically challenging because of (i) the need to model agent-level and environmental uncertainties, and (ii) the exponential growth of the planning space as the number of agents increases. The primary objective of this thesis is to develop an algorithm that can handle uncertainty models and scales favorably with the number of agents. The proposed algorithm automatically identifies a decomposition structure from the reward function, and attains computational tractability by exploiting this decomposition structure.


1.2 Related Work

Researchers have previously formulated multi-agent sequential decision problems as Markov Decision Processes (MDP) [42] and Multi-agent Markov Decision Processes (MMDP) [10]. Although an optimal policy can in theory be computed via dynamic programming (DP) [5], the exponential growth of joint planning spaces has made such approaches impractical to implement. Instead, researchers have developed various generic methods (Section 1.2.1) for solving MDPs approximately at lower computational cost. In addition, researchers have studied various structural decomposition methods (Section 1.2.2) to lower computational complexity. Further, researchers have considered solving multi-agent problems by formulating them as mathematical programs (Section 1.2.3). Precise definitions of MDP and MMDP, as well as detailed descriptions of classical DP methods, are presented in the next chapter.

1.2.1 Dynamic Programming Approaches

Asynchronous Dynamic Programming

Classical DP methods maintain a tabular value function with an entry for every state, and perform a Bellman update once for every state per iteration. Asynchronous DP [7, 35] is a class of DP algorithms that performs Bellman updates at different frequencies for different parts of the state space. The key idea is that some parts of the state space are more likely to generate a favorable policy, and it is therefore desirable to focus computation on those parts of the state space.

In the Prioritized Sweeping algorithm [39], the residual (the difference between successive Bellman updates) is used as a metric to determine the best set of states for future updates. LAO* [27] keeps track of all previously explored states, and gradually expands this set if a Bellman update suggests that an unexplored state could be useful. Real-time dynamic programming (RTDP) [50, 9] simulates trajectories (Monte Carlo simulation) through the state space and performs Bellman updates on the explored trajectories.
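The residual-driven idea behind Prioritized Sweeping can be illustrated with a simplified, model-based Python sketch. It is not the exact algorithm of [39], which also learns a model from experience. The sketch assumes a known tabular MDP given as hypothetical dictionaries `transitions[s][a] = [(prob, next_state), ...]` and `rewards[s][a]`. It always backs up the state with the largest Bellman residual, and afterwards re-checks only that state's predecessors, since they are the only states whose residuals can change.

```python
import heapq
from collections import defaultdict


def bellman_backup(V, s, transitions, rewards, gamma):
    """max_a [ R(s, a) + gamma * sum_{s'} P(s' | s, a) V(s') ]"""
    return max(
        rewards[s][a] + gamma * sum(p * V[s2] for p, s2 in outcomes)
        for a, outcomes in transitions[s].items()
    )


def prioritized_value_iteration(transitions, rewards, gamma=0.95, tol=1e-8, max_updates=100_000):
    V = {s: 0.0 for s in transitions}

    # predecessors[s2]: states that can reach s2 in one step
    predecessors = defaultdict(set)
    for s, actions in transitions.items():
        for outcomes in actions.values():
            for p, s2 in outcomes:
                if p > 0:
                    predecessors[s2].add(s)

    def residual(s):
        return abs(bellman_backup(V, s, transitions, rewards, gamma) - V[s])

    # heapq is a min-heap, so priorities are stored as negative residuals
    heap = [(-residual(s), s) for s in transitions if residual(s) > tol]
    heapq.heapify(heap)

    updates = 0
    while heap and updates < max_updates:
        _, s = heapq.heappop(heap)
        new_value = bellman_backup(V, s, transitions, rewards, gamma)
        if abs(new_value - V[s]) <= tol:
            continue  # stale entry: s is already up to date
        V[s] = new_value
        updates += 1
        # Only predecessors of s can see their residual change
        for sp in predecessors[s]:
            r = residual(sp)
            if r > tol:
                heapq.heappush(heap, (-r, sp))
    return V, updates


if __name__ == "__main__":
    # Hypothetical 3-state chain 0 -> 1 -> 2 with reward 1 for leaving state 1
    transitions = {
        0: {"stay": [(1.0, 0)], "go": [(1.0, 1)]},
        1: {"stay": [(1.0, 1)], "go": [(1.0, 2)]},
        2: {"stay": [(1.0, 2)]},
    }
    rewards = {0: {"stay": 0.0, "go": 0.0}, 1: {"stay": 0.0, "go": 1.0}, 2: {"stay": 0.0}}
    print(prioritized_value_iteration(transitions, rewards, gamma=0.9))
```

On this chain, only two backups are needed, whereas a synchronous sweep would touch every state on every iteration.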

Asynchronous DP has been shown to be very effective in planning problems with a well-defined initiation set and termination set; that is, the goal is to find a policy that can drive the system from one part of the state space to another. For instance, a stochastic path planning problem requires finding a policy that guides a vehicle from a starting position to a goal position. In such problems, the algorithm can focus on the parts of the state space "connecting" the initiation set and the termination set. However, in persistent planning domains where initiation and termination sets are not specified, such as reconnaissance missions, it is difficult to determine which states are more important than others. Further, this class of algorithms still requires explicit enumeration of a substantial portion of the joint planning space, which can be impractical for multi-agent applications.

Approximate Dynamic Programming

Approximate DP [14, 22] is a class of DP algorithms that uses feature vectors to aggregate states and thereby lower computational complexity. In particular, given a set of feature vectors $\phi_i(s)$, the value function can be approximated as $V(s) \approx \sum_i w_i \phi_i(s)$. These algorithms attempt to find the set of weights $w_i$ that best approximates the true value function. Since the number of features $|\Phi|$ is typically much smaller than the number of states $|S|$, approximate DP achieves significant computational savings compared to classical DP.

Unsurprisingly, since $|\Phi| \ll |S|$, the representation power of the feature space (linear combinations of feature vectors) is less than that of tabular enumeration. Thus, various error metrics have been proposed for the feature representation of the true value function. Minimizing different error metrics leads to different approximate Bellman update steps, and hence to different algorithms. For instance, Least-Squares Temporal Difference (LSTD) [13] is a popular approximate DP algorithm that minimizes a projection error under the Bellman update. Approximate Value Iteration and Approximate Policy Iteration are other examples of this class of algorithms. GA-dec-MMDP [44] is an algorithm developed specifically for multi-agent systems which, given a specified set of feature vectors, allows each agent to model the rest of the team in an approximate, aggregate manner.
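The linear architecture $V(s) \approx \sum_i w_i \phi_i(s)$ and LSTD can be made concrete with the Python sketch below. It implements LSTD(0) for evaluating a fixed policy from sampled transitions $(s, r, s')$. The sketch accumulates $A = \sum \phi(s)(\phi(s) - \gamma \phi(s'))^\top$ and $b = \sum \phi(s)\, r$, then solves $A w = b$. It assumes NumPy, a user-supplied feature function, and a small ridge term `reg`, which is an addition for numerical safety. The policy-improvement step of a full control algorithm is omitted.

```python
import numpy as np


def lstd(samples, features, gamma, reg=1e-6):
    """LSTD(0) evaluation of the policy that generated `samples`.

    samples  : list of (s, r, s_next) transitions
    features : maps a state to a 1-D NumPy feature vector phi(s)
    Returns w such that V(s) ~= w @ features(s).
    """
    k = len(features(samples[0][0]))
    A = reg * np.eye(k)  # small ridge term keeps A invertible
    b = np.zeros(k)
    for s, r, s_next in samples:
        phi, phi_next = features(s), features(s_next)
        A += np.outer(phi, phi - gamma * phi_next)
        b += r * phi
    return np.linalg.solve(A, b)


def one_hot(s):
    """Tabular features for a hypothetical 3-state problem."""
    return np.eye(3)[s]


if __name__ == "__main__":
    # Hypothetical 3-state cycle 0 -> 1 -> 2 -> 0, reward 1 on leaving state 0
    samples = [(0, 1.0, 1), (1, 0.0, 2), (2, 0.0, 0)]
    print(lstd(samples, one_hot, gamma=0.5))
```

With one-hot (tabular) features, LSTD recovers the exact values $(8/7, 2/7, 4/7)$ up to the ridge term, which makes a useful sanity check. A single constant feature instead aggregates all three states and returns their average value, 2/3. This illustrates the loss of resolution discussed above.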

The key idea of approximate DP is that many states are similar. For instance, in a path planning domain, one feature vector could lump together all states near obstacles, so that one Bellman update step changes the values of all related states simultaneously. However, specifying a good set of feature vectors usually requires extensive domain knowledge.

1.2.2 Structural Decomposition Approaches

Structural decomposition methods [26] decompose a planning problem into a number of subproblems with smaller planning spaces. Each subproblem is then solved individually to compute a local policy. Lastly, the local policies are combined to generate a policy for the original problem.

Serial Decomposition

In serial decomposition, the planning space is partitioned into smaller sets, and a subproblem (i.e., an MDP) is formulated around each set. The size of the original problem is the sum of the sizes of the subproblems; that is, the planning space of the original problem is the union of those of the subproblems. At each decision period, serial decomposition identifies one active subproblem [29] and follows that subproblem's local policy.

Consider a stochastic path planning problem inside a building that has multiple rooms connected by hallways and corridors. A serial decomposition algorithm would decompose the physical space into individual rooms and hallways. Local policies can be computed inside each room to drive the vehicle towards a door, which connects the room to a hallway or to other rooms. A policy for the original problem can be found by first identifying the room in which the vehicle currently resides, and then following that room's local policy. A popular serial decomposition algorithm is MAXQ by Dietterich et al. [21].
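The building example can be sketched in Python as follows. The floor plan, room labels, door and goal cells are all hypothetical. Moves are deterministic for brevity, whereas the problem in the text is stochastic. Each room's local policy is computed by breadth-first search toward that room's exit. The global policy first identifies the active room (subproblem) and then follows that room's local policy. The sketch illustrates serial decomposition in general; it is not MAXQ.

```python
from collections import deque

# Hypothetical floor plan: rooms 'A' and 'B', walls '#', door 'D' joining them
LAYOUT = ["AAA#BBB",
          "AAADBBB",
          "AAA#BBB"]
DOOR, GOAL = (1, 3), (2, 6)
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def cells(label):
    return {(r, c) for r, row in enumerate(LAYOUT) for c, ch in enumerate(row) if ch == label}


def local_policy(region, target):
    """Solve one subproblem: BFS from `target` within `region`, then point each
    cell toward a neighbor that is one step closer to the target."""
    dist, queue = {target: 0}, deque([target])
    while queue:
        r, c = queue.popleft()
        for dr, dc in MOVES.values():
            nb = (r + dr, c + dc)
            if nb in region and nb not in dist:
                dist[nb] = dist[(r, c)] + 1
                queue.append(nb)
    policy = {}
    for (r, c), d in dist.items():
        for name, (dr, dc) in MOVES.items():
            if d > 0 and dist.get((r + dr, c + dc)) == d - 1:
                policy[(r, c)] = name
                break
    return policy


# Room A's subproblem exits through the door; room B (which owns the door) leads to the goal
ROOM_OF = {**{cell: "A" for cell in cells("A")},
           **{cell: "B" for cell in cells("B") | {DOOR}}}
LOCAL = {"A": local_policy(cells("A") | {DOOR}, DOOR),
         "B": local_policy(cells("B") | {DOOR}, GOAL)}


def global_action(cell):
    """Identify the active subproblem, then follow its local policy."""
    return LOCAL[ROOM_OF[cell]][cell]


def run(start, max_steps=50):
    cell, steps = start, 0
    while cell != GOAL and steps < max_steps:
        dr, dc = MOVES[global_action(cell)]
        cell, steps = (cell[0] + dr, cell[1] + dc), steps + 1
    return cell, steps


if __name__ == "__main__":
    print(run((0, 0)))
```

Each local search touches only one room's cells, which is the source of the savings. The global behavior, however, relies on a good choice of partition and exits, and this choice is the domain knowledge the next paragraph refers to.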

Serial decomposition works well when good heuristics exist for partitioning the planning space. It also usually assumes infrequent transitions among different partitions. Thus, these algorithms typically require domain knowledge. More importantly, serial decomposition still requires explicit enumeration of the entire state space, which can be impractical for large-scale multi-agent systems.

Parallel Decomposition

In parallel decomposition, the joint planning space is decomposed along different dimensions. For instance, a vehicle in a surveillance mission can have one dimension specifying its geographical location and another specifying its operational health status. A subproblem is formulated for each dimension (or subset of dimensions). The size of the original problem is the product of the sizes of the subproblems; that is, the planning space of the original problem is the Cartesian product of those of the subproblems. At each decision period, multiple subproblems are active, and their local policies are combined to form a policy for the original problem.

It is worth noting that adding an agent is equivalent to adding a dimension to a planning problem (see the next chapter for details). Thus, parallel decomposition methods have been widely applied to multi-agent systems. For instance, the coordination graph approach [25, 32] assumes that different subsets of agents are coupled through locally shared value functions. Subproblems are formed around each subset of connected agents, and a policy is computed by maximizing the sum of the subproblems' value functions. Other examples include Large Weakly Coupled MDPs [38], Factored MDPs [24], Concurrent MDPs [37], and Dynamically Merged MDPs [49].
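Joint action selection in the coordination-graph style can be sketched in Python as below. The team's payoff is the sum of local payoff functions, each defined on a pair of connected agents, and the sketch selects the joint action that maximizes this sum. The agents, actions, and payoffs are hypothetical. For clarity, the sketch enumerates every joint action, which is itself exponential in the number of agents. Practical coordination-graph methods instead exploit the graph structure, for example through variable elimination or max-plus message passing.

```python
from itertools import product


def best_joint_action(actions, local_payoffs):
    """Exhaustively maximize the sum of local payoffs over a coordination graph.

    actions       : {agent: [available actions]}
    local_payoffs : {(i, j): f(a_i, a_j) -> float}, one entry per graph edge
    """
    agents = sorted(actions)
    best, best_value = None, float("-inf")
    for joint in product(*(actions[i] for i in agents)):
        a = dict(zip(agents, joint))
        value = sum(f(a[i], a[j]) for (i, j), f in local_payoffs.items())
        if value > best_value:
            best, best_value = a, value
    return best, best_value


if __name__ == "__main__":
    # Hypothetical chain graph 0 - 1 - 2: neighbors are rewarded for agreeing,
    # and edge (0, 1) also carries a small bonus when agent 0 picks "right".
    actions = {0: ["left", "right"], 1: ["left", "right"], 2: ["left", "right"]}
    payoffs = {
        (0, 1): lambda a0, a1: float(a0 == a1) + 0.5 * (a0 == "right"),
        (1, 2): lambda a1, a2: float(a1 == a2),
    }
    print(best_joint_action(actions, payoffs))
```

The additive coupling assumed here (a sum over edges) is exactly the kind of simple coupling form mentioned in the next paragraph.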

Parallel decomposition methods require a user-specified decomposition structure, which relies on domain knowledge. Also, coupling between subproblems is assumed to take simple forms, such as the addition of local value functions or reward functions.

1.2.3 Optimization Based Approaches

The previous two sections described solution approaches in the MDP framework.

In the optimization community, researchers have studied task allocation problems

for multi-agent systems [28, 2]. In particular, the objective is to optimize a joint

performance metric given individual scoring functions [30]:

$$\max_{x} \sum_{i=1}^{N_a} \sum_{j=1}^{N_t} F_{ij}(x, \tau)\, x_{ij} \tag{1.1}$$

where $F_{ij}(x, \tau)$ is the scoring function specifying the reward of agent $i$ performing task $j$ at time $\tau$, the $x_{ij}$ are binary decision variables, and $N_a$ and $N_t$ are the numbers of agents and tasks, respectively. The optimization problem can also be augmented with various constraints. Examples of algorithms that solve this optimization problem approximately include sequential greedy algorithms and auction-based algorithms. Since this class of algorithms avoids enumerating and iterating through the problem's planning space, it scales favorably with the number of agents (compared to MDP approaches).
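A sequential greedy heuristic for Eqn. 1.1 can be sketched in Python under simplifying assumptions. The scores are static: the time argument $\tau$ and the dependence on other assignments are dropped. Each agent takes at most one task, and each task is taken by at most one agent. At each step, the sketch assigns the remaining agent-task pair with the highest score. The function names are illustrative.

```python
def sequential_greedy(scores):
    """Greedy approximation to the assignment problem in Eqn. 1.1.

    scores[i][j] : static reward of agent i performing task j
    Returns {agent: task}; each agent and each task is used at most once.
    """
    free_agents = set(range(len(scores)))
    free_tasks = set(range(len(scores[0]))) if scores else set()
    assignment = {}
    while free_agents and free_tasks:
        # Pick the best remaining (agent, task) pair, then remove both
        i, j = max(((i, j) for i in free_agents for j in free_tasks),
                   key=lambda pair: scores[pair[0]][pair[1]])
        assignment[i] = j
        free_agents.discard(i)
        free_tasks.discard(j)
    return assignment


def total_score(scores, assignment):
    return sum(scores[i][j] for i, j in assignment.items())


if __name__ == "__main__":
    easy = [[5, 1, 2], [4, 3, 1]]
    hard = [[10, 9], [9, 1]]
    for scores in (easy, hard):
        a = sequential_greedy(scores)
        print(a, total_score(scores, a))
```

The second score matrix shows why such heuristics are approximate. Greedily taking the single best pair (score 10) forces a total of 11, whereas assigning agent 0 to task 1 and agent 1 to task 0 scores 18.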

Optimization-based algorithms were originally developed for deterministic systems and have recently been extended to incorporate some aspects of uncertainty models. For instance, Campbell et al. [15] used agent-level MDPs to compute the scoring function $F_{ij}$. However, the structure of each task must be specified by the user, which relies on domain knowledge. Further, Eqn. 1.1 explicitly assumes additive utility across agents.

1.3 Outline and Summary of Contributions

This thesis proposes a novel hierarchical decomposition algorithm, which decomposes

a multi-agent planning problem into a hierarchy of tasks by exploiting the structure

of the reward function. The reward function often reveals different degrees of coupling between agents, and can thus guide the decomposition procedure. Smaller planning problems are formed around the tasks at the bottom of the hierarchy. These subproblems have state spaces that are orders of magnitude smaller than that of the original problem, thus allowing for computational tractability. Lastly, the algorithm combines the solutions of the subproblems to construct a policy for the original problem.

The proposed algorithm is shown to achieve better performance at lower computational cost in a persistent surveillance mission. The algorithm has also been applied to other multi-agent planning domains with more complex coupling relations. In addition to simulation, the algorithm has been run on real robots to demonstrate that it is real-time implementable.

* Chapter 2 presents the preliminaries for modeling multi-agent systems under uncertainty. It reviews the formulation of Markov Decision Processes (MDP) and Multi-agent Markov Decision Processes (MMDP), and describes the mechanics of value iteration (a dynamic programming algorithm) for solving MDPs and MMDPs.

* Chapter 3 proposes the Hierarchically Decomposed Multi-agent Markov Decision Processes (HD-MMDP) algorithm for solving MMDPs [16]. It motivates the solution approach by examining different functional structures in an MMDP's reward function. Then, it describes the mechanics of the HD-MMDP algorithm for generating an approximate solution to multi-agent planning problems.

* Chapter 4 describes a persistent search and track (PST) mission and applies HD-MMDP to solve this planning problem. Then, it presents an empirical analysis of HD-MMDP. On the ten-agent PST domain, simulation results show that HD-MMDP outperforms the GA-dec-MMDP algorithm by more than 35%. HD-MMDP is also applied to another planning problem, the intruder monitoring mission (IMM), and demonstrates sustainable performance. Lastly, several extensions to the HD-MMDP algorithm are proposed.

* Chapter 5 presents the hardware setup of the Real-time indoor Autonomous Vehicles ENvironment (RAVEN). It also describes a flexible software architecture that enables hardware experiments involving multiple robots. A novel multi-agent path planning algorithm is proposed and implemented in the RAVEN testbed. A hardware implementation of the intruder monitoring mission is presented to demonstrate that HD-MMDP is real-time implementable.

* Chapter 6 offers concluding remarks and suggests possible directions for future work.


Chapter 2

Preliminaries

This chapter presents preliminaries for Markov Decision Processes, Multi-agent Markov

Decision Processes, and Dynamic Programming solution approaches.

2.1 Markov Decision Processes

A Markov Decision Process (MDP) [42] is a flexible mathematical framework for formulating stochastic, sequential decision problems. MDPs were originally developed for discrete systems, where the numbers of states and actions are finite. Recent work has extended the MDP formulation to continuous systems; this thesis focuses on discrete MDPs. Mathematically, an MDP is defined by a tuple $(S, A, P, R, \gamma)$, whose elements are the state space, action space, state transition function, reward function, and discount factor, respectively.

* State Space: $S$

The state space is the set of all possible states of a system. For instance, if a room is partitioned into a 5 × 5 grid, there are 25 possible states, each of which uniquely identifies a configuration of the system. For some systems, a state may comprise multiple dimensions. Consider a vehicle navigating in the 5 × 5 grid. In addition to a location dimension, its state may include a battery-level dimension, discretized to the nearest percentage. The state space of the system is then the Cartesian product of both dimensions, and its size is the product of the sizes of the dimensions, which is 25 × 100 = 2500 in the above example. Therefore, the state space of the system grows exponentially with the addition of new dimensions, a phenomenon referred to in the literature as the curse of dimensionality.

* Action Space: $A$

The action space is the set of all actions that a system can take. For instance, a vehicle navigating in a 5 × 5 grid must choose among {forward, backward, left, right}. In addition, the set of permissible actions may depend on the state of the system; for example, the vehicle cannot move across a physical boundary.

* State Transition Function: $P$

The state transition function specifies the probability of reaching state $s'$ by taking action $a$ at state $s$, that is, $P : S \times A \times S \to [0, 1]$. The state transition function reflects the Markovian property of the problem: the distribution of future states depends solely on the system's current state and action, and is independent of the system's past state trajectory. It also reflects the degree of stochasticity in the system. A vehicle navigating in the 5 × 5 grid may be a stochastic system if the floor is slippery. In particular, a vehicle driving straight may have a 90% probability of reaching its intended location in front, and a 10% probability of ending up in an adjacent location on the left or right due to the slippery conditions.

* Reward Function: $R$

The reward function maps each state-action pair to a scalar value, that is, $R : S \times A \to \mathbb{R}$. Intuitively, the reward function is designed to favor a set of desired states. For instance, a vehicle navigating in the 5 × 5 grid may assign a high reward to its goal position, thereby favoring a policy that drives the vehicle toward its goal. This thesis assumes that the objective is to generate a policy that maximizes expected reward. Alternatively, in some scenarios it is more natural to think in terms of cost, in which case the objective is to minimize expected cost.

* Discount Factor: $\gamma$

The discount factor is a scalar in the range (0, 1). Intuitively, it specifies that reward in the distant future is less valuable than reward received now. It also has important theoretical implications; in particular, it keeps the infinite-horizon value function defined below finite when rewards are bounded.

* Policy: $\pi$

A deterministic policy $\pi$ is a function that maps each state to an action, that is, $\pi : S \to A$. Solving an MDP is the process of computing a policy that maximizes the expected discounted cumulative reward, which is called the value function. For an infinite-horizon MDP, the value function is defined as

$$V^{\pi}(s) = \mathbb{E}\left[\sum_{t=0}^{\infty} \gamma^{t} R\big(s_t, \pi(s_t)\big) \,\middle|\, s_0 = s\right] \tag{2.1}$$

The discount factor must be less than one; otherwise, the value function may diverge. Theoretical results show that an infinite-horizon discounted MDP admits an optimal policy that is deterministic and stationary.
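The definitions above can be tied together in a small Python sketch of the slippery 5 × 5 grid. The sketch makes several assumptions: NumPy is available, "forward" means moving up one row, and the 10% slip probability is split evenly (5% each) between the two sideways cells. It further assumes that bumping into a boundary leaves the vehicle in place, and it places a hypothetical goal cell with reward 1. For a fixed policy, the value function of Eqn. 2.1 is the unique solution of the linear system $(I - \gamma P_\pi) V^\pi = R_\pi$, which the sketch solves directly.

```python
import numpy as np

N = 5                                  # 5 x 5 grid from the text
GAMMA = 0.9                            # assumed discount factor
GOAL = (4, 4)                          # hypothetical goal cell (reward 1)
ACTIONS = {"forward": (-1, 0), "backward": (1, 0), "left": (0, -1), "right": (0, 1)}
NAMES = list(ACTIONS)


def idx(r, c):
    return r * N + c


def move(r, c, dr, dc):
    """One-cell move; hitting the boundary leaves the vehicle in place."""
    nr, nc = r + dr, c + dc
    return (nr, nc) if 0 <= nr < N and 0 <= nc < N else (r, c)


def build_mdp(p_intended=0.9):
    """Return P[s, a, s'] and R[s, a] for the slippery grid."""
    P = np.zeros((N * N, len(NAMES), N * N))
    R = np.zeros((N * N, len(NAMES)))
    for r in range(N):
        for c in range(N):
            for k, name in enumerate(NAMES):
                dr, dc = ACTIONS[name]
                slip = (1.0 - p_intended) / 2      # assumed even sideways split
                outcomes = [((dr, dc), p_intended), ((dc, dr), slip), ((-dc, -dr), slip)]
                for (mr, mc), p in outcomes:
                    P[idx(r, c), k, idx(*move(r, c, mr, mc))] += p
                R[idx(r, c), k] = 1.0 if (r, c) == GOAL else 0.0
    return P, R


def evaluate_policy(policy, P, R, gamma=GAMMA):
    """Solve (I - gamma * P_pi) V = R_pi, i.e. Eqn. 2.1 for a fixed policy."""
    n = P.shape[0]
    P_pi = P[np.arange(n), policy]     # row s is P(. | s, pi(s))
    R_pi = R[np.arange(n), policy]
    return np.linalg.solve(np.eye(n) - gamma * P_pi, R_pi)


if __name__ == "__main__":
    P, R = build_mdp()
    # Hypothetical policy: drive right to the last column, then backward (down)
    policy = np.array([NAMES.index("right") if c < N - 1 else NAMES.index("backward")
                       for r in range(N) for c in range(N)])
    print(evaluate_policy(policy, P, R).reshape(N, N).round(2))
```

Solving the linear system exactly is practical only because this grid has 25 states. The same approach on a joint multi-agent space would face the exponential growth discussed in the next section.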

2.2 Multi-agent Markov Decision Processes

A Multi-agent Markov Decision Process (MMDP) [11] applies the MDP framework to model multi-agent systems. In particular, an MMDP is defined by a tuple $(n_a, S, A, P, R, \gamma)$, where $n_a$ is the number of agents and the other elements are defined as for an MDP.

According to the original definition by Boutilier, an MMDP is distinguished from an MDP by its assumption of a factored action space. Researchers often associate two other assumptions with MMDPs: a factored state space and transition independence. In this thesis, all three conditions are assumed to hold.

• Factored Action Space

The joint action space is assumed to be the Cartesian product of the action spaces of the individual agents, that is, $A = A_1 \times A_2 \times \cdots \times A_{n_a}$. This condition implies that each agent's action space remains unchanged in the presence of its teammates, and that the joint action is specified by the actions of all agents.

• Factored State Space

The joint state space is assumed to be the Cartesian product of the state spaces of the individual agents, that is, $S = S_1 \times S_2 \times \cdots \times S_{n_a}$. This condition implies that each agent's state space remains unchanged in the presence of its teammates, and that the joint state is specified by the states of all agents. The factored state and action space assumptions reflect the view that each agent remains a distinct entity.

• Transition Independence

The joint transition function is assumed to be the product of the transition functions of the individual agents, that is, $P = P_1 \times P_2 \times \cdots \times P_{n_a}$, so that $P(s, a, s') = \prod_{j=1}^{n_a} P_j(s_j, a_j, s'_j)$. This condition implies that each agent's transition dynamics are a function of only its own state and action, and are independent of its teammates' states and actions [4]. Low-level interactions between agents, such as interference due to occupying the same state, are not explicitly modeled under this condition.
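Under factored state and action spaces and transition independence, the joint transition probability is simply the product of the agents' individual probabilities. The sketch below assumes two hypothetical agents that each follow a three-cell slippery corridor model (action 0 stays, action 1 moves right with a 10% slip); it enumerates the joint spaces with `itertools.product` and checks that the factored transition function is a valid probability distribution.

```python
import itertools
import numpy as np

def corridor(n_states=3, slip=0.1):
    """Hypothetical single-agent model: action 0 stays, action 1 moves right."""
    P = np.zeros((n_states, 2, n_states))
    for s in range(n_states):
        P[s, 0, s] = 1.0
        P[s, 1, min(s + 1, n_states - 1)] += 1.0 - slip
        P[s, 1, s] += slip
    return P

def joint_transition(P_list, s, a, s_next):
    """Transition independence: P(s, a, s') = prod_j P_j(s_j, a_j, s'_j)."""
    prob = 1.0
    for P_j, s_j, a_j, sn_j in zip(P_list, s, a, s_next):
        prob *= P_j[s_j, a_j, sn_j]
    return prob

P_list = [corridor(), corridor()]                       # one model per agent
S_joint = list(itertools.product(range(3), repeat=2))   # S = S_1 x S_2
A_joint = list(itertools.product(range(2), repeat=2))   # A = A_1 x A_2
total = sum(joint_transition(P_list, (0, 1), (1, 1), sn) for sn in S_joint)
print(len(S_joint), len(A_joint), round(total, 12))
```

Note that the joint spaces are never stored as one large table here; only the per-agent models are kept, which is exactly what transition independence permits.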

Compared with the MDP formulation, adding an agent to an MMDP has an effect similar to adding a dimension to an MDP. The phrase planning spaces is used to refer to the state and action spaces. Because the joint spaces are Cartesian products, the size of the planning spaces scales exponentially with the number of agents; for example, $n_a$ agents, each with 25 states, yield $25^{n_a}$ joint states.

2.3 Dynamic Programming Algorithms

Dynamic Programming (DP) [7] is a class of algorithms that solves optimization problems by breaking the problem down into a number of overlapping, simpler subproblems. The subproblems are solved in stages, and the solution to each subproblem is stored in memory and reused when solving later subproblems.

In the context of MDPs, dynamic programming exploits the Markovian property of the problem: the best action to take at a particular state is independent of how the system reached that state. Thus, the original problem of finding the

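Algorithm 1: Value Iteration

Input: MDP $(S, A, P, \mathcal{R}, \gamma)$, tolerance $\epsilon$

Output: policy $\pi$

1  initialize $V^0 \leftarrow 0$, $n \leftarrow 0$
2  while $\max_s \text{residual}(s) > \epsilon$ do
3      foreach $s \in S$ do
4          $V^{n+1}(s) \leftarrow \max_a \{\mathcal{R}(s, a) + \gamma \sum_{s'} P(s, a, s') V^n(s')\}$
5          $\text{residual}(s) = |V^{n+1}(s) - V^n(s)|$
6      $n \leftarrow n + 1$
7  return $\pi(s) \leftarrow \arg\max_a \{\mathcal{R}(s, a) + \gamma \sum_{s'} P(s, a, s') V^n(s')\}$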

optimal sequence of actions is equivalent to the simpler problem of finding the best action, assuming the system will follow the optimal policy thereafter. This idea leads to the Bellman optimality condition,

$$V^{\pi^*}(s) = \max_a \Big\{\mathcal{R}(s, a) + \gamma \sum_{s'} P(s, a, s') V^{\pi^*}(s')\Big\} \qquad (2.2)$$

where $V^{\pi^*}(s)$ is the optimal value function associated with the optimal policy $\pi^*$. Eqn. 2.2 not only specifies a condition that the optimal value function must satisfy, but also suggests an iterative algorithm for computing it. In particular, given an initialization $V^0$, one can apply

$$V^{k+1}(s) = \max_a \Big\{\mathcal{R}(s, a) + \gamma \sum_{s'} P(s, a, s') V^{k}(s')\Big\} \qquad (2.3)$$

to drive the next iterate closer to $V^{\pi^*}$. Eqn. 2.3 is called the Bellman update, or Bellman backup, at state $s$. Theoretical results show that $V^{\pi^*}$ is unique and that repeatedly performing Bellman updates drives any initialization of the value function toward the optimal value function asymptotically.

Value Iteration (VI) [36] is shown in Algorithm 1. The algorithm computes a policy $\pi$ given an MDP and a convergence tolerance $\epsilon$. The algorithm first initializes the value function in line 1. Then, the Bellman update (lines 3-5) is performed at each state $s \in S$ using the current iterate. The residual, which is the change in the value function between successive iterates, is computed and stored. Once the maximum residual falls below the tolerance $\epsilon$, the value function is assumed to have converged (lines 2-6). Lastly, a policy is retrieved from the value function by greedy selection with respect to the Bellman update equation (line 7).
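A compact NumPy sketch of Algorithm 1 follows. It vectorizes the inner foreach loop (lines 3-5) by computing all Bellman backups at once, which is a design choice of this sketch rather than part of the original algorithm. The `P[s, a, s']` / `R[s, a]` array layout and the small corridor MDP used to exercise it are also assumptions.

```python
import numpy as np

def value_iteration(P, R, gamma, tol=1e-6):
    """Value iteration in the spirit of Algorithm 1.

    P[s, a, s'] are transition probabilities and R[s, a] are rewards.
    Returns the greedy policy and the final value-function iterate.
    """
    V = np.zeros(P.shape[0])                   # line 1: V^0 <- 0
    while True:
        Q = R + gamma * (P @ V)                # Bellman backup for every (s, a)
        V_new = Q.max(axis=1)                  # lines 3-4: maximize over actions
        residual = np.abs(V_new - V)           # line 5
        V = V_new
        if residual.max() <= tol:              # line 2: stop once max residual <= tol
            break
    policy = (R + gamma * (P @ V)).argmax(axis=1)   # line 7: greedy selection
    return policy, V

# Hypothetical 5-cell corridor: action 0 stays, action 1 moves right (10% slip).
n = 5
P = np.zeros((n, 2, n))
for s in range(n):
    P[s, 0, s] = 1.0
    P[s, 1, min(s + 1, n - 1)] += 0.9
    P[s, 1, s] += 0.1
R = np.zeros((n, 2))
R[n - 1, :] = 1.0
policy, V = value_iteration(P, R, gamma=0.9)
print(policy, np.round(V, 2))
```

Because the update is a $\gamma$-contraction, stopping when the maximum residual is at most `tol` bounds the remaining error of the value function by roughly $\gamma \cdot \text{tol} / (1 - \gamma)$.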

Each Bellman update (line 4) requires iterating through every possible action and every possible next state, thus yielding run time complexity $O(|A||S|)$, where $|\cdot|$ denotes the cardinality of a set. Consequently, each Bellman update sweep (lines 3-5) has run time complexity $O(|A||S|^2)$. Empirically, VI usually requires between 10 and 100 Bellman update sweeps to converge. Note that the run time complexity depends on the size of the planning spaces. This relates to the curse of dimensionality: DP approaches quickly become computationally intractable as new dimensions are added to the planning spaces.
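To make the curse of dimensionality concrete, the snippet below evaluates the per-sweep operation count $|A||S|^2$ for a joint MMDP in which every agent has 25 states (a 5 × 5 grid) and, as a hypothetical choice, 5 actions (four moves plus staying put). Because both joint spaces are Cartesian products, the count equals $5^{5 n_a}$ and grows by a factor of 3125 with each added agent.

```python
def sweep_cost(n_agents, states_per_agent=25, actions_per_agent=5):
    """Operations in one VI sweep, |A| * |S|^2, for the joint planning spaces."""
    joint_states = states_per_agent ** n_agents     # |S| = |S_j| ** n_a
    joint_actions = actions_per_agent ** n_agents   # |A| = |A_j| ** n_a
    return joint_actions * joint_states ** 2

for n_agents in range(1, 5):
    print(n_agents, f"{sweep_cost(n_agents):.3e}")
```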

Another popular DP approach is policy iteration (PI) [36]. PI iterates between two steps, policy evaluation and policy update. In its original form, PI has greater per-iteration run time complexity than VI, because its policy evaluation step solves a system of $|S|$ linear equations. A plethora of algorithms have been developed based on PI and VI, including those reviewed in Sec. 1.2.1.

2.4 Extensions to Markov Decision Processes

2.4.1 Partially Observable Markov Decision Processes (POMDP)

A Markov Decision Process assumes full observability; that is, after taking an action, the next state is known to the system. This model works reasonably well when measurement noise is small compared to the discretization level. Otherwise, the uncertainty distribution after each measurement needs to be modeled explicitly, which leads to the formulation of Partially Observable Markov Decision Processes (POMDP) [47], defined by the tuple $(S, B, A, P, \mathcal{R}, \gamma)$. Here, $B$ denotes the belief space, whose elements are probability distributions over states. When solving a POMDP, one has to keep track of the evolution of belief states, which makes computing the optimal policy extremely computationally intensive. In particular, solving an MDP is P-complete, whereas solving a POMDP is PSPACE-hard [41]. Recently, researchers have developed more scalable algorithms, such as FIRM [1], that solve POMDPs approximately.

2.4.2 Decentralized Markov Decision Processes (dec-MDP)

The Multi-agent MDP, as defined in Section 2.2, is a specialization of the MDP and thus also assumes full observability. If each agent can only measure its own state, an MMDP requires communication between agents so that a centralized planner can gain full observation of the system. In contested environments where communication bandwidth is limited, such an assumption may be unrealistic. Thus, many researchers have studied multi-agent sequential decision problems from a decentralized perspective. One particular modeling framework is the dec-MDP [23]. A dec-MDP assumes joint full observability, which means that full observability can be achieved only by combining the observations of all agents. The communication limitation is modeled by a probability measure that specifies the probability of receiving an agent's observation given its state and action. Thus, the optimal policy should strike a balance between optimizing the reward function and maintaining a good degree of communication. Solving a dec-MDP is NEXP-complete [6], even under its joint full observability assumption. This thesis assumes full observability and focuses on MDP and MMDP formulations.

2.5 Summary

This chapter reviewed the formulation of Markov Decision Processes (MDP) and Multi-agent Markov Decision Processes (MMDP). It also described dynamic programming (DP) as a class of algorithms for computing optimal solutions of MDPs and MMDPs. However, DP approaches are not practical for solving MMDPs due to the curse of dimensionality. The next chapter introduces an algorithm that solves MMDPs at lower computational complexity by exploiting structures common to MMDPs.


Chapter 3

Hierarchical Decomposition of MMDP

This chapter presents the mechanics of a novel hierarchical decomposition algorithm for solving Multi-agent Markov Decision Processes (MMDP). The chapter starts with a discussion of various structures of the MMDP reward function, and relates different function structures to different degrees of coupling between agents. Then, it proposes an algorithm that exploits such structures for solving MMDPs. In particular, the proposed algorithm decomposes an MMDP into a number of subproblems, solves each subproblem individually, and combines the subproblems' local policies to form a global policy for the MMDP. To distinguish between the subproblems and the original MMDP, the phrases local policy and local reward function are used to refer to the subproblems, and the phrases global policy, global reward function, and joint planning spaces are used to refer to the original MMDP. When clear from context, the planning spaces of a multi-agent subproblem are also referred to as joint planning spaces.

Figure 3-1: Construction of Multi-agent Reward Function. Each shape represents a task that can be accomplished by a single agent.

3.1 Structure of Reward Functions

3.1.1 Construction of Reward Functions

The reward function, as defined in Section 2.1, can be specified in tabular form with one scalar entry per state-action pair. The scalar values can be arbitrary, and there need not be any correlation between any two of them. However, this purely mathematical perspective is rarely useful in practice. After all, the reward function is designed by people to induce a favorable policy upon solving the MDP. Thus, the reward function is often compactly represented by algebraic functions that focus on a small set of favorable states. More importantly, the set of favorable states is often expressed as a composition of simple rules. For instance, in a multi-agent surveillance domain, a reward can be given when (i) a certain number of agents are actively patrolling a specific area, and (ii) each patrolling agent has an adequate amount of fuel and fully operational sensors.

The observation that reward functions usually have a simple algebraic structure is formalized with the definition of a factored reward function. Before presenting the precise mathematical formulation, it is useful to provide a simple motivating example of how a multi-agent problem is constructed, as shown in Fig. 3-1. Let each shape represent a point of interest in a 5 × 5 grid world; each shape is associated with a scalar reward labeled to its right. Given agent dynamics and environmental constraints,

Figure 3-2: Two Agent Consensus Problem. Agents need to agree on which grid to visit together (both choosing the green action or both choosing the red action).

developing a policy to reach each point of interest can be formulated as a single agent MDP. These MDPs are called leaf tasks. With a slight abuse of terminology, the state spaces of these MDPs are also referred to as leaf tasks. Given these building blocks, more complicated missions can be constructed by combining leaf tasks. For instance, the addition $r_1(\cdot) + r_2(\cdot)$ is used to indicate that tasks 1 and 2 can be executed independently, and the multiplication $r_3(\cdot) \cdot r_4(\cdot)$ is used to indicate that tasks 3 and 4 need to be executed simultaneously. More complex missions can be built by repeated composition of leaf tasks.

The previous example illustrates the composition of single-agent (singleton) leaf tasks. Yet, there are multi-agent tasks that cannot be decomposed into singleton tasks. For instance, consider the consensus problem shown in Fig. 3-2. The reward function for this problem is $r(s_1, s_2) = w(s_1)\, I(s_1 = s_2)$, where $w(\cdot)$ is a scalar function determining the value of each grid and $I(\cdot)$ is the indicator function specifying the condition that both agents must be in the same grid to collect the reward. Thus, when choosing an action, each agent must be cognizant of the other agent's state and action. Let the green and red arrows denote two actions that each agent can choose from. In this case, depending on the magnitude of $w(s)$ and the state transition uncertainties, both agents should choose either the green arrows or the red arrows. Mathematically, it is difficult to decompose the indicator function into a composition of more elementary functions of $s_1$ and $s_2$ separately.
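One way to see why the consensus reward resists decomposition is to tabulate $r(s_1, s_2) = w(s_1)\, I(s_1 = s_2)$ as a matrix indexed by $(s_1, s_2)$. A product $f_1(s_1) f_2(s_2)$ is an outer product and therefore has rank at most one, and a sum $f_1(s_1) + f_2(s_2)$ has rank at most two. The consensus matrix is diagonal, so its rank equals the number of cells with nonzero $w$. The sketch below checks this with hypothetical values of $w$ for four cells and arbitrary illustrative vectors $f_1$, $f_2$.

```python
import numpy as np

w = np.array([3.0, 1.0, 2.0, 5.0])   # hypothetical value w(s) of four grid cells
n = len(w)

# r(s1, s2) = w(s1) * I(s1 == s2), tabulated over all joint states.
r = np.array([[w[s1] * (s1 == s2) for s2 in range(n)] for s1 in range(n)])

# Separable forms for comparison (f1 and f2 are arbitrary illustrative vectors).
f1, f2 = np.array([1.0, 2.0, 0.5, 4.0]), np.array([2.0, 1.0, 3.0, 1.0])
product_form = np.outer(f1, f2)               # f1(s1) * f2(s2)
sum_form = f1[:, None] + f2[None, :]          # f1(s1) + f2(s2)

for name, table in [("consensus", r), ("product", product_form), ("sum", sum_form)]:
    print(name, np.linalg.matrix_rank(table))
```

With four cells of positive value, the consensus reward has rank four, so it can be written neither as a product nor as a sum of single-agent functions.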


3.1.2 Factored Reward Function

This section introduces some terminology to formalize the observations made in Section 3.1.1.

Task

The i-th task, $T^i$, is defined as the joint state space $T^i = S_1 \times \cdots \times S_{n_t}$ of any set of $n_t$ agents. Task $T^i$ is associated with a local reward function $r^i$, which is a part of the global reward function $\mathcal{R}$. The local reward function is a mapping from the joint state space to a scalar, that is, $r^i: T^i \to \mathbb{R}$. Further, $t^i$ is defined as a particular state in task $T^i$, that is, $t^i \in T^i$. The definition of a task is not restricted to any particular subset of agents; reward is given as long as some subset of agents reaches the desired joint state. The dimension of a task's state space is the minimum number of agents required to execute the task. More agents can be assigned to a task to add redundancy, in which case reward is calculated based on the subset of agents that best accomplishes the task. Note that the composition of tasks forms another task.

Leaf Task

A leaf task is a task that cannot be decomposed into smaller sub-tasks. More precisely, a leaf task is associated with a leaf reward function, which is a function that cannot be expressed as a composition of simpler functions,

$$r_{leaf}(s_1, \ldots, s_{n_t}) \neq f_1(C)\, f_2(C^c) \qquad (3.1)$$

for any functions $f_1$ and $f_2$, where $C \subset \{s_1, \ldots, s_{n_t}\}$, $C \neq \emptyset$, and $C^c$ denotes the complement of $C$.

Intuitively, leaf reward functions are the elementary building blocks of a global reward function. Leaf tasks are subproblems formed around leaf reward functions. The shape tasks in Fig. 3-1 are examples of singleton leaf tasks, and the consensus problem in Fig. 3-2 is an example of a two-agent leaf task.

Factored Reward Function

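A factored reward function is the repeated function composition of leaf reward functions,

$$\mathcal{R}(s_1, s_2, \ldots, s_{n_a}) = f_l\Big\{\cdots f_h\big[f_m\big(r_{m1}(t_{m1}), \ldots, r_{mk}(t_{mk})\big), \ldots, r_{h1}(t_{h1}), \ldots, r_{hk}(t_{hk})\big], \ldots, r_{l1}(t_{l1}), \ldots, r_{lk}(t_{lk})\Big\} \qquad (3.2)$$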

where $h$, $l$, and $m$ are dummy indices showing the association of different terms, the $f(\cdot)$'s are arbitrary functions, and $r^i(t^i)$ is the leaf reward function $r^i(\cdot)$ evaluated at state $t^i$ of task $T^i$. For example, consider a three-agent planning problem with global reward function $\mathcal{R}(s_1, s_2, s_3) = r_1(t_1)\,\big(r_2(t_2) + r_3(t_3)\big)$. This can be written in the form of Eqn. 3.2 as $\mathcal{R}(s_1, s_2, s_3) = f_1\big(f_2(r_2(t_2), r_3(t_3)), r_1(t_1)\big)$, where $f_1$ is the scalar multiplication function and $f_2$ is the scalar addition function. Recall from the definition of a task that $t$ is the association of multiple agents' states (e.g., $t = (s_1, s_2, s_3)$).
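Eqn. 3.2 can be represented as a nested expression in which each internal node holds a combining function $f$ and each leaf holds a leaf reward function. The sketch below encodes the three-agent example $\mathcal{R} = f_1(f_2(r_2, r_3), r_1)$. The leaf rewards (1, 2, and 3 units for an agent at a state labeled "goal") are hypothetical, and treating each $f$ as a binary operator folded over its arguments is a simplification of this sketch.

```python
import operator
from functools import reduce

def leaf(agent, value):
    """Hypothetical leaf reward: `value` if the given agent is at its goal."""
    return lambda t: value if t[agent] == "goal" else 0.0

def evaluate(node, t):
    """Evaluate a factored reward at joint state t (a tuple of agent states)."""
    if callable(node):                       # leaf reward function r_k(t_k)
        return node(t)
    f, children = node                       # internal node: f(children...)
    return reduce(f, (evaluate(child, t) for child in children))

r1, r2, r3 = leaf(0, 1.0), leaf(1, 2.0), leaf(2, 3.0)
# R(s1, s2, s3) = f1(f2(r2, r3), r1), with f1 = multiplication and f2 = addition.
R = (operator.mul, [(operator.add, [r2, r3]), r1])

print(evaluate(R, ("goal", "goal", "away")))   # 1.0 * (2.0 + 0.0)
print(evaluate(R, ("away", "goal", "goal")))   # 0.0 * (2.0 + 3.0)
```

The second call shows the coordination requirement encoded by multiplication: without agent 1 at its goal, no reward is collected regardless of the other agents.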

3.1.3 Useful Functional Forms

The construction of reward functions illustrates that different functional forms can be used to express different coordination specifications. More importantly, different functional forms reveal the extent to which agents are coupled. Intuitively, coupling between agents is weak if each agent can choose its own action without regard to its teammates' states and actions, and coupling is strong if an agent needs to take into account all other agents' states and actions. The strongest form of coupling is represented by a leaf reward function, for which further decomposition is difficult. This section presents two functional forms that can be decomposed into subproblems. The term subtask is used to describe a subproblem that is formulated as an MDP.

Summation Reward Function: $\mathcal{R}(s_1, \ldots, s_{n_a}) = \sum_k r_k(t_k)$

This functional form represents weak coupling between agents because the subtasks are almost independent. In particular, assuming infrequent transition of individual agents between subtasks, solving each subtask optimally in parallel would yield a jointly optimal solution, because

$$\max_{\pi} \mathbb{E}\Big[\sum_{i=0}^{\infty} \gamma^i \sum_k r_k(t_k^i)\Big] = \sum_k \max_{\pi_k} \mathbb{E}\Big[\sum_{i=0}^{\infty} \gamma^i r_k(t_k^i)\Big]$$

where $t_k^i$ denotes the state of task $T^k$ at decision period $i$.

Figure 3-3: Summation Reward Function. Agent A1 performs the red subtask independently of A2 performing the green subtask. The arrows are drawn in different colors to show that each agent can perform its own subtask without regard to the other agent.

Consider the example shown in Fig. 3-3, whose reward function is $r_1(\cdot) + r_2(\cdot)$. Assuming agent A1 is assigned to subtask $r_1$ and agent A2 is assigned to subtask $r_2$, the jointly optimal policy is to have each agent execute its locally optimal policy. Therefore, the two subtasks are almost independent, in the sense that each agent can execute its local policy without regard to the other agent's state and action.

Multiplicative Reward Function: $\mathcal{R}(s_1, \ldots, s_{n_a}) = \prod_k r_k(t_k)$

The multiplicative reward function represents a stronger degree of coupling than the summation functional form. In particular, solving each subtask optimally in parallel does not, in general, yield a jointly optimal solution. Intuitively, this functional form specifies that the subtasks should be executed simultaneously.

Consider the example shown in Fig. 3-4, whose reward function is $r_3(\cdot) \cdot r_4(\cdot)$. Let agent A1 be assigned to subtask $r_3$ and agent A2 be assigned to subtask $r_4$. For simplicity, let the transition model be deterministic. The optimal policy of each subtask, $\pi^*$, can be found via value iteration. Another policy, $\pi'$, is also given for each subtask. Each policy induces a sequence of rewards (one scalar reward per decision period), which is listed in Table 3.1. Recall that the objective is to optimize the discounted cumulative reward, $\sum_i \gamma^i \mathcal{R}(s_i)$. For each subtask, $\pi^*$ induces a higher discounted cumulative reward than $\pi'$. However, the joint policy of executing $\pi^*$ independently induces zero discounted cumulative reward. In contrast, combining the suboptimal local policies $\pi'$ induces a discounted cumulative reward of $\sum_{i=0}^{\infty} \gamma^{3i+2}$, which is greater than zero. This example shows that, in general, combining locally optimal policies does not induce a globally optimal policy for a multiplicative reward function.

Figure 3-4: Multiplicative Reward Function. Agent A1 is performing the orange subtask and agent A2 is performing the blue subtask. The lasso over the two arrows shows that the two agents should explicitly coordinate when performing the subtasks.

Table 3.1: Local Optimal Policies can be Jointly Suboptimal. Each entry shows the sequence of rewards obtained by following a policy, followed by the discounted cumulative reward calculated from that sequence. The better policy, as revealed by discounted cumulative reward, is shown in red.

| Task | Reward induced by $\pi'$ | Reward induced by $\pi^*$ |
|---|---|---|
| subtask $r_3$ | (0, 0, 1, 0, 0, 1, ...); $\sum_{i=0}^{\infty} \gamma^{3i+2}$ | (1, 0, 0, 1, 0, 0, ...); $\sum_{i=0}^{\infty} \gamma^{3i}$ |
| subtask $r_4$ | (0, 0, 1, 0, 0, 1, ...); $\sum_{i=0}^{\infty} \gamma^{3i+2}$ | (0, 1, 0, 0, 1, 0, ...); $\sum_{i=0}^{\infty} \gamma^{3i+1}$ |
| joint task $r_3 \cdot r_4$ | (0, 0, 1, 0, 0, 1, ...); $\sum_{i=0}^{\infty} \gamma^{3i+2}$ | (0, 0, 0, 0, 0, 0, ...); 0 |
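The entries of Table 3.1 can be reproduced with a few lines of Python. The sketch assumes $\gamma = 0.9$ and truncates the infinite sums at 300 decision periods (where $0.9^{300}$ is negligible); both values are illustrative choices. It compares all four combinations of local policies under a summation and under a multiplicative joint reward.

```python
import itertools
import operator

GAMMA = 0.9
HORIZON = 300   # truncation of the infinite sum (illustrative)

def periodic(offset):
    """Reward 1 every third period starting at period `offset`, as in Table 3.1."""
    return [1.0 if i % 3 == offset else 0.0 for i in range(HORIZON)]

def discounted(seq):
    return sum(GAMMA ** i * r for i, r in enumerate(seq))

# Reward sequences of Table 3.1: subtask r3 (agent A1) and subtask r4 (agent A2).
r3 = {"pi_star": periodic(0), "pi_prime": periodic(2)}
r4 = {"pi_star": periodic(1), "pi_prime": periodic(2)}

def joint_return(a, b, combine):
    """Discounted return of the per-period joint reward combine(r3, r4)."""
    return discounted([combine(x, y) for x, y in zip(r3[a], r4[b])])

def best_pair(combine):
    return max(itertools.product(r3, r4), key=lambda ab: joint_return(*ab, combine))

print(best_pair(operator.add))   # summation: local optima combine into the joint optimum
print(best_pair(operator.mul))   # multiplication: the suboptimal pi_prime pair wins
```

The key point is that the multiplication acts on the reward of each decision period, not on the subtasks' total returns, which is why locally optimal but unsynchronized policies earn nothing jointly.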

In the discussion of functional form and coupling strength, it was assumed that (i) agents transition infrequently between different subtasks and (ii) the minimum required number of agents is assigned to each subtask (i.e., one agent is assigned to serve a singleton task). However, there are planning domains where such assumptions can lead to poor solution quality. For instance, if agents cycle through different states reflecting different capabilities (e.g., battery level), then they should be periodically assigned to different subtasks. Also, if one subtask is much more important than all other subtasks, then more agents should be assigned to this important subtask for added redundancy. These are also important aspects that a planning algorithm should consider when developing a policy for the original planning problem.

3.2 Hierarchically Decomposed MMDP (HD-MMDP)

This section develops a hierarchical decomposition algorithm [16] that exploits structure in the factored reward function. The algorithm (1) decomposes the original problem into a number of subproblems, (2) solves each subproblem using classical dynamic programming (DP), (3) ranks the subproblems according to an importance metric, and (4) assigns each agent to one of the subproblems. Each agent then queries an action from its assigned subproblem's local policy; together, these actions form a global policy for the original MMDP.

3.2.1 Decomposition

The first step is to decompose a factored reward function and build a task tree structure. A task tree is a graphical representation of a factored reward function. The leaf nodes of a task tree are the leaf tasks of the planning problem; recall that a leaf task is an MDP formed around a leaf reward function. The internal nodes of a task tree are function nodes.

The Decomposition subroutine, shown in Algorithm 2, builds a task tree structure recursively. In lines 1-3, an MDP is formed if a leaf task is identified. Otherwise, a function node is formed (lines 4-8), and each factor (argument of the function) is converted into a subtree and added as one of its children. The subroutine is invoked recursively (line 7) until all leaf tasks are identified.

Algorithm 2: Decomposition

Input: Agents $N_j$; State, Action, and Transition model $\langle S_j, A_j, P_j \rangle$ of each agent, for $j = 1, \ldots, n_a$; Discount factor $\gamma$; and Reward Function $\mathcal{R}$

Output: A task tree with smaller MDPs $t_i$ as leaf nodes and function nodes at all internal nodes

1  if $\mathcal{R}$ cannot be decomposed then
2      $p \leftarrow$ new MDP($\langle S_j, A_j, P_j \rangle$, $\mathcal{R}$, $\gamma$)
3      return $p$
4  else
5      $p \leftarrow$ new function node
6      foreach factor $r'$ in $\mathcal{R}$ do
7          node $\leftarrow$ Decomposition($N_j$, $\langle S_j, A_j, P_j \rangle$, $r'$, $\gamma$)
8          add node as a child of $p$
9      return $p$

For example, consider the factored reward function $r_1 r_2 + r_3 r_4$, where $r$ is used as a shorthand for $r(t)$. The decomposition subroutine first identifies a summation function node and adds the two arguments, $r_1 r_2$ and $r_3 r_4$, as children of the summation node. Then, the subroutine is invoked separately on each child. In particular, upon decomposition of $r_1 r_2$, a multiplication function node is formed, and two leaf reward functions are identified. The decomposition of $r_3 r_4$ is carried out similarly. Lastly, leaf tasks are formed around each leaf reward function. The resulting task tree is illustrated in Fig. 3-5. This example (Example 3.1) will be used throughout this section to explain the key procedures in HD-MMDP.

Figure 3-5: Task Tree of Example 3.1. This tree is built automatically by invoking the decomposition subroutine on the reward function $r_1 r_2 + r_3 r_4$.
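A minimal Python sketch of the Decomposition subroutine is shown below, applied to Example 3.1. It assumes the reward is already available as a symbolic expression: a string stands for a leaf reward function, and a tuple (operator name, factors) stands for a composition. The `LeafTask` class is a hypothetical placeholder; constructing the actual leaf MDP from $\langle S_j, A_j, P_j \rangle$, $r$, and $\gamma$ is omitted.

```python
from dataclasses import dataclass, field

@dataclass
class LeafTask:
    """Placeholder for 'new MDP(<S_j, A_j, P_j>, r, gamma)' in Algorithm 2."""
    reward: str

@dataclass
class FunctionNode:
    op: str                                   # e.g. "add" or "mul"
    children: list = field(default_factory=list)

def decomposition(reward):
    """Recursively build a task tree from a symbolic factored reward."""
    if isinstance(reward, str):               # lines 1-3: leaf reward found
        return LeafTask(reward)
    op, factors = reward                      # lines 4-5: new function node
    node = FunctionNode(op)
    for factor in factors:                    # lines 6-8: one subtree per factor
        node.children.append(decomposition(factor))
    return node                               # line 9

# Example 3.1: r1 r2 + r3 r4
tree = decomposition(("add", [("mul", ["r1", "r2"]), ("mul", ["r3", "r4"])]))
print(tree)
```

Deciding whether a general algebraic reward "cannot be decomposed" is the hard part in practice; the symbolic encoding above sidesteps it by making the structure explicit.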

Figure 3-6: Solution to Leaf Tasks. Leaf tasks are formed around leaf reward functions and are solved with value iteration. The maximum of each value function, $r_{max}$, is labeled above each respective leaf task. The leaf nodes are labeled T instead of r to emphasize that leaf tasks are formed around each leaf reward function.

3.2.2 Solving Leaf Tasks

Given a task tree structure, the second step is to solve each subproblem (leaf task) at the bottom of the hierarchy. Each leaf task can be viewed as a standalone MDP and solved using classical dynamic programming approaches. The HD-MMDP algorithm uses value iteration (VI) to compute the optimal policy for each leaf task, although approximate algorithms could also be used to solve leaf tasks.

Solving each leaf task yields its optimal policy and the associated optimal value function. The value function of a leaf task is the expected cumulative reward of performing that leaf task. Thus, the relative magnitudes of the leaf tasks' value functions reveal which leaf task is more valuable. As a heuristic, the maximum attainable expected reward, $r_{max} = \max_s V(s)$, is used to compare the relative importance of the leaf tasks. In Example 3.1, $r_{max}$ is calculated for each leaf task and labeled in Fig. 3-6.

3.2.3 Ranking of Leaf Tasks

The third step is to develop an importance ordering for the set of leaf tasks. The $r_{max}$ values calculated in the previous step are useful for constructing this ordering, but they cannot be used directly. For instance, consider $T_2$ and $T_4$ in Fig. 3-6. Although $T_2$ has a greater $r_{max}$ than $T_4$, the joint task $T_1 \times T_2$ is less rewarding than the joint task $T_3 \times T_4$. Thus, it would be preferable to assign agents to the joint task $T_3 \times T_4$. Consequently, leaf task $T_4$ should be ranked higher than $T_2$ despite having a lower $r_{max}$ value.

Algorithm 3: rankTaskTree

Input: A task tree, tree

Output: An ordered list of leaf tasks, $L$

1  foreach leaf task $T^i$ in tree do
2      $r^i_{max} \leftarrow \max_{t^i} V^i(t^i)$
3  foreach internal node $t^i$ in tree, from the bottom up, do
4      $r^i_{max} \leftarrow f^i(r^{child_1}_{max}, \ldots, r^{child_n}_{max})$
5  $L \leftarrow$ Sort(leaf tasks, compareTask(tree))
6  return $L$

Figure 3-7: Relative Importance of Leaf Tasks. Determining an execution order for leaf tasks requires finding which leaf task would contribute more to the joint reward function. The first step is to compute the maximum attainable expected reward, $r_{max}$, at each internal node in the tree, shown in sub-figure (a). This is carried out by starting from $r_{max}$ at the leaf nodes and percolating up to the root node of the task tree. The next step is to compute the degree to which each sub-task contributes to the task's cumulative reward, $\alpha r_{max}$, shown in sub-figure (b).

The rankTaskTree subroutine, shown in Algorithm 3, ranks the leaf tasks by pairwise comparison. In lines 1-2, the maximum expected cumulative reward, $r_{max}$, is determined from each subtask's value function. In lines 3-4, $r_{max}$ at each internal node is calculated by percolating up from the leaf tasks. This procedure is illustrated in greater detail for Example 3.1 in Fig. 3-7. It should be noted that $r_{max}$ is a statistic, in this case the mean of a distribution. Thus, $r^i_{max} = f^i(r^{child_1}_{max}, \ldots, r^{child_n}_{max})$ is only an approximation, since for a nonlinear function $f^i$ the function of the means generally differs from the mean of the function.
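Lines 3-4 of Algorithm 3 amount to a post-order traversal of the task tree. The sketch below applies it to Example 3.1, assuming leaf values $r_{max}$ = 1, 9, 2, and 5 for $T_1$ through $T_4$; these are the values implied by dividing the $C_{min}$ entries of Fig. 3-9 (0.8, 7.2, 1.6, 4) by the ratio 0.8 used in this thesis. The nested-tuple encoding of the tree, with a label for each internal node, is an assumption of this sketch.

```python
import operator
from functools import reduce

OPS = {"add": operator.add, "mul": operator.mul}

def percolate(node, leaf_rmax, table):
    """Post-order pass: r_max of a function node is f applied to its children."""
    if isinstance(node, str):                       # leaf task (lines 1-2)
        table[node] = leaf_rmax[node]
        return table[node]
    label, op, children = node                      # internal node (lines 3-4)
    values = [percolate(child, leaf_rmax, table) for child in children]
    table[label] = reduce(OPS[op], values)
    return table[label]

tree = ("root", "add", [("left", "mul", ["T1", "T2"]),
                        ("right", "mul", ["T3", "T4"])])
leaf_rmax = {"T1": 1, "T2": 9, "T3": 2, "T4": 5}
table = {}
percolate(tree, leaf_rmax, table)
print(table)
```

The multiplication node over $T_3$ and $T_4$ ends up with the larger value (10 versus 9), which is why $T_4$ can outrank $T_2$ despite its smaller leaf $r_{max}$.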

After computing $r_{max}$ for each node in the task tree, the last step in rankTaskTree is to sort the leaf tasks using $r_{max}$ as a heuristic (line 5 of Algorithm 3). The degree to which each sub-task contributes to the task's cumulative reward is denoted $\alpha r_{max}$. The sorting procedure relies on a pairwise comparison subroutine, compareTask, shown in Algorithm 4. Given two leaf tasks $T^i$ and $T^k$, the subroutine first identifies their most recent common ancestor, $g$ (line 1). Then, in lines 2-3, the algorithm finds the ancestors, $p^i$ and $p^k$, of the two leaf tasks one level below $g$. Lastly, in lines 4-6, the leaf task whose ancestor has the higher $\alpha r_{max}$ value is given the higher priority ranking. If the $\alpha r_{max}$ values of both ancestors are equal, the leaf task whose ancestor has the higher $r_{max}$ value is ranked higher.

Algorithm 4: compareTask

Input: A task tree, tree; leaf tasks $T^i$ and $T^k$

Output: The leaf task with the lower rank (higher priority)

1  $g \leftarrow$ mostRecentCommonAncestor($T^i$, $T^k$)
2  $p^i \leftarrow$ children($g$) $\cap$ ancestors($T^i$)
3  $p^k \leftarrow$ children($g$) $\cap$ ancestors($T^k$)
4  if $\alpha r^{p^i}_{max} > \alpha r^{p^k}_{max}$ or ($\alpha r^{p^i}_{max} = \alpha r^{p^k}_{max}$ and $r^{p^i}_{max} > r^{p^k}_{max}$) then
5      return $T^i$
6  return $T^k$

Recall that the composition of tasks forms another task with larger planning spaces. Intuitively, Algorithm 4 first identifies the smallest joint task that contains both leaf tasks. Then, it determines which leaf task is more valuable to the execution of that joint task. The application of the compareTask subroutine to Example 3.1, and the resulting importance ordering, are illustrated in Fig. 3-8.
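The pairwise comparison can be sketched in Python as follows, again on Example 3.1 with leaf values $r_{max}$ = 1, 9, 2, and 5 for $T_1$ through $T_4$ (implied by the $C_{min}$ values of Fig. 3-9). Since the computation of $\alpha r_{max}$ is not restated here, the sketch ASSUMES that $\alpha r_{max}$ of a node is the sensitivity of its parent's function to that node: 1 under addition, and the product of the siblings' $r_{max}$ under multiplication. Under this assumption, sorting with `functools.cmp_to_key` yields the order $T_3, T_4, T_1, T_2$, which agrees with the caption of Fig. 3-10, where the first two tasks served are those with $C_{min}$ = 1.6 ($T_3$) and 4 ($T_4$).

```python
from functools import cmp_to_key

# Example 3.1 task tree: internal node -> (operator, children).
TREE = {"root": ("add", ["left", "right"]),
        "left": ("mul", ["T1", "T2"]),
        "right": ("mul", ["T3", "T4"])}
# r_max of every node; internal values follow from percolating the leaf values.
RMAX = {"T1": 1, "T2": 9, "T3": 2, "T4": 5, "left": 9, "right": 10, "root": 19}
PARENT = {child: parent for parent, (_, kids) in TREE.items() for child in kids}

def alpha_rmax(node):
    """ASSUMED contribution measure: d(parent r_max) / d(node r_max)."""
    op, siblings = TREE[PARENT[node]]
    if op == "add":
        return 1
    prod = 1
    for sibling in siblings:
        if sibling != node:
            prod *= RMAX[sibling]
    return prod

def lineage(node):
    """The node itself followed by its ancestors up to the root."""
    path = [node]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path

def compare_task(ti, tk):
    """Negative if ti has higher priority than tk (sketch of Algorithm 4)."""
    up_i, up_k = lineage(ti), lineage(tk)
    g = next(a for a in up_i if a in up_k)                # line 1
    p_i = next(a for a in up_i if PARENT.get(a) == g)     # line 2
    p_k = next(a for a in up_k if PARENT.get(a) == g)     # line 3
    key_i = (alpha_rmax(p_i), RMAX[p_i])                  # ties on alpha*r_max
    key_k = (alpha_rmax(p_k), RMAX[p_k])                  # are broken by r_max
    if key_i > key_k:                                     # lines 4-5
        return -1
    return 1 if key_i < key_k else 0                      # line 6

order = sorted(["T1", "T2", "T3", "T4"], key=cmp_to_key(compare_task))
print(order)
```

Comparing every pair costs a quadratic number of calls; as the caption of Fig. 3-8 notes, a depth-first traversal of the tree could produce the same ordering more efficiently.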

3.2.4 Agent Assignment

The final step is to assign agents to the leaf tasks in the importance ordering determined in the previous step. Recall that the ordering was determined by $r_{max} = \max_s V(s)$, which is the maximum expected cumulative reward. In general, however, an agent's current state will not coincide with the best state, that is, $s \neq \arg\max_{s'} V(s')$. Thus, it is necessary to determine (1) how well an agent would execute a leaf task, and (2) the condition under which a leaf task can be considered completed, so that the algorithm can move on to the next task in the importance ordering.

Figure 3-8: Ranking of Leaf Tasks. In sub-figure (a), the algorithm determines whether leaf task 2 or leaf task 3 is more valuable to the joint reward function. First, the most recent common ancestor of the two leaf tasks is found, which is the root node in this case. Second, moving one level down from the most recent common ancestor yields, in this case, the two multiplication nodes. The $\alpha r_{max}$ and $r_{max}$ values at this level are compared to determine the priority ranking between the pair of leaf tasks. Here, the values of $\alpha r_{max}$ are equal, so the values of $r_{max}$ are compared: $T_3$ has higher priority than $T_2$ since 10 > 9, as shown by the colored $r_{max}$ values. In sub-figure (b), each pair of leaf tasks is compared, which induces the importance ranking illustrated in the figure. Instead of comparing each pair of tasks, a depth-first search algorithm can be implemented for better computational efficiency.

The value function specifies the expected cumulative reward starting at the current state, and it is a good indication of how well an agent would execute a task. The completion criterion is implemented by specifying a set of minimum rewards $\{C^i_{min}\}$ for the leaf tasks $\{T^i\}$. With domain knowledge, a designer can specify each value independently. In this thesis, $C^i_{min}$ is chosen to be $0.8\, r^i_{max}$. The ratio $C^i_{min} / r^i_{max}$ can be interpreted as the probability of successfully executing a task. This completion metric specifies the amount of desired redundancy in each leaf task, such that more agents (than the minimum required) will be assigned to task $T^i$ until at least $C^i_{min}$ units of reward are expected to be achieved. The computation of $C_{min}$ for Example 3.1 is illustrated in Fig. 3-9. Lastly, an action is queried for each agent from the local policy of its assigned leaf task.

The assignTask subroutine, shown in Algorithm 5, details the task allocation process. For each leaf task, the expected cumulative reward $R^i$ is initialized to zero, the boolean completion flag $C^i$ is initialized to false, and the agent assignment list $A^i$ is initialized as an empty list (line 1). The leaf tasks are executed (i.e., assigned agents) in order of decreasing importance (line 2). For each leaf task, the algorithm first identifies the agent, $N_j$, that would best execute the task (line 5). Then, it adds agent $N_j$ to the agent assignment list $A^i$ of task $T^i$ (line 6). Recall that leaf tasks can be either singleton or multi-agent. Leaf task $T^i$ is executed once it has been assigned a sufficient number of agents (lines 7-9), at which point an action is queried for each agent and the expected cumulative reward $R^i$ is updated.¹ If $R^i$ exceeds the minimum required cumulative reward $C^i_{min}$, then task $T^i$ is considered completed and its assigned agents are removed from the set of available agents, $N$ (lines 10-13). Then, the algorithm moves on to the next leaf task, and the process terminates when every agent has been assigned a leaf task (lines 3-4). The application of the assignTask subroutine to Example 3.1 is shown in Fig. 3-10.
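The allocation loop of Algorithm 5 can be sketched for singleton leaf tasks as follows. The rule for combining the expected rewards of several agents assigned to the same task is deferred to Chapter 4, so `combined_reward` below is a HYPOTHETICAL stand-in: it treats $V^i(s_j)/r^i_{max}$ as an independent success probability, echoing the interpretation of $C^i_{min}/r^i_{max}$ given above. The agent values are invented so that the first two steps mirror the numbers in the caption of Fig. 3-10 (1.8 against 1.6, then 3.2 against 4). Action queries (line 8) are omitted, and agents are removed from the pool as soon as they are assigned, which simplifies lines 10-13.

```python
import math

def combined_reward(values, r_max):
    """HYPOTHETICAL update rule: r_max * P(at least one assigned agent succeeds)."""
    p_fail = math.prod(1.0 - v / r_max for v in values)
    return r_max * (1.0 - p_fail)

def assign_task(agent_values, ranked_tasks, r_max, ratio=0.8):
    """Greedy allocation in the spirit of Algorithm 5 (singleton leaf tasks only)."""
    c_min = {task: ratio * r_max[task] for task in ranked_tasks}   # C_min = 0.8 r_max
    available = set(agent_values)
    assignment = {}
    for task in ranked_tasks:                  # line 2: decreasing importance
        if not available:                      # lines 3-4: every agent is busy
            break
        # line 5: try the available agents from best to worst for this task
        ranked_agents = sorted(available, key=lambda a: agent_values[a][task],
                               reverse=True)
        team = []
        for agent in ranked_agents:
            team.append(agent)                 # line 6
            available.discard(agent)
            expected = combined_reward([agent_values[a][task] for a in team],
                                       r_max[task])
            if expected > c_min[task]:         # lines 10-13: task completed
                break
        assignment[task] = (team, expected)
    return assignment

r_max = {"T1": 1.0, "T2": 9.0, "T3": 2.0, "T4": 5.0}
agent_values = {                               # hypothetical V^i(s_j) per agent
    "A1": {"T1": 0.5, "T2": 6.0, "T3": 1.8, "T4": 2.0},
    "A2": {"T1": 0.6, "T2": 8.0, "T3": 1.0, "T4": 3.2},
    "A3": {"T1": 0.7, "T2": 7.5, "T3": 0.5, "T4": 3.0},
    "A4": {"T1": 0.9, "T2": 5.0, "T3": 0.4, "T4": 1.0},
}
result = assign_task(agent_values, ["T3", "T4", "T1", "T2"], r_max)
for task, (team, expected) in result.items():
    print(task, team, round(expected, 2))
```

In this run, $T_4$ receives a second, redundant agent because a single agent falls short of $C_{min} = 4$, and $T_2$ is left unserved once the agent pool is exhausted.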

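Algorithm 5: assignTask

Input: Agents $N_j$, Sorted List of Leaf Tasks $L$, Completion Heuristic $C^i_{min}$, policies $\pi^i$ and value functions $V^i(\cdot)$

Output: Actions $a_j$ for each agent $j = 1, \ldots, n_a$

1  $R^i \leftarrow 0$, $C^i \leftarrow$ false, $A^i \leftarrow \emptyset$ for each leaf task $T^i$
2  foreach leaf task $T^i$ in $L$ do
3      if $N$ is empty then
4          break
5      foreach best agent $N_j$ in $N$ do
6          $A^i \leftarrow A^i \cup \{N_j\}$
7          if $T^i$ has enough agents then
8              $a_j \leftarrow \pi^i(s_j)$ for all $N_j \in A^i$
9              $R^i \leftarrow f(R^i, V^i(\{s_j\}))$
10             if $R^i > C^i_{min}$ then
11                 $C^i \leftarrow$ true
12                 $N \leftarrow N \setminus A^i$
13                 break
14 return $a_j$ for $j = 1, \ldots, n_a$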

¹ The details of the updating function are explained in Chapter 4.

Figure 3-9: Computation of Minimum Accepted Expected Reward $C_{min}$. The values of $C_{min}$ reflect the degree of desired redundancy in the execution of leaf tasks and serve as a completion heuristic. They can be specified from domain knowledge or by a heuristic approach, such as multiplying $r_{max}$ by a constant factor.

[Figure 3-10 graphic, panels (a) to (c): leaf nodes labeled with $C_{\min}$ values 0.8, 7.2, 1.6, and 4; achieved expected cumulative rewards 1.8 and 3.2]

Figure 3-10: Assignment of Agents to Leaf Tasks. The smiley faces are symbols for agents. Yellow indicates that an agent has not yet been assigned a task; red indicates that it has. The numbers inside the leaf nodes are $C^i_{\min}$, the minimum accepted cumulative reward. The Roman numerals under each leaf node indicate the importance ordering. In sub-figure (a), the first agent is assigned to the highest-ranked leaf task, as shown by the green arrow. In sub-figure (b), since the first agent achieves an expected cumulative reward of 1.8, which is greater than the minimum required value of 1.6, the algorithm moves on to the second leaf task and assigns the next agent to it. In sub-figure (c), since the second agent achieves an expected cumulative reward of only 3.2, which is smaller than the minimum required value of 4, the next agent is also assigned to the second leaf task.


Algorithm 6: HD-MMDP

Input: Agents $N_j$, state, action, and transition model $\langle S_j, A_j, P_j \rangle$ of each agent for $j = 1, \ldots, n_a$, discount factor $\gamma$, reward function $R$, and completion heuristic $C_{\min}$
Output: Actions $a_j$ for each agent $j = 1, \ldots, n_a$

1   tree $\leftarrow$ Decomposition($\langle S, A, P \rangle$, $R$)
2   foreach leaf task $T^i$ in tree do
3       $\pi^i, V^i \leftarrow$ ValueIterate($T^i$)
4   $L \leftarrow$ rankTaskTree(tree)
5   $\{a_j\} \leftarrow$ assignTask($N_j$, $L$, $C_{\min}$, $\pi^i$, $V^i$)
6   return $a_j$ for $j = 1, \ldots, n_a$
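Lines 2-3 of Algorithm 6 solve each leaf task with value iteration. The sketch below shows what a tabular ValueIterate step could look like for one leaf-task MDP, with its joint states and actions already enumerated. It is a standard value-iteration loop, not the thesis code; the name `value_iterate`, the array layout, and the stopping tolerance are assumptions. The comment in the Bellman backup marks the $O(|S|^2|A|)$ per-sweep cost used later in the complexity analysis.

```python
import numpy as np


def value_iterate(P, R, gamma=0.95, tol=1e-8, max_iter=10_000):
    """Tabular value iteration for one leaf-task MDP (hypothetical stand-in).

    P: array (|A|, |S|, |S|) with P[a, s, s2] = Pr(s2 | s, a)
    R: array (|S|, |A|) of immediate rewards
    Returns a greedy policy pi (length |S|) and the value function V.
    """
    V = np.zeros(P.shape[1])
    for _ in range(max_iter):
        # Bellman backup: Q[s, a] = R[s, a] + gamma * sum_s2 P[a, s, s2] V[s2].
        # Each sweep touches every (s, a, s2) triple: O(|S|^2 |A|) operations.
        Q = R + gamma * np.einsum("ast,t->sa", P, V)
        V_new = Q.max(axis=1)
        converged = np.max(np.abs(V_new - V)) < tol
        V = V_new
        if converged:
            break
    # Greedy policy with respect to the final value function.
    Q = R + gamma * np.einsum("ast,t->sa", P, V)
    return Q.argmax(axis=1), V


# Two-state example: action 0 = stay, action 1 = switch; only state 1 pays reward 1.
P = np.array([[[1.0, 0.0], [0.0, 1.0]],
              [[0.0, 1.0], [1.0, 0.0]]])
R = np.array([[0.0, 0.0], [1.0, 1.0]])
pi, V = value_iterate(P, R, gamma=0.9, tol=1e-10)
# expected: pi = [1, 0] and V close to [9, 10], since V(1) = 1 / (1 - 0.9)
```

For a leaf task with $n$ agents, the same loop runs on a joint space with $|S|^n$ states and $|A|^n$ actions, which is where the exponential terms in the analysis come from.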

3.2.5 Summary

This section has developed a hierarchical decomposition (HD-MMDP) algorithm for Multi-agent Markov Decision Processes. The proposed algorithm is outlined in Algorithm 6, which summarizes its four major steps. First, exploiting structure in the factored reward function, HD-MMDP decomposes the original MMDP into a number of subproblems (line 1). Second, each subproblem is formulated as an MDP and solved with value iteration (lines 2-3). Third, the subproblems are ranked using the $r_{\max}$ heuristic (line 4). Fourth, the subproblems are executed in order of decreasing importance (line 5), a process that determines an action for each agent and thus generates a joint policy.

3.3 Run Time Complexity Analysis

This section establishes the run time complexity of the HD-MMDP algorithm and shows how it compares favorably to classical DP approaches. Let $n_t$ be the number of leaf reward functions, $n_a$ the number of agents, $|S|$ the size of a single agent's state space, $|A|$ the size of a single agent's action space, and $n_{\max}$ the number of required agents in the largest leaf task.

1. Decomposition: $O(n_t)$

In the structural decomposition step, each recursive call of the Decomposition subroutine expands one internal functional node. Since each internal functional node has at least two children, the number of internal functional nodes is at most $n_t - 1$, with equality when every internal node has exactly two children (a full binary tree). Thus, the run time of this step scales linearly in the number of leaf tasks, $n_t$.

2. Solving Leaf Tasks: $O(n_t |S|^{2 n_{\max}} |A|^{n_{\max}})$

Recall from Section 2.3 that the run time complexity of value iteration is $O(|S_{MDP}|^2 |A_{MDP}|)$. Also, the joint state and action spaces of $n$ agents have sizes $|S|^n$ and $|A|^n$, respectively. The largest leaf task (with $n_{\max}$ agents) therefore has state and action spaces of sizes $|S|^{n_{\max}}$ and $|A|^{n_{\max}}$, respectively, and the run time complexity for solving it is $O(|S|^{2 n_{\max}} |A|^{n_{\max}})$. Since there are at most $n_t$ leaf tasks, each no larger than the largest one, solving every leaf task has run time complexity $O(n_t |S|^{2 n_{\max}} |A|^{n_{\max}})$.

3. Ranking Leaf Tasks: $O(n_t \log n_t)$

Computing the maximum expected cumulative reward ($r_{\max}$) for every internal functional node has run time complexity $O(n_t)$, because there are at most $n_t - 1$ internal functional nodes and calculating $r_{\max}$ at each node involves a constant-time function evaluation. The sorting of leaf tasks can be implemented as a depth-first search, which has run time complexity $O(n_t \log n_t)$.

4. Task Allocation: $O(n_t n_a^2)$

In the task allocation step (Algorithm 5), finding the best agent for executing a leaf task (line 5) requires looping through each agent, which is $O(n_a)$. For each leaf task, this procedure may need to be repeated up to $n_a$ times to reach the desired degree of redundancy (line 7). In addition, the subroutine loops through all $n_t$ leaf tasks (line 2). Hence, the task allocation step is $O(n_t n_a^2)$.
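The bound used in step 1 (at most $n_t - 1$ internal functional nodes when every internal node has at least two children) can be checked on small trees. The sketch below uses a hypothetical encoding in which a leaf task is a string and an internal functional node is a tuple of its children. It is an illustration of the counting argument, not the Decomposition subroutine itself.

```python
def count_nodes(tree):
    """Return (n_t, n_internal) for a task tree.

    Hypothetical encoding: a leaf task is a string and an internal functional
    node is a tuple of its children (at least two, as in the decomposition).
    """
    if isinstance(tree, str):
        return 1, 0
    if len(tree) < 2:
        raise ValueError("internal functional nodes need at least two children")
    n_leaves, n_internal = 0, 1          # count this internal node
    for child in tree:
        leaves, internal = count_nodes(child)
        n_leaves += leaves
        n_internal += internal
    return n_leaves, n_internal


balanced = (("T1", "T2"), ("T3", "T4"))   # perfect binary tree
chain = ("T1", ("T2", ("T3", "T4")))      # full but unbalanced binary tree
flat = ("T1", "T2", "T3", "T4")           # one node with four children
print([count_nodes(t) for t in (balanced, chain, flat)])  # [(4, 3), (4, 3), (4, 1)]
```

Any tree whose internal nodes all have exactly two children reaches $n_t - 1$; wider nodes only reduce the count, so the number of recursive expansions stays linear in $n_t$.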

The run time complexity of HD-MMDP is summarized in Table 3.2. For the applications considered in this work, the magnitude of each variable is approximately $n_t \sim 10$, $|S| \sim 100$, $|A| \sim 10$, $n_{\max} \sim 3$, and $n_a \sim 10$. Thus, the most expensive step is solving the leaf tasks individually, as highlighted in red in the second row of the table.


Table 3.2: Run Time Complexity of HD-MMDP

Step                          Run Time Complexity
1) Decomposition              $O(n_t)$
2) Solving Leaf Tasks         $O(n_t |S|^{2 n_{\max}} |A|^{n_{\max}})$
3) Ranking of Leaf Tasks      $O(n_t \log n_t)$
4) Task Allocation            $O(n_t n_a^2)$

In comparison, value iteration (VI) on the original MMDP has run time complexity $O(|S|^{2 n_a} |A|^{n_a})$. Since planning spaces scale exponentially with the number of agents, and the largest leaf task involves far fewer agents than the full team ($n_{\max} \ll n_a$), VI is orders of magnitude more expensive than the proposed algorithm.
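Plugging the representative magnitudes quoted above into the complexity expressions gives a rough sense of scale. The sketch below drops constant factors and the number of value-iteration sweeps, so the numbers are operation-count estimates per sweep under those assumptions, not measured run times; the function names are hypothetical.

```python
import math


def step_costs(n_t, S, A, n_max, n_a):
    """Rough operation counts for the four HD-MMDP steps (constants dropped)."""
    return {
        "1) Decomposition": n_t,
        "2) Solving Leaf Tasks": n_t * S ** (2 * n_max) * A ** n_max,
        "3) Ranking of Leaf Tasks": n_t * math.log2(n_t),
        "4) Task Allocation": n_t * n_a ** 2,
    }


def vi_cost(S, A, n_a):
    """One value-iteration sweep on the full MMDP: |S|^(2 n_a) |A|^(n_a)."""
    return S ** (2 * n_a) * A ** n_a


costs = step_costs(n_t=10, S=100, A=10, n_max=3, n_a=10)
hd = costs["2) Solving Leaf Tasks"]        # 10 * 100**6 * 10**3 = 10**16
vi = vi_cost(S=100, A=10, n_a=10)          # 100**20 * 10**10 = 10**50
print(max(costs, key=costs.get))           # 2) Solving Leaf Tasks
print(vi // hd == 10**34)                  # True
```

Under these magnitudes, step 2 dominates the other HD-MMDP steps by many orders of magnitude, and a single sweep of VI on the joint MMDP is roughly $10^{34}$ times more expensive than solving all leaf tasks.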

3.4 Summary

This chapter introduced the Hierarchically Decomposed Multi-agent Markov Decision Process (HD-MMDP) algorithm. First, common structures in the MMDP's reward function were explored and related to the coupling strength between agents. Then, the mechanics of the HD-MMDP algorithm were developed. The run time complexity of HD-MMDP was shown to be much lower than that of classical dynamic programming approaches. The next chapter performs an empirical analysis of HD-MMDP by applying it to a multi-agent persistent search and track (PST) mission.