Semiparametric Estimation of Fixed Effects Panel Data Models with Smooth Coefficients

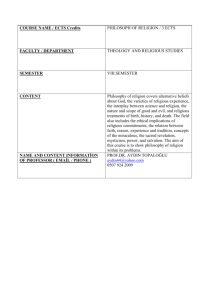

advertisement

Semiparametric Estimation of Fixed

E¤ects Panel Data Models with Smooth

Coe¢ cients

Yiguo Sun

Department of Economics

University of Guelph

Guelph, ON, Canada N1G2W1

yisun@uoguelph.ca

Raymond J. Carroll

Department of Statistics, Texas A&M University

College Station, TX 77843-3134,

carroll@stat.tamu.edu

Qi Li

Department of Economics, Texas A&M University

College Station, TX 77843-4228,

qi@econmail.tamu.edu

Abstract

In this paper we consider the problem of estimating semiparametric fixed-effects panel data models with smooth coefficients by a local linear regression approach. We show that the proposed estimator has the usual nonparametric convergence rate and is asymptotically normally distributed under regularity conditions. A modified least-squares cross-validation method is used to find the optimal bandwidth automatically. Moreover, we propose a test statistic for testing the null hypothesis of random effects against fixed effects for semiparametric panel data regression models with smooth coefficients. Monte Carlo simulations are used to study the finite sample performance of the proposed estimator and test.

Some key words: Panel data; Local least squares; Smooth coefficients; Bootstrap.

Short title: Fixed Effects Panel Data Models with Smooth Coefficients

1 Introduction

Panel data trace information on each individual unit across time. Economists often find that they can overcome econometric difficulties and draw more economic inferences using panel data, which would not be possible using pure time-series data or cross-section data. With the increased availability of panel data, both theoretical and applied econometric work in panel data analysis has become increasingly popular in recent years.

To avoid imposing an inadequate parametric panel data model, many econometricians and statisticians have been working on theories of nonparametric and semiparametric panel data regression models. Among many contributions, we name a few here: Ke and Wang (2001), Li and Stengos (1996), and Ullah and Roy (1998) for semiparametric panel data models with random effects; Henderson and Ullah (2005), Lin and Carroll (2000, 2001, 2006), Lin, Wang, Welsh and Carroll (2004), Lin and Ying (2001), Ruckstuhl, Welsh and Carroll (1999), Wu and Zhang (2002) and Wang (2003) for estimation of nonparametric panel data models with random effects. Estimation of these types of models is appropriate when the individual effect is independent of the regressors.

However, random effects estimators are inconsistent if the true model is one with fixed effects, i.e. individual effects which are correlated with the regressors. Indeed, economists often view the assumptions for the random effects model as being unsupported by the data. Su and Ullah (2006) consider a fixed effects partially linear panel data model. This paper considers fixed effects panel data models with smooth coefficients. The model is assumed to apply to situations where we see smooth transitive changes across sections and/or across time.

The remainder of the paper is organized as follows: Section 2 introduces a semiparametric fixed-effects panel data regression model with smooth coefficients and the estimation methodology. In Section 3, we propose a nonparametric estimator for the unknown smooth coefficients and derive its asymptotic results. In Section 4 we propose a test statistic for testing for random effects versus fixed effects in semiparametric panel data models with smooth coefficients. Section 5 examines finite sample properties with a small Monte Carlo study. Finally, Section 6 concludes the paper. We delay detailed mathematical proofs to Appendix A for fixed-effects estimation and Appendix B for random-effects estimation.

2 Fixed Effects Semiparametric Panel Data Models with Smooth Coefficients

We consider the following semiparametric fixed-effects panel data regression model with smooth coefficients

$$Y_{i,t} = X_{i,t}^{T}\theta(Z_{i,t}) + \mu_i + v_{i,t}, \qquad (i = 1,\dots,n;\ t = 1,\dots,m) \tag{1}$$

where the covariate $Z_{i,t} = (Z_{i,t,1},\dots,Z_{i,t,q})^{T}$ is of dimension $q$, $X_{i,t} = (X_{i,t,1},\dots,X_{i,t,k})^{T}$ is of dimension $k$, $\theta(\cdot) = (\theta_1(\cdot),\dots,\theta_k(\cdot))^{T}$ contains $k$ unknown functions, and all other variables are scalars. The random errors $v_{i,t}$ are assumed to be i.i.d. with a zero mean, finite variance $\sigma_v^2$ and independent of $Z_{i,t}$ and $X_{i,t}$ for all $i$ and $t$. Further, $\mu_i$ has a finite mean and variance. We allow $\mu_i$ to be correlated with $Z_{i,t}$ and/or $X_{i,t}$ with an unknown correlation structure. Hence, model (1) is a fixed-effects model. Alternatively, when $\mu_i$ is uncorrelated with $Z_{i,t}$ and $X_{i,t}$, model (1) is a random-effects model.

For a given fixed effects model, there are many ways of removing the unknown fixed effects from the model.

Example 1 The usual first-differencing (FD) estimation method deducts one equation from another to remove the time-invariant fixed effects. For example, deducting the equation for time $t-1$ from that for time $t$, we have for $t = 2,\dots,m$

$$\tilde{y}_{i,t} = y_{i,t} - y_{i,t-1} = X_{i,t}^{T}\theta(Z_{i,t}) - X_{i,t-1}^{T}\theta(Z_{i,t-1}) + \tilde{v}_{i,t}, \qquad \tilde{v}_{i,t} = v_{i,t} - v_{i,t-1}, \tag{2}$$

or deducting the equation for time 1 from that for time $t$, we obtain for $t = 2,\dots,m$

$$\tilde{y}_{i,t} = y_{i,t} - y_{i,1} = X_{i,t}^{T}\theta(Z_{i,t}) - X_{i,1}^{T}\theta(Z_{i,1}) + \tilde{v}_{i,t}, \qquad \tilde{v}_{i,t} = v_{i,t} - v_{i,1}, \tag{3}$$

and so on.

Example 2 The conventional fixed effects (FE) estimation method, on the other hand, removes the fixed effects by deducting the cross-time average of the system from each equation, and it gives for $t = 2,\dots,m$

$$\tilde{y}_{i,t} = X_{i,t}^{T}\theta(Z_{i,t}) - \frac{1}{m}\sum_{s=1}^{m} X_{i,s}^{T}\theta(Z_{i,s}) + \tilde{v}_{i,t} = \sum_{s=1}^{m} q_{t,s} X_{i,s}^{T}\theta(Z_{i,s}) + \tilde{v}_{i,t}, \tag{4}$$

where $w_{i\cdot} = m^{-1}\sum_{t=1}^{m} w_{i,t}$ and $\tilde{w}_{i,t} = w_{i,t} - w_{i\cdot}$ with $w$ being $y$ or $v$. In addition, $q_{t,s} = -1/m$ if $s \neq t$ and $1 - 1/m$ otherwise, and $\sum_{s=1}^{m} q_{t,s} = 0$ for all $t$.

In general cases, we could introduce a constant $(m-1)\times m$ matrix $P$ with full rank $m-1$ such that $P e_m = 0_{(m-1)\times 1}$, where $e_m$ is an $m \times 1$ vector of ones.

Premultiplying $P$ to the $m$ equations for each $i$ will remove the unknown fixed effects $\mu_i$. For the FD estimator, each row of $P$ only takes values of $-1$, $0$, and $1$. Denote $P = (p_1^{\prime},\dots,p_{m-1}^{\prime})^{\prime}$, where $p_t = (p_{t,j})_{j=1}^{m}$ is a $1 \times m$ row vector. Model (2) assumes $p_{t,t} = -1$, $p_{t,t+1} = 1$, and $p_{t,j} = 0$ for $j \neq t, t+1$; $t = 1,\dots,m-1$. Model (3) assumes $p_{t,1} = -1$, $p_{t,t+1} = 1$, and $p_{t,j} = 0$ for $j \neq 1, t+1$; $t = 1,\dots,m-1$. For the FE estimator, $P = S_{m-1}\left(I_m - m^{-1} e_m e_m^{\prime}\right)$, where $S_{m-1}$ is an $(m-1)\times m$ matrix containing the last $m-1$ rows of the $m \times m$ identity matrix $I_m$. When $m = 2$, the FD estimator and the FE estimator will be exactly the same; this is not true for $m > 2$.
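The three choices of $P$ described above can be written out explicitly. The following is a minimal NumPy sketch, not part of the original paper; the function names `fd_consecutive`, `fd_base_period` and `fe_within` are hypothetical labels chosen here for the transformations behind models (2), (3) and (4).

```python
import numpy as np

def fd_consecutive(m):
    """(m-1) x m matrix for model (2): row t has -1 in column t and +1 in column t+1."""
    P = np.zeros((m - 1, m))
    for t in range(m - 1):
        P[t, t], P[t, t + 1] = -1.0, 1.0
    return P

def fd_base_period(m):
    """(m-1) x m matrix for model (3): row t has -1 in column 1 and +1 in column t+1."""
    P = np.zeros((m - 1, m))
    P[:, 0] = -1.0
    P[np.arange(m - 1), np.arange(1, m)] = 1.0
    return P

def fe_within(m):
    """Last m-1 rows of the within (demeaning) matrix I_m - e_m e_m' / m."""
    return (np.eye(m) - np.ones((m, m)) / m)[1:, :]

m = 4
e_m = np.ones(m)
for P in (fd_consecutive(m), fd_base_period(m), fe_within(m)):
    # each transformation has full row rank m-1 and wipes out a time-invariant effect
    assert np.linalg.matrix_rank(P) == m - 1
    assert np.allclose(P @ e_m, 0.0)
```

For $m = 2$ the FE matrix is the single row $(-1/2, 1/2)$, a rescaling of the FD row $(-1, 1)$, which is why the two estimators coincide in that case.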

Many nonparametric local smoothing methods can be used to estimate the unknown function $\theta(\cdot)$. However, for each $i$, the right-hand sides of equations (2)-(4) contain linear combinations of $\{X_{i,t}^{T}\theta(Z_{i,t})\}_{t=1}^{m}$ over time. If $X$ contains an intercept term, a backfitting algorithm has to be used to recover the unknown functions, which not only adds a computational burden but also complicates the mathematical proofs.

Therefore, this paper proposes a new way of removing the unknown fixed effects, motivated by the least squares dummy variable (LSDV) model in parametric panel data analysis. As the text goes along, we will describe how the proposed method removes fixed effects by deducting a smoothed version of the average across time from each observation.

Rewriting model (1) in matrix format yields

$$Y = B(X,\theta(Z)) + D_0\mu_0 + V, \tag{5}$$

where $Y = (Y_1^{T},\dots,Y_n^{T})^{T}$ and $V = (v_1^{T},\dots,v_n^{T})^{T}$ are $(nm)\times 1$ vectors, $B(X,\theta(Z))$ stacks all $X_{i,t}^{T}\theta(Z_{i,t})$ into an $(nm)\times 1$ vector in ascending order of $i$ first, then of $t$; $\mu_0 = [\mu_1,\dots,\mu_n]^{T}$ is an $n \times 1$ vector, and $D_0 = I_n \otimes e_m$ is a $(nm)\times n$ matrix with main diagonal blocks being $e_m$, where $\otimes$ refers to the Kronecker product operation. However, we cannot work on this model without further restriction on the fixed effects for cases like $X_{i,t}^{T}\theta(Z_{i,t}) = \theta_1(Z_{i,t}) + X_{i,t,2}\theta_2(Z_{i,t}) + \cdots$, since we cannot identify $\theta_1(\cdot)$ in the presence of unknown fixed effects. Therefore, we impose an identification condition like the restriction $\sum_{i=1}^{n}\mu_i = 0$, which has been used by Su and Ullah (2006). Define $\mu = [\mu_2,\dots,\mu_n]^{T}$. With $\sum_{i=1}^{n}\mu_i = 0$, we can rewrite (5) as

$$Y = B(X,\theta(Z)) + D\mu + V, \tag{6}$$

where $D = [-e_{n-1},\ I_{(n-1)\times(n-1)}]^{T} \otimes e_m$ is a $(nm)\times(n-1)$ matrix.

Define an $m \times m$ diagonal matrix $K_H(Z_i,z) = \mathrm{diag}\left(K_H(Z_{i,1},z),\dots,K_H(Z_{i,m},z)\right)$ for $i = 1,2,\dots,n$, and a $(nm)\times(nm)$ diagonal matrix $W_H(z) = \mathrm{diag}\left(K_H(Z_1,z),\dots,K_H(Z_n,z)\right)$, where $K_H(Z_{i,t},z) = K\left(H^{-1}(Z_{i,t}-z)\right)$ for all $i$ and $t$, and $H = \mathrm{diag}(h_1,\dots,h_q)$ is a $q \times q$ diagonal smoothing matrix. We then solve the following optimization problem

$$\min_{\theta(Z),\,\mu}\ \left(Y - B(X,\theta(Z)) - D\mu\right)^{T} W_H(z)\left(Y - B(X,\theta(Z)) - D\mu\right). \tag{7}$$

We use the local weight matrix $W_H(z)$ to take into account the localness of our nonparametric fitting, and place no weight matrix for data variation since $\{v_{i,t}\}$ are i.i.d. across equations. Taking the first-order condition with respect to $\mu$ gives

$$D^{T} W_H(z)\left(Y - B(X,\theta(Z)) - D\hat{\mu}(z)\right) = 0, \tag{8}$$

which yields

$$\hat{\mu}(z) = \left[D^{T}W_H(z)D\right]^{-1} D^{T}W_H(z)\left(Y - B(X,\theta(Z))\right). \tag{9}$$

Replacing $\mu$ in (7) by $\hat{\mu}(z)$, we obtain the concentrated weighted least squares

$$\min_{\theta(Z)}\ \left(Y - B(X,\theta(Z))\right)^{T} S_H(z)\left(Y - B(X,\theta(Z))\right), \tag{10}$$

where we define $S_H(z) = M_H(z)^{T}W_H(z)M_H(z)$ and $M_H(z) = I_{(nm)\times(nm)} - D\left[D^{T}W_H(z)D\right]^{-1}D^{T}W_H(z)$. Apparently, $M_H(z)D\mu \equiv 0_{(nm)\times 1}$ for all $z$ effectively removes unknown fixed effects from model (1).

How does $M_H(z)$ transform the observed data? Simple calculations give

$$M_H(z) = I_{(nm)\times(nm)} - D\left(A^{-1} - \frac{A^{-1}e_{n-1}e_{n-1}^{T}A^{-1}}{\sum_{i=1}^{n}c_H(Z_i,z)}\right)D^{T}W_H(z),$$

where $c_H(Z_i,z)^{-1} = \sum_{t=1}^{m}K_H(Z_{i,t},z)$ for $i = 1,\dots,n$ and $A = \mathrm{diag}\left(c_H(Z_2,z)^{-1},\dots,c_H(Z_n,z)^{-1}\right)$. We use the formula $(A + BCD)^{-1} = A^{-1} - A^{-1}B\left(DA^{-1}B + C^{-1}\right)^{-1}DA^{-1}$ to derive the inverse matrix, see Appendix B in Poirier (1995).
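The closed form of $M_H(z)$ can be checked numerically. The sketch below is an illustration rather than the authors' code; it assumes a scalar covariate ($q = 1$), an unnormalized Gaussian kernel, and arbitrary values for $n$, $m$, $h$ and $z$. It builds $D$, $W_H(z)$ and the closed-form inverse of $D^{T}W_H(z)D$, from which $M_H(z)$ and $S_H(z)$ follow.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m, h, z = 6, 3, 0.4, 0.5                      # illustrative sizes and bandwidth
Z = rng.uniform(0.0, 1.0, size=(n, m))           # scalar covariate, q = 1
K = np.exp(-0.5 * ((Z - z) / h) ** 2)            # kernel weights K_H(Z_it, z)

W = np.diag(K.ravel())                           # W_H(z), stacked by i first, then t
Delta = np.vstack([-np.ones((1, n - 1)), np.eye(n - 1)])   # [-e_{n-1}, I_{n-1}]^T
D = np.kron(Delta, np.ones((m, 1)))              # (nm) x (n-1) dummy matrix

c = 1.0 / K.sum(axis=1)                          # c_H(Z_i, z) for i = 1..n
A_inv = np.diag(c[1:])                           # A^{-1} = diag(c_H(Z_2,z), ..., c_H(Z_n,z))
e = np.ones((n - 1, 1))
closed_inv = A_inv - (A_inv @ e @ e.T @ A_inv) / c.sum()

M = np.eye(n * m) - D @ closed_inv @ D.T @ W     # M_H(z)
S = M.T @ W @ M                                  # S_H(z)

# the closed form agrees with direct inversion, and M_H(z) annihilates D
assert np.allclose(closed_inv, np.linalg.inv(D.T @ W @ D))
assert np.allclose(M @ D, 0.0)
```

Two by-products are visible in the same check: $S_H(z)$ reduces to $W_H(z)M_H(z)$, and $M_H(z)$ maps a vector of ones to unit-specific constants proportional to $c_H(Z_i,z)$, so an intercept is rescaled rather than removed.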

3 Nonparametric Estimator and Asymptotic Theory

The local linear regression approach is usually used to estimate non-/semi-parametric models. The basic idea of this method is to apply a Taylor expansion up to the second-order derivative. That is, for each $l = 1,\dots,k$, we have the following Taylor expansion around a point $z$:

$$\theta_l(z_{i,t}) \approx \theta_l(z) + \left[H\theta_l^{\prime}(z)\right]^{T}H^{-1}(z_{i,t}-z) + \frac{1}{2}\,r_{H,l}(z_{i,t},z), \qquad H^{-1}(z_{i,t}-z) = O(1)\ \text{a.s.}, \tag{11}$$

where $r_{H,l}(z_{i,t},z) = \left[H^{-1}(z_{i,t}-z)\right]^{T}\left[H\,\dfrac{\partial^2\theta_l(z)}{\partial z\,\partial z^{T}}\,H\right]H^{-1}(z_{i,t}-z)$. $\theta_l(z)$ approximates $\theta_l(z_{i,t})$ and $\theta_l^{\prime}(z)$ approximates $\theta_l^{\prime}(z_{i,t})$ when $z_{i,t}$ is close to $z$. Define $\beta_l(z) = \left[\theta_l(z),\ \left(H\theta_l^{\prime}(z)\right)^{T}\right]^{T}$, a $(q+1)\times 1$ column vector for $l = 1,2,\dots,k$, and $\beta(z) = \left[\beta_1(z),\dots,\beta_k(z)\right]^{T}$, a $k \times (q+1)$ parameter matrix. The first column of $\beta(z)$ is $\theta(z)$. Therefore, we will replace $\theta(Z_{i,t})$ in (1) by $\beta(z)G_{i,t}(z,H)$ for each $i$ and $t$, where $G_{i,t}(z,H) = \left[1,\ \{H^{-1}(Z_{i,t}-z)\}^{T}\right]^{T}$ is a $(q+1)\times 1$ vector.

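To make the matrix operations simpler, we stack the parameter matrix $\beta(z)$ into a $[k(q+1)]\times 1$ column vector and denote it by $\mathrm{vec}(\beta(z))$. Since $\mathrm{vec}(ABC) = (C^{T}\otimes A)\,\mathrm{vec}(B)$ and $(A\otimes B)^{T} = A^{T}\otimes B^{T}$, where $\otimes$ refers to the Kronecker product, we have $X_{i,t}^{T}\beta(z)G_{i,t}(z,H) = \left(G_{i,t}(z,H)\otimes X_{i,t}\right)^{T}\mathrm{vec}(\beta(z))$ for all $i$ and $t$. Thus, we consider the following minimization problem

$$\min_{\beta(z)}\ \left[Y - R(z,H)\,\mathrm{vec}(\beta(z))\right]^{T}S_H(z)\left[Y - R(z,H)\,\mathrm{vec}(\beta(z))\right], \tag{12}$$

where

$$R_i(z,H) = \begin{bmatrix}\left(G_{i,1}(z,H)\otimes X_{i,1}\right)^{T}\\ \vdots\\ \left(G_{i,m}(z,H)\otimes X_{i,m}\right)^{T}\end{bmatrix}\ \text{is an } m\times[k(q+1)] \text{ matrix}, \tag{13}$$

$$R(z,H) = \left[R_1(z,H)^{T},\dots,R_n(z,H)^{T}\right]^{T}\ \text{is a } (nm)\times[k(q+1)] \text{ matrix}. \tag{14}$$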
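The Kronecker identity behind the rows of $R(z,H)$ is easy to confirm numerically. The following short sketch uses arbitrary dimensions and random inputs (all values are illustrative assumptions) and relies on column-major stacking for $\mathrm{vec}(\cdot)$, so that the first $k$ entries of the stacked vector are the first column of the parameter matrix.

```python
import numpy as np

rng = np.random.default_rng(1)
k, q = 3, 2
beta = rng.normal(size=(k, q + 1))                    # k x (q+1) parameter matrix
X = rng.normal(size=k)                                # X_it, a k-vector
G = np.concatenate([[1.0], rng.normal(size=q)])       # G_it = [1, {H^{-1}(Z_it - z)}^T]^T

vec_beta = beta.reshape(-1, order="F")                # column-major vec(beta)
lhs = X @ beta @ G                                    # X_it^T beta(z) G_it
rhs = np.kron(G, X) @ vec_beta                        # (G_it kron X_it)^T vec(beta)

assert np.isclose(lhs, rhs)
assert np.allclose(vec_beta[:k], beta[:, 0])          # first k entries = first column
```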

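Simple calculations give

$$\mathrm{vec}\left(\hat{\beta}(z)\right) = \left[R(z,H)^{T}S_H(z)R(z,H)\right]^{-1}R(z,H)^{T}S_H(z)Y \tag{15}$$

$$\qquad\qquad = \mathrm{vec}(\beta(z)) + \left[R(z,H)^{T}S_H(z)R(z,H)\right]^{-1}\left(A_n/2 + B_n\right), \tag{16}$$

where $A_n = R(z,H)^{T}S_H(z)\Phi(z,H)$ and $B_n = R(z,H)^{T}S_H(z)V$. The $(t + (i-1)m)$th element of the column vector $\Phi(z,H)$ is $X_{i,t}^{T}r_H(\tilde{Z}_{i,t},z)$, where $r_H(\cdot,\cdot) = \left[r_{H,1}(\cdot,\cdot),\dots,r_{H,k}(\cdot,\cdot)\right]^{T}$ and $r_{H,l}(\tilde{Z}_{i,t},z) = \left[H^{-1}(Z_{i,t}-z)\right]^{T}\left[H\,\dfrac{\partial^2\theta_l(\tilde{Z}_{i,t})}{\partial z\,\partial z^{T}}\,H\right]H^{-1}(Z_{i,t}-z)$ with $\tilde{Z}_{i,t}$ lying between $Z_{i,t}$ and $z$ for each $i$ and $t$.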
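Equation (15) translates directly into code. The function below is a minimal dense-matrix sketch under assumptions not made in the paper: a scalar covariate ($q = 1$), a Gaussian kernel, and the hypothetical name `fe_local_linear`. It is meant to illustrate the construction of $D$, $W_H(z)$, $M_H(z)$, $S_H(z)$ and $R(z,H)$, not to be an efficient implementation.

```python
import numpy as np

def fe_local_linear(Y, X, Z, z, h):
    """Fixed-effects local linear estimate of theta(z) via (15), for scalar Z.

    Y: (n, m) responses, X: (n, m, k) regressors, Z: (n, m) covariate,
    z: evaluation point, h: bandwidth. Returns the first k entries of vec(beta-hat).
    """
    n, m, k = X.shape
    u = (Z - z) / h                                        # H^{-1}(Z_it - z)
    w = np.exp(-0.5 * u ** 2) / np.sqrt(2.0 * np.pi)       # Gaussian kernel (an assumption)
    W = np.diag(w.ravel())                                 # W_H(z), stacked by i then t
    Delta = np.vstack([-np.ones((1, n - 1)), np.eye(n - 1)])
    D = np.kron(Delta, np.ones((m, 1)))                    # D = [-e_{n-1}, I_{n-1}]^T kron e_m
    M = np.eye(n * m) - D @ np.linalg.solve(D.T @ W @ D, D.T @ W)   # M_H(z)
    S = M.T @ W @ M                                        # S_H(z)
    G = np.stack([np.ones_like(u), u], axis=-1)            # G_it = [1, u_it], shape (n, m, 2)
    # each row of R is (G_it kron X_it)^T, with the G index varying slowest
    R = np.einsum("itg,itk->itgk", G, X).reshape(n * m, 2 * k)
    vec_beta = np.linalg.solve(R.T @ S @ R, R.T @ S @ Y.ravel())
    return vec_beta[:k]                                    # theta-hat(z)
```

A convenient sanity check: when the true coefficient functions are linear in $z$, there is no noise, and the fixed effects sum to zero, the local linear fit is exact, so the function should return $\theta(z)$ up to rounding error regardless of the bandwidth.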

To derive the asymptotic distribution of $\mathrm{vec}(\hat{\beta}(z))$, we first give some regularity conditions. We use $M > 0$ to stand for a finite constant.

Assumption 1: The random variables $(Y_{i,t}, X_{i,t}, Z_{i,t})$ are independent and identically distributed (iid) across the $i$ index, and $E\left\|X_{1,t}X_{1,s}^{T}\otimes X_{1,t}X_{1,s}^{T}\right\| \le M < \infty$ for all $t$ and $s$. $Z_{i,t}$ are continuous random variables with a pdf $f_t(\cdot)$, and conditional pdfs $f_t(\cdot\,|\,x_{i,t})$ and $f_t(z\,|\,x_{i,t},x_{i,s})$. Denote $f_{t,s}(z,z\,|\,x_{i,t},x_{i,s})$ to be the joint pdf of $(Z_{i,t},Z_{i,s})$ conditional on $(X_{i,t},X_{i,s}) = (x_{i,t},x_{i,s})$. Also, all these (marginal or conditional) pdfs and $\theta(\cdot)$ are twice continuously differentiable functions and their second-order derivatives satisfy Lipschitz conditions. Let $S_t$ denote the support of $Z_{i,t}$; then $f_t(z)$ is bounded away from zero in its domain $S_t$.

Assumption 2: $X$ has full rank $k$, and $X_{i,t,l} \neq X_{i,t,l^{\prime}}Z_{i,t,j}$ for any $l \neq l^{\prime}$ ($l, l^{\prime} = 1,\dots,k$), and $j = 1,\dots,q$. In addition, $n-1$ out of the $n$ variables $\{\mu_i\}_{i=1}^{n}$ are independently distributed with zero mean and variance $\sigma_\mu^2$, and the other one is determined by $\sum_{i=1}^{n}\mu_i = 0$. If $X_{i,t,l} \equiv X_{i,l}$ for some $l$, we assume $n^{-1}\sum_{i=1}^{n}X_{i,l} \neq 0$.

This is one identification condition. For cases that $X_{1,t} \equiv 1$, $\theta_1(z)$ is estimable if $M_H(z)(e_n \otimes e_m) \neq 0$ for a given $z$. Simple calculations give

$$M_H(z)(e_n\otimes e_m) = n\left(\sum_{i=1}^{n}c_H(Z_i,z)\right)^{-1}\left(c_H(Z_1,z),\dots,c_H(Z_n,z)\right)^{T}\otimes e_m;$$

the proof of Lemma 6 in Appendix A can be used to show that $M_H(z)(e_n\otimes e_m) \neq 0$ for any given $z$ with probability one.

In addition, for cases that $X_{i,t,l} \equiv X_{i,l}$ and $X_{i,l}$ is not constant for some $l$, i.e. the $l$th variable in $X$ is time invariant, $\theta_l(z)$ is estimable if $M_H(z)(b\otimes e_m) \neq 0$ for a given $z$, where $b$ is an $n \times 1$ vector with $b_i = X_{i,l}$. Simple calculations give $M_H(z)(b\otimes e_m) = \dfrac{b_1 + \cdots + b_n}{n}\,M_H(z)(e_n\otimes e_m)$. Therefore, $\theta_l(z)$ is identifiable if $\sum_{i=1}^{n}X_{i,l} \neq 0$.

There could be other ways of introducing identification conditions. We use Su and Ullah's (2006) assumption, $\sum_{i=1}^{n}\mu_i = 0$; this restriction simplifies estimation and proofs, and there is no need to do backfitting iteration.

Assumption 3: $K(v) = \prod_{s=1}^{q}k(v_s)$ is a product kernel, the univariate kernel function $k(\cdot)$ is a uniformly bounded, symmetric (around zero) probability density function and its first four moments are finite. In addition, define $|H| = h_1\cdots h_q$ and $\|H\| = \sqrt{\sum_{j=1}^{q}h_j^2}$. As $n \to \infty$, $\|H\| \to 0$ and $n|H| \to \infty$.

$\theta(z)$ is to be estimated by $\hat{\theta}(z)$, which is the first column of $\hat{\beta}(z)$.

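Theorem 3 Under Assumptions 1-3, we have the following bias and variance for $\hat{\theta}(z)$:

$$\mathrm{Bias}\left(\hat{\theta}(z)\right) = \frac{1}{2}\int K(u)\,uu^{T}du\ \Upsilon_H(z) + o\left(\|H\|^2\right),$$

$$\mathrm{Var}\left(\hat{\theta}(z)\right) = 2\sigma_v^2\,n^{-1}|H|^{-1}\Lambda^{-1}\int K^2(u)\,du + o\left(n^{-1}|H|^{-1}\right),$$

where $\Lambda = \sum_{t=1}^{m}\left(1 - \sqrt{\dfrac{f_t(z)}{f(z)}}\right)E\left[f_t(z\,|\,X_{1,t})X_{1,t}X_{1,t}^{T}\right]$, $f(z) = \sum_{t=1}^{m}f_t(z)$, and $\Upsilon_H(z) = \left[\mathrm{tr}\left(H\dfrac{\partial^2\theta_1(z)}{\partial z\,\partial z^{T}}H\right),\dots,\mathrm{tr}\left(H\dfrac{\partial^2\theta_k(z)}{\partial z\,\partial z^{T}}H\right)\right]^{T}$.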

Moreover, we have derived the following asymptotic normality results for $\hat{\theta}(z)$:

Theorem 4 Under Assumptions 1-3, $E\|X_{i,t}\|^{2+\delta} < \infty$, $E|v_{i,t}|^{2+\delta} < \infty$ for some $\delta > 0$, and $\sqrt{n|H|}\,\|H\|^2 = o(1)$ as $n \to \infty$, we have $\sqrt{n|H|}\left(\hat{\theta}(z) - \theta(z)\right) \xrightarrow{d} N\left(0, \Sigma_\theta(z)\right)$, where $\Sigma_\theta(z) = 2\sigma_v^2\int K^2(u)\,du\ \Lambda^{-1}$. Moreover, a consistent estimator for $\Sigma_\theta(z)$ is given as follows:

$$\hat{\Sigma}_\theta(z) = S_k\,\hat{\Omega}(z,H)^{-1}\hat{J}(z,H)\,\hat{\Omega}(z,H)^{-1}S_k^{T} \xrightarrow{p} \Sigma_\theta(z),$$

$$\hat{\Omega}(z,H) = n^{-1}|H|^{-1}R(z,H)^{T}S_H(z)R(z,H),$$

$$\hat{J}(z,H) = n^{-1}|H|^{-1}R(z,H)^{T}S_H(z)\hat{V}\hat{V}^{T}S_H(z)R(z,H),$$

where $\hat{V}$ is the vector of estimated residuals and $S_k$ includes the first $k$ rows of the $[k(q+1)]\times[k(q+1)]$ identity matrix.

When calculating $\hat{J}(z,H)$, we do not need to estimate $\mu$, since $S_H(z)\hat{V}\hat{V}^{T}S_H(z) = W_H(z)\left(M_H(z)\hat{V}\right)\left(M_H(z)\hat{V}\right)^{T}W_H(z)$ and $M_H(z)D = 0$; $M_H(z)\hat{V}$ are the estimated residuals from the local least squares estimation (12).

4 A Nonparametric Test: Random Effects vs Fixed Effects

In this section we discuss how to test for the presence of random effects versus fixed effects in the semiparametric panel data model with smooth coefficients. The model remains as (1). Define $u_{i,t} = \mu_i + v_{i,t}$. The random effects specification assumes that $\mu_i$ is uncorrelated with the regressors $X$ and $Z$, while for the fixed effects case, $\mu_i$ is allowed to be correlated with $X$ and/or $Z$ in an unknown way.

We are interested in testing the null hypothesis ($H_0$) that $\mu_i$ is a random effect versus the alternative hypothesis ($H_1$) that $\mu_i$ is a fixed effect. The null and alternative hypotheses can be written as

$$H_0:\ \Pr\left[E(\mu_i\,|\,Z_{i,1},\dots,Z_{i,m},X_{i,1},\dots,X_{i,m}) = 0\right] = 1 \ \text{for all } i, \tag{17}$$

$$H_1:\ \Pr\left[E(\mu_i\,|\,Z_{i,1},\dots,Z_{i,m},X_{i,1},\dots,X_{i,m}) \neq 0\right] > 0 \ \text{for some } i, \tag{18}$$

while we keep the same setup given in model (1) under both $H_0$ and $H_1$.

Our statistic is based on the squared difference between the FE and RE estimators, which is asymptotically zero under $H_0$ and positive under $H_1$. To simplify the proofs and save computing time, we use the local constant estimator instead of the local linear estimator for the test. Then following the argument in Section 2 and Appendix B, we have

$$\hat{\theta}_{FE}(z) = \left[X^{T}S_H(z)X\right]^{-1}X^{T}S_H(z)Y,$$

$$\hat{\theta}_{RE}(z) = \left[X^{T}W_H(z)X\right]^{-1}X^{T}W_H(z)Y,$$

where $X$ is a $(nm)\times k$ matrix with $X = \left(X_1^{T},\dots,X_n^{T}\right)^{T}$, and for each $i$, $X_i = \left(X_{i,1},\dots,X_{i,m}\right)^{T}$ is an $m \times k$ matrix with $X_{i,t} = \left[X_{i,t,1},\dots,X_{i,t,k}\right]^{T}$. Motivated by Li, Huang, Li, and Fu (2002), we remove the random denominator of $\hat{\theta}_{FE}(z)$ by multiplying by $X^{T}S_H(z)X$, and the test statistic is defined as

$$T_n = \int\left[\hat{\theta}_{FE}(z) - \hat{\theta}_{RE}(z)\right]^{T}\left[X^{T}S_H(z)X\right]^{T}\left[X^{T}S_H(z)X\right]\left[\hat{\theta}_{FE}(z) - \hat{\theta}_{RE}(z)\right]dz = \int\tilde{U}(z)^{T}S_H(z)XX^{T}S_H(z)\tilde{U}(z)\,dz$$

since $\left[X^{T}S_H(z)X\right]\left[\hat{\theta}_{FE}(z) - \hat{\theta}_{RE}(z)\right] = X^{T}S_H(z)\left[Y - X\hat{\theta}_{RE}(z)\right] \triangleq X^{T}S_H(z)\tilde{U}(z)$.

To simplify the statistic, we make several changes in $T_n$. Firstly, we simplify the integration calculation by replacing $\tilde{U}(z)$ by $\hat{U}$, where $\hat{U} = Y - B(X,\hat{\theta}_{RE}(Z))$ and $B(X,\hat{\theta}_{RE}(Z))$ stacks up $X_{i,t}^{T}\hat{\theta}_{RE}(Z_{i,t})$ in the increasing order of $i$ first, then of $t$. Secondly, to overcome the complexity caused by the random denominator in $M_H(z)$, we replace $M_H(z)$ by $M_D = I_{nm} - m^{-1}I_n\otimes\left(e_me_m^{T}\right)$, and apparently, $M_DD = 0$. Now removing the term of $j = i$, we obtain

$$\tilde{T}_n = \sum_{i=1}^{n}\sum_{j\neq i}\hat{U}_i^{T}Q_m\int K_H(Z_i,z)X_iX_j^{T}K_H(Z_j,z)\,dz\ Q_m\hat{U}_j,$$

where $Q_m = I_m - m^{-1}e_me_m^{T}$. If $|H| \to 0$ as $n \to \infty$ and $E\|X_{i,t}\|^4 < M < \infty$, we obtain

$$|H|^{-1}\int K_H(Z_i,z)X_iX_j^{T}K_H(Z_j,z)\,dz \approx \begin{bmatrix}\bar{K}_H(Z_{i,1},Z_{j,1})X_{i,1}^{T}X_{j,1} & \cdots & \bar{K}_H(Z_{i,1},Z_{j,m})X_{i,1}^{T}X_{j,m}\\ \vdots & \ddots & \vdots\\ \bar{K}_H(Z_{i,m},Z_{j,1})X_{i,m}^{T}X_{j,1} & \cdots & \bar{K}_H(Z_{i,m},Z_{j,m})X_{i,m}^{T}X_{j,m}\end{bmatrix}, \tag{19}$$

where $\bar{K}_H(Z_{i,t},Z_{j,s}) = \int K\left(H^{-1}(Z_{i,t}-Z_{j,s}) + \omega\right)K(\omega)\,d\omega$. Here $X_iX_j^{T}$ is the $m \times m$ matrix with $(t,s)$ element $X_{i,t}^{T}X_{j,s}$, which is what conforms with the $m \times m$ kernel matrices on either side. We then replace $\bar{K}_H(Z_{i,t},Z_{j,s})$ by $K_H(Z_{i,t},Z_{j,s})$; this replacement will not affect the essence of the test statistic since the local weight is untouched. Now, our proposed test statistic is given as

$$\hat{T}_n = \frac{1}{n^2|H|}\sum_{i=1}^{n}\sum_{j\neq i}\hat{U}_i^{T}Q_mA_{i,j}Q_m\hat{U}_j, \tag{20}$$

where $A_{i,j}$ equals the right-hand side of equation (19) after replacing $\bar{K}_H(Z_{i,t},Z_{j,s})$ by $K_H(Z_{i,t},Z_{j,s})$. Finally, to remove the asymptotic bias term of the proposed test statistic, the random-effects estimators of $\theta(Z_{i,t})$ are leave-one-unit-out random effects estimators; for a given pair of $(i,j)$, $i \neq j$, $\hat{\theta}_{RE}(Z_{i,t})$ is calculated without using observations on $\{(X_{j,t},Z_{j,t},Y_{j,t})\}_{t=1}^{m}$.
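Given a matrix of residuals, the double sum in (20) can be evaluated with one large kernel matrix. The sketch below is an illustration under stated assumptions: scalar $Z$, a Gaussian kernel, residuals supplied by the caller (the leave-one-unit-out RE fit is not reproduced here), and the hypothetical function name `re_vs_fe_statistic`.

```python
import numpy as np

def gaussian_kernel(u):
    return np.exp(-0.5 * u ** 2) / np.sqrt(2.0 * np.pi)

def re_vs_fe_statistic(U_hat, X, Z, h):
    """Evaluate the statistic in (20) for scalar Z.

    U_hat: (n, m) residuals, X: (n, m, k) regressors, Z: (n, m) covariate, h: bandwidth.
    """
    n, m, k = X.shape
    U_dm = U_hat - U_hat.mean(axis=1, keepdims=True)     # Q_m U_i: within-unit demeaning
    a = U_dm.ravel()
    Xf = X.reshape(n * m, k)
    Zf = Z.ravel()
    Kmat = gaussian_kernel((Zf[:, None] - Zf[None, :]) / h)   # K_H(Z_it, Z_js)
    Amat = Kmat * (Xf @ Xf.T)                            # all blocks A_{i,j} at once
    unit = np.repeat(np.arange(n), m)
    Amat[unit[:, None] == unit[None, :]] = 0.0           # drop the j = i blocks
    return (a @ Amat @ a) / (n ** 2 * h)
```

Because $Q_m$ is symmetric, $\hat{U}_i^{T}Q_mA_{i,j}Q_m\hat{U}_j$ only involves the demeaned residuals, which is why the function demeans once and then forms a single quadratic form.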

We present the asymptotic properties of this test below and delay the proofs to Appendix C.

Theorem 5 Under Assumptions 1-3, and $n\sqrt{|H|}\,\|H\|^4 \to 0$ as $n \to \infty$, we have under $H_0$ as $n \to \infty$

$$J_n = n\sqrt{|H|}\,\hat{T}_n/\hat{\sigma}_0 \xrightarrow{d} N(0,1), \tag{21}$$

where

$$\hat{\sigma}_0^2 = \frac{1}{n^2|H|}\sum_{i=1}^{n}\sum_{j\neq i}\left(\hat{V}_i^{T}Q_mA_{i,j}Q_m\hat{V}_j\right)^2 \tag{22}$$

is a consistent estimator of

$$\sigma_0^2 = 2\left(1 - \frac{1}{m}\right)^2\sigma_v^4\int K^2(u)\,du\ \sum_{t=1}^{m}\sum_{s=1}^{m}E\left[f_{2,t|1,s}\left(Z_{1,s}\,|\,Z_{1,s},X_{1,s},X_{2,t}\right)\left(X_{1,s}^{T}X_{2,t}\right)^2\right],$$

where $\hat{V}_{i,t} = Y_{i,t} - X_{i,t}^{T}\hat{\theta}_{FE}(Z_{i,t}) - \hat{\mu}_i$, $\hat{\theta}_{FE}(Z_{i,t})$ is a leave-two-unit-out FE estimator without using the observations from the $i$th and $j$th units, and $\hat{\mu}_i = \bar{Y}_i - m^{-1}\sum_{t=1}^{m}X_{i,t}^{T}\hat{\theta}_{FE}(Z_{i,t})$. Under $H_1$, $\Pr[J_n > B_n] \to 1$ as $n \to \infty$, where $B_n$ is any nonstochastic sequence with $B_n = o\left(n\sqrt{|H|}\right)$.

Theorem 5 states that the test statistic $J_n = n\sqrt{|H|}\,\hat{T}_n/\hat{\sigma}_0$ is a consistent test for testing $H_0$ against $H_1$. It is a one-sided test. If $J_n$ is greater than the critical value from the standard normal distribution, we reject the null hypothesis at the corresponding significance level.

5 Monte Carlo Simulations

In this section we report some Monte Carlo simulation results to examine the finite sample performance of the proposed estimator. The following data generating process is used:

$$Y_{i,t} = \theta_1(Z_{i,t}) + \theta_2(Z_{i,t})X_{i,t} + \mu_i + v_{i,t}, \tag{23}$$

where $\theta_1(z) = 1 + z + z^2$, $\theta_2(z) = \sin(z\pi)$, $Z_{i,t} = w_{i,t} + w_{i,t-1}$, $w_{i,t}$ is i.i.d. uniformly distributed in $[0, \pi/2]$, $X_{i,t} = 0.5X_{i,t-1} + \xi_{i,t}$, and $\xi_{i,t}$ is i.i.d. $N(0,1)$. In addition, $\mu_i = c_0\bar{Z}_i + u_i$ for $i = 1,2,\dots,n-1$ and $\mu_n = -\sum_{i=1}^{n-1}\mu_i$, where $c_0 = 0$, $0.5$, and $1.0$, and $u_i$ is i.i.d. $N(0,1)$. When $c_0 \neq 0$, $\mu_i$ and $Z_{i,t}$ are correlated; we use $c_0$ to control the correlation between $\mu_i$ and $\bar{Z}_i = m^{-1}\sum_{t=1}^{m}Z_{i,t}$. Moreover, $v_{i,t}$ is i.i.d. $N(0,1)$, and $w_{i,t}$, $\xi_{i,t}$, $u_i$ and $v_{i,t}$ are independent of each other.
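The data generating process (23) can be simulated in a few lines. The following sketch is an illustration, not the authors' simulation code: it reads $\theta_2(z)$ as $\sin(\pi z)$ and $w_{i,t}$ as uniform on $[0, \pi/2]$, and the treatment of the AR(1) start-up (a burn-in of 50 periods) is an assumption, since the text does not state how $X_{i,0}$ is initialized. The function name `simulate_panel` is hypothetical.

```python
import numpy as np

def simulate_panel(n, m, c0, rng, burn_in=50):
    """Draw one panel from the design in (23); returns Y, X, Z (all n x m) and mu (n,)."""
    # w_{i,t} ~ U[0, pi/2] for t = 0..m, so that Z_{i,t} = w_{i,t} + w_{i,t-1}
    w = rng.uniform(0.0, np.pi / 2.0, size=(n, m + 1))
    Z = w[:, 1:] + w[:, :-1]
    # AR(1) regressor X_{i,t} = 0.5 X_{i,t-1} + xi_{i,t}; the burn-in is an assumption
    xi = rng.normal(size=(n, burn_in + m))
    X_full = np.zeros((n, burn_in + m))
    X_full[:, 0] = xi[:, 0]
    for t in range(1, burn_in + m):
        X_full[:, t] = 0.5 * X_full[:, t - 1] + xi[:, t]
    X = X_full[:, burn_in:]
    # fixed effects: mu_i = c0 * Zbar_i + u_i for i < n; the last one enforces sum(mu) = 0
    mu = c0 * Z.mean(axis=1) + rng.normal(size=n)
    mu[-1] = -mu[:-1].sum()
    v = rng.normal(size=(n, m))
    Y = (1.0 + Z + Z ** 2) + np.sin(np.pi * Z) * X + mu[:, None] + v
    return Y, X, Z, mu
```

Setting `c0 = 0` gives a design in which the individual effects are unrelated to the covariates, while `c0 = 0.5` or `1.0` ties them to the unit average of $Z$.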

We report estimation results for both the proposed fixed-effects (FE) estimator and the random-effects (RE) estimator (see Appendix B for the asymptotic results of the RE estimator). To learn how the two estimators perform when we have a fixed-effects model and when we have a random-effects model, we use the integrated squared error as a standard measure of estimation accuracy:

$$ISE(\hat{\theta}_l) = \int\left[\hat{\theta}_l(z) - \theta_l(z)\right]^2f(z)\,dz, \tag{24}$$

which can be approximated by the average mean squared error $AMSE(\hat{\theta}_l) = (nm)^{-1}\sum_{i=1}^{n}\sum_{t=1}^{m}\left[\hat{\theta}_l(Z_{i,t}) - \theta_l(Z_{i,t})\right]^2$ for $l = 1, 2$. Finally, in Table 1 we present the average value of $AMSE(\hat{\theta}_l)$ from 1000 Monte Carlo experiments. We take $m = 3$ and the sample size $n = 50$, $100$, and $200$.

Since the bias and variance of the proposed FE estimator do not depend on the values of the fixed effects, our estimates are the same for different values of $c_0$; however, this is not true if the random-effects model is true. Therefore, the results derived from the FE estimator are reported only once in Table 1 rather than separately for each $c_0$.

It is well-known that the performance of non-/semi-parametric models depends on the choice of bandwidth. Therefore, we propose a leave-one-unit-out cross validation method to automatically find the optimal bandwidth for estimating both the FE and RE models. Specifically, when estimating $\theta(\cdot)$ at a point $Z_{i,t}$, we remove $\{(X_{i,t},Y_{i,t},Z_{i,t})\}_{t=1}^{m}$ from the data and only use the remaining $(n-1)m$ observations to calculate $\hat{\theta}_{(-i)}(Z_{i,t})$. In computing the RE estimate, the leave-one-unit-out cross validation method is just a trivial extension of the conventional leave-one-out cross validation method. However, such a simple extension fails to provide satisfactory results when we calculate an FE estimator due to the existence of unknown fixed effects. Therefore, based on the arguments made in Section 2, when calculating the FE estimator, we use the following modified leave-one-unit-out cross validation method:

$$\hat{H}_{opt} = \arg\min_{H}\ \left[Y - B\left(X,\hat{\theta}_{(-1)}(Z)\right)\right]^{T}M_D^{T}M_D\left[Y - B\left(X,\hat{\theta}_{(-1)}(Z)\right)\right], \tag{25}$$

where $M_D = I_{(nm)\times(nm)} - m^{-1}I_n\otimes\left(e_me_m^{T}\right)$ satisfies $M_DD = 0$ (this is used to remove the unknown fixed effects) and $B\left(X,\hat{\theta}_{(-1)}(Z)\right)$ stacks up $X_{i,t}^{T}\hat{\theta}_{(-i)}(Z_{i,t})$ in the increasing order of $i$ first, then of $t$. Simple calculations give

$$\begin{aligned}
&\left[Y - B\left(X,\hat{\theta}_{(-1)}(Z)\right)\right]^{T}M_D^{T}M_D\left[Y - B\left(X,\hat{\theta}_{(-1)}(Z)\right)\right]\\
&\quad= \left[B(X,\theta(Z)) - B\left(X,\hat{\theta}_{(-1)}(Z)\right) + V\right]^{T}M_D^{T}M_D\left[B(X,\theta(Z)) - B\left(X,\hat{\theta}_{(-1)}(Z)\right) + V\right]\\
&\quad= \left[B(X,\theta(Z)) - B\left(X,\hat{\theta}_{(-1)}(Z)\right)\right]^{T}M_D^{T}M_D\left[B(X,\theta(Z)) - B\left(X,\hat{\theta}_{(-1)}(Z)\right)\right]\\
&\qquad+ 2\left[B(X,\theta(Z)) - B\left(X,\hat{\theta}_{(-1)}(Z)\right)\right]^{T}M_D^{T}M_DV + V^{T}M_D^{T}M_DV,
\end{aligned} \tag{26}$$

where the last term does not depend on the bandwidth at all. If $v_{i,t}$ is independent of $\{(X_{j,s},Z_{j,s}) : j = 1,\dots,n;\ s = 1,\dots,m\}$ for all $i$ and $t$, or $(X_{i,t},Z_{i,t})$ is a strictly exogenous variable, then the second term has zero expectation because the linear transformation matrix $M_D$ removes a cross-time, not cross-sectional, average from each variable, e.g. $\tilde{Y}_{i,t} = Y_{i,t} - m^{-1}\sum_{t=1}^{m}Y_{i,t}$ for all $i$ and $t$. Therefore, the first term is the dominant term in large samples and (25) is used to find an optimal smoothing matrix minimizing a weighted mean squared error of $\left\{\hat{\theta}(Z_{i,t})\right\}_{i=1,\dots,n}^{t=1,\dots,m}$. Of course, we could use another weight matrix in (25) instead of $M_D$ as long as the weight matrix can remove the fixed effects and does not trigger a non-zero expectation of the second term in (26).
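The role of $M_D$ in the cross-validation criterion can be illustrated numerically. The short sketch below uses arbitrary sizes $n$ and $m$ and random data (all illustrative assumptions); it builds $M_D$ and the dummy matrix $D$ and applies $M_D$ to a stacked data vector.

```python
import numpy as np

n, m = 4, 3
# M_D = I_{nm} - m^{-1} I_n kron (e_m e_m^T): subtracts each unit's time average
M_D = np.eye(n * m) - np.kron(np.eye(n), np.ones((m, m))) / m
# D = [-e_{n-1}, I_{n-1}]^T kron e_m, the dummy matrix of model (6)
D = np.kron(np.vstack([-np.ones((1, n - 1)), np.eye(n - 1)]), np.ones((m, 1)))

rng = np.random.default_rng(3)
Y = rng.normal(size=(n, m))
Y_tilde = (M_D @ Y.ravel()).reshape(n, m)        # stacked by i first, then t

assert np.allclose(M_D @ D, 0.0)                 # fixed effects are removed
assert np.allclose(Y_tilde, Y - Y.mean(axis=1, keepdims=True))
```

Since $M_D$ is symmetric and idempotent, $M_D^{T}M_D = M_D$, so the criterion in (25) is simply the sum of squared within-unit-demeaned prediction errors.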

Table 1 shows that the RE estimator performs much better than the FE estimator when the true model is a random effects model, and the opposite is true when the true model is a fixed-effects model. This observation is consistent with what is known from parametric panel data regression analysis. Therefore, our simulation results indicate that a test for random effects against fixed effects will always be in demand when we analyze panel data models. In Table 2 we give Monte Carlo simulations of the proposed nonparametric test of random effects against fixed effects.

How to choose the bandwidth $h$ for the test? For the univariate case, Theorem 5 indicates that $nh \to \infty$ and $nh^{9/2} \to 0$ as $n \to \infty$; if we take $h \sim n^{-\alpha}$, Theorem 5 requires $\alpha \in \left(\tfrac{2}{9}, 1\right)$. If we balance the two conditions $nh \to \infty$ and $nh^{9/2} \to 0$ as $n \to \infty$, we have $\alpha = 2/7$. Therefore, in producing Table 2, we use $h = c\,(nm)^{-2/7}\hat{\sigma}_z$ to calculate the RE estimator with $c$ taking the values $0.8$, $1.0$, and $1.2$. The results in Table 2 are consistent with the findings in the nonparametric tests literature in that a smaller bandwidth is good for size approximation and a larger bandwidth is good for power approximation.
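The bandwidth rule $h = c\,(nm)^{-2/7}\hat{\sigma}_z$ is straightforward to compute. The sketch below assumes that $\hat{\sigma}_z$ is the sample standard deviation of the pooled $Z_{i,t}$ (with the $nm - 1$ divisor), which the text does not spell out; the function name `rule_of_thumb_bandwidth` is hypothetical.

```python
import numpy as np

def rule_of_thumb_bandwidth(Z, c=1.0):
    """h = c * (n m)^(-2/7) * sigma_z for an (n, m) array of a scalar covariate."""
    n, m = Z.shape
    sigma_z = Z.std(ddof=1)          # pooled sample standard deviation (an assumption)
    return c * (n * m) ** (-2.0 / 7.0) * sigma_z

# the three constants used for Table 2, applied to an illustrative sample
rng = np.random.default_rng(5)
Z = rng.uniform(0.0, np.pi, size=(100, 3))
bandwidths = [rule_of_thumb_bandwidth(Z, c) for c in (0.8, 1.0, 1.2)]
```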

6 Conclusion

In this paper we proposed using a local least squares method to estimate a semiparametric panel data model with fixed effects and smooth coefficients. In addition, we suggested using a bootstrap procedure to test for random effects against fixed effects. A data-driven method has been introduced to automatically find the optimal bandwidth for the proposed FE estimator. Monte Carlo simulations indicate that the proposed estimator and test statistic have good finite sample performance. Finally, the choice of bandwidths for the test is important when investigating the size and power of the test.

Acknowledgments

Sun’s research was supported by the Social Sciences and Humanities Research Council of Canada (SSHRC). Carroll’s research was supported by a grant from the National Cancer Institute (CA-57030), and by the Texas A&M Center for Environmental and Rural Health via a grant from the National Institute of Environmental Health Sciences (P30-ES09106). Li’s research was partially supported by the Private Enterprise Research Center, Texas A&M University.

Appendix A: Technical Sketch–Proof of Theorem 3

To keep our mathematical formulas short, we introduce some simplified notation first: for each $i$, $t$, and $s$, $\lambda_{it} = K_H(Z_{it},z)$, $c_H(Z_i,z)^{-1} = \sum_{t=1}^{m}\lambda_{it}$, and for any positive integers $i, j, t, s$

$$[\,\cdot\,]_{it,js} = G_{it}(z,H)G_{js}^{T}(z,H) = \begin{bmatrix}1 & G_{js1} & \cdots & G_{jsq}\\ G_{it1} & G_{it1}G_{js1} & \cdots & G_{it1}G_{jsq}\\ \vdots & \vdots & \ddots & \vdots\\ G_{itq} & G_{itq}G_{js1} & \cdots & G_{itq}G_{jsq}\end{bmatrix} = \begin{bmatrix}1 & \left(H^{-1}(Z_{js}-z)\right)^{T}\\ H^{-1}(Z_{it}-z) & H^{-1}(Z_{it}-z)\left(H^{-1}(Z_{js}-z)\right)^{T}\end{bmatrix}, \tag{1}$$

where the $(l+1)$th element of $G_{js}(z,H)$ is $G_{jsl} = (Z_{jsl}-z_l)/h_l$, $l = 1,\dots,q$.

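Simple calculations show that

$$[\,\cdot\,]_{i_1t_1,i_2t_2}[\,\cdot\,]_{j_1s_1,j_2s_2} = \left(1 + \sum_{j=1}^{q}G_{j_1s_1j}G_{i_2t_2j}\right)[\,\cdot\,]_{i_1t_1,j_2s_2}, \tag{2}$$

$$R_i(z,H)^{T}K_H(Z_i,z)e_me_m^{T}K_H(Z_j,z)R_j(z,H) = \sum_{s=1}^{m}\sum_{t=1}^{m}\lambda_{it}\lambda_{js}\,[\,\cdot\,]_{it,js}\otimes\left(X_{it}X_{js}^{T}\right). \tag{3}$$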

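In addition, we have for a finite positive integer $j$

$$|H|^{-1}\sum_{t=1}^{m}E\left[\lambda_{it}^{j}\,[\,\cdot\,]_{it,it}\,\Big|\,X_{it}\right] = \sum_{t=1}^{m}E\left(S_{t,j,1}\,|\,X_{it}\right) + O_p\left(\|H\|^2\right), \tag{4}$$

$$|H|^{-1}\sum_{t=1}^{m}E\left[\lambda_{it}^{2j}\sum_{j^{\prime}=1}^{q}G_{itj^{\prime}}^2\,[\,\cdot\,]_{it,it}\,\Big|\,X_{it}\right] = \sum_{t=1}^{m}E\left(S_{t,j,2}\,|\,X_{it}\right) + O_p\left(\|H\|^2\right), \tag{5}$$

where

$$S_{t,j,1} = \begin{bmatrix}f_t(z|X_{it})\int K^{j}(u)\,du & \dfrac{\partial f_t(z|X_{it})}{\partial z^{T}}HR_{K,j}\\[2mm] R_{K,j}H\dfrac{\partial f_t(z|X_{it})}{\partial z} & f_t(z|X_{it})R_{K,j}\end{bmatrix}, \tag{6}$$

$$S_{t,j,2} = \begin{bmatrix}f_t(z|X_{it})\int K^{2j}(u)\,u^{T}u\,du & \dfrac{\partial f_t(z|X_{it})}{\partial z^{T}}H\Gamma_{K,2j}\\[2mm] \Gamma_{K,2j}H\dfrac{\partial f_t(z|X_{it})}{\partial z} & f_t(z|X_{it})\Gamma_{K,2j}\end{bmatrix}, \tag{7}$$

where $R_{K,j} = \int K^{j}(u)\,uu^{T}du$ and $\Gamma_{K,2j} = \int K^{2j}(u)\left(u^{T}u\right)uu^{T}du$.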

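Moreover, for any finite positive integers $j_1$ and $j_2$, we have

$$|H|^{-2}\sum_{t=1}^{m}\sum_{s\neq t}E\left[\lambda_{it}^{j_1}\lambda_{is}^{j_2}\,[\,\cdot\,]_{it,is}\,\Big|\,X_{it},X_{is}\right] = \sum_{t=1}^{m}\sum_{s\neq t}E\left(T_{j_1,j_2,1}^{(t,s)}\,\Big|\,X_{it},X_{is}\right) + O_p\left(\|H\|^2\right), \tag{8}$$

$$|H|^{-2}\sum_{t=1}^{m}\sum_{s\neq t}E\left[\lambda_{it}^{j_1}\lambda_{is}^{j_2}\left(\sum_{j^{\prime}=1}^{q}G_{itj^{\prime}}G_{isj^{\prime}}\right)[\,\cdot\,]_{it,is}\,\Big|\,X_{it},X_{is}\right] = \sum_{t=1}^{m}\sum_{s\neq t}E\left(T_{j_1,j_2,2}^{(t,s)}\,\Big|\,X_{it},X_{is}\right) + O_p\left(\|H\|^2\right), \tag{9}$$

where we define $b_{j_1,j_2,i_1,i_2} = \int K^{j_1}(u)\,u_1^{2i_1}du\int K^{j_2}(u)\,u_1^{2i_2}du$,

$$T_{j_1,j_2,1}^{(t,s)} = \begin{bmatrix}f_{t,s}(z,z|X_{it},X_{is})\,b_{j_1,j_2,0,0} & \nabla_s^{T}f_{t,s}(z,z|X_{it},X_{is})\,H\,b_{j_1,j_2,0,1}\\ H\nabla_tf_{t,s}(z,z|X_{it},X_{is})\,b_{j_1,j_2,1,0} & H\nabla_{t,s}^2f_{t,s}(z,z|X_{it},X_{is})\,H\,b_{j_1,j_2,1,1}\end{bmatrix}$$

and

$$T_{j_1,j_2,2}^{(t,s)} = \begin{bmatrix}\mathrm{tr}\left(H\nabla_{t,s}^2f_{t,s}(z,z|X_{it},X_{is})H\right) & \nabla_t^{T}f_{t,s}(z,z|X_{it},X_{is})\,H\\ H\nabla_sf_{t,s}(z,z|X_{it},X_{is}) & f_{t,s}(z,z|X_{it},X_{is})\,I_{q\times q}\end{bmatrix}b_{j_1,j_2,1,1},$$

with $\nabla_sf_{t,s}(z,z|X_{it},X_{is}) = \partial f_{t,s}(z,z|X_{it},X_{is})/\partial z_s$ and $\nabla_{t,s}^2f_{t,s}(z,z|X_{it},X_{is}) = \partial^2f_{t,s}(z,z|X_{it},X_{is})/\left(\partial z_t\,\partial z_s^{T}\right)$.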

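The conditional bias and variance of $\mathrm{vec}(\hat{\beta}(z))$ are given as follows:

$$\mathrm{Bias}\left[\mathrm{vec}\left(\hat{\beta}(z)\right)\Big|\{X_{it},Z_{it}\}\right] = \frac{1}{2}\left[R(z,H)^{T}S_H(z)R(z,H)\right]^{-1}R(z,H)^{T}S_H(z)\Phi(z,H)$$

and

$$\mathrm{Var}\left[\mathrm{vec}\left(\hat{\beta}(z)\right)\Big|\{X_{it},Z_{it}\}\right] = \sigma_v^2\left[R(z,H)^{T}S_H(z)R(z,H)\right]^{-1}\left[R(z,H)^{T}S_H^2(z)R(z,H)\right]\left[R(z,H)^{T}S_H(z)R(z,H)\right]^{-1}.$$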

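Lemma 6 Under Assumptions 1-3, we have

$$\left[n^{-1}|H|^{-1}R(z,H)^{T}S_H(z)R(z,H)\right]^{-1} = \begin{bmatrix}\Lambda^{-1} & O_p(\|H\|)\\ O_p(\|H\|) & \left(\int K(u)\,uu^{T}du\right)^{-1}\otimes\Lambda^{-1}\end{bmatrix},$$

where $\Lambda = \sum_{t=1}^{m}\left(1 - \sqrt{\dfrac{f_t(z)}{f(z)}}\right)E\left[f_t(z|X_{1t})X_{1t}X_{1t}^{T}\right]$.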

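Proof: First, simple calculation gives

$$\begin{aligned}
A_n &= R(z,H)^{T}S_H(z)R(z,H) = R(z,H)^{T}W_H(z)M_H(z)R(z,H)\\
&= \sum_{i=1}^{n}R_i(z,H)^{T}K_H(Z_i,z)R_i(z,H) - \sum_{j=1}^{n}\sum_{i=1}^{n}q_{ij}R_i(z,H)^{T}K_H(Z_i,z)e_me_m^{T}K_H(Z_j,z)R_j(z,H)\\
&= \sum_{i=1}^{n}\sum_{t=1}^{m}\lambda_{it}[\,\cdot\,]_{it,it}\otimes\left(X_{it}X_{it}^{T}\right) - \sum_{i=1}^{n}q_{ii}\sum_{t=1}^{m}\lambda_{it}^2[\,\cdot\,]_{it,it}\otimes\left(X_{it}X_{it}^{T}\right)\\
&\quad- \sum_{i=1}^{n}q_{ii}\sum_{s=1}^{m}\sum_{t\neq s}\lambda_{it}\lambda_{is}[\,\cdot\,]_{it,is}\otimes\left(X_{it}X_{is}^{T}\right) - \sum_{j=1}^{n}\sum_{i\neq j}q_{ij}\sum_{s=1}^{m}\sum_{t=1}^{m}\lambda_{it}\lambda_{js}[\,\cdot\,]_{it,js}\otimes\left(X_{it}X_{js}^{T}\right)\\
&= A_{n1} - A_{n2} - A_{n3} - A_{n4},
\end{aligned}$$

where $M_H(z) = I_{(nm)\times(nm)} - \left[Q\otimes\left(e_me_m^{T}\right)\right]W_H(z)$, and the typical elements of $Q$ are $q_{ii} = c_H(Z_i,z) - c_H(Z_i,z)^2/\sum_{i=1}^{n}c_H(Z_i,z)$ and $q_{ij} = -c_H(Z_i,z)c_H(Z_j,z)/\sum_{i=1}^{n}c_H(Z_i,z)$ for $i \neq j$. Simple calculations give

$$\sum_{t=1}^{m}K_H(Z_{it},z) = |H|f(z) + |H|^{1/2}\left(f(z)\int K^2(u)\,du\right)^{1/2} + O_p\left(|H|^{1/2}\|H\|\right),$$

$$K_H(Z_{it},z) = |H|f_t(z) + |H|^{1/2}\left(f_t(z)\int K^2(u)\,du\right)^{1/2} + O_p\left(|H|^{1/2}\|H\|\right),$$

and it follows that $c_H(Z_i,z) = |H|^{-1/2}\left(f(z)\int K^2(u)\,du\right)^{-1/2} + o_p\left(|H|^{-1/2}\right)$ is the leading term of $q_{ii}$, and that $q_{ij} = O_p\left(n^{-1}|H|^{-1/2}\right)$. As a result, it is easy to show that $A_{n4}$ is dominated by the other three terms.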

Applying (4), (5), (8), and (9) to An1 ; we have n

Op kHk

2

+ Op n

1

2

jHj

1

2

1

jHj

1

Pm

An1

t=1

T

Xit Xit

+

E St;1;1

if kHk ! 0 and n jHj ! 1 as n ! 1:

To cope with the di¢ culty caused by the random denominator cH (Zi ; z)

when taking expectations and variations of An2 and An3 ; we apply the technique

used for kernel curve estimation. Speci…cally, de…ne !

^ it (z) =

1=2

It then follows that !

^ it (z) = (f (z) =ft (z))

20

1

it

cH (Zi ; z)

1

:

1=2

+op (1) : De…ne ! t (z) = (f (z) =ft (z))

and g^it (z) =

it

T

Xit Xit

: Then

[ ]it;it

n X

n X

m

m

X

X

^ it (z)

g^it (z)

g^it (z) !

=

+ 1

!

^ (z)

!

^ (z) ! t (z)

i=1 t=1 it

i=1 t=1 it

An2

Pn

Since !

^ it (z) = ! t (z) + op (1) ; it can be shown that

!

^ it (z)

! t (z)

i=1

Pm

:

^it

t=1 g

(z) =! t (z)

is the leading term of An2 : Again applying (4), (5), (8), and (9), we have

Pm

2

1 Pn Pm

1

T

+Op kHk +

n 1 jHj

^it (z) =! t (z)

E St;1;1

Xit Xit

i=1

t=1 g

t=1 ! t (z)

Op n

1

2

jHj

1

2

:

Similarly, for $A_{n3}$, we define ω

^ i (z) = jHj

R 2

1=2

f (z) K (u) du

, and we can show that n

Op n

1=2

jHj

1=2

1=2

1

cH (Zi ; z)

jHj

1

An3

1

and ! i (z) =

1=2

Op jHj

+

if $\|H\| \to 0$ and $n|H| \to \infty$ as $n \to \infty$. Taking all these
results together completes the proof of this lemma, where the inverse
matrix is derived using Theorem A.4.4 in Poirier (1995, p. 627).

R

"

1

Lemma 7 Under Assumptions 1-3, we have n

where

H

h

2

1 (z)

;

(z) = tr H @@z@z

T H

2

jHj

1

k (z)

; tr H @@z@z

T H

Cn

iT

K (u) uuT du

Op kHk

H

(z)

3

#

,

:

Proof: Simple calculations give

Cn

T

= R (z; H) SH (z) (z; H)

n X

m

X

T

=

Xit ) Xit

rH Z~it ; z

it (Git

=

i=1 t=1

n X

m

X

i=1 t=1

n

X

qii

i=1

it

m X

X

is it

qij

j=1 i6=j

where

T

Xit ) Xit

rH Z~it ; z

s=1 t6=s

m X

m

X

n X

X

= Cn1

(Git

Cn2

(Git

js it

n X

n

X

qij

m X

m

X

s=1 t=1

j=1 i=1

n

m

X X

2

qii

it (Git

t=1

i=1

js it

(Git

T

Xit ) Xit

rH Z~it ; z

T

Xit ) Xis

rH Z~is ; z

(Git

T

Xit ) Xjs

rH Z~js ; z

s=1 t=1

Cn3

Cn4 ;

(z; H) is defined in Section 3. Similar to the proof of Lemma 6, it is
easy to show that $C_{n3}$ and $C_{n4}$ are dominated by the other two terms of $C_n$.


T

Xit ) Xjs

rH Z~js ; z

For $l = 1, \ldots, k$ we have

jHj

1

E[

it rH;l

(Zit ; z) jXit ] = ft (zjXit ) tr

jHj

1

E

it rH;l

(Zit ; z) H

1

1

and E n

jHj

1

n

Cn1

(Zit

R

1

ilarly we can show that V ar n

K (u) uuT du H

3

z) jXit = Op kHk

K (u) uuT du

jHj

Z

1

H

(z)

Cn1 = O n

1

T

jHj

4

+ Op kHk

;

3

; O kHk

1

@ 2 l (z)

H

@z@z T

4

kHk

oT

if E

. SimT

T

Xit Xis

Xit Xis

<

M < 1 for all t and s:

For Cn2 ; we use the same method to cope with the random denominator

problem as in the proof of Lemma 6. Then we can show that the dominant

Pn Pm q (z)

T

term of Cn2 is i=1 t=1 fft(z)

Xit ) Xit

rH (Zit ; z) :

it (Git

This completes the proof of this lemma.
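The constants $\int K^2(u)\,du$ and $\int K(u) u u^T du$ that recur in these lemmas depend only on the kernel. As a rough numerical illustration, and assuming a univariate Epanechnikov or Gaussian kernel (the appendix does not commit to a particular kernel at this point, and the function names below are hypothetical), they can be approximated by simple quadrature:

```python
import numpy as np

def trapezoid(y, x):
    """Composite trapezoid rule, written out to avoid version-specific NumPy names."""
    return float(np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2.0)

def epanechnikov(u):
    # K(u) = 0.75 (1 - u^2) on [-1, 1], zero elsewhere
    return np.where(np.abs(u) <= 1.0, 0.75 * (1.0 - u**2), 0.0)

def gaussian(u):
    # Standard normal density
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)

def kernel_constants(kernel, lo=-8.0, hi=8.0, num=200001):
    """Approximate int K, int K^2 and int u^2 K for a univariate kernel."""
    u = np.linspace(lo, hi, num)
    k = kernel(u)
    return {
        "mass": trapezoid(k, u),                  # should be close to 1
        "roughness": trapezoid(k**2, u),          # int K^2(u) du
        "second_moment": trapezoid(k * u**2, u),  # int K(u) u^2 du
    }

epa = kernel_constants(epanechnikov)
gau = kernel_constants(gaussian)
print(epa)
print(gau)
```

For a product kernel in several dimensions, the matrix $\int K(u) u u^T du$ is the univariate second moment times the identity, so the scalar values above are all that is needed in that case.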

Lemma 8 Under Assumptions 1-3, we have

n

1

jHj

1

Bn

2

R

K 2 (u) du

Op (kHk)

ORp (kHk)

K 2 (u) uuT du

:

Proof: Simple calculations give

Bn

T

T

T

2

= R (z; H) SH

(z) R (z; H) = R (z; H) WH (z) MH (z) MH (z) WH (z) R (z; H)

n

n X

n

X

X

T

T

2

2

=

Ri (z; H) KH

(Zi ; z) Ri (z; H)

qji Rj (z; H) KH

(Zj ; z) em eTm KH (Zi ; z) Ri (z; H)

i=1

j=1 i=1

n X

n

X

T

2

qij Ri (z; H) KH (Zi ; z) em eTm KH

(Zj ; z) Rj (z; H)

j=1 i=1

+

n X

n X

n

X

T

2

qij qji0 Ri (z; H) KH (Zi ; z) em eTm KH

(Zj ; z) em eTm KH (Zi0 ; z) Ri0 (z; H)

j=1 i=1 i0 =1

= Bn1

Bn2

T

Bn2

+ Bn3 :

1

1

Similar to the proof of Lemma 6, we can show that n 1 jHj Bn 2n 1 jHj (Bn1

Pn Pm

T

and that the leading term of Bn1 is i=1 t=1 2it [ ]it;it

Xit Xit

and the


Bn2 )

leading term of Bn2 is

Pm

1

n 1 jHj Bn

t=1 1

Pn

Pm

i=1 qii

q

3

it

t=1

ft (z)

f (z)

T

Xit Xit

: Then we obtain

[ ]it;it

E St;2;1

2

T

Xit Xit

+Op kHk

+Op n

1=2

jHj

1=2

The three lemmas above are enough to give the result of Theorem 3. Moreover,
applying Lyapunov's CLT gives the result of Theorem 4. Since the
proof follows a rather standard procedure, we omit the details for brevity.

Appendix B: Technical Sketch–Random Effects Estimator

The RE estimator, $\hat\theta_{RE}(\cdot)$, is the solution to the following optimization problem:

T

min [Y

R (z; H) vec ( (z))] WH (z) [Y

(z)

R (z; H) vec ( (z))] ;

that is, we have

i 1

T

T

R (z; H) WH (z) R (z; H)

R (z; H) WH (z) Y

h

i 1

T

T

R (z; H) WH (z) D

= vec ( (z)) + R (z; H) WH (z) R (z; H)

h

i 1

T

T

~n

R (z; H) WH (z) A~n =2 + B

+ R (z; H) WH (z) R (z; H)

vec ^ RE (z)

h

=

T

where A~n = R (z; H) WH (z)

~n = R (z; H)T WH (z) V .

(z; H) and B

Its

asymptotic results are as follows.

Lemma 9 Under Assumptions 1-3,

2+

E jvit j

2+

< 1; E j i j

all i and t and for some

where

(z);RE

=

< M < 1 and E kXit k

Pn

i=2

2+

< M < 1 for

> 0, we have under H0

p

n jHj bRE (z)

2

n

p

2

n jHj kHk = o (1) as n ! 1, and

2

i

+

2

v

d

(z) ! N 0;

1

R


(z);RE

K 2 (u) du and

=

;

(10)

Pm

t=1

T

E ft (zjX1;t ) X1;t X1;t

:

:

Under H1 , we have

Bias bRE (z)

Z

=

K (u) uuT du

n

H

(z)

m

1 XX

2

E [ft (zjXi;t ; i ) i Xi;t ] + o kHk

2n i=1 t=1

Z

1

2

1

1

jHj

K 2 (u) du:

vn

+

h

i

V ar ^RE (z) j fXit ; Zit g

=

Proof of Lemma 9: First, we have the following decomposition

$$\sqrt{n|H|}\left[\hat\theta_{RE}(z) - \theta(z)\right] = \sqrt{n|H|}\left[\hat\theta_{RE}(z) - E\,\hat\theta_{RE}(z)\right] + \sqrt{n|H|}\left[E\,\hat\theta_{RE}(z) - \theta(z)\right],$$

where we will show that the first term converges to a normal distribution with mean zero and that the second term contributes to the asymptotic bias.

Where no confusion arises, we drop the subscript 'RE'.

a. Under $H_0$, the conditional bias and variance of $\hat\theta(z)$ are as follows:

h

i

h

i 1

T

T

Bias0 ^ (z) j fXit ; Zit g = Sk R (z; H) WH (z) R (z; H)

R (z; H) WH (z) (z; H) =2

h

i

h

i 1 h

T

T

and V ar ^ (z) j fXit ; Zit g = Sk R (z; H) WH (z) R (z; H)

R (z; H) WH (z) V ar(U U T )WH (z) R (z; H)

h

i 1

T

R (z; H) WH (z) R (z; H)

SkT : It is simple to show that V ar(U U T ) = D DT +

; 2n is the covariance matrix of ( 2 ;

; n ).

h

i

h

i

b. Under H1 , we notice that Bias1 ^ (z) j fXit ; Zit g is the sum of Bias0 ^ (z) j fXit ; Zit g

h

i 1

T

T

plus an additional term Sk R (z; H) WH (z) R (z; H)

R (z; H) WH (z) D .

2

v I(nm) (nm) ,

where

= diag

2

2

;

2+

It is easy to show that under Assumptions 1-3, and E j i j

E kXit k

2+

n

< M < 1 for all i and t and for some

1

1

< M < 1 and

>0

T

jHj Sk R (z; H) WH (z) D

n X

m

X

2

= n 1

E [ft (zjXit ; i ) i Xit ] + Op kHk + Op

i=1 t=1

p

1

n jHj

!

(; 11)

which is op (1) under H0 and is a non-zero constant plus a term of op (1) under

T

H1 : Based on the facts that R (z; H) WH (z) R (z; H) is An1 in Lemma 6 and


T

R (z; H) WH (z)

(z; H) is Cn1 in Lemma 7 we have

Z

h

i

2

Bias0 ^ (z) j fXit ; Zit g =

K (u) uuT du

;

H (z) + o kHk

which is the same as the bias term of the FE estimator. Our results indicate
that under $H_1$ the bias of the RE estimator will not vanish as $n \to \infty$, and this
leads to the inconsistency of the RE estimator under $H_1$.
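For orientation, the RE estimator discussed here is a pooled, kernel-weighted least squares fit that ignores the individual effects, which is why any correlation between the effects and the regressors feeds directly into its bias. The following is only a minimal sketch under stated assumptions: a scalar smoothing variable, a Gaussian kernel, a scalar bandwidth `h`, and regressor rows stacked as $[X_{it},\ X_{it}(Z_{it}-z)/h]$. The function name and the column ordering are illustrative choices, not notation taken from the paper.

```python
import numpy as np

def gaussian_kernel(u):
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)

def pooled_local_linear(y, X, Z, z0, h):
    """Pooled local linear smooth-coefficient fit at the point z0.

    y : (N,) stacked responses, X : (N, k) regressors,
    Z : (N,) scalar smoothing variable, h : bandwidth.
    Individual effects are ignored (random-effects-type estimator).
    Returns (theta_hat, scaled_slope_hat), each of length k, where the
    second block estimates h * d theta / dz at z0.
    """
    d = (Z - z0) / h
    w = gaussian_kernel(d)
    R = np.hstack([X, X * d[:, None]])   # rows [X_it, X_it * (Z_it - z0) / h]
    RtW = R.T * w                        # R^T W without forming diag(w)
    coef = np.linalg.solve(RtW @ R, RtW @ y)
    k = X.shape[1]
    return coef[:k], coef[k:]

# Noise-free check: coefficient functions that are linear in z are
# reproduced exactly by a local linear fit.
rng = np.random.default_rng(0)
N = 400
Z = rng.uniform(0.0, 1.0, N)
X = np.column_stack([np.ones(N), rng.normal(size=N)])
theta = np.column_stack([1.0 + 2.0 * Z, -0.5 * Z])
y = np.sum(X * theta, axis=1)

theta_hat, slope_hat = pooled_local_linear(y, X, Z, z0=0.3, h=0.2)
print(theta_hat, slope_hat)
```

Adding a unit-specific term that is correlated with the columns of `X` to `y` would shift `theta_hat` away from the true value, and that shift does not shrink as more units are added; this is the non-vanishing bias under $H_1$ described above.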

Pm

T

;

As for the conditional variance, if we denote = t=1 E ft (zjX1t ) X1t X1t

we can easily show that under H0

h

i

V ar ^ (z) j fXit ; Zit g = n

1

jHj

1

2n

1

n

X

2

ui

2

v

+

i=2

!

1

Z

K 2 (u) du;

(12)

and under H1

h

i

V ar ^ (z) j fXit ; Zit g =

2

1

vn

jHj

T

1

2

1

Z

K 2 (u) du;

(13)

where we have recognized that R (z; H) WH (z) R (z; H) is Bn1 in Lemma 8.

A

Appendix C: Technical Sketch–Proof of Theorem 5

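Define $\Delta_i = (\Delta_{i,1}, \ldots, \Delta_{i,m})^T$ with $\Delta_{i,t} = X_{i,t}^T\left[\theta(Z_{i,t}) - \hat\theta_{RE}(Z_{i,t})\right]$. Since
$M_D D = 0$, we can decompose the proposed statistic into four terms

$$\hat T_n = \frac{1}{n^2|H|}\sum_{i=1}^{n}\sum_{j\neq i}\hat U_i^T Q_m A_{i,j} Q_m \hat U_j$$

$$= \frac{1}{n^2|H|}\sum_{i=1}^{n}\sum_{j\neq i}\Delta_i^T Q_m A_{i,j} Q_m \Delta_j + \frac{2}{n^2|H|}\sum_{i=1}^{n}\sum_{j\neq i}\Delta_i^T Q_m A_{i,j} Q_m V_j + \frac{1}{n^2|H|}\sum_{i=1}^{n}\sum_{j\neq i}V_i^T Q_m A_{i,j} Q_m V_j$$

$$= T_{n1} + 2T_{n2} + T_{n3},$$

where $V_i = (v_{i,1}, \ldots, v_{i,m})^T$ is the $m \times 1$ error vector. Since $\hat\theta_{RE}(Z_{i,t})$ is the
leave-one-unit-out estimator for a pair $(i, j)$, it is easy to see that $E(T_{n2}) = 0$.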


The proofs follow standard procedures in the literature on nonparametric tests. We therefore give only a very brief proof below.

Firstly, applying Hall’s (1984) CLT, we can show that under both H0 and

H1

n

by de…ning Hn

i;

j

p

d

jHjTn3 ! N 0;

= ViT Qm Ai;j Qm Vj with

2

0

i

(14)

$= (X_i, Z_i, V_i)$, which is a symmetric, centred and degenerate variable. We are able to show that

E G2n (

1;

2)

+n

fE [Hn2 ((

1

E Hn4 ((

1;

2 ))]g

1;

2 ))

2

3

=

O jHj

+O n

1

jHj

2

O jHj

if jHj ! 0 and n jHj ! 1 as n ! 1; where Gn (

2

0

1;

2)

=E

1 2

!0

[Hn (( 1 ; i )) Hn (( 2 ; i ))] :

h

i

Pm Pm

2

2

2

T

jHj

E

K

(Z

;

Z

)

X

X

:

1s

2t

2t

v

1s

H

t=1

s=1

i

= 2 jHj E Hn2 ( 1 ; 2 )

2 1 m

p

2

1=2

Secondly, we can show that n jHjTn2 = Op kHk + Op n 1=2 jHj

p

under H0 and n jHjTnj = Op (1) under H1 : Moreover, we have, under H0 ,

p

p

p

p

4

n jHjTn1 = Op n jHj kHk ; under H1 , n jHjTn1 = Op n jHj :

In addition, jHj

Finally, to estimate

2

0

consistently under both H0 and H1 , we replace the

unknown Vi and Vj in Tn3 by the estimated residual vectors from FE estimator. Simple calculations show that the typical element of V^i Qm is e

v~it =

P

m

T^

T^

yit Xit

yi m 1 t=1 Xit

vi = 4it (vit vi ),

F E (Zit ) vit

F E (Zit )

P

m

T

T

where 4it = Xit

(Zit ) ^F E (Zit )

m 1 t=1 Xit

(Zit ) ^F E (Zit ) =

Pm

T

(Zil ) ^F E (Zil ) with qtt = 1 1=m and qlt = 1=m for l 6= m:

l=1 qlt Xil

The leave-two-unit-out FE estimator does not use the observations from the ith

h

2

Pm Pm

2

T

and jth units, and this leads to E V^iT Qm Ai;j Qm V^j

t=1

s=1 E KH (Zis ; Zjt ) Xis Xjt

Pm

where v~it = vit vi and vi = m 1 t=1 vit :

References

Hall, P. (1984). Central limit theorem for integrated square error of multivariate
nonparametric density estimators. Journal of Multivariate Analysis, 14, 1-16.


2

42it 42js + 42i

Härdle, W. and Mammen, E. (1991). Bootstrap simultaneous error bars for

nonparametric regression. Annals of Statistics, 19, 778-796.

Henderson, D. J. and Ullah, A. (2005). A Nonparametric Random Effects Estimator. Economics Letters, 88, 403-407.

Ke, C. and Wang, Y. (2001). Semiparametric Nonlinear Mixed-Effects Models
and their Applications. Journal of the American Statistical Association,
96, 1272-1281.

Li, Q., Huang, C. J., Li, D. and Fu, T. (2002). Semiparametric Smooth
Coefficient Models. Journal of Business & Economic Statistics, 20, 412-422.

Li, Q. and Stengos, T. (1996). Semiparametric Estimation of Partially Linear

Panel Data Models. Journal of Econometrics, 71, 389-397.

Li, Q. and Wang, S. (1998). A Simple Consistent Bootstrap Test for a Parametric Regression Function. Journal of Econometrics, 87, 145-165.

Lin, D. Y. and Ying, Z. (2001). Semiparametric and Nonparametric Regression

Analysis of Longitudinal Data (with Discussion). Journal of the American

Statistical Association, 96, 103-126.

Lin, X., and Carroll, R. J. (2000). Nonparametric Function Estimation for Clustered Data when the Predictor is Measured without/with Error. Journal

of the American Statistical Association, 95, 520-534.

Lin, X. and Carroll, R. J. (2001). Semiparametric Regression for Clustered

Data using Generalized Estimation Equations. Journal of the American

Statistical Association, 96, 1045-1056.

Lin, X. and Carroll, R. J. (2006). Semiparametric Estimation in General Repeated Measures Problems. Journal of the Royal Statistical Society, Series
B, 68, 68-88.

Lin, X., Wang, N., Welsh, A. H. and Carroll, R. J. (2004). Equivalent Kernels of

Smoothing Splines in Nonparametric Regression for Longitudinal/Clustered

Data. Biometrika, 91, 177-194.

Poirier, D.J. (1995). Intermediate Statistics and Econometrics: a Comparative

Approach. The MIT Press.

Ruckstuhl, A. F., Welsh, A. H. and Carroll, R. J. (2000). Nonparametric Function Estimation of the Relationship Between Two Repeatedly Measured

Variables. Statistica Sinica, 10, 51-71.

Su, L. and Ullah, A. (2006). Profile likelihood estimation of partially linear
panel data models with fixed effects. Economics Letters, 92, 75-81.

Ullah, A. and Roy, N. (1998). Nonparametric and Semiparametric Econometrics

of Panel Data, in Handbook of Applied Economics Statistics, A. Ullah and

D. E. A. Giles, eds., Marcel Dekker, New York, 579-604.

Wang, N. (2003). Marginal Nonparametric Kernel Regression Accounting for

Within-Subject Correlation. Biometrika, 90, 43-52.

Wu, H. and Zhang, J. Y. (2002). Local Polynomial Mixed-Effects Models for
Longitudinal Data. Journal of the American Statistical Association, 97,
883-897.

Zheng, J. (1996). A Consistent Test of Functional Form via Nonparametric

Estimation Techniques. Journal of Econometrics 75, 263-289.


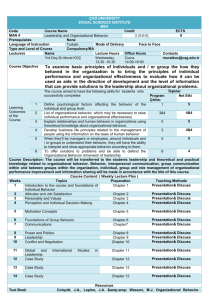

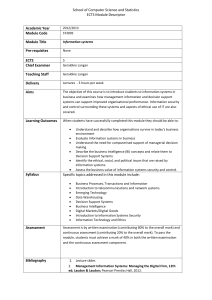

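Table 1: Average mean squared errors (AMSE) of the fixed and random effects
estimators when the data generation process is a random effects model and when
it is a fixed effects model.

| Data Process | Random Effects Estimator, n = 50 | Random Effects Estimator, n = 100 | Random Effects Estimator, n = 200 | Fixed Effects Estimator, n = 50 | Fixed Effects Estimator, n = 100 | Fixed Effects Estimator, n = 200 |
|---|---|---|---|---|---|---|
| Estimating $\theta_1(\cdot)$: | | | | | | |
| $c_0 = 0$ | .0951 | .0533 | .0277 | | | |
| $c_0 = 0.5$ | .6552 | .5830 | .5544 | .1381 | .1163 | .1021 |
| $c_0 = 1.0$ | 2.2010 | 2.1239 | 2.2310 | | | |
| Estimating $\theta_2(\cdot)$: | | | | | | |
| $c_0 = 0$ | .1562 | .0753 | .0409 | | | |
| $c_0 = 0.5$ | .8629 | .7511 | .7200 | .1984 | .1379 | .0967 |
| $c_0 = 1.0$ | 2.8707 | 2.4302 | 2.5538 | | | |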

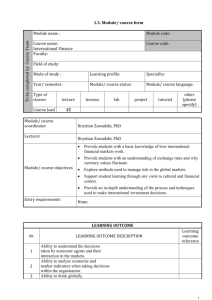

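Table 2: Percentage Rejection Rate from 1000 Monte Carlo Simulations with c = 1.0

| $c_0$ | n = 50, 1% | n = 50, 5% | n = 50, 10% | n = 100, 1% | n = 100, 5% | n = 100, 10% |
|---|---|---|---|---|---|---|
| 0 | .011 | .023 | .041 | .025 | .04 | .062 |
| 0.5 | .682 | .780 | .819 | .935 | .943 | .951 |
| 1.0 | .908 | .913 | .921 | .962 | .966 | .967 |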