Software Simulation of Depth of ... Video from Small Aperture Cameras

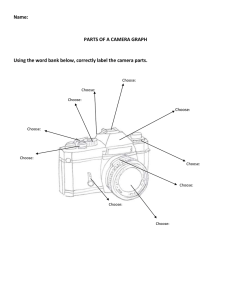
