Narrated Guided Tour Following and Interpretation by an Autonomous Wheelchair

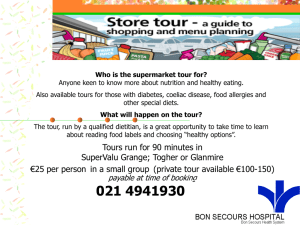
