Document 10802228

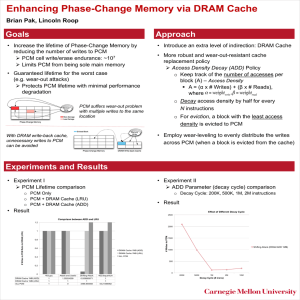

advertisement

15-740/18-740 Computer Architecture Lecture 5: Project Example Jus%n Meza Yoongu Kim Fall 2011, 9/21/2011 Reminder: Project Proposals • Project proposals due NOON on Monday 9/26 • Two to three pages consisAng of – Problem – Novelty – Idea – Hypothesis – Methodology – Plan • All the details are in the project handout 2 Agenda for Today’s Class • • • • Brief background on hybrid main memories Project example from Fall 2010 Project pitches and feedback Q & A 3 Main Memory in Today’s Systems CPU DRAM HDD/SSD 4 Main Memory in Today’s Systems CPU Main memory DRAM HDD/SSD 5 DRAM • Pros – Low latency – Low cost • Cons – Low capacity – High power • Some new and important applicaAons require HUGE capacity (in the terabytes) 6 Main Memory in Today’s Systems CPU Main memory DRAM HDD/SSD 7 Hybrid Memory (Future Systems) Hybrid main memory DRAM (cache) CPU New memories (high capacity) HDD/SSD 8 Row Buffer Locality-Aware Hybrid Memory Caching Policies Jus%n Meza HanBin Yoon Rachata Ausavarungnirun Rachael Harding Onur Mutlu Motivation • Two conflicAng trends: 1. ITRS predicts the end of DRAM scalability 2. Workloads conAnue to demand more memory • Want future memories to have – Large capacity – High performance – Energy efficient • Need scalable DRAM alternaAves 10 Motivation • Emerging memories can offer more scalability • Phase change memory (PCM) – Projected to be 3−12× denser than DRAM • However, cannot simply replace DRAM – Longer access latencies (4−12× DRAM) – Higher access energies (2−40× DRAM) • Use DRAM as a cache to large PCM memory [Mohan, HPTS ’09; Lee+, ISCA ’09] 11 Phase Change Memory (PCM) • Data stored in form of resistance – High current melts cell material – Rate of cooling determines stored resistance – Low current used to read cell contents 12 Projected PCM Characteristics (~2013) 32 nm Cell size Read latency Write latency Read energy Write energy Durability DRAM 6 F2 60 ns 60 ns 1.2 pJ/bit 0.39 pJ/bit N/A PCM 0.5–2 F2 300–800 ns 1400 ns 2.5 pJ/bit 16.8 pJ/bit 106–108 writes Rela%ve to DRAM 3–12× denser 6–13× slower 24× slower 2× more energy 40× more energy Limited life%me [Mohan, HPTS ’09; Lee+, ISCA ’09] 13 Row Buffers and Locality • Memory array organized in columns and rows • Row buffers store contents of accessed row • Row buffers are important for mem. devices – Device slower than bus: need to buffer data – Fast accesses for data with spaAal locality – DRAM: DestrucAve reads – PCM: Writes are costly: want to coalesce 14 Row Buffers and Locality A D D R ROW DATA Row buffer h mit! iss! LOAD X LOAD X+1 15 Key Idea • Since DRAM and PCM both use row buffers, – Row buffer hit latency same in DRAM and PCM – Row buffer miss latency small in DRAM – Row buffer miss latency large in PCM • Cache data in DRAM which – Frequently row buffer misses – Is reused many Ames • à because miss penalty is smaller in DRAM 16 Hybrid Memory Architecture CPU Memory Controller DRAM Cache (Low density) PCM (High density) Memory channel 17 Hybrid Memory Architecture CPU DRAM Ctlr DRAM Cache (Low density) PCM Ctlr PCM (High density) 18 Hybrid Memory Architecture CPU Tag store: 2 KB rows DRAM Cache (Low density) Memory Controller PCM (High density) 19 Hybrid Memory Architecture LOAD X Tag store: X à DRAM CPU Memory Controller DRAM Cache (Low density) PCM (High density) 20 Hybrid Memory Architecture LOAD Y Tag store: Y à PCM CPU Memory Controller DRAM Cache (Low density) PCM (High density) How does data get migrated to DRAM? Caching Policy 21 Methodology • Simulated our system configuraAons – Collected program traces using a tool called Pin – Fed instrucAon trace informaAon to a Aming simulator modeling an OoO core and DDR3 memory – Migrated data at the row (2 KB) granularity • Collected memory traces from a standard computer architecture benchmark suite – SPEC CPU2006 • Used an in-­‐house simulator writen in C# 22 Conventional Caching • Data is migrated when first accessed • Simple, used for many caches 23 Conventional Caching • Data is migrated when first accessed • Simple, used for many caches LD Rw1 w2 Tag store: Z à PCM CPU Memory Controller DRAM Cc ache PCM perform How does onven%onal c aching Row Data (Low density) (High density) in a hybrid m ain m emory? Bus contenAon! 24 Conventional Caching IPC Normalized to All DRAM No Caching (All PCM) ConvenAonal Caching 1 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 25 Conventional Caching IPC Normalized to All DRAM No Caching (All PCM) 1 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 Beneficial for some benchmarks ConvenAonal Caching 26 Conventional Caching IPC Normalized to All DRAM No Caching (All PCM) Performance degrades due to ConvenAonal Caching bus contenAon 1 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 27 Conventional Caching IPC Normalized to All DRAM No Caching (All PCM) Many row buffer hits: don’t need to ConvenAonal migrate Caching data 1 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 28 Conventional Caching IPC Normalized to All DRAM No Caching (All PCM) 1 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 Want to idenAfy data which misses in row ConvenAonal Caching buffer and is reused 29 Problems with Conventional Caching • Performs useless migraAons – Migrates data which are not reused – Migrates data which hit in the row buffer • Causes bus contenAon and DRAM polluAon – Want to cache rows which are reused – Want to cache rows which miss in row buffer 30 Problems with Conventional Caching • Performs useless migraAons – Migrates data which are not reused – Migrates data which hit in the row buffer • Causes bus contenAon and DRAM polluAon – Want to cache rows which are reused – Want to cache rows which miss in row buffer 31 A Reuse-Aware Policy • Keep track of the number of accesses to a row • Cache row in DRAM when accesses ≥ A – Reset accesses every Q cycles • Similar to CHOP [Jiang+, HPCA ’10] – Cached “hot” (reused) pages in on-­‐chip DRAM – To reduce off-­‐chip bandwidth requirements • We call this policy A-­‐COUNT 32 A Reuse-Aware Policy IPC Normalized to All DRAM No Caching (All PCM) ConvenAonal Caching A-­‐COUNT.4 1 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 33 A Reuse-Aware Policy IPC Normalized to All DRAM No Caching (All PCM) Performs fewer migraAons: reduces ConvenAonal Caching channel A-­‐COUNT.4 contenAon 1 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 34 IPC Normalized to All DRAM A Reuse-Aware Policy Too few migraAons: too many aConvenAonal ccesses go Caching No Caching (All PCM) to PCM 1 A-­‐COUNT.4 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 35 A Reuse-Aware Policy IPC Normalized to All DRAM No Caching (All PCM) Rows with many hits sAll needlessly ConvenAonal Caching migrated A-­‐COUNT.4 1 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 36 Problems with Reuse-Aware Policy • AgnosAc of DRAM/PCM access latencies – May keep data which row buffer misses in PCM – Missed opportunity: could save cycles in DRAM 37 rt No. 2011-005 (September 5, 2011) Problems with Reuse-Aware Policy • AgnosAc of DRAM/PCM access latencies Data with frequent row buffer hits Time PCM Miss Hit Hit Hit Hit Hit Hit Hit Hit Hit Hit DRAM Miss Hit Hit Hit Hit Hit Hit Hit Hit Hit Hit Saved cycles if placed in DRAM Data with frequent row buffer misses Time PCM Miss Hit Miss Hit Miss DRAM Miss Hit Miss Hit Miss Saved cycles if placed in DRAM Figure 2: Data placement affects service time. 38 Row Buffer Locality-Aware Policy • Cache rows which benefit from being in DRAM – I.e., those with frequent row buffer misses • Keep track of number of misses to a row • Cache row in DRAM when misses ≥ M – Reset misses every Q cycles • We call this policy M-­‐COUNT 39 Row Buffer Locality-Aware Policy IPC Normalized to All DRAM No Caching (All PCM) ConvenAonal Caching A-­‐COUNT.4 M-­‐COUNT.2 1 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 40 Row Buffer Locality-Aware Policy Recognizes rows with IPC Normalized to All DRAM No Caching (All PCM) many hits and does not migrate them M-­‐COUNT.2 ConvenAonal Caching A-­‐COUNT.4 1 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 41 Row Buffer Locality-Aware Policy IPC Normalized to All DRAM Lots oPf CM) data w ith just enough isses to No Caching (All ConvenAonal Caching mA-­‐COUNT.4 1 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 get cached but litle reuse azer being cached à need to also track reuse M-­‐COUNT.2 42 Combined Reuse/Locality Approach • Cache rows with reuse and which frequently miss in the row buffer – Use A-­‐COUNT as predictor of future reuse and – M-­‐COUNT as predictor of future row buffer misses • Cache row if accesses ≥ A and misses ≥ M • We call this policy AM-­‐COUNT 43 Normalized to All DRAM Combined Reuse/Locality Approach No Caching (All PCM) ConvenAonal Caching A-­‐COUNT.4 M-­‐COUNT.2 AM-­‐COUNT.4.2 1 0.8 0.6 0.4 0.2 0 44 Normalized to All DRAM Combined Reuse/Locality Approach Reduces useless migraAons No Caching (All PCM) ConvenAonal Caching A-­‐COUNT.4 M-­‐COUNT.2 AM-­‐COUNT.4.2 1 0.8 0.6 0.4 0.2 0 45 Combined Reuse/Locality Approach No And Caching (All Pw CM) ConvenAonal Caching A-­‐COUNT.4 data ith litle Normalized to All DRAM reuse kept out AM-­‐COUNT.4.2 of M-­‐COUNT.2 1 DRAM 0.8 0.6 0.4 0.2 0 46 Dynamic Reuse/Locality Approach • Previously menAoned policies require profiling – To determine the best A and M thresholds • We propose a dynamic threshold policy – Performs a cost-­‐benefit analysis every Q cycles – Simple hill-­‐climbing algorithm to maximize benefit – (Side note: we simplify the problem slightly by just finding the best A threshold, because we observe that M = 2 performs the best for a given A.) 47 Cost-Benefit Analysis • Each quantum, we measure the first-­‐order costs and benefits of the current A threshold – Cost = cycles of bus contenAon due to migraAons – Benefit = cycles saved at the banks by servicing a request in DRAM versus PCM • Cost = MigraAons × tmigraAon • Benefit = ReadsDRAM × (tread,PCM − tread,DRAM) + WritesDRAM × (twrite,PCM − twrite,DRAM) 48 SAFARI Technical Report No. 2 Cost-Benefit Maximization Algorithm Each quantum (10 million cycles): = Benefit Cost // net benefit 1 Net < 0 then // too many migrations? 2 if Net 3 A++ // increase threshold // last A beneficial 4 else 5 if Net > PreviousNet then // increasing benefit? 6 A++ // try next A 7 else // decreasing benefit 8 A - // too strict, reduce 9 end 10 end 11 PreviousNet = Net 49 Figure 6: Cost-benefit maximization algorithm. IPC Normalized to All DRAM Dynamic Policy Performance No Caching (All PCM) ConvenAonal Caching Best StaAc Dynamic 1 0.8 0.6 0.4 0.2 0 50 IPC Normalized to All DRAM Dynamic Policy Performance No Caching (All PCM) ConvenAonal Caching Best StaAc Dynamic 1 0.8 0.6 0.4 0.2 0 29% improvement over All PCM, Within 18% of All DRAM 51 Evaluation Methodology/Metrics • 16-­‐core system • Averaged across 100 randomly-­‐generated workloads of varying working set size – LARGE = working set size > main memory size IPCtogether • Weighted speedup (performance) = ∑ IPCalone IPCalone • Maximum slowdown (fairness) = max IPCtogether 52 the working sets do not reside mainly in the DRAM cache. Longer ones. 3 We find least frequently used to perform the best, however, the performance of l 16-core Performance & Fairness 4 2 0 0% 25% 50% 75% 100% Fraction of LARGE Benchmarks (a) Weighted speedup. 30 Conventional Caching A-COUNT AM-COUNT DAM-COUNT 0.3 Harmonic Speedup 6 Conventional Caching A-COUNT AM-COUNT DAM-COUNT 40 Maximum Slowdown Weighted Speedup 8 20 10 0 0% 25% 50% 75% 100% Fraction of LARGE Benchmarks 0.2 0.1 0 (b) Maximum slowdown. 53 Figure 8: Performance, fairness, and power r the working sets do not reside mainly in the DRAM cache. Longer ones. 3 We find least frequently used to perform the best, however, the performance of l 16-core Performance & Fairness AM-COUNT DAM-COUNT 4 2 0 0% 25% 50% 75% 100% Fraction of LARGE Benchmarks (a) Weighted speedup. 30 0.3 Harmonic Speedup 6 40 More c ontenAon Conventional Caching Conventional Caching A-COUNT à more benefit A-COUNT AM-COUNT Maximum Slowdown Weighted Speedup 8 DAM-COUNT 20 10 0 0% 25% 50% 75% 100% Fraction of LARGE Benchmarks 0.2 0.1 0 (b) Maximum slowdown. 54 Figure 8: Performance, fairness, and power r the working sets do not reside mainly in the DRAM cache. Longer ones. 3 We find least frequently used to perform the best, however, the performance of l 16-core Performance & Fairness 4 2 0 0% 25% 50% 75% 100% Fraction of LARGE Benchmarks 30 Conventional Caching A-COUNT AM-COUNT DAM-COUNT 0.3 Harmonic Speedup 6 Conventional Caching A-COUNT AM-COUNT DAM-COUNT 40 Maximum Slowdown Weighted Speedup 8 20 10 0 0% 25% 50% 75% 100% Fraction of LARGE Benchmarks Dynamic policy can (a) Weighted speedup. (b) Maximum slowdown. adjust to different workloads 55 0.2 0.1 0 Figure 8: Performance, fairness, and power r Versus All PCM and All DRAM • Compared to an All PCM main memory – 17% performance improvement – 21% fairness improvement • Compared to an All DRAM main memory – Within 21% of performance – Within 53% of fairness 56 No. 2011-005 (September 5, 2011) 4 2 0 Power (W) 6 Normalized Weighted Speedup Robustness to System Configuration 3 16 cores 8 cores 4 cores 2 cores 2.5 2 1.5 1 0.5 0 0 50 100 150 Sorted Workload Number Figure 10: Number of cores. 200 57 Implementation/Hardware Cost • Requires a tag store in memory controller – We currently assume 36 KB of storage per 16 MB of DRAM – We are invesAgaAng ways to miAgate this overhead • Requires a sta1s1cs store – To keep track of accesses and misses 58 Conclusions • DRAM scalability is nearing its limit – Emerging memories (e.g. PCM) offer scalability – Problem: must address high latency and energy • We propose a dynamic, row buffer locality-­‐ aware caching policy for hybrid memories – Cache rows which miss frequently in row buffer – Cache rows which are reused many Ames • 17/21% perf/fairness improvement vs. all PCM • Within 21/53% perf/fairness of all DRAM system 59 Thank you! Questions? 60 Backup Slides 61 0 50 100 150 Sorted Workload Number 200 Figure 10: Number of cores. Related Work 6 Weighted Speedup PCM M-COUNT 8⇥ g Factor M latency. DIP Probabilistic Probabilistic+RBL DAM-COUNT 4 2 0 A 62 Figure 13: Related techniques. 0 WS MS 0 P HS 0 Versus DRAM/PCM. PCM Latency g 6 MB 512 MB e Size Figure 10: Numbe Weighted Speedup 4 2 0 6 All PCM DAM-COUNT 6 Weighted Speedup 50 100 Sorted Workload 1⇥ 2⇥ 4⇥ 8⇥ DIP Pro Pro DA 4 2 0 PCM Latency Scaling Factor DRAM size. Figure 12: Effects of PCM latency. Figure 13: 63 0 0 0 WS MS P HS Figure 9: Versus DRAM/PCM. DRAM Cache Size Conventional Caching DAM-COUNT 3 2 1 0 64 MB 128 MB 256 MB 512 MB DRAM Cache Size 6 Weighted Speedup Weighted Speedup 4 4 2 0 1⇥ PCM Figure 11: Effects of DRAM size. Figure 12: E 64 SAFARI Technical Report No. 2011- Versus All DRAM and All PCM 4 2 0 20 10 0 0.2 All PCM Conventional Caching DAM-COUNT All DRAM 4 0.15 0.1 2 0.05 0 WS MS HS Figure 9: Versus DRAM/PCM. 4 Power (W) 6 30 Harmonic Speedup Maximum Slowdown Weighted Speedup 8 0.25 6 P 0 65 Performance vs. Statistics Store Size (8 ways, LRU) 512-­‐entry (0.2 KB) 1024-­‐entry (0.4 KB) 2048-­‐entry (0.8 KB) IPC Normalized to All DRAM 4096-­‐entry (1.6 KB) ∞-­‐entry 1 0.8 0.6 0.4 0.2 0 66 Performance vs. Statistics Store Size (8 ways, LRU) 512-­‐entry (0.2 KB) 1024-­‐entry (0.4 KB) 2048-­‐entry (0.8 KB) IPC Normalized to All DRAM 4096-­‐entry (1.6 KB) ∞-­‐entry 1 0.8 0.6 0.4 0.2 0 Within ~1% of infinite storage with 200 B of storage 67 All DRAM 8 Banks IPC Normalized to All DRAM with 8 Banks No Caching (All PCM) ConvenAonal Caching Best StaAc Dynamic 1 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 68 All DRAM 16 Banks IPC Normalized to All DRAM with 16 Banks No Caching (All PCM) ConvenAonal Caching Best StaAc Dynamic 1 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 69 Simulation Parameters 70 Overview • DRAM is reaching its scalability limits – Yet, memory capacity requirements are increasing • Emerging memory devices offer scalability – Phase-­‐change, resisAve, ferroelectric, etc. – But, have worse latency/energy than DRAM • We propose a scalable hybrid memory arch. – Use DRAM as a cache to phase change memory – Cache data based on row buffer locality and reuse 71 Methodology • Core model – 3-­‐wide issue with 128-­‐entry instrucAon window – 32 KB L1 D-­‐cache per core – 512 KB shared L2 cache per core • Memory model – 16 MB DRAM / 512 MB PCM per core • Scaled based on workload trace size and access paterns to be smaller than working set – DDR3 800 MHz, single channel, 8 banks per device – Row buffer hit: 40 ns – Row buffer miss: 80 ns (DRAM); 128, 368 ns (PCM) – Migrate data at 2 KB row granularity 72 Outline • • • • • • Overview MoAvaAon/Background Methodology Caching Policies MulAcore EvaluaAon Conclusions 73 the working sets do not reside mainly in the DRAM cache. Longer ones. TODO: change diagram to two channels so that this can be explained 3 We find least frequently used to perform the best, however, the performance of l 16-core Performance & Fairness 4 2 0 0% 25% 50% 75% 100% Fraction of LARGE Benchmarks 30 Conventional Caching A-COUNT AM-COUNT DAM-COUNT 0.3 Harmonic Speedup 6 Conventional Caching A-COUNT AM-COUNT DAM-COUNT 40 Maximum Slowdown Weighted Speedup 8 20 10 0 0% 25% 50% 75% 100% Fraction of LARGE Benchmarks DistribuAng data (a) Weighted speedup. (b) Maximum slowdown. benefits small working sets, too 74 0.2 0.1 0 Figure 8: Performance, fairness, and power r