Stat 602X Exam 1 Spring 2013

advertisement

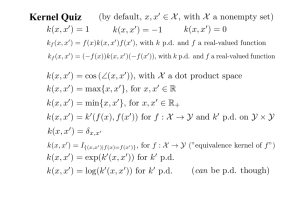

I have neither given nor received unauthorized assistance on this exam.

________________________________________________________
Name Signed                                          Date

_________________________________________________________
Name Printed

This is a very long exam consisting of 12 parts. I'll score it at 10 points per problem/part and add your best 8 scores to get an exam score (out of 80 points possible). Some parts will go (much) faster than others, and you'll be wise to do them first.

1. Consider the $p = 1$ prediction problem with $N = 8$ and training data as below.

| $y$ | $x$ |
|---|---|
| 8 | .125 |
| 4 | .250 |
| 4 | .375 |
| 0 | .500 |
| 2 | .625 |
| 3 | .750 |
| 6 | .875 |
| 5 | 1.000 |

Here use of the order $M = 2$ Haar basis functions on the unit interval produces

$$X = \begin{bmatrix}
1 & 1 & \sqrt{2} & 0 & 2 & 0 & 0 & 0 \\
1 & 1 & \sqrt{2} & 0 & -2 & 0 & 0 & 0 \\
1 & 1 & -\sqrt{2} & 0 & 0 & 2 & 0 & 0 \\
1 & 1 & -\sqrt{2} & 0 & 0 & -2 & 0 & 0 \\
1 & -1 & 0 & \sqrt{2} & 0 & 0 & 2 & 0 \\
1 & -1 & 0 & \sqrt{2} & 0 & 0 & -2 & 0 \\
1 & -1 & 0 & -\sqrt{2} & 0 & 0 & 0 & 2 \\
1 & -1 & 0 & -\sqrt{2} & 0 & 0 & 0 & -2
\end{bmatrix}$$

Use the notation that the $j$th column of $X$ is $x_j$.

a) Find the fitted OLS coefficient vector $\hat\beta^{\text{OLS}}$ for a model including only $x_1, x_2, x_3, x_4$ as predictors.

b) Center $Y$ to create $Y^*$ and let $x_j^* = \frac{1}{2\sqrt{2}}\, x_j$ for each $j$. Find $\hat\beta^{\text{lasso}} \in \mathbb{R}^7$ optimizing

$$\sum_{i=1}^{8}\Big(y_i^* - \sum_{j=2}^{8} b_j x_{ij}^*\Big)^2 + 5\sum_{j=2}^{8}|b_j|$$

over choices of $b \in \mathbb{R}^7$.

c) The LAR algorithm applied to $Y^*$ and the set of predictors $x_j^*$ for $j = 2, 3, \ldots, 8$ begins at $0 \in \mathbb{R}^8$ and takes a piecewise linear path through $\mathbb{R}^8$ to $\hat{Y}^{*\text{OLS}}$. Identify the first two points in $\mathbb{R}^8$ at which the direction of the path changes; call them $W_1$ and $W_2$. (Here you may well wish to use both the connection between the LAR path and the lasso path and explicit formulas for the lasso coefficients.)

d) Find $\hat{Y}^{\text{penalty}} \in \mathbb{R}^8$ optimizing

$$(Y - v)'(Y - v) + \langle v, x_2^*\rangle^2 + 2\left(\langle v, x_3^*\rangle^2 + \langle v, x_4^*\rangle^2\right) + 4\sum_{j=5}^{8}\langle v, x_j^*\rangle^2$$

over choices of $v \in \mathbb{R}^8$.

e) Find an $8 \times 8$ smoother matrix $S$ corresponding to the penalty in d) (a matrix such that, for any $Y \in \mathbb{R}^8$, the $\hat{Y}^{\text{penalty}}$ optimizing the form in d) is $SY$), and plot the values in the 4th row of this matrix (the row for $x = .500$) against $x$ below.
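A quick numerical check of parts a) through e) can be sketched in Python with NumPy. It assumes the usual Haar sign convention (each basis function positive on the left half of its support), takes the $y$ values exactly as printed, and leans on the fact that the columns $x_j^* = x_j/(2\sqrt{2})$ are orthonormal. Under that fact, OLS coefficients are simple inner products, the lasso solution is soft thresholding of $\langle x_j^*, Y^*\rangle$ at $5/2$, the LAR path coincides with the lasso path (no coefficient ever changes sign), and the penalized fit is $SY$ with $S = \sum_j x_j^* x_j^{*\prime}/(1 + d_j)$. The helper names `haar_design` and `soft_threshold` are illustrative only.

```python
import numpy as np

def haar_design():
    """8 x 8 order-2 Haar design at x = .125, ..., 1.000 (assumed sign convention:
    each basis function is positive on the left half of its support)."""
    X = np.zeros((8, 8))
    X[:, 0] = 1.0                                      # x_1: constant
    X[:, 1] = np.repeat([1.0, -1.0], 4)                # x_2: halves
    X[:4, 2] = np.sqrt(2) * np.array([1, 1, -1, -1])   # x_3: quarters, left half
    X[4:, 3] = np.sqrt(2) * np.array([1, 1, -1, -1])   # x_4: quarters, right half
    for k in range(4):                                 # x_5..x_8: eighths
        X[2 * k, 4 + k] = 2.0
        X[2 * k + 1, 4 + k] = -2.0
    return X

def soft_threshold(z, t):
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

y = np.array([8, 4, 4, 0, 2, 3, 6, 5], dtype=float)   # as printed in the problem
X = haar_design()
Xs = X / (2 * np.sqrt(2))             # x_j^*: orthonormal columns
ystar = y - y.mean()                  # Y^*

# a) orthogonal columns with x_j'x_j = 8, so each OLS coefficient is x_j'Y / 8
beta_ols = X[:, :4].T @ y / 8.0

# b) orthonormal predictors: the lasso soft-thresholds <x_j^*, Y^*> at 5/2
z = Xs[:, 1:].T @ ystar               # j = 2, ..., 8
beta_lasso = soft_threshold(z, 2.5)

# c) here the LAR path equals the lasso path; it bends each time the
#    threshold drops to the next distinct |z_j| (a new variable enters)
levels = np.sort(np.unique(np.round(np.abs(z), 10)))[::-1]
W1 = Xs[:, 1:] @ soft_threshold(z, levels[1])
W2 = Xs[:, 1:] @ soft_threshold(z, levels[2])

# d), e) penalty weights d_j on <v, x_j^*>^2; since Xs is orthogonal,
#    the minimizer is v = S Y with S = Xs diag(1 / (1 + d)) Xs'
d = np.array([0, 1, 2, 2, 4, 4, 4, 4], dtype=float)
S = Xs @ np.diag(1.0 / (1.0 + d)) @ Xs.T
row4 = S[3]                           # 4th row, x = .500
```

Because $x_1^*$ is unpenalized, every row of `S` sums to 1, so `row4` can be read directly as a set of local averaging weights for the plot in e).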
f) If one accepts the conventional statistical wisdom that (generalized) "spline" smoothing is nearly equivalent to kernel smoothing, then in light of your plot in e), identify a kernel that might provide smoothed values similar to those for the penalty used in d). (Name a kernel and choose a bandwidth.)

2. Consider the $p = 1$ prediction problem with $N = 6$ and training data as below.

| $y$ | $x$ |
|---|---|
| 1.6 | 1 |
| .4 | 2 |
| 3.5 | 3 |
| 1.5 | 4 |
| 5 | 5 |
| 6 | 6 |

Forward selection of binary trees for SEL prediction produces the sequence of trees represented below. If one determines to prune back from the final tree in optimal fashion, there is a nested sequence of subtrees that are the only possible optimizers of $C_\alpha(T) = \alpha|T| + SSE(T)$ for positive $\alpha$. Identify that nested sequence of subtrees of Tree 5 below.

| Tree Number | Subsets of values of $x$ | SSE |
|---|---|---|
| 0 | 1 2 3 4 5 6 | 24.22 |
| 1 | 1 2 3 4 ‖ 5 6 | 5.47 |
| 2 | 1 2 ‖ 3 4 ‖ 5 6 | 3.22 |
| 3 | 1 2 ‖ 3 ‖ 4 ‖ 5 6 | 1.22 |
| 4 | 1 ‖ 2 ‖ 3 ‖ 4 ‖ 5 6 | .50 |
| 5 | 1 ‖ 2 ‖ 3 ‖ 4 ‖ 5 ‖ 6 | 0 |

3. Consider a $p = 1$ prediction problem for $x \in [0, 1]$ and random forest predictor $\hat{f}_B^*$ based on a training set of size $N = 101$ with $x_i = (i - 1)/100$ for $i = 1, 2, \ldots, 101$ and $n_{max} = 5$ (so no split is made in creating a single tree predictor $\hat{f}^{*b}$ that would produce a leaf representing fewer than 5 training points). Consider the bias of prediction at $x = 1.00$, namely $E\hat{f}_B^*(1.00) - 1.00$, under a model where $Ey_i = x_i$. Say/prove what you can about this bias. (Is it 0? Is it positive? Is it negative? How big is it?)

4. Consider a small fake data set consisting of $N = 6$ data vectors in $\mathbb{R}^2$ and use of a kernel function (mapping $\mathbb{R}^2 \times \mathbb{R}^2 \to \mathbb{R}$)

$$K(x, z) = \exp\left(-3\|x - z\|^2\right)$$
The data and Gram matrices are $X$ ($6 \times 2$) and $K$ ($6 \times 6$). [Recoverable content of the displayed matrices: rows 1 to 3 of $X$ are $(1.01, .99)$, $(1, 1)$, $(.99, 1.01)$; rows 4 and 5 have entries of 0 or .01; row 6 is $(2.00, 2.00)$. $K$ has entries between .998 and 1 within the groups of rows {1, 2, 3} and {4, 5}, and entries of .003 or less between different groups.] The approximate form for $K$ is

$$K \approx \begin{bmatrix}
1 & 1 & 1 & 0 & 0 & 0 \\
1 & 1 & 1 & 0 & 0 & 0 \\
1 & 1 & 1 & 0 & 0 & 0 \\
0 & 0 & 0 & 1 & 1 & 0 \\
0 & 0 & 0 & 1 & 1 & 0 \\
0 & 0 & 0 & 0 & 0 & 1
\end{bmatrix}$$

As it turns out (using the approximate form for $K$), with $J$ the $6 \times 6$ matrix of ones,

$$K - \frac{1}{6}JK - \frac{1}{6}KJ + \frac{1}{36}JKJ$$

has (approximately) a SVD with two non-zero singular values (namely 2.43 and 1.23) and corresponding vectors of principal components $u_1 = (.39, .39, .39, -.51, -.51, -.15)'$ and $u_2 = (.12, .12, .12, .27, .27, -.90)'$. Say what both principal components analysis on the raw data and kernel principal components indicate about these data.

Raw PCA:

Kernel PCA:

5. Consider here prediction of a 0-1 (binary) response using a model that says that for two (standardized) predictors $z_1$ and $z_2$

$$P[y_i = 1 \mid z_{1i}, z_{2i}] = \frac{\exp(\alpha + \beta_1 z_{1i} + \beta_2 z_{2i})}{1 + \exp(\alpha + \beta_1 z_{1i} + \beta_2 z_{2i})}$$

(Training data are $N$ vectors $(z_{1i}, z_{2i}, y_i)$.) For this problem, one might define a (log-likelihood-based) training error as

$$TE(a, b_1, b_2) = \sum_{i=1}^{N} \ln\left(1 + \exp(a + b_1 z_{1i} + b_2 z_{2i})\right) - \sum_{i=1}^{N} y_i\,(a + b_1 z_{1i} + b_2 z_{2i})$$

How would you regularize fitting of this model in "ridge-regression" style (penalizing only $b_1$ and $b_2$ and not $a$)? Derive 3 equations that you would need to solve simultaneously to carry out regularized fitting.

6. Consider a simple Bayes model averaging prediction problem. Training data are $(x_i, y_i)$ where $x_i \in \{0, 1\}$, and we assume that these are independent with $y_i = \mu_{x_i} + \epsilon_i$ for $\epsilon_i$ iid $N(0, 1)$. Two models are contemplated. Model 1 says that $\mu_0 = \mu_1 = \mu$ and a priori $\mu \sim N(0, 10^2)$. Model 2 says that $\mu_0$ and $\mu_1$ are a priori independent with both $\mu_0 \sim N(0, 10^2)$ and $\mu_1 \sim N(0, 10^2)$. Assume that a priori the two models are equally likely. Training pairs $(x_i, y_i)$ are $(0, 5)$, $(0, 7)$, $(0, 6)$, $(1, 12)$. Find an appropriate predicted value of $y$ if $x = 1$.

HELPFUL FACT (you need NOT prove): If, conditioned on $\mu$, observations $z_1, \ldots, z_n$ are iid $N(\mu, 1)$ and $\mu$ is itself $N(0, \sigma^2)$, then conditioned on $z_1, \ldots, z_n$, $\mu$ is

$$N\left(\frac{n}{n + \sigma^{-2}}\,\bar{z},\ \frac{1}{n + \sigma^{-2}}\right)$$

7. Consider approximations to simple functions using single layer feed-forward neural network forms.
First say how you might produce an approximation of a function on $\mathbb{R}^1$ that is an indicator function of any interval $(a, b)$ (finite or infinite), say $I[a < x < b]$. Then argue that it's possible to approximate any function of the form

$$g(x) = \sum_{l=1}^{M} c_l\, I[a_l < x < b_l]$$

on $\mathbb{R}^1$ using a neural network form.
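For problem 2, Breiman's weakest-link (cost-complexity) pruning can be run directly on Tree 5 as a check. The sketch represents each node by the tuple of $x$ values it covers, repeatedly collapses the internal node with the smallest $g(t) = [SSE(t) - SSE(T_t)]/(|T_t| - 1)$, and records the $\alpha$ at which each collapse happens. The helper names are illustrative; ties in $g$ (none occur with these data) would simply be collapsed one at a time.

```python
import numpy as np

# Training data of problem 2: y at x = 1, ..., 6
Y = {1: 1.6, 2: 0.4, 3: 3.5, 4: 1.5, 5: 5.0, 6: 6.0}

def sse(xs):
    """Within-node sum of squared errors for the x values in xs."""
    v = np.array([Y[x] for x in xs])
    return float(((v - v.mean()) ** 2).sum())

def leaf(*xs):
    return {"xs": xs}

def split(left, right):
    return {"xs": left["xs"] + right["xs"], "kids": (left, right)}

def leaves(node):
    if "kids" not in node:
        return [node]
    return leaves(node["kids"][0]) + leaves(node["kids"][1])

def internal_nodes(node):
    if "kids" not in node:
        return []
    return [node] + internal_nodes(node["kids"][0]) + internal_nodes(node["kids"][1])

def collapse(node, xs):
    """Copy of the tree with the internal node covering xs turned into a leaf."""
    if node["xs"] == xs:
        return leaf(*xs)
    if "kids" not in node:
        return node
    left, right = node["kids"]
    return {"xs": node["xs"], "kids": (collapse(left, xs), collapse(right, xs))}

def link_strength(node):
    """g(t) = [SSE(t) - SSE(T_t)] / (|T_t| - 1) for an internal node t."""
    lv = leaves(node)
    return (sse(node["xs"]) - sum(sse(lf["xs"]) for lf in lv)) / (len(lv) - 1)

def weakest_link_sequence(tree):
    """Return [(alpha_k, T_k)]: nested subtrees and the alpha at which each appears."""
    seq = [(0.0, tree)]
    while "kids" in tree:
        target = min(internal_nodes(tree), key=link_strength)
        alpha = link_strength(target)
        tree = collapse(tree, target["xs"])
        seq.append((alpha, tree))
    return seq

# Tree 5: root {1,2,3,4} | {5,6}; {1,2,3,4} -> {1,2} | {3,4}; then singletons
TREE5 = split(split(split(leaf(1), leaf(2)), split(leaf(3), leaf(4))),
              split(leaf(5), leaf(6)))

for alpha, t in weakest_link_sequence(TREE5):
    print(round(alpha, 3), [lf["xs"] for lf in leaves(t)])
```

Each printed line pairs an $\alpha$ with the leaves of the subtree that becomes optimal once $\alpha$ passes that value.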
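For problem 3, a small simulation can suggest the sign and rough size of the bias. It is a sketch under stated assumptions: zero noise ($y_i = x_i$ exactly, so the only randomness is the bootstrap), leaf sizes counted including bootstrap duplicates, and plain bagging of greedy SEL trees (with $p = 1$ there are no predictors to subsample, so a random forest reduces to bagging). `grow_tree`, `predict`, and `bias_at_one` are hypothetical helper names, and `min_leaf` plays the role of $n_{max} = 5$.

```python
import numpy as np

def grow_tree(x, y, min_leaf):
    """Greedy SEL regression tree on one predictor; no leaf gets < min_leaf points."""
    order = np.argsort(x, kind="stable")
    x, y = x[order], y[order]
    n = len(x)
    csum, csum2 = np.cumsum(y), np.cumsum(y ** 2)
    best = None
    for k in range(min_leaf, n - min_leaf + 1):   # left child = first k points
        if x[k - 1] == x[k]:
            continue                               # cannot separate tied x values
        left_sse = csum2[k - 1] - csum[k - 1] ** 2 / k
        right_sum = csum[-1] - csum[k - 1]
        right_sse = (csum2[-1] - csum2[k - 1]) - right_sum ** 2 / (n - k)
        if best is None or left_sse + right_sse < best[0]:
            best = (left_sse + right_sse, k)
    if best is None:                               # no admissible split: a leaf
        return {"value": y.mean()}
    k = best[1]
    return {"cut": 0.5 * (x[k - 1] + x[k]),
            "left": grow_tree(x[:k], y[:k], min_leaf),
            "right": grow_tree(x[k:], y[k:], min_leaf)}

def predict(tree, x0):
    while "cut" in tree:
        tree = tree["left"] if x0 <= tree["cut"] else tree["right"]
    return tree["value"]

def bias_at_one(B=200, min_leaf=5, seed=0):
    rng = np.random.default_rng(seed)
    x = np.arange(101) / 100.0          # x_i = (i - 1)/100, i = 1, ..., 101
    y = x.copy()                         # ASSUMPTION: no noise, y_i = E y_i = x_i
    preds = []
    for _ in range(B):
        idx = rng.integers(0, len(x), size=len(x))   # bootstrap sample
        tree = grow_tree(x[idx], y[idx], min_leaf)
        preds.append(predict(tree, 1.00))
    return float(np.mean(preds)) - 1.00
```

In this noise-free setting every leaf mean is an average of values $x_i \le 1$, so each tree's prediction at 1.00 can only fall short of 1.00, and `bias_at_one()` returns a negative number whose size depends on how many points land in the rightmost leaf.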
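For problem 4, the stated eigen-structure can be checked from the approximate Gram matrix alone, without the exact $X$. The sketch double-centers the block matrix (with $J$ the $6 \times 6$ matrix of ones) and calls `numpy.linalg.eigh`; the leading eigenvalue comes out near 2.43, the second near 1.23, and the eigenvectors match $u_1$ and $u_2$ up to an overall sign.

```python
import numpy as np

# Approximate Gram matrix: tight groups of rows {1,2,3}, {4,5}, {6}
K = np.zeros((6, 6))
for group in ([0, 1, 2], [3, 4], [5]):
    K[np.ix_(group, group)] = 1.0

J = np.ones((6, 6))
Kc = K - J @ K / 6 - K @ J / 6 + J @ K @ J / 36   # doubly centered Gram matrix

vals, vecs = np.linalg.eigh(Kc)                     # eigh returns ascending order
order = np.argsort(vals)[::-1]
vals, vecs = vals[order], vecs[:, order]
u1, u2 = vecs[:, 0], vecs[:, 1]                     # kernel principal components
```

Since `Kc` is symmetric and positive semi-definite, its singular values are its eigenvalues, which is why `eigh` suffices here.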
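For problem 5, one natural choice (an assumption here, not necessarily the intended answer) is to minimize $TE(a, b_1, b_2) + \lambda(b_1^2 + b_2^2)$ for some $\lambda > 0$. With $\eta_i = a + b_1 z_{1i} + b_2 z_{2i}$ and $p_i = \exp(\eta_i)/(1 + \exp(\eta_i))$, setting the three partial derivatives to zero gives $\sum_i (p_i - y_i) = 0$, $\sum_i (p_i - y_i) z_{1i} + 2\lambda b_1 = 0$, and $\sum_i (p_i - y_i) z_{2i} + 2\lambda b_2 = 0$. The sketch solves them by Newton-Raphson; `fit_ridge_logistic` is a hypothetical name.

```python
import numpy as np

def fit_ridge_logistic(z1, z2, y, lam, iters=50, tol=1e-10):
    """Solve the three penalized score equations for (a, b1, b2) by Newton-Raphson.
    The penalty lam * (b1**2 + b2**2) is an assumed ridge form; a is not penalized."""
    Z = np.column_stack([np.ones_like(z1), z1, z2])
    D = np.diag([0.0, 1.0, 1.0])                  # no penalty on the intercept a
    theta = np.zeros(3)                           # (a, b1, b2)
    for _ in range(iters):
        p = 1.0 / (1.0 + np.exp(-(Z @ theta)))    # fitted P[y = 1]
        grad = Z.T @ (p - y) + 2.0 * lam * D @ theta
        if np.max(np.abs(grad)) < tol:
            break
        hess = Z.T @ (Z * (p * (1.0 - p))[:, None]) + 2.0 * lam * D
        theta = theta - np.linalg.solve(hess, grad)
    return theta
```

Because the penalty adds $2\lambda$ to the $b_1$ and $b_2$ diagonal entries of the Hessian, larger $\lambda$ both shrinks the slopes and stabilizes each Newton step.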
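For problem 6, a minimal sketch of the model-averaged prediction: under either model, responses sharing a common mean $\mu \sim N(0, \tau^2)$ are jointly $N(0, I + \tau^2 J)$, which gives the marginal likelihoods and hence the posterior model weights (equal prior weights), while the HELPFUL FACT gives each model's posterior mean for the $x = 1$ mean. The helper names are illustrative.

```python
import numpy as np

def log_marginal(z, tau2):
    """log density of z when z_i | mu iid N(mu, 1) and mu ~ N(0, tau2)."""
    z = np.asarray(z, dtype=float)
    n = len(z)
    cov = np.eye(n) + tau2 * np.ones((n, n))
    _, logdet = np.linalg.slogdet(cov)
    quad = z @ np.linalg.solve(cov, z)
    return -0.5 * (n * np.log(2 * np.pi) + logdet + quad)

def posterior_mean(z, tau2):
    """HELPFUL FACT: E[mu | z] = n * zbar / (n + 1 / tau2)."""
    z = np.asarray(z, dtype=float)
    n = len(z)
    return n * z.mean() / (n + 1.0 / tau2)

tau2 = 10.0 ** 2
y0 = [5.0, 7.0, 6.0]     # responses with x = 0
y1 = [12.0]              # responses with x = 1

# Model 1: mu_0 = mu_1 = mu, so all four responses share one mean
logm1 = log_marginal(y0 + y1, tau2)
pred1 = posterior_mean(y0 + y1, tau2)
# Model 2: independent means; only the x = 1 response informs mu_1
logm2 = log_marginal(y0, tau2) + log_marginal(y1, tau2)
pred2 = posterior_mean(y1, tau2)

# equal prior model probabilities -> weights from the marginal likelihoods
w1 = 1.0 / (1.0 + np.exp(logm2 - logm1))
prediction = w1 * pred1 + (1.0 - w1) * pred2
```

Working on the log scale and combining through the difference `logm2 - logm1` avoids underflow when one model is overwhelmingly preferred.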
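For problem 7, one standard construction is a difference of two steep sigmoids, $\sigma(s(x - a)) - \sigma(s(x - b))$, which tends to $I[a < x < b]$ away from the endpoints as $s \to \infty$; an infinite endpoint simply drops one hidden unit. Summing $M$ such pairs with output weights $\pm c_l$ gives a single-hidden-layer network approximating $g$. The sketch uses the logistic sigmoid and a hypothetical steepness parameter `s`.

```python
import numpy as np

def sigmoid(t):
    """Logistic function written via tanh to avoid overflow for large |t|."""
    return 0.5 * (1.0 + np.tanh(0.5 * t))

def interval_indicator_approx(x, a, b, s):
    """Two hidden units approximating I[a < x < b]; a = -inf or b = inf drops one."""
    x = np.asarray(x, dtype=float)
    left = sigmoid(s * (x - a)) if np.isfinite(a) else np.ones_like(x)
    right = sigmoid(s * (x - b)) if np.isfinite(b) else np.zeros_like(x)
    return left - right

def step_function_approx(x, c, a, b, s):
    """g(x) = sum_l c_l I[a_l < x < b_l] via at most 2M hidden units."""
    return sum(cl * interval_indicator_approx(x, al, bl, s)
               for cl, al, bl in zip(c, a, b))
```

For example, `step_function_approx(x, [2, -1], [0, 0.5], [1, np.inf], 200)` approximates $2I[0 < x < 1] - I[.5 < x < \infty]$.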