Section 3.6

Definitions

• A vector space $V$ is a set that is equipped with two operations, vector addition and scalar multiplication, which satisfy the following properties for all $u, v, w \in V$ and $c, d \in \mathbb{R}$:

1. $u + v = v + u$
2. $(u + v) + w = u + (v + w)$
3. There exists a vector $0 \in V$ such that $0 + u = u$ for all $u \in V$.
4. For each $u \in V$ there exists a vector $-u \in V$ with the property that $u + (-u) = 0$.
5. $c(du) = (cd)u$
6. $c(u + v) = cu + cv$
7. $(c + d)u = cu + du$
8. $1u = u$.

• A vector space $V$ is finite dimensional if there exist an integer $k$ and vectors $v_1, \dots, v_k$ that span $V$. Otherwise, $V$ is infinite dimensional.

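• An inner product is a map $\langle \cdot, \cdot \rangle : V \times V \to \mathbb{R}$ with the following properties for all $u, v, w \in V$ and $c \in \mathbb{R}$:

1. $\langle u, v \rangle = \langle v, u \rangle$
2. $\langle cu, v \rangle = c\langle u, v \rangle$
3. $\langle u + v, w \rangle = \langle u, w \rangle + \langle v, w \rangle$
4. $\langle v, v \rangle \ge 0$, and $\langle v, v \rangle = 0$ if and only if $v = 0$.

• A vector space equipped with an inner product is called an inner product space.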
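As an illustration, here is a minimal Python sketch that spot-checks the four properties for the dot product on $\mathbb{R}^n$ and computes the inner product $\langle f, g \rangle = \int_0^1 f(t)g(t)\,dt$ on polynomials stored as coefficient lists. The integral inner product, the sample vectors, and the helper names (`dot`, `poly_mul`, `integral_inner`) are assumptions chosen for the example; checking a few samples illustrates the axioms but does not prove them.

```python
from fractions import Fraction


def dot(u, v):
    """Dot product on R^n: <u, v> = u_1 v_1 + ... + u_n v_n."""
    return sum(a * b for a, b in zip(u, v))


def poly_mul(f, g):
    """Multiply polynomials stored as coefficient lists [a0, a1, ...]."""
    out = [0] * (len(f) + len(g) - 1)
    for i, a in enumerate(f):
        for j, b in enumerate(g):
            out[i + j] += a * b
    return out


def integral_inner(f, g):
    """<f, g> = integral over [0, 1] of f(t) g(t) dt, computed exactly."""
    # The integral of t^k over [0, 1] is 1 / (k + 1).
    return sum(Fraction(c, k + 1) for k, c in enumerate(poly_mul(f, g)))


def scale(c, x):
    return [c * xi for xi in x]


def add(x, y):
    return [a + b for a, b in zip(x, y)]


# Spot-check the four properties for the dot product on sample vectors.
u, v, w, c = [1, 2, 3], [4, -1, 0], [2, 2, 2], 5
assert dot(u, v) == dot(v, u)                      # 1. symmetry
assert dot(scale(c, u), v) == c * dot(u, v)        # 2. homogeneity
assert dot(add(u, v), w) == dot(u, w) + dot(v, w)  # 3. additivity
assert dot(u, u) > 0                               # 4. positivity (u is nonzero)

# The integral inner product of 1 + t and t^2 is 1/3 + 1/4 = 7/12.
print(integral_inner([1, 1], [0, 0, 1]))  # 7/12
```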

Main ideas

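• Many familiar sets of mathematical objects form vector spaces, for example $\mathbb{R}^n$, the polynomials $P_n$ of degree at most $n$, and the $m \times n$ matrices $M_{m\times n}$.

• (Lagrange interpolation theorem) Given $n + 1$ distinct real numbers $t_0, \dots, t_n$ and any real numbers $y_0, \dots, y_n$, there is a unique polynomial $p \in P_n$ such that $p(t_i) = y_i$ for $i = 0, \dots, n$.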
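A minimal sketch of the construction behind the theorem, assuming the standard Lagrange basis polynomials $L_i(t) = \prod_{j \ne i} (t - t_j)/(t_i - t_j)$. These satisfy $L_i(t_j) = 1$ if $i = j$ and $0$ otherwise, so $p = \sum_i y_i L_i$ passes through every data point. The function names and sample data are hypothetical, and `Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction


def lagrange_basis(ts, i, x):
    """Evaluate the i-th Lagrange basis polynomial L_i at x.

    L_i(t_j) = 1 when j == i and 0 otherwise."""
    result = Fraction(1)
    for j, tj in enumerate(ts):
        if j != i:
            result *= Fraction(x - tj, ts[i] - tj)
    return result


def lagrange_eval(ts, ys, x):
    """Evaluate the interpolating polynomial p = sum_i y_i L_i at x."""
    if len(set(ts)) != len(ts):
        raise ValueError("interpolation nodes must be distinct")
    return sum(y * lagrange_basis(ts, i, x) for i, y in enumerate(ys))


# The unique p in P_2 through (0, 1), (1, 3), (2, 7) is p(t) = t^2 + t + 1.
ts, ys = [0, 1, 2], [1, 3, 7]
print(lagrange_eval(ts, ys, 3))  # 13
```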


Section 4.1

Definitions

• Let $V \subset \mathbb{R}^m$ be a subspace, and let $b \in \mathbb{R}^m$. The projection of $b$ onto $V$ is the unique vector $p \in V$ with the property that $b - p \in V^\perp$. The vector $b - p$ is the projection of $b$ onto $V^\perp$.

• Given an $m \times n$ matrix $A$ of rank $n$, the least squares solution of the equation $Ax = b$ is the unique solution of the normal equations
$$(A^\top A)x = A^\top b.$$
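The normal equations can be solved directly. The sketch below, with hypothetical helper names and exact `Fraction` arithmetic, forms $A^\top A$ and $A^\top b$ and solves the square system by Gauss-Jordan elimination; it assumes $A$ has rank $n$ so that $A^\top A$ is invertible. The data points in the example are made up for illustration.

```python
from fractions import Fraction


def transpose(M):
    return [list(row) for row in zip(*M)]


def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]


def solve(M, rhs):
    """Solve M x = rhs for square, invertible M by Gauss-Jordan elimination."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[-1] for row in aug]


def least_squares(A, b):
    """Least squares solution of Ax = b via (A^T A) x = A^T b.

    Assumes A has rank n (independent columns), so A^T A is invertible."""
    At = transpose(A)
    AtA = matmul(At, A)
    Atb = [sum(a * bi for a, bi in zip(row, b)) for row in At]
    return solve(AtA, Atb)


# Fit a line y = c0 + c1 t to the points (0, 1), (1, 2), (2, 2).
A = [[1, 0], [1, 1], [1, 2]]
b = [1, 2, 2]
print(least_squares(A, b))  # [Fraction(7, 6), Fraction(1, 2)]
```

The residual $b - A\hat{x}$ is orthogonal to every column of $A$, which is exactly the condition the normal equations encode.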

Main ideas

• Given a subspace $V \subset \mathbb{R}^m$ with basis $\{v_1, \dots, v_n\}$, if we let $A$ be the $m \times n$ matrix whose columns are $v_1, \dots, v_n$, then the standard matrix of the projection onto $V$ is given by
$$P_V = A(A^\top A)^{-1}A^\top.$$
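A sketch of the projection formula in Python, assuming exact rational entries and a hypothetical `inverse` helper that row reduces $[M \mid I]$ to $[I \mid M^{-1}]$. The subspace $V = \operatorname{span}\{(1, 0, 1), (0, 1, 1)\}$ is chosen only for illustration; the final checks confirm that $P_V$ is idempotent and symmetric, as an orthogonal projection matrix should be.

```python
from fractions import Fraction


def transpose(M):
    return [list(row) for row in zip(*M)]


def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]


def inverse(M):
    """Invert a square matrix by row reducing [M | I] to [I | M^{-1}]."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(M)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def projection_matrix(A):
    """P_V = A (A^T A)^{-1} A^T, where the columns of A form a basis of V."""
    At = transpose(A)
    return matmul(matmul(A, inverse(matmul(At, A))), At)


# V = span{(1, 0, 1), (0, 1, 1)} in R^3; these vectors are the columns of A.
A = [[1, 0], [0, 1], [1, 1]]
P = projection_matrix(A)
assert matmul(P, P) == P   # projecting twice changes nothing
assert transpose(P) == P   # orthogonal projection matrices are symmetric
```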

Section 4.2

Definitions

• Let $V \subset \mathbb{R}^n$ be a subspace. A set $\{v_1, \dots, v_k\} \subset V$ is an orthogonal basis for $V$ if it is a basis whose elements are mutually orthogonal. It is an orthonormal basis if it is an orthogonal basis whose elements are unit vectors.

Main ideas

• An orthogonal set of nonzero vectors is linearly independent.

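• If $\{v_1, \dots, v_k\}$ is an orthogonal basis for a subspace $V \subset \mathbb{R}^n$, then for any $x \in V$
$$x = \operatorname{proj}_{v_1} x + \cdots + \operatorname{proj}_{v_k} x,$$
where $\operatorname{proj}_{v} x = \dfrac{x \cdot v}{v \cdot v}\, v$.

• If $\{v_1, \dots, v_k\}$ is an orthogonal basis for a subspace $V \subset \mathbb{R}^n$, then for any $x \in \mathbb{R}^n$
$$\operatorname{proj}_V x = \operatorname{proj}_{v_1} x + \cdots + \operatorname{proj}_{v_k} x.$$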
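The two bullets above translate into a short computation. In this sketch (helper names are hypothetical), `proj_onto_vector` implements $\operatorname{proj}_v x = \frac{x \cdot v}{v \cdot v} v$ and `proj_onto_subspace` adds these projections, refusing bases that are not orthogonal since the formula fails for them. The basis $(1, 0, 1), (-1, 2, 1)$ is an assumption for illustration.

```python
from fractions import Fraction


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def proj_onto_vector(x, v):
    """proj_v x = ((x . v) / (v . v)) v for a nonzero vector v."""
    c = Fraction(dot(x, v)) / dot(v, v)
    return [c * vi for vi in v]


def proj_onto_subspace(x, basis):
    """proj_V x = proj_{v_1} x + ... + proj_{v_k} x.

    This shortcut is only valid when the basis vectors are mutually orthogonal."""
    for i in range(len(basis)):
        for j in range(i + 1, len(basis)):
            if dot(basis[i], basis[j]) != 0:
                raise ValueError("basis is not orthogonal")
    total = [Fraction(0)] * len(x)
    for v in basis:
        total = [t + p for t, p in zip(total, proj_onto_vector(x, v))]
    return total


# Orthogonal basis v1 = (1, 0, 1), v2 = (-1, 2, 1) of a plane V in R^3.
basis = [[1, 0, 1], [-1, 2, 1]]
print(proj_onto_subspace([1, 1, 1], basis))
# [Fraction(2, 3), Fraction(2, 3), Fraction(4, 3)]
```

For a vector already in $V$, such as $(1, 2, 3)$, the same sum returns the vector itself, matching the first bullet.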


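• (Gram-Schmidt process) If $\{w_1, \dots, w_k\}$ is a basis for a subspace $V \subset \mathbb{R}^n$, the set $\{v_1, \dots, v_k\}$ defined by
$$\begin{aligned}
v_1 &= w_1\\
v_2 &= w_2 - \operatorname{proj}_{v_1} w_2\\
v_3 &= w_3 - \operatorname{proj}_{v_1} w_3 - \operatorname{proj}_{v_2} w_3\\
&\;\;\vdots\\
v_k &= w_k - \operatorname{proj}_{v_1} w_k - \cdots - \operatorname{proj}_{v_{k-1}} w_k
\end{aligned}$$
is an orthogonal basis for $V$.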
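The Gram-Schmidt formulas map directly onto a loop. This is a minimal sketch with a hypothetical `gram_schmidt` function using exact fractions; it returns an orthogonal (not normalized) basis and raises an error if the input vectors turn out to be linearly dependent.

```python
from fractions import Fraction


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def gram_schmidt(ws):
    """Turn a basis w_1, ..., w_k into an orthogonal basis v_1, ..., v_k.

    v_i = w_i minus its projections onto v_1, ..., v_{i-1}. The v_i are not
    normalized; divide each by its length to get an orthonormal basis."""
    vs = []
    for w in ws:
        v = [Fraction(x) for x in w]
        for u in vs:
            c = dot(w, u) / dot(u, u)  # coefficient of proj_u w
            v = [vi - c * ui for vi, ui in zip(v, u)]
        if all(x == 0 for x in v):
            raise ValueError("input vectors are linearly dependent")
        vs.append(v)
    return vs


vs = gram_schmidt([[1, 0, 1], [0, 1, 1]])
# v1 = (1, 0, 1) and v2 = (0, 1, 1) - (1/2)(1, 0, 1) = (-1/2, 1, 1/2)
assert dot(vs[0], vs[1]) == 0
```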

Section 4.3

Definitions

• The standard matrix of a linear transformation $T : \mathbb{R}^n \to \mathbb{R}^m$ is the $m \times n$ matrix whose columns are $T(e_1), \dots, T(e_n)$, where $\{e_1, \dots, e_n\}$ is the standard basis of $\mathbb{R}^n$.

• Let $T : \mathbb{R}^n \to \mathbb{R}^n$ be a linear transformation and let $B = \{v_1, \dots, v_n\}$ be a basis for $\mathbb{R}^n$. The matrix of $T$ with respect to $B$ is the matrix $[T]_B$ whose columns are the $B$-coordinate vectors of $T(v_1), \dots, T(v_n)$.

• Two $n \times n$ matrices $A$ and $B$ are similar if there exists an invertible matrix $P$ such that $A = PBP^{-1}$.

• A matrix $A$ is diagonalizable if it is similar to a diagonal matrix.

Main ideas

• Let $T : \mathbb{R}^n \to \mathbb{R}^n$ be a linear transformation with standard matrix $[T]_{\text{stand}}$. Let $B = \{v_1, \dots, v_n\}$ be a basis for $\mathbb{R}^n$ and let $[T]_B$ be the matrix of $T$ with respect to $B$. Let $P$ be the matrix whose columns are $v_1, \dots, v_n$. Then
$$[T]_{\text{stand}} = P[T]_B P^{-1}.$$
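To see the change-of-basis formula in action, the sketch below takes a hypothetical example: reflection of $\mathbb{R}^2$ across the line $y = x$, with $B = \{(1, 1), (1, -1)\}$. In this basis $[T]_B$ is diagonal, and $P[T]_B P^{-1}$ recovers the standard matrix, which also shows that $[T]_{\text{stand}}$ is diagonalizable. The helper names are illustrative assumptions.

```python
from fractions import Fraction


def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]


def inverse_2x2(M):
    """Inverse of [[a, b], [c, d]] is (1 / (ad - bc)) [[d, -b], [-c, a]]."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]


# T is reflection across y = x. With v1 = (1, 1), v2 = (1, -1):
# T(v1) = v1 and T(v2) = -v2, so [T]_B is diagonal.
P = [[1, 1], [1, -1]]     # columns are v1 and v2
T_B = [[1, 0], [0, -1]]
T_stand = matmul(matmul(P, T_B), inverse_2x2(P))
assert T_stand == [[0, 1], [1, 0]]  # swaps the two coordinates
```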


Section 4.4

Definitions

Let $T : V \to W$ be a linear transformation.

• The set
$$\ker(T) = \{v \in V : T(v) = 0\}$$
is called the kernel of $T$. The set
$$\operatorname{image}(T) = \{w \in W : T(v) = w \text{ for some } v \in V\}$$
is called the image of $T$.

• The map $T$ is one-to-one if $T(v_1) = T(v_2)$ implies $v_1 = v_2$. The map $T$ is onto if for every $w \in W$ there exists $v \in V$ such that $T(v) = w$.

• If $T$ is both one-to-one and onto, then it is called an isomorphism.

• Let $B_1 = \{v_1, \dots, v_n\}$ and $B_2 = \{w_1, \dots, w_m\}$ be bases for $V$ and $W$, respectively. The matrix of $T$ with respect to $B_1$ and $B_2$ is the $m \times n$ matrix whose columns are the $B_2$-coordinate vectors of $T(v_1), \dots, T(v_n)$.

Main ideas

Let $T : V \to W$ be a linear transformation.

• The map $T$ is one-to-one if and only if $\ker T = \{0\}$.

• The map $T$ is onto if and only if $\operatorname{image}(T) = W$.

• The map $T$ is an isomorphism if and only if there exists a map $S : W \to V$ such that $T \circ S(w) = w$ for all $w \in W$ and $S \circ T(v) = v$ for all $v \in V$.

• Let $V$ be a finite dimensional vector space and let $B = \{v_1, \dots, v_n\}$ be a basis for $V$. Then the map $C_B : V \to \mathbb{R}^n$ that assigns to each vector $v \in V$ its $B$-coordinate vector is an isomorphism. That is, for any $v \in V$ there exist unique scalars $c_1, \dots, c_n$ such that $v = c_1 v_1 + \cdots + c_n v_n$, and the map $C_B$ is given by
$$C_B(v) = \begin{bmatrix} c_1 \\ c_2 \\ \vdots \\ c_n \end{bmatrix}.$$
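Computing $C_B(v)$ amounts to solving a linear system whose coefficient matrix has the basis vectors as columns. A sketch, assuming vectors are given by their entries in some fixed reference basis (below, the coefficients of polynomials in $P_2$); the basis $\{1, 1 + t, 1 + t + t^2\}$ and the helper names are illustrative assumptions.

```python
from fractions import Fraction


def solve(M, rhs):
    """Solve M x = rhs for square, invertible M by Gauss-Jordan elimination."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("vectors do not form a basis")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[-1] for row in aug]


def coordinates(basis, v):
    """C_B(v): the unique c with c_1 b_1 + ... + c_n b_n = v."""
    n = len(basis)
    M = [[basis[j][i] for j in range(n)] for i in range(n)]  # columns are b_j
    return solve(M, v)


def from_coordinates(basis, c):
    """C_B^{-1}(c) = c_1 b_1 + ... + c_n b_n."""
    return [sum(ci * b[i] for ci, b in zip(c, basis)) for i in range(len(basis[0]))]


# B = {1, 1 + t, 1 + t + t^2} in P_2, written as coefficient lists [a0, a1, a2].
B = [[1, 0, 0], [1, 1, 0], [1, 1, 1]]
f = [2, 5, 3]  # f(t) = 2 + 5t + 3t^2
c = coordinates(B, f)
print(c)  # [Fraction(-3, 1), Fraction(2, 1), Fraction(3, 1)]
assert from_coordinates(B, c) == f  # C_B^{-1}(C_B(f)) = f
```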


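• Let $B_1, B_2$ be bases for $V$ and $W$, respectively, with $n = \dim V$ and $m = \dim W$, and let $A$ be the matrix of $T$ with respect to $B_1, B_2$. Let $\mu_A : \mathbb{R}^n \to \mathbb{R}^m$ be the linear map given by $\mu_A(x) = Ax$. Then
$$T = C_{B_2}^{-1} \circ \mu_A \circ C_{B_1}.$$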
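A sketch of how the matrix of $T$ is assembled column by column, using differentiation $d/dt : P_3 \to P_2$ as a hypothetical example (it is not one of the maps in these notes). With the standard monomial bases, $C_{B_1}$ and $C_{B_2}$ simply read off coefficients, so the identity $T = C_{B_2}^{-1} \circ \mu_A \circ C_{B_1}$ reduces to checking that $A$ times the coefficients of $f$ equals the coefficients of $f'$. The helper names are assumptions.

```python
def derivative(f):
    """d/dt of a polynomial stored as coefficients [a0, a1, ..., an]."""
    return [k * f[k] for k in range(1, len(f))]


def matrix_of(T, n, m):
    """Matrix of T : V -> W w.r.t. monomial bases, where dim V = n and dim W = m.

    Column j is the coordinate vector of T(t^j)."""
    columns = []
    for j in range(n):
        basis_poly = [1 if k == j else 0 for k in range(n)]  # t^j
        image = T(basis_poly)
        columns.append(image + [0] * (m - len(image)))       # pad to length m
    return [[columns[j][i] for j in range(n)] for i in range(m)]


def mu(A, x):
    """mu_A(x) = A x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


# T = d/dt : P_3 -> P_2 with bases {1, t, t^2, t^3} and {1, t, t^2}.
A = matrix_of(derivative, 4, 3)
print(A)  # [[0, 1, 0, 0], [0, 0, 2, 0], [0, 0, 0, 3]]

f = [5, 1, 4, 2]  # 5 + t + 4t^2 + 2t^3
assert mu(A, f) == derivative(f)  # both give 1 + 8t + 6t^2
```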

Section 5.1

Definitions

• A determinant map is a map $\det : M_{n\times n} \to \mathbb{R}$ with the following properties:

– linear in each row;
– antisymmetric (i.e., swapping two rows changes the sign of the determinant);
– $\det I = 1$.

Main ideas

Let $A$ be an $n \times n$ matrix.

• Let $A'$ be obtained from $A$ by a swap elementary row operation. Then $\det A' = -\det A$.

• Let $A'$ be obtained from $A$ by a scale elementary row operation with scalar $c$. Then $\det A' = c \det A$.

• Let $A'$ be obtained from $A$ by a replace elementary row operation. Then $\det A' = \det A$.

• $\det A \ne 0$ if and only if $A$ is nonsingular.

• If $A$ is upper or lower triangular, then $\det A$ is the product of the diagonal entries of $A$.

• Let $E$ be an elementary matrix. Then $\det(EA) = \det E \det A$.

• Let $A$ and $B$ be $n \times n$ matrices. Then $\det(AB) = \det A \det B$.

• If $A$ is nonsingular, then $\det(A^{-1}) = \dfrac{1}{\det A}$.

• $\det A^\top = \det A$.

• If two rows of $A$ are equal, then $\det A = 0$.
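The row operation rules give an efficient way to compute determinants: reduce to upper triangular form, flip the sign on each swap, and multiply the diagonal entries. A minimal sketch (the function name and example matrix are hypothetical) that uses only swaps and replacements, so no scale factors need to be tracked:

```python
from fractions import Fraction


def det_by_row_reduction(A):
    """Compute det A by reducing A to upper triangular form.

    A swap flips the sign, a replace leaves det unchanged, and the
    determinant of a triangular matrix is the product of its diagonal."""
    M = [[Fraction(x) for x in row] for row in A]
    n = len(M)
    sign = 1
    for col in range(n):
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)  # no pivot in this column, so A is singular
        if pivot != col:
            M[col], M[pivot] = M[pivot], M[col]  # swap: sign changes
            sign = -sign
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            M[r] = [a - factor * b for a, b in zip(M[r], M[col])]  # replace
    product = Fraction(1)
    for i in range(n):
        product *= M[i][i]
    return sign * product


A = [[0, 2, 1], [1, 1, 0], [2, 0, 3]]
print(det_by_row_reduction(A))  # -8
```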


Section 5.2

Definitions

• The $ij$th cofactor of an $n \times n$ matrix $A$ is the scalar $C_{ij}$ given by
$$C_{ij} = (-1)^{i+j} \det A_{ij},$$
where $A_{ij}$ is the $(n-1) \times (n-1)$ submatrix of $A$ formed by deleting the $i$th row and $j$th column of $A$.

Main ideas

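• Let $A = [a_{ij}]$ be an $n \times n$ matrix. Then for any fixed row index $i$,
$$\det A = \sum_{j=1}^{n} a_{ij} C_{ij} \qquad \text{(expansion along row } i\text{)},$$
and for any fixed column index $j$,
$$\det A = \sum_{i=1}^{n} a_{ij} C_{ij} \qquad \text{(expansion along column } j\text{)}.$$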

• There exists a unique determinant map given by the formulas above.
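The expansion formulas can be written recursively. In this sketch the helper names are hypothetical and indices start at 0, which leaves the sign $(-1)^{i+j}$ unchanged; the example expands the same matrix along every row and every column to illustrate that all expansions agree.

```python
def minor(A, i, j):
    """A_ij: delete row i and column j (0-indexed)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]


def cofactor(A, i, j):
    """C_ij = (-1)^(i+j) det A_ij."""
    return (-1) ** (i + j) * det(minor(A, i, j))


def det(A):
    """Cofactor expansion along the first row."""
    n = len(A)
    if n == 0:
        return 1  # convention: the empty matrix has determinant 1
    return sum(A[0][j] * cofactor(A, 0, j) for j in range(n))


A = [[0, 2, 1], [1, 1, 0], [2, 0, 3]]
n = len(A)
rows = [sum(A[i][j] * cofactor(A, i, j) for j in range(n)) for i in range(n)]
cols = [sum(A[i][j] * cofactor(A, i, j) for i in range(n)) for j in range(n)]
print(rows, cols)  # [-8, -8, -8] [-8, -8, -8]
```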

• (Cramer’s rule) Let $A$ be a nonsingular $n \times n$ matrix, and let $b \in \mathbb{R}^n$. Then the unique solution $x = (x_1, \dots, x_n)^\top$ of the equation $Ax = b$ is given by
$$x_i = \frac{\det B_i}{\det A},$$
where $B_i$ is the matrix obtained by replacing the $i$th column of $A$ by $b$.
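A direct transcription of Cramer's rule, as a sketch that assumes integer (or `Fraction`) entries; the `det` helper uses cofactor expansion along the first row, and the example system is hypothetical. Cramer's rule is convenient for small systems by hand, but it needs $n + 1$ determinants, so row reduction is usually preferred for larger ones.

```python
from fractions import Fraction


def det(A):
    """Determinant by cofactor expansion along the first row."""
    if len(A) == 0:
        return 1
    return sum((-1) ** j * A[0][j] * det([row[:j] + row[j + 1:] for row in A[1:]])
               for j in range(len(A)))


def cramer(A, b):
    """Solve Ax = b by Cramer's rule: x_i = det B_i / det A."""
    d = det(A)
    if d == 0:
        raise ValueError("Cramer's rule needs a nonsingular matrix")
    x = []
    for i in range(len(A)):
        # B_i: replace column i of A by b
        B_i = [row[:i] + [b_k] + row[i + 1:] for row, b_k in zip(A, b)]
        x.append(Fraction(det(B_i), d))
    return x


A = [[2, 1], [1, 3]]
b = [3, 5]
print(cramer(A, b))  # [Fraction(4, 5), Fraction(7, 5)]
```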

• Let $A$ be a nonsingular matrix, and let $C = [C_{ij}]$ be the matrix of cofactors of $A$. Then
$$A^{-1} = \frac{1}{\det A}\, C^\top.$$
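A sketch of the cofactor formula for $A^{-1}$, assuming integer entries and hypothetical helper names. Note the transpose: entry $(i, j)$ of $A^{-1}$ is $C_{ji}/\det A$.

```python
from fractions import Fraction


def minor(A, i, j):
    """Delete row i and column j (0-indexed)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]


def det(A):
    """Determinant by cofactor expansion along the first row."""
    if len(A) == 0:
        return 1
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def inverse_by_cofactors(A):
    """A^{-1} = (1 / det A) C^T, where C is the matrix of cofactors of A."""
    n = len(A)
    d = det(A)
    if d == 0:
        raise ValueError("matrix is singular")
    C = [[(-1) ** (i + j) * det(minor(A, i, j)) for j in range(n)] for i in range(n)]
    # Entry (i, j) of C^T is C[j][i].
    return [[Fraction(C[j][i], d) for j in range(n)] for i in range(n)]


A = [[2, 1], [1, 3]]
print(inverse_by_cofactors(A))
# [[Fraction(3, 5), Fraction(-1, 5)], [Fraction(-1, 5), Fraction(2, 5)]]
```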

Comments

The exam will have roughly six questions, some with multiple parts, including both computational and theoretical questions. Most questions will be similar to those in the sample exam below, but there may also be something new. Before anything else, be comfortable with the main ideas outlined for each section above.


Sample Exam

1. Find the closest point to $y = (3, 1, 5, 1)^\top$ in the subspace of $\mathbb{R}^4$ spanned by $v_1 = (3, 1, -1, 1)$ and $v_2 = (1, -1, 1, -1)$. What is the distance from $y$ to this subspace?

2. Let $W$ be the subspace of $\mathbb{R}^3$ given by $W = \{(x_1, x_2, x_3) : x_1 + 2x_2 + 3x_3 = 0\}$.

(a) Find an orthonormal basis $\{b_1, b_2\}$ for $W$.

(b) Find a third unit vector $b_3$ that is orthogonal to both $b_1$ and $b_2$, so that $B = \{b_1, b_2, b_3\}$ is an orthonormal basis for $\mathbb{R}^3$.

(c) Let $T : \mathbb{R}^3 \to \mathbb{R}^3$ be the linear transformation of rotation by $\pi/3$ about the axis spanned by $b_3$ in the direction governed by the right-hand rule. Write the matrix of $T$ with respect to $B$.

(d) Write the matrix of $T$ with respect to the standard basis.

3. Consider the linear transformation $T : P_3 \to \mathbb{R}^2$ given by
$$T(f) = \begin{bmatrix} \int_0^1 f(t)\,dt \\ f'(1) \end{bmatrix}.$$

(a) Compute the matrix of $T$ with respect to the standard bases for $P_3$ and $\mathbb{R}^2$.

(b) Find the dimensions of $\ker T$ and $\operatorname{image} T$.

(c) State whether $T$ is one-to-one, onto, or both.

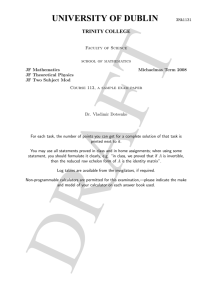

4. Let

5

0

A=

0

0

0

0

−2

1

,

1 −2

2

2

0

2

2

1

0

0

B=

0

0

0

2

2

1

5

0

−2

1

.

1 −2

2

2

Compute det A and det B.

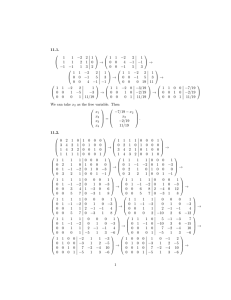

5. Let

0

0

C=

1

0

1

0

0

0

0

1

0

0

0

0

,

0

2

1

0

D=

0

0

Compute det C and det D.

6. Let

1

A= 2

1

7

2

3

4

1

0 .

2

3

2

0

0

5 −9

−2

1

.

1 −2

0

2

(a) Find the solution to $Ax = b$ with $b = (1, 2, -1)^\top$ using Cramer’s rule.

(b) Find $A^{-1}$ using cofactors.

7. Let $A$ be an $n \times n$ matrix. Use the fact that $\det A^\top = \det A$ and a homework problem to prove that if $A$ has a column of zero entries, then $\det A = 0$.

8. Let $A$ be an $n \times n$ matrix with integer entries.

(a) Explain why $\det A$ must be an integer.

(b) Suppose $A^{-1}$ also has integer entries. Prove that $\det A = \pm 1$. [Hint: Use the fact that $\det A^{-1} = 1/\det A$.]

9. Let $A$ and $B$ be $4 \times 4$ matrices and suppose $\det A = 5$ and $\det B = 3$. Compute the following if possible.

(a) $\det A^\top$
(b) $\det A^{-1}$
(c) $\det(AB)$
(d) $\det(A + B)$
(e) $\det(2A)$
(f) $\det(-B)$
