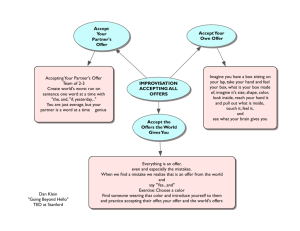

Improvisational Interaction
