An Exploratory Analysis of a Large Health Cohort Study Using

Bayesian Networks

By Delin Shen

S.B. Biomedical Engineering, S.B. Mechanical Engineering

Tsinghua University, 1994

S.M. Biomedical Engineering

Tsinghua University, 1997

SUBMITTED TO THE HARVARD-MIT DIVISION OF HEALTH SCIENCES AND

TECHNOLOGY IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE

DEGREE OF

DOCTOR OF PHILOSOPHY IN MEDICAL ENGINEERING

AT THE

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

February 2006

© 2006 Massachusetts Institute of Technology

All rights reserved


Signature of Author ...................................

..................................................

Harvard-MIT Division of Health Sciences and Technology

January 26, 2006

Certified by ..................................................

Peter Szolovits, Ph.D.

Professor of Computer Science and Engineering

Professor of Health Sciences and Technology

Thesis Supervisor

Accepted by ..................................................

Martha L. Gray, Ph.D.

Edward Hood Taplin Professor of Medical and Electrical Engineering

Co-Director, Harvard-MIT Division of Health Sciences and Technology

An Exploratory Analysis of a Large Health Cohort Study Using Bayesian Networks

By Delin Shen

Submitted to the Harvard-MIT Division of Health Sciences and Technology on January 26, 2006

in partial fulfillment of the requirements for the degree of

Doctor of Philosophy in Health Sciences and Technology

ABSTRACT

Large health cohort studies are among the most effective ways of studying the causes, treatments

and outcomes of diseases by systematically collecting a wide range of data over long periods. The

wealth of data in such studies may yield important results in addition to the already numerous

findings, especially when subjected to newer analytical methods.

Bayesian Networks (BN) provide a relatively new method of representing uncertain relationships

among variables, using the tools of probability and graph theory, and have been widely used in

analyzing dependencies and the interplay between variables. We used BN to perform an

exploratory analysis on a rich collection of data from one large health cohort study, the Nurses'

Health Study (NHS), with the focus on breast cancer.

We explored the data from the NHS using BN to look for breast cancer risk factors, including a

group of Single Nucleotide Polymorphisms (SNP). We found no association between the SNPs

and breast cancer, but found a dependency between clomid and breast cancer. We evaluated clomid

as a potential risk factor after matching on age and number of children. Our results showed for

clomid an increased risk of estrogen receptor positive breast cancer (odds ratio 1.52, 95% CI

1.11-2.09) and a decreased risk of estrogen receptor negative breast cancer (odds ratio 0.46, 95%

CI 0.22-0.97).

We developed breast cancer risk models using BN. We trained models on 75% of the data, and

evaluated them on the remaining 25%. Because of the clinical importance of predicting risks for

Estrogen Receptor positive and Progesterone Receptor positive breast cancer, we focused on this

specific type of breast cancer to predict two-year, four-year, and six-year risks. The concordance

statistics of the prediction results on test sets are 0.70 (95% CI: 0.67-0.74), 0.68 (95% CI:

0.64-0.72), and 0.66 (95% CI: 0.62-0.69) for two, four, and six year models, respectively.

We also evaluated the calibration performance of the models, and applied a filter to the output to

improve the linear relationship between predicted and observed risks using Agglomerative

Information Bottleneck clustering without sacrificing much discrimination performance.

Thesis Supervisor: Peter Szolovits, Ph.D.

Title: Professor of Computer Science and Engineering

Professor of Health Sciences and Technology


To my grandmother,

my parents,

and my wife


Acknowledgments

There are many people who have helped me during my thesis research. First and foremost, I

would like to thank my thesis supervisor, Professor Peter Szolovits, of the MIT Computer Science

and Artificial Intelligence Lab. I am grateful for his continuous guidance in learning and doing

research, his careful reading of my thesis, and especially his moral support during my study at

MIT. From time to time, I had challenges in my research work, and talking with Peter always

helped me to overcome the difficulties, while his sense of humor made it easier for me to deal

with the pressure. I would also very much like to thank my mentor and friend, Professor Marco

Ramoni, of the Harvard Medical School and Children's Hospital, especially for his tremendous

help with my research and the encouragement I received from his own experiences. Marco spent

a large amount of time discussing the research with me and going through my presentations with me;

without his help I could never have finished the thesis work. I am also grateful to Professor Graham

Colditz, of the Harvard School of Public Health, whose insights and expertise in breast cancer

and the Nurses' Health Study were instrumental in both my research and thesis defense. Finally, I

cannot continue without expressing my lasting gratitude to Professor Tommi Jaakkola, of the MIT

Computer Science and Artificial Intelligence Lab, and Professor Lucila Machado, of the Harvard

Medical School and Brigham and Women's Hospital, who gave me invaluable feedback and

suggestions on how to apply machine learning techniques.

I am grateful to the members of the Channing Lab, who gave me so much help and support

during the research. Dr. Karen Corsano helped me to get familiar with the Nurses' Health Study,

and always answered my questions promptly. Lisa Li spent a tremendous amount of time extracting

the data for our research, on top of her already heavy daily workload. Dr. David

Hunter and Rong Chen provided and helped me understand the genomics data. Marion McPhee

helped me with the log-incidence model and provided related data. Their contributions are all

crucial to this thesis work. I would also like to thank all MEDG members, past and present, at the

MIT Computer Science and Artificial Intelligence Lab, who have each helped in their individual

ways. I would especially like to mention Fern DeOliveira, our awesome assistant, for always

being there with prompt help.

I am indebted to my friends and family who have continued to believe in me, and who have kept

me sane, entertained, and happy. I would particularly like to thank Alfred Falco, Wei Guo, Jinlei

Liu, Jingsong Wu, Chao Wang, Yi Zhang, Hao Wang, Selina Lam, Jianyi Cui, Jing Wang, Minqu

Deng, Xingchen Wang, and Song Gao.

This thesis is based upon work supported in part by an HST-MEMP fellowship, the Defense

Advanced Research Projects Agency grant F30602-99-1-0509, and the National Institutes of

Health grant U54LM008748-01.


Table of Contents

Chapter 1 Introduction
Chapter 2 Background
2.1 Nurses' Health Study
2.2 Breast Cancer Models
2.3 Evaluation and Validation of Breast Cancer Models
2.4 Breast Cancer and Genomics
2.5 Machine Learning Techniques
2.6 Bayesian Networks
2.7 Bayesware Discoverer
Chapter 3 Landscaping Clinical and Life-style and Genotypic Data
3.1 Landscaping Clinical and Life-style Variables and SNPs
3.1.1 Data
3.1.2 Exploring the SNPs and Breast Cancer
3.1.3 Exploring Clinical and Life-style Factors and SNPs
3.2 Estrogen Receptor Status and Clomid
3.3 Summary
Chapter 4 Breast Cancer Model
4.1 Log-Incidence Model for Breast Cancer
4.2 Evaluating Risk Score as an Index for Breast Cancer
4.3 Learning a Classifier for Breast Cancer Incidence
4.4 Classifier for ER+/PR+ Breast Cancer
4.5 Evaluation of Risk Prediction Model
4.6 Filtering of Risk Prediction Probabilities Based on Clustering
4.7 Classifiers Predicting Long Term Risks
4.8 Summary
Chapter 5 Discussion
5.1 On Exploratory Analysis
5.1.1 Expert Knowledge
5.1.2 Exploration of the Dependencies in Learned Bayesian Networks
5.1.3 Split of Training and Test Set
5.1.4 Discretization and Consolidation of Variable Values
5.2 On Learning Bayesian Networks from Data
5.2.1 Reading a Bayesian Network Learned From Data
5.2.2 Bayesian Network and Highly Interdependent Data
5.3 On Evaluation of Risk Predicting Models
5.3.1 Evaluation of Models
5.3.2 Comparison with the Model in Clinical Use
5.3.3 Clustering of Bayesian Network Prediction
5.4 Summary of Contributions
5.5 Future Work
5.5.1 Data Pre-processing
5.5.2 Evaluation of Risk Score As an Index for Breast Cancer
5.5.3 Dealing with Highly Interdependent Data
5.5.4 Prediction of 5-year Risks
5.5.5 Clomid and Breast Cancer
Bibliography

List of Figures

Figure 1 Bayesian Network structures of three variables
Figure 2 Bayesian Network of 12 SNPs and breast cancer
Figure 3 Bayesian Network for interactions among clinical and life-style variables and SNPs
Figure 4 Clomid use and breast cancer with ER status
Figure 5 Comparison of birth year between nurses who used and never used clomid
Figure 6 Comparison of number of children on clomid use
Figure 7 Bayesian Network learned for evaluating risk score as an index for breast cancer
Figure 8 Bayesian Network for predicting breast cancer
Figure 9 Bayesian Network for predicting ER+/PR+ breast cancer
Figure 10 ROC curve of ER+/PR+ breast cancer prediction on test set
Figure 11 Calibration curve of ER+/PR+ breast cancer prediction on test set
Figure 12 Clustering of prediction on training set
Figure 13 ROC curve after filtering on test set
Figure 14 Calibration curve after filtering on test set
Figure 15 Bayesian Network for predicting 4-year risk of ER+/PR+ breast cancer
Figure 16 Bayesian Network for predicting 6-year risk of ER+/PR+ breast cancer

List of Tables

Table 1 Variable list for exploratory analysis
Table 2 Variable orders for exploring clinical and life-style factors and SNPs
Table 3 Clomid and breast cancer with ER status, no matching
Table 4 Clomid and breast cancer with ER status, matched on age for clomid use
Table 5 Clomid and breast cancer with ER status, matched on age and parity for clomid use
Table 6 Variable counts in Markov Blankets of breast cancer in ten networks with permuted order
Table 7 Variable order
Table 8 Prediction results of ER+/PR+ breast cancer
Table 9 Prediction results on test set after filtering
Table 10 Prediction of 2-year, 4-year, and 6-year risk models for ER+/PR+ breast cancer
Table 11 Prediction after filtering of 2-year, 4-year, and 6-year ER+/PR+ risk models

Chapter 1 Introduction

Health care is one of the major concerns of modern society, and much effort has been invested in

studying the causes, treatments and outcomes of disease. Large health cohort studies are among

the most effective, because they follow a large and relatively stable population over a long period

of time and systematically collect comparable longitudinal data, sometimes over decades. There

are numerous large health cohort studies under way, such as the Framingham Heart Study

from 1948, the British Doctors Study from 1954, the Dunedin Multidisciplinary Health and

Development Study started in 1972, and the Nurses' Health Study since 1976. Such studies have

led to numerous important insights and many publications, and they often form the basis for

health care recommendations and policies. We suspect that the wealth of data in such studies may

yet yield many additional important results, especially when subjected to newer analytical

methods.

In order to work efficiently, we focused our analysis on breast cancer, one of the most frequently

diagnosed cancers in the US, and one of the motivating diseases for the Nurses' Health Study. We

explored the data from the Nurses' Health Study using Bayesian Networks to look for potential

risk factors for breast cancer, and also developed and evaluated risk predicting models of breast

cancer.

Bayesian Networks provide a relatively new method of representing uncertain relationships

among variables, using the tools of probability and graph theory. Such a network allows a concise

representation of probabilistic dependencies and is often used to model potential causal pathways.

We have used heuristic techniques that automatically induce a Bayesian Network that fits

observational data to suggest the likely dependencies in the data.

Bayesian Networks have been

widely used in analyzing such dependencies and the interplay between variables. In this work, we


have used Bayesian Networks to perform an exploratory analysis on a rich collection of data from

one large health cohort study, the Nurses' Health Study [1].

This thesis is organized into several major chapters. We first introduce the background of this

research in Chapter 2, including the Nurses' Health Study, a large health cohort study from which

we obtained the data, the tools we used to explore the data, a brief literature review of breast

cancer models and their evaluation, and a brief introduction to machine learning and Bayesian

Networks.

In Chapter 3, we describe the exploratory analysis performed on the first data sets we obtained,

including a proof of concept Bayesian Network, dependency analysis among clinical and

life-style variables and a small set of genotypic data, and a finding of association between clomid

use and breast cancer with specific estrogen receptor status.

Chapter 4 presents a group of risk predicting models for breast cancer based on the same data

used to derive a log-incidence model previously published by Colditz et al. We built the new

models using Bayesian Networks, an approach different from the original log-incidence model.

The models were evaluated on discrimination and calibration abilities. We also used

agglomerative information bottleneck clustering to filter the prediction results, and achieved

an improved linear relationship between the predicted risks and observed risks.

Chapter 5 discusses issues we encountered in this work, summarizes lessons learned from the

research, and suggests some possible directions for future research.


Chapter 2 Background

This chapter introduces the background of our research, including a brief introduction to breast

cancer and breast cancer models, Bayesian Networks and other machine learning techniques we

employed in our research, and the Nurses' Health Study data on which we based our study.

2.1 Nurses' Health Study

The Nurses' Health Study (NHS), established in 1976 by Dr. Frank Speizer and funded by the

National Institutes of Health, is among the "largest prospective investigations into the risk factors

for major chronic diseases in women" [1]. Registered nurses were selected to be followed

prospectively because they were anticipated to be able to "respond with a high degree of accuracy

to brief, technically-worded questionnaires and would be motivated to participate in a long term

study" due to their nursing education [1]. A short questionnaire with health-related questions is

sent to the members every two years starting from 1976, and a long questionnaire with food

frequency questions is sent every four years starting from 1980. Questions about quality of life

have been included in the questionnaires since 1992. In 1989, 33,000 blood samples were collected; they are

stored and used in case/control analyses. A total of 2448 blood samples have been genotyped at 47 Single

Nucleotide Polymorphism (SNP) sites [1][2].

With a follow-up rate exceeding 90% [3], the Nurses' Health Study has a very good longitudinal

record of phenotypes and clinical and life-style factors, including physical data, health status, life

styles, nutritional intake, family history, etc. Questionnaires were sent to the nurses every other

year, and the data available to this study are generally from 1976 to 2000, except for those who

passed away or left the study for other reasons.

The collected variables can be divided into different categories, and below is an incomplete but

indicative list (with the focus on breast cancer).


* general information: year of birth, month of birth, race, height, weight, geographic location, education, parity (number of children), age at first birth, breast feeding history, weight of heaviest child, father's occupation, marital status, husband's education, living status, years worked in operating room, total number of years on night shifts, hours of sleep, sleeping position, snoring, birth weight, breast fed in infancy, and handedness

* clinically related information: smoking status, age at start of smoking, passive smoking, body mass index, waist and hip measurement, age at menopause, type of menopause, post menopausal hormone use, oral contraceptive use, breast cancer history of mother and sisters, total activity score, menstrual cycle age, regularity of period, tan or sunburn, tan or sunburn as a child, moles, lipstick use, breast implant, multi-vitamin use, social and psychological characteristics

* medical history: alcohol dependency problem, aspirin use, tagamet use, tubal ligation, clomid use, tamoxifen use, talcum powder use, hip or arm fracture, TB test, elevated cholesterol, heart disease, high blood pressure, diabetes, cancer report, lung cancer, ovarian cancer, DES treatment, breast cancer diagnosis and type, estrogen receptor and progesterone receptor test

* diet information: different kinds of food intake

Many valuable medical findings have originated from this study, though contemporary machine

learning techniques (except logistic regression) were rarely employed. Therefore, we hope that by

exploring the data from NHS using modern machine learning tools we can find interesting new

medical evidence, e.g. develop a new breast cancer model, or learn helpful lessons from such

an exploratory analysis.

2.2 Breast Cancer Models

Breast cancer has been the most frequently diagnosed and the second most deadly cancer in

women in the US for many years. In 1998, breast cancer constituted about 30% of all cancers and


caused about 16% of cancer deaths in women. [1] Recently available statistics from the American

Cancer Society estimate that more than two hundred thousand newly diagnosed cases and more

than forty thousand deaths resulted from breast cancer in 2004. [6]

The effort to understand the risk factors of breast cancer, and one step further, to predict breast

cancer risks, can be traced back to the late 1960s. [7-11] In early studies, it was first recognized that

age at menarche (the onset of menstruation), age at first birth, and age at menopause are three

major risk factors for breast cancer. Generally, early age at menarche and late age at menopause

are considered to be associated with higher risk of breast cancer, while early first full-term

pregnancy is associated with lower risk. Postmenopausal weight, family history, duration of

having a menstrual period, age, pregnancy history, and other risk factors were also investigated in

later studies. [13-21]

Beginning in the early 1980s, scientists started to build mathematical models that can predict a

probability, or risk, of getting breast cancer. Interestingly enough, most of these research efforts

fall into two groups. Some of them focused on the inheritance of breast cancer, building models

based on family history and/or genotype data. The others tried to build the model by combining

individual risk factors, mostly reproductive variables such as age at menarche, age at menopause,

parity, etc.

Ottman et al. published a simple model in 1983 that calculates a probability of breast cancer

diagnosis for mothers and sisters of breast cancer patients. [24] They used life-table analysis to

estimate the cumulative risks to various ages based upon two groups of patients from the Los

Angeles County Cancer Surveillance Program, then derived a probability within each decade

between ages 20 and 70 for mothers and sisters of the patients, according to the age of diagnosis

of the patient and whether the disease was bilateral or unilateral.


Claus et al. developed a genetic model to estimate age-specific breast cancer risks for women

with at least one relative with breast cancer. [25-26] The data they used were from the Cancer and

Steroid Hormone (CASH) Study, a population-based, case-control study evaluating the impact of

oral contraceptives on the risk of breast cancer. The model derived risk estimates based on the

relative's age at diagnosis and the degree of relationship of the relative(s). Their results showed

that women with first-degree relatives who were diagnosed with breast cancer at early ages have

very high lifetime risks of breast cancer. They also suggested BRCA1 susceptibility for breast

cancer.

Inspired by the discovery of breast cancer susceptibility genes BRCA1 and BRCA2 between

1994 and 1995, risk models were also developed to predict the probability that an individual

might be a carrier of a mutant gene, either the known BRCA1 and BRCA2, or a hypothetical

unknown gene BRCAu.

Couch et al. examined families with at least two breast cancer cases for germline mutations in

BRCA1, and built a model with logistic regression, using average age at breast cancer diagnosis,

ovarian cancer history, and Ashkenazi Jewish ancestry as risk factors. [29] Shattuck-Eidens et al.

developed a similar model on a different group of families, but without the limitation to a family

history of breast cancer. [30] Using logistic regression, Frank et al. identified ovarian cancer,

bilateral breast cancer, and age of diagnosis for breast cancer before 40 as predictors for both

BRCA1 and BRCA2. [31] Parmigiani et al. developed a Bayesian model to evaluate the

probabilities that a woman is a carrier of a mutation of BRCA1 and BRCA2 using breast and

ovarian cancer history of first and second degree relatives as predictors. [33]

In the literature, there are many more research projects trying to identify carriers of a mutant gene

based on family history, than those trying to predict the risk of breast cancer using genotype data.

This is probably due to the high cost of genotyping and the unavailability of a reliable genotype


data set in the past. As indicated by Parmigiani, it cost $2400 to test for both BRCA1 and

BRCA2 in 1997. [33] Recently, however, scientists started to build models to predict breast cancer

risk using BRCA1 and BRCA2. For example, Tyrer et al. published a model that incorporated

BRCA1, BRCA2, and a hypothetical low penetrance gene, as well as some personal risk factors.

[39] No doubt in the foreseeable future we will see more and more models using genotypic data.

In the other group using individual risk factors, Moolgavkar et al. proposed one of the earliest

risk prediction models in 1980, which predicts age-specific incidence of breast cancer in females,

based upon physiologic response of breast tissue to menarche, menopause, and pregnancy on the

cellular level. [18] They suggested a two-stage model that incorporates growth of breast tissues to

derive an age-specific incidence curve, which can explain with close quantitative agreement the

observed risk due to age at menarche, age at menopause, and parity in a combined data set

including data from Connecticut, Denmark, Osaka (Japan), Iceland, Finland, and Slovenia. This

"tissue aging theory" has been modified and extended in many later research projects.

Pike et al. proposed a quantitative description of "breast tissue age" based on age at menarche,

first full-term pregnancy, and age at menopause, which fits well a linear log-log relationship

between breast cancer incidence and age. [23] The Pike model assumes that breast tissue aging starts

at menarche at a constant rate f0, drops after the first full-term pregnancy to another constant f,

then decreases linearly from age 40, which they called the perimenopausal period, to the last

menstrual period, and finally stays constant at that level.
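The piecewise description above can be made concrete with a short sketch. This is only an illustration of the shape of the rate curve, not the Pike model as published: the function names, the name f1 for the constant after the first full-term pregnancy, the final level f_final, and every numeric default are hypothetical placeholders. The sketch also assumes that a first full-term pregnancy, if any, occurs before age 40, and it treats "breast tissue age" as the rate accumulated since birth, which is an assumption made here for illustration.

```python
def aging_rate(age, menarche, first_birth, menopause,
               f0=1.0, f1=0.7, f_final=0.1, decline_start=40.0):
    """Hypothetical piecewise rate of breast tissue aging at a given age.

    first_birth may be None for a woman with no full-term pregnancy.
    All default values are placeholders, not published estimates.
    """
    if age < menarche:
        return 0.0                      # no aging before menarche
    if age >= menopause:
        return f_final                  # constant after the last menstrual period

    def plateau(a):
        # f0 before the first full-term pregnancy, f1 afterwards
        parous = first_birth is not None and a >= first_birth
        return f1 if parous else f0

    if age <= decline_start:
        return plateau(age)
    # linear decrease from the level at age 40 down to f_final at menopause
    start = plateau(decline_start)
    frac = (age - decline_start) / (menopause - decline_start)
    return start + frac * (f_final - start)


def tissue_age(age, step=0.05, **history):
    """Accumulate the aging rate from birth to `age` with a midpoint rule."""
    n = int(round(age / step))
    return sum(aging_rate((i + 0.5) * step, **history) for i in range(n)) * step


# Example: menarche at 13, first birth at 25, menopause at 50 (made-up history).
history = dict(menarche=13, first_birth=25, menopause=50)
print(round(tissue_age(30, **history), 3))
```

With these placeholder values, twelve years at rate f0 followed by five years at rate f1 accumulate to the printed tissue age at 30; changing the reproductive history shifts the curve, which is the effect the model uses to explain differences in incidence.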

In 1989, Gail et al. proposed what is now called the Gail model, a breast cancer risk model

clinically applied today, based on the Breast Cancer Detection Demonstration Project (BCDDP).

[27] The relative risk of the original model was derived from a matched case-control subset from

BCDDP, using unconditional logistic regression on five risk factors: age, age at menarche, age at

first live birth, number of previous biopsies, and number of first-degree relatives with breast


cancer. Women in BCDDP regularly received mammographic screening, so care must

be taken when applying this model to women who do not receive regular mammographic

screening, to avoid risk overestimation or other inaccurate results. The model expresses the log of

the odds ratio for disease as:

$$
\log O(D : \bar{D}) = -0.74948 + 0.09401\,m + 0.52926\,b + 0.21863\,l + 0.95830\,n + 0.01081\,a - 0.28804\,(b \times a) - 0.19081\,(l \times n)
$$

where

m = age at menarche

b = number of previous breast biopsies

l = age at first live birth

n = number of first degree relatives who have breast cancer

a = 1 if age > 50, 0 otherwise

This equation suggests that breast cancer risks increase with older age at menarche, a larger number

of previous breast biopsies, older age at first live birth, a larger number of first degree relatives who

have breast cancer, and older age. It is worth noting that the model also includes interaction

terms for two pairs of variables: number of previous breast biopsies and age, and

age at first live birth and number of first degree relatives who have breast cancer.

These are the last two terms, which have negative coefficients. That is, the increased risk of breast cancer due

to the two risk factors together in either of the above two pairs is less than the sum of the effects

of the two risk factors alone. In other words, there are interactions between the two risk factors in

either pair and their effects on breast cancer are dependent.
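The effect of the two negative interaction terms can be checked numerically. The sketch below evaluates the log-odds expression using the coefficients printed in the equation above; the function and argument names are hypothetical, and it assumes that each risk factor has already been coded as a small integer level (the coding scheme is not given in this section, so the inputs here are illustrative only).

```python
import math

# Coefficients as printed in the log-odds equation above.
INTERCEPT = -0.74948
B_MENARCHE = 0.09401
B_BIOPSIES = 0.52926
B_FIRST_BIRTH = 0.21863
B_RELATIVES = 0.95830
B_AGE = 0.01081
B_BIOPSIES_X_AGE = -0.28804
B_FIRST_BIRTH_X_RELATIVES = -0.19081


def gail_log_odds(menarche, biopsies, first_birth, relatives, age_over_50):
    """Log odds of disease for coded risk-factor levels (coding assumed)."""
    return (INTERCEPT
            + B_MENARCHE * menarche
            + B_BIOPSIES * biopsies
            + B_FIRST_BIRTH * first_birth
            + B_RELATIVES * relatives
            + B_AGE * age_over_50
            + B_BIOPSIES_X_AGE * biopsies * age_over_50
            + B_FIRST_BIRTH_X_RELATIVES * first_birth * relatives)


def odds_ratio(profile, reference):
    """Odds of disease for `profile` relative to `reference`."""
    return math.exp(gail_log_odds(**profile) - gail_log_odds(**reference))


# Illustrative profiles: everything at level 0 versus one biopsy and age > 50.
reference = dict(menarche=0, biopsies=0, first_birth=0, relatives=0, age_over_50=0)
biopsy_only = dict(reference, biopsies=1)
older_only = dict(reference, age_over_50=1)
both = dict(reference, biopsies=1, age_over_50=1)

print(odds_ratio(biopsy_only, reference) * odds_ratio(older_only, reference))
print(odds_ratio(both, reference))  # smaller, because of the negative interaction
```

The second printed value is smaller than the first: the combined odds ratio for one biopsy together with age over 50 is less than the product of the two separate odds ratios, which is the behavior the paragraph above describes.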

The equation is used to derive relative risk only, because it is trained on a case-control data set. In

order to predict absolute risks, a baseline risk must be established for a specific configuration of

the variables, for which the relative risk is 1. The absolute risks of women with different

configurations can then be calculated by multiplying this baseline risk with the relative risks


derived from the equation. This absolute risk is then projected to get long-term probabilities. The

Gail model has been widely used in breast cancer counseling and research subject screening.
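The step from relative to absolute risk can be illustrated with a few lines of arithmetic. The sketch below multiplies a baseline risk by a relative risk and then compounds the resulting interval risks into a longer-term probability. All names and numbers are hypothetical, and the compounding rule is a deliberate simplification that treats intervals as independent and ignores competing causes of death; it is not necessarily the projection method used by Gail et al.

```python
def absolute_risk(baseline_risk, relative_risk):
    """Absolute risk for one interval: baseline risk times relative risk."""
    risk = baseline_risk * relative_risk
    if not 0.0 <= risk <= 1.0:
        raise ValueError("resulting risk is not a valid probability")
    return risk


def project_risk(interval_risks):
    """Probability of at least one event over consecutive intervals.

    Simplifying assumption: intervals are independent and no other
    cause removes a woman from the population at risk.
    """
    event_free = 1.0
    for risk in interval_risks:
        event_free *= 1.0 - risk
    return 1.0 - event_free


# Hypothetical numbers for illustration only.
baseline_5yr = 0.010      # risk for the reference configuration (relative risk 1)
relative_risk = 1.8       # relative risk derived from the equation
five_year = absolute_risk(baseline_5yr, relative_risk)
ten_year = project_risk([five_year, five_year])
print(five_year, ten_year)
```

The projected ten-year figure is slightly less than twice the five-year figure, because a woman who develops the disease in the first interval is no longer at risk in the second.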

Anderson et al. modified the original Gail model, or Gail model 1, to project the risk of

developing invasive breast cancer, and this model was referred to as model 2. [28] They used the

same model structure and risk factors, but derived the model parameters from the Surveillance,

Epidemiology, and End Results (SEER) Program of the National Cancer Institute (NCI) instead

of BCDDP, and included only invasive breast cancer cases.

Pathak and Whittemore fit a biologically motivated breast cancer incidence rate function to data

from published case-control studies conducted in different countries at high, moderate and low

incidence of breast cancer. [32] The data include 3,925 breast cancer cases and 11,327 controls

interviewed in selected hospitals in 1964-1968. The function parameters specify the dependence

of age-specific breast cancer incidence rates on age at menarche, age at menopause, occurrence

and timing of full-term pregnancies, and body mass. They reported three patterns: "1) Incidence

rates jump to a higher level after first childbirth, but then increase with age more slowly thereafter.

2) Rates increase with age more slowly after menopause than before. 3) Rates change

quadratically with body mass index among all women, although the main trend varies: Rates

decrease with body mass among premenopausal women in high-risk countries, but increase with

body mass in all other groups of women." [32]

In the late 1990s, Colditz and Rosner developed a log-incidence model of cumulative breast cancer

risks to incorporate temporal relations between risk factors and incidence of breast cancer. [34, 35]

They evaluated reproductive history, benign breast disease, use of postmenopausal hormone,

weight, alcohol intake, and family history as risk factors, and derived the model based on the

Nurses' Health Study (NHS). Colditz et al. also modified this model to fit incidence data from

patients having breast cancer with specific estrogen receptor (ER) and progesterone receptor (PR)


status, and the result suggested better discrimination ability on ER positive and PR positive

incidence than on ER negative and PR negative incidence. [36] Part of our research is based on

this model, and it will be introduced in more detail later.

2.3 Evaluation and Validation of Breast Cancer Models

After reviewing many breast cancer models from the literature, it appeared that almost all models

were developed in the following steps. The scientists designed a mathematical model based on

expert knowledge and known risk factors, selected a target population, then fit the model to the

data, in many cases using logistic regression, to derive model parameters, relative risks and

sometimes absolute risks. The natural question is: how well do these models perform in practice,

on general population data?

To answer this question, models need to be evaluated and validated. In machine learning, it is a

common practice to build the model on training set data, and then evaluate the model on a

separate test set. Methods such as cross-validation and leave-one-out are also popular. In the

breast cancer research described above, model validation did not receive as much attention as the

models themselves. The Gail model, however, as one of the most widely used models clinically,

was validated in a few studies.

Bondy et al. evaluated Gail model 1 in 1994 on a cohort of women who participated in the

American Cancer Society 1987 Texas Breast Cancer Screening Project and had a family history

of breast cancer. [57] They compared the observed (O) and expected (E) breast cancer incidence,

and found that Gail model 1 had a better performance among women who adhered to the

American Cancer Society mammographic screening guidelines (O/E = 1.12, 95% CI: 0.75-1.61)

than it did for those who did not adhere to the guidelines (O/E = 0.41, 95% CI: 0.2-0.75). This

finding confirmed that the Gail model overestimates breast cancer risks for women not taking

annual mammographic screening. They also employed the Hosmer-Lemeshow goodness-of-fit


test, which did not find an overall lack of fit between the observed and expected number of breast

cancers, despite the overestimation result. (When a goodness-of-fit test gives no significant lack

of fit result, it neither proves nor guarantees a good fit. This test can only demonstrate lack of fit,

but reporting a null finding of this test is still informative.)
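To make the O/E comparison concrete, the following Python sketch computes the ratio together with an approximate 95% confidence interval. It assumes that the observed count is Poisson distributed and uses a normal approximation on the log scale; the cited studies may have used a different (for example, exact) method. The function name `oe_ratio` and the counts are hypothetical.

```python
import math


def oe_ratio(observed: int, expected: float, z: float = 1.96):
    """Return (O/E, lower, upper) with an approximate confidence interval.

    Assumption: O is Poisson, so the standard error of log(O) is about
    1/sqrt(O); the interval is symmetric on the log scale.
    """
    if observed <= 0 or expected <= 0:
        raise ValueError("observed and expected must be positive")
    ratio = observed / expected
    half_width = z / math.sqrt(observed)
    return ratio, ratio * math.exp(-half_width), ratio * math.exp(half_width)


# Hypothetical counts for illustration only.
ratio, lower, upper = oe_ratio(observed=30, expected=40.0)
print(f"O/E = {ratio:.2f}, approximate 95% CI: {lower:.2f}-{upper:.2f}")
```

An O/E ratio below 1 with an interval that excludes 1 indicates that the model predicted more cases than were observed, which is the pattern reported for women who did not adhere to the screening guidelines.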

About the same time, Spiegelman et al. evaluated Gail model 1 on another cohort of women from

the Nurses' Health Study, showing that the model overestimated risk among premenopausal

women, women with extensive family history of breast cancer, and women with age at first birth

younger than 20 years. [58] They also evaluated the model using the correlation coefficient

between observed and predicted risk, which was 0.67.

Costantino et al. evaluated both Gail model 1 and Gail model 2 on data from women enrolled in

the Breast Cancer Prevention Trial. [38] They compared the ratio of expected to observed number

of breast cancers, and the result showed better performance by model 2 than model 1, which

underestimated breast cancer risk in women more than 59 years of age.

Rockhill et al. evaluated Gail model 2 both on goodness of fit and its discriminatory accuracy

using the Nurses' Health Study data. [59] They evaluated goodness of fit by comparing the ratio

of expected to observed number of breast cancers, and evaluated discriminatory accuracy using

the concordance statistic (i.e. C-index, equivalent to Area Under ROC curve). They also

compared the highest and lowest deciles of relative risks derived from Gail model 2 to get a range

of discrimination of the model. They reported that the model fit well in the sense of predicting

stratified breast cancer incidence, but with modest discriminatory accuracy.
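The concordance statistic mentioned above can be computed directly from its definition: the fraction of (case, non-case) pairs in which the case received the higher predicted risk, with ties counted as one half. The sketch below is a plain pairwise implementation for illustration; the function name and the toy data are hypothetical.

```python
def concordance_index(risks, outcomes):
    """C-index for binary outcomes (1 = case, 0 = non-case).

    Equals the area under the ROC curve; ties in predicted risk count 0.5.
    """
    cases = [r for r, y in zip(risks, outcomes) if y == 1]
    controls = [r for r, y in zip(risks, outcomes) if y == 0]
    if not cases or not controls:
        raise ValueError("need at least one case and one non-case")
    score = 0.0
    for case_risk in cases:
        for control_risk in controls:
            if case_risk > control_risk:
                score += 1.0
            elif case_risk == control_risk:
                score += 0.5
    return score / (len(cases) * len(controls))


# Toy predicted risks and outcomes, for illustration only.
predicted = [0.10, 0.40, 0.35, 0.80, 0.20]
observed = [0, 0, 1, 1, 0]
c_index = concordance_index(predicted, observed)
print(f"C-index = {c_index:.3f}")
```

A value of 0.5 corresponds to no discrimination and 1.0 to perfect discrimination.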

Recently, Gail et al. published a paper on criteria for evaluating models of absolute risk, showing

that the community is now paying more attention to model risk prediction evaluation. [121] In

their paper, Gail et al. summarized general criteria for assessing absolute risk models, including


calibration, discrimination, accuracy, and proportion of variation explained.

For calibration evaluation, they cited goodness-of-fit statistics and comparison of expected

number of cases with observed number of cases from [38] and [59], and also mentioned the Brier

statistic, or mean squared error, which is a combined measurement of calibration and

discrimination. For discrimination evaluation, they reviewed area under the receiver operating

characteristic (ROC) curve, which has been widely used, and the Lorenz curve, which is more

frequently used in economics research. The Lorenz curve describes the relationship between the

proportion of disease cases and the proportion of population that has a risk up to a specific risk

value, and hence measures the concentration of risk populations. For rare diseases, the Lorenz

measurement is approximately the same as the ROC curve. They also mentioned the

measurement of proportion of variation explained using entropy and fractional reduction in

entropy.
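As an illustration of the Brier statistic mentioned above, the following Python sketch computes the mean squared difference between predicted probabilities and binary outcomes. The function name and the toy data are hypothetical.

```python
def brier_score(probabilities, outcomes):
    """Mean squared error between predicted probabilities and 0/1 outcomes."""
    if len(probabilities) != len(outcomes) or not probabilities:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(p - y) ** 2 for p, y in zip(probabilities, outcomes)]
    return sum(squared_errors) / len(squared_errors)


# Toy predicted risks and outcomes, for illustration only.
predicted = [0.10, 0.40, 0.35, 0.80, 0.20]
observed = [0, 0, 1, 1, 0]
score = brier_score(predicted, observed)
print(f"Brier score = {score:.4f}")
```

Lower values are better. A model that always predicts 0.5 scores 0.25 regardless of the outcomes, which gives a simple reference point.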

2.4 Breast Cancer and Genomics

The human genome has roughly 3 billion base pairs (bp), and now is estimated to have 30,000 to

40,000 genes [74]. It has been a well-known fact that most of the base pairs in the genome

sequence are identical across the population, while approximately one out of a thousand base

pairs will be different when comparing the genome sequences from two different persons. Such

differences, or polymorphisms, are used as markers in gene mapping and linkage analysis.

Genetic polymorphism is defined as "the occurrence of multiple alleles at a locus, where at least

two alleles appear with frequencies greater than 1 percent," or a heterozygote frequency of at

least 2 percent [81]. Commonly used polymorphisms include restriction fragment length

polymorphism (RFLP), variable number of tandem repeats (VNTR), microsatellites, and single

nucleotide polymorphisms (SNPs).


A SNP is a sequence polymorphism differing in a single base pair, and is the most common type

of polymorphism. SNPs can occur in the gene coding regions, while other types of

polymorphisms mainly occur in non-coding regions. Such properties make SNPs the best marker

for gene mapping and linkage analysis, because they are common (about 1 per thousand base

pairs) and can be very close to the genes and mutations of interest.

SNPs have been reported by many research groups [82-85], and an accumulated public SNP

database (roughly 1.2 million SNPs) can be found online at several websites, including the

ENSEMBL (http://www.ensembl.org), NCBI (National Center for Biotechnology Information;

http://www.ncbi.nlm.nih.gov), and TSC (http://snp.cshl.org) websites. The estimated total number

of SNPs in the human genome may be over 10 million [86], perhaps as many as 30 million [87].

Breast cancer has long been known to be related to family history, and therefore, to be a

hereditary disease. Two relatively high penetrance genes, BRCA1 and BRCA2, have been

identified [88-90], but they do not account for all hereditary breast cancers. At least one

susceptible area of another major breast cancer gene has been proposed [91-92], while quite a few

polymorphisms have been investigated, including rare genetic syndromes associated with

increased breast cancer risks and low penetrant breast cancer susceptibility genes. These

suspected genes include proto-oncogenes (HRAS1), metabolic pathway genes (NAT1, NAT2,

GSTM1, GSTP1, GSTT1, CYP1A1, and CYP1B1), estrogen pathway genes (CYP17 and

CYP19), estrogen receptor gene (ER), progesterone receptor gene (PR), androgen receptor gene

(AR), and many other genes (COMT, UGT1A1, HLA, TNF[alpha], HSP70, HFE, TFR, VDR,

and VPC) [93].

However, convincing results are hard to achieve. Some of the studies show positive association of

one polymorphism with breast cancer while others show mild or no association of the same

polymorphism. For example, Helzlsouer [94] and Charrier [95] suggested positive association


between GSTM1 and breast cancer risk, while Ambrosone [96] and Garcia-Closas [102] showed

evidence against this hypothesis. Another example is that Kristensen [97] reported increased

breast cancer risk with CYP19, while Haiman's reports [98-99] found no evidence of positive

association. The current findings need to be treated with caution, and further studies are necessary

to make a definitive conclusion.

The lack of affirmative results is possibly due to the fact that breast cancer is a complex trait.

Only a relatively small portion of breast cancer is hereditary, and a considerable part of these

familial cases are involved with BRCA1 and/or BRCA2, whose strong association may shadow

possible weak associations with other genes. In addition, there may be multiple genes interacting

with each other or interacting with clinical and life-style factors leading to the incidence. All these

issues make it difficult to reach a convincing conclusion.

Some of the studies examined the combinations of a few polymorphisms. Bailey et al. examined

CYP1A1, GSTM1, and GSTT1, and reported no significant association with breast cancer risk of

these polymorphisms individually or combined [100]. Huang et al. examined CYP17, CYP1A1,

and COMT, and reported that COMT genotype has a significant association with breast cancer,

either individually or combined with CYP17 and CYP1A1, and that CYP17 and CYP1A1 play a

minor role in the association [101]. Garcia-Closas et al. evaluated the association between

GSTM1 and GSTT1 gene polymorphisms and breast cancer risk, and provided evidence against a

substantially increased risk of breast cancer associated with GSTM1 and/or GSTT1 homozygous

gene deletions [102]. These combined investigations are very few in number compared to the

abundant number of studies of a single polymorphism.

Some of the studies investigated certain polymorphisms and a few clinical and life-style factors.

Ishibe et al. evaluated the associations between the CYP1A1 polymorphisms and breast cancer

risk, as well as the potential modification of these associations by cigarette smoking, and reported a

suggestive increase in breast cancer risk among women who had commenced smoking before the

age of 18 and had the CYP1A1-MspI variant genotype compared to nonsmokers who were

homozygous wild type for the polymorphism [103]. Millikan et al. examined the effects of

smoking and N-acetylation genetics on breast cancer risk, and reported little evidence for

modification of smoking effects according to genotype, except among postmenopausal women

[104]. Hunter et al. assessed the relation between NAT2 acetylation status and breast cancer risk,

and its interaction with smoking, and reported that cigarette smoking was not appreciably

associated with breast cancer among either slow or fast NAT2 acetylators [105].

Clinical and life-style factors other than smoking were also investigated in some studies. Gertig et

al. examined the associations between meat intake, cooking method, NAT2 polymorphism and

breast cancer risk, and observed no significant association between meat intake, NAT2, and breast

cancer risk, therefore suggesting that heterocyclic amines produced by high-temperature cooking

of meat and animal protein may not be a major cause of breast cancer [106]. Hines et al.

calculated relative risks and confidence intervals to assess breast cancer risk for ADH3 genotype

and alcohol consumption level, and suggested that the ADH3 polymorphism modestly influences

the response of some plasma hormones to alcohol consumption but is not independently

associated with breast cancer risk and does not modify the association between alcohol and breast

cancer risk [107]. Haiman et al. assessed the association between the A2 allele of CYP17 and

breast cancer risk, and observed that the inverse association of late age at menarche with breast

cancer may be modified by the CYP17 A2 allele through endogenous hormone levels [108].

Polymorphisms related to breast cancer also have been studied together with family history and

ethnic groups, respectively [109-111].

A limited number of studies examined more polymorphisms and some clinical and life-style factors. For

example, Haiman et al. studied 10 polymorphisms in 8 genes and two clinical and life-style

factors (menopausal status and postmenopausal hormone use), and suggested some interaction

between UGT1A1 genotype and menopausal status [112], which can possibly be modified by

postmenopausal hormone use. These previous successful examples imply that we need to put

genotypic data, phenotypic data, and clinical and life-style factors together to explain the complex

traits.

The Nurses' Health Study provides an abundant collection of clinical and life-style data as well as

personal risk factors for breast cancer, and recently a nested case-control group of nurses were

genotyped for a collection of SNPs in suspected gene areas. Combining these data together, we

have a chance to look for gene interaction and clinical and life-style contributors, and explore

their relationships from an overall point of view.

2.5 Machine Learning Techniques

Empirically, machine learning techniques are computer algorithms that attempt to find the best of

a class of possible models and to tune its parameters so as to best fit a data set of interest

according to specified criteria. When the learning process is completed, and if the model's

performance is reasonably good, the model and its parameters will reveal, at least to some extent,

the intrinsic structure of the data set.

Machine learning techniques can be divided into two major groups: supervised learning and

unsupervised learning. Supervised learning will try to relate the variables in the data set to one

specific variable, the class variable, and therefore disclose which variable or variable

combinations in the data set are best predictors for the class variable. For example, we can put

polymorphism and phenotype data together to make a data set, and use this data set to train a

model using one of the supervised learning algorithms. If we pick a variable of interest, say,

breast cancer as the class variable, the trained model will try to find the best predictors, maybe

one or more of the polymorphism or phenotype variables, or maybe a combination of certain

variables (which will require extensive work if using traditional statistical methods to obtain).


Unsupervised learning doesn't have a class variable, and it doesn't focus on the associations to

any specific variable. Rather, it explores the associations among all the variables and tries to

group them according to their own dependencies. If we use the above hypothetical data set to

train an unsupervised model, it will try to find the relationships and possible interactions among

all the polymorphisms and phenotypes, including breast cancer, but not exclusively.

Both supervised and unsupervised machine learning are non-hypothesis driven processes. When

training the model, the algorithm will automatically search the hypothesis space and try to find a

best fit. Hence, such techniques can examine a large number of hypotheses in one single run and

save researchers' labor.

The main limitations of machine learning are computation power and the problem of overfitting. One

critical component of a machine learning algorithm is the model. If the model is not flexible

enough, the algorithm doesn't have the power to search a large enough hypothesis space, and thus

may miss important possible models. If the model is too flexible, the hypothesis space will be so

large that it is impractical to search the whole space. In such situations, we need to determine a

search strategy to make good use of the available computation power.

In many applications, the available data set has a very limited sample size compared to the

number of variables, thus there will be many hypotheses that can fit the data, causing the

overfitting problem. Overfitting can be checked by using a separate testing data set to confirm the

trained model. Another way to avoid overfitting is to reduce the number of variables, usually

referred to as feature selection.
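The idea of confirming a trained model on data it has not seen can be sketched with a k-fold split, in which every sample serves as test data exactly once. The following Python fragment only generates the index splits; the function name `k_fold_indices` and its parameters are hypothetical, and any learning algorithm could be trained and evaluated on the resulting folds.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # fixed seed for reproducibility
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


splits = list(k_fold_indices(n_samples=10, k=5))
for train, test in splits:
    print("train:", sorted(train), "test:", sorted(test))
```

A large gap between performance on the training folds and on the held-out fold is the usual symptom of overfitting.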

Bayesian Network

Induction of Bayesian networks (BN) is one of the most popular machine

learning techniques. Bayesian networks can explore the relationships or dependencies among all


variables within a data set, not restricted to pair-wise models of interactions, and therefore can

describe and assess complex associations and dependencies of multiple variables. A Bayesian

network (BN) is a directed acyclic graph (DAG), in which the nodes represent statistical variables

and the links represent the conditional dependencies among the variables. By looking at the

network, one can easily tell the underlying relationships of the variables within the data set,

which are now represented by the links connecting the variables. A brief introduction to Bayesian

networks will follow in the next section.

Clustering

Clustering is an unsupervised machine learning technique, which has been widely

used in functional genomics. A clustering algorithm tries to group data into clusters based on

certain similarity or distance measurements, and to find natural or intrinsic partitions in the data.

It may involve finding a hierarchic structure of partitions (a cluster of clusters).

Support Vector Machine

Support vector machine (SVM) is a supervised learning algorithm. A

support vector machine tries to maximize the discrimination "margin" between the samples with

different class labels. Usually only relatively few samples are at the borders or intersections

between different classes, and these samples alone will determine the margin, so they are called

"support vectors." SVM uses a kernel function (which can be linear, polynomial, or radial) to find

the margin and support vectors. Nonlinear kernels permit the representation of complex

discrimination boundaries.

Logistic Regression

Logistic regression (LR) is one of the most commonly used machine

learning algorithms in medical applications. The underlying model for logistic regression is two

Gaussian distributions with equal covariance, and LR tries to fit the data to this model using

maximum likelihood criteria. An additional advantage of logistic regression, which probably is

the reason that it is popular, is that a trained LR model gives weights for each variable in the form

of a likelihood ratio, so that the importance of the correlation of each variable with the class


variable is clearly represented.
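The connection between the equal-covariance Gaussian model and the logistic form can be made explicit with a short derivation. The symbols below are introduced here for illustration only: $x$ is the vector of predictor variables, $c \in \{0, 1\}$ is the class variable, $\pi_k$ is the prior of class $k$, and each class-conditional density is assumed to be Gaussian with mean $\mu_k$ and a shared covariance matrix $\Sigma$.

```latex
\begin{align*}
\log\frac{P(c=1 \mid x)}{P(c=0 \mid x)}
  &= \log\frac{\pi_1}{\pi_0}
     - \tfrac{1}{2}(x-\mu_1)^{\top}\Sigma^{-1}(x-\mu_1)
     + \tfrac{1}{2}(x-\mu_0)^{\top}\Sigma^{-1}(x-\mu_0) \\
  &= w^{\top}x + b, \\
w &= \Sigma^{-1}(\mu_1-\mu_0), \qquad
b = \log\frac{\pi_1}{\pi_0}
    - \tfrac{1}{2}\mu_1^{\top}\Sigma^{-1}\mu_1
    + \tfrac{1}{2}\mu_0^{\top}\Sigma^{-1}\mu_0, \\
P(c=1 \mid x) &= \frac{1}{1+\exp\!\left(-(w^{\top}x+b)\right)}.
\end{align*}
```

The quadratic terms in $x$ cancel because the covariance is shared, so the log odds are linear in $x$. Logistic regression estimates $w$ and $b$ directly by maximum likelihood rather than estimating the two Gaussians first.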

Classification Tree A classification tree fits the data with a hierarchical tree structure. At each

branch point, the algorithm performs a test on one variable to decide on which branch to continue.

The leaves are labeled by the values of the class variable. In the learning processes, the algorithm

learns what test (on which variable) to perform, and which label to give each leaf, based on a

local optimization of information gain. One advantage of the classification tree method is that the

tree structure is a convenient representation of knowledge.

Naive Bayes

Naive Bayes is a relatively simple classifier based on probability and

independence assumptions. It assumes all variables except the class variable are conditionally

independent given the class variable. Thus one can calculate the joint distribution by multiplying

the prior distribution of the class variable by the conditional distribution of every other variable

given the class, and obtain the class posterior using Bayes' rule. Empirically, even though the

independence assumptions often do not hold, this algorithm works surprisingly well in many

applications.
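A minimal sketch of a Naive Bayes classifier for categorical variables is given below. It multiplies the class prior by the conditional probability of each variable value given the class and then normalizes over classes. The function names, the add-one smoothing constant `alpha`, and the toy data are all assumptions made for this illustration.

```python
from collections import Counter, defaultdict


def train_naive_bayes(rows, labels, alpha=1.0):
    """Count class frequencies and per-class value frequencies."""
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)  # (feature index, class) -> value counts
    values = [set() for _ in rows[0]]    # distinct values seen per feature
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            value_counts[(i, label)][value] += 1
            values[i].add(value)
    return class_counts, value_counts, values, alpha


def posterior(model, row):
    """Return P(class | row) under the conditional independence assumption."""
    class_counts, value_counts, values, alpha = model
    total = sum(class_counts.values())
    scores = {}
    for label, count in class_counts.items():
        p = count / total  # prior P(class)
        for i, value in enumerate(row):
            # smoothed estimate of P(value | class)
            p *= (value_counts[(i, label)][value] + alpha) / (count + alpha * len(values[i]))
        scores[label] = p
    norm = sum(scores.values())
    return {label: s / norm for label, s in scores.items()}


# Hypothetical toy data: (family history, exposure level) -> class label.
rows = [("yes", "high"), ("yes", "low"), ("no", "high"),
        ("no", "low"), ("yes", "high"), ("no", "low")]
labels = ["case", "case", "control", "control", "case", "control"]
model = train_naive_bayes(rows, labels)
post = posterior(model, ("yes", "high"))
print(post)
```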

Ensemble Classifiers

Above are a few examples of common machine learning algorithms.

Many other algorithms have been developed and tried in various applications, such as genetic

algorithm, kernel density methods, K-nearest neighbors, etc. In order to get more robust

performance, sometimes multiple algorithms or multiple classifiers with the same algorithm are

combined to form an ensemble classifier. Ensemble classifiers often have better performance but

require more computation power. Examples include stacking and bootstrapping.

Stacking is a combination of classifiers based on different algorithms. Each classifier is trained on

the same training set, and gives its own output. The output of these classifiers and the class

variable together constitute a meta data set. A further classifier is trained on this meta data set and

the output of this final classifier becomes the final output. [64]


Bootstrapping, or bagging, is an ensemble algorithm that can increase stability and reduce

variance. Given a training set D of size N, we create M new training sets, also of size N, each

uniformly re-sampled from D with replacement. M classifiers are trained on these M training sets,

and their outputs are combined by voting. [65]
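The resampling and voting steps can be sketched as follows. The base classifier here is a one-variable threshold rule chosen only to keep the example short; it is an assumption of this sketch, as are the function names and the toy data. Any classifier could take its place.

```python
import random
from collections import Counter


def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly with replacement."""
    return [rng.choice(data) for _ in data]


def train_stump(sample):
    """Choose the threshold t minimizing training errors for 'predict 1 if x > t'."""
    best_threshold, best_errors = None, None
    for threshold in sorted({x for x, _ in sample}):
        errors = sum((x > threshold) != bool(y) for x, y in sample)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def bagged_predict(thresholds, x):
    """Combine the individual stump predictions by plurality vote."""
    votes = Counter(int(x > t) for t in thresholds)
    return votes.most_common(1)[0][0]


rng = random.Random(0)
# Toy training set D of (feature value, class label) pairs.
D = [(0.5, 0), (1.0, 0), (1.5, 0), (2.0, 1), (2.5, 0), (3.0, 1), (3.5, 1), (4.0, 1)]
M = 25  # number of bootstrap replicates; odd, so a binary vote cannot tie
stumps = [train_stump(bootstrap_sample(D, rng)) for _ in range(M)]
prediction_low = bagged_predict(stumps, 0.2)
prediction_high = bagged_predict(stumps, 4.5)
print(prediction_low, prediction_high)
```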

Random forest is an example of an ensemble classifier that consists of many classification trees,

each generated using a small portion of the variables randomly picked from all variables. Every

tree is trained on a bootstrap sample of the training set and not pruned. The classification result of the

random forest is the vote of all trees. [66] Such voting can be a plurality vote, or can be a

normalized sum of the probability outputs of the trees.

2.6 Bayesian Networks

Bayesian Networks (BN) are Directed Acyclic Graphs (DAG) describing dependency structures

among variables. [69] A Bayesian Network uses arrows, or links, to depict the dependency

relationship between variables, or nodes. An arrow pointing from variable A to variable B means

variable B is dependent on variable A, and vice versa. When there is a link pointing from A to B,

A is a parent of B, and B is a child of A. Using these graphical symbols, Bayesian Networks

visualize conditional independency structures in such a way that the probability of a variable can

be fully described by the probability of its parents, and its conditional probability table given the

parents.


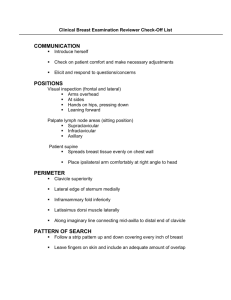

Figure 1 Bayesian Network structures of three variables

Six very simple Bayesian Networks are shown in Figure 1 for illustration. In each of the six

figures, there are three nodes representing three random variables A, B, and C. In Figure 1a, the

three variables are mutually independent, as completely separated nodes, which corresponds to

P(ABC) = P(A)P(B)P(C). In Figure 1b, two variables A and B are dependent, while variable C is

independent of A or B. In Figure 1c, all three variables are dependent on each other,

corresponding to the general representation of a joint probability distribution P(ABC) =

P(C|AB)P(B|A)P(A).

Conditional independence is a very important concept in Bayesian Networks. In Figure 1d, C is

dependent on B, which in turn depends on A. A and C become conditionally independent, or

d-separated, given B. The joint distribution can be written as P(ABC) = P(C|B)P(B|A)P(A). In

Figure 1e, B and C are d-separated by A and represent a similar conditional independence

structure.

Figure 1f shows a different situation where A and B are independent without knowing C, but

become dependent given C.
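These two situations can be checked numerically. The sketch below builds the joint distribution of three binary variables for the chain of Figure 1d and for the converging structure of Figure 1f, and then tests the independence statements. All probability tables are hypothetical numbers chosen for illustration.

```python
from itertools import product

INDEX = {"a": 0, "b": 1, "c": 2}


def prob(joint, **fixed):
    """Marginal probability of a partial assignment, e.g. prob(joint, a=1, c=0)."""
    return sum(p for key, p in joint.items()
               if all(key[INDEX[name]] == value for name, value in fixed.items()))


# Hypothetical probability tables for binary variables.
p_a = [0.7, 0.3]
p_b = [0.6, 0.4]
p_b_given_a = [[0.9, 0.1], [0.4, 0.6]]          # p_b_given_a[a][b]
p_c_given_b = [[0.8, 0.2], [0.3, 0.7]]          # p_c_given_b[b][c]
p_c_given_ab = {(0, 0): [0.9, 0.1], (0, 1): [0.5, 0.5],
                (1, 0): [0.5, 0.5], (1, 1): [0.1, 0.9]}

# Figure 1d: P(ABC) = P(C|B) P(B|A) P(A)
chain = {(a, b, c): p_a[a] * p_b_given_a[a][b] * p_c_given_b[b][c]
         for a, b, c in product((0, 1), repeat=3)}
# Figure 1f: P(ABC) = P(C|AB) P(A) P(B)
collider = {(a, b, c): p_a[a] * p_b[b] * p_c_given_ab[(a, b)][c]
            for a, b, c in product((0, 1), repeat=3)}

# Chain: P(C=1 | A=a, B=1) does not depend on a.
chain_c_given_ab = [prob(chain, a=a, b=1, c=1) / prob(chain, a=a, b=1) for a in (0, 1)]

# Collider: A and B are independent marginally ...
marginal_joint = prob(collider, a=1, b=1)
marginal_product = prob(collider, a=1) * prob(collider, b=1)
# ... but dependent once C is observed.
p_c1 = prob(collider, c=1)
given_joint = prob(collider, a=1, b=1, c=1) / p_c1
given_product = (prob(collider, a=1, c=1) / p_c1) * (prob(collider, b=1, c=1) / p_c1)

print(chain_c_given_ab, marginal_joint, marginal_product, given_joint, given_product)
```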

In a Bayesian Network, the set of variables including all parents of variable X, all its children,

and all parents of its children is called the Markov blanket for variable X. The Markov blanket will

d-separate X from all other variables. In other words, X is conditionally independent of all other

variables given the variables in the Markov blanket.
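The definition translates directly into a few lines of Python. In this sketch a network is represented as a dictionary mapping each node to the set of its parents; this representation, the function name, and the example network are assumptions made for illustration.

```python
def markov_blanket(parents, node):
    """Return the parents, children, and children's other parents of node."""
    children = {child for child, ps in parents.items() if node in ps}
    co_parents = {p for child in children for p in parents[child]}
    return (set(parents.get(node, ())) | children | co_parents) - {node}


# Hypothetical DAG: A -> X, B -> X, X -> C, D -> C, C -> E
dag = {
    "A": set(), "B": set(), "D": set(),
    "X": {"A", "B"},
    "C": {"X", "D"},
    "E": {"C"},
}
blanket = markov_blanket(dag, "X")
print(sorted(blanket))
```

In this example E is not in the Markov blanket of X, because E is connected to X only through C.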

Bayesian Networks have been widely used to represent human expert knowledge and for

probabilistic reasoning. In early applications, Bayesian Networks were constructed by asking

human experts about the dependencies among the variables of interest as well as the conditional

probabilities. People have also tried to learn Bayesian Networks directly from data. Current

Bayesian techniques to learn the graphical structure of a Bayesian Network from data are based

on the evaluation of the posterior probability of network structures [72]. Searching the space of

possible network structures has been shown to be NP-hard, [70] but many approximation methods

have been developed. We used Bayesware Discoverer as a Bayesian Network learning tool, which

applies a greedy search approach. [74][75]

By learning a Bayesian Network from the data, we can obtain a landscape view of the variables

and study the interactions among them, as well as an opportunity to discover conditional

independency structures that would be overlooked otherwise.

An important difference between a Bayesian Network constructed from human expert knowledge

and a Bayesian Network learned from data is that the former often represents causal relationships,

while the latter represents conditional independency structures, not necessarily causal or

chronological. In statistical and artificial intelligence applications, however, it is a common

objective to find causal interpretations of the observed data. Therefore, when learning a Bayesian

Network from data with causal interpretations in mind, the variables shall be ordered in such a


way that respects the direction of time and causation. When such ordering is infeasible due to

lack of knowledge or other concerns, the learned Bayesian Network must be interpreted with care,

and no causal relationship shall be suggested without further investigation.

2.7 Bayesware Discoverer

"Bayesware Discoverer is an automated modeling tool able to transform a database into a

Bayesian network, by searching for the most probable model responsible for the observed data."

[73] Discoverer represents Bayesian Networks with a graphical interface as nodes and directed

edges, and integrates convenient analysis tools to manipulate the network and variables, as well

as to display useful information such as conditional distribution tables and Bayes Factors (log

likelihood ratios between possible parent sets of a variable). In most of the exploratory analysis of

this work, we employed Discoverer as the major tool.

Discoverer can learn both the structure and parameters (or the parameters of a given structure)

from the data, based on the K2 algorithm proposed by Cooper et al. in 1992. [74][75] As is

necessarily typical of computer programs that approximate NP-hard problems by heuristic

techniques, Discoverer runs efficiently enough to be useful, but cannot explore the vast space of

all possible hypotheses. Therefore, the network it chooses to fit a set of data may not, in fact, be

the most likely ("best") network.

Discoverer searches the space of possible conditional dependency structures using a greedy

approach based on a list of ordered variables. When searching for the optimal parent set of a

variable X, Discoverer compares the log-likelihood score of the current parent set (starting from

the empty set) with that of the current set plus each additional candidate parent, updates the

current set with the additional parent if the score is higher, and repeats this process until no

additional parent improves the score. The ratios between the log-likelihood score of this

final parent set and the other possible parent sets are called Bayes Factors (BFs), and a high BF

generally suggests that the final parent set is much more probable than others. BFs are often

astronomically large, because even a slightly better-fitting model can make a large data set

enormously more likely. Consequently, a BF that appears large, say 100, may not be very

significant.
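The greedy parent search can be sketched in Python as follows. This is an illustration in the style of the K2 algorithm, not Discoverer's actual implementation: the scoring function is the K2 marginal likelihood for discrete variables, at each step the best-scoring candidate is added, and only variables that precede the child in the ordering are considered. The function names and the toy data are hypothetical. On the log scale, the comparison between two parent sets is the difference of their scores.

```python
import math
from collections import Counter


def k2_log_score(data, child, parents, arity):
    """Log K2 marginal likelihood of child given a parent set.

    data: list of dicts mapping variable name -> integer value in range(arity).
    """
    r = arity[child]
    counts = Counter()         # (parent configuration, child value) -> N_jk
    config_totals = Counter()  # parent configuration -> N_j
    for row in data:
        config = tuple(row[p] for p in parents)
        counts[(config, row[child])] += 1
        config_totals[config] += 1
    score = 0.0
    for config, n_j in config_totals.items():
        score += math.lgamma(r) - math.lgamma(n_j + r)  # log[(r-1)! / (N_j+r-1)!]
        for value in range(r):
            score += math.lgamma(counts[(config, value)] + 1)  # log(N_jk!)
    return score


def greedy_parents(data, child, predecessors, arity):
    """Add the best-scoring predecessor until no addition improves the score."""
    parents = []
    best = k2_log_score(data, child, parents, arity)
    while True:
        candidates = [v for v in predecessors if v not in parents]
        scored = [(k2_log_score(data, child, parents + [v], arity), v) for v in candidates]
        if not scored:
            break
        score, winner = max(scored)
        if score <= best:
            break
        parents.append(winner)
        best = score
    return parents, best


# Toy data: x mostly copies a, while b carries no information about x.
patterns = [((0, 0, 0), 10), ((0, 1, 0), 10), ((1, 0, 1), 10),
            ((1, 1, 1), 10), ((0, 0, 1), 1), ((1, 1, 0), 1)]
data = [dict(zip(("a", "b", "x"), values)) for values, n in patterns for _ in range(n)]
arity = {"a": 2, "b": 2, "x": 2}
order = ["a", "b", "x"]

parents, best = greedy_parents(data, "x", order[:order.index("x")], arity)
log_bf_vs_empty = best - k2_log_score(data, "x", [], arity)
print(parents, round(log_bf_vs_empty, 2))
```

Even in this small data set of 42 records the log Bayes factor against the empty parent set is large, which illustrates why raw Bayes factors grow so quickly with sample size.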

Discoverer uses the ordering of the variables to guarantee the acyclic constraint in a way such that

only variables ordered before variable X are eligible as candidate parents for variable X. We

sometimes put the variables in temporal order, hoping that the found dependencies will be

consistent in temporal succession and thus could possibly reveal causal relationships, while

at other times we order the variables to emphasize certain dependencies based on expert knowledge

and known causal relationships. More details will be discussed in later chapters.


Chapter 3 Landscaping Clinical and Life-style and Genotypic Data

In this chapter, we review our attempt to explore the dependencies among a group of clinical and

life-style variables, personal risk factors, and a few Single Nucleotide Polymorphisms (SNPs)

using Bayesian Networks. The data are drawn from the Nurses' Health Study. We also describe

the dependency between breast cancer and clomid use, a possible new risk factor that we found in

the exploratory analysis.

3.1 Landscaping Clinical and Life-style Variables and SNPs

Breast cancer has long been known to be related to family history, and therefore, to be a

hereditary disease. The recently identified BRCA1 and BRCA2 mutations are responsible for

only part of hereditary breast cancer. There could be a "BRCA3," or some modifier genes and

clinical and life-style contributors. Most of the previous research on breast cancer has focused on

only one or a few genes, and rarely considered the influence of clinical and life-style factors. The

lack of definite results from past research illustrates that breast cancer is a complex trait and

therefore exploring the susceptible genes and possible contributing clinical and life-style factors

systematically can be a fruitful alternative to evaluating the genes individually and separately.

NHS provides an abundant collection of clinical and life-style data as well as personal risk factors

for breast cancer, and recently a nested case-control group of nurses were genotyped for a

collection of SNPs in suspected gene areas. Combining these data together, we had a chance to look

for gene interactions and clinical and life-style contributors, and explore their relationships from

an overall point of view.


3.1.1 Data

The genotypic data we had were provided by Ms. Rong Chen in the Channing Lab, including 12

SNPs picked from all SNPs genotyped in the nested case-control study by Dr. David Hunter in

the Channing Lab. These SNPs are:

* atm1, atm2, atm3, atm4, and atm5: haplotype-tagging SNPs in the ATM (ataxia telangiectasia mutated protein) gene

* ephx1 and ephx2: non-synonymous SNPs in epoxide hydrolase

* vdrbsmi1 and vdrfok1: SNPs that make Restriction Fragment Length Polymorphisms in the vitamin D receptor gene

* xrcc3466, xrcc3471, and xrcc3472: SNPs in the XRCC3 (X-ray repair complementing defective repair) gene

The data contain 1007 incident breast cancer cases and 1416 controls. The cases were selected

from breast cancer cases diagnosed by June 1, 1998, excluding any other prior cancer diagnosis

except for non-melanoma skin cancer. The controls were matched on year of birth, menopausal

status, PMH use at time of blood draw (1989), and time of day, time in the menstrual cycle and

fasting status at the time of blood draw.

The clinical and life-style data include 97 variables, manually selected among the thousands of variables in NHS by Dr. Graham Colditz, Dr. Karen Corsano, and Ms. Lisa Li from the Channing Lab (different from the data in the example given at the beginning of this chapter). The variables range from general information, such as race and age, to very specific information, e.g. usage of specific medications. They also include suspected or known risk factors for breast cancer such as parity and menopausal status. A list of the variables and their meanings can be found in Table 1. These data records are from various years (many from 1990), and most of them do not include temporal information.

Table 1 Variable list for exploratory analysis

| Name | Meaning |
|---|---|
| actmet | MET score of activity measurement |
| adep | Alcohol dependence problem |
| agedx | Age at diagnosis of breast cancer |
| agefb | Age at giving birth to first child |
| agesmk | Age started smoking |
| amnp | Age at menopause |
| asprin | Whether takes aspirin |
| ball | Sunburn overall |
| bback | Sunburn on back |
| bbd | Benign breast disease |
| bface | Sunburn on face |
| bfeed | Whether breast-fed during infancy |
| blimb | Sunburn on limbs |
| bmi | Body Mass Index (BMI) |
| bp | SF-36 pain index |
| brfd | Breast-fed children |
| brimp | Breast implant |
| bwt | Birth weight |
| case_ctrl | Breast cancer |
| chol | High cholesterol |
| clomid | Clomid (a fertility drug) use |
| crown | Crown-Crisp score, a measure of neurotic symptomatology |
| dadocu | Occupation of father |
| db | Diabetes |
| des | DES (a fertility drug) use |
| dmnp | Menopause status |
| dtdth | Date of death |
| dtdx | Date of diagnosis |
| durmtv | Duration of taking multi-vitamin |
| duroc | Duration of oral contraceptive use |
| durpmh | Duration of post menopausal hormone use |
| edu | Education level |
| era | Estrogen receptor status of breast cancer |
| fhxbr | Family history of breast cancer |
| frac | Fracture history |
| hand | Handedness |
| hbp | High blood pressure |
| hdye | Hair dye |
| height | Height |
| hip | Hip measurement |
| hrt | Heart disease |
| hsleep | Hours of sleep |
| husbedu | Education level of husband |
| id | ID number of the nurse within NHS |
| insi | Whether breast cancer is in situ |
| inva | Whether breast cancer is invasive |
| kidbwt | Birth weight of the heaviest child |
| kidsun | Sunburn as a kid |
| kidtan | Suntan as a kid |
| kpasmk | Passive smoking as a kid |
| lipst | Lipstick use |
| live | Living status (alone or with someone) |
| lungca | Lung cancer |
| marry | Marriage status |
| mh | SF-36 mental health index |
| mnp | Menopausal status |
| mnty | Menopausal type |
| moles | Number of moles |
| nod | Whether lymph nodes present at breast cancer diagnosis |
| ocuse | Oral contraceptive use |
| oprm | Worked in operating room |
| ovca | Ovarian cancer |
| packyr | Total pack-years of smoking |
| parity | Number of children |
| pasmk | Passive smoking |
| pct10 | Diagram of body size at age 10 |
| pct20 | Diagram of body size at age 20 |
| pct30 | Diagram of body size at age 30 |
| pct40 | Diagram of body size at age 40 |
| pct5 | Diagram of body size at age 5 |
| pctfa | Diagram of body size of father |
| pctma | Diagram of body size of mother |
| pctnow | Diagram of body size in 1988 |
| pmh | Post menopausal hormone use |
| pra | Progesterone receptor status of breast cancer |
| race | Race |
| re | SF-36 role-emotional index |
| regpd | Regularity of period |
| rp | SF-36 role-physical index |
| sf | SF-36 social functioning index |
| shift | Worked on night shift |
| sipos | Sleeping position |
| smkst | Smoking status |
| snore | Whether snores when sleeping |
| st15 | State living at age 15 |
| st30 | State living at age 30 |
| state | State currently living |
| stborn | State born |
| tagamet | Tagamet (antacid) use |
| talcum | Talcum powder use |
| tamox | Tamoxifen (a drug used in breast cancer treatment and prevention) |
| tb | Tuberculosis |
| tubal | Tubal ligation |
| tv | Time watching TV |
| vt | SF-36 vitality index |
| waist | Waist measurement |
| work | Working status |

3.1.2 Exploring the SNPs and Breast Cancer

In the first step, we learned a Bayesian Network from the SNP data plus a variable marking breast cancer cases or controls. We used all available data and imputed the missing values using the K-nearest neighbor method. This network is shown in Figure 2.
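The text does not specify the distance measure or the value of K used for imputation. As a minimal sketch only, the following assumes categorical genotype codes, a Hamming-style distance over the positions observed in both records, and a majority vote among the K nearest donors; the names `MISSING`, `hamming`, and `knn_impute` are hypothetical and are not taken from the software used in this study.

```python
from collections import Counter

MISSING = None  # hypothetical marker for a missing value


def hamming(a, b):
    """Fraction of mismatches over positions observed in both records."""
    shared = [(x, y) for x, y in zip(a, b)
              if x is not MISSING and y is not MISSING]
    if not shared:
        return float("inf")  # nothing to compare, treat as maximally distant
    return sum(x != y for x, y in shared) / len(shared)


def knn_impute(records, k=3):
    """Fill each missing value with the most common value among the k
    nearest records that have that column observed (assumed scheme)."""
    completed = [list(r) for r in records]
    for i, rec in enumerate(records):
        for j, value in enumerate(rec):
            if value is not MISSING:
                continue
            # Candidate donors: every other record with column j observed.
            donors = sorted(
                (hamming(rec, other), idx)
                for idx, other in enumerate(records)
                if idx != i and other[j] is not MISSING
            )
            votes = Counter(records[idx][j] for _, idx in donors[:k])
            if votes:
                completed[i][j] = votes.most_common(1)[0][0]
    return completed


# Toy genotypes (made-up values): the third record lacks its last SNP.
toy = [
    ["AA", "AG", "GG"],
    ["AA", "AG", "GG"],
    ["AA", "AG", MISSING],
    ["GG", "GG", "AA"],
    ["GG", "GG", "AA"],
]
filled = knn_impute(toy, k=2)
```

Distances are computed on the original records rather than on partially filled ones, so the result does not depend on the order in which the gaps are visited.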

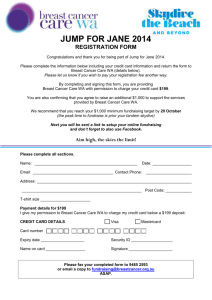

Figure 2 Bayesian Network of 12 SNPs and breast cancer

The Bayesian Network shown in Figure 2 did not reveal any dependencies between the SNPs and breast cancer: the Bayes factor (BF) of the model in which breast cancer has no dependent variable over the model in which it has one dependent variable is 97, which suggests with high confidence that there are no dependencies. The network did show dependencies among the SNPs from the same gene (atm1 through atm5, and the xrcc3 SNPs). We also tried removing all records with missing values instead of imputing them, but that result did not show any dependencies between the SNPs and breast cancer either.
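To illustrate how a Bayes factor of this kind compares a "no dependency" model with a "one dependency" model, the sketch below computes the marginal likelihood of a discrete variable with and without a single linked variable. It assumes a Dirichlet (BDeu) prior with an equivalent sample size of 1, which this section does not state, and it uses made-up toy data; it is not a reproduction of the value 97 reported above. The function names are hypothetical.

```python
from collections import Counter
from math import lgamma


def log_marginal_likelihood(child, parent=None, ess=1.0):
    """Log marginal likelihood of `child`, optionally conditioned on one
    parent, under an assumed BDeu prior with equivalent sample size `ess`."""
    if parent is None:
        parent = [0] * len(child)  # a single dummy parent configuration
    child_states = sorted(set(child))
    parent_states = sorted(set(parent))
    r, q = len(child_states), len(parent_states)
    a_jk = ess / (r * q)  # prior count per (parent state, child state)
    a_j = ess / q         # prior count per parent state
    counts = Counter(zip(parent, child))
    total = 0.0
    for p in parent_states:
        n_j = sum(counts[(p, c)] for c in child_states)
        total += lgamma(a_j) - lgamma(a_j + n_j)
        for c in child_states:
            total += lgamma(a_jk + counts[(p, c)]) - lgamma(a_jk)
    return total


def log_bf_independent_vs_dependent(child, parent, ess=1.0):
    """Log Bayes factor of 'child has no parent' over 'child depends on
    parent'. Positive values favor independence."""
    return (log_marginal_likelihood(child, None, ess)
            - log_marginal_likelihood(child, parent, ess))


# Toy data: a SNP with two genotype groups of 50 records each.
snp = [0] * 50 + [1] * 50
unrelated_status = ([0] * 25 + [1] * 25) * 2  # same case rate in both groups
linked_status = list(snp)                     # status fully determined by SNP

log_bf_unrelated = log_bf_independent_vs_dependent(unrelated_status, snp)
log_bf_linked = log_bf_independent_vs_dependent(linked_status, snp)
```

In this toy setting the log Bayes factor is positive when the case rate is identical across genotype groups and strongly negative when disease status follows the genotype exactly, which is the direction of evidence the paragraph above relies on.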

Considering that some breast cancers may not have a genetic cause, and that breast cancer genes generally have low penetrance, we removed from the data the breast cancer cases without a family history of breast cancer and the controls with a family history. We hoped to reduce the noise caused by the complexity of breast cancer as a hereditary disease with complex traits, and to increase the chance of finding a dependency between the SNPs and breast cancer, if there is any. After this selection, we obtained a subset with 152 cases and 1274 controls. The Bayesian Network learned from this subset has the same structure as in Figure 2, showing no dependencies between the SNPs and breast cancer.
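The selection rule described above can be written as a simple filter. The sketch below assumes that `case_ctrl` and `fhxbr` are coded 1 for "case" and "family history present" and 0 otherwise; the actual coding in the NHS files is not given in this section, and the function name is hypothetical.

```python
def enrich_for_family_history(records):
    """Keep cases with a family history and controls without one."""
    kept = []
    for rec in records:
        is_case = rec["case_ctrl"] == 1      # assumed coding: 1 = case
        has_history = rec["fhxbr"] == 1      # assumed coding: 1 = history
        if is_case == has_history:
            kept.append(rec)
    return kept


# Toy records (made-up values) covering the four possible combinations.
toy = [
    {"id": 1, "case_ctrl": 1, "fhxbr": 1},  # kept: case with history
    {"id": 2, "case_ctrl": 1, "fhxbr": 0},  # dropped: case without history
    {"id": 3, "case_ctrl": 0, "fhxbr": 1},  # dropped: control with history
    {"id": 4, "case_ctrl": 0, "fhxbr": 0},  # kept: control without history
]
subset = enrich_for_family_history(toy)
```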

Although the SNPs (or genes) alone appear to be independent of breast cancer in these data, there might be some clinical and life-style factors that can "turn on" these genes. To explore such possible interactions, we need to study the SNPs and the clinical and life-style variables together.

3.1.3 Exploring Clinical and Life-style Factors and SNPs

In the second step, we put the SNP data together with the clinical and life-style variables to construct a combined data set. This data set contains 96 variables: 12 SNPs and 84 clinical and life-style variables. Thirteen of the 97 clinical and life-style variables were not used, for various reasons. Some variables were not included because they were not applicable to this analysis, such as id and date of death (dtdth). Some variables were combined with other variables to reduce the search space, including pack-years (packyr) combined with smoking status, date of diagnosis (dtdx) combined with age of diagnosis, duration of oral contraceptive use (duroc) combined with oral contraceptive use, duration of post menopausal hormone use (durpmh) combined with post menopausal hormone use, and menopause status (mnp) combined with menopause type (mnty). Some variables are characteristics of breast cancer and were thus not included in the Bayesian Network learning, but were used to select cases in a later study. These include estrogen receptor (era), progesterone receptor (pra), in situ (insi), invasive (inva), and lymph node status (nod). One variable (kidbwt, birth weight of heaviest child) was removed because of too many missing values.
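The bookkeeping in the paragraph above can be checked with a few lines of code. This is only an illustrative sketch: the grouping labels and the helper `select_variables` are hypothetical, while the variable names are those listed in Table 1.

```python
# Exclusions as listed in the paragraph above, grouped by the stated reason.
EXCLUDED = {
    "not applicable": ["id", "dtdth"],
    "combined with another variable": ["packyr", "dtdx", "duroc",
                                       "durpmh", "mnp"],
    "breast cancer characteristic": ["era", "pra", "insi", "inva", "nod"],
    "too many missing values": ["kidbwt"],
}


def select_variables(snps, clinical, excluded=EXCLUDED):
    """Return the SNP columns followed by the clinical columns that are kept."""
    dropped = {name for names in excluded.values() for name in names}
    return list(snps) + [name for name in clinical if name not in dropped]


n_excluded = sum(len(names) for names in EXCLUDED.values())
print(n_excluded)            # 13 excluded clinical and life-style variables
print(12 + 97 - n_excluded)  # 96 variables in the combined data set
```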

We used all records and imputed the missing values using the K-nearest neighbor method. The variables were ordered so that closely related variables are grouped together, while we tried to keep the overall order consistent with temporal order, as in the example we discussed at the beginning of this chapter. The detailed order of variables is listed in Table 2.

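Table 2 Variable orders for exploring clinical and life-style factors and SNPs

| # | Variable | # | Variable | # | Variable | # | Variable |
|---|---|---|---|---|---|---|---|
| 1 | atm1 | 25 | marry | 49 | hdye | 73 | regpd |
| 2 | atm2 | 26 | husbedu | 50 | moles | 74 | amnp |
| 3 | atm3 | 27 | pasmk | 51 | kidsun | 75 | dmnp |
| 4 | atm4 | 28 | oprm | 52 | kidtan | 76 | mnty |
| 5 | atm5 | 29 | shift | 53 | bback | 77 | pmh |
| 6 | ephx1 | 30 | pctma | 54 | bface | 78 | hbp |
| 7 | ephx2 | 31 | pctfa | 55 | blimb | 79 | chol |
| 8 | vdrbsm1 | 32 | pct5 | 56 | ball | 80 | db |
| 9 | vdrfok1 | 33 | pct10 | 57 | asprin | 81 | tb |
| 10 | xrcc3466 | 34 | pct20 | 58 | tagamet | 82 | hrt |
| 11 | xrcc3471 | 35 | pct30 | 59 | talcum | 83 | frac |
| 12 | xrcc3472 | 36 | pct40 | 60 | tamox | 84 | bbd |
| 13 | race | 37 | pctnow | 61 | clomid | 85 | lungca |
| 14 | dadocu | 38 | height | 62 | des | 86 | ovca |
| 15 | stborn | 39 | waist | 63 | tubal | 87 | actmet |
| 16 | st15 | 40 | hip | 64 | ocuse | 88 | rp |
| 17 | st30 | 41 | bmi | 65 | parity | 89 | bp |
| 18 | state | 42 | sipos | 66 | agefb | 90 | vt |
| 19 | fhxbr | 43 | snore | 67 | brfd | 91 | sf |
| 20 | kpasmk | 44 | lipst | 68 | adep | 92 | re |
| 21 | bwt | 45 | brimp | 69 | work | 93 | mh |
| 22 | bfeed | 46 | agesmk | 70 | live | 94 | crown |
| 23 | hand | 47 | smkst | 71 | hsleep | 95 | agedx |
| 24 | edu | 48 | durmtv | 72 | tv | 96 | case_ctrl |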
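A variable order such as the one in Table 2 is commonly used to restrict structure search. The sketch below assumes the usual convention that a variable may only take parents that precede it in the order; whether the learning software used here applies exactly this rule is an assumption, and the helper names are hypothetical. Only four variables from Table 2 are shown, in their relative order.

```python
def candidate_parents(order):
    """Map each variable to the variables that precede it in the order."""
    return {var: order[:i] for i, var in enumerate(order)}


def respects_order(edges, order):
    """Check that every directed edge (parent, child) points forward."""
    position = {var: i for i, var in enumerate(order)}
    return all(position[parent] < position[child] for parent, child in edges)


# Four variables from Table 2, kept in their relative order (13, 19, 61, 96).
order = ["race", "fhxbr", "clomid", "case_ctrl"]
parents = candidate_parents(order)

print(parents["race"])       # [] : the first variable has no candidates
print(parents["case_ctrl"])  # ['race', 'fhxbr', 'clomid']
print(respects_order([("clomid", "case_ctrl")], order))  # True
print(respects_order([("case_ctrl", "clomid")], order))  # False
```

Placing the case/control indicator last means that, under this assumed convention, every other variable is a candidate parent of breast cancer status, while breast cancer status cannot be a parent of any of them.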

The Bayesian Network learned from these 96 variables is shown in Figure 3. The majority of the