NEURONAL NETWORKS AND CONNECTIONIST (PDP) MODELS “Law of Effect” Hebb’s “reverberatory circuits”

advertisement

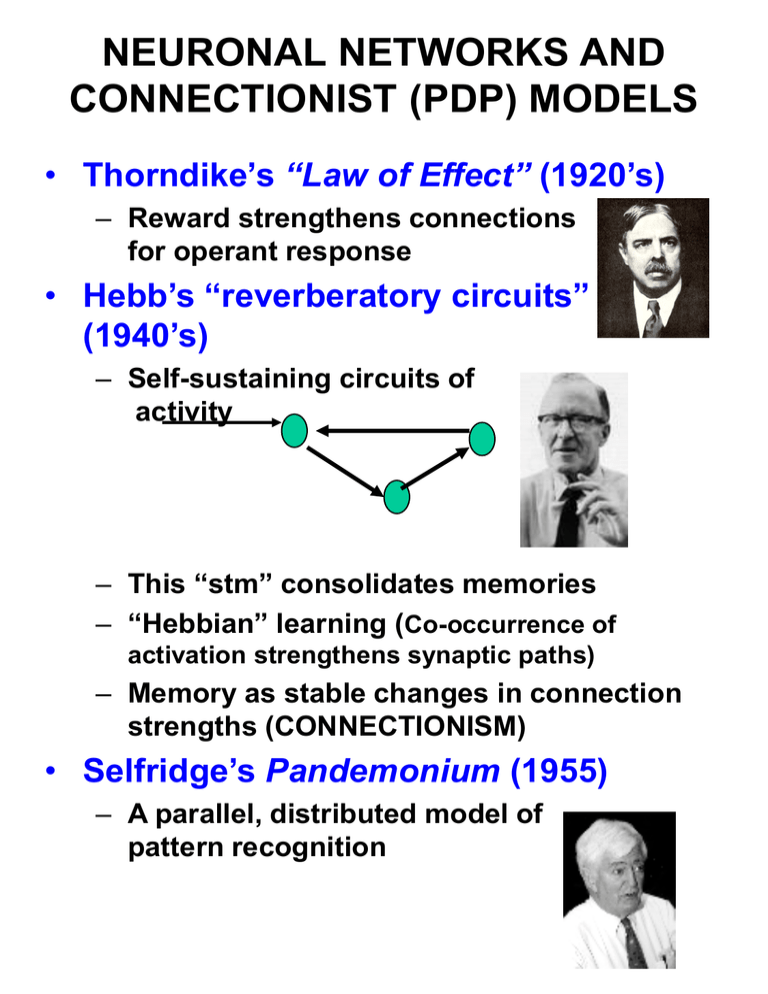

NEURONAL NETWORKS AND CONNECTIONIST (PDP) MODELS • Thorndike’s “Law of Effect” (1920’s) – Reward strengthens connections for operant response • Hebb’s “reverberatory circuits” (1940’s) – Self-sustaining circuits of activity – This “stm” consolidates memories – “Hebbian” learning (Co-occurrence of activation strengthens synaptic paths) – Memory as stable changes in connection strengths (CONNECTIONISM) • Selfridge’s Pandemonium (1955) – A parallel, distributed model of pattern recognition Selfridge’s Pandemonium Model of Letter Recognition • Rosenblatt’s Perceptrons (1962) – Simple PDP model that could learn – Limited to certain kinds of associations • Hinton & Anderson (1981) – PDP model of associative memory – Solves the “XOR” problem with hidden layers of units – Adds powerful new learning algorithms • McClelland & Rumelhart (1986) – Explorations in the Microstructure of Cognition – Shows power and scope of PDP approach – Emergence of “subsymbolic” models and brain metaphor – PDP modeling explodes A symbolic-level network model (McClelland, 1981) • The Jets and Sharks model – Parallel connections and weights between units – Excitatory and inhibitory connections – Retrieval, not encoding – “units” still symbolic A PDP TUTORIAL • A PDP model consists of – A set of processing units (neurons) – Usually arranged in “layers” – Connections from each unit in one layer to ALL units of the next Input Hidden Output – Each unit has an activity level – Knowledge as distributed patterns of activity at input and output – Connections vary in strength – And may be excitatory or inhibitory input -.5 -.5 output +.5 +.5 – Input to unit k = sum of weighted connections – for each connection from layer j: input from (j) = activity(j) x weight(j to k) j2 j1 +1 +1 -.5 -.5 +.5 +.5 -1 k1 +1 k1 – The same net can associate a large number of distinct I/O patterns -1 +1 0 -.5 -.5 +.5 +.5 0 – The I/O function of units can be linear or nonlinear threshold – Hidden layers add power and mystery e.g., solving the XOR problem: +1 +1 +1 +1 +1 +1 threshold +1 -2 +1 LEARNING IN PDP MODELS • Learning algorithms – Rules for adjusting weights during “training” • The Hebbian Rule – Co-activation strengthens connection – Change (jk) = activation (j) x activation (k) • The Delta Rule – Weights adjusted by amount of error compared to desired output – Change (jk) = activation (j) x r[ error (k)] • Backpropagation – Delta rule adjustments cascade backward through hidden unit layers EVALUATION OF PDP MODELS • Wide range of successes of learning – – – – XOR and other “nonlinear” problems Text-to-speech associations Concepts and stereotypes Grammatical “rules” and exceptions • Powerful and intuitive framework – At least some “biological face validity” • Parallel processing • Distributed networks for memory • Graceful degradation • complex behavior emerges from “simple” system • Limitations and issues – Too many “degrees of freedom”? – Learning even “simple” associations requires thousands of cycles – Some processes (e.g., backpropagation) have no known neural correlate • (yes, but massive feedback and “recurrent” loops in cortex) – Some failures to model human behavior • Pronouncing pseudohomophones • Abruptness of stages in verb learning • Catastrophic retroactive interference – Difficulty in modelling “serially ordered” and “rule-based” behavior – Need for processes outside of model