Technical Report North Carolina NCEXTEND1

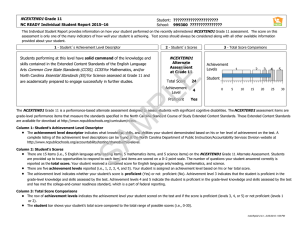

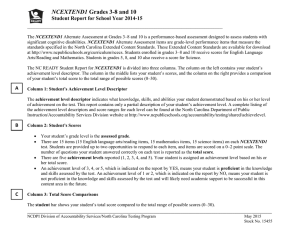
