Stat 534 Fall 2015 Homework # 1, PMD answers

advertisement

Stat 534

Fall 2015

Homework # 1, PMD answers

Problem 1. cottontail rabbits. R code for all parts at end.

1. Estimate the population size and capture probability under model M0.

N̂ = 79.40, p̂ = 0.294

2. Estimate the population size and capture probabilities under model Mt. N̂ = 79.25, p̂ =

0.227, 0.290, 0.353, 0.278, 0.315, 0.303

3. Estimate the population size and capture probabilities under model Mb. N̂ = 81.23, ĉ =

0.276, r̂ = 0.299

4. Which of these three models is the most appropriate for these data? Briefly explain your

choice.

M0 is the most appropriate: it has the smallest AIC and BIC statistics. You could also

conduct LR tests to compare Mt to M0 and Mb to M0. Neither supports the alternative

model over M0.

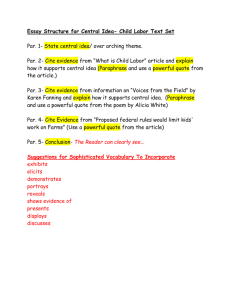

Model

M0

Mt

Mb

Deviance AIC BIC

62.33 66.33 71.84

58.93 72.93 92.21

62.20 68.20 76.46

Note: I used the number of releases, not counting those caught at time 6, = 116 in my

BIC computation.

5. Using the most appropriate model, calculate 99% confidence intervals using Wald’s method

and the profile method.

√

The se of N̂ for model M0 is 17.56 = 4.19, so the Wald interval is 79.4 ± 2.576 ∗ 4.19

= (68.6, 90.2). The profile interval is (71.4, 93.7), or (71,94) if you look for the integer

endpoints for which coverage is at least 99%.

6. These two confidence intervals are somewhat different. Using these data or quantities

derived from these data, explain why they differ.

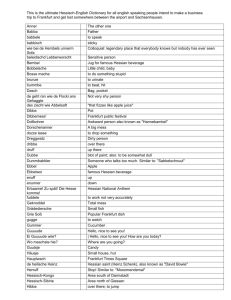

The profile likelihood trace (lnL as a function of N) explains why. The Wald interval is

based on a quadratic approximation to the profile lnL. The actual curve is most definitely

not a symmetric quadratic.

1

−32

−40

Profile log likelihood

−38

−36

−34

70

75

80

85

N

90

95

100

7. Calculate the model averaged estimate of the population size and its standard error.

There are many possible ways to do this. My question would have been better written had

it asked you calculate a model averaged estimate. Even using the Buckland et al. approach,

you could choose AIC or BIC to compute the model weights. Using AIC weights, I got

N̂ave = 79.9 with se = 5.1. The details are:

Model N̂

se

AIC ∆AIC Weight: wi

M0

79.40 4.19 66.33 0

0.699

Mt

79.25 4.15 72.93 6.60

0.026

Mb

81.23 7.29 68.20 1.86

0.275

So N̂ave =

P

wi N̂i = 79.9 and se N̂ave ≈

P

q

wi se2i + (N̂i − 79.9)2 = 5.10.

8. Describe a reasonable method to assess whether a particular model actually fits the data.

You don’t need to carry out that assessment.

Various possible answers. Basically, you need compare some feature of the data to what

is expected if the model fits the data. The usual approach is to compare occasion-specific

counts or counts of newly-seen individuals.

PMD’s R code for problem 1:

# you have lnlM0 and lnlMt functions.

Here’s my lnlMb function:

lnlMb <- function(param, data) {

t <- data[1]

Mt1 <- data[2]

Mdot <- data[3]

mdot <- data[4]

N <- param[1]

p <- param[2]

# initial capture prob.

2

c <- param[3]

# recapture prob.

lfactorial(N) - lfactorial(N-Mt1) + Mt1*log(p) + (t*N-Mdot-Mt1)*log(1-p) +

mdot*log(c)+(Mdot-mdot)*log(1-c)

}

# define relevant data,

# really only need sums for m and M, but I find it easier to check data entry

#

when you enter the vector

rabbit.Mt1 <- 70

rabbit.n <- c(18, 23, 28, 22, 25, 24)

rabbit.m <- c( 0, 7, 10, 12, 19, 22)

rabbit.M <- c( 0, 18, 34, 52, 62, 68)

rabbitMb <- c(6, rabbit.Mt1, sum(rabbit.M), sum(rabbit.m))

rabbitM0 <- c(6, rabbit.Mt1, sum(rabbit.n))

rabbitMt <- c(6, rabbit.Mt1, rabbit.n)

rabbitM0.mle <- optim(c(100, 0.4), lnlM0, method=’BFGS’,

hessian=T, data=rabbitM0, control=list(fnscale=-1))

vc <- solve(-rabbitM0.mle$hessian)

sqrt(diag(vc))

rabbitMt.mle <- optim(c(100, rep(0.4, 6)), lnlMt, method=’BFGS’,

hessian=T, data=rabbitMt, control=list(fnscale=-1))

vc <- solve(-rabbitM0.mle$hessian)

sqrt(diag(vc))

rabbitMb.mle <- optim(c(72, 0.4, 0.4), lnlMb, method=’BFGS’,

hessian=T, data=rabbitMb, control=list(fnscale=-1))

vc <- solve(-rabbitMb.mle$hessian)

sqrt(diag(vc))

# answers for questions 1-3:

rabbitM0.mle$par

rabbitMt.mle$par

rabbitMb.mle$par

# function to compute AIC and BIC from fitting one of the Mx models

# also need to provide sample size (# releases for Mx models)

AIC.M <- function(l, n=NA) {

3

p <- length(l$par)

# number of parameters

dev <- -2*l$value

aic <- dev + 2*p

bic <- dev + log(n)*p # calculate if n provided

c(Dev = dev, AIC=aic, BIC=bic)

}

Nrelease <- sum(rev(rabbit.n)[-1]) # number of releases, not including last

round( rbind(M0 = AIC.M(rabbitM0.mle, Nrelease),

Mt = AIC.M(rabbitMt.mle, Nrelease),

Mb = AIC.M(rabbitMb.mle, Nrelease) ), 2)

# calculating and plotting the profile lnL for model M0

N <- 70:110

lnlN <- rep(NA, length(N))

for (i in 1:length(N)) {

lnlN[i] <- lnlM0p(N[i], rabbitM0)

}

plot(N, lnlN)

# Finding the 99% profile ci graphically

abline(h=max(lnlN) - qchisq(0.99,1)/2)

# 0.99 quantile of a Chisq 1 is 6.634; draw a horizontal line at 6.634/2

N[identify(N, lnlN)]

# use identify() to find the two values of N just below the horiz. line

# finding the 99% profile ci using uniroot()

profileN <- function(N, lnlMax, data, coverage) {

# function that returns 0 when profile lnL crosses CI endpoint

lnlMax - lnlM0p(N, data) - qchisq(coverage, 1)/2

}

# search from Mt+1 to mle of N, gives lower bound

uniroot(profileN, c(70, 81), lnlMax=rabbitM0.mle$value, data=rabbitM0, coverage=0.99)

# search from mle to much larger value, gives upper bound

uniroot(profileN, c(81, 120), lnlMax=rabbitM0.mle$value, data=rabbitM0, coverage=0.99)

# finding the Wald 99% ci. Get the se’s for each parameter

vc <- solve(-rabbitM0.mle$hessian)

rabbitM0.se <- sqrt(diag(vc))

# and calculate interval

rabbitM0.mle$par[1] + c(-1,1)*qnorm(0.995)*rabbitM0.se[1]

selogN <- 5.29/83.19

exp(log(83.19) + c(-1,1)*2.576*selogN)

4

Problem 2. We have discussed model Mt with time varying parameters and model Mb with

behavioural heterogeneity. These can be combined. The general form of model Mtb allows both

P[capture]’s to vary by trapping occasion. That is, for each of the i trapping occasions, P[capture

| not previously caught] = ci and P[capture | has been previously caught] = ri . Consider three

capture occasions (i.e. a total of 8 possible capture histories).

1. How many parameters are in the general Model Mtb for 3 capture occasions?

6 parameters: N , c1 , c2 , c3 , r2 , and r3 .

Note: no r1 because no previously caught animals at time 1

2. Write out the 8 possible capture histories and their associated probabilities.

I’ve added a data column to clarify my notation for the next part. The 8 histories are:

Caught at

#

1 2 3

Caught

P[history]

Y Y Y

x1

c1 r2 r3

Y Y N

x2

c1 r2 (1 − r3 )

Y N Y

x3

c1 (1 − r2 ) r3

Y N N

x4

c1 (1 − r2 ) (1 − r3 )

N Y Y

x5

(1 − c1 ) c2 r3

N Y N

x6

(1 − c1 ) c2 (1 − r3 )

N N Y

x7

(1 − c1 ) (1 − c2 ) c3

N N N N − Mt+1 (1 − c1 ) (1 − c2 ) (1 − c3 )

3. Write out the log-likelihood function for a multinomial model using 8 possible capture

histories. Combine terms wherever possible.

The loglikelihood before simplification is:

lnL = log N ! − constant − log(N − Mt+1 )! + x1 log(c1 r2 r3 ) + x2 log(c1 r2 (1 − r3 )) +

x3 log(c1 (1 − r2 )r3 ) + x4 log(c1 (1 − r2 ) (1 − r3 )) + x5 log((1 − c1 )c2 r3 ) +

x6 log((1 − c1 ) c2 (1 − r3 )) + x7 log((1 − c1 )(1 − c2 )c3 ) +

(N − Mt+1 ) log((1 − c1 ) (1−2 ) (1 − c3 ))

Collecting terms for each parameter gives:

lnL = log N ! − constant − log(N − Mt+1 )! +

(x1 + x2 + x3 + x4 ) log c1 + (x5 + x6 + x7 + N − Mt+1 ) log(1 − c1 ) +

(x1 + x2 + x5 + x6 ) log c2 + (x3 + x4 + x7 + N − Mt+1 ) log(1 − c2 ) +

(x1 + x3 + x5 + x7 ) log c3 + (x2 + x4 + x6 + N − Mt+1 ) log(1 − c3 ) +

(x1 + x2 ) log r2 + (x3 + x4 ) log(1 − r2 ) +

(x1 + x3 + x5 ) log r3 + (x2 + x4 + x6 ) log(1 − r3 )

4. Use the simplified expression of the log-likelihood to determine the sufficient statistics.

How many sufficient statistics are there?

On the surface it looks like there are 11 sufficient statistics:

Mt+1 = (x1 + x2 + x3 + x4 + x5 + x6 + x7 ), (x1 + x2 + x3 + x4 ), (x5 + x6 + x7 − Mt+1 ),

(x1 + x2 + x5 + x6 ), (x3 + x4 + x7 − Mt+1 ), (x1 + x3 + x5 + x7 ), (x2 + x4 + x6 − Mt+1 ),

(x1 + x2 ), (x3 + x4 ), (x1 + x3 + x5 ), and (x2 + x4 + x6 ).

Using the rank of the matrix of coefficients, you see there are 5 sufficient statistics.

5

5. Are the parameters in the general Mbt identifiable?

No, there are more parameters (6) than sufficient statistics (5).

One simplification of the general Mtb model is assume the initial capture probability differs

among occasions, but the recapture probability is the same at all times. That is, for each of the

i trapping occasions, P[capture | not previously caught] = ci and P[capture | has been previously

caught] = r. Again consider three capture occasions.

7. What are the sufficient statistics for the “simplified” Mtb ?

You don’t have to redo a lot of the work because the common r replaces r2 and r3 (remember there is no r1 ). The simplified log likelihood from above becomes:

lnL = log N ! − constant − log(N − Mt+1 )! +

(x1 + x2 + x3 + x4 ) log c1 + (x5 + x6 + x7 + N − Mt+1 ) log(1 − c1 ) +

(x1 + x2 + x5 + x6 ) log c2 + (x3 + x4 + x7 + N − Mt+1 ) log(1 − c2 ) +

(x1 + x3 + x5 + x7 ) log c3 + (x2 + x4 + x6 + N − Mt+1 ) log(1 − c3 ) +

(2 x1 + x2 + x3 + x5 ) log r + (x2 + x3 + 2 x4 + x6 ) log(1 − r)

Using the rank of the matrix of coefficients, you see there are still 5 sufficient statistics.

8. Are the parameters in the “simplified” Mtb identifiable? Explain why or why not.

Yes - there are five sufficient statistics, which is sufficient to estimate five parameters.

PMD’s R code:

# original Mtb model

C <- rbind(c(1,1,1,1,1,1,1),

c(1,1,1,1,0,0,0), c(0,0,0,0,1,1,1),

c(1,1,0,0,1,1,0), c(0,0,1,1,0,0,1),

c(1,0,1,0,1,0,1), c(0,1,0,1,0,1,0),

c(1,1,0,0,0,0,0), c(0,0,1,1,0,0,0),

c(1,0,1,0,1,0,0), c(0,1,0,1,0,1,0) )

qr(C)$rank

# or:

round(svd(C)$d, 5)

# simplified Mtb model

C2 <- rbind(c(1,1,1,1,1,1,1),

c(1,1,1,1,0,0,0), c(0,0,0,0,1,1,1),

c(1,1,0,0,1,1,0), c(0,0,1,1,0,0,1),

c(1,0,1,0,1,0,1), c(0,1,0,1,0,1,0),

c(2,1,1,0,1,0,0), c(0,1,1,2,0,1,0) )

6

qr(C2)$rank

# or:

round(svd(C2)$d, 5)

Problem 3. Dippers. The investigators are interested in:

a) The number of dippers in the study area

b) Whether the sex ratio deviates from 1:1, and

c) Whether the capture probability or probabilities are the same for males and females.

This is an open-ended data problem, so there were many possible ways to approach it.

How I would examine each of the investigator’s questions:

Estimating the number of dippers: estimate Nmale and Nf emale and add.

Whether data are consistent with a sex ratio of 1:1:

Simplest approach is a Wald test of Nmale − Nf emale = 0.

Could also construct a likelihood ratio test, but that would involve fitting both male and

female data with a common N .

Comparing capture probabilities: Same ideas as above

My expectations:

Fit the basic Otis models (M0 , Mt , and Mb ) to the male and female capture histories.

Choose an appropriate model (or model average)

Answer the investigator’s questions.

Organize your answers as given in the homework guidelines

An extremely good answer might have:

a profile likelihood ci for N̂

a discussion of model averaging for N̂ . In this case, one model dominates.

Some comments after reading your work:

1. A number of folks (almost all if I remember correctly) evaluated the 1:1 sex ratio question

by comparing the CI’s for Nmale and Nf emale . The logic was that if the CI’s do not

overlap, then Nmales is significantly different from Nf emales but if they do overlap, there

is no evidence of a difference (and hence the sex ratio is consistent with 1:1). I wrote

“conservative” on your papers but did not take off points.

This comparison of CI’s is conservative in the sense that overlap or non-overlap of 95%

intervals corresponds to a test with an α-level of quite a bit less than 0.05. Easiest way

to see this is for estimates that are normally distributed with the same standard error.

The 95% intervals are µ1 ± 1.96se and µ2 ± 1.96se. Those intervals just touch when

µ1 − 1.96se

√ = µ2 + 1.96se, i.e., when µ1 − µ2 = 2 × 1.96se. The se of the difference is

sedif f = 2se, so the intervals just touch when

µ1 − µ2

2 × 1.96 √

= √

= 21.96 = 2.77

sedif f

2

. When the t-statistic for the test of µ1 = µ2 = 2.77, the p-value is 0.0056. That’s very

different from the implied 5% and very conservative.

7

Very common approach, though.

2. You have all the pieces to do Wald tests, both of equal N ’s and the 4 df F test that all p’s

are the same for males and females. All you need are the estimates and their VC matrices

for males and for females. My code below implements those computations.

3. Doing LRT’s will be very much harder because it requires fitting models with parameters

for male- and female-specific parameters.

4. As I mentioned in class, MARK gave a different total population size than did handwritten likelihood functions. That was even after combining male and female counts, so

the model uses the same capture probabilities for males and females. It did give the same

numbers when fitting just the males or just the females. I don’t know why there was a

difference.

5. I saw all sorts of different ci’s for N̂ . There isn’t one “right” ci because there are many

different ways it could be computed. Almost no-one said how the CI was computed.

(e.g., Wald on N̂ , Wald on log N̂ , profile on N̂ , computed by adding males and females,

computed by lumping all data, and the options go on). I recognized some answers because

they matched some obvious computations. I had absolutely no idea how some other ci’s

were obtained.

The lesson to be learned: Report how you did all the computations. Especially when there

isn’t a single standard way to compute something.

# PMD dipper code

# uses lnl functions used in problem 1

# and the summstat function defined below that computes

# summary statistics from the capture history

summstats <- function(H, count) {

# compute summary statistics given capture history matrix H

#

with counts in count

nhist <- dim(H)[1]

nocc <- dim(H)[2]

# number of capture histories

# number of trapping occasions

Mt1 <- sum(count)

n <- apply(H*count, 2, sum)

# to get m (marked caught), compute prevmark = 1

#

if that capture hist was marked prior to occasion i

H0 <- as.matrix(cbind(rep(0, nhist), H))

prevmark <- matrix(NA, nrow=nhist, ncol=nocc)

for (i in 2:nocc) {

prevmark[,i] <- (apply(H0[,1:i], 1, sum) > 0) + 0

8

}

prevmark[,1] <- rep(0, nhist)

m <- apply(H*count*prevmark, 2, sum)

M <- apply(prevmark*count, 2, sum)

list(t = nocc, Mt1 = Mt1, n = n, m=m, ndot=sum(n), mdot=sum(m), Mdot = sum(M), M=M)

}

dipper <- read.csv(’dipper.csv’)

mstats <- summstats(dipper[,1:4], dipper$m)

fstats <- summstats(dipper[,1:4], dipper$f)

ostats <- summstats(dipper[,1:4], dipper$f+dipper$m)

female0 <- optim(c(90, 0.4), lnlM0, control=list(fnscale=-1), method=’BFGS’,

data=c(4, fstats$Mt1, fstats$ndot), hessian=T)

male0 <- optim(c(90, 0.4), lnlM0, control=list(fnscale=-1), method=’BFGS’,

data=c(4, mstats$Mt1, mstats$ndot), hessian=T)

femalet <- optim(c(90, rep(0.4, 4)), lnlMt, control=list(fnscale=-1), method=’BFGS’,

data=c(4, fstats$Mt1, fstats$n), hessian=T)

malet <- optim(c(70, rep(0.4, 4)), lnlMt, control=list(fnscale=-1), method=’BFGS’,

data=c(4, mstats$Mt1, mstats$n), hessian=T)

femaleb <- optim(c(90, rep(0.4, 2)), lnlMb, control=list(fnscale=-1), method=’BFGS’,

data=c(4, fstats$Mt1, fstats$Mdot, fstats$mdot), hessian=T)

maleb <- optim(c(70, rep(0.4, 2)), lnlMb, control=list(fnscale=-1), method=’BFGS’,

data=c(4, mstats$Mt1, mstats$Mdot, mstats$mdot), hessian=T)

femalet$par

sqrt(diag(solve(-femalet$hessian)) )

malet$par

sqrt(diag(solve(-malet$hessian)) )

malet$par+femalet$par

sqrt(diag(solve(-femalet$hessian)) + diag(solve(-malet$hessian)) )

# comparison of N and capture probabilities

vmale <- diag(solve(-malet$hessian))

vfemale <- diag(solve(-femalet$hessian))

sediff <- sqrt(vmale + vfemale)

(femalet$par - malet$par)/sediff

9

# comparison of logN

vmalelog <- vmale / malet$par^2

vfemalelog <- vfemale / femalet$par^2

(log(malet$par[1]) - log(femalet$par[1]))/sqrt(vmalelog[1] + vfemalelog[1])

(log(malet$par[1]) - log(femalet$par[1])) + c(-1.96,1.96)*sqrt(vmalelog[1] + vfemalelog[1])

# comparison of capture probabilities

# use a quadratic form to do the 4 df F test of equal male and female

#

probabilities

VCd <- solve(-femalet$hessian) + solve(-malet$hessian)

VCd <- VCd[2:5,2:5] # VC matrix for difference at each time

d <- matrix(femalet$par - malet$par)[2:5]

t(d) %*% solve(VCd) %*% d

# obs. differences

Problem 4. No one answered this question, so I haven’t written a solution.

10