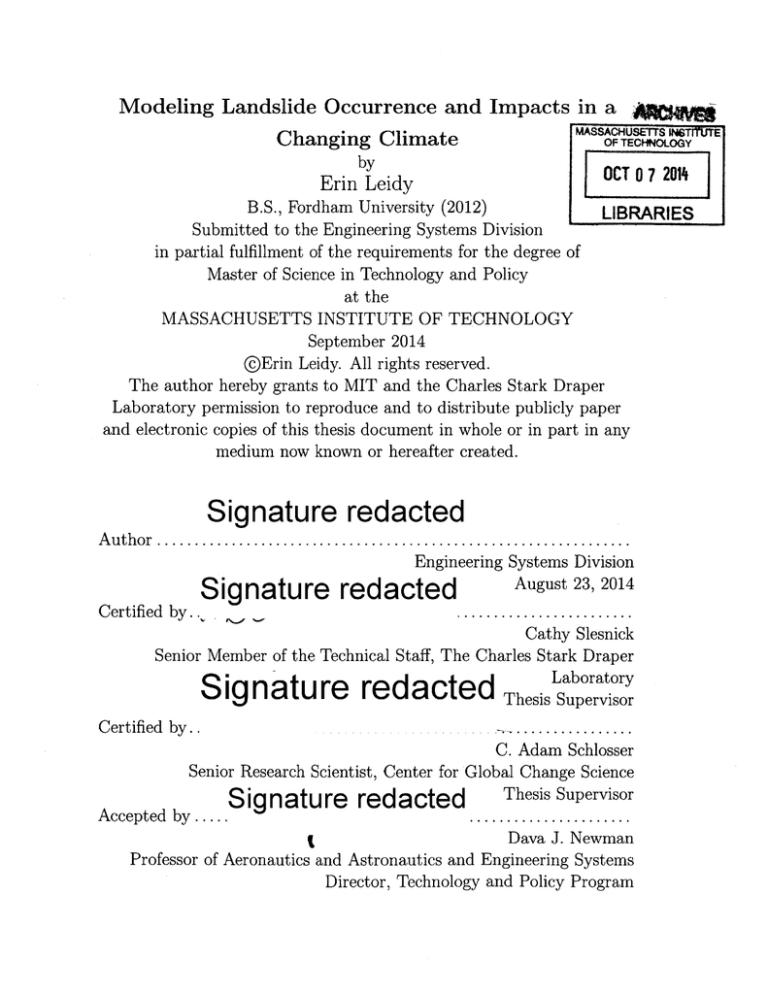

Modeling Landslide Occurrence and Impacts in a Changing Climate

by

Erin Leidy

B.S., Fordham University (2012)

Submitted to the Engineering Systems Division

in partial fulfillment of the requirements for the degree of

Master of Science in Technology and Policy

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

September 2014

© Erin Leidy. All rights reserved.

The author hereby grants to MIT and the Charles Stark Draper

Laboratory permission to reproduce and to distribute publicly paper

and electronic copies of this thesis document in whole or in part in any

medium now known or hereafter created.

Author: Signature redacted
Engineering Systems Division
August 23, 2014

Certified by: Signature redacted
Cathy Slesnick
Senior Member of the Technical Staff, The Charles Stark Draper Laboratory
Thesis Supervisor

Certified by: Signature redacted
C. Adam Schlosser
Senior Research Scientist, Center for Global Change Science
Thesis Supervisor

Accepted by: Signature redacted
Dava J. Newman
Professor of Aeronautics and Astronautics and Engineering Systems
Director, Technology and Policy Program


Modeling Landslide Occurrence and Impacts in a Changing

Climate

by

Erin Leidy

Submitted to the Engineering Systems Division

on August 23, 2014, in partial fulfillment of the

requirements for the degree of

Master of Science in Technology and Policy

Abstract

In the coming years and decades, shifts in weather, population, land use, and other human factors are expected to have an impact on the occurrence and severity of landslides. A landslide inventory database from Switzerland is used to perform two types of analysis. The first presents a proof of concept for an analogue method of detecting changes in the frequency of landslide activity under future climate change conditions. Instead of relying on modeled precipitation, it uses composites of atmospheric variables to identify the conditions that are associated with days on which a landslide occurred. The analogues are compared to relevant meteorological variables in MERRA reanalysis data to achieve a success rate of over 50% in matching observed landslide days within 7 days. The second analysis explores the effectiveness of machine learning as a technique to evaluate the likelihood that a slide will cause high damage. The algorithm is tuned to accommodate unbalanced data, extraneous variables, and variance in voting to achieve the best predictive success. This method provides an efficient way of calculating vulnerability and identifying the spatial and temporal factors which influence it. The results are able to identify high damage landslides with a success rate of upwards of 70%. A machine-learning based model has the potential for use as a policy tool to identify areas of high risk.

Thesis Supervisor: Cathy Slesnick

Title: Senior Member of the Technical Staff, The Charles Stark Draper Laboratory

Thesis Supervisor: C. Adam Schlosser

Title: Senior Research Scientist, Center for Global Change Science


Acknowledgments

Numerous thanks to the many who have provided support, encouragement, and advice throughout the writing of this thesis and my time at MIT.

First, my advisors, Dr. Cathy Slesnick and Dr. Adam Schlosser, and also to Dr. Natalya Markuzon, for their consistent guidance and support throughout this project.

Their expertise and advice have been vital to the learning experience that this research has been.

I would like to thank my friends and classmates in the Technology and Policy Program at MIT, for consistently being a source of inspiration and support. You are all

amazing and have been a highlight of my time in Boston. I will treasure the memories.

Final thanks to my family, without whose encouragement I would certainly not be

where I am now. My appreciation of their unwavering support and love is immeasurable.

This thesis was prepared at the Charles Stark Draper Laboratory, Inc., under Project

24254-001, IDS.


Contents

0.1 Introduction

1 An Analogue Method to Detecting Landslide Response to Climate Change: Proof of Concept

1.1 Background

1.2 Methodology

1.3 Data

1.3.1 Observed Landslides

1.3.2 NASA-MERRA

1.3.3 Data Processing

1.4 Application

1.4.1 Creating the Composites

1.4.2 Analogue Determination

1.4.3 Success Rate

1.5 Discussion and Future Work

2 Modeling the Damage Incurred by Landslides

2.1 Background

2.1.1 Vulnerability Analysis

2.1.2 Machine Learning Approach

2.1.3 Random Forest Algorithm

2.2 Methodology

2.3 Data

2.3.1 The Swiss Flood and Landslide Database

2.3.2 NASA SEDAC

2.3.3 GDP

2.3.4 Weather Data

2.3.5 Transportation Data

2.3.6 Buildings

2.3.7 Land Cover

2.4 Application

2.4.1 Determining Important Variables

2.4.2 Unbalanced Data

2.4.3 Voting

2.4.4 Separation into Seasons

2.5 Results

2.6 Discussion and Future Work

3 Using Landslide Risk Models in a Policy Context: Best Practice and Recommendations

3.1 Rationale for Using a Model of Vulnerability

3.2 Potential Uses

3.3 Model Application

A Damage Modeling in Oregon

A.0.1 Data

A.0.2 Modeling

A.0.3 Results

B Variable Importance Results

B.0.4 KS-Test results

B.0.5 Sensitivity analysis results, removing one variable at a time.

B.0.6 Sensitivity analysis results, removing all of one type of variable, and adding in one variable individually.

List of Figures

1-1 Number of landslides in Switzerland per month, period 1979-2012.

1-2 Swiss landslides by date, 1979-2012.

1-3 Composites of all Swiss DJF slide dates. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

1-4 Composites of all Swiss JJA slide dates. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

1-5 Composites of all Swiss DJF slide dates with 2-day period. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

1-6 Composites of all Swiss DJF slide dates with 5-day period. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

1-7 Composites of all Swiss DJF slide dates with 7-day period. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

1-8 Composites of all Swiss DJF slide dates with 10-day period. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

1-9 Composites of all Swiss DJF slide dates with 14-day period. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

1-10 Composites of all Swiss DJF slide dates with 30-day period. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

1-11 Peak Standardized Anomaly of Composite Z500 for Various Time Spans. The trough (negative anomaly, here labeled as min), and ridge (positive anomaly, here labeled as max) are both variables of interest and so are included.

1-12 Peak Standardized Anomaly of Composite w500 for Various Time Spans.

1-13 Peak Standardized Anomaly of Composite TPW for Various Time Spans.

1-14 Composites of all Swiss DJF slides that incurred high damage. The colors of a) are the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

1-15 Composites of all Swiss DJF slides that incurred low damage. The colors of a) are the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

1-16 Spatial Correlation of Z500.

1-17 Density Plot of Z500 spatial correlation.

1-18 Spatial Correlation of w500.

1-19 Density Plot of w500 spatial correlation.

1-20 Spatial Correlation of TPW.

1-21 Density Plot of TPW spatial correlation.

2-1 Depiction of the steps to create a supervised classification model. b) Prediction is the same as testing. (Bird et al. 2009)

2-2 Structure of a decision tree. (Safavian 1991)

2-3 All Swiss landslides used in modeling. Red dots indicate high damage, yellow are medium damage, and green are low damage.

2-4 This figure displays the count of all slides that were included and excluded, separated by the amount of damage.

2-5 Modeled slides, separated by canton/GDP and damage level.

2-6 Distribution of land cover for all Swiss slides used in modeling.

2-7 Features of the 30 Day Rain Distribution. Red is the high damage landslides, blue is the low damage landslides.

2-8 Features of the 4 Day Rain Distribution. Red is the high damage landslides, blue is the low damage landslides.

2-9 Features of the Population Density Distribution. Red is the high damage landslides, blue is the low damage landslides.

2-10 Features of the Length of Road in 2 km Distribution. Red is the high damage landslides, blue is the low damage landslides.

2-11 Correlation of all Anthropogenic Variables. The larger the circle, the higher the absolute value of the correlation. Blue indicates positive correlation, red indicates negative. An X indicates an insignificant correlation. Key is located to the right.

2-12 Correlation plot of all Rain Variables. The larger the circle, the higher the absolute value of the correlation. Blue indicates positive correlation, red indicates negative. An X indicates an insignificant correlation.

2-13 Correlation plot of all Pressure Variables. The larger the circle, the higher the absolute value of the correlation. Blue indicates positive correlation, red indicates negative. An X indicates an insignificant correlation.

2-14 Correlation plot of all Temperature Variables. The larger the circle, the higher the absolute value of the correlation. Blue indicates positive correlation, red indicates negative. An X indicates an insignificant correlation.

2-15 Combined rank of variables, from results of sensitivity analysis (red), and KS-test (blue). Rank determined by the test results, lowest number being the most significant variable.

2-16 Model accuracy by number of variables, fit with a LOESS (Locally weighted scatterplot smoothing) curve.

2-17 ROC curve, Undersampled data

2-18 ROC curve, Oversampled Data

2-19 ROC curve, SMOTE

2-20 ROC curve, CNN

2-21 ROC curve, ENN

2-22 ROC curve, Tomek Links

2-23 ROC curve, NCR

2-24 ROC curve, OSS

2-25 Results of balancing training data

2-26 Density plot of the voting results, with SMOTE data. The red line shows high damage slides, the green line shows low damage instances.

2-27 Density plot of the voting results, with undersampled data. The red line shows high damage slides, the green line shows low damage instances.

2-28 Variance of voting results for data that has been balanced with SMOTE

2-29 Variance of voting results for data that has been undersampled

2-30 Summary of voting techniques

2-31 Swiss landslides separated by season.

2-32 Summary of season-separated results

2-33 Summary of best results on JJA slides. Tuning methods include separating the seasons, reducing the variables, using the mean vote, and balancing the training data.

A-1 Location of slides in Oregon, mapped with the highway system. Green points do not contain records of damage, red points do.

A-2 Type of damage caused by landslides in the SLIDO records

A-3 Length of detours caused by Oregon landslides.

A-4 Distribution of values of direct damage for Oregon slides, measured on an intensity scale.

A-5 Distribution of total damage for Oregon slides, measured on an intensity scale.

A-6 Distribution of dollar amount of Oregon landslide damage, plotted on a logarithmic scale.

A-7 Location of slides in Oregon, separated by amount of damage, as determined in Figure A-6.

A-8 Distribution of land cover for Oregon slides.

A-9 Distribution of population density for Oregon slides. The red line is high damage slides, the blue line is low damage slides.

A-10 Distribution of distance to nearest highway for Oregon slides. The red line is high damage slides, the blue line is low damage slides.

List of Tables

2.1 Confusion Matrix, Undersampled Data

2.2 Confusion Matrix, Oversampled Data

2.3 Confusion Matrix, SMOTE

2.4 Confusion Matrix, CNN

2.5 Confusion Matrix, ENN

2.6 Confusion Matrix, Tomek Links

2.7 Confusion Matrix, NCR

2.8 Confusion Matrix, OSS

2.9 Votes produced when data is balanced with SMOTE.

2.10 Votes produced when training data is undersampled.

B.1 KS-test results for all continuous variables. Includes the test variable and a p-value of significance.

B.2 KS-test results for all continuous variables.

B.3 Sensitivity Analysis with only one rain variable included.

B.4 Sensitivity Analysis with only one min temperature variable included.

B.5 Sensitivity Analysis with only one max temperature variable included.

B.6 Sensitivity Analysis with only one mean temperature variable included.

B.7 Sensitivity Analysis with only one pressure variable included.

0.1 Introduction

Shifts in weather extremes are one of the most dangerous expected impacts of climate

change, due to their tendency to cause natural disasters. When averaged across the

globe, extreme precipitation events have been found to be increasing (Alexander et

al. 2006). As precipitation is the most common trigger of landslides, an increase in

extreme precipitation is expected to result in increased landslide frequency (Dale et al.

2001) (Crozier 2010). Measurements of this frequency increase are at an experimental

phase, because of high degrees of uncertainty in landslide data, slope models, and

precipitation estimations from general circulation models (GCMs) (Crozier 2010). A

method is used here to bypass the modeled rain that comes from GCMs to lessen

one type of uncertainty. Large-scale atmospheric conditions associated with landslide

activity are determined from composites of atmospheric conditions over all days with

observed landslides. These atmospheric variables can be more confidently predicted

using climate models than modeled precipitation can be (Gao et al. 2014). The

method is presented here as a proof of concept and further work will create a numeric

estimate of the expected change in frequency that landslides will undergo under

future climate change scenarios.

Many high damage landslides come as a surprise. The land movement occurs at

a high velocity, leaving little chance for the impacted area to be evacuated. Knowing

where landslides are going to occur and which areas are particularly vulnerable to

high damage slides would help reduce the risk that many people consistently live

under. The implications of this research are underscored by the recent landslide in

Washington that resulted in 41 casualties, one of the deadliest landslides ever to

occur in the United States (Berman 2014). Each year, landslides cause hundreds of

deaths globally, a number that could potentially be reduced with a better method

of predicting high damage slides (Petley 2008). Climate change, along with other

predicted global shifts such as population and land use that will influence landslide

frequency and severity, increases the importance of understanding landslide risk.

Quantifying landslide risk has to overcome challenges such as the lack of high

quality data, incomplete and uncertain models of landslide processes, and complex

formulas for risk measurement (van Westen et al. 2006). What robust risk quantification does occur largely takes place on a site-specific level, for example by governments

interested in evaluating their transportation networks or geological engineers looking

to evaluate the danger to specific buildings. This costly and inefficient method of

measurement is unusable in places that do not have the resources to perform it, or

on larger scales where a map of all risk over an area is desired. The accepted formula

for landslide risk, initially proposed by Varnes (1984), is:

R = E × H × V

E is the elements at risk, H represents hazard, and V is a measurement of vulnerability.

H is expressed as the probability of landslide occurrence. V is the expected degree

of loss, on a scale of 0 to 1 (no damage to full destruction). E, the elements at

risk, are the population, transportation networks, buildings, economic activity and

other features of an environment that could be impacted, measured by the cost of the

features. The formula appears simple, but calculating these individual measurements

is complicated and causes the formula to become difficult to apply over large areas

(van Westen et al. 2006). Machine learning techniques may be able to bypass this

complex method of risk quantification. Several studies have successfully used machine

learning to measure the susceptibility of slopes to movement, but it has been little

used for complete landslide risk detection, or for measuring vulnerability (Yao et al.

2008) (Brenning 2005). If refined effectively, machine learning algorithms could be

used to determine risk for large regions in an efficient way. Historical data about

damage caused by many landslides, along with mapped data about anthropogenic

and weather features at the time of the slide can be used to identify patterns about

the intensity of landslide damage. This method could help a policy maker or planning

official better decide land use and zoning patterns and identify which areas are worth

preemptive action to avoid damage.
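
As a minimal illustration of the risk formula given above (R as the product of E, H, and V), the sketch below evaluates it for a single hypothetical site. The function name `landslide_risk` and every numeric value are assumptions chosen for illustration; none of them come from the thesis data.

```python
def landslide_risk(elements_value, hazard_probability, vulnerability):
    """Return the risk R = E * H * V for one site.

    elements_value: cost of the elements at risk (E), in any currency unit
    hazard_probability: probability of landslide occurrence (H), in [0, 1]
    vulnerability: expected degree of loss (V), from 0 (no damage) to 1
    """
    if elements_value < 0:
        raise ValueError("elements_value must be non-negative")
    if not 0.0 <= hazard_probability <= 1.0:
        raise ValueError("hazard_probability must lie in [0, 1]")
    if not 0.0 <= vulnerability <= 1.0:
        raise ValueError("vulnerability must lie in [0, 1]")
    return elements_value * hazard_probability * vulnerability


# Hypothetical site (illustrative numbers only): assets worth 2,000,000,
# a 1% chance of a slide, and half of the asset value lost if one occurs.
example_risk = landslide_risk(2_000_000, 0.01, 0.5)
```

The arithmetic itself is trivial. The difficulty noted in the text lies in estimating E, H, and V for every location in a large region.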

Historical databases are one of the most valuable resources for studying landslides

and estimating their future occurrence. Switzerland is used as a study area, because

of the availability of a comprehensive database that contains records of landslides

spanning 40 years, including a measurement of damage along with other necessary

information about the time and place of event occurrence (Hilker et al. 2009). This

database is used as the basis for two types of analysis: 1) an analogue approach to

detecting the occurrence of landslide days, determined by compiling the composite

atmospheric conditions of days on which landslides were observed and 2) a machine

learning approach to estimating the damage a landslide will cause, based on anthropogenic and weather conditions at the time of the slide. Following this is a discussion

of best practice in the real-world applications of these models.


Chapter 1

An Analogue Method to Detecting Landslide Response to Climate Change: Proof of Concept

1.1 Background

The Intergovernmental Panel on Climate Change (IPCC) has reported that the frequency of extreme precipitation events has increased due to anthropogenic climate

change (Solomon et al. 2007). Because the primary trigger of landslide activity is

precipitation, in many areas landslides are expected to become more frequent as a result.

Because of the mechanisms that cause water to accumulate in slopes and affect their

stability, an increase in total precipitation and extreme precipitation events will cause

higher rates of failure (Crozier 2010). Several studies have described the mechanisms

that will influence slope stability in response to climate change and though they have

come to the same theoretical conclusion about landslide response to climate change,

high uncertainty plagues attempts at quantifying an increase (Cruden and Varnes

1996) (Crozier 2010) (Collison et al. 2000). A common method of estimation involves applying downscaled GCMs to slope stability models, but doing this requires

a high spatial resolution that many climate models cannot reach. Other studies have

tried to statistically establish a relationship between landslide occurrence and an increase in local rainfall, though this method can be complicated by natural variability

in climate and environmental factors that is difficult to attribute to climate change or

account for in this sort of analysis. High magnitude events, which are likely to cause

more damage, may also increase with climate change because heavier rain events may

cause more land to be displaced (Matsuura et al. 2008).

Crozier (2010) observes that results from studies linking climate change and landslide

frequency are subject to very high margins of error, largely due to factors related to

climate models such as uncertainty in modeled weather predictions and the inability of

projections to reach high spatial resolution. Previous studies have found that,

in general, GCMs do not reliably reproduce precipitation frequency (Dai 2006). Many

models have a tendency to underestimate heavy precipitation, while overestimating

less severe events. When these general circulation models are used to predict changes

in landslide activity, any uncertainty in precipitation is carried through. Coupled with

other biases and uncertainties that landslide measurement is subject to, quantifying

the increase in landslides becomes subject to extremely high uncertainty that reduces

the robustness and usability of predictions. Gao et al. (2014) developed an analogue method to reduce the errors produced by climate models in estimating extreme

precipitation. Precipitation generally results from the interaction of large-scale atmospheric features, many of which can be simulated more realistically by GCMs than

precipitation currently can be. It was found that composites of these atmospheric

conditions more faithfully reproduced extreme precipitation than the measurements

of precipitation given directly from models (Gao et al. 2014). This technique was

applied here to the prediction of landslides in a proof of concept whose end goal is

to determine the effect that an increase in precipitation, and climate change more

generally, will have on landslide occurrence. The results show that landslide-inducing

atmospheric conditions can be identified and can reproduce the occurrence of landslide

days with reasonable accuracy.


1.2 Methodology

Because landslides are frequently caused by extreme precipitation, many of the

atmospheric conditions associated with extreme precipitation are also expected to be

present on landslide days, which motivates applying the analogue approach to landslide prediction. This study looks to improve

predictions for the number of landslide dates resulting from an atmosphere altered

by climate change over the current best available estimates. A proof of concept is

presented here, later to be applied to climate models to quantify

the general increase or decrease in landslide activity with climate change.

The approach builds off the work done by Gao et al. (2014), which used large-scale

atmospheric features to identify days of extreme precipitation. Instead of

targeting extreme precipitation to identify days of interest, in this study landslide

days are targeted. Dates of observed landslides were gathered from the Swiss Flood

and Landslide Damage database, a source which has 3366 records of landslides from

1972-present. Any days that had more than one slide were only considered once. The

atmospheric variables that were considered as predictors of landslides are 500-hPa

geopotential height, 500-hPa vertical pressure velocity, and total precipitable water.

The atmospheric conditions of each landslide day were determined, and a composite

was created which averages all of those days into one pattern. One way in which

landslide events differ from extreme precipitation is that longer-term atmospheric

conditions may be highly influential in producing the correct conditions for landslides.

Landslides are dependent on conditioning factors such as soil moisture which are

determined by a long timescale of precipitation patterns (Iverson 2000). The use of

composites which averaged conditions over extended time periods was explored. The

results of this will be shown later, but the one-day composites proved strongest and

created the most distinct patterns, so they were used. From the composites, cutoffs for

the identification of atmospheric conditions present on landslide days were identified,

in the form of spatial correlation from a day to the composites, and the presence of

"hotspots" (localized areas of continuity between the composite and an individual day).
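
The compositing and matching steps described above can be sketched as follows. This is an illustrative outline under stated assumptions, not the code used in the thesis: each daily field is assumed to be a flat list of standardized anomalies on a common grid, the function names `composite`, `spatial_correlation`, and `is_analogue_day` are hypothetical, spatial correlation is taken to be the Pearson correlation across grid cells, and the 0.5 cutoff is a placeholder rather than a value from this study. Hotspot detection is not shown.

```python
import math


def composite(fields):
    """Average a list of equally sized daily fields cell by cell."""
    if not fields:
        raise ValueError("at least one field is required")
    n_cells = len(fields[0])
    if any(len(f) != n_cells for f in fields):
        raise ValueError("all fields must share the same grid")
    return [sum(f[i] for f in fields) / len(fields) for i in range(n_cells)]


def spatial_correlation(field_a, field_b):
    """Pearson correlation between two fields, computed across grid cells."""
    if len(field_a) != len(field_b):
        raise ValueError("fields must share the same grid")
    n = len(field_a)
    mean_a = sum(field_a) / n
    mean_b = sum(field_b) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(field_a, field_b))
    var_a = sum((a - mean_a) ** 2 for a in field_a)
    var_b = sum((b - mean_b) ** 2 for b in field_b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined for a constant field")
    return cov / math.sqrt(var_a * var_b)


def is_analogue_day(day_field, composite_field, cutoff=0.5):
    """Flag a day whose pattern correlates with the composite above a cutoff.

    The cutoff value is a placeholder assumption, not taken from the thesis.
    """
    return spatial_correlation(day_field, composite_field) >= cutoff


# Toy example with invented anomalies on a 4-cell grid.
landslide_days = [
    [-1.0, -0.5, 0.5, 1.0],
    [-2.0, -1.0, 1.0, 2.0],
]
z500_composite = composite(landslide_days)
candidate_matches = is_analogue_day([-1.0, -0.4, 0.6, 0.9], z500_composite)
```

In practice one composite would be built per variable (Z500, w500, TPW), and a day would be tested against each of them.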


1.3 Data

1.3.1 Observed Landslides

The Swiss Flood and Landslide Database was used to gather observed dates of landslide occurrence (Hilker et al. 2009). This database contains 3366 records of landslides

that have occurred from 1972 to the present. The records largely originate from news

sources. The dates were narrowed from a list of all slide dates based on criteria about

certainty and the availability of atmospheric data. Any dates that were indicated as

uncertain were removed and any date which had more than one slide was counted

once. Because of the variation in weather patterns among seasons, the data was also

separated by season for analysis purposes. The summer months (June, July, and August) have the highest landslide frequency but, as will be shown, the winter months

(December, January, and February) have the strongest composite patterns. For this

reason, the focus season is winter. Because of the availability of MERRA reanalysis

data, only the years from 1979 to 2012 were used, even though the dataset begins

in 1972. Figures 1-1 and 1-2 show the distribution of landslides in the Swiss Flood

and Landslide Damage Database during the period 1979-2012 by the dates and months on

which they occurred.

1.3.2 NASA-MERRA

The composites of atmospheric conditions were generated from NASA's Modern Era

Retrospective-analysis for Research and Applications (MERRA) (Rienecker 2001).

MERRA is based on the GEOS-5 atmospheric data assimilation system, which is

reanalyzed with NASA's Earth Observing System (EOS) satellite observations using

an Incremental Analysis Updates (IAU) procedure to gradually adjust the model to

observational data.

Its special focus is on conditions relating to the hydrological

cycle. The MAI3CPASM and MAI1NXINT products were used. These are MDISC

modeling and assimilation history files. MAI3CPASM contains pressure variables on

42 levels in 3 hour increments. Geopotential height, sea level pressure, and vertical pressure velocity were drawn from this file. MAI1NXINT has a single level resolution and is measured in daily increments. Total precipitable water was sourced from this. The data is all at a 1.25° resolution.

Figure 1-1: Number of landslides in Switzerland per month, period 1979-2012.

Figure 1-2: Swiss landslides by date, 1979-2012.

1.3.3 Data Processing

The Swiss Flood and Landslide Damage Database was used to identify on which dates

landslides occurred. MERRA data was drawn from those dates for composite generation. The variables drawn from MERRA were those that have been confirmed to be

associated with heavy precipitation, including 500-hPa geopotential height, 500-hPa

vertical pressure velocity, sea level pressure, and total precipitable water. To match the resolution of climate models, the data was linearly interpolated

to 2.5°x2°. All variables have been converted to a standardized anomaly, defined as

the anomaly from the seasonal climatological mean over the 34-year period under

consideration, divided by its standard deviation.
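The standardized anomaly defined above can be illustrated with a minimal Python sketch. It is not the thesis's processing code: the function name, the toy Z500 values, and the use of the population standard deviation are assumptions made for illustration, and it handles the seasonal series at a single grid cell only.

```python
from statistics import mean, pstdev


def standardized_anomalies(series):
    """Return (x - mean) / std for each value of a seasonal series at one grid cell."""
    mu = mean(series)
    sigma = pstdev(series)  # assumption: population standard deviation
    if sigma == 0:
        raise ValueError("series has zero variance")
    return [(x - mu) / sigma for x in series]


# Hypothetical 500-hPa geopotential heights (m) at one grid cell.
z500 = [5400.0, 5450.0, 5500.0, 5550.0, 5600.0]
anoms = standardized_anomalies(z500)
```

After this transformation every variable is dimensionless, with zero mean and unit variance over the climatological period, which is what allows Z500, w500, and TPW to be shown on a common color scale.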

1.4 Application

1.4.1 Creating the Composites

Composites are an average of the atmospheric conditions (presented as standardized

anomalies) over all the days on which there were one or more landslides. The atmospheric conditions are determined for each day which has landslides and then all

of those days are averaged together to obtain the composite atmospheric conditions.

Several variables were used, all of which are also associated with precipitation events.

They include 500-hPa geopotential height (Z500), 500-hPa vertical pressure velocity (w500), sea level pressure (SLP), and total precipitable water (TPW). Sea level

pressure is not shown, as it was found to be redundant when also using geopotential

height.
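The compositing step described above amounts to a cell-by-cell average over the landslide days. The following Python sketch shows the idea on a tiny 2x2 grid; the function name and the anomaly values are hypothetical, and the real fields cover the full European domain.

```python
def composite(daily_fields):
    """Average a list of equally shaped 2-D anomaly grids cell by cell."""
    if not daily_fields:
        raise ValueError("need at least one landslide day")
    n_days = len(daily_fields)
    n_rows = len(daily_fields[0])
    n_cols = len(daily_fields[0][0])
    return [
        [sum(day[i][j] for day in daily_fields) / n_days for j in range(n_cols)]
        for i in range(n_rows)
    ]


# Hypothetical standardized anomalies on three landslide days.
slide_days = [
    [[-1.0, 0.5], [0.2, 1.0]],
    [[-0.5, 0.1], [0.4, 0.6]],
    [[-0.9, -0.3], [0.0, 0.8]],
]
z500_composite = composite(slide_days)
```

Cells where the member days agree in sign keep a large composite value, while cells where they disagree average toward zero.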

Figure 1-3 shows the composites for DJF slide days in Switzerland. The shading in 1-3.a) is the standardized anomaly of 500-hPa geopotential height (Z500) and features a dipole pattern with a trough above Northern Europe and Scandinavia and a ridge over Algeria in Northern Africa and the Western Mediterranean. This image also shows vertical integral atmospheric vapor flux, in the arrows. The shading in 1-3.b) shows total precipitable water (TPW). The peak anomaly for this variable is over Switzerland and Southern France. The contour lines in this figure are 500-hPa vertical pressure velocity (w500).

Figure 1-3: Composites of all Swiss DJF slide dates. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

The composites that were used for analysis were based on 1-day atmospheric

conditions over Switzerland, for all levels of damage and only for the DJF months.

The composites for other time periods and seasons are shown in Figures 1-5 through 1-10, and they visually explain why the composite that was used was chosen. Figure 1-4 shows the composite 1-day conditions over landslides during the summer months

(June, July, and August), and when compared to the DJF composites, the pattern is

not as strong. The peak standardized anomaly is lower. The variables that are shown

in each figure are the same as in 1-3.a) and b).

Figure 1-4: Composites of all Swiss JJA slide dates. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

Figures 1-5 through 1-10 show composites over longer time periods. Multi-day composites were considered because landslides can be caused by atmospheric conditions and precipitation that occurred several days beforehand, or can be influenced

by average conditions over a longer time period instead of a sudden triggering event.

In these cases, the conditions over the several days preceding a landslide are averaged,

and then all those averages are combined to create one composite of the average of the

preceding days for all landslide target days. Figure 1-11 reinforces the visual determination that the one day composites are the strongest. It plots the peak maximum and

minimum values of the standardized anomaly for the composite Z500 value, as well

as the difference between the ridge (positive values) and trough (negative values), for

each of the multi-day composites. Figure 1-12 does the same for w500 and Figure 1-13

shows TPW. In all cases except for TPW, the strongest peak anomalies are seen in

the one day composite. For this reason, the one-day composite was used instead of

any of the multi-day composite options.
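The multi-day compositing and the comparison of peak anomalies can be sketched as follows. This is an illustration at a single grid cell with made-up values, not the thesis's code; in particular, whether the averaging window includes the landslide day itself is not stated in the text, and the sketch assumes that it ends on that day.

```python
def preceding_mean(series, day_index, n_days):
    """Mean of the n_days values ending at day_index (inclusive, an assumption)."""
    start = day_index - n_days + 1
    if start < 0:
        raise ValueError("not enough preceding days")
    window = series[start:day_index + 1]
    return sum(window) / n_days


def multi_day_composite(series, slide_indices, n_days):
    """Average the n_days preceding means over all landslide target days."""
    means = [preceding_mean(series, i, n_days) for i in slide_indices]
    return sum(means) / len(means)


# Hypothetical daily standardized anomalies at one grid cell; slides on days 2 and 6.
series = [0.0, 0.2, 1.0, 0.1, -0.1, 0.3, 1.2, 0.0]
slide_indices = [2, 6]
peaks = {n: multi_day_composite(series, slide_indices, n) for n in (1, 2, 3)}
strongest = max(peaks, key=lambda n: abs(peaks[n]))
```

In this toy series the anomaly is concentrated on the landslide days, so lengthening the window dilutes the composite, which is the behavior Figures 1-11 and 1-12 report for Z500 and w500.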

Figure 1-5: Composites of all Swiss DJF slide dates with 2-day period. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

Figure 1-6: Composites of all Swiss DJF slide dates with 5-day period. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

Figure 1-7: Composites of all Swiss DJF slide dates with 7-day period. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

Figure 1-8: Composites of all Swiss DJF slide dates with 10-day period. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

Figure 1-9: Composites of all Swiss DJF slide dates with 14-day period. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

Figure 1-10: Composites of all Swiss DJF slide dates with 30-day period. The colors of a) show the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

Figure 1-11: Peak Standardized Anomaly of Composite Z500 for Various Time Spans. The trough (negative anomaly, here labeled as min), and ridge (positive anomaly, here labeled as max) are both variables of interest and so are included.

Figure 1-12: Peak Standardized Anomaly of Composite w500 for Various Time Spans.

Figure 1-13: Peak Standardized Anomaly of Composite TPW for Various Time Spans.

The Swiss Flood and Landslide Damage Database also contains information about how much damage a slide produces. The final consideration in deciding the target days was whether or not to separate the high damage and low damage slides. The analysis that will be shown in Chapter 2, where the features of a landslide that are likely to produce high damage were studied, showed that precipitation was relevant to the amount of damage a landslide would incur. Separating severe slides from less damaging ones could help create a separate estimate of the future increase in specifically high damage slides. Evidence of a difference in atmospheric conditions between high and low damage slides could be used to infer a change in frequency of particularly high damage slides as a result of climate change. The composites for these two sets of target dates, Figures 1-14 and 1-15, show a difference in the peak anomalies of atmospheric conditions; however, the high damage landslide dates did not show a consolidated atmospheric pattern. The high damage composite for the Z500 variable shows a dispersed pattern which spans a large area, from Greenland to Scandinavia, over much of the North Atlantic. For comparison, the low damage composite for the same variable shows a pattern focused over Scandinavia and Northwestern Africa. Identifying hotspots, localized patterns of atmospheric consistency among the days which will be discussed later on, is difficult for such a broad pattern. There were only 17 high damage landslides in this period, which is likely the cause of the dispersed atmospheric conditions.

Figure 1-14: Composites of all Swiss DJF slides that incurred high damage. The colors of a) are the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

Figure 1-15: Composites of all Swiss DJF slides that incurred low damage. The colors of a) are the standardized anomaly of 500-hPa geopotential height (Z500) and the arrows show vertical integral atmospheric vapor flux. The colors of b) show total precipitable water (TPW) and the contour lines are 500-hPa vertical pressure velocity (w500).

1.4.2 Analogue Determination

Hotspots

Following the method of Gao et al. (2014), the composites shown in Figure 1-3 are used to identify a pattern which can detect the occurrence of landslide events. The first part of detection is the identification of hotspots. Each grid cell from the atmospheric conditions of each day has either a positive or negative value for the standardized anomaly. The composite, as an average of the anomalies, has its own sign for each grid cell. A map is produced which counts the number of individual days that make up the composite that have the same sign as the composite at the same grid cell. From this map, a "hotspot" is identified, defined as a cluster of grid cells which show strong evidence of consistency among many members. The grid cell(s) with the maximum consistent sign count serve as a lower threshold to the smallest "hotspot" that must be matched. One criterion for identification as a landslide event is that the atmospheric variables

consistently match the signs of the hotspot grid cells. This is one feature that will be

used to identify the atmospheric conditions for a landslide day.
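The sign-count map behind the hotspots can be sketched in a few lines of Python. The function names, the 2x2 grid, and the count threshold are hypothetical; the sketch also selects cells purely by count and does not check that they form a spatially contiguous cluster, which the hotspot definition above implies.

```python
def sign(value):
    """Return -1, 0, or 1 according to the sign of value."""
    return (value > 0) - (value < 0)


def sign_count_map(member_fields, composite_field):
    """Count, per grid cell, the member days whose anomaly sign matches the composite's."""
    n_rows = len(composite_field)
    n_cols = len(composite_field[0])
    counts = [[0] * n_cols for _ in range(n_rows)]
    for field in member_fields:
        for i in range(n_rows):
            for j in range(n_cols):
                target = sign(composite_field[i][j])
                if target != 0 and sign(field[i][j]) == target:
                    counts[i][j] += 1
    return counts


def hotspot_cells(counts, min_count):
    """Grid cells whose sign count reaches min_count (no contiguity check)."""
    return [(i, j) for i, row in enumerate(counts)
            for j, c in enumerate(row) if c >= min_count]


# Hypothetical members (three landslide days) and their composite.
members = [
    [[-1.0, 0.5], [0.2, 1.0]],
    [[-0.5, 0.1], [0.4, 0.6]],
    [[-0.9, -0.3], [0.0, 0.8]],
]
composite_field = [[-0.8, 0.1], [0.2, 0.8]]
counts = sign_count_map(members, composite_field)
hotspots = hotspot_cells(counts, min_count=3)
```

Here the two cells where all three members agree with the composite form the hotspot; a candidate day would then be checked for matching signs at exactly those cells.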

Spatial Correlation

The second part of detection is a cutoff in the spatial correlation between the composite and an individual day. Spatial anomaly correlation coefficients (SACCs) are

calculated between the composite members and the composite, and between the daily

MERRA values and the composites. Figures 1-16 through 1-21 show density plots

and histograms of spatial correlation, normalized as a percentage of days in the set.

In general, as spatial correlation increases, a day has a higher likelihood of being an

observed slide day. The statistical difference in spatial correlation between observed

landslide days (members of the composite) and the remaining days is analyzed to

determine a cutoff in SACC value that makes a day more likely to have a landslide.

The cutoff is measured as the spatial correlation value above which a day has a

statistically determined higher than random chance of being a landslide day. Of all

the days in the 34 year time span, 125 of them are landslide days and 2944 are not.

Figure 1-16: Spatial Correlation of Z500.

Figure 1-17: Density Plot of Z500 spatial correlation.

Figure 1-18: Spatial Correlation of w500.

Figure 1-19: Density Plot of w500 spatial correlation.

Figure 1-20: Spatial Correlation of TPW.

Figure 1-21: Density Plot of TPW spatial correlation.

Drawing from this population at random, the probability of drawing a landslide day

is 0.0407. If the landslide and non-landslide days had the same distribution across

spatial correlation, the percentage of slide/non-slide days would stay consistent with

this 0.0407 value for each bin in the histogram of spatial correlation. However, because

slide days are more likely to have a high correlation measurement, the proportion of

slide days over total population in each bin increases.
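As a rough illustration of the spatial correlation measure used above, the sketch below computes a correlation coefficient between a single day's anomaly field and the composite. The thesis does not give the exact SACC formula, so the choice of a centered (Pearson) correlation over all grid cells without area weighting is an assumption, as are the function name and the sample values.

```python
from math import sqrt


def spatial_correlation(field_a, field_b):
    """Centered Pearson correlation between two equally shaped 2-D fields."""
    a = [v for row in field_a for v in row]
    b = [v for row in field_b for v in row]
    if len(a) != len(b):
        raise ValueError("fields must have the same number of grid cells")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("a constant field has no defined correlation")
    return cov / sqrt(var_a * var_b)


# Hypothetical composite and one day's standardized anomalies.
composite_field = [[-0.8, 0.1], [0.2, 0.8]]
day_field = [[-0.4, 0.3], [0.1, 0.5]]
sacc = spatial_correlation(day_field, composite_field)
```

A day whose pattern resembles the composite gives a value close to 1, an unrelated day gives a value near 0, and a day with the opposite pattern gives a negative value.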

The proportion of landslide days can be taken as a binomial distribution, where

p=0.0407 is the expected probability that a random day is going to be a landslide day.

An example of a binomial distribution is a coin toss, where there are two possible

outcomes; in this case, the two outcome options are whether a day has landslide

activity or not. There is some uncertainty in the stated probability, because landslides may go unrecorded in datasets, for reasons such as occurring in remote areas or having no human or urban impact. The standard deviation of a binomial distribution is:

$$\sigma = \sqrt{np(1-p)}$$

where n is the population count and p is the probability of success (the day is a landslide day). Here,

$$\sigma = \sqrt{np(1-p)} = \sqrt{3069 \times 0.0407\,(1 - 0.0407)} = 10.9464$$

So the number of landslide days has a standard deviation of 10.9464. Using that in the formula for probability over a binomial distribution,

$$P = \frac{N_L + 3\sigma}{N_L + N_N} = \frac{125 + (3 \times 10.9464)}{125 + 2944} = 0.0514$$

In this formula, N_L is the number of landslide days and N_N is the number of non-landslide days, so that N_L + N_N is the total number of days in the set. This value can be used as a statistically significant minimum from which setting the cutoffs can begin. Above this cutoff probability, the likelihood that a day will be a landslide day is higher than if it was a random day in the dataset, to a statistically significant degree. The increase in landslide likelihood is due to the

increase in spatial correlation. Days with a higher spatial correlation have a higher

chance of being a landslide day than could be attributed to randomness or chance.

The spatial correlation that this probability measurement corresponds to is 0.4 for

Z500 and TPW, and 0.3 for w500.
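The arithmetic behind the cutoff probability can be reproduced with a short Python sketch. The day counts are those given in the text; the function names and the per-bin check at the end are illustrative assumptions about how the cutoff would be applied to a histogram bin.

```python
from math import sqrt

N_LANDSLIDE = 125        # observed DJF landslide days, 1979-2012
N_NON_LANDSLIDE = 2944   # remaining DJF days


def binomial_cutoff(n_landslide, n_non_landslide, p, n_sigma=3):
    """Return (sigma, cutoff), with cutoff = (N_L + n_sigma * sigma) / (N_L + N_N)."""
    n = n_landslide + n_non_landslide
    sigma = sqrt(n * p * (1 - p))
    cutoff = (n_landslide + n_sigma * sigma) / n
    return sigma, cutoff


def bin_exceeds_cutoff(slide_days_in_bin, total_days_in_bin, cutoff):
    """True if the share of slide days in a correlation bin is above the cutoff."""
    return slide_days_in_bin / total_days_in_bin > cutoff


n_total = N_LANDSLIDE + N_NON_LANDSLIDE
p_base = round(N_LANDSLIDE / n_total, 4)  # rounded to four places, as in the text
sigma, cutoff = binomial_cutoff(N_LANDSLIDE, N_NON_LANDSLIDE, p_base)
```

The lowest correlation bin whose share of landslide days exceeds this cutoff gives the starting SACC threshold for each variable.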

Criterion of Detection

Further following the method from Gao et al. (2014), the hotspots and spatial correlation

are combined to create a criterion of detection for the occurrence of landslides.

It was determined that many of the observed landslide days share these common

features. In order to be identified as a landslide event, a day must meet the following

criteria:

1. Three or more variables (ridge (positive anomaly) of Z500, trough (negative

anomaly) of Z500, w500, and TPW) must have signs consistent with the hotspot

grid cells.

2. At least one of three variables (Z500, w500, TPW) meets the cutoff for spatial

correlation.

3. The spatial correlations of the same three variables must all be positive.

If these three conditions are met, the day is tagged as a landslide day. The cutoffs and hotspots previously identified serve as a minimum threshold, and can be refined to stricter values if the criterion identifies too many days in calibration.

The criterion of detection begins with the values defined above, the statistically

significant minimum for spatial correlation and the smallest number of grids that can

define a hotspot. These cut-offs are further refined into stricter values until the same

number of observed landslide days is approximately matched with the number of days

that fit this criterion in 34 years of MERRA observations.
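The three-part criterion of detection can be expressed as a small Python function. This is a sketch under stated assumptions: the dictionary layout, the key names, and the reading of "meets the cutoff" as greater than or equal are illustrative choices, and the cutoffs shown are the starting values from the text rather than the stricter calibrated ones.

```python
# Starting spatial correlation cutoffs from the text (before further refinement).
CUTOFFS = {"Z500": 0.4, "w500": 0.3, "TPW": 0.4}


def is_landslide_day(hotspot_match, sacc, cutoffs=CUTOFFS):
    """Apply the three conditions of the criterion of detection to one day.

    hotspot_match: dict with a bool for each of the four hotspot features
                   (Z500 ridge, Z500 trough, w500, TPW).
    sacc: dict with the day's spatial correlation for Z500, w500, and TPW.
    """
    enough_hotspots = sum(hotspot_match.values()) >= 3
    any_above_cutoff = any(sacc[v] >= cutoffs[v] for v in cutoffs)
    all_positive = all(sacc[v] > 0 for v in cutoffs)
    return enough_hotspots and any_above_cutoff and all_positive


# Hypothetical day that satisfies all three conditions.
day_hotspots = {"Z500_ridge": True, "Z500_trough": True, "w500": False, "TPW": True}
day_sacc = {"Z500": 0.45, "w500": 0.10, "TPW": 0.20}
tagged = is_landslide_day(day_hotspots, day_sacc)
```

Tightening the criterion during calibration then corresponds to raising the values in the cutoff dictionary or enlarging the set of hotspot cells that must match.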


1.4.3 Success Rate

The criterion of detection was evaluated on 34 years of MERRA data, including all

the DJF days between 1979 and 2012. All days are compared against the composite

constructed from all observed DJF landslide days to determine hotspot sign consistency and spatial correlation. If a day meets the criterion, it is considered a landslide

day. Performance is measured by the success rate in identifying an observed landslide

date, and the number of false positives that the criterion detects. The success rate is the number of detected days that match an observed landslide day divided by the total number of observed landslide days. The false positive rate is the number of falsely identified days divided by the total number of identified days. The success rate reaches 20-25% when exact

matches are considered. It improves to 25-29% if the window for event matching is

increased so that a date generated by the analogue method is within 1 day previous

to an observed day. With a 2-day window the success rate reaches 29-30%, with 3 days it reaches 35-36%, and with 7 days it reaches 45-50%. The false positive rate for an exact match is 79-81%; it drops to 70-73% for a 1-day window, 67-71% for 2 days, 63-70% for 3 days, and 52-56% for 7 days. The criterion was allowed to be relaxed because oftentimes the triggering weather event for a landslide occurs several days previous. Relaxing the criterion is another way to accommodate

longer timescale conditioning factors which influence landslide occurrence, besides

using multi-day composites.
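The success rate and false positive rate, including the relaxed matching window, can be sketched as below. The dates are invented, and one detail is an assumption: the success rate is counted here as the share of observed landslide days that have a detected day on the same date or up to the given number of days earlier.

```python
from datetime import date, timedelta


def evaluate_detection(detected, observed, window_days=0):
    """Return (success_rate, false_positive_rate) for a given matching window."""
    detected, observed = set(detected), set(observed)
    if not detected or not observed:
        raise ValueError("need at least one detected and one observed day")
    offsets = [timedelta(days=k) for k in range(window_days + 1)]
    # Observed days preceded (within the window) by a detected day.
    hit_observed = {o for o in observed if any(o - off in detected for off in offsets)}
    # Detected days followed (within the window) by an observed day.
    matched_detected = {d for d in detected if any(d + off in observed for off in offsets)}
    success_rate = len(hit_observed) / len(observed)
    false_positive_rate = 1 - len(matched_detected) / len(detected)
    return success_rate, false_positive_rate


# Hypothetical observed and detected dates.
observed = [date(2000, 1, 5), date(2000, 1, 20), date(2000, 2, 10), date(2000, 2, 25)]
detected = [date(2000, 1, 5), date(2000, 1, 18), date(2000, 2, 1), date(2000, 2, 24)]
rates = {w: evaluate_detection(detected, observed, w) for w in (0, 1, 2)}
```

As in the reported results, widening the window raises the success rate and lowers the false positive rate, because detections that fall shortly before an observed slide are no longer counted as misses.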

There is a naturally high uncertainty rate in predicting landslides, particularly

when estimating their occurrence from solely one aspect of their mechanisms, as is

done here with the weather pattern. The high false positive rate may be partially

due to the incompleteness of data, when there may be landslides but they were not

observed or recorded. Some of the dates identified during the calibration may have

had a landslide, but it would have been in a remote area or did not cause damage.


1.5 Discussion and Future Work

What has been presented exists as a proof of concept for an analogue detection of

landslides. Calibration has produced robust rates of success, high enough to trust that

this method is valid. The criteria of detection can replicate the number of observed

landslide days within a time period with MERRA data, and can reach a 45-50%

success rate at getting the day right to within a 7-day window. The next step

is to use these results with climate models in order to quantify a change in landslide

activity. The results will be applied to CMIP5 climate models, which simulate all the

atmospheric conditions of interest. The frequency with which the criteria of detection

occur in CMIP5 will serve as an estimate of the frequency of landslide activity in the

future, and can be measured under various climate change scenarios.

This study has been able to show that the atmospheric conditions present at the

time of a landslide are a good means of detection for occurrence and can be used

in the future to estimate the change in landslide activity according to predicted climate change patterns. This method presents a novel means of correlating landslides

with climate change and expected alterations in precipitation occurrence, offering

improvements over alternatives by removing the reliance on modeled precipitation.

Most methods of correlating landslides with climate change extract a direct measurement of precipitation change from climate models. Since landslides generally

occur under the most severe precipitation events, and since climate models have been

proven to unreliably estimate extreme precipitation, this method improves upon climate change-landslide impact measurements driven by modeled rain estimates.

One potential source of bias is the non-reporting of slides, a common shortcoming

in many landslide datasets. When conditions appear right for a landslide but none was recorded, either a slide took place in an area too remote for it to be recorded, or the data are complete and no slide occurred. This analysis also occurs on a large scale: the weather patterns are widespread and the study area is all of Switzerland, which necessarily ignores smaller-scale conditions which influence landslide occurrence, such as slope stability or local weather patterns. The results should be viewed as an estimation of slide frequency rather than any prediction

as to exactly when a slide will occur. In future work, this method could also be coupled

with other changes that are expected to occur with climate change, such as a change

in vegetation, temperature, and soil moisture to more fully estimate the impact that

climate change will have on landslide activity.

Despite these shortcomings, the analogue method has the potential to improve on large-scale predictions of landslide frequency. It offers a robust way to analyze

landslide response to climate change, over calculating a percentage increase in slide

activity as a direct function of a percent increase in rain, and it can be applied to any

area with reliable weather data and a list of dates on which slides have occurred. The

ultimate measurement that can be determined from this method, a quantification of

change in landslide frequency, can help direct priorities for landslide maintenance. An

increase in landslide activity would indicate that mitigation should be done, perhaps

a monitoring system should be put in place, and further emphasis should be placed

on directing construction away from unstable slopes. If a nation or other jurisdiction

spends X amount of money on landslide damage and resultant repairs yearly, this

could provide a measurement of how much they can expect to spend in the future,

and can budget accordingly. With better data, this method could provide an estimate for the amount of damage landslides will create by correlating increases with the

amount of money spent, or correlating atmospheric conditions with damage produced

by a slide.


Chapter 2

Modeling the Damage Incurred by Landslides

2.1 Background

2.1.1 Vulnerability Analysis

The equation for landslide risk, as explained in the introduction, is R = E * H * V

(Varnes 1984). This part of the study aims to quantitatively evaluate one part of this

equation: vulnerability. Switzerland is used as a case study, as it was in Chapter 1

of this thesis.

Measuring vulnerability provides a basis for decision-making in response to a potential landslide threat.

In fields such as disaster response, urban planning, and

transportation planning, knowing the vulnerability of an area is important because

when combined with a hazard measurement (which shows where and when landslides

are likely to occur), vulnerability produces a picture of total risk (Alexander 2005).

Hazard maps and analyses are common, and the field of measuring hazard is

well-studied (van Westen et al. 2006). It largely relies on understanding the causal

factors (geophysical and weather-related) that go into landslide occurrence and

recognizing them over a wide area. Spatial analysis tools such as GIS are used to

derive relevant data features of landslide prone areas (Carrara et al. 1999). Once


the causal factors are known, and those features have been identified over the area of

interest, a hazard map can be made.

Vulnerability can be evaluated in largely the same way. With an understanding

of causal factors and an identification of where the influential features are present,

vulnerable areas can be recognized. Literature reviews have noticed a decided lack

of vulnerability studies within research on landslide risk assessment (Glade 2003).

They assert that a lack of information about vulnerability inhibits determination of

risk (Galli and Guzzetti 2007). Techniques for vulnerability studies for landslides are

largely drawn from those developed from other hazards such as earthquakes or floods.

Two general types of approaches can be taken towards evaluating landslide vulnerability.

One is a qualitative approach, which creates a list of exposed elements (all buildings

or other urban features over an area) and assigns them an empirically evaluated

index for vulnerability, generally on a 0-1 scale (none to total destruction likely)

(Maquaire et al. 2004). This method is difficult to apply efficiently over large areas

because each element is evaluated individually, but it is potentially useful for local or

urban governments (van Westen et al. 2006). A quantitative approach to evaluating

vulnerability uses models and damage functions to decide the impact of a landslide

(Maquaire et al. 2004). This method depends on the ability to acquire detailed data

about past occurrences.

The approach taken here is quantitative. We aim to identify the causes of landslide vulnerability from an analysis of historical landslide occurrence, considering both spatial factors (anthropogenic features) and temporal factors (weather conditions). This is done by applying data mining and machine learning procedures to the data, techniques which have previously been utilized in studies on hazard analysis (Yao et al. 2004; Brenning 2005). A benefit to this approach is

that it combines detailed mapping on a small scale with a wide area of study. By

using part of the data for training and part for testing, the model success can be

validated, a process which is frequently missing from hazard analysis (Chung and

Fabbri 2003). Machine learning is efficient, requiring relatively small amounts of time

for computation once models have been properly refined and given all the necessary


data (Kostiantis 2007). It can also easily accommodate changing future conditions,

such as shifts in population density or expected climate change.

2.1.2 Machine Learning Approach

Data mining is a field within computer science that is used for "the extraction of

implicit, previously unknown, and potentially useful information from data" (Witten

and Frank 2005). Machine learning uses algorithms to infer the underlying structure

of data and extracts information from data into a usable format. In the application

here, it will be used to learn about landslide vulnerability, taking data about each

historic occurrence and drawing conclusions and predictions about resultant damage.

Machine learning has been successfully applied to a variety of real world problems,

from cancer detection to text identification to business decision making. It can be

broadly separated into two categories: supervised and unsupervised learning (Hastie

et al. 2009). Supervised learning works on data where the output labeling is already

known. It uses a group of inputs, which may have some level of influence over the

output, as predictive variables (Hastie et al. 2009). Unsupervised learning uses data

that comes with no output labeling, and its primary purpose is in clustering the data

into groups with some level of homogeneity. Because the data from the study area

here has an output in the form of level of damage, supervised learning is used. A

problem can be one for either classification or regression (Hastie et al. 2009). In

regression, the output is continuous. In classification, the output is a class labeling.

The problem here is one for supervised classification, because the data has a labeling

which consists of two classes, high or low damage.

Landslide damage will be classified using a machine learning algorithm based on

available information about conditions at the time of each slide. The dataset is made

up of N vector samples, which combine to form a matrix X, and where xi represents

the ith sample in the set. Each sample xi is an individual feature vector, containing all

the information, or features, of each sample (Hastie et al. 2009). Each feature vector

is associated with a class label Y, which takes a value in {0, 1} in the case of binary classification.

In this case the options are low or high damage, which can be represented as binary.


Figure 2-1: Depiction of the steps to create a supervised classification model.

Prediction is the same as testing. (Bird et al. 2009)


The combination (xi, yi) of feature vector and labeling is one sample or instance.

The classification algorithm aims to find a function f(X) that accurately reproduces Y.

There are two general steps in machine learning: training and testing. The

algorithm determines f(X) on a subset of all the data, in a process called training.

The function produced by the algorithm is meant to perform well on the training

data, though rarely does it reach perfect accuracy. Perfect accuracy on the training

data is also undesirable because the resulting classifier has likely overfit the

data (Hastie et al. 2009). For the function to be usable, it must perform well on

a separate set of testing data. Test data is a set of feature vectors which the classifier

has not seen before, but which are presumably drawn from the same distribution as

the training set. In practice, a certain percentage of the entire source data is removed

and reserved for testing before any modeling has taken place.
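The hold-out step described above can be sketched in a few lines of Python. This is only an illustration, not the procedure used in this work: the function name `train_test_split`, the toy data, the fixed seed, and the 20% default test fraction are all assumptions made for the example.

```python
import random

def train_test_split(X, y, test_fraction=0.2, seed=0):
    """Randomly reserve a fraction of the samples for testing, without replacement."""
    if len(X) != len(y):
        raise ValueError("X and y must contain the same number of samples")
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)  # random order; every sample is used exactly once
    n_test = int(round(test_fraction * len(X)))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    X_train = [X[i] for i in train_idx]
    y_train = [y[i] for i in train_idx]
    X_test = [X[i] for i in test_idx]
    y_test = [y[i] for i in test_idx]
    return X_train, y_train, X_test, y_test

# Hypothetical toy data: each row of X is one feature vector xi,
# each entry of y is its label (0 = low damage, 1 = high damage).
X = [[float(i), float(i % 3)] for i in range(10)]
y = [0, 0, 0, 1, 0, 0, 1, 0, 0, 1]

X_train, y_train, X_test, y_test = train_test_split(X, y)
print(len(X_train), len(X_test))
```

Because the test samples are set aside before any model is fit, the classifier never sees them during training.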

Testing error is one measurement of model success. It is a measure of how well the

model generalizes. A successful model will minimize test error (Hastie et al. 2009).

In summary, the steps taken in creating a classification model are shown in Figure 2-1. If the test success is unsatisfactory, the model parameters can be tuned along

each step of the building process.
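As a minimal sketch, test error can be computed as the fraction of test samples that the model labels incorrectly. The per-class breakdown is an addition for illustration, since a low overall error can hide a high error on a rare class. The function names and the toy labels below are assumptions, not results from this study.

```python
def error_rate(y_true, y_pred):
    """Fraction of samples whose predicted label differs from the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    wrong = sum(1 for t, p in zip(y_true, y_pred) if t != p)
    return wrong / len(y_true)

def per_class_error(y_true, y_pred):
    """Error rate computed separately over the samples of each true class."""
    errors = {}
    for label in sorted(set(y_true)):
        pairs = [(t, p) for t, p in zip(y_true, y_pred) if t == label]
        errors[label] = sum(1 for t, p in pairs if t != p) / len(pairs)
    return errors

# Hypothetical test labels (0 = low damage, 1 = high damage) and predictions.
y_test = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 0, 0, 1]

overall = error_rate(y_test, y_pred)
by_class = per_class_error(y_test, y_pred)
print(overall, by_class)
```

In this toy case one of ten samples is misclassified overall, but that single mistake is half of the high damage class.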


Preliminary tests to determine which classification algorithm to use were done in

Weka (Waikato Environment for Knowledge Analysis), a software package which is able to

apply many standard machine learning techniques to data (Witten and Frank 2005).

Preliminary results indicated promising success with the random forest algorithm.

Before tuning, accuracy on all the data was recorded at close to 90%, though with

much higher error in classifying high damage slides. This will be discussed in more

detail later on.

2.1.3 Random Forest Algorithm

Random forest, first developed by Breiman (2001), is an algorithm which consists of an ensemble of decision trees that vote amongst themselves to decide upon a classification.

It uses bagging (bootstrap aggregating) to average individual models. Bagging is a technique where each model in the ensemble is trained on a bootstrap resample of the training data and votes with equal weight, in this

case each decision tree in the ensemble forest. Random forests have a reputation for

strong performance, even before tuning (Hastie et al. 2009).
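The two ingredients of bagging, bootstrap resampling and equal-weight voting, can be sketched as follows. This is an illustrative sketch only: the helper names and the three stand-in "models" (simple threshold rules written as lambdas) are hypothetical and are not trained decision trees.

```python
import random
from collections import Counter

def bootstrap_sample(X, y, rng):
    """Draw len(X) samples with replacement, keeping features and labels paired."""
    n = len(X)
    picks = [rng.randrange(n) for _ in range(n)]
    return [X[i] for i in picks], [y[i] for i in picks]

def majority_vote(votes):
    """Return the label chosen by the most models; each vote has equal weight."""
    return Counter(votes).most_common(1)[0][0]

def bagged_predict(models, x):
    """Ask every model in the ensemble for a label and take the majority."""
    return majority_vote([model(x) for model in models])

# Hypothetical stand-ins for trained trees: each maps a feature vector to 0 or 1.
models = [
    lambda x: int(x[0] > 2.0),
    lambda x: int(x[0] > 3.0),
    lambda x: int(x[1] > 0.5),
]

rng = random.Random(0)
X_boot, y_boot = bootstrap_sample([[1.0], [2.0], [3.0], [4.0]], [0, 0, 1, 1], rng)
prediction = bagged_predict(models, [2.5, 1.0])
print(prediction)
```

In a real ensemble each model would be fit to its own bootstrap sample, so the models differ from one another even though they share one training set.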

The general structure of a decision tree classifier is shown in Figure 2-2. It is the

basis of random forest algorithms and on its own is another supervised classification

algorithm. A decision tree sorts data on M levels. Each level has internal nodes, each

node representing a feature of the data, each branch from the node corresponding to

a value of that feature (Mitchell 1997).
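A decision tree of this kind can be sketched as a nested structure in which every internal node tests one feature and every terminal holds a class label. The sketch below is an assumption-laden illustration: it uses threshold tests on numeric features, and the tree itself, including its feature meanings and thresholds, is hypothetical rather than learned from data.

```python
def classify(node, x):
    """Walk from the root to a terminal, following one branch per internal node."""
    while isinstance(node, dict):  # internal nodes are dicts, terminals are labels
        branch = "left" if x[node["feature"]] <= node["threshold"] else "right"
        node = node[branch]
    return node

def depth(node):
    """Number of levels of internal nodes between the root and the deepest terminal."""
    if not isinstance(node, dict):
        return 0
    return 1 + max(depth(node["left"]), depth(node["right"]))

# Hypothetical tree: feature 0 might be a rainfall total, feature 1 an
# indicator for nearby buildings; labels are 0 (low damage) and 1 (high damage).
tree = {
    "feature": 0, "threshold": 30.0,
    "left": 0,
    "right": {"feature": 1, "threshold": 0.5, "left": 0, "right": 1},
}

print(classify(tree, [50.0, 1.0]), depth(tree))
```

Each path from the root to a terminal corresponds to one sequence of decision rules.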

Random forests consist of n trees. n is generally a very large

number, limited by the fact that very high numbers may introduce longer computation

time. A second parameter, m, sets how many variables are randomly chosen for growing a tree. The default value for m is the square root of the number of variables in the

feature vectors. Each tree is grown in a subspace of m randomly chosen variables,

which means it is trained on only those random variables. This introduces variability

into the trees (Breiman 2001).
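The random choice of variables can be sketched as below, assuming one subset per tree as described above. Implementations commonly redraw the subset at every split instead. The function names, the seed, and the count of 16 variables are hypothetical.

```python
import math
import random

def default_subspace_size(n_features):
    """Square root of the number of variables, rounded down, and at least 1."""
    return max(1, math.isqrt(n_features))

def draw_feature_subsets(n_features, n_trees, seed=0):
    """Give each tree its own random subset of m distinct feature indices."""
    rng = random.Random(seed)
    m = default_subspace_size(n_features)
    return [sorted(rng.sample(range(n_features), m)) for _ in range(n_trees)]

# Hypothetical setting: 16 variables per feature vector, 5 trees.
subsets = draw_feature_subsets(n_features=16, n_trees=5)
for tree_id, subset in enumerate(subsets):
    print(tree_id, subset)
```

Because the subsets differ from tree to tree, the trees are less correlated with one another, which is what makes their combined vote useful.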


[Figure: decision tree diagram running from the root at depth 0, through internal nodes t, to the terminals (class labels) at depth (M-1). Legend: C(t) - subset of classes accessible from node t; F(t) - feature subset used at node t; D(t) - decision rule used at node t.]

Figure 2-2: Structure of a decision tree. (Safavian 1991)

2.2 Methodology

Machine learning techniques will be used to build a robust model of landslide damage,

based on the hypothesis that information about past events can help us predict the

damage of future events. A historical database of landslide occurrence and damage,

geospatial data, and weather data are used to prepare the feature vectors. Feature

vectors are created which contain elements reflecting both spatial risk, in an anthropogenic sense, and temporal risk, in the weather patterns surrounding each slide date

taken from the historical inventory. Each slide has been classified as a high or low

damage landslide, and the output of the algorithm is a classification of landslides,

based on the information in the feature vector, into low damage or high damage. Two classes

were decided on because of the organization of the data, as discussed in a later section. The algorithm trains on a subset of 80% of the data, and tests on the remaining

20% in order to assess the success that it achieves. The training and test data sets

are randomly drawn without replacement from the full set.


To validate a model's success, the data is resampled five times. The algorithm is trained on each subsample

ten times. Any measure of accuracy is therefore an average over the fifty times that the algorithm is

applied. Based on the results, the algorithm is refined and tuned. Some characteristics of the

data required refinement of the process for better success: the feature vectors

have a large number of features which may not be significant, the classes are severely

unbalanced, and success is unbalanced between the classes. Total success is presented based

on the best refinements of the model.
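The validation scheme described above (five random 80/20 resamples, ten trainings on each, fifty runs averaged) can be sketched as follows. This is a sketch under stated assumptions rather than the code used in this work: `repeated_validation` and `majority_baseline` are hypothetical names, the data is a toy set, and the baseline that always predicts the most common training label merely stands in for the random forest.

```python
import random
from collections import Counter
from statistics import mean

def repeated_validation(X, y, train_and_score, n_resamples=5, n_trainings=10,
                        test_fraction=0.2, seed=0):
    """Average a score over n_resamples random splits times n_trainings runs each."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_resamples):
        indices = list(range(len(X)))
        rng.shuffle(indices)  # new random split, drawn without replacement
        n_test = int(round(test_fraction * len(X)))
        test_idx, train_idx = indices[:n_test], indices[n_test:]
        train = ([X[i] for i in train_idx], [y[i] for i in train_idx])
        test = ([X[i] for i in test_idx], [y[i] for i in test_idx])
        for _ in range(n_trainings):
            scores.append(train_and_score(train, test, rng))
    return mean(scores), len(scores)

def majority_baseline(train, test, rng):
    """Stand-in model: predict the most common training label; return test accuracy."""
    _, y_train = train
    _, y_test = test
    guess = Counter(y_train).most_common(1)[0][0]
    return sum(1 for label in y_test if label == guess) / len(y_test)

# Hypothetical unbalanced toy data: 4 high damage (1) and 16 low damage (0) samples.
X = [[float(i)] for i in range(20)]
y = [1 if i % 5 == 0 else 0 for i in range(20)]

average_accuracy, n_runs = repeated_validation(X, y, majority_baseline)
print(n_runs, average_accuracy)
```

A randomized learner such as a random forest would use the `rng` argument, so the ten trainings on one split would give different scores. The deterministic baseline here ignores it.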

2.3

Data

Inferences will be drawn about the future of landslide activity based on an examination of past occurrences. Each instance is therefore drawn from a historic dataset

that contains thousands of individual slide records. To create feature vectors which