T est: Compa ring

advertisement

Comparing two proportions

Example:

Chinook Salmon

Ho : Sex ratio is the same for the

two capture methods during

the early run

Test:

H0 : 1 = 2 vs. HA : 1 6= 2

Data:

A 2 2 contingency table

Dene

1 = Probability of catching a

female with hook and line

during the early run

1 = Probability of catching a

female with a net during

the early run

Females

Hook

and

Line

Net

Y1 = 172

Y2 = 165

Males n1 Y1 = 119 n2 Y2 = 202

Totals

n1 = 291

n2 = 367

124

Estimated proportions:

Estimated variance:

Y

172 = 0:5911

p1 = 1 =

n1 291

p2 =

Y2 165

=

= 0:4496

n2 367

Sp21 p2 =

p1(1 p1) p2(1 p2)

+

n1

n2

= (:5911)(:4089) + (:4496)(:5504)

291

367

= :0015

Is the dierence between

p1 = 0:5911 and p2 = 0:4496

greater than what can be

attributed to sampling variation?

V ar(p1 p2) = V ar(p1) + V ar(p2)

2Cov(p1; p2)

= 1(1 1) + 2(1 2)

n1

125

n2

126

Standard Error:

p

Sp1 p2 = :0015 = :0388

127

Test:

p1 p2

Sp1 p2

= :5911 :4496

:0388

= 3:65

95% condence interval for 1 2

Z =

(p1 p2) z=2Sp1 p2

%

.5911

)

"

.4496

(separate variance estimates)

"

z:025 = 1:96

.0388

0:1415 :0760

)

(:064; :218)

p-value = 2(:00013) = :00026

Conclusion:

Conclusion:

128

Test:

(pooled variance estimates)

First compute a pooled estimate of the

success probability

n p + n2p2

= Y1 + Y2

p= 1 1

n1 + n2

n1 + n + 2

= 172 + 165 = :51216

291 + 367

Estimate

V ar(p1 p2) = V ar(p1) + V ar(p2)

as

= p(1 p) + p(1 p) = :00154

S2

p1 p2

Then

n1

n2

129

and

p1 p2

Sp1 p2

= :5911 :4496

:0392

= 3:61

Z =

p-value = 2(:000156) = :00031

Note:

Z 2 is equal to the Pearson

chi-square statistic .

p

Sp1 p2 = :00154 = :03924

130

2-sample test for equality of proportions

without continuity correction

S-PLUS for windows:

data: V1 and V2 from data set salmon2

X-square = 13.0018, df = 1, p-value = 0.0003

alternative hypothesis: two.sided

95 percent confidence interval:

0.06544146 0.21750656

sample estimates:

prop'n in Group 1 prop'n in Group 2

0.5910653

0.4495913

Create a spreadsheet

V1 V2

1 172 291

2-sample test for equality of proportions

with continuity correction

2 165 367

Statistics ) Compare samples

) Counts and proportions

) proportion parameters

data: V1 and V2 from data set salmon2

X-square = 12.4417, df = 1, p-value = 0.0004

alternative hypothesis: two.sided

95 percent confidence interval:

0.06236085 0.22058717

sample estimates:

prop'n in Group 1 prop'n in Group 2

0.5910653

0.4495913

Fill in the boxes and check o

Yates continuity correction

131

S-PLUS code

#

#

#

#

This code compares proportions

for two independent binomial samples

It is stored in the file

binomial2.ssc

cdat<-read.table("c:/courses/st557/sas/hdata.dat",

sep=c(1, 5, 7, 9, 10, 11, 12, 14, 15 ),

col.names=c("year","month","day","biweek","run",

"gear","age", "sex", "length" ))

# Construct a 2x2 contingency table

# for the sex by gear categories

# for the two runs

132

# Construct vector of total counts

# (all fish caught for the two gear

# types in the early run)

ny <- apply(ytab[,,1],2,sum)

#

#

#

#

#

#

Apply the prop.test function to

test the null hypothesis of equal

proportions. The alt="two.sided"

option specifies a two-sided

alternative. The correct="F" option

turns off the continuity correction

prop.test(y,ny,alt="two.sided",correct="F")

ytab<-table(cdat$sex,cdat$gear,cdat$run)

# Repeat the test with continuity correction

prop.test(y,ny,alt="two.sided",correct="T")

# Construct vector of success counts

# (female counts for the two gear types

# in the early run)

# Make a 99% confidence interval

prop.test(y,ny,conf.level=.99)$conf.int

y <- ytab[1,,1]

133

134

SAS code

/* Program to analyze the 1999 Chinook

salmon data. This program is stored

in the file chinook2.sas

*/

data set1;

infile 'c:\courses\st557\sas\hdata.dat';

input (year month day biweek run gear

age sexa length)

(4. 2. 2. 1. 1. 1. 2. $1. 4.);

rage=int(age/10);

oage=age-(10*rage);

if(sexa = 'F') then sex=1; else sex=2;

run;

/* Attach labels to categories */

proc format;

value run 1 = 'Early'

2 = 'Late';

value sex 1 = 'Female'

2 = 'Male';

value gear 1 = 'Hook'

2 = 'Net';

run;

/* Examine partial association between

sex and method of capture within

each run. */

proc sort data=set1; by run;

run;

proc freq data=set1; by run;

table gear*sex / chisq Fisher

nopercent nocol expected;

format sex sex. gear gear. run run.;

run;

136

135

Frequency

Expected

Row Pct Female Male

Sample Size Determination

Hook

172

119

149.04 141.96

59.11 40.89

291

Net

165

202

187.96 179.04

44.96 55.04

367

Total

337

(n responses in each group)

Total

321

95% condence interval for 1 2

p1 p 2

658

]

%

(p1

j

Statistic

DF

Value

Prob

Chi-Square

1 13.0018 0.0003

Likelihood Ratio Chi-Square 1 13.0545 0.0003

Continuity Adj. Chi-Square

1 12.4417 0.0004

p2) + z=2Sp1 p2

]

"

L = half length

Sample size needed for each group

z=2 #2

n=

[1(1 1) + 2(1 2)]

L

"

Fisher's Exact Test

Table Probability (P)

Two-sided Pr <= P

j

%

[

9.352E-05

4.022E-04

137

138

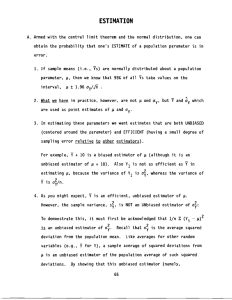

Sample Size Determination

Two sided alternative:

(n responses in each group)

Ha : 1 6= 2

Test of the null hypothesis

Compute

Ho : 1 2

p =

Signicance level ( = :05)

R =

Power (power = 1 = :80)

1 2

v

u

u

t

2

2p(1 p)

1(1 1) + 2(1 2)

Sample size needed for each group

2

z + Rz=2 [1(1 1) + 2(1 2)]

n=

(1 2)2

Size of alternative

j1 2j = 0:08

140

139

One sided alternative:

Ha : 1 > 2 or Ha : 1 < 2

Correction given by Fleiss, J. L.

(1981), Statisitcal Methods for Rates

and Proportions, Wiley, New York.

(pages 42-43 and Table A3)

Compute

p =

R =

1 2

v

u

u

t

2

2p(1 p)

1(1 1) + 2(1 2)

Sample size needed for each group

z + Rz 2 [1(1 1) + 2(1 2)]

n=

(1 2)2

141

ncorrected =

2

n4

4

v

u

u

t

1+ 1+

4

njp1 p2j

32

5

142

/* This program computes sample sizes

needed to obtain tests with a

specified power values for detecting

specified differences between two

proportions. This program is stored

in the file

size2p.sas

*/

* Enter a set of power values;

power = {.8 .9 .95 .99};

* Enter the type I error level;

alpha = .05;

options nodate nonumber linesize=68;

* Compute sample sizes;

proc iml;

start samples;

za = probit(1-alpha/2);

za1 = probit(1-alpha);

nb = ncol(power);

np = ncol(power);

size = j(1,np);

size1 = j(1,np);

/* Enter the probability of success

for the first population */

p1 = .58;

/* Enter the probability of success

for the second population */

p = (p1+p2)/2;

rp = sqrt(2*p*(1-p)/

(p1*(1-p1)+p2*(1-p2)));

p2 = .50;

144

143

* Cycle across power levels;

do i2 = 1 to np;

zb = probit(power[1,i2]);

size[1,i2] = ((za*rp+zb)**2)*

(p1*(1-p1)+p2*(1-p2))/((p1-p2)**2);

size1[1,i2] = ((za1*rp+zb)**2)*

(p1*(1-p1)+p2*(1-p2))/((p1-p2)**2);

end;

/* Compute corrected sample sizes using

the Fleiss (1981) correction */

sizec=(size/4)#(j(1,np)+sqrt(j(1,np)

+4/(size*abs(p1-p2))))##2;

print,,,,, 'Sample sizes for testing equality',

' of two proportions';

print, 'The number of observations needed',

' for each treatment group';

print,,, p1 p2 alpha power;

sizer = int(size) + j(1,np);

print 'Sample sizes (2-sided test):' sizer;

sizerc = int(sizec) + j(1,np);

print 'Corrected sizes (2-sided test):' sizerc;

size1c=(size1/4)#(j(1,np)+sqrt(j(1,np)

+4/(size1*abs(p1-p2))))##2;

size1rc = int(size1c) + j(1,np);

print 'Corrected sizes (1-sided test):' size1rc;

size1r = int(size1) + j(1,np);

print 'Sample sizes (1-sided test):' size1r;

145

146

/* Determine sample sizes for

constructing confidence intervals */

/* Enter confidence level */

level=0.95;

percent = level*100;

print,,,,'Sample sizes for' percent 'percent',

'

confidence intervals';

print,,'The number of observations for each',

' treatment group';

print,,,'

Half length

Sample size';

print me '

' n;

/* Enter values for margin of error */

me = {0.04, 0.03, 0.02, 0.01};

alpha = 1-level;

za = probit(1-alpha/2);

np = ncol(me);

size = j(1,np);

finish;

run samples;

/* Compute sample sizes */

n = ((za/me)##2)#(p1*(1-p1)+p2*(1-p2));

n = int(n) + j(np,1);

percent = level*100;

148

147

Sample sizes for testing equality

of two proportions

S-PLUS code:

The number of observations needed

for each treatment group

P1

P2

ALPHA

0.58

0.5

0.05

Sample sizes(2-sided test):

Sample sizes(1-sided test):

Corrected sizes (2-sided ):

Corrected sizes (1-sided ):

POWER

0.8

609

479

633

504

0.9 0.95 0.99

814 1006 1422

663 838 1220

839 1031 1447

688 863 1245

Sample sizes for 95 percent

confidence intervals

The number of observations for each

treatment group

Half length

ME

0.04

0.03

0.02

0.01

Sample size

N

1186

2107

4741

18962

149

#

#

#

#

#

#

#

#

This program computes sample sizes needed

to obtain a test of a hypothesis about a

difference between two proportion with a

specified power value. It also computes

the number of observations needed to obtain

a confidence interval with a specified

accuracy. This program is stored in the file

size2p.ssc

# Specify the probability of success

# for the first population

p1 <- c(.58)

# Specify the probability of success

# for the second population

p2 <- c(.50)

# Enter power values

power <- c(.8, .9, .95, .99)

150

# Increase sample size to next largest integer

# and print results

# Enter the type I error level

alpha <- .05

size <- ceiling(size)

cat("\n \n \n p1=",p1,"p2=", p2, "alpha=",

alpha,"power=", power)

cat("\n Sample sizes (2-sided test): " , size)

za <- qnorm(1-alpha/2)

za1 <- qnorm(1-alpha)

nb <- length(power)

p <- (p1+p2)/2

va <- p1*(1-p1)+p2*(1-p2)

rp <- sqrt(2*p*(1-p)/va)

cat("Sample sizes for testing equality

of two proportions")

# Obtain a sample size for each of

# the requested power values

zb <- qnorm(power)

size <- ((za*rp+zb)^2)*va/((p1-p2)^2)

size1 <- ((za1*rp+zb)^2)*va/((p1-p2)^2)

size1 <- ceiling(size1)

cat("\n \n p1=",p1,"p2=", p2, "alpha=",

alpha,"power=", power)

cat("\n Sample sizes (1-sided test): " , size1)

# Compute corrected sample sizes according to

# Fleiss, J. L. (1981) Statistical Methods for

# Rates and Proportions, Wiley, New York.

sizec<-(size/4)*(1+sqrt(1+4/(size*abs(p1-p2))))^2

sizecr <- ceiling(sizec)

cat("\n \n \n p1=",p1,"p2=", p2, "alpha=",

alpha,"power=", power)

cat("\n Corrected sizes(2-sided test): ",sizecr)

151

s1c<-(size1/4)*(1+sqrt(1+4/(size1*abs(p1-p2))))^2

s1cr <- ceiling(s1c)

cat("\n \n \n p1=",p1,"p2=", p2, "alpha=",

alpha,"power=", power)

cat("\n Corrected sizes(1-sided test): ",s1cr)

#

#

#

#

Compute sample size needed to obtain a

confidence interval for the difference

between the two proportions with a

specified margin of error

# Enter the confidence level

level <- c(0.95)

152

# Compute needed sample sizes

alpha2 <- (1.0-level)/2

np <- length(me)

one <- rep(1,np)

n <-((qnorm(one-alpha2)/me)^2)*va

n <- ceiling(n)

percent <- level*100;

cat("\n \n \n Sample sizes for ", percent,

" percent confidence intervals: \n")

cat("margin of error: ", me, "\n")

cat("sample size:

", n, "\n")

# Enter desired margin of error

me <- c(0.04, .02, .01)

153

154

Sample sizes for testing equality

of two proportions

p1= 0.58 p2= 0.5 alpha= 0.05

power= 0.8 0.9 0.95 0.99

Sample sizes (2-sided test): 609 814 1006 1422

p1= 0.58 p2= 0.5 alpha= 0.05

power= 0.8 0.9 0.95 0.99

Sample sizes (1-sided test): 479 663 838 1220

p1= 0.58 p2= 0.5 alpha= 0.05

power= 0.8 0.9 0.95 0.99

Corrected sizes(2-sided test): 634 839 1031 1447

Sample sizes for 95 percent

confidence intervals:

margin of error: 0.04 0.02 0.01

sample size:

1186 4741 18962

p1= 0.58 p2= 0.5 alpha= 0.05

power= 0.8 0.9 0.95 0.99

Corrected sizes(1-sided test): 504 688 863 1245

156

155

S-PLUS for windows

Statistics

) Power and Sample size

) Binomial Proportion

) change Sample Type to

and specify

values of two proportions

Two Sample

*** Power Table ***

p1 p2 delta alpha

1 0.5 0.58 0.08 0.05

2 0.5 0.58 0.08 0.05

3 0.5 0.58 0.08 0.05

4 0.5 0.58 0.08 0.05

5 0.5 0.58 0.08 0.05

6 0.5 0.58 0.08 0.05

power

0.800

0.850

0.900

0.950

0.975

0.990

n1

634

721

839

1031

1214

1447

n2

634

721

839

1031

1214

1447

Comparing Several Proportions:

Several independent binomial

experiments (or samples)

Y1 = number of successful outcomes in

n1 trials for experiment 1

Bin(n1; 1)

Y2 = number of successful outcomes in

n2 trials for experiment 2

Bin(n2; 2)

..

..

Yk = number of successful outcomes in

nk trials for experiment k

Bin(nk; k)

157

158

Example:

Test:

Sunower seeds were stored in three

types of storage facilities. 100 seeds

were taken from each of the three facilities and the germination of each seed

was monitored. Dene

H0 : 1 = 2 = = k vs.

HA : i 6= j for some i 6= j

Note:

H0 : 1 = 2 = = k

is referred to as the hypothesis of

homogeneity

or

independence

i = proportion of seeds from the

i-th storage facility that will

germinate

%

the "success rate" i does not

depend on the experiment (or

population)

Are there dierences in germination

rates?

159

160

A 3 2 contingency table

Storage Number of Germination

Facility Seeds Tested

Rates

Type 1

100

69%

Type 2

100

58%

Type 3

100

41%

Total

300

56%

Storage Number that

Facility Germinated

Number that

failed to

Germinate

Totals

Type 1

Y11 = 69

Y12 = 31

n1 = 100

Type 2

Y21 = 58

Y22 = 42

n2 = 100

Type 3

Y31 = 41

Y32 = 59

n3 = 100

Totals

Y+1 = 168

Y+2 = 132

161

Y+j

\Expected counts" m

^ ij = YiY+++

Pearson statistic:

m

^ 11 = 56 m

^ 12 = 44

m

^ 21 = 56 m

^ 22 = 44

m

^ 31 = 56 m

^ 32 = 44

Here

0

1

of seeds A

m

^ i1 = @ number

of type i

0

(Yij m

^ ij )2

m

^ ij

i=1 j =1

I

X

X2 =

1

A

overall germination

rate

@

J

X

2

2

= (69 56) + (31 44)

56

44

2

2

+ (58 56) + (42 44)

56

44

2

2

+ (41 56) + (59 44)

56

44

= 16:15

163

162

Deviance

Reject H0 : 1 = 2 = 3

if

G2 = 2

J

X

i=1 j =1

Yij log(Yij =m

^ ij )

= 2 69 log(69=56) + 31 log(31=44)

+58 log(58=56) + 42 log(42=44)

Note that

d:f:

I

X

2

6

4

X 2 > 22;

22;:05

22;:005

(log-likelihood ratio test)

3

7

5

+41 log(41=56) + 59 log(59=44)

= 5:99

= 10:60

= 20:12

= (number of rows 1)

(of columns 1)

164

Reject H0 : 1 = 2 = 3 if

G2 > 22;

X 2 and G2 have the same limiting cen-

tral chi-squared distribution when the

null hypthesis is true.

165

The joint probability function (or

likelihood function) is

Maximum likelihood estimation:

Y11

Bin(n1; 1)

Y21

Bin(n2; 2)

Y31

Bin(n3; 3)

Pr (Y11 = y11; Y21 = y21; Y31 = y31)

0

1

= @ yn1 A 1y11 (1 1)n1 y11

11

are independent binomial random

variables.

3

Y

0

@

0

@

1

n2 A y21(1 )n2 y21

2

y21 2

1

n3 A y31

n3 y31

y31 3 (1 3)

20

1

3

= 4@ yni A iyi1(1 i)ni yi15

i1

i=1

= L(1; 2; 3; y11; y21; y31)

166

167

Since the natural logarithm is a monotone increasing function, the value

that maximizes L(; data) is obtained

by maximizing

Consider the hypothesized model

where

1 = 2 = 3 = ;

01

`(; data) = log[L(; data)]

=

Then the likelihood function becomes

L(; data) =

3

Y

i=1

2

4

3

ni!

yi1(1 )ni yi15

yi1!(ni yi1)!

168

3

X

[log(ni!) log(yi1!)

i=1

log((ni yi1)!)]

+ log()

3

X

i=1

+ log(1 )

yi1

3

X

i=1

(ni yi1)

169

Solve the likelihood equation

0 = @`( ; data)

@

3

X

=

i=1

yi1

Maximum likelihood estimators of

mean (or expected) counts:

3

X

ni yi1

i=1

1 The solution is the maximum likelihood

estimate for for the \independence"

or \homogeneity" model.

3

X

^ =

i=1

yi1

3

X

i=1

ni

= y+1

y++

For this model: Yi1 Bin(ni; )

and mi1 = E (Yi1) = ni

Then E (Yi2) = E (ni Yi1) = ni(1 )

m

^ i1 = ni^ = Yi+Y+1

Y++

m

^ i2 = ni(1 ^) = Yi+Y+2

Y++

Formula for \expected counts

0

10

1

total

for

total

for

@

A@

A

i th row

j th column

0

1

m

^ ij =

total

for

the

@

A

entire table

The formula for the \expected" counts

corresponds to the maximum likelihood

estimators for the means of the counts

for the specied model.

171

170

Maximum likelihood estimates

for the alternative model

(model A):

Yi1 Bin(ni; i)

0 i 1

i = 1; 2; 3

0 = @`

@i

= yi1 ni yi1 ; i = 1; 2; 3

i 1 i

log-likelihood function

`(1; 2; 3; data) =

3

X

i=1

flog(ni!) log(y1i!) log((ni y1i)!)g

+

+

3

X

i=1

3

X

i=1

Likelihood equations:

yi1 log(i)

Solutions:

y

^i = i1 = pi ; i = 1; 2; 3

ni

(ni yi1) log(1 i)

172

173

Maximum likelihood estimates

of expected counts for the

alternative model

m

^ i1 = ni^i =

1

0

yi 1 A

@

ni

ni

m

^ i2 = ni(1 ^i) =

1

yi1 A

ni

nested models;

Model A: Yi1 Bin(ni; i) i = 1; 2; 3

are independent random counts.

= yi1

0

n

ni @ i

Likelihood ratio tests for comparing

= yi2

Maximum likelihood estimators are

Y11

n1

Y

^2 = p2 = 21

n2

Y

^3 = p3 = 31

n3

^1 = p1 =

are the observed counts.

This is called a \saturated"

model because it contains as

many parameters (1; 2; 3)

as independent counts

(Y11; Y21; Y31).

175

174

Ratio of Likelihoods:

^ =

Model B: Model A with the added restrictions that 1 = 2 = 3 = 3

X

^ =

i=1

Yi1

3

X

i=1

ni

Lmodel B(^; data)

Lmodel A(^1; ^2; ^3; data)

ni!

^yi1(1 ^)ni yi1

y

!(

n

y

)!

i

1

i

i

1

i

=1

= Y3

ni !

^iyi1(1 ^i)ni yi1

i=1 yi1!(ni yi1)!

3

Y

=

3

Y

i=1

2 3y

2

i1 4

4 5

^

^i

3

1 ^ 5ni yi2

1 ^i

Note that 0 ^ 1 and small

values of ^ suggest that model

A is substantially better than

model B.

176

177

2

3

of parameter 5

d.f. = 4 dimension

space for model A

2

3

dimension

of

parameter

4

5

space for model B

When the null hypothesis

(model B) is correct and

each ni is \large", then

= 3 1= 2

and in this case

2 log(^ ) 2d:f :

2 log(^ ) = 2

8

3

<X

:

^i !

yi1 log

^

i=1

9

1

^i !=

+ yi2 log

1 ^ ;

i=1

3

X

=2

3

X

2

X

i=1 j =1

yij

1

0

m

A;ij

A

log @

^

m

^ B;ij

179

178

\Expected" counts:

Model A: m

^ A;i1 = ni^i = yi1

m

^ B;i2 = ni(1 ^i)

= (ni yi1) = yi2

Model B: m

^ B;i1 = ni^ = m

^ i1

m

^ B;i1 = ni(1 ^) = m

^ i2

Then

0

1

y

yij log @ ij A

m

^ ij

i j

= G2:

2 log(^ ) = 2

X X

180

The general form for the Pearson

chi-squared statistic is

X X (m

^ A;ij m

^ B;ij )2

X2 =

:

m

^ B;ij

i j

When model A (the alternative

hypothesis) places no restrictions on

the parameters it is called the

general alternative and

m

^ A;ij = Yij :

Simplifying the notation, m

^ ij = m

^ B;ij;

the Pearson statistic becomes

X X (Yij

m

^ ij )2

X2 =

m

^ ij

i j

181