Reparameterization, Restrictions, and Avoiding Generalized Inverses

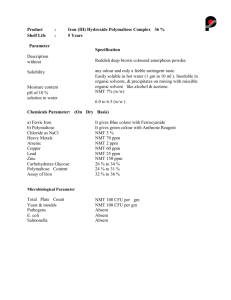


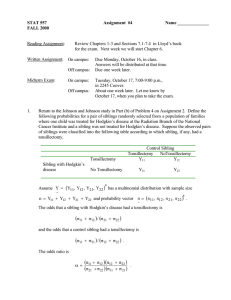

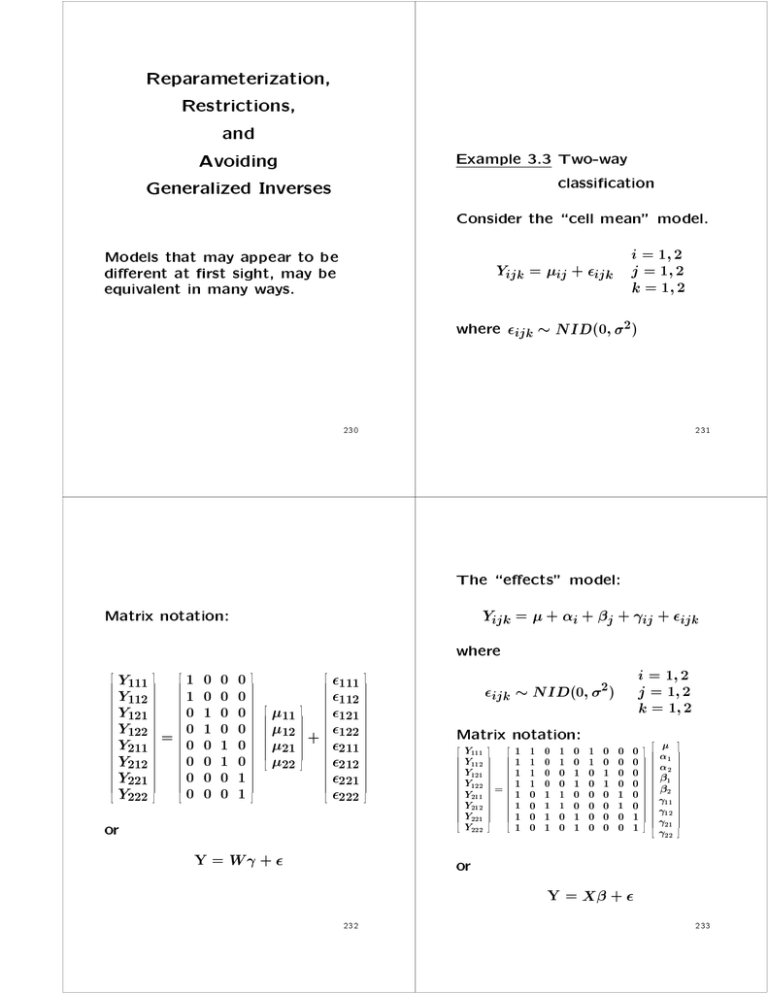

Models that may appear to be different at first sight may be equivalent in many ways.

**Example 3.3** (Two-way classification). Consider the "cell means" model

$$Y_{ijk} = \mu_{ij} + \epsilon_{ijk}, \qquad i = 1, 2;\ j = 1, 2;\ k = 1, 2,$$

where $\epsilon_{ijk} \sim \text{NID}(0, \sigma^2)$. In matrix notation,

$$
\begin{bmatrix} Y_{111} \\ Y_{112} \\ Y_{121} \\ Y_{122} \\ Y_{211} \\ Y_{212} \\ Y_{221} \\ Y_{222} \end{bmatrix}
=
\begin{bmatrix}
1 & 0 & 0 & 0 \\
1 & 0 & 0 & 0 \\
0 & 1 & 0 & 0 \\
0 & 1 & 0 & 0 \\
0 & 0 & 1 & 0 \\
0 & 0 & 1 & 0 \\
0 & 0 & 0 & 1 \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} \mu_{11} \\ \mu_{12} \\ \mu_{21} \\ \mu_{22} \end{bmatrix}
+
\begin{bmatrix} \epsilon_{111} \\ \epsilon_{112} \\ \epsilon_{121} \\ \epsilon_{122} \\ \epsilon_{211} \\ \epsilon_{212} \\ \epsilon_{221} \\ \epsilon_{222} \end{bmatrix},
$$

or $\mathbf{Y} = W\boldsymbol{\mu} + \boldsymbol{\epsilon}$.

The "effects" model is

$$Y_{ijk} = \mu + \alpha_i + \beta_j + \gamma_{ij} + \epsilon_{ijk}, \qquad i = 1, 2;\ j = 1, 2;\ k = 1, 2,$$

where $\epsilon_{ijk} \sim \text{NID}(0, \sigma^2)$. In matrix notation,

$$
\begin{bmatrix} Y_{111} \\ Y_{112} \\ Y_{121} \\ Y_{122} \\ Y_{211} \\ Y_{212} \\ Y_{221} \\ Y_{222} \end{bmatrix}
=
\begin{bmatrix}
1 & 1 & 0 & 1 & 0 & 1 & 0 & 0 & 0 \\
1 & 1 & 0 & 1 & 0 & 1 & 0 & 0 & 0 \\
1 & 1 & 0 & 0 & 1 & 0 & 1 & 0 & 0 \\
1 & 1 & 0 & 0 & 1 & 0 & 1 & 0 & 0 \\
1 & 0 & 1 & 1 & 0 & 0 & 0 & 1 & 0 \\
1 & 0 & 1 & 1 & 0 & 0 & 0 & 1 & 0 \\
1 & 0 & 1 & 0 & 1 & 0 & 0 & 0 & 1 \\
1 & 0 & 1 & 0 & 1 & 0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} \mu \\ \alpha_1 \\ \alpha_2 \\ \beta_1 \\ \beta_2 \\ \gamma_{11} \\ \gamma_{12} \\ \gamma_{21} \\ \gamma_{22} \end{bmatrix}
+
\begin{bmatrix} \epsilon_{111} \\ \epsilon_{112} \\ \epsilon_{121} \\ \epsilon_{122} \\ \epsilon_{211} \\ \epsilon_{212} \\ \epsilon_{221} \\ \epsilon_{222} \end{bmatrix},
$$

or $\mathbf{Y} = X\boldsymbol{\beta} + \boldsymbol{\epsilon}$.

The models are "equivalent": the space spanned by the columns of $W$ is the same as the space spanned by the columns of $X$.
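The equivalence claim can be checked numerically. The sketch below is an illustration only, written in Python with exact `Fraction` arithmetic; the helper names `matmul`, `orthogonal_basis`, `rank`, and `projection` are hypothetical and not part of these notes. It builds $W$ and $X$ for Example 3.3, verifies $W = XF$ and $X = WG$ for one particular choice of $F$ and $G$, compares ranks, and checks that $P_X = P_W$. The projections are computed from a Gram-Schmidt basis of each column space instead of a generalized inverse, which is legitimate because the projection onto a column space is unique.

```python
from fractions import Fraction
from itertools import product

def matmul(A, B):
    """Plain list-of-lists matrix product."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def orthogonal_basis(M):
    """Exact Gram-Schmidt on the columns of M; linearly dependent columns are dropped."""
    basis = []
    for col in zip(*M):
        v = [Fraction(x) for x in col]
        for q in basis:
            coef = sum(a * b for a, b in zip(v, q)) / sum(b * b for b in q)
            v = [a - coef * b for a, b in zip(v, q)]
        if any(v):  # keep only columns that add a new direction
            basis.append(v)
    return basis

def rank(M):
    return len(orthogonal_basis(M))

def projection(M):
    """P = sum of q q^T / (q^T q) over an orthogonal basis; equals M (M^T M)^- M^T."""
    n = len(M)
    P = [[Fraction(0)] * n for _ in range(n)]
    for q in orthogonal_basis(M):
        qq = sum(b * b for b in q)
        for r in range(n):
            for c in range(n):
                P[r][c] += q[r] * q[c] / qq
    return P

cells = [(1, 1), (1, 2), (2, 1), (2, 2)]
# Observations ordered Y111, Y112, Y121, ..., Y222 (i, j, k each in {1, 2}).
obs = list(product((1, 2), repeat=3))

# Cell means model matrix W (8 x 4): indicator of cell (i, j).
W = [[int((i, j) == cell) for cell in cells] for i, j, _ in obs]
# Effects model matrix X (8 x 9): columns mu, a1, a2, b1, b2, g11, g12, g21, g22.
X = [[1, int(i == 1), int(i == 2), int(j == 1), int(j == 2)] + w
     for (i, j, _), w in zip(obs, W)]

# F (9 x 4) selects the four interaction columns of X; G (4 x 9) rebuilds X from W.
F = [[0] * 4 for _ in range(5)] + [[int(r == c) for c in range(4)] for r in range(4)]
G = [[1, int(i == 1), int(i == 2), int(j == 1), int(j == 2)]
     + [int((i, j) == cell) for cell in cells] for i, j in cells]

print(matmul(X, F) == W, matmul(W, G) == X)  # True True
print(rank(X), rank(W))                       # 4 4
print(projection(X) == projection(W))        # True
```

Exact rationals are used so that the final comparison tests equality of the two projection matrices rather than agreement up to rounding.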
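One can find matrices $F$ and $G$ such that

$$
W = X
\begin{bmatrix}
0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 \\
1 & 0 & 0 & 0 \\
0 & 1 & 0 & 0 \\
0 & 0 & 1 & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}
= XF
\qquad\text{and}\qquad
X = W
\begin{bmatrix}
1 & 1 & 0 & 1 & 0 & 1 & 0 & 0 & 0 \\
1 & 1 & 0 & 0 & 1 & 0 & 1 & 0 & 0 \\
1 & 0 & 1 & 1 & 0 & 0 & 0 & 1 & 0 \\
1 & 0 & 1 & 0 & 1 & 0 & 0 & 0 & 1
\end{bmatrix}
= WG.
$$

Then

(i) $\operatorname{rank}(X) = \operatorname{rank}(W)$.

(ii) The estimated mean responses are the same:

$$\hat{\mathbf{Y}} = X(X^TX)^-X^T\mathbf{Y} = W(W^TW)^{-1}W^T\mathbf{Y}, \quad\text{or}\quad \hat{\mathbf{Y}} = P_X\mathbf{Y} = P_W\mathbf{Y}.$$

(iii) The residual vectors are the same:

$$\mathbf{e} = \mathbf{Y} - \hat{\mathbf{Y}} = (I - P_X)\mathbf{Y} = (I - P_W)\mathbf{Y}.$$

**Example 3.1** (Regression model for the yield of a chemical process).

$$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \epsilon_i,$$

where $Y_i$ is the yield, $X_{1i}$ the temperature, and $X_{2i}$ the time for the $i$th run. An "equivalent" model is

$$Y_i = \gamma_0 + \gamma_1 (X_{1i} - \bar{X}_{1\cdot}) + \gamma_2 (X_{2i} - \bar{X}_{2\cdot}) + \epsilon_i.$$

For the first model:

$$
\begin{bmatrix} Y_1 \\ Y_2 \\ Y_3 \\ Y_4 \\ Y_5 \end{bmatrix}
=
\begin{bmatrix}
1 & X_{11} & X_{21} \\
1 & X_{12} & X_{22} \\
1 & X_{13} & X_{23} \\
1 & X_{14} & X_{24} \\
1 & X_{15} & X_{25}
\end{bmatrix}
\begin{bmatrix} \beta_0 \\ \beta_1 \\ \beta_2 \end{bmatrix}
+
\begin{bmatrix} \epsilon_1 \\ \epsilon_2 \\ \epsilon_3 \\ \epsilon_4 \\ \epsilon_5 \end{bmatrix}
= X\boldsymbol{\beta} + \boldsymbol{\epsilon}.
$$

For the second model:

$$
\begin{bmatrix} Y_1 \\ Y_2 \\ Y_3 \\ Y_4 \\ Y_5 \end{bmatrix}
=
\begin{bmatrix}
1 & X_{11} - \bar{X}_{1\cdot} & X_{21} - \bar{X}_{2\cdot} \\
1 & X_{12} - \bar{X}_{1\cdot} & X_{22} - \bar{X}_{2\cdot} \\
1 & X_{13} - \bar{X}_{1\cdot} & X_{23} - \bar{X}_{2\cdot} \\
1 & X_{14} - \bar{X}_{1\cdot} & X_{24} - \bar{X}_{2\cdot} \\
1 & X_{15} - \bar{X}_{1\cdot} & X_{25} - \bar{X}_{2\cdot}
\end{bmatrix}
\begin{bmatrix} \gamma_0 \\ \gamma_1 \\ \gamma_2 \end{bmatrix}
+
\begin{bmatrix} \epsilon_1 \\ \epsilon_2 \\ \epsilon_3 \\ \epsilon_4 \\ \epsilon_5 \end{bmatrix}
= W\boldsymbol{\gamma} + \boldsymbol{\epsilon}.
$$

The space spanned by the columns of $X$ is the same as the space spanned by the columns of $W$:

$$
X = W
\begin{bmatrix}
1 & \bar{X}_{1\cdot} & \bar{X}_{2\cdot} \\
0 & 1 & 0 \\
0 & 0 & 1
\end{bmatrix}
= WG
\qquad\text{and}\qquad
W = X
\begin{bmatrix}
1 & -\bar{X}_{1\cdot} & -\bar{X}_{2\cdot} \\
0 & 1 & 0 \\
0 & 0 & 1
\end{bmatrix}
= XF,
$$

and

$$\hat{\mathbf{Y}} = P_X\mathbf{Y} = P_W\mathbf{Y}, \qquad \mathbf{e} = \mathbf{Y} - \hat{\mathbf{Y}} = (I - P_X)\mathbf{Y} = (I - P_W)\mathbf{Y}.$$

**Defn 3.9.** Consider two linear models:

(1) $E(\mathbf{Y}) = X\boldsymbol{\beta}$ and $\operatorname{Var}(\mathbf{Y}) = \Sigma$, and

(2) $E(\mathbf{Y}) = W\boldsymbol{\gamma}$ and $\operatorname{Var}(\mathbf{Y}) = \Sigma$,

where $X$ is an $n \times k$ model matrix and $W$ is an $n \times q$ model matrix.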
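We say that one model is a reparameterization of the other if there is a $k \times q$ matrix $F$ and a $q \times k$ matrix $G$ such that

$$W = XF \quad\text{and}\quad X = WG.$$

The previous examples illustrate that if one model is a reparameterization of the other, then

(i) $\operatorname{rank}(X) = \operatorname{rank}(W)$;

(ii) the least squares estimates of the response means are the same, i.e., $\hat{\mathbf{Y}} = P_X\mathbf{Y} = P_W\mathbf{Y}$;

(iii) the residuals are the same, i.e., $\mathbf{e} = \mathbf{Y} - \hat{\mathbf{Y}} = (I - P_X)\mathbf{Y} = (I - P_W)\mathbf{Y}$;

(iv) when $\Sigma = \sigma^2 I$, an unbiased estimator for $\sigma^2$ is provided by $\text{MSE} = \text{SSE}/(n - \operatorname{rank}(X))$, where

$$\text{SSE} = \mathbf{e}^T\mathbf{e} = \mathbf{Y}^T(I - P_X)\mathbf{Y} = \mathbf{Y}^T(I - P_W)\mathbf{Y}.$$

Reasons for reparameterizing models:

(i) Reduce the number of parameters: obtain a full rank model and avoid the use of generalized inverses.

(ii) Make computations easier. In Example 3.3, $W^TW = 2I$ is diagonal, so $(W^TW)^{-1}$ is easy to compute; in Example 3.1, centering makes the intercept column of $W$ orthogonal to the other two columns.

(iii) Obtain a more meaningful interpretation of the parameters.

**Result 3.12.** Suppose the two linear models

(1) $E(\mathbf{Y}) = X\boldsymbol{\beta}$, $\operatorname{Var}(\mathbf{Y}) = \Sigma$, and

(2) $E(\mathbf{Y}) = W\boldsymbol{\gamma}$, $\operatorname{Var}(\mathbf{Y}) = \Sigma$

are reparameterizations of each other, and let $F$ be a matrix such that $W = XF$. Then

(i) if $C^T\boldsymbol{\beta}$ is estimable for the first model, then, writing $\boldsymbol{\beta} = F\boldsymbol{\gamma}$, $C^TF\boldsymbol{\gamma}$ is estimable under Model 2;

(ii) if $C^T\boldsymbol{\beta}$ is estimable, then $C^T\hat{\boldsymbol{\beta}} = C^TF\hat{\boldsymbol{\gamma}}$, where $\hat{\boldsymbol{\beta}} = (X^TX)^-X^T\mathbf{Y}$ and $\hat{\boldsymbol{\gamma}} = (W^TW)^-W^T\mathbf{Y}$;

(iii) if $H_0: C^T\boldsymbol{\beta} = \mathbf{d}$ is testable under one model, then $H_0: C^TF\boldsymbol{\gamma} = \mathbf{d}$ is testable under the other.

**Proof:** (i) If $C^T\boldsymbol{\beta}$ is estimable for the first model, then (by Result 3.9 (i)) $C^T = \mathbf{a}^TX$ for some $\mathbf{a}$. Hence

$$C^TF = \mathbf{a}^TXF = \mathbf{a}^TW,$$

which implies that $C^TF\boldsymbol{\gamma}$ is estimable for the second model.

(ii) Since $C^T\boldsymbol{\beta}$ is estimable, its unique b.l.u.e. is

$$C^T\hat{\boldsymbol{\beta}} = C^T(X^TX)^-X^T\mathbf{Y} = \mathbf{a}^TX(X^TX)^-X^T\mathbf{Y} = \mathbf{a}^TP_X\mathbf{Y}$$

for some $\mathbf{a}$. Since $C^TF\boldsymbol{\gamma}$ is also estimable, the unique b.l.u.e. for $C^TF\boldsymbol{\gamma}$ is

$$C^TF\hat{\boldsymbol{\gamma}} = C^TF(W^TW)^-W^T\mathbf{Y} = \mathbf{a}^TXF(W^TW)^-W^T\mathbf{Y} = \mathbf{a}^TW(W^TW)^-W^T\mathbf{Y} = \mathbf{a}^TP_W\mathbf{Y}$$

for the same $\mathbf{a}$. Hence, the estimators are the same if $P_X = P_W$. To show this, note that $P_XW = P_XXF = XF = W$, which implies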
$$P_XP_W = P_XW(W^TW)^-W^T = W(W^TW)^-W^T = P_W.$$

By a similar argument, $P_WP_X = P_X$. Then

$$P_W = P_W^T = (P_XP_W)^T = P_W^TP_X^T = P_WP_X = P_X.$$

**Example 3.2** (An effects model).

$$Y_{ij} = \mu + \alpha_i + \epsilon_{ij}$$

with $n_1 = 2$, $n_2 = 1$, and $n_3 = 3$ observations on the three treatments. This model can be expressed as

$$
\begin{bmatrix} Y_{11} \\ Y_{12} \\ Y_{21} \\ Y_{31} \\ Y_{32} \\ Y_{33} \end{bmatrix}
=
\begin{bmatrix}
1 & 1 & 0 & 0 \\
1 & 1 & 0 & 0 \\
1 & 0 & 1 & 0 \\
1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1 \\
1 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} \mu \\ \alpha_1 \\ \alpha_2 \\ \alpha_3 \end{bmatrix}
+
\begin{bmatrix} \epsilon_{11} \\ \epsilon_{12} \\ \epsilon_{21} \\ \epsilon_{31} \\ \epsilon_{32} \\ \epsilon_{33} \end{bmatrix}.
$$

Reparameterize the model as

$$Y_{ij} = \gamma_0 + \gamma_1 X_{1ij} + \gamma_2 X_{2ij} + \epsilon_{ij}$$

using "orthogonal" polynomial contrasts (for factors with equally spaced levels and balanced designs):

$$
\begin{bmatrix} Y_{11} \\ Y_{12} \\ Y_{21} \\ Y_{31} \\ Y_{32} \\ Y_{33} \end{bmatrix}
=
\begin{bmatrix}
1 & -\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{6}} \\
1 & -\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{6}} \\
1 & 0 & -\frac{2}{\sqrt{6}} \\
1 & \frac{1}{\sqrt{2}} & \frac{1}{\sqrt{6}} \\
1 & \frac{1}{\sqrt{2}} & \frac{1}{\sqrt{6}} \\
1 & \frac{1}{\sqrt{2}} & \frac{1}{\sqrt{6}}
\end{bmatrix}
\begin{bmatrix} \gamma_0 \\ \gamma_1 \\ \gamma_2 \end{bmatrix}
+
\begin{bmatrix} \epsilon_{11} \\ \epsilon_{12} \\ \epsilon_{21} \\ \epsilon_{31} \\ \epsilon_{32} \\ \epsilon_{33} \end{bmatrix}.
$$

The unique OLS estimator for $\boldsymbol{\gamma} = (\gamma_0, \gamma_1, \gamma_2)^T$ is

$$
\mathbf{b} = (X^TX)^{-1}X^T\mathbf{Y}
= \begin{bmatrix} \hat{\gamma}_0 \\ \hat{\gamma}_1 \\ \hat{\gamma}_2 \end{bmatrix}
= \begin{bmatrix} 67.000 \\ 5.6568 \\ -4.8989 \end{bmatrix}.
$$

Note that

$$
\begin{aligned}
\hat{\gamma}_0 + \hat{\gamma}_1\left(-\tfrac{1}{\sqrt{2}}\right) + \hat{\gamma}_2\left(\tfrac{1}{\sqrt{6}}\right) &= 61 = \bar{Y}_{1\cdot},\\
\hat{\gamma}_0 + \hat{\gamma}_1(0) + \hat{\gamma}_2\left(-\tfrac{2}{\sqrt{6}}\right) &= 71 = \bar{Y}_{2\cdot},\\
\hat{\gamma}_0 + \hat{\gamma}_1\left(\tfrac{1}{\sqrt{2}}\right) + \hat{\gamma}_2\left(\tfrac{1}{\sqrt{6}}\right) &= 69 = \bar{Y}_{3\cdot}.
\end{aligned}
$$

Reparameterize the model using Helmert contrasts:

$$
\begin{bmatrix} Y_{11} \\ Y_{12} \\ Y_{21} \\ Y_{31} \\ Y_{32} \\ Y_{33} \end{bmatrix}
=
\begin{bmatrix}
1 & -1 & -1 \\
1 & -1 & -1 \\
1 & 1 & -1 \\
1 & 0 & 2 \\
1 & 0 & 2 \\
1 & 0 & 2
\end{bmatrix}
\begin{bmatrix} \gamma_0 \\ \gamma_1 \\ \gamma_2 \end{bmatrix}
+
\begin{bmatrix} \epsilon_{11} \\ \epsilon_{12} \\ \epsilon_{21} \\ \epsilon_{31} \\ \epsilon_{32} \\ \epsilon_{33} \end{bmatrix}.
$$

Write this model as $\mathbf{Y} = X\boldsymbol{\gamma} + \boldsymbol{\epsilon}$, where $X$ is the $6 \times 3$ matrix above and $\boldsymbol{\gamma} = (\gamma_0, \gamma_1, \gamma_2)^T$. Then

$$
X^TX =
\begin{bmatrix}
n_\cdot & n_2 - n_1 & 2n_3 - n_1 - n_2 \\
n_2 - n_1 & n_1 + n_2 & n_1 - n_2 \\
2n_3 - n_1 - n_2 & n_1 - n_2 & n_1 + n_2 + 4n_3
\end{bmatrix}
\quad\text{and}\quad
X^T\mathbf{Y} =
\begin{bmatrix} Y_{\cdot\cdot} \\ Y_{2\cdot} - Y_{1\cdot} \\ 2Y_{3\cdot} - Y_{1\cdot} - Y_{2\cdot} \end{bmatrix},
$$

where $Y_{i\cdot}$ is the total of the observations on treatment $i$, $Y_{\cdot\cdot}$ is the grand total, $n_\cdot = n_1 + n_2 + n_3$, and $\bar{Y}_{i\cdot} = Y_{i\cdot}/n_i$.

The unique OLS estimator for $\boldsymbol{\gamma} = (\gamma_0, \gamma_1, \gamma_2)^T$ is

$$
\mathbf{b} = (X^TX)^{-1}X^T\mathbf{Y}
= \begin{bmatrix} \hat{\gamma}_0 \\ \hat{\gamma}_1 \\ \hat{\gamma}_2 \end{bmatrix}
= \begin{bmatrix}
\frac{1}{3}(\bar{Y}_{1\cdot} + \bar{Y}_{2\cdot} + \bar{Y}_{3\cdot}) \\
\frac{1}{2}(\bar{Y}_{2\cdot} - \bar{Y}_{1\cdot}) \\
\frac{1}{3}\left(\bar{Y}_{3\cdot} - \frac{1}{2}(\bar{Y}_{1\cdot} + \bar{Y}_{2\cdot})\right)
\end{bmatrix}
= \begin{bmatrix} 67 \\ 5 \\ 1 \end{bmatrix}.
$$

Note that

$$
\begin{aligned}
\hat{\gamma}_0 + \hat{\gamma}_1(-1) + \hat{\gamma}_2(-1) &= 61 = \bar{Y}_{1\cdot},\\
\hat{\gamma}_0 + \hat{\gamma}_1(1) + \hat{\gamma}_2(-1) &= 71 = \bar{Y}_{2\cdot},\\
\hat{\gamma}_0 + \hat{\gamma}_1(0) + \hat{\gamma}_2(2) &= 69 = \bar{Y}_{3\cdot}.
\end{aligned}
$$

**Restrictions (side conditions)** are imposed to

(i) give meaning to individual parameters,

(ii) make individual parameters estimable,

(iii) create a full rank model matrix, and

(iv) avoid the use of generalized inverses.

**Example 3.2** (continued). In the effects model $Y_{ij} = \mu + \alpha_i + \epsilon_{ij}$, impose the restriction $\alpha_3 = 0$. Then

$$
\begin{aligned}
E(Y_{1j}) &= \mu + \alpha_1, & j &= 1, \dots, n_1,\\
E(Y_{2j}) &= \mu + \alpha_2, & j &= 1, \dots, n_2,\\
E(Y_{3j}) &= \mu, & j &= 1, \dots, n_3,
\end{aligned}
$$

and

$$
\begin{bmatrix} Y_{11} \\ Y_{12} \\ Y_{21} \\ Y_{31} \\ Y_{32} \\ Y_{33} \end{bmatrix}
=
\begin{bmatrix}
1 & 1 & 0 \\
1 & 1 & 0 \\
1 & 0 & 1 \\
1 & 0 & 0 \\
1 & 0 & 0 \\
1 & 0 & 0
\end{bmatrix}
\begin{bmatrix} \mu \\ \alpha_1 \\ \alpha_2 \end{bmatrix}
+
\begin{bmatrix} \epsilon_{11} \\ \epsilon_{12} \\ \epsilon_{21} \\ \epsilon_{31} \\ \epsilon_{32} \\ \epsilon_{33} \end{bmatrix}.
$$

Write this model as $\mathbf{Y} = X\boldsymbol{\beta} + \boldsymbol{\epsilon}$, where $X$ is the $6 \times 3$ matrix above and $\boldsymbol{\beta} = (\mu, \alpha_1, \alpha_2)^T$. Then

$$
X^TX =
\begin{bmatrix}
n_\cdot & n_1 & n_2 \\
n_1 & n_1 & 0 \\
n_2 & 0 & n_2
\end{bmatrix}
\quad\text{and}\quad
X^T\mathbf{Y} = \begin{bmatrix} Y_{\cdot\cdot} \\ Y_{1\cdot} \\ Y_{2\cdot} \end{bmatrix},
$$

and the unique OLS estimator for $\boldsymbol{\beta} = (\mu, \alpha_1, \alpha_2)^T$ is

$$
\mathbf{b} = (X^TX)^{-1}X^T\mathbf{Y}
= \frac{1}{n_3}
\begin{bmatrix}
1 & -1 & -1 \\
-1 & 1 + \frac{n_3}{n_1} & 1 \\
-1 & 1 & 1 + \frac{n_3}{n_2}
\end{bmatrix}
\begin{bmatrix} Y_{\cdot\cdot} \\ Y_{1\cdot} \\ Y_{2\cdot} \end{bmatrix}
= \begin{bmatrix} \bar{Y}_{3\cdot} \\ \bar{Y}_{1\cdot} - \bar{Y}_{3\cdot} \\ \bar{Y}_{2\cdot} - \bar{Y}_{3\cdot} \end{bmatrix}
= \begin{bmatrix} \hat{\mu} \\ \hat{\alpha}_1 \\ \hat{\alpha}_2 \end{bmatrix}.
$$

Consider the model $Y_{ij} = \mu + \alpha_i + \epsilon_{ij}$ with the restriction $\alpha_1 + \alpha_2 + \alpha_3 = 0$. Then $\alpha_3 = -\alpha_1 - \alpha_2$ and

$$
\begin{aligned}
E(Y_{1j}) &= \mu + \alpha_1, & j &= 1, \dots, n_1,\\
E(Y_{2j}) &= \mu + \alpha_2, & j &= 1, \dots, n_2,\\
E(Y_{3j}) &= \mu + \alpha_3 = \mu - \alpha_1 - \alpha_2, & j &= 1, \dots, n_3,
\end{aligned}
$$

and

$$
\begin{bmatrix} Y_{11} \\ Y_{12} \\ Y_{21} \\ Y_{31} \\ Y_{32} \\ Y_{33} \end{bmatrix}
=
\begin{bmatrix}
1 & 1 & 0 \\
1 & 1 & 0 \\
1 & 0 & 1 \\
1 & -1 & -1 \\
1 & -1 & -1 \\
1 & -1 & -1
\end{bmatrix}
\begin{bmatrix} \mu \\ \alpha_1 \\ \alpha_2 \end{bmatrix}
+
\begin{bmatrix} \epsilon_{11} \\ \epsilon_{12} \\ \epsilon_{21} \\ \epsilon_{31} \\ \epsilon_{32} \\ \epsilon_{33} \end{bmatrix}.
$$

This model is $\mathbf{Y} = X\boldsymbol{\beta} + \boldsymbol{\epsilon}$, with $X$ the $6 \times 3$ matrix above and $\boldsymbol{\beta} = (\mu, \alpha_1, \alpha_2)^T$. The unique OLS estimator for $\boldsymbol{\beta}$ is

$$
\mathbf{b} = (X^TX)^{-1}X^T\mathbf{Y}
=
\begin{bmatrix}
n_\cdot & n_1 - n_3 & n_2 - n_3 \\
n_1 - n_3 & n_1 + n_3 & n_3 \\
n_2 - n_3 & n_3 & n_2 + n_3
\end{bmatrix}^{-1}
\begin{bmatrix} Y_{\cdot\cdot} \\ Y_{1\cdot} - Y_{3\cdot} \\ Y_{2\cdot} - Y_{3\cdot} \end{bmatrix}
= \begin{bmatrix} \hat{\mu} \\ \hat{\alpha}_1 \\ \hat{\alpha}_2 \end{bmatrix}.
$$

Consider the model $Y_{ij} = \mu + \alpha_i + \epsilon_{ij}$ with the restriction $\alpha_1 = 0$. Then

$$
\begin{aligned}
E(Y_{1j}) &= \mu, & j &= 1, \dots, n_1,\\
E(Y_{2j}) &= \mu + \alpha_2, & j &= 1, \dots, n_2,\\
E(Y_{3j}) &= \mu + \alpha_3, & j &= 1, \dots, n_3,
\end{aligned}
$$

and

$$
\begin{bmatrix} Y_{11} \\ Y_{12} \\ Y_{21} \\ Y_{31} \\ Y_{32} \\ Y_{33} \end{bmatrix}
=
\begin{bmatrix}
1 & 0 & 0 \\
1 & 0 & 0 \\
1 & 1 & 0 \\
1 & 0 & 1 \\
1 & 0 & 1 \\
1 & 0 & 1
\end{bmatrix}
\begin{bmatrix} \mu \\ \alpha_2 \\ \alpha_3 \end{bmatrix}
+
\begin{bmatrix} \epsilon_{11} \\ \epsilon_{12} \\ \epsilon_{21} \\ \epsilon_{31} \\ \epsilon_{32} \\ \epsilon_{33} \end{bmatrix}.
$$

This model is $\mathbf{Y} = X\boldsymbol{\beta} + \boldsymbol{\epsilon}$, with $X$ the $6 \times 3$ matrix above and $\boldsymbol{\beta} = (\mu, \alpha_2, \alpha_3)^T$. The unique OLS estimator for $\boldsymbol{\beta} = (\mu, \alpha_2, \alpha_3)^T$ is

$$
\mathbf{b} = (X^TX)^{-1}X^T\mathbf{Y}
=
\begin{bmatrix}
n_\cdot & n_2 & n_3 \\
n_2 & n_2 & 0 \\
n_3 & 0 & n_3
\end{bmatrix}^{-1}
\begin{bmatrix} Y_{\cdot\cdot} \\ Y_{2\cdot} \\ Y_{3\cdot} \end{bmatrix}
= \begin{bmatrix} \bar{Y}_{1\cdot} \\ \bar{Y}_{2\cdot} - \bar{Y}_{1\cdot} \\ \bar{Y}_{3\cdot} - \bar{Y}_{1\cdot} \end{bmatrix}
= \begin{bmatrix} \hat{\mu} \\ \hat{\alpha}_2 \\ \hat{\alpha}_3 \end{bmatrix}.
$$

The restrictions (i.e., the choice of one particular solution to the normal equations) have no effect on the OLS estimates of estimable quantities. Under the restriction $\alpha_1 = 0$, for example, the estimated treatment means are

$$
\begin{aligned}
\widehat{E}(Y_{1j}) &= \hat{\mu} = \bar{Y}_{1\cdot} = 61,\\
\widehat{E}(Y_{2j}) &= \hat{\mu} + \hat{\alpha}_2 = \bar{Y}_{2\cdot} = 71,\\
\widehat{E}(Y_{3j}) &= \hat{\mu} + \hat{\alpha}_3 = \bar{Y}_{3\cdot} = 69.
\end{aligned}
$$
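The invariance of estimable quantities can be checked numerically. The Python sketch below is illustrative only: the helpers `solve` and `fit` are hypothetical, and the only inputs taken from Example 3.2 are the group sizes $n_1 = 2$, $n_2 = 1$, $n_3 = 3$ and the treatment means 61, 71, 69. Individual observations are not needed, because every observation on treatment $i$ shares the same row $\mathbf{x}_i$ of $X$, so $X^TX = \sum_i n_i \mathbf{x}_i\mathbf{x}_i^T$ and $X^T\mathbf{Y} = \sum_i n_i \bar{Y}_{i\cdot}\mathbf{x}_i$. Each full-rank parameterization yields different parameter estimates but the same fitted treatment means.

```python
from fractions import Fraction

def solve(A, rhs):
    """Solve A x = rhs exactly by Gauss-Jordan elimination (A must be nonsingular)."""
    n = len(A)
    M = [[Fraction(v) for v in row] + [Fraction(r_i)] for row, r_i in zip(A, rhs)]
    for c in range(n):
        p = next(r for r in range(c, n) if M[r][c] != 0)  # first usable pivot row
        M[c], M[p] = M[p], M[c]
        pivot = M[c][c]
        M[c] = [v / pivot for v in M[c]]
        for r in range(n):
            factor = M[r][c]
            if r != c and factor != 0:
                M[r] = [a - factor * b for a, b in zip(M[r], M[c])]
    return [row[-1] for row in M]

# Example 3.2: group sizes n1, n2, n3 and treatment means Ybar_1., Ybar_2., Ybar_3.
sizes = (2, 1, 3)
means = (61, 71, 69)

def fit(rows):
    """OLS when every observation on treatment i has model-matrix row rows[i].

    Uses X^T X = sum_i n_i x_i x_i^T and X^T Y = sum_i n_i Ybar_i. x_i.
    Returns (parameter estimates, fitted treatment means).
    """
    k = len(rows[0])
    xtx = [[sum(n * x[r] * x[c] for n, x in zip(sizes, rows)) for c in range(k)]
           for r in range(k)]
    xty = [sum(n * m * x[r] for n, m, x in zip(sizes, means, rows)) for r in range(k)]
    b = solve(xtx, xty)
    fitted = [sum(xi * bi for xi, bi in zip(x, b)) for x in rows]
    return b, fitted

# One row per treatment for each full-rank parameterization.
designs = {
    "alpha3 = 0": [(1, 1, 0), (1, 0, 1), (1, 0, 0)],     # b = (mu, alpha1, alpha2)
    "sum to zero": [(1, 1, 0), (1, 0, 1), (1, -1, -1)],  # b = (mu, alpha1, alpha2)
    "alpha1 = 0": [(1, 0, 0), (1, 1, 0), (1, 0, 1)],     # b = (mu, alpha2, alpha3)
    "Helmert": [(1, -1, -1), (1, 1, -1), (1, 0, 2)],     # b = (gamma0, gamma1, gamma2)
}

for name, rows in designs.items():
    b, fitted = fit(rows)
    print(f"{name:12s} b = {[str(v) for v in b]}  fitted means = {[str(v) for v in fitted]}")
# Expected: b = (69, -8, 2), (67, -6, 4), (61, 10, 8), (67, 5, 1);
# fitted means (61, 71, 69) in every case.
```

The orthogonal polynomial parameterization is left out only because its $\sqrt{2}$ and $\sqrt{6}$ entries are not rational; exact `Fraction` arithmetic keeps the comparison of fitted means free of rounding error.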