North Carolina Testing Program Standard Setting for the

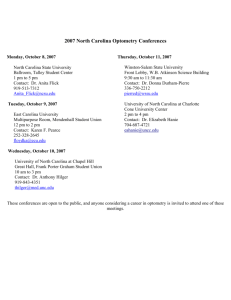
