Advanced Topics in Survey Sampling

Jae-Kwang Kim

Wayne A Fuller

Pushpal Mukhopadhyay

Department of Statistics

Iowa State University

World Statistics Congress Short Course

July 23-24, 2015

Kim & Fuller & Mukhopadhyay (ISU & SAS)

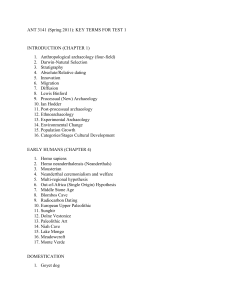

Outline

1. Probability sampling from a finite population

2. Use of auxiliary information in estimation

3. Use of auxiliary information in design

4. Replication variance estimation

5. Models used in conjunction with sampling

6. Analytic studies

Chapter 1

Probability sampling from a finite universe

Probability Sampling

U = {1, 2, · · · , N} : list for finite population, sampling frame

F = {y1 , y2 , · · · , yN } : finite population/finite universe

A (⊂ U) : index set of sample

$\mathcal{A}$ : set of possible samples

Sampling Design

Definition

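$p(\cdot)$ is a sampling design

$\Leftrightarrow$ $p(a)$ is a function from $\mathcal{A}$ to $[0, 1]$ such that

1. $p(a) \in [0, 1]$, $\forall a \in \mathcal{A}$,

2. $\sum_{a \in \mathcal{A}} p(a) = 1$,

i.e. $p(a)$ is a probability mass function defined on $\mathcal{A}$.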

Notation for Sampling

$I_i = 1$ if $i \in A$, and $I_i = 0$ otherwise

$d = (I_1, I_2, \cdots, I_N)$

$n = \sum_{i=1}^{N} I_i$ : (realized) sample size

$\pi_i = E[I_i]$ : first order inclusion probability

$\pi_{ij} = E[I_i I_j]$ : second order inclusion probability

Design-estimator Characteristics

Definition

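$\hat\theta = \hat\theta(y_i ; i \in A)$ is design unbiased for $\theta_N = \theta(y_1, y_2, \cdots, y_N)$

$\Leftrightarrow$ $E\{\hat\theta \mid F\} = \theta_N$, $\forall (y_1, y_2, \cdots, y_N)$, where $E\{\hat\theta \mid F\} = \sum_{a \in \mathcal{A}} \hat\theta(a)\, p(a)$

Definition

$\hat\theta$ is a design linear estimator $\Leftrightarrow$ $\hat\theta = \sum_{i \in A} w_i y_i$, where the $w_i$ are fixed with respect to the sampling design

Note: If $w_i = \pi_i^{-1}$, $\hat\theta = \sum_{i \in A} w_i y_i$ is the Horvitz-Thompson estimator.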

Theorem 1.2.1

Theorem

If $\hat\theta = \sum_{i \in A} w_i y_i$ is a design linear estimator, then

$E\{\hat\theta \mid F\} = \sum_{i=1}^{N} w_i \pi_i y_i$

$V\{\hat\theta \mid F\} = \sum_{i=1}^{N} \sum_{j=1}^{N} (\pi_{ij} - \pi_i \pi_j)\, w_i y_i w_j y_j$.

Proof of Theorem 1.2.1

Because $E\{I_i\} = \pi_i$ and because $w_i y_i$, $i = 1, 2, ..., N$, are fixed,

$E\{\hat\theta \mid F\} = \sum_{i=1}^{N} E\{I_i \mid F\}\, w_i y_i = \sum_{i=1}^{N} \pi_i w_i y_i$

Using $E(I_i I_k) = \pi_{ik}$ and $Cov(I_i, I_k) = E(I_i I_k) - E(I_i) E(I_k)$,

$V\{\hat\theta \mid F\} = V\{\sum_{i=1}^{N} I_i w_i y_i \mid F\} = \sum_{i=1}^{N} \sum_{k=1}^{N} Cov(I_i, I_k)\, w_i y_i w_k y_k$

$= \sum_{i=1}^{N} \sum_{k=1}^{N} (\pi_{ik} - \pi_i \pi_k)\, w_i y_i w_k y_k$
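The two formulas of Theorem 1.2.1 can be checked by brute force on a tiny population. The sketch below is illustrative only: the population values, the SRS design with N = 5 and n = 2, and all function names are assumptions chosen for the example. It enumerates every sample, computes the design mean and variance directly, and compares them with the theorem's expressions using exact fractions.

```python
from fractions import Fraction
from itertools import combinations


def srs_design(N, n):
    """All samples of size n from {0, ..., N-1}, each with probability 1/C(N, n)."""
    samples = list(combinations(range(N), n))
    p = Fraction(1, len(samples))
    return [(a, p) for a in samples]


def inclusion_probs(design, N):
    """Matrix with pi[i][j] = pi_ij for i != j and pi[i][i] = pi_i."""
    pi = [[Fraction(0)] * N for _ in range(N)]
    for a, p in design:
        for i in a:
            for j in a:
                pi[i][j] += p
    return pi


def design_moments(design, w, y):
    """Mean and variance of sum_{i in A} w_i y_i, computed by enumerating samples."""
    est = [(sum(w[i] * y[i] for i in a), p) for a, p in design]
    mean = sum(t * p for t, p in est)
    var = sum((t - mean) ** 2 * p for t, p in est)
    return mean, var


def theorem_moments(pi, w, y):
    """Mean and variance given by the formulas of Theorem 1.2.1."""
    N = len(y)
    mean = sum(w[i] * pi[i][i] * y[i] for i in range(N))
    var = sum((pi[i][j] - pi[i][i] * pi[j][j]) * w[i] * y[i] * w[j] * y[j]
              for i in range(N) for j in range(N))
    return mean, var


y = [Fraction(v) for v in (3, 1, 4, 1, 5)]   # hypothetical population values
design = srs_design(5, 2)
pi = inclusion_probs(design, 5)
w = [1 / pi[i][i] for i in range(5)]          # Horvitz-Thompson weights
direct = design_moments(design, w, y)
formula = theorem_moments(pi, w, y)
```

With the Horvitz-Thompson weights the design mean equals the population total, which is the unbiasedness statement of the corollary that follows.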

Horvitz-Thompson Estimator of Total

Corollary

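Let $\pi_i > 0$ $\forall i \in U$. Then, $\hat T_y = \sum_{i \in A} \pi_i^{-1} y_i$ satisfies

(i) $E(\hat T_y \mid F) = T_y$

(ii) $V(\hat T_y \mid F) = \sum_{i=1}^{N} \sum_{j=1}^{N} (\pi_{ij} - \pi_i \pi_j) \frac{y_i}{\pi_i} \frac{y_j}{\pi_j}$

Proof of (ii): Substitute $\pi_i^{-1}$ for $w_i$ of Theorem 1.2.1.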

Unbiased Variance Estimation

Theorem

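Let $\pi_{ij} > 0$, $\forall i, j \in U$ and let $\hat\theta = \sum_{i \in A} w_i y_i$ be design linear. Then,

$\hat V = \sum_{i \in A} \sum_{j \in A} \frac{1}{\pi_{ij}} (\pi_{ij} - \pi_i \pi_j)\, w_i y_i w_j y_j$ satisfies

$E(\hat V \mid F) = V(\hat\theta \mid F)$

Proof: Let $g(y_i, y_j) = (\pi_{ij} - \pi_i \pi_j) w_i y_i w_j y_j$. By Theorem 1.2.1,

$E\{\sum_{i, j \in A} \pi_{ij}^{-1} g(y_i, y_j) \mid F\} = \sum_{i=1}^{N} \sum_{j=1}^{N} g(y_i, y_j)$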

Simple Random Sampling (SRS)

Choose n units from N units without replacement with equal probability.

1. Each subset of n distinct units is equally likely to be selected.

2. There are $\binom{N}{n}$ samples of size n from N.

3. Give equal probability of selection to each subset with n units.

Definition

Sampling design for SRS:

$P(A) = 1 / \binom{N}{n}$ if $|A| = n$, and $P(A) = 0$ otherwise.

Lemma

Under SRS, the inclusion probabilities are

$\pi_i = \binom{N}{n}^{-1} \binom{1}{1} \binom{N-1}{n-1} = \frac{n}{N}$

$\pi_{ij} = \binom{N}{n}^{-1} \binom{1}{1} \binom{1}{1} \binom{N-2}{n-2} = \frac{n(n-1)}{N(N-1)}$ for $i \neq j$.
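The counting argument in the lemma can be confirmed by enumeration. This is a minimal sketch under assumed values (N = 6, n = 3); the function name is hypothetical. It counts the share of equally likely samples that contain unit 0, and the share that contain both units 0 and 1.

```python
from fractions import Fraction
from itertools import combinations
from math import comb


def srs_inclusion(N, n):
    """First and second order inclusion probabilities under SRS, by enumeration."""
    samples = list(combinations(range(N), n))
    p = Fraction(1, comb(N, n))                  # each sample is equally likely
    pi_1 = sum(p for a in samples if 0 in a)     # Pr(unit 0 in sample)
    pi_12 = sum(p for a in samples if 0 in a and 1 in a)  # Pr(units 0 and 1 in sample)
    return pi_1, pi_12


pi_1, pi_12 = srs_inclusion(6, 3)
```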

Simple Random Samples

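Let $\bar y_n = n^{-1} \sum_{i \in A} y_i$, $\hat V = n^{-1}(1 - N^{-1} n)\, s_n^2$,

$s_n^2 = (n - 1)^{-1} \sum_{i \in A} (y_i - \bar y_n)^2$, $\bar Y_N = N^{-1} \sum_{i=1}^{N} y_i$,

and $S_N^2 = (N - 1)^{-1} \sum_{i=1}^{N} (y_i - \bar Y_N)^2$. Then,

(i) $E(\bar y_n \mid F) = \bar Y_N$

(ii) $V(\bar y_n \mid F) = n^{-1}(1 - N^{-1} n)\, S_N^2$

(iii) $E(\hat V \mid F) = V(\bar y_n \mid F)$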

Poisson Sampling

Definition:

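$I_i \sim$ independent Bernoulli$(\pi_i)$, $i = 1, 2, \cdots, N$.

Estimation (of $T_y = \sum_{i=1}^{N} y_i$)

$\hat T_y = \sum_{i=1}^{N} I_i y_i / \pi_i$, $E\{\hat T_y \mid F\} = \sum_{i=1}^{N} \pi_i y_i / \pi_i$

Variance

$Var(\hat T_y \mid F) = \sum_{i=1}^{N} (\pi_i - \pi_i^2)\, y_i^2 / \pi_i^2 = \sum_{i=1}^{N} \left( \frac{1}{\pi_i} - 1 \right) y_i^2$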

Poisson Sampling

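Optimal design: minimize $Var(\hat T_y \mid F)$ subject to $\sum_{i=1}^{N} \pi_i = n$

$\min \sum_{i=1}^{N} \pi_i^{-1} y_i^2 + \lambda \left( \sum_{i=1}^{N} \pi_i - n \right)$

$\Rightarrow \pi_i^{-2} y_i^2 = \lambda$

$\Rightarrow \pi_i \propto y_i$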
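A small numerical comparison illustrates the optimality result. The sketch below assumes a hypothetical population of positive values and an expected sample size n = 2, and it assumes every resulting probability is at most 1 (which holds for these numbers). It evaluates the Poisson-sampling variance under equal probabilities and under probabilities proportional to $y_i$.

```python
def poisson_ht_variance(y, pi):
    """Var(T_hat | F) = sum (1/pi_i - 1) y_i^2 under Poisson sampling."""
    return sum((1.0 / p - 1.0) * v * v for v, p in zip(y, pi))


def proportional_probs(y, n):
    """pi_i proportional to |y_i|, scaled so the probabilities sum to n.

    Assumes n * max|y_i| <= sum|y_i| so that every pi_i <= 1.
    """
    total = sum(abs(v) for v in y)
    return [n * abs(v) / total for v in y]


y = [2.0, 4.0, 6.0, 8.0]          # hypothetical population values
n = 2                             # expected sample size
pi_equal = [n / len(y)] * len(y)
pi_prop = proportional_probs(y, n)
v_equal = poisson_ht_variance(y, pi_equal)
v_prop = poisson_ht_variance(y, pi_prop)
```

In practice $y_i$ is unknown at the design stage, so a size measure believed to be roughly proportional to $y_i$ would take its place.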

A Design Result

Definition

Superpopulation model (ξ) : the model for y = (y1 , y2 , . . . , yN ).

Definition

Anticipated Variance : the expected value of the design variance of the

planned estimator calculated under the superpopulation model.

Theorem (1.2.3)

Let $y = \{y_1, y_2, \cdots, y_N\}$ be iid $(\mu, \sigma^2)$, let $d = (I_1, I_2, \cdots, I_N)$ be independent of $y$, and define $\hat T_y = \sum_{i \in A} \pi_i^{-1} y_i$. Then $V\{\hat T_y - T_y\}$ is minimized at $\pi_i = n/N$ and $\pi_{ij} = n(n-1)/[N(N-1)]$, $i \neq j$.

Proof of Theorem 1.2.3

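$V\{\hat T_y - T_y\} = V\{E(\hat T_y - T_y \mid F)\} + E\{V(\hat T_y - T_y \mid F)\}$

$= E\left\{ \sum_{i=1}^{N} \sum_{j=1}^{N} (\pi_{ij} - \pi_i \pi_j) \frac{y_i}{\pi_i} \frac{y_j}{\pi_j} \right\}$

$= \mu^2 \sum_{i=1}^{N} \sum_{j=1}^{N} (\pi_{ij} - \pi_i \pi_j) \frac{1}{\pi_i} \frac{1}{\pi_j} + \sigma^2 \sum_{i=1}^{N} (\pi_i - \pi_i^2) \frac{1}{\pi_i^2}$

(i) $\min \sum_{i=1}^{N} \pi_i^{-1}$ s.t. $\sum_{i=1}^{N} \pi_i = n$ $\Rightarrow$ $\pi_i = N^{-1} n$

(ii) $\sum_{i=1}^{N} \sum_{j=1}^{N} (\pi_{ij} - \pi_i \pi_j)\, \pi_i^{-1} \pi_j^{-1} = V\{\sum_{i=1}^{N} I_i \pi_i^{-1}\} \geq 0$

$V\{\sum_{i=1}^{N} I_i \pi_i^{-1}\} = 0$ for $\pi_i = N^{-1} n$ and $\pi_{ij} = \frac{n(n-1)}{N(N-1)}$.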

Discussion of Theorem 1.2.3

(i) Finding πi and πij that minimize V {θ̂ | F} is not possible

because V {θ̂ | F} is a function of N unknown values

Godambe (1955), Godambe & Joshi (1965), Basu (1971)

(ii) If y1 , y2 , · · · , yN ∼ ξ for some model ξ (superpopulation model), then

we sometimes can find an optimal strategy (design and estimator).

Under iid & HT estimation, the optimal design is SRS.

Example: Stratified Sampling

Definition

1. The finite population is stratified into H subpopulations (strata).

   $U = U_1 \cup \cdots \cup U_H$

2. Samples are drawn independently in the strata.

   $\Pr(i \in A_h, j \in A_g) = \Pr(i \in A_h) \Pr(j \in A_g)$, for $h \neq g$

   where $A_h$ is the index set of the sample in stratum h, $h = 1, 2, \cdots, H$.

Example: Stratified SRS

1. Stratify the population. Let $N_h$ be the population size of $U_h$.

2. Sample size allocation: Determine $n_h$.

3. Perform SRS independently (select $n_h$ sample elements from $N_h$) in each stratum.

Estimation under Stratified SRS

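1. HT estimator:

   $\hat T_y = \sum_{h=1}^{H} N_h \bar y_h$

   where $\bar y_h = n_h^{-1} \sum_{i \in A_h} y_{hi}$.

2. Variance

   $Var(\hat T_y) = \sum_{h=1}^{H} \frac{N_h^2}{n_h} \left( 1 - \frac{n_h}{N_h} \right) S_h^2$

   where $S_h^2 = (N_h - 1)^{-1} \sum_{i=1}^{N_h} (y_{hi} - \bar Y_h)^2$.

3. Variance estimation

   $\hat V(\hat T_y) = \sum_{h=1}^{H} \frac{N_h^2}{n_h} \left( 1 - \frac{n_h}{N_h} \right) s_h^2$

   where $s_h^2 = (n_h - 1)^{-1} \sum_{i \in A_h} (y_{hi} - \bar y_h)^2$.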
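The estimator and its variance estimator translate directly into a few lines of code. The sketch below is an illustration under assumed inputs: the two strata, their sizes, and the sample values are made up, and the function name is hypothetical. Each stratum needs at least two sampled elements so that $s_h^2$ is defined.

```python
def stratified_total(strata):
    """HT total and variance estimate under stratified SRS.

    strata: list of (N_h, sample values in stratum h), with n_h >= 2.
    """
    t_hat = 0.0
    v_hat = 0.0
    for N_h, ys in strata:
        n_h = len(ys)
        ybar_h = sum(ys) / n_h
        s2_h = sum((v - ybar_h) ** 2 for v in ys) / (n_h - 1)
        t_hat += N_h * ybar_h
        v_hat += N_h ** 2 / n_h * (1 - n_h / N_h) * s2_h
    return t_hat, v_hat


# Hypothetical data: stratum sizes 10 and 20, samples of size 3 and 2.
strata = [(10, [1.0, 2.0, 3.0]), (20, [4.0, 6.0])]
t_hat, v_hat = stratified_total(strata)
```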

Optimal Strategy under Stratified Sampling

Theorem (1.2.6)

Let F be a stratified finite population in which the elements in stratum h

are realizations of iid(µh , σh2 ) random variables. Let C be the total cost for

sample observation and assume that it costs ch to observe an element in

stratum h. Then a sampling and estimation strategy for Ty that minimizes

the anticipated variance in the class of linear unbiased estimators and probability designs is: Select independent simple random samples in each stratum, selecting $n_h^*$ in stratum h, where

$n_h^* \propto \frac{N_h \sigma_h}{\sqrt{c_h}}$

with $C = \sum_h n_h^* c_h$, subject to $n_h^* \leq N_h$, and use the HT estimator.

Comments on Theorem 1.2.6

Anticipated variance

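$AV\{\hat\theta - \theta_N\} = E\{E[(\hat\theta - \theta_N)^2 \mid F]\} - [E\{E(\hat\theta - \theta_N \mid F)\}]^2$

For HT estimation, $E(\hat\theta - \theta_N \mid F) = 0$ and the anticipated variance becomes

$AV\{\hat T_y - T_y\} = \sum_{h=1}^{H} N_h^2 \left( n_h^{-1} - N_h^{-1} \right) \sigma_h^2$

Minimizing $\sum_{h=1}^{H} n_h^{-1} N_h^2 \sigma_h^2$ subject to $\sum_{h=1}^{H} n_h c_h = C$ leads to the optimal allocation

$n_h^* \propto \frac{N_h \sigma_h}{\sqrt{c_h}}$.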
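The allocation rule can be tried on numbers. The following sketch assumes two hypothetical strata with made-up sizes, standard deviations, unit costs, and budget; the function names are illustrative. It returns real-valued $n_h$ and does not handle rounding or the cap $n_h \leq N_h$. It also compares the anticipated variance with that of another allocation that spends the same budget.

```python
from math import sqrt


def optimal_allocation(N, sigma, cost, budget):
    """n_h proportional to N_h sigma_h / sqrt(c_h), scaled so sum n_h c_h = budget."""
    k = [N_h * s_h / sqrt(c_h) for N_h, s_h, c_h in zip(N, sigma, cost)]
    scale = budget / sum(k_h * c_h for k_h, c_h in zip(k, cost))
    return [scale * k_h for k_h in k]


def anticipated_variance(N, sigma, n):
    """AV = sum N_h^2 (1/n_h - 1/N_h) sigma_h^2 for the HT estimator."""
    return sum(N_h ** 2 * (1 / n_h - 1 / N_h) * s_h ** 2
               for N_h, s_h, n_h in zip(N, sigma, n))


# Hypothetical strata: sizes, standard deviations, unit costs, total budget.
N = [1000, 2000]
sigma = [4.0, 1.0]
cost = [1.0, 4.0]
budget = 600.0

n_opt = optimal_allocation(N, sigma, cost, budget)
n_alt = [200.0, 100.0]            # another allocation with the same total cost
av_opt = anticipated_variance(N, sigma, n_opt)
av_alt = anticipated_variance(N, sigma, n_alt)
```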

Sample Selection Using SAS®

PROC SURVEYSELECT

PROC SURVEYSELECT

Probability-based random sampling

equal probability selection

PPS selection

Stratification and clustering

Sample size allocation

Sampling weights

inclusion probabilities

joint inclusion probabilities

Sampling Methods

Simple random with and without replacement

Systematic

Sequential

PPS

PPS Sampling Methods

With and without replacement

Systematic

Sequential with minimum replacement

Two units per stratum: Brewer’s, Murthy’s

Sampford’s method

Digitech Cable Company

Digital TV, high-speed Internet, digital phone

13,471 customers in four states: AL, FL, GA, and SC

Customer satisfaction survey (high-speed Internet

service)

Sampling with Stratification

Can afford to call only 300 customers

The sampling frame contains the list of customer

identifications, addresses, and types

Need adequate sampling units in every stratum (state

and type)

Select simple random sample without replacement

within strata

Sampling Frame

CustomerID   State   Type
416874322    AL      Platinum
288139763    GA      Gold
339008654    GA      Gold
118980542    GA      Platinum
421670342    FL      Platinum
623189201    SC      Platinum
324550324    FL      Gold
832902397    AL      Gold

Sort Sampling Frame by Strata before Selection

proc sort data=Customers;
   by State Type;
run;

Select Stratified Sample

proc surveyselect data=Customers method=srs n=300
                  seed=3232445 out=SampleStrata;
   strata State Type / alloc=prop;
run;

The SURVEYSELECT Procedure

Selection Method       Simple Random Sampling
Strata Variables       State Type
Allocation             Proportional

Input Data Set         CUSTOMERS
Random Number Seed     3232445
Number of Strata       8
Total Sample Size      300
Output Data Set        SAMPLESTRATA

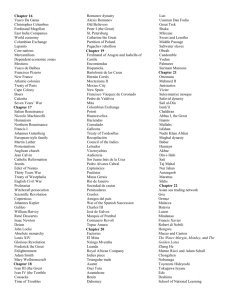

Strata Sizes

State   Type       SampleSize   PopSize
AL      Gold               16       706
AL      Platinum           28      1238
FL      Gold               31      1370
FL      Platinum           48      2170
GA      Gold               43      1940
GA      Platinum           78      3488
SC      Gold               19       875
SC      Platinum           37      1684

Data Collection

Important practical considerations that the computer

cannot decide for you:

Telephone interview

Rating, age, household size, ...

Auxiliary variables: data usage, average annual

income, home ownership rate, ...

Callbacks, edits, and imputations

Survey Objective: Digitech Cable

Rate customer satisfaction

Are customers willing to recommend Digitech?

Is satisfaction related to

household size?

race?

Is usage time related to data usage?

Digitech Cable Data Collection

Survey variables: Rating, Recommend, Usage time, Household size, Race

Auxiliary variables: Data usage, Neighborhood income, Competitors

Large Sample Results for Survey Samples

Complex designs : Weights

Few distributional assumptions

Heavy reliance on large sample theory

Central Limit Theorem

Review of Large Sample Results

Mann and Wald notation for order in probability

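Sequence: $X_1, X_2, \cdots, X_n, \cdots$ ($g_n > 0$)

$X_n = o_p(g_n)$ $\Leftrightarrow$ $X_n / g_n \to 0$ in probability

$\Leftrightarrow$ $\lim_{n \to \infty} P[|X_n / g_n| > \epsilon] = 0$, $\forall \epsilon > 0$

$X_n = O_p(g_n)$ $\Leftrightarrow$ $X_n / g_n$ bounded in probability

$\Leftrightarrow$ $P[|X_n / g_n| > M_\epsilon] < \epsilon$ for some $M_\epsilon$, $\forall \epsilon > 0$, $\forall n$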

Examples of Order in Probability

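Let $\bar x_n \sim N(0, n^{-1})$. Then the following statements hold

$P\{|\bar x_n| > 2 n^{-1/2}\} < 0.05$ $\forall n$

$P\{|\bar x_n| > \Phi^{-1}(1 - 0.5\epsilon)\, n^{-1/2}\} \leq \epsilon$ $\forall n$

therefore $\bar x_n = O_p(n^{-1/2})$.

If $\bar x_n \sim N(0, n^{-1} \sigma^2)$, then $\bar x_n = O_p(?)$

If $\bar x_n \sim N(\mu, n^{-1} \sigma^2)$, then $\bar x_n = O_p(?)$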

Example of op

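Again, let $\bar x_n \sim N(0, n^{-1})$.

$\lim_{n \to \infty} P\{|\bar x_n| > k\} = 0$ $\forall k > 0$ $\Rightarrow$ $\bar x_n = o_p(1)$

$\lim_{n \to \infty} P\{|n^{1/4} \bar x_n| > k\} = 0$ $\forall k > 0$ $\Rightarrow$ $\bar x_n = o_p(n^{-1/4})$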

Properties of Order in Probability

$f_n > 0$, $g_n > 0$

If $X_n = O_p(f_n)$ and $Y_n = O_p(g_n)$, then

$X_n Y_n = O_p(f_n g_n)$

$|X_n|^s = O_p(f_n^s)$, $s \geq 0$

$X_n + Y_n = O_p(\max\{f_n, g_n\})$.

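Chebyshev's Inequality

For given $r > 0$ with $E\{|X|^r\} < \infty$,

$P[|X - A| \geq \epsilon] \leq \frac{E\{|X - A|^r\}}{\epsilon^r}$.

Corollary

$E\{X_n^2\} = O(a_n^2)$ $\Rightarrow$ $X_n = O_p(a_n)$

By Chebyshev's inequality,

$P\left[ \frac{|X_n|}{a_n} > M_\epsilon \right] \leq \frac{E\{X_n^2\}}{a_n^2 M_\epsilon^2} \leq \epsilon$

if we choose $M_\epsilon = \sqrt{K / \epsilon}$, where $K$ is the upper bound of $a_n^{-2} E\{X_n^2\}$.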

Central Limit Theorems

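Lindeberg: $X_1, X_2, \cdots$ : independent $(\mu_i, \sigma_i^2)$, $B_n^2 = \sum_{i=1}^{n} \sigma_i^2$

$\frac{1}{B_n^2} \sum_{i=1}^{n} E\{(X_i - \mu_i)^2\, I(|X_i - \mu_i| > \epsilon B_n)\} \to 0$, $\forall \epsilon > 0$

$\Rightarrow$ $\frac{\sum_{i=1}^{n} (X_i - \mu_i)}{B_n} \xrightarrow{L} N(0, 1)$

Liapounov: $X_1, X_2, \cdots$ : independent $(\mu_i, \sigma_i^2)$, $B_n^2 = \sum_{i=1}^{n} \sigma_i^2$

$\sum_{i=1}^{n} E|X_i - \mu_i|^{2+\delta} = o(B_n^{2+\delta})$, for some $\delta > 0$

$\Rightarrow$ $\frac{\sum_{i=1}^{n} (X_i - \mu_i)}{B_n} \xrightarrow{L} N(0, 1)$

Note: Liapounov condition $\Rightarrow$ Lindeberg condition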

Slutsky’s Theorem

$\{X_n\}$, $\{Y_n\}$ are sequences of random variables satisfying

$X_n \xrightarrow{L} X$, $\mathrm{plim}\, Y_n = c$

$\Rightarrow$ $X_n + Y_n \xrightarrow{L} X + c$

$\Rightarrow$ $Y_n X_n \xrightarrow{L} cX$

Theorem 1.3.1: Samples of Samples

Theorem

$y_1, \cdots, y_N$ are iid with d.f. $F(y)$ and c.f. $\varphi(t) = E\{e^{itY}\}$, $i = \sqrt{-1}$

$d = (I_1, \cdots, I_N)$ : random vector independent of $y$

$\Rightarrow$ $(y_k ; k \in A) \mid d$ are iid with c.f. $\varphi(t)$

Proof: In book. A SRS of a SRS is a SRS.

Application of Theorem 1.3.1

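$y_1, \cdots, y_N \sim$ iid $N(\mu_y, \sigma_y^2)$

SRS of size $n_N$ from $F_N$

$\Rightarrow$ $(y_k, k \in A) \mid d$ are iid $N(\mu_y, \sigma_y^2)$ and $(y_k, k \in U \cap A^c) \mid d$ are iid $N(\mu_y, \sigma_y^2)$

$\Rightarrow$ $\bar y_n \sim N(\mu_y, \sigma_y^2 / n_N)$ and $\bar y_{N-n} \sim N(\mu_y, \sigma_y^2 / (N - n_N))$ indep of $\bar y_n$

$\Rightarrow$ $\bar y_n - \bar Y_N \sim N\left( 0, n_N^{-1}(1 - f_N)\, \sigma_y^2 \right)$ and

$\left[ n_N^{-1}(1 - f_N)\, s_n^2 \right]^{-1/2} (\bar y_n - \bar Y_N) \sim t_{n-1}$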

Finite Population Sampling

Motivation

Is $\bar x_n - \bar x_N = o_p(1)$?

Does $\frac{\bar x_n - \bar x_N}{\sqrt{\hat V\{\bar x_n - \bar x_N \mid F_N\}}} \xrightarrow{L} N(0, 1)$?

We'll be able to answer these shortly.

$n \to N$ isn't very interesting

Need $n \to \infty$ and $N - n \to \infty$

Need a sequence of samples from a sequence of finite populations

Sequence of Samples from a Sequence of Populations

Approach 1 {FN } is a sequence of fixed vectors

Approach 2 {y1 , y2 , · · · , yN } is a realization from a superpopulation

model.

Notation

UN = {1, 2, · · · , N} : N-th finite population

FN = {y1N , · · · , yNN }

yiN : observation associated with i-th element in the N-th population

AN : sample index set selected from UN with size nN = |AN |

Design Consistency

Definition

$\hat\theta$ is design consistent for $\theta_N$ if for every $\epsilon > 0$,

$\lim_{N \to \infty} P\{|\hat\theta - \theta_N| > \epsilon \mid F_N\} = 0$

almost surely, where $P(\cdot \mid F_N)$ denotes the probability induced by the sampling mechanism.

Design Consistency for ȳn in SRS

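Approach 1 (fixed sequence)

Assume a sequence of finite populations $\{F_N\}$ s.t.

$\lim_{N \to \infty} N^{-1} \sum_{i=1}^{N} (y_i, y_i^2) = (\theta_1, \theta_2)$, and $\theta_2 - \theta_1^2 > 0$.

By Chebyshev's inequality,

$P\{|\bar y_n - \bar Y_N| \geq \epsilon \mid F_N\} \leq \frac{n_N^{-1}(1 - f_N)\, S_N^2}{\epsilon^2}$

where $f_N = n_N N^{-1}$.

Approach 2: $y_1, \cdots, y_N \sim$ iid $(\mu, \sigma_y^2)$

$\Rightarrow$ $\lim_{N \to \infty} S_N^2 = \sigma_y^2$ a.s.

$\Rightarrow$ $V[\bar y_n - \bar Y_N \mid F_N] = O_p(n_N^{-1})$

$\Rightarrow$ $\bar y_n - \bar Y_N \mid F_N = O_p(n_N^{-1/2})$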

Central Limit Theorem (1.3.2) Part 1

Theorem (Part 1)

$\{y_{1N}, \cdots, y_{NN}\} \sim$ iid $(\mu, \sigma^2)$ with $2 + \delta$ moments ($\delta > 0$)

SRS, $\bar y_n = n^{-1} \sum_{i=1}^{N} I_i y_{iN}$, $\bar Y_N = N^{-1} \sum_{i=1}^{N} y_{iN}$

$\Rightarrow$ $[V(\bar y_n - \bar Y_N)]^{-1/2} (\bar y_n - \bar Y_N) \mid d \xrightarrow{L} N(0, 1)$.

Proof: Write $\bar y_n - \bar Y_N = N^{-1} \sum_{i=1}^{N} c_{iN} y_{iN}$, where $c_{iN} = n^{-1} N I_i - 1$.

$B_n^2 = N^2 V(\bar y_n - \bar Y_N) = N^2 V(\bar y_n - \bar Y_N \mid d) = \sum_{i=1}^{N} c_{iN}^2 \sigma^2 = (N - n) N \sigma^2 / n$

The result follows by applying the Lindeberg CLT.

Theorem 1.3.2 Part 2

Theorem

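(Part 2) Furthermore, if $\{y_{iN}\}$ has bounded fourth moments, then

$[\hat V(\bar y_n - \bar Y_N)]^{-1/2} (\bar y_n - \bar Y_N) \xrightarrow{L} N(0, 1)$.

Proof: Want to apply Slutsky's theorem:

$\frac{\bar y_n - \bar Y_N}{\sqrt{\hat V(\bar y_n - \bar Y_N)}} = \frac{\bar y_n - \bar Y_N}{\sqrt{V(\bar y_n - \bar Y_N)}} \cdot \frac{\sqrt{V(\bar y_n - \bar Y_N)}}{\sqrt{\hat V(\bar y_n - \bar Y_N)}} \xrightarrow{L} N(0, 1)$.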

Proof of 1.3.2 Part 2, Continued

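Then it is enough to show that

$\frac{V(\bar y_n - \bar Y_N)}{\hat V(\bar y_n - \bar Y_N)} = \frac{(n^{-1} - N^{-1})\, \sigma_y^2}{(n^{-1} - N^{-1})\, s_n^2} \xrightarrow{p} 1$.

To show $s_n^2 \xrightarrow{p} \sigma_y^2$, note that

$\frac{s_n^2}{\sigma_y^2} = \frac{1}{\sigma_y^2} \frac{1}{n - 1} \sum_{i=1}^{n} (y_i - \bar y_n)^2$

$= \frac{1}{\sigma_y^2} \frac{1}{n - 1} \sum_{i=1}^{n} (y_i - \mu)^2 - \frac{1}{\sigma_y^2} \frac{n}{n - 1} (\bar y_n - \mu)^2$

$= \frac{1}{\sigma_y^2} \frac{1}{n} \sum_{i=1}^{n} (y_i - \mu)^2 + O_p(n^{-1})$

$\xrightarrow{p} 1$ if $E(y_i - \mu)^4 \leq M_4 < \infty$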

Comment on Theorem 1.3.2

1. The CLT in Theorem 1.3.2 is derived with Approach 2 (using superpopulation model)

2. The result can be extended to stratified random sampling (textbook)

   $\{y_{hiN}\} \sim$ iid $(\mu_h, \sigma_h^2)$

   $\hat\theta_n = \sum_{h=1}^{H_N} N^{-1} N_h \bar y_{hn}$, $\bar Y_N = \sum_{h=1}^{H_N} N^{-1} N_h \bar Y_{hN}$

   $[\hat V(\hat\theta_n - \bar Y_N)]^{-1/2} (\hat\theta_n - \bar Y_N) \xrightarrow{L} N(0, 1)$

Poisson Sampling

Population: y1 , y2 , ..., yN

Probabilities: π1 , π2 , ..., πN

The sampling process is

Bernoulli trial for each i (Independent trials)

Sample is those i for which the trial is a success

N independent random variables

CLT under Approach 1 (Fixed Sequences)

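$y_1, y_2, \cdots$ : sequence of real vectors

$\pi_1, \pi_2, \cdots$ : sequence of selection probabilities

$g_i = (1, y_i, \alpha_N \pi_i^{-1}, \alpha_N \pi_i^{-1} y_i)'$ where $\alpha_N = E(n_N)/N = n_{BN}/N$

$x_i = g_i I_i$, $I_i \sim$ Bernoulli$(\pi_i)$ (i.e. Poisson sampling)

Let $\hat\mu_x = n_{BN}^{-1} \sum_{i=1}^{N} x_i = n_{BN}^{-1} \sum_{i=1}^{N} g_i I_i$ and $\mu_{xN} = n_{BN}^{-1} \sum_{i=1}^{N} g_i \pi_i$

$E(\hat\mu_x \mid F_N) = \mu_{xN}$

$\hat T_{y,HT} = \sum_{i=1}^{N} I_i y_i \pi_i^{-1} = \alpha_N^{-1} \sum_{i=1}^{N} \alpha_N \pi_i^{-1} y_i I_i = \alpha_N^{-1} \sum_{i=1}^{N} x_{i4}$,

with $x_{i4}$ the fourth element of $x_i$.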

i=1

Theorem 1.3.3, Part 1

Theorem (Part 1)

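Assume Poisson sampling

(i) $\lim_{N \to \infty} n_{BN}^{-1} \sum_{i=1}^{N} g_i \pi_i = \mu_x$

(ii) $\lim_{N \to \infty} n_{BN}^{-1} \sum_{i=1}^{N} \pi_i (1 - \pi_i)\, g_i g_i' = \Sigma_{xx} = \begin{pmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{12}' & \Sigma_{22} \end{pmatrix}$, with $\Sigma_{11}$, $\Sigma_{22}$ positive definite

(iii) $\lim_{N \to \infty} \max_{1 \leq k \leq N} \frac{(\gamma' g_k)^2}{\sum_{i=1}^{N} (\gamma' g_i)^2\, \pi_i (1 - \pi_i)} = 0$ $\forall \gamma$ s.t. $\gamma' \Sigma_{xx} \gamma > 0$.

Then,

$\sqrt{n_{BN}}\, (\hat\mu_x - \mu_{xN}) \mid F_N \xrightarrow{L} N(0, \Sigma_{xx})$.

Proof: textbook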

Theorem 1.3.3, Part 2

Theorem (Part 2)

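Under conditions (i)-(iii), if

(iv) $\lim_{N \to \infty} n_{BN}^{-1} \sum_{i=1}^{N} \pi_i |g_i|^4 = M_4$, then

$[\hat V(\hat T_y)]^{-1/2} (\hat T_y - T_y) \mid F_N \to N(0, I)$, where

$\hat V(\hat T_y) = \sum_{i \in A} \frac{\pi_i (1 - \pi_i)}{\pi_i}\, \frac{y_i}{\pi_i}\, \frac{y_i'}{\pi_i}$.

Proof: textbook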

Theorem 1.3.4 CLT for SRS

Theorem

$\{y_i\}$ : sequence of real numbers with bounded 4th moments

SRS without replacement

$\Rightarrow$ $\hat V_n^{-1/2} (\bar y_n - \bar y_N) \mid F_N \xrightarrow{L} N(0, 1)$

The result is obtained by showing that there is a SRS mean that differs from the Poisson mean by $o_p(n^{-1/2})$.

Function of Means (Theorem 1.3.7)

Theorem

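(i) $\sqrt{n}\, (\bar x_n - \mu_x) \xrightarrow{L} N(0, \Sigma_{xx})$

(ii) $g(x)$ : continuous and differentiable at $x = \mu_x$

(iii) $h_x(x) = \frac{\partial g(x)}{\partial x}$ : continuous at $x = \mu_x$

$\Rightarrow$ $\sqrt{n}\, [g(\bar x_n) - g(\mu_x)] \xrightarrow{L} N(0, h_x'(\mu_x)\, \Sigma_{xx}\, h_x(\mu_x))$.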

Proof of Theorem 1.3.7

[Step 1] By a Taylor expansion, $g(\bar x_n) = g(\mu_x) + (\bar x_n - \mu_x)\, h_x'(\mu_x^*)$, where $\mu_x^*$ is on the line segment joining $\bar x_n$ and $\mu_x$.

[Step 2] Show $\mu_x^* - \mu_x = o_p(1)$.

[Step 3] Using the assumption that $h_x(x)$ is continuous at $x = \mu_x$, show that $h_x(\mu_x^*) - h_x(\mu_x) = o_p(1)$.

[Step 4] Because

$\sqrt{n}\, [g(\bar x_n) - g(\mu_x)] = \sqrt{n}\, (\bar x_n - \mu_x)\, h_x'(\mu_x^*)$

$= \sqrt{n}\, (\bar x_n - \mu_x)\, h_x'(\mu_x) + o_p(1)$,

we apply Slutsky's theorem to get the result.

Example on Curvature

$\bar x_n \mathrel{\dot\sim} N(\mu, V(\bar x_n))$

Approximation: $\bar x_n^2 \mathrel{\dot\sim} N(\mu^2, (2\mu)^2 V(\bar x_n))$

Let $\mu = 2$ and $V(\bar x_n) = 0.01$. Then

$E\{\bar x_n^2\} = 2^2 + 0.01 \doteq \mu^2$. $V\{\bar x_n^2\} = 2(0.01)^2 + 4(2^2)(0.01) \doteq 4\mu^2 V(\bar x_n)$.

Let $\mu = 2$ and $V(\bar x_n) = 3$. Then

$E\{\bar x_n^2\} = 4 + 3 \neq \mu^2$. $V\{\bar x_n^2\} = 2(3)^2 + 4(2^2)(3) \neq 4\mu^2 V(\bar x_n)$.
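The two cases can be tabulated side by side. The sketch below assumes that $\bar x_n$ is exactly normal, uses the standard normal-theory moments of a squared normal variable, and compares them with the first-order (delta method) approximation. The function names are illustrative.

```python
def square_moments_exact(mu, v):
    """Exact mean and variance of X^2 when X ~ N(mu, v)."""
    return mu ** 2 + v, 2 * v ** 2 + 4 * mu ** 2 * v


def square_moments_delta(mu, v):
    """First-order Taylor (delta method) approximation for X^2."""
    return mu ** 2, (2 * mu) ** 2 * v


small = (square_moments_exact(2, 0.01), square_moments_delta(2, 0.01))
large = (square_moments_exact(2, 3.0), square_moments_delta(2, 3.0))

# Relative error of the approximate variance in each case.
rel_err_small = abs(small[0][1] - small[1][1]) / small[0][1]
rel_err_large = abs(large[0][1] - large[1][1]) / large[0][1]
```

The approximation is close when the standard error is small relative to the curvature of the function, and poor otherwise.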

Large Sample Bias

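$n^{1/2} (\hat\theta - \theta) \xrightarrow{L} N(0, 1)$ does not imply $E\{\hat\theta\} \to \theta$.

For example, if $(\bar y_n, \bar x_n) \sim N((\mu_y, \mu_x), \Sigma)$ and $\mu_x \neq 0$, then $E\left( \frac{\bar y_n}{\bar x_n} \right)$ is not defined.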

Ratio Estimation (Theorem 1.3.8, Part 1)

Theorem

$x_i = (x_{1i}, x_{2i})$, $\bar X_{1N} \neq 0$, $\hat R = \bar x_{2,HT} / \bar x_{1,HT}$

$\sqrt{n}\, N^{-1} (\hat T_x - T_{xN}) \xrightarrow{L} N(0, M_{xx})$

$\Rightarrow$ $\sqrt{n}\, (\hat R - R_N) \to N(0, h_N M_{xx} h_N')$

where $\hat T_x = \sum_{i \in A} \pi_i^{-1} x_i$, $T_{xN} = \sum_{i=1}^{N} x_i$, $R_N = \frac{\bar X_{2N}}{\bar X_{1N}}$, and $h_N = \bar x_{1N}^{-1} (-R_N, 1)$

Proof of Theorem 1.3.8 Part 1

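$\hat R = \frac{\bar x_{2,HT}}{\bar x_{1,HT}} = \frac{\bar X_{2N}}{\bar X_{1N}} + \frac{1}{\bar X_{1N}} (\bar x_{2,HT} - \bar X_{2N}) - \frac{\bar X_{2N}}{\bar X_{1N}^2} (\bar x_{1,HT} - \bar X_{1N}) + \text{Remainder}$

Method 1. Mean value theorem & continuity of the first order partial derivatives $\Rightarrow$ Remainder $= o_p(n^{-1/2})$

Method 2. Second order Taylor expansion + continuity of the second order partial derivatives $\Rightarrow$ Remainder $= O_p(n^{-1})$

$\hat R = R + \left. \frac{\partial R}{\partial x} \right|_{\bar x^*} (\bar x_{HT} - \bar X_N)'$

$= R + \frac{\partial R}{\partial x} (\bar x_{HT} - \bar X_N)' + \frac{1}{2} (\bar x_{HT} - \bar X_N) \left. \frac{\partial^2 R}{\partial x \partial x'} \right|_{\bar x^{**}} (\bar x_{HT} - \bar X_N)'$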

Theorem 1.3.8 Part 2

Theorem

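In addition, if $\{V(\hat T_x \mid F_N)\}^{-1} \hat V_{HT}(\hat T_x) - I = o_p(n^{-1/2})$, then

$[\hat V(\hat R)]^{-1/2} (\hat R - R_N) \xrightarrow{L} N(0, 1)$

where $\hat V(\hat R) = \sum_{i \in A} \sum_{j \in A} \pi_{ij}^{-1} (\pi_{ij} - \pi_i \pi_j)\, \pi_i^{-1} \hat d_i\, \pi_j^{-1} \hat d_j$,

$\hat d_i = \hat T_{x1}^{-1} (x_{2i} - \hat R x_{1i})$, $\hat T_x = \sum_{i \in A} \pi_i^{-1} x_i$, $T_{xN} = \sum_{i=1}^{N} x_i$, $R_N = \frac{\bar x_{2N}}{\bar x_{1N}}$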

Remarks on Ratios

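1. Variance estimation:

   $\hat V(\hat R) = \hat V\left( \sum_{i \in A} \pi_i^{-1} \hat d_i \right)$

   $\hat d_i = \hat T_{x1}^{-1} (x_{2i} - \hat R x_{1i})$

2. If $x_{1i} = 1$ and $x_{2i} = y_i$, then Hájek estimator

   $\bar y_\pi = \frac{\sum_{i \in A} \pi_i^{-1} y_i}{\sum_{i \in A} \pi_i^{-1}}$

   $\Rightarrow$ $V(\bar y_\pi - \bar y_N \mid F) \doteq V[N^{-1} \sum_{i \in A} \pi_i^{-1} (y_i - \bar y_N) \mid F]$

   $V[N^{-1} \hat T_y \mid F] = V[N^{-1} \sum_{i \in A} \pi_i^{-1} y_i \mid F]$.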
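The linearization recipe can be written out for one concrete design. The sketch below assumes Poisson sampling, so that $\pi_{ij} = \pi_i \pi_j$ for $i \neq j$ and the double sum in $\hat V(\hat R)$ collapses to $\sum_{i \in A} (1 - \pi_i)(\hat d_i / \pi_i)^2$. The sample values, the inclusion probabilities, and the function name are made up for illustration. Setting $x_{1i} = 1$ and $x_{2i} = y_i$ gives the Hájek mean.

```python
def ratio_estimate(x1, x2, pi):
    """Ratio R_hat = T_hat_x2 / T_hat_x1 with a linearized variance estimate.

    Assumes Poisson sampling; inputs are the sampled values and their pi_i.
    """
    t1 = sum(a / p for a, p in zip(x1, pi))            # HT total of x1
    t2 = sum(b / p for b, p in zip(x2, pi))            # HT total of x2
    r_hat = t2 / t1
    d = [(b - r_hat * a) / t1 for a, b in zip(x1, x2)]  # d_hat_i
    v_hat = sum((1 - p) * (d_i / p) ** 2 for d_i, p in zip(d, pi))
    return r_hat, v_hat, d


# Hajek mean: x1_i = 1 and x2_i = y_i (hypothetical sample of three units).
y = [10.0, 12.0, 8.0]
pi = [0.5, 0.25, 0.5]
r_hat, v_hat, d = ratio_estimate([1.0] * len(y), y, pi)
```

By construction the weighted residuals $\pi_i^{-1} \hat d_i$ sum to zero over the sample.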

Approximations for Complex Estimators

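$\hat\theta$ is defined through an estimating equation

$\sum_{i \in A} w_i\, g(x_i, \hat\theta) = 0$.

Let

$\hat G(\theta) = \sum_{i \in A} w_i\, g(x_i ; \theta)$

$G(\theta) = N^{-1} \sum_{i=1}^{N} g(x_i ; \theta)$

$\hat H(\theta) = \partial \hat G(\theta) / \partial \theta$

$H(\theta) = \partial G(\theta) / \partial \theta$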

Theorem 1.3.9

Theorem

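Under suitable conditions,

$\sqrt{n}\, (\hat\theta - \theta_N) \xrightarrow{L} N(0, V)$

where

$V = n\, [H(\theta_N)]^{-1}\, V\{\hat G(\theta_N) \mid F_N\}\, [H(\theta_N)]^{-1}$

Also, $V(\hat\theta)$ can be estimated by

$\hat V = n\, [\hat H(\hat\theta)]^{-1}\, \hat V\{\hat G(\hat\theta) \mid F_N\}\, [\hat H(\hat\theta)]^{-1}$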

Comments

It is difficult to show CLT for general HT estimator

Exception: Large number of strata

CLT requires:

Large samples (that is, effectively large)

Moments: no extreme observations

No extreme weights

Functions: curvature small relative to s.e

Basic Estimators Using SAS

PROC SURVEYMEANS

PROC SURVEYFREQ

PROC SURVEYMEANS

Univariate analysis: population totals, means, ratios,

and quantiles

Variances and confidence limits

Domain analysis

Poststratified analysis

PROC SURVEYFREQ

One-way to n-way frequency and crosstabulation

tables

Totals and proportions

Tests of association between variables

Estimates of risk differences, odds ratios, and relative

risks

Standard errors and confidence limits

Digitech Cable

Describe:

Satisfaction ratings

Usage time

Satisfaction ratings based on household sizes

PROC SURVEYMEANS

ods graphics on;
proc surveymeans data=ResponseData mean total=tot;
   strata State Type;
   weight SamplingWeight;
   class Rating;
   var Rating UsageTime;
run;
ods graphics off;

The SURVEYMEANS Procedure

Data Summary

Number of Strata            8
Number of Observations      300
Sum of Weights              13471

Statistics

Variable    Level                   Label                   Mean          Std Error of Mean
UsageTime                           Computer Usage Time     284.953667    10.904880
Rating      Extremely Unsatisfied   Customer Satisfaction     0.247287     0.024463
Rating      Unsatisfied             Customer Satisfaction     0.235889     0.025091
Rating      Neutral                 Customer Satisfaction     0.224797     0.024247
Rating      Satisfied               Customer Satisfaction     0.200509     0.023548
Rating      Extremely Satisfied     Customer Satisfaction     0.091518     0.016986

PROC SURVEYFREQ

proc surveyfreq data=ResponseData;
   strata State Type;
   weight SamplingWeight;
   tables Rating / chisq
          testp=(0.25 0.20 0.20 0.20 0.15);
run;

The SURVEYFREQ Procedure

Customer Satisfaction

Rating                  Frequency   Weighted Frequency   Std Err of Wgt Freq   Percent    Test Percent   Std Err of Percent
Extremely Unsatisfied          70                 3154             315.53192   24.7287           25.00               2.4739
Unsatisfied                    67                 3009             323.64922   23.5889           20.00               2.5376
Neutral                        64                 2867             312.75943   22.4797           20.00               2.4522
Satisfied                      57                 2557             303.73618   20.0509           20.00               2.3814
Extremely Satisfied            26                 1167             219.09943    9.1518           15.00               1.7178
Total                         284                12754            7.72778E-6   100.000

Frequency Missing = 16

Rao-Scott Chi-Square Test

Pearson Chi-Square       9.1865
Design Correction        0.9857

Rao-Scott Chi-Square     9.3195
DF                       4
Pr > ChiSq               0.0536

F Value                  2.3299
Num DF                   4
Den DF                   1104
Pr > F                   0.0543

Sample Size = 284

Domain Estimation

Domains are subsets of the entire population

Domain sample size is not fixed

Variance estimation should account for random

sample sizes in domains

Degrees of freedom measured using the entire sample

Use the DOMAIN statement

Do NOT use the BY statement for domain analysis

Describe Usage Based on Household Sizes

proc surveymeans data=ResponseData
                 mean total=tot;
   strata State Type;
   weight SamplingWeight;
   var UsageTime;
   domain HouseholdSize;
run;

Describe Rating Based on Household Sizes

proc surveyfreq data=ResponseData;
   strata State Type;
   weight SamplingWeight;
   tables HouseholdSize * Rating /
          plot=all;
run;

Chapter 2

Use of Auxiliary Information in Estimation

Ratio Estimation

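Population : Observe $\bar x_N = N^{-1} \sum_{i=1}^{N} x_i$

Sample : Observe $(\bar x_{HT}, \bar y_{HT}) = N^{-1} \sum_{i \in A} \pi_i^{-1} (x_i, y_i)$

Ratio estimator

$\bar y_{rat} = \bar x_N \frac{\bar y_{HT}}{\bar x_{HT}}$

Let $R_N = \bar x_N^{-1} \bar y_N$ be the population ratio, where $(\bar x_N, \bar y_N) = N^{-1} \sum_{i=1}^{N} (x_i, y_i)$.

Assume that $(\bar x_{HT}, \bar y_{HT}) - (\bar x_N, \bar y_N) = O_p(n^{-1/2})$.

Assume that $\bar x_N \neq 0$.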

Asymptotic Properties of Ratio Estimator (1)

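Linear approximation:

$\bar y_{rat} - \bar y_N = \bar y_{HT} - R_N \bar x_{HT} + O_p(n^{-1})$

Proof

$\bar y_{rat} - \bar y_N = \bar x_{HT}^{-1} \bar x_N (\bar y_{HT} - R_N \bar x_{HT})$

$= \{1 + O_p(n^{-1/2})\} (\bar y_{HT} - R_N \bar x_{HT})$

$= \bar y_{HT} - R_N \bar x_{HT} + O_p(n^{-1})$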

Asymptotic Properties of Ratio Estimator (2)

Bias approximation: Uses second order Taylor expansion

$\bar x_{HT}^{-1} \bar y_{HT} = R_N + \bar x_N^{-1} (\bar y_{HT} - R_N \bar x_{HT}) + \bar x_N^{-2} \{ R_N (\bar x_{HT} - \bar x_N)^2 - (\bar x_{HT} - \bar x_N)(\bar y_{HT} - \bar y_N) \} + O_p(n^{-3/2})$.

Under moment conditions for $\bar x_{HT}^{-1} \bar y_{HT}$ and $\bar x_N \neq 0$,

$Bias(\bar y_{rat}) = E(\bar y_{rat} - \bar y_N)$

$= \bar x_N^{-1} [R_N V(\bar x_{HT}) - Cov(\bar x_{HT}, \bar y_{HT})] + O(n^{-2})$

$= O(n^{-1})$.

Thus, bias of $\hat\theta = \bar y_{rat}$ is negligible because

$R.B.(\hat\theta) = \frac{Bias(\hat\theta)}{\sqrt{Var(\hat\theta)}} \to 0$ as $n \to \infty$.

Asymptotic Properties of Ratio Estimator (3)

Given the conditions of Theorem 1.3.8

$\frac{\hat\theta - \theta}{\sqrt{Var(\hat\theta)}} \to N(0, 1)$,

and

$\hat V(\hat\theta) = \hat V(\bar y_{HT} - \hat R \bar x_{HT}) \doteq \left( \frac{\bar x_{HT}}{\bar x_N} \right)^{-2} \hat V(\bar y_{HT} - \hat R \bar x_{HT})$

7/23-24/2015

94 / 318

Other Properties of Ratio Estimator

1. Ratio estimator is the best linear unbiased estimator under $y_i = x_i \beta + e_i$, $e_i \sim (0, x_i \sigma^2)$

2. Scale invariant, not location invariant

3. Linear but not design linear

4. Ratio estimator in stratified sample,

$$\bar{y}_{st,s} = \sum_{h=1}^{H} W_h \bar{x}_{hN} \frac{\bar{y}_h}{\bar{x}_h} \quad \text{: separate ratio estimator}$$

$$\bar{y}_{st,c} = \left( \sum_{h=1}^{H} W_h \bar{x}_{hN} \right) \frac{\sum_{h=1}^{H} W_h \bar{y}_h}{\sum_{h=1}^{H} W_h \bar{x}_h} \quad \text{: combined ratio estimator}$$

where $W_h = N_h / N$.
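The two stratified ratio estimators in item 4 can be compared with a small sketch. It assumes the stratum means are already computed; the function names and the two-stratum numbers are hypothetical.

```python
def separate_ratio(W, xbar_hN, xbar_h, ybar_h):
    """Separate ratio estimator: sum_h W_h * xbar_hN * ybar_h / xbar_h."""
    return sum(w * xN * yb / xb
               for w, xN, xb, yb in zip(W, xbar_hN, xbar_h, ybar_h))


def combined_ratio(W, xbar_hN, xbar_h, ybar_h):
    """Combined ratio estimator: xbar_N * (sum_h W_h ybar_h) / (sum_h W_h xbar_h)."""
    xbar_N = sum(w * xN for w, xN in zip(W, xbar_hN))
    ybar_st = sum(w * yb for w, yb in zip(W, ybar_h))
    xbar_st = sum(w * xb for w, xb in zip(W, xbar_h))
    return xbar_N * ybar_st / xbar_st


# Hypothetical two-stratum example with W_h = N_h / N.
W = [0.5, 0.5]
xbar_hN = [10.0, 20.0]   # known stratum population means of x
xbar_h = [8.0, 25.0]     # stratum sample means of x
ybar_h = [4.0, 10.0]     # stratum sample means of y
print(separate_ratio(W, xbar_hN, xbar_h, ybar_h))
print(combined_ratio(W, xbar_hN, xbar_h, ybar_h))
```

The separate form applies one ratio adjustment per stratum, while the combined form applies a single adjustment to the stratified means, so the two generally differ unless the stratum ratios agree.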

§2.2 Regression estimation

Sample : Observe (xi , yi ).

Population : Observe xi = (1, x1i ) i = 1, 2, ..., N or only x̄N .

Interested in estimation of $\bar{y}_N = N^{-1} \sum_{i=1}^{N} y_i$

Regression model

$$y_i = x_i \beta + e_i$$

$e_i$ independent of $x_j$ for all $i$ and $j$, $e_i \sim ind(0, \sigma_e^2)$.

Under Normal model, regression gives the best predictor

Regression Model: Estimation

$y_i = x_i \beta + e_i$, $e_i \sim ind(0, \sigma_e^2)$

Linear Estimator: $\bar{y}_w = \sum_{i \in A} w_i y_i$.

To find the best linear unbiased estimator of $\bar{x}_N \beta = E\{\bar{y}_N\}$,

$$\min V\left( \sum_{i \in A} w_i y_i \mid X, \bar{x}_N \right) \quad \text{s.t.} \quad E\left\{ \sum_{i \in A} w_i y_i - \bar{y}_N \mid X, \bar{x}_N \right\} = 0$$

$$\Leftrightarrow \min \sum_{i \in A} w_i^2 \quad \text{s.t.} \quad \sum_{i \in A} w_i x_i = \bar{x}_N$$

where $X' = (x_1', x_2', \ldots, x_n')$.

Best Linear Unbiased Estimator

Lagrange multiplier method

$$Q = \frac{1}{2} \sum_{i \in A} w_i^2 + \lambda' \left( \sum_{i \in A} w_i x_i - \bar{x}_N \right)'$$

$$\frac{\partial Q}{\partial w_i} = w_i + \lambda' x_i'$$

$$\sum_{i \in A} w_i x_i = \bar{x}_N \Rightarrow \lambda' = -\bar{x}_N \left( \sum_{i \in A} x_i' x_i \right)^{-1}$$

$$\therefore \; w_i = x_i \left( \sum_{i \in A} x_i' x_i \right)^{-1} \bar{x}_N' = \bar{x}_N (X'X)^{-1} x_i'$$

Regression estimator is the solution that minimizes the variance in the class of linear estimators that are unbiased under the model.

Properties of Regression Estimator

1. Linear in $y$. Location-Scale Invariant.

2. Alternative expression: For $x_i = (1, x_{1i})$,

$$\bar{y}_{reg} = \bar{y}_n + (\bar{x}_{1N} - \bar{x}_{1n}) \hat{\beta}_1 = \sum_{i \in A} w_i y_i$$

$$\hat{\beta}_1 = \left[ \sum_{i \in A} (x_{1i} - \bar{x}_{1n})' (x_{1i} - \bar{x}_{1n}) \right]^{-1} \sum_{i \in A} (x_{1i} - \bar{x}_{1n})' y_i$$

$$w_i = \frac{1}{n} + (\bar{x}_{1N} - \bar{x}_{1n}) \left[ \sum_{j \in A} (x_{1j} - \bar{x}_{1n})' (x_{1j} - \bar{x}_{1n}) \right]^{-1} (x_{1i} - \bar{x}_{1n})'$$

3. Writing $\bar{y}_{reg} = \bar{y}_n + (\bar{x}_{1N} - \bar{x}_{1n}) \hat{\beta}_1 = \hat{\beta}_0 + \bar{x}_{1N} \hat{\beta}_1$, the regression estimator can be viewed as the predicted value of $Y = \beta_0 + x_1 \beta_1 + e$ at $x_1 = \bar{x}_{1N}$ under the regression model.
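As an illustration of the weight form in item 2, the sketch below computes the regression weights for a single scalar auxiliary variable plus an intercept. Restricting $x_{1i}$ to a scalar is a simplifying assumption, and the function name and data are hypothetical.

```python
def regression_weights(x1, xbar1_N):
    """Regression weights for x_i = (1, x1_i) with scalar x1_i:
    w_i = 1/n + (xbar1_N - xbar1_n) * (x1_i - xbar1_n) / sum_j (x1_j - xbar1_n)^2.
    """
    n = len(x1)
    xbar1_n = sum(x1) / n
    sxx = sum((v - xbar1_n) ** 2 for v in x1)
    return [1.0 / n + (xbar1_N - xbar1_n) * (v - xbar1_n) / sxx for v in x1]


# Hypothetical sample; the population mean of x1 is assumed known (3.5).
x1 = [1.0, 2.0, 4.0, 5.0]
y = [2.0 + 3.0 * v for v in x1]          # exactly linear in x1
w = regression_weights(x1, xbar1_N=3.5)

print(sum(w))                                 # weights sum to one
print(sum(wi * v for wi, v in zip(w, x1)))    # weights reproduce xbar1_N
print(sum(wi * u for wi, u in zip(w, y)))     # ybar_reg
```

The weights satisfy the calibration constraint $\sum_{i \in A} w_i x_i = \bar{x}_N$ for both the intercept and $x_1$, so a response that is exactly linear in $x_1$ is estimated without error.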

Example: Artificial population

[Figure: "Population Plot", scatter of y against x for the artificial population, with the point (3, 4) marked.]

Example (Cont’d): SRS of size n = 20

[Figure: "Sample Plot (n=20)", scatter of y against x for the sample. Annotations: pop. mean = 4, sam. mean = 3.42, reg. est. = 3.85. Marked points: (3, 4), (3, 3.85), (2.61, 3.42).]

Properties of Regression Estimator (2)

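4. For the mean adjusted regression model

$$y_i = \gamma_0 + (x_{1i} - \bar{x}_{1N}) \gamma_1 + e_i,$$

the OLS estimator of $\gamma_0$ is

$$\hat{\gamma}_0 = \bar{y}_n - (\bar{x}_{1n} - \bar{x}_{1N}) \hat{\gamma}_1$$

where $\hat{\gamma}_1 = \hat{\beta}_1$. That is, $\hat{\gamma}_0 = \bar{y}_{reg}$.

5. Under the linear regression model:

a. $\bar{y}_{reg}$ unbiased (by construction)

$$\begin{aligned}
\because \; E(\bar{y}_{reg} - \bar{y}_N \mid X_N) &= N^{-1} E\left\{ \sum_{i=1}^{N} x_i \hat{\beta} - \sum_{i=1}^{N} (x_i \beta + e_i) \mid X_N \right\} \\
&= N^{-1} \left( \sum_{i=1}^{N} x_i \beta - \sum_{i=1}^{N} x_i \beta \right) = 0
\end{aligned}$$

$(\because E(\hat{\beta} \mid X_N) = \beta$ and $E(e_i \mid X_N) = 0)$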

Properties of Regression Estimator (3)

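b. Variance

$$V(\bar{y}_{reg} - \bar{y}_N \mid X, \bar{x}_N) = n^{-1} (1 - f) \sigma_e^2 + (\bar{x}_{1N} - \bar{x}_{1n}) V(\hat{\beta}_1 \mid X) (\bar{x}_{1N} - \bar{x}_{1n})'$$

$$V(\hat{\beta}_1 \mid X) = \left[ \sum_{i \in A} (x_{1i} - \bar{x}_{1n})' (x_{1i} - \bar{x}_{1n}) \right]^{-1} \sigma_e^2$$

$$\begin{aligned}
\because \; \bar{y}_{reg} &= \bar{y}_n + (\bar{x}_{1N} - \bar{x}_{1n}) \hat{\beta}_1 \\
&= \beta_0 + \bar{x}_{1n} \beta_1 + \bar{e}_n + (\bar{x}_{1N} - \bar{x}_{1n}) \beta_1 + (\bar{x}_{1N} - \bar{x}_{1n})(\hat{\beta}_1 - \beta_1) \\
&= \beta_0 + \bar{x}_{1N} \beta_1 + \bar{e}_n + (\bar{x}_{1N} - \bar{x}_{1n})(\hat{\beta}_1 - \beta_1) \\
\bar{y}_N &= \beta_0 + \bar{x}_{1N} \beta_1 + \bar{e}_N
\end{aligned}$$

$$\Rightarrow \bar{y}_{reg} - \bar{y}_N = \bar{e}_n - \bar{e}_N + (\bar{x}_{1N} - \bar{x}_{1n})(\hat{\beta}_1 - \beta_1)$$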

Properties of Regression Estimator (4)

If $x_{1i} \sim$ Normal,

$$V\{\bar{y}_{reg} - \bar{y}_N\} = \frac{1}{n} (1 - f) \left[ 1 + \frac{k}{n - k - 2} \right] \sigma_e^2$$

where $k = \dim(x_i)$

$$V\{\bar{y}_n - \bar{y}_N\} = n^{-1} (1 - f) \sigma_y^2$$

If $R_{adj}^2 = 1 - \dfrac{\sigma_e^2}{\sigma_y^2} \geq \dfrac{k}{n - 2}$, then

$$V\{\bar{y}_n - \bar{y}_N\} \geq V\{\bar{y}_{reg} - \bar{y}_N\}.$$

Best Linear Predictor

Let $y_i$ for $i \in A$ be observations from the model

$$y_i = x_i \beta + e_i,$$

with $e_i \sim ind(0, \sigma_e^2)$.

Predict $\bar{y}_{N-n} = \bar{x}_{N-n} \beta + \bar{e}_{N-n}$. The BLUP of $\bar{e}_{N-n}$ is 0, and the BLUP (estimator) of $\bar{x}_{N-n} \beta$ is $\bar{x}_{N-n} \hat{\beta}$. Thus, the BLUP of $\bar{y}_N$ is

$$N^{-1} [n \bar{y}_n + (N - n) \bar{x}_{N-n} \hat{\beta}] = N^{-1} [n \bar{x}_n \hat{\beta} + (N - n) \bar{x}_{N-n} \hat{\beta}] = \bar{x}_N \hat{\beta}$$

because $\bar{y}_n - \bar{x}_n \hat{\beta} = 0$.

General Population: SRS Regression

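Since $\bar{y}_n - \bar{x}_n \hat{\beta} = 0$,

$$\begin{aligned}
\bar{y}_{reg} - \bar{y}_N &= \bar{x}_N \hat{\beta} - \bar{y}_N \\
&= \bar{y}_n + (\bar{x}_N - \bar{x}_n) \hat{\beta} - \bar{y}_N \\
&= \bar{y}_n - \bar{y}_N + (\bar{x}_N - \bar{x}_n) \beta_N + (\bar{x}_N - \bar{x}_n)(\hat{\beta} - \beta_N) \\
&= \bar{a}_n - \bar{a}_N + (\bar{x}_N - \bar{x}_n)(\hat{\beta} - \beta_N)
\end{aligned}$$

where $a_i = y_i - x_i \beta_N$, $\bar{a}_N = 0$, and

$$\beta_N = \left( \sum_{i \in U} x_i' x_i \right)^{-1} \left( \sum_{i \in U} x_i' y_i \right)$$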

Bias of Regression Estimator

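Design bias is negligible (assume moments)

$$\begin{aligned}
E[\bar{y}_{reg} \mid \mathcal{F}_N] &= \bar{y}_N + E\{\bar{a}_n - \bar{a}_N \mid \mathcal{F}_N\} + E\{(\bar{x}_N - \bar{x}_n)(\hat{\beta} - \beta_N) \mid \mathcal{F}_N\} \\
&= \bar{y}_N + E\{(\bar{x}_N - \bar{x}_n)(\hat{\beta} - \beta_N) \mid \mathcal{F}_N\}.
\end{aligned}$$

$$\begin{aligned}
Bias(\bar{y}_{reg} \mid \mathcal{F}_N) &= -E[(\bar{x}_n - \bar{x}_N)(\hat{\beta} - \beta_N) \mid \mathcal{F}_N] \\
&= -tr\{Cov(\bar{x}_n', \hat{\beta}') \mid \mathcal{F}_N\}
\end{aligned}$$

$$[Bias(\bar{y}_{reg} \mid \mathcal{F}_N)]^2 \leq \sum_{j=1}^{k} [V(\bar{x}_{j,n} \mid \mathcal{F}_N)][V(\hat{\beta}_j \mid \mathcal{F}_N)] = O(n^{-1}) O(n^{-1}).$$

$$\Rightarrow Bias(\bar{y}_{reg} \mid \mathcal{F}_N) = O(n^{-1}).$$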

Variance of Approximate Distribution

$$\begin{aligned}
\bar{y}_{reg} - \bar{y}_N &= \bar{a}_n - \bar{a}_N + (\bar{x}_N - \bar{x}_n)(\hat{\beta} - \beta_N) \\
&= \bar{a}_n - \bar{a}_N + O_p(n^{-1})
\end{aligned}$$

$$V(\bar{a}_n \mid \mathcal{F}_N) = (1 - f) n^{-1} S_a^2$$

By Theorem 1.3.4, $[V(\bar{a}_n \mid \mathcal{F}_N)]^{-1/2} (\bar{a}_n - \bar{a}_N) \xrightarrow{L} N(0, 1)$.

Recall $\bar{a}_N = 0$.

Estimated Variance

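$$\hat{V}\{\bar{y}_{reg}\} = (1 - f) n^{-1} (n - k)^{-1} \sum_{i \in A} \hat{a}_i^2,$$

where $\hat{a}_i = y_i - x_i \hat{\beta}$ and $k$ = dimension of $x_i$.

$$\begin{aligned}
\sum_{i \in A} \hat{a}_i^2 &= \sum_{i \in A} \left[ a_i - x_i (\hat{\beta} - \beta_N) \right]^2 \\
&= \sum_{i \in A} a_i^2 - 2 (\hat{\beta} - \beta_N)' \sum_{i \in A} x_i' a_i + (\hat{\beta} - \beta_N)' \left( \sum_{i \in A} x_i' x_i \right) (\hat{\beta} - \beta_N) \\
&= \sum_{i \in A} a_i^2 + O_p(1)
\end{aligned}$$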
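The estimated variance above can be sketched for simple random sampling with $x_i = (1, x_{1i})$ and scalar $x_{1i}$, so that $k = 2$. This is an illustrative assumption, and the function name and data are hypothetical.

```python
def regression_estimate_and_variance(x1, y, xbar1_N, N):
    """Return (ybar_reg, V_hat) for SRS with x_i = (1, x1_i), scalar x1_i.

    V_hat = (1 - f) * n^{-1} * (n - k)^{-1} * sum of squared OLS residuals, k = 2.
    """
    n = len(y)
    k = 2                                   # intercept plus one slope (assumption)
    xbar = sum(x1) / n
    ybar = sum(y) / n
    sxx = sum((v - xbar) ** 2 for v in x1)
    b1 = sum((v - xbar) * (u - ybar) for v, u in zip(x1, y)) / sxx
    b0 = ybar - b1 * xbar
    ybar_reg = ybar + (xbar1_N - xbar) * b1
    resid = [u - b0 - b1 * v for v, u in zip(x1, y)]   # a_hat_i = y_i - x_i beta_hat
    f = n / N                                           # sampling fraction
    v_hat = (1.0 - f) / n / (n - k) * sum(a * a for a in resid)
    return ybar_reg, v_hat


# Hypothetical SRS of n = 5 from N = 50 with known population mean of x1 equal to 3.2.
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.0]
est, var = regression_estimate_and_variance(x1, y, xbar1_N=3.2, N=50)
print(est, var)
```

The divisor $n - k$ rather than $n$ reflects the $k$ estimated coefficients used to form the residuals.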

Limiting Distribution of Coefficients

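Theorem 2.2.2 (SRS) Assume $(y_i, x_i)$ iid, existence of eighth moments.

$$\hat{\beta} = (\hat{M}_{xx})^{-1} \hat{M}_{xy} = \left( n^{-1} \sum_{i \in A} x_i' x_i \right)^{-1} n^{-1} \sum_{i \in A} x_i' y_i$$

$$\beta_N = (M_{xx,N})^{-1} M_{xy,N} = \left( N^{-1} \sum_{i \in U} x_i' x_i \right)^{-1} N^{-1} \sum_{i \in U} x_i' y_i$$

$$\Rightarrow [V\{\hat{\beta} - \beta_N \mid \mathcal{F}_N\}]^{-1/2} (\hat{\beta} - \beta_N) \mid \mathcal{F}_N \xrightarrow{L} N(0, I)$$

$$V\{\hat{\beta} - \beta_N \mid \mathcal{F}_N\} = n^{-1} (1 - f_N) M_{xx,N}^{-1} V_{bb,N} M_{xx,N}^{-1}$$

$$V_{bb,N} = N^{-1} \sum_{i \in U} x_i' a_i^2 x_i, \quad a_i = y_i - x_i \beta_N$$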

Proof of Theorem 2.2.2

$$\hat{\beta} - \beta_N = \hat{M}_{xx}^{-1} \hat{M}_{xa} =: \hat{M}_{xx}^{-1} \left( n^{-1} \sum_{i \in A} b_i \right)$$

where $\hat{M}_{xa} = n^{-1} \sum_{i \in A} x_i' a_i$.

Given moment conditions,

$$\sqrt{n} \left\{ \hat{M}_{xa} - M_{xa,N} \right\} \xrightarrow{L} N[0, (1 - f_N) V_{bb,N}] \quad \text{(Theorem 1.3.4)}$$

where $M_{xa,N} = N^{-1} \sum_{i \in U} x_i' a_i = 0$. Now $\hat{M}_{xx} \xrightarrow{p} M_{xx,N}$ and, by Slutsky's theorem, the result follows.

Regression for General Designs (Theorem 2.2.1)

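$\mathcal{F}_N = \{z_1, z_2, \cdots, z_N\}$ where $z_i = (y_i, x_i)$, $Z_n' = (z_1', z_2', \cdots, z_n')$.

Define

$$\hat{M}_{z\phi z} = n^{-1} Z_n' \Phi_n^{-1} Z_n = \begin{pmatrix} \hat{M}_{x\phi x} & \hat{M}_{x\phi y} \\ \hat{M}_{y\phi x} & \hat{M}_{y\phi y} \end{pmatrix}$$

and $\hat{\beta} = \hat{M}_{x\phi x}^{-1} \hat{M}_{x\phi y}$, where $\Phi_n$ : $n \times n$ matrix (positive definite), e.g. $\Phi_n = (N/n) \, diag\{\pi_1, \cdots, \pi_n\}$. Assume

(i) $V\{\bar{z}_{HT} - \bar{z}_N \mid \mathcal{F}_N\} = O_p(n^{-1})$ a.s. where $\bar{z}_{HT} = N^{-1} \sum_{i \in A} \pi_i^{-1} z_i$

(ii) $\hat{M}_{z\phi z} - M_{z\phi z,N} = O_p(n^{-1/2})$ a.s. and $\hat{M}_{z\phi z}$ nonsingular.

(iii) $K_1 < N n^{-1} \pi_i < K_2$

(iv) $[V\{\bar{z}_{HT} - \bar{z}_N \mid \mathcal{F}_N\}]^{-1/2} (\bar{z}_{HT} - \bar{z}_N) \xrightarrow{L} N(0, I)$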

Theorem 2.2.1

Theorem

Given moments, design consistent HT estimators,

(i) $\hat{\beta} - \beta_N = M_{x\phi x,N}^{-1} \bar{b}_{HT}' + O_p(n^{-1})$ a.s.

where $\beta_N = (M_{xx,N})^{-1} M_{xy,N} = \left( \sum_{i \in U} x_i' x_i \right)^{-1} \sum_{i \in U} x_i' y_i$.

(ii) $[\hat{V}(\hat{\beta} \mid \mathcal{F}_N)]^{-1/2} (\hat{\beta} - \beta_N) \xrightarrow{L} N(0, I)$

where $b_i' = n^{-1} N \pi_i \xi_i a_i$, $a_i = y_i - x_i \beta_N$, $\xi_i$ is the $i$-th column of $X_n' \Phi_n^{-1}$, $\bar{b}_{HT} = N^{-1} \sum_{i \in A} \pi_i^{-1} b_i$ and

$$\hat{V}(\hat{\beta} \mid \mathcal{F}_N) = \hat{M}_{x\phi x}^{-1} \hat{V}(\bar{b}_{HT}') \hat{M}_{x\phi x}^{-1}$$

Proof of Theorem 2.2.1

$$\hat{\beta} - \beta_N = \hat{M}_{x\phi x}^{-1} (n^{-1} X_n' \Phi_n^{-1} a_n)$$

$$n^{-1} X_n' \Phi_n^{-1} a_n = O_p(n^{-1/2})$$

$$\hat{M}_{x\phi x}^{-1} = M_{x\phi x,N}^{-1} + O_p(n^{-1/2})$$

$$\hat{\beta} - \beta_N = M_{x\phi x,N}^{-1} (n^{-1} X_n' \Phi_n^{-1} a_n) + O_p(n^{-1})$$

$$n^{-1} X_n' \Phi_n^{-1} a_n = n^{-1} \sum_{i \in A} \xi_i a_i = N^{-1} \sum_{i \in A} \pi_i^{-1} b_i' = \bar{b}_{HT}'$$

$\xi_i$ is the $i$-th column of $X_n' \Phi_n^{-1}$, $b_i' = n^{-1} N \pi_i \xi_i a_i$.

Remarks on Theorem 2.2.1

1. The choice of $\Phi_n$ is arbitrary (i.e. the result in Theorem 2.2.1 holds for given $\Phi_n$). A simple case is $\Phi_n = (N/n) \, diag\{\pi_1, \cdots, \pi_n\}$.

2. Variance estimation:

$$\hat{V}(\hat{\beta}) = \hat{M}_{x\phi x}^{-1} \hat{V}_{bb} \hat{M}_{x\phi x}^{-1}$$

where $\hat{V}_{bb}$ is the estimated sampling variance of $\bar{b}_{HT}'$ calculated with $\hat{b}_i' = n^{-1} N \pi_i \xi_i \hat{a}_i$ and $\hat{a}_i = y_i - x_i \hat{\beta}$.

3. Result holds for a general regression estimator. That is, the asymptotic normality of $\bar{x}_N \hat{\beta}$ follows from the asymptotic normality of $\hat{\beta}$.

4. Theorem 2.2.1 states the consistency of $\hat{\beta}$ for $\beta_N$. But we are also interested in the consistency of the estimator of $\bar{y}_N$.

Theorem 2.2.3: Design Consistency of ȳreg for ȳN

Theorem

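Let $p \lim \{\tilde{\beta} - \beta_N \mid \mathcal{F}_N\} = 0$. Then,

$$p \lim \{\bar{y}_{reg} - \bar{y}_N \mid \mathcal{F}_N\} = 0 \iff \lim_{N \to \infty} \frac{1}{N} \sum_{i=1}^{N} a_i = 0$$

where $a_i = y_i - x_i \beta_N$ and $\bar{y}_{reg} = \bar{x}_N \tilde{\beta}$.

Proof : Because $\tilde{\beta}$ is design consistent for $\beta_N$,

$$\begin{aligned}
p \lim \left\{ \bar{y}_N - \bar{x}_N \tilde{\beta} \mid \mathcal{F}_N \right\} &= p \lim \left\{ N^{-1} \sum_{i=1}^{N} (y_i - x_i \tilde{\beta}) \mid \mathcal{F}_N \right\} \\
&= p \lim \left\{ N^{-1} \sum_{i=1}^{N} (y_i - x_i \beta_N) \mid \mathcal{F}_N \right\}.
\end{aligned}$$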

Condition for Design Consistency

Corollary (2.2.3.1)

Assume design consistency for $\bar{z}_{HT}$ and for sample moments.

Let $\bar{y}_{reg} = \bar{x}_N \hat{\beta}$, $\hat{\beta} = (X_n' \Phi_n^{-1} X_n)^{-1} X_n' \Phi_n^{-1} y_n$.

If $\exists \gamma_n$ such that

$$X_n \gamma_n = \Phi_n D_\pi^{-1} J_n \qquad (1)$$

where $D_\pi = diag(\pi_1, \pi_2, \cdots, \pi_n)$ and $J_n$ is a column vector of 1's, then $\bar{y}_{reg}$ is design-consistent for $\bar{y}_N$.

Proof of Corollary 2.2.3.1

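By Theorem 2.2.3, we have only to show that

$$N^{-1} \sum_{i \in U} (y_i - x_i \beta_N) = 0,$$

where $\beta_N = p \lim \hat{\beta}$.

Now,

$$\begin{aligned}
& (y - X_n \hat{\beta})' \Phi_n^{-1} X_n = 0 \\
\Rightarrow \; & (y - X_n \hat{\beta})' \Phi_n^{-1} X_n \gamma_n = 0 \\
\Rightarrow \; & (y - X_n \hat{\beta})' D_\pi^{-1} J_n = N (\bar{y}_{HT} - \bar{x}_{HT} \hat{\beta}) = 0 \\
\Rightarrow \; & p \lim \{\bar{y}_{HT} - \bar{x}_{HT} \beta_N\} = p \lim \{\bar{y}_{HT} - \bar{x}_{HT} \hat{\beta}\} = 0
\end{aligned}$$

and $p \lim \{\bar{y}_{HT} - \bar{x}_{HT} \beta_N\} = N^{-1} \sum_{i \in U} (y_i - x_i \beta_N)$.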

Remarks on Corollary 2.2.3.1

1. Condition $\Phi_n D_\pi^{-1} J_n \in C(X_n)$ is a crucial condition for the design consistency of the regression estimator of the form $\bar{y}_{reg} = \bar{x}_N \hat{\beta}$ with $\hat{\beta} = (X_n' \Phi_n^{-1} X_n)^{-1} X_n' \Phi_n^{-1} y_n$.

2. If condition $\Phi_n D_\pi^{-1} J_n \in C(X_n)$ does not hold, one can expand the $X_n$ matrix by including $z_0 = \Phi_n D_\pi^{-1} J_n$ and use $Z_n = [z_0, X_n]$ to construct the regression estimator:

$$\bar{y}_{reg} = \bar{z}_N \hat{\gamma} = (\bar{z}_{0N}, \bar{x}_N) \begin{pmatrix} z_0' \Phi_n^{-1} z_0 & z_0' \Phi_n^{-1} X_n \\ X_n' \Phi_n^{-1} z_0 & X_n' \Phi_n^{-1} X_n \end{pmatrix}^{-1} \begin{pmatrix} z_0' \Phi_n^{-1} y \\ X_n' \Phi_n^{-1} y \end{pmatrix}$$

Examples for Φn Dπ−1 Jn ∈ C(Xn )

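1. $\Phi_n = D_\pi$ and $x_i = (1, x_{1i})$

$$\Rightarrow \bar{y}_{reg} = \bar{y}_\pi + (\bar{x}_{1,N} - \bar{x}_{1,\pi}) \hat{\beta}_1$$

where

$$(\bar{y}_\pi, \bar{x}_{1,\pi}) = \left( \sum_{i \in A} \pi_i^{-1} \right)^{-1} \sum_{i \in A} \pi_i^{-1} (y_i, x_{1i})$$

$$\hat{\beta}_1 = \left[ \sum_{i \in A} \pi_i^{-1} (x_{1i} - \bar{x}_{1,\pi})' (x_{1i} - \bar{x}_{1,\pi}) \right]^{-1} \sum_{i \in A} \pi_i^{-1} (x_{1i} - \bar{x}_{1,\pi})' y_i.$$

2. $\Phi_n = I_n$, $w_i = \pi_i^{-1}$, $x_i = (w_i, x_{1i})$, $\bar{w}_N = N^{-1} \sum_{i=1}^{N} w_i$,

$$\Rightarrow \bar{y}_{reg,\omega} = \bar{w}_N \bar{y}_\omega + (\bar{x}_{1,N} - \bar{w}_N \bar{x}_{1,\omega}) \hat{\beta}_{1,ols}$$

$$(\bar{y}_\omega, \bar{x}_{1,\omega}) = \left( \sum_{i \in A} \pi_i^{-1} w_i \right)^{-1} \sum_{i \in A} \pi_i^{-1} (y_i, x_{1i})$$

$$(\hat{\beta}_{0,ols}, \hat{\beta}_{1,ols}')' = \left( \sum_{i \in A} x_i' x_i \right)^{-1} \sum_{i \in A} x_i' y_i.$$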

Design Optimal Regression Estimator (Theorem 2.2.4)

Theorem

Sequence of populations and designs giving consistent estimators of moments. Consider $\bar{y}_{reg}(\hat{\beta}) = \bar{y}_\pi + (\bar{x}_{1N} - \bar{x}_{1\pi}) \hat{\beta}$ for some $\hat{\beta}$.

(i) $\bar{y}_{reg}$ is design consistent for $\bar{y}_N$ for any reasonable $\hat{\beta}$.

(ii) $\hat{\beta}^* = [\hat{V}(\bar{x}_{1\pi})]^{-1} \hat{C}(\bar{x}_{1\pi}, \bar{y}_\pi)$ minimizes the estimated variance of $\bar{y}_{reg}(\hat{\beta})$.

(iii) CLT for $\bar{y}_{reg}(\hat{\beta}^*)$ can be established.

Remarks on Theorem 2.2.4

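1. Optimal estimator can be viewed as Rao-Blackwellization based on

$$\begin{pmatrix} \bar{x}_\pi' \\ \bar{y}_\pi \end{pmatrix} \sim N\left[ \begin{pmatrix} \bar{x}_N' \\ \bar{y}_N \end{pmatrix}, \begin{pmatrix} V(\bar{x}_\pi') & C(\bar{x}_\pi', \bar{y}_\pi) \\ C(\bar{x}_\pi, \bar{y}_\pi) & V(\bar{y}_\pi) \end{pmatrix} \right]$$

2. GLS interpretation

$$\begin{pmatrix} \bar{x}_\pi' - \bar{x}_N' \\ \bar{y}_\pi \end{pmatrix} = \begin{pmatrix} 0 \\ 1 \end{pmatrix} \bar{y}_N + \begin{pmatrix} e_1 \\ e_2 \end{pmatrix}$$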

§2.3 Linear Model Prediction

Population model : $y_N = X_N \beta + e_N$, $e_N \sim (0, \Sigma_{ee,NN})$

$e_N$ independent of $X_N$. Assume $\Sigma_{ee,NN}$ known

Sample model : $y_A = X_A \beta + e_A$, $e_A \sim (0, \Sigma_{ee,AA})$

Best linear predictor (BLUP)

$$\hat{\theta} = N^{-1} \left[ \sum_{i \in A} y_i + \sum_{i \in \bar{A}} \{ \hat{y}_i + \Sigma_{ee,\bar{A}A} \Sigma_{ee,AA}^{-1} (y_A - X_A \hat{\beta}) \} \right]$$

where $\hat{y}_i = x_i \hat{\beta}$ and $\hat{\beta} = (X_A' \Sigma_{ee,AA}^{-1} X_A)^{-1} X_A' \Sigma_{ee,AA}^{-1} y_A$

Q : When is the model-based predictor $\hat{\theta}$ design consistent?

Model and Design Consistency

Theorem (2.3.1)

If $\Sigma_{ee,AA} (D_\pi^{-1} J_n - J_n) - \Sigma_{ee,A\bar{A}} J_{N-n} \in C(X_A)$, then

$$\hat{\theta} = \bar{y}_{HT} + (\bar{x}_N - \bar{x}_{HT}) \hat{\beta} \quad \text{and} \quad \hat{\theta} - \bar{y}_N = \bar{a}_{HT} - \bar{a}_N + O_p(n^{-1})$$

where $a_i = y_i - x_i \beta_N$

Analogous to Corollary 2.2.3.1 for prediction.

If the model satisfies the conditions for design consistency, then the model is called full model. Otherwise, it is called reduced model (or restricted model).

Model and Design Consistency

General strategy (for general purpose survey)

a. Pick important $y$

b. Find a model $y = X \beta + e$

c. Use $\bar{y}_{reg} = \bar{x}_N \hat{\beta}$ (Full model) or use $\bar{y}_{reg,\pi} = \bar{y}_\pi + (\bar{x}_N - \bar{x}_\pi) \hat{\beta}$ where $\hat{\beta} = (X_n' \Sigma_{ee}^{-1} X_n)^{-1} (X_n' \Sigma_{ee}^{-1} y_n)$

If the design consistency condition does not hold, we can expand the $X_A$ matrix by including $z_0$ such as $\Sigma_{ee,AA} D_\pi^{-1} J_n$, $Z = [z_0, X]$. If $\bar{z}_{0N}$ is not known, use $\bar{y}_{reg,\pi}$ of (c).

§2.3.2 Nonlinear Models (All x Values Known)

Superpopulation model

$y_i = \alpha(x_i; \theta) + e_i$, $E(e_i) = 0$, $e_i$ indep. of $x_j$, for all $i$ and $j$.

1. $\bar{y}_{c,reg} = \bar{y}_{HT} + N^{-1} \sum_{i \in U} \alpha(x_i; \hat{\theta}) - N^{-1} \sum_{i \in A} \pi_i^{-1} \alpha(x_i; \hat{\theta})$

2. $\bar{y}_{m,reg} = N^{-1} \left[ \sum_{i \in A} y_i + \sum_{i \in \bar{A}} \alpha(x_i; \hat{\theta}) \right]$

Remark: $\bar{y}_{c,reg} = \bar{y}_{m,reg}$ if $\sum_{i \in A} (\pi_i^{-1} - 1)(y_i - \hat{y}_i) = 0$. (Firth and Bennett, 1998).

Consistency of Nonlinear Regression (Theorem 2.3.2)

Theorem

(i) There exists $\theta_N$ such that $\hat{\theta} - \theta_N = O_p(n^{-1/2})$, a.s.

(ii) $\alpha(x, \theta)$ is a continuous differentiable function of $\theta$ with derivative uniformly continuous on $B$, a closed set containing $\theta_N$.

(iii) The partial derivative $h(x_i; \theta) = \partial \alpha(x_i; \theta) / \partial \theta$ satisfies

$$\sup_{\theta \in B} \left| N^{-1} \sum_{i \in A} \pi_i^{-1} h(x_i; \theta) - N^{-1} \sum_{i \in U} h(x_i; \theta) \right| = O_p(n^{-1/2}) \quad \text{a.s.}$$

$\Rightarrow \bar{y}_{c,reg} - \bar{y}_N = \bar{a}_{HT} - \bar{a}_N + O_p(n^{-1})$ where $a_i = y_i - \alpha(x_i; \theta_N)$

Calibration

Minimize $\omega' V \omega$ s.t. $\omega' X = \bar{x}_N$

$$(\omega' V \omega)(a X' V^{-1} X a') \geq (\omega' X a')^2$$

with equality iff

$$\omega' V^{1/2} \propto a X' V^{-1/2}$$

$$\omega' \propto a X' V^{-1}$$

$$\omega' = k a X' V^{-1}, \quad k \text{ : constant}$$

$$\omega' X = k a X' V^{-1} X \Rightarrow \bar{x}_N (X' V^{-1} X)^{-1} = k a$$

$$\therefore \; \omega' = \bar{x}_N (X' V^{-1} X)^{-1} X' V^{-1} \quad \text{and} \quad \omega' V \omega \geq \bar{x}_N (X' V^{-1} X)^{-1} \bar{x}_N'$$

Note: Minimize $V(\omega' y \mid X, d)$ s.t. $E(\omega' y - \bar{y}_N \mid X, d) = 0$.

Alternative Minimization

Lemma

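$\alpha$ : given $n$-dimensional vector

Let $\omega_a = \arg \min_\omega \omega' V \omega$ s.t. $\omega' X = \bar{x}_N$

Let $\omega_b = \arg \min_\omega (\omega - \alpha)' V (\omega - \alpha)$ s.t. $\omega' X = \bar{x}_N$

If $V \alpha \in C(X)$, then $\omega_a = \omega_b$.

Proof :

$$\begin{aligned}
(\omega - \alpha)' V (\omega - \alpha) &= \omega' V \omega - \alpha' V \omega - \omega' V \alpha + \alpha' V \alpha \\
&= \omega' V \omega - \lambda' X' \omega - \omega' X \lambda + \alpha' V \alpha \\
&= \omega' V \omega - 2 \lambda' \bar{x}_N' + \alpha' V \alpha
\end{aligned}$$

where $V \alpha = X \lambda$, and the last step uses $\omega' X = \bar{x}_N$.

If $\alpha = D_\pi^{-1} J_n$, then $V \alpha \in C(X)$ is the condition for design consistency in Corollary 2.2.3.1.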

General Objective Function

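$$\min \sum_{i \in A} G(\omega_i, \alpha_i) \quad \text{s.t.} \quad \sum_{i \in A} \omega_i x_i = \bar{x}_N$$

Lagrange multiplier method

$$g(\omega_i, \alpha_i) - \lambda' x_i' = 0 \quad \text{where } g(\omega_i, \alpha_i) = \frac{\partial G}{\partial \omega_i}$$

$$\Rightarrow \omega_i = g^{-1}(\lambda' x_i', \alpha_i) \quad \text{where } \lambda' \text{ is from } \sum_{i \in A} g^{-1}(\lambda' x_i', \alpha_i) x_i = \bar{x}_N$$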

GREG Estimator

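$$\min Q(\omega, d) = \sum_{i \in A} d_i \left( \frac{\omega_i}{d_i} - 1 \right)^2 q_i \quad \text{s.t.} \quad \sum_{i \in A} \omega_i x_i = \bar{x}_N.$$

$$\Rightarrow d_i^{-1} (\omega_i - d_i) q_i - \lambda' x_i' = 0$$

$$\Rightarrow \omega_i = d_i + \lambda' d_i x_i' / q_i$$

$$\Rightarrow \sum_{i \in A} \omega_i x_i = \sum_{i \in A} d_i x_i + \lambda' \sum_{i \in A} d_i x_i' x_i / q_i$$

$$\therefore \; \lambda' = (\bar{x}_N - \bar{x}_{HT}) \left( \sum_{i \in A} d_i x_i' x_i / q_i \right)^{-1}$$

$$\therefore \; \omega_i = d_i + (\bar{x}_N - \bar{x}_{HT}) \left( \sum_{j \in A} d_j x_j' x_j / q_j \right)^{-1} d_i x_i' / q_i$$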
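A minimal sketch of the GREG weights for a single scalar calibration variable. It assumes the design weights are scaled as $d_i = (N \pi_i)^{-1}$ so that $\sum_{i \in A} d_i x_i = \bar{x}_{HT}$; the function name and data are hypothetical.

```python
def greg_weights(d, x, q, xbar_N):
    """GREG weights for scalar x:
    w_i = d_i + (xbar_N - xbar_HT) * (d_i x_i / q_i) / sum_j (d_j x_j^2 / q_j).
    Assumes d_i = 1 / (N * pi_i), so that sum_i d_i x_i is xbar_HT.
    """
    xbar_ht = sum(di * xi for di, xi in zip(d, x))
    denom = sum(di * xi * xi / qi for di, xi, qi in zip(d, x, q))
    lam = (xbar_N - xbar_ht) / denom
    return [di + lam * di * xi / qi for di, xi, qi in zip(d, x, q)]


# Hypothetical SRS of n = 4 from N = 10: pi_i = 0.4, d_i = 1 / (10 * 0.4) = 0.25.
d = [0.25] * 4
x = [2.0, 4.0, 6.0, 8.0]
y = [1.0, 3.0, 2.0, 5.0]

w_const = greg_weights(d, x, q=[1.0] * 4, xbar_N=5.5)   # q_i = 1
w_ratio = greg_weights(d, x, q=x, xbar_N=5.5)           # q_i = x_i

print(sum(wi * xi for wi, xi in zip(w_const, x)))        # calibrated to xbar_N
print(sum(wi * yi for wi, yi in zip(w_ratio, y)))        # equals the ratio estimator
```

With $q_i = x_i$ the weights reduce to $d_i \bar{x}_N / \bar{x}_{HT}$, so the calibrated estimator coincides with the ratio estimator from the start of the chapter.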

Other Objective Functions

Pseudo empirical likelihood

$$Q(\omega, d) = -\sum_{i \in A} d_i \log\left( \frac{\omega_i}{d_i} \right), \quad \omega_i = d_i / (1 + x_i \lambda)$$

Kullback-Leibler distance:

$$Q(\omega, d) = \sum_{i \in A} \omega_i \log\left( \frac{\omega_i}{d_i} \right), \quad \omega_i = d_i \exp(x_i \lambda)$$

where $d_i = 1/\pi_i$.
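The Kullback-Leibler form $\omega_i = d_i \exp(x_i \lambda)$ has no closed-form multiplier, so $\lambda$ must be found numerically. The sketch below uses Newton's method for a scalar $x$; the solver choice, the scaling $d_i = (N \pi_i)^{-1}$ (so the constraint targets a mean), and all names are assumptions made for illustration.

```python
import math


def exp_tilt_weights(d, x, xbar_N, tol=1e-12, max_iter=100):
    """Solve sum_i d_i * exp(x_i * lam) * x_i = xbar_N for scalar lam by Newton's
    method and return the weights w_i = d_i * exp(x_i * lam)."""
    lam = 0.0
    for _ in range(max_iter):
        w = [di * math.exp(xi * lam) for di, xi in zip(d, x)]
        g = sum(wi * xi for wi, xi in zip(w, x)) - xbar_N   # constraint residual
        if abs(g) < tol:
            return w
        h = sum(wi * xi * xi for wi, xi in zip(w, x))       # derivative of g in lam
        lam -= g / h
    raise RuntimeError("Newton iteration did not converge")


# Hypothetical data: d_i = 1 / (N * pi_i) with N = 10 and pi_i = 0.4.
d = [0.25] * 4
x = [2.0, 4.0, 6.0, 8.0]
w = exp_tilt_weights(d, x, xbar_N=5.5)
print(w)
```

Unlike the GREG weights, these weights are positive by construction, which is one reason this distance is used when negative weights are a concern.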

Theorem 2.7.1 Deville and Särndal (1992)

Theorem

Let $G(\omega, \alpha)$ be a continuous convex function with a first derivative that is zero for $\omega = \alpha$. Under some regularity conditions, the solution $\omega_i$ that minimizes

$$\sum_{i \in A} G(\omega_i, \alpha_i) \quad \text{s.t.} \quad \sum_{i \in A} \omega_i x_i = \bar{x}_N$$

satisfies

$$\sum_{i \in A} \omega_i y_i = \sum_{i \in A} \alpha_i y_i + (\bar{x}_N - \bar{x}_\alpha) \hat{\beta} + O_p(n^{-1})$$

where $\hat{\beta} = \left( \sum_{i \in A} x_i' x_i / \phi_{ii} \right)^{-1} \sum_{i \in A} x_i' y_i / \phi_{ii}$ and $\phi_{ii} = \left. \partial^2 G(\omega_i, \alpha_i) / \partial \omega_i^2 \right|_{\omega_i = \alpha_i}$.

Weight Bounds

$\omega_i = d_i + d_i \lambda' x_i' / c_i$ can take negative values (or take very large values)

Add $L_1 \leq \omega_i \leq L_2$ to $\sum_{i \in A} \omega_i x_i = \bar{x}_N$.

Approaches

1. Huang and Fuller (1978)

$$Q(w_i, d_i) = \sum_{i \in A} d_i \Psi\left( \frac{w_i}{d_i} \right), \quad \Psi \text{ : Huber function}$$

2. Husain (1969)

$$\min \; \omega' \omega + \gamma (\omega' X - \bar{x}_N) \Sigma_{\bar{x}\bar{x}}^{-1} (\omega' X - \bar{x}_N)' \quad \text{for some } \gamma$$

3. Other methods, quadratic programming.

Comments

Regression estimation is superior to mean and ratio estimation in large samples for $k \ll n$.

Applications require restrictions on regression weights ($w_i > 1/N$)

Model estimator is design consistent if $X \gamma = \Sigma_{ee} D_\pi^{-1} J$.

Regression Estimators Using SAS

PROC SURVEYREG

Regression Estimators

Response variable is correlated to a list of auxiliary variables

Population totals for the auxiliary variables are known

Efficient estimators can be constructed by using a linear contrast from a regression model

Digitech Cable

Can you improve the estimate of the average usage time by taking data usage into account?

Average data usage (MB) for the population is available

Data usage for every unit in the sample is available

ESTIMATE Statement

proc surveyreg data=ResponseData
      plot=fit(weight=heatmap shape=hex nbins=20);
   strata State Type;
   weight SamplingWeight;
   model UsageTime = DataUsage;
   estimate 'Regression Estimator'
            intercept 1 DataUsage 4002.14;
run;

The SURVEYREG Procedure

Regression Analysis for Dependent Variable UsageTime

Fit Statistics

R-Square          0.6555
Root MSE          121.56
Denominator DF    292

Estimate

Label                   Estimate   Standard Error   DF    t Value   Pr > |t|
Regression Estimator    279.18     6.9860           292   39.96     <.0001

Poststratification Using SAS

PROC SURVEYMEANS

Strata identification is unknown, but strata totals or percentages are known

Stratification after the sample is observed

Use poststratification to:

   produce efficient estimators

   adjust for nonresponse bias

   perform direct standardization

Variance estimators must be adjusted
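The point estimate behind poststratification can be sketched as a weighted mean within each poststratum, recombined with the known population shares. This is the textbook poststratified mean under that assumption, not a description of how PROC SURVEYMEANS computes it internally; the function name and data are hypothetical.

```python
def poststratified_mean(y, w, group, pop_share):
    """Poststratified mean: sum_g P_g * (weighted sample mean of y in group g),
    where P_g is the known population share of poststratum g."""
    tot_w = {}
    tot_wy = {}
    for yi, wi, g in zip(y, w, group):
        tot_w[g] = tot_w.get(g, 0.0) + wi
        tot_wy[g] = tot_wy.get(g, 0.0) + wi * yi
    # Every poststratum in pop_share must appear in the sample.
    return sum(share * tot_wy[g] / tot_w[g] for g, share in pop_share.items())


# Hypothetical sample in which group "b" is under-represented.
y = [10.0, 20.0, 30.0, 40.0]
w = [1.0, 1.0, 1.0, 1.0]                 # sampling weights
group = ["a", "a", "a", "b"]
pop_share = {"a": 0.5, "b": 0.5}         # known population percentages

print(sum(y) / len(y))                                   # unadjusted mean
print(poststratified_mean(y, w, group, pop_share))       # adjusted mean
```

The adjustment moves the estimate toward the under-represented group, which is the mechanism behind both the efficiency gain and the nonresponse adjustment listed above.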

Digitech Cable

Known distribution of race in the four states

Adjust the distribution of race in the sample to match the population

Estimate the average usage time after adjusting for race

POSTSTRATA Statement

proc surveymeans data=ResponseData mean stderr;
   strata State Type;
   weight SamplingWeight;
   var UsageTime;
   poststrata Race / postpct=RacePercent;
run;

Poststratified Estimator

The SURVEYMEANS Procedure

Statistics

Variable    Label                 Mean         Std Error of Mean
UsageTime   Computer Usage Time   288.477541   10.612532

A set of poststratified-adjusted weights is created

The variance estimator uses the poststratification information

Store the poststratified-adjusted replicate weights from PROC SURVEYMEANS and use the adjusted replicate weights in other survey procedures

Chapter 3

Use of Auxiliary Information in Design

World Statistics Congress Short Course

July 23-24, 2015


Design Strategy

Find the best strategy (design, estimator) for $\bar{y}_N$ under the model

$$y_i = x_i \beta + e_i, \quad e_i \sim ind(0, \gamma_{ii} \sigma^2)$$

$\bar{x}_N$, $\gamma_{ii}$ known; $\beta$, $\sigma^2$ unknown

Estimator class : $\hat{\theta} = \sum_{i \in A} w_i y_i$ : linear in $y$ and $E\{(\hat{\theta} - \bar{y}_N) \mid d, X_N\} = 0$, so $\hat{\theta}$ is model-unbiased & design consistent.

Criterion : Anticipated variance

$$AV\{\hat{\theta} - \bar{y}_N\} = E\{V(\hat{\theta} - \bar{y}_N \mid \mathcal{F})\}$$

Candidate Estimator

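$$\hat{\theta} = N^{-1} \left( \sum_{i \in A} y_i + \sum_{i \in A^c} x_i \hat{\beta} \right)$$

$$\hat{\beta} = (X' D_\gamma^{-1} X)^{-1} X' D_\gamma^{-1} y = \left( \sum_{i \in A} x_i' x_i / \gamma_{ii} \right)^{-1} \sum_{i \in A} x_i' y_i / \gamma_{ii}$$

$$D_\gamma = diag(\gamma_{11}, \gamma_{22}, \ldots, \gamma_{nn})$$

If the vector of $\gamma_{ii}$ is in the column space of $X$, $\sum_{i \in A} (y_i - x_i \hat{\beta}) = 0$ and $\hat{\theta} = \bar{y}_{reg} = \bar{x}_N \hat{\beta}$.

If $\pi_i \propto \gamma_{ii}^{1/2}$ and the vector of $\gamma_{ii}^{1/2}$ is in the column space of $X$, $\bar{y}_{reg} = \bar{y}_{HT} + (\bar{x}_N - \bar{x}_{HT}) \hat{\beta}$.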

Theorem 3.1.1 (Isaki and Fuller, 1982)

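Under moment conditions, if $\pi_i \propto \gamma_{ii}^{1/2}$, $\gamma_{ii} = x_i \tau_1$, and $\gamma_{ii}^{1/2} = x_i \tau_2$ for some $\tau_1$ and $\tau_2$, then

$$\lim_{N \to \infty} n \, AV\{\bar{y}_{reg} - \bar{y}_N\} = \lim_{N \to \infty} \left[ \left( \frac{1}{N} \sum_{i=1}^{N} \gamma_{ii}^{1/2} \right)^2 - \frac{n}{N^2} \sum_{i=1}^{N} \gamma_{ii} \right] \sigma^2$$

and

$$\lim_{N \to \infty} n \left[ AV\{\bar{y}_{reg} - \bar{y}_N\} - AV\{\Psi_l - \bar{y}_N\} \right] \leq 0$$

for all $\Psi_l \in D_l$ and all $p \in P_c$, where

$$D_l = \left\{ \Psi_l \, ; \, \Psi_l = \sum_{i \in A} \alpha_i y_i \text{ and } E\{(\Psi_l - \bar{y}_N) \mid d, X\} = 0 \right\}$$

and $P_c$ is the class of fixed-sample-size nonreplacement designs with fixed probabilities admitting design-consistent estimators of $\bar{y}_N$.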

Proof of Theorem 3.1.1

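For $\Psi_l = \alpha' y$

$$E\{\Psi_l - \bar{y}_N \mid d, X_N\} = 0 \Leftrightarrow \alpha' X = \bar{x}_N$$

$$V\{\Psi_l - \bar{y}_N \mid d, X_N\} = \left( \alpha' D_\gamma \alpha - 2 N^{-1} \alpha' D_\gamma J_n + N^{-2} J_N' D_{\gamma N} J_N \right) \sigma^2$$

$$\alpha' D_\gamma J_n = \alpha' X \tau_1 = \bar{x}_N \tau_1 = N^{-1} J_N' X_N \tau_1 = N^{-1} J_N' D_{\gamma N} J_N$$

$$\therefore \; V\{\Psi_l - \bar{y}_N \mid d, X_N\} = \left( \alpha' D_\gamma \alpha - N^{-2} J_N' D_{\gamma N} J_N \right) \sigma^2$$

Enough to find $\alpha$ that minimizes $\alpha' D_\gamma \alpha$ s.t. $\alpha' X = \bar{x}_N$

$$\Rightarrow \alpha^{*\prime} = \bar{x}_N (X' D_\gamma^{-1} X)^{-1} X' D_\gamma^{-1}$$

$$\alpha' D_\gamma \alpha \geq \bar{x}_N (X' D_\gamma^{-1} X)^{-1} \bar{x}_N'$$

(See Section 2.7)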

Remarks on Theorem 3.1.1 (1)

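Under the model, $y_i = x_i \beta + e_i$,

$$\bar{y}_{reg} - \bar{y}_N = \bar{e}_{HT} - \bar{e}_N + O_p(n^{-1})$$

$$AV(\bar{y}_{reg} - \bar{y}_N) \doteq AV(\bar{e}_{HT} - \bar{e}_N) = N^{-2} \sum_{i=1}^{N} [(1 - \pi_i) \pi_i^{-1}] \gamma_{ii} \sigma^2$$

$$\min AV(\bar{y}_{reg} - \bar{y}_N) \text{ s.t. } \sum_{i=1}^{N} \pi_i = n \quad \Rightarrow \quad \pi_i = n \frac{\gamma_{ii}^{1/2}}{\sum_{j=1}^{N} \gamma_{jj}^{1/2}}$$

$$AV(\bar{y}_{reg} - \bar{y}_N) \geq N^{-2} \left[ n^{-1} \left( \sum_{i=1}^{N} \gamma_{ii}^{1/2} \right)^2 - \sum_{i=1}^{N} \gamma_{ii} \right] \sigma^2$$

(Godambe-Joshi lower bound)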
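A small numerical check of the lower bound above: the anticipated variance is evaluated at $\pi_i \propto \gamma_{ii}^{1/2}$ and at equal probabilities. The function names and the $\gamma_{ii}$ values are hypothetical, and the sketch assumes every resulting $\pi_i$ is below one.

```python
def anticipated_variance(pi, gamma, sigma2, N):
    """AV = N^{-2} * sum_i [(1 - pi_i) / pi_i] * gamma_ii * sigma^2."""
    return sum((1.0 - p) / p * g for p, g in zip(pi, gamma)) * sigma2 / N ** 2


def optimal_pi(gamma, n):
    """Inclusion probabilities pi_i = n * gamma_ii^{1/2} / sum_j gamma_jj^{1/2}."""
    roots = [g ** 0.5 for g in gamma]
    total = sum(roots)
    return [n * r / total for r in roots]


def gj_lower_bound(gamma, sigma2, n, N):
    """N^{-2} * [n^{-1} * (sum_i gamma_ii^{1/2})^2 - sum_i gamma_ii] * sigma^2."""
    s_root = sum(g ** 0.5 for g in gamma)
    return (s_root ** 2 / n - sum(gamma)) * sigma2 / N ** 2


# Hypothetical population of N = 6 units with n = 2.
gamma = [1.0, 4.0, 9.0, 16.0, 25.0, 36.0]
N, n, sigma2 = 6, 2, 1.0

av_opt = anticipated_variance(optimal_pi(gamma, n), gamma, sigma2, N)
av_equal = anticipated_variance([n / N] * N, gamma, sigma2, N)
bound = gj_lower_bound(gamma, sigma2, n, N)
print(av_opt, av_equal, bound)
```

The optimal probabilities attain the bound exactly, while equal-probability sampling sits above it whenever the $\gamma_{ii}$ are not all equal.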

Remarks on Theorem 3.1.1 (2)

For model: $y_i = x_i \beta + e_i$, $e_i \sim (0, \gamma_{ii} \sigma^2)$, best strategy is $\bar{y}_{reg} = \bar{y}_{HT} + (\bar{x}_N - \bar{x}_{HT}) \hat{\beta}$ with $\pi_i \propto \gamma_{ii}^{1/2}$

To achieve a sampling design with $\pi_i \propto \gamma_{ii}^{1/2}$

1. Use Poisson sampling : $n$ is not fixed (Not covered by Theorem).

2. Use systematic sampling

3. Use approximation by stratified random sampling: Choose $U_h$ s.t. $\sum_{i \in U_h} \gamma_{ii}^{1/2} \doteq H^{-1} \sum_{i \in U} \gamma_{ii}^{1/2}$, $\gamma_{11} \leq \gamma_{22} \leq \cdots \leq \gamma_{NN}$

Stratification, Example 3.1.2

$y_i = \beta_0 + x_i \beta_1 + e_i$, $e_i \sim (0, \sigma_e^2)$ and $x_i = i$, $i = 1, 2, \cdots, N = 1{,}600$

stratified sampling with $N_h = N/H \, (= M)$, $n_h = n/H$, $n = 64$

$$\bar{y}_{st} = \sum_{h=1}^{H} \frac{N_h}{N} \bar{y}_h = \frac{1}{H} \sum_{h=1}^{H} \bar{y}_h$$

$$V(\bar{y}_{st}) = \frac{1}{H^2} \sum_{h=1}^{H} V(\bar{y}_h) = \frac{1}{H^2} \sum_{h=1}^{H} \frac{1}{n_h} \sigma_{yh}^2 = \frac{1}{n} \sigma_w^2$$

where $\sigma_w^2 = H^{-1} \sum_{h=1}^{H} \sigma_{yh}^2$,

$$\sigma_{yh}^2 = E(y_{hi} - \bar{y}_h)^2 \doteq \frac{M^2 - 1}{12} \beta_1^2 + \sigma_e^2$$

$(M^2 - 1)/12$ = variance of $\{1, 2, \cdots, M\}$

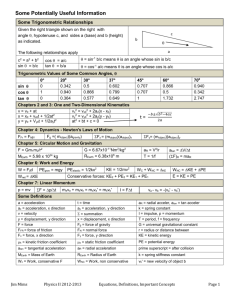

Example 3.1.2 continued

Number of     $V(\bar{y}_{st})$                        $V\{\hat{V}(\bar{y}_{st})\}$
Strata        $\rho^2 = 0.25$    $\rho^2 = 0.90$       $\rho^2 = 0.25$    $\rho^2 = 0.90$
2             81                 32                    67                 7.7
4             77                 16                    62                 2.4
8             75                 11                    64                 1.5
32            75                 10                    111                2.0

$\rho^2 = 1 - (\sigma_y^2)^{-1} \sigma_e^2$
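The $V(\bar{y}_{st})$ columns can be reproduced from the variance formula of Example 3.1.2. The sketch assumes the tabled values are percentages of the simple random sampling variance $\sigma_y^2 / n$ with the finite population correction ignored. That scaling is not stated on the slide, although the arithmetic below matches the tabled numbers; the function name is hypothetical.

```python
def relative_variance(H, rho2, N=1600):
    """100 * V(ybar_st) / V(ybar_SRS) for x_i = i and H equal-sized strata.

    Uses sigma_w^2 / sigma_y^2 = rho2 * (M^2 - 1) / (N^2 - 1) + (1 - rho2),
    where M = N / H and rho2 = 1 - sigma_e^2 / sigma_y^2.
    Assumption: the sampling fraction is ignored in both variances.
    """
    M = N // H
    within_share = (M * M - 1) / (N * N - 1)   # share of var(x) left within strata
    return 100.0 * (rho2 * within_share + (1.0 - rho2))


for H in (2, 4, 8, 32):
    print(H, round(relative_variance(H, 0.25)), round(relative_variance(H, 0.90)))
```

Most of the gain comes from the first few strata: once the within-stratum variation in $x$ is small, the remaining variance is dominated by $\sigma_e^2$, which stratification on $x$ cannot remove.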

Remarks on Stratification

1. For efficient point estimation, increase $H$

2. $V\{\hat{V}(\bar{y}_{st})\}$ depends on $\rho$: $V\{\hat{V}(\bar{y}_{st})\}$ can decrease in $H$ and then increase in $H$

One per Stratum

A common procedure is to select one unit per stratum and to combine or "collapse" two adjacent strata to form a variance estimation stratum

$$\hat{V}_{col}\{\bar{y}_{col}\} = 0.25 (y_1 - y_2)^2$$

$$E\left[ \hat{V}_{col}\{\bar{y}_{col}\} \right] = 0.25 (\mu_1 - \mu_2)^2 + 0.25 (\sigma_1^2 + \sigma_2^2)$$

Two-per-stratum design

$$V\{\bar{y}_{2,st}\} = 0.125 (\mu_1 - \mu_2)^2 + 0.25 (\sigma_1^2 + \sigma_2^2)$$

Controlled two-per-stratum design (§ 3.1.4) can be used to reduce the variance of the two-per-stratum design

Cluster Sampling

Population of cluster of elements

May have different cluster sizes

Cluster size can be either known or unknown at the design stage

Clusters are sampling units


Model for Cluster Sampling

$$y_{ij} = \mu_y + b_i + e_{ij}, \quad i = 1, 2, \cdots, N, \quad j = 1, 2, \cdots, M_i$$

$$b_i \sim (0, \sigma_b^2), \quad e_{ij} \sim (0, \sigma_e^2), \quad M_i \sim (\mu_M, \sigma_M^2)$$

and $b_i$, $e_{ij}$, and $M_i$ are independent.

$$y_i = \sum_{j=1}^{M_i} y_{ij} \sim (M_i \mu_y, \gamma_{ii})$$

$$\gamma_{ii} = V(y_i \mid M_i) = M_i^2 \sigma_b^2 + M_i \sigma_e^2$$

Strategies for Mean per Element

1. $\hat{\theta}_{n,1} = \bar{M}_n^{-1} \bar{y}_n$ : SRS

2. $\hat{\theta}_{n,2} = \bar{M}_{HT}^{-1} \bar{y}_{HT}$ : with $\pi_i \propto M_i$

3. $\hat{\theta}_{n,3} = \bar{M}_{HT}^{-1} \bar{y}_{HT}$ : with $\pi_i \propto \gamma_{ii}^{1/2}$

Then $V(\hat{\theta}_{n,3}) \leq V(\hat{\theta}_{n,2}) \leq V(\hat{\theta}_{n,1})$

Two Stage Sampling

Population of N clusters (PSUs)

Select a sample of n1 clusters

Select a sample of mi elements from Mi elements in cluster i

Sampling within clusters is independent

A model:

$$y_{ij} = \mu_y + b_i + e_{ij}$$

$b_i \sim (0, \sigma_b^2)$ ind of $e_{ij} \sim (0, \sigma_e^2)$

Estimation of Mean per Element

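$$\theta_N = \left( \sum_{i=1}^{N} M_i \right)^{-1} \sum_{i=1}^{N} \sum_{j=1}^{M_i} y_{ij}$$

$$\hat{\theta}_{SRC} = \left( \sum_{i=1}^{n_1} M_i \right)^{-1} \sum_{i=1}^{n_1} M_i \bar{y}_{i\cdot}$$

where $\bar{y}_{i\cdot} = m_i^{-1} \sum_{j=1}^{m_i} y_{ij}$.

With equal $M_i$, equal $m_i$, and SRS at both stages,

$$\begin{aligned}
V(\hat{\theta}_{SRC} - \theta_N) &= \frac{1}{n_1} \left( 1 - \frac{n_1}{N} \right) \sigma_b^2 + \frac{1}{n_1 m} \left[ 1 - \frac{n_1 m}{N M} \right] \sigma_e^2 \\
&\doteq \frac{1}{n_1} \left( \sigma_b^2 + \frac{1}{m} \sigma_e^2 \right)
\end{aligned}$$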

Optimal Allocation

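Cost function: $C = c_1 n_1 + c_2 n_1 m$

Minimize $V\{\hat{\theta} - \theta_N\}$ s.t. $C = c_1 n_1 + c_2 n_1 m$:

$$m^* = \left( \frac{\sigma_e^2 c_1}{\sigma_b^2 c_2} \right)^{1/2}$$

Proof: $C$ is fixed. Minimize

$$\begin{aligned}
C \cdot V\{\hat{\theta} - \theta_N\} &= n_1^{-1} \left( \sigma_b^2 + m^{-1} \sigma_e^2 \right) (c_1 n_1 + c_2 n_1 m) \\
&= \sigma_b^2 c_1 + \sigma_e^2 c_2 + \sigma_b^2 c_2 m + c_1 \sigma_e^2 m^{-1}.
\end{aligned}$$

Setting the derivative with respect to $m$ to zero, $\sigma_b^2 c_2 - c_1 \sigma_e^2 m^{-2} = 0$, gives $m^*$.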
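A short numerical illustration of the optimal allocation. The variance components and unit costs are hypothetical, and the function names are not from the source.

```python
import math


def optimal_m(sigma2_b, sigma2_e, c1, c2):
    """Optimal number of elements per cluster: m* = sqrt(sigma_e^2 c1 / (sigma_b^2 c2))."""
    return math.sqrt(sigma2_e * c1 / (sigma2_b * c2))


def cost_times_variance(m, sigma2_b, sigma2_e, c1, c2):
    """C * V = sigma_b^2 c1 + sigma_e^2 c2 + sigma_b^2 c2 m + c1 sigma_e^2 / m."""
    return sigma2_b * c1 + sigma2_e * c2 + sigma2_b * c2 * m + c1 * sigma2_e / m


# Hypothetical inputs: clusters cost 16 units each, elements cost 1 unit each.
sigma2_b, sigma2_e, c1, c2 = 1.0, 4.0, 16.0, 1.0
m_star = optimal_m(sigma2_b, sigma2_e, c1, c2)
print(m_star)
for m in (m_star - 1, m_star, m_star + 1):
    print(m, cost_times_variance(m, sigma2_b, sigma2_e, c1, c2))
```

The optimum does not depend on the total budget: $C$ only determines $n_1$ once $m^*$ is fixed. Larger cluster costs or larger within-cluster variance both push toward more elements per cluster.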

Two-Phase Sampling

1. Phase-one : Select $A_1$ from $U$. Observe $x_i$

2. Phase-two : Select $A_2$ from $A_1$. Observe $(x_i, y_i)$