THE 801 MINICOMPUTER

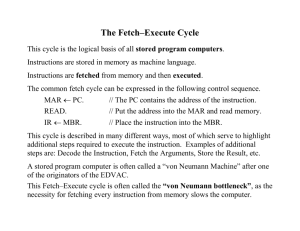

George Radin, IBM Fellow
IBM Thomas J. Watson Research Center
P. O. Box 218
Yorktown Heights, New York 10598

Abstract

This paper provides an overview of an experimental system developed at the IBM T. J. Watson Research Center. It consists of a running hardware prototype, a control program and an optimizing compiler. The basic concepts underlying the system are discussed, as are the performance characteristics of the prototype. In particular, three principles are examined:

- system orientation towards the pervasive use of high level language programming and a sophisticated compiler,
- a primitive instruction set which can be completely hard-wired,
- storage hierarchy and I/O organization to enable the CPU to execute an instruction at almost every cycle.

But it is just this pernicious effect on the primitive instructions that has made us suspicious. Most instruction frequency studies show a sharp skew in favor of high usage of primitive instructions (such as Load, Store, Branch, Compare, Add). If the presence of a more complex set adds just one logic level to a 10 level basic machine cycle (e.g. to fetch a microinstruction from ROS), the CPU has been slowed down by 10%. The frequency and performance improvement of the complex functions must first overcome this 10% degradation, and then justify the additional cost. If the presence of complex functions results in the CPU exceeding a packaging constraint on some level (e.g. a chip, a board), the performance degradation can be even more substantial.

Often, however, a minicomputer that boasts of a rich set of complex instructions has not spent additional hardware at all, but has simply microprogrammed the functions. These microinstructions are designed to execute in a single cycle and, in that cycle, to set controls most useful for the functions desired. This, however, is exactly the design goal of the 801 primitive instruction set.
We question, therefore, the need for a separate set of instructions.

In fact, for "vertical microcode", the benefits claimed are generally not due to the power of the instructions as much as to their residence in a high-speed control store. This amounts to a hardware architect attempting to guess which subroutines, or macros, are most frequently used and assigning high speed memory to them. It has resulted, for instance, in functions like Extended-Precision Floating Point Divide and Translate-and-Test on S/370's residing in high speed storage, while procedure prologues and the First-Level-Interrupt-Handler are in main storage. The 801 CPU gets its instructions from an "instruction cache" which is managed by LRU information. Thus all frequently used functions are very likely to be found in this high-speed storage, exhibiting the performance characteristics of vertical microcode.

Introduction

In October, 1975, a group of about twenty researchers at the IBM T. J. Watson Research Center began the design of a minicomputer, a compiler, and a control program whose goal was to achieve significantly better cost/performance for high level language programs than that attainable by existing systems. The name 801 was chosen because it was the IBM number of the building in which the project resided. (The twenty creative researchers were singularly uninspired namers.)

In addition to a running research prototype, the project resulted in an understanding of many design mistakes and thus has spawned a second generation research activity which is currently being pursued. This paper is a description of the basic design principles and the resulting system components (hardware and software).

Basic Concepts

Single Cycle Implementation

Probably the major distinguishing characteristic of the 801 architecture is that its instructions are constrained to execute in a single, straightforward, rather primitive machine cycle.
A similar general approach has been pursued by a group at the University of California [1].

Complex, high-function instructions, which require several cycles to execute, are conventionally realized by some combination of random logic and microcode. It is often true that implementing a complex function in random logic will result in its execution being significantly faster than if the function were programmed as a sequence of primitive instructions. Examples are floating point arithmetic and fixed point multiply. We have no objection to this strategy, provided the frequency of use justifies the cost and, more important, provided these complex instructions in no way slow down the primitive instructions.

Programming complex functions as software procedures or macros rather than in microcode has three advantages:

First, the CPU is interruptible at "microcode" boundaries, hence more responsive. Architectures with complex instructions either restrict interrupts to instruction boundaries, or (as in, for instance, the Move Characters Long instruction on the S/370) define specific interruptible points. If the instruction must be atomic, the implementation must ensure that it can successfully complete before any observable state is saved. Thus, in the S/370 Move Character instruction, before starting the move all pages are pretouched (and locked, in an MP system) to guard against a page fault interrupt occurring after the move has begun. If interruptible points are architected, the state must be such that the instruction is restartable.

Permission to copy without fee all or part of this material is granted provided that the copies are not made or distributed for direct commercial advantage, the ACM copyright notice and the title of the publication and its date appear, and notice is given that copying is by permission of the Association for Computing Machinery. To copy otherwise, or to republish, requires a fee and/or specific permission.
© 1982 ACM 0-89791-066-4 82/03/0039 $00.75

The second advantage of programming these functions is that an optimizing compiler can often separate their components, moving some parts out of a loop, commoning others, etc.

Thirdly, it is often possible for parts of a complex instruction to be computed at compile time. Consider, for instance, the S/370 Move Character instruction once again. Each execution of this instruction must determine the optimal move strategy by examining the lengths of the source and target strings, whether (and in what direction) they overlap, and what their alignment characteristics are. But, for most programming languages, these may all be known at compile time. Consider also a multiply instruction. If one of the operands is a constant, known at compile time, the compiler can often produce more efficient "shift/add" sequences than the general multiply microcode subroutine.

Most conventional architectures make this decision difficult because every store of data can be a modification of an instruction, perhaps even the one following the store. Thus the hardware must ensure that the two caches are properly synchronized, a job that is either expensive or degrading, or both. Even instruction prefetch mechanisms are complex since the effective address of a store must be compared to the Instruction Address Register.

Historically, as soon as index registers were introduced into computers the frequency of instruction modification fell dramatically until, today, instructions are almost never modified. Therefore the 801 architecture does not require this hazard detection. Instead it exposes the existence of the split cache to software and provides instructions by which software can synchronize the caches when required. In our system the only program that modifies instructions is the one that loads programs into memory.
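The remark above about multiplying by a compile-time constant can be made concrete with a small sketch. The function names `shift_add_plan` and `multiply_by_constant` are hypothetical, and the plain binary decomposition used here is only one simple way a compiler might derive a shift/add sequence; it is not claimed to be the algorithm used by the 801 compiler.

```python
def shift_add_plan(constant):
    """Return the shift amounts whose shifted copies of x sum to x * constant.

    Assumption: constant is a non-negative integer known at compile time.
    """
    if constant < 0:
        raise ValueError("this sketch handles non-negative constants only")
    shifts = []
    bit = 0
    while constant:
        if constant & 1:          # this bit contributes x << bit to the product
            shifts.append(bit)
        constant >>= 1
        bit += 1
    return shifts


def multiply_by_constant(x, constant):
    """Evaluate the plan at run time using only shifts and adds."""
    total = 0
    for shift in shift_add_plan(constant):
        total += x << shift
    return total


# Multiplying by 10 needs two shifts and one add: (x << 1) + (x << 3).
print(shift_add_plan(10))
print(multiply_by_constant(7, 10))
```

The plan is computed once, at compile time; only the shifts and adds remain in the object code, so their number depends on the count of one bits in the constant rather than on the operand width.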
Similarly, in conventional systems in which the existence of a cache is unobservable to the software, I/O must (logically) go through the cache. This is often accomplished in less expensive systems by sending the I/O physically through the cache. The result is that the CPU is idle while the I/O proceeds, and that after an I/O burst the contents of the cache no longer reflect the working set of the process being executed, forcing it back into transient mode. Even in more expensive systems a broadcasting or directory-duplication strategy may result in some performance degradation.

The major disadvantage to using procedures instead of microcode to implement complex functions occurs when the microinstruction set is defined to permit its operands to be indirectly named by the register name fields in the instruction which is being interpreted. Since, in the 801 and in most conventional architectures, the register numbers are bound into the instructions, a compiler must adopt some specific register usage convention for the procedure operands, and move the operands to these registers when necessary.

A computer whose instructions all execute very efficiently, however, is attractive only if the number of such instructions required to perform a task is not commensurately larger than that required of a more complex instruction set. The 801 project was concerned only with the execution of programs compiled by our optimizing compiler. Therefore, within the constraints of a primitive data flow, we left the actual definition of the instructions to the compiler writers. The results will be discussed later, but generally show pathlengths (that is, number of instructions executed) about equivalent to those on an S/370 for systems code, and up to 50% longer for commercial and scientific applications (given no hardware floating point).
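The trade-off between pathlength and instruction speed stated above can be written as a short inequality. The symbols are introduced here for illustration only and do not appear in the original: $N$ is the pathlength, $C$ the average number of cycles per instruction, and $T$ the cycle time, with subscripts for the 801 and for a complex-instruction-set machine (cx).

```latex
\begin{align*}
  \text{execution time} &= N \cdot C \cdot T \\
  N_{801}\, C_{801}\, T_{801} &< N_{cx}\, C_{cx}\, T_{cx}
  \quad\Longleftrightarrow\quad
  \frac{N_{801}}{N_{cx}} < \frac{C_{cx}\, T_{cx}}{C_{801}\, T_{801}}
\end{align*}
```

Under this reading, a pathlength ratio of 1.5 (the upper figure quoted for commercial and scientific code) still favors the primitive machine whenever the complex machine spends more than 1.5 times as long per instruction on average.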
We observed that responsibility for the initiation of I/O in current systems was evolving towards paging supervisors, system I/O managers using fixed block transfers, and, for low speed devices, a buffer strategy which moves data between subsystem buffers and user areas. This results in the I/O manager knowing the location and extent of the storage being accessed, and knowing when an I/O transfer is in process. Thus this software can properly synchronize the caches, and the I/O hardware can transmit directly to and from the backing store. The result of this system approach in our prototype is that even when half of the memory bandwidth is being used for I/O the CPU is virtually undegraded.

Notice that in the preceding discussions (and in the earlier discussion of complex instructions) an underlying strategy is being pervasively applied. Namely, wherever there is a system function that is expensive or slow in all its generality, but where software can recognize a frequently occurring degenerate case (or can move the entire function from run time to compile time), that function is moved from hardware to software, resulting in lower cost and improved performance.

Overlapped Storage Access

801 instruction mixes show that about 30% of instructions go to storage to send or receive data, and between 10% and 20% of instructions are taken branches. Moreover, for many applications, a significant portion of the memory bandwidth is used by I/O. If the CPU is forced to wait many cycles for storage access its internal performance will be wasted.

The second major design goal of the 801 project, therefore, was to organize the storage hierarchy and develop a system architecture to minimize CPU idle time due to storage access. First, it was clear that a cache was required whose access time was consistent with the machine cycle of the CPU.
Secondly we chose a "store-in-cache" strategy (instead of "storing through" to the backing store) so that the 10% of expected store instructions would not degrade the performance severely. (For instance, if the time to store a word through to the backing store is ten cycles, and 10% of instructions are stores, this will add up to one cycle to each instruction on average, depending on the amount of execution overlap.)

But a CPU organization that needs a new instruction at every cycle as well as accessing data every third cycle will still be degraded by a single conventional cache that delivers a word every cycle. Thus we decided to split the cache into a part containing data and a part containing instructions. In this way we effectively doubled the bandwidth to the cache and allowed asynchronous fetching of instructions and data at the backing store.

An interesting example of the application of this strategy concerns managing the cache itself. In the 801 the cache line is 32 bytes and the largest unit of a store is four bytes. In such a cache, whose line size is larger than the unit of a store and in which a "store in cache" approach is taken, a store directed at a word which is not in the cache must initiate a fetch of the entire line from the backing store into the cache. This is because, as far as the cache can tell, a load of another word from this line might be requested subsequently. Frequently, however, the store is simply the first store into what to the program is newly acquired space. It could be a new activation on a process stack just pushed on procedure call (e.g. PL/I Automatic); it could be an area obtained by a request to the operating system; or it could be a register save area used by the First Level Interrupt Handler. In all of these cases the hardware does not know that no old values from that line will be needed, while to the compiler and supervisor this situation is quite clear. We have defined explicit instructions in the 801 for cache management so that software can reduce these unnecessary loads and stores of cache lines.

A Compiler-Based System

So far we have discussed two major ideas which pervade the 801 system. First, build a CPU that can execute its instructions quickly (i.e. in one relatively short machine cycle), and define these instructions to be a good target for compilation so that resulting pathlengths are generally commensurate with those for the same functions on more complex instruction sets (e.g. S/370). Second, define the storage hierarchy architecture, the CPU instructions, the I/O architecture and the software so that the CPU will generally not have to wait for storage access. The third major idea centers about the 801 compiler. A fundamental decision of the 801 project was to base the entire system on its pervasive use. This has resulted in the following system characteristics:

One other 801 system strategy leads to more effective use of the cache. Conventional software assumes that its memory is randomly addressable. Because of this assumption each service program in the supervisor and subsystems has its own local temporary storage. Thus an application program requesting these services will cause references to many different addresses. In a high-level-language-based system like the 801, control program services are CALL'ed just like a user's subroutines.
The result is that all these service programs get their temporary areas from the same stack, resulting in much reuse of cache lines and, therefore, higher cache hit ratios.

Instruction sets for conventional CPU's have been defined with an implicit assumption that many programmers will use assembly language. This assumption has motivated the definition of complex instructions (such as Edit and Mark, Translate and Test) almost as much as has the notion of a fast control store. But, increasingly, programmers do not use assembly language except where optimal performance is essential or machine functions are required that are not reflected in the source language.

So far we have discussed 801 features that result in overlapped access to the cache between instructions and data, overlapped backing store access among the caches and I/O, less hardware synchronizing among the caches and I/O, and techniques to improve the cache hit ratios. One other aspect of the 801 CPU design and architecture should be described to complete the picture.

Even if almost all instruction and data references are found in the cache, and the cache and backing store are always available to the CPU, a conventional CPU will still often be idle while waiting for a load to complete or for the target of a branch to be fetched. Sophisticated CPU's often keep branch taken histories or fetch ahead on both paths in order to overcome this idle time.
In the 801 project we observed that, with a small number of hardware primitives, software (i.e. the compiler) could reorder programs so that the semantics remained unchanged but the hardware could easily overlap this idle time with useful work.

The compiler for the 801 has demonstrated that it can produce object code that is close enough to best hand code generally so that assembly language programming is almost never needed for performance. The operating system has isolated those machine-dependent functions not reflected in the language (such as Disable, Start I/O, Dispatch) and developed efficient procedures which provide these functions with minimal linkage overhead. The result is a system in which less than a thousand lines of supervisor code (and some of the "microcode" subroutine implementations of the complex functions) are written in Assembly language.

This has relieved the 801 architecture of the burden of being easy to program directly. Virtually the only programmers who are concerned with the nature of the architecture are the compiler writers and the "core" supervisor writers. All others see the system only through a high level language. Because of this, the 801 architects were able to base their decisions solely on the needs of these few programmers, and on cost/performance considerations.

On load instructions the register that is to be the target of the load is locked by the CPU.
The CPU then continues execution of the instruction stream until it reaches an instruction that requires this register, at which time it idles until the load is completed. Thus, if the compiler can find a useful instruction to put after the load that does not require the result of the load, the CPU will not be idle at all while the data cache fetches the requested word. (And if the compiler can find several such instructions to put after the load, execution of these will even overlap cache miss.)

Similarly for branches, the 801 architecture defines, for every type of branch instruction, an alternate form called Branch with Execute. (This is similar to the delayed branch in the RISC computer [1].) These instructions have exactly the same semantics as their corresponding branch instructions, except that while the instruction cache is fetching the branch target the CPU executes the instruction that has been placed immediately after the Branch with Execute instruction. For instance, in the sequence:

    LOAD  R1,A
    BNZ   L

the CPU would be idle while the instruction cache was fetching L, if the branch was taken. Changing the BRANCH-NON-ZERO to a BRANCH-NON-ZERO WITH EXECUTE, and moving the LOAD instruction, results in:

    BNZX  L
    LOAD  R1,A

which has exactly the same semantics but allows the CPU to execute the LOAD while the instruction cache is fetching the instruction at L. The 801 compiler is able, generally, to convert about 60% of the branches in a program into the execute form.

Thus the 801 architecture was defined as that set of run-time operations which:

- could not be moved to compile time,
- could not be more efficiently executed by object code produced by a compiler which understood the high level intent of the program,
- was to be implemented in random logic more effectively than the equivalent sequence of software instructions.

It might at first seem surprising that compiler writers would not want powerful high level instructions. But in fact these instructions are often hard to use since the compiler must find those cases which exactly fit the architected construct. Code selection becomes not just finding the fewest instructions, but the right instructions. And when these instructions name operands in storage instead of in registers, code selection will depend upon the results of register allocation.

The 801 approach to protection is strongly based upon this compiler intermediary between users and the hardware. Conventional systems expect application programmers, and certainly subsystem programmers, to use assembly language or other languages in which it is possible to subvert the system (either deliberately or accidentally). Thus hardware facilities are required to properly isolate these users. The most popular examples of these facilities are storage protect keys, multiple virtual address spaces, and supervisor state. These facilities are often costly and sometimes degrade performance. But what is more important is that they are often inadequate. Since even 16 different keys are insufficient to assign uniquely, for instance, different users are sometimes given the same key or the system limits the number of active users. Also, because the key disciplines are only two level, many subsystems are forced to run with full addressing capability.

If, however, users are constrained to a properly defined source language, and their programs are processed by an intelligent compiler and run on an operating system that understands the addressing strategies of the compiler, it is possible to provide better protection at less cost. The 801 system, therefore, is based upon the assumption that certain critical components of the compiler are correct, and that all programs executing on the system (except for a small supervisor core) have been compiled by this compiler. The system will guarantee:

- that all references to data (scalars, arrays, structures, areas) really do point to that data, and that the extents of the references are included in the extents of the data,
- that a reference to dynamically allocated-and-freed data is made only between an allocation and a free,
- that all branches are to labels, and all calls are to proper entry points in procedures,
- that the extents of all arguments to a procedure match the extents of their corresponding parameters, so that the protection persists across calls,
- that all declarations of global (external) variables in separately compiled procedures have consistent extents.

The linkage between procedures on the 801 is another example of a consistent machine design based on a system used solely via a high level language. We wanted applications on the 801 to be programmed using good programming style. This implies a large number of procedures and many calls. In particular it implies that very short procedures can be freely written and invoked. Thus, for these short procedures, the linkage must be minimal.

The 801 procedure linkage attempts to keep arguments in registers where possible. It also expects some register values to be destroyed across a CALL. The result is that a procedure call can be as cheap as a Branch and Link instruction when the called procedure can execute entirely out of available registers. As more complex functions are required they increase the overhead for linkage incrementally.

Finally, the pervasive use of a high level language and compiler has given the project great freedom to change. The architecture has undergone several drastic changes, and countless minor ones. The linkage conventions, storage mapping strategies, and run time library have similarly been changed as experience provided new insights. In almost every case the cost of the change was limited to recompilations.

This ability to preserve source code, thus limiting the impact of change, can have significant long range impact on systems. New technologies (and packaging) often offer great performance and cost benefits if they can be exploited with architecture changes.

System Components

The Programming Language

The source language for the 801 system is called PL.8. It was defined to be an appropriate language for writing systems programs and to produce optimized code with the checking described above. PL.8 began as an almost-compatible subset of PL/I, so that the PL.8 compiler was initially compiled by the PL/I Optimizer. It contains, for instance, the PL/I storage classes, functions, floating point variables, varying character strings, arrays with adjustable extents, the structured control primitives of PL/I, the string-handling built-in-functions, etc.
It differs from PL/I in its interpretation of bit strings as binary numbers, in its binary arithmetic (which simply reflects the arithmetic of the 801 hardware) and in some language additions borrowed from Pascal. It does not contain full PL/I ON conditions, multiple entry points, or the ability to develop absolute pointers to Automatic or Static storage. Relative pointers, called Offsets, can be developed only to Areas. This discipline has several advantages:

- All program and data areas can be moved freely by the system, since absolute addresses are never stored in user-addressable data structures.
- Any arithmetic data type can be used as an offset (relative pointer) and all arithmetic operations can be freely performed, since the extent checks will be made on every use.
- A store, using a computed offset, can only affect other data in that particular area. Thus, the locations whose values could have been changed by this store are significantly limited. This enhances the power of the optimization algorithms.
- It leads to better structured, more easily readable programs.

This checking is often done at compile time, link edit time, or program fetch time, but, when necessary, trap instructions are introduced into the object code to check at run time. The resulting increase in pathlength due to this run time checking is generally less than 10% because this code is optimized along with the rest of the program [5].

Notice that this is not a "strongly typed" approach to checking. Overlays of one data type on another are permitted, provided the domains are not exceeded. But our experience in running code conventionally on the S/370 and then on the 801 with this checking has shown that many program bugs are discovered and that, more importantly, they tend to be the kinds of bugs that elude normal component test procedures.

It was noted earlier that, because the operating system was also written in the 801's high level language and compiled by the 801 compiler, its service programs were simply CALL'ed like any external procedure, resulting in better cache behavior. An even more important consequence of this design, however, is that the checking of matches between arguments and parameters is performed at the time a program is loaded into memory and linked to the supervisor. This results in efficient calls to supervisor services, especially when compared to conventional overhead. It means, also, that the compiler-generated "traceback" mechanism continues into the operating system, so that when an error occurs the entire symbolic call chain can be displayed.

The Optimizing Compiler

There have been about seven programmers in the compiler group since the project began. A running compiler was completed after about two years. Since then the group has been involved with language extensions, new optimization techniques, debugging, and usability aids. It should be noted, however, that the Computer Sciences department at Yorktown Heights has been working on compiler algorithms for about twenty years, many of which were simply incorporated into this compiler.

The register allocation problem is thus equivalent to the problem of coloring the graph so that no two nodes connected by an edge are colored with the same crayon.

This global approach has proven very effective. Surprisingly many procedures "color" so that no store/load sequences are necessary to keep results in storage temporarily. (At present the compiler "colors" only computations. There is, however, no technical reason why local variables could not also be "colored" and we intend to do this eventually.) When it does fail, other algorithms which use this graph information are employed to decide what to store. Because of this ability of the compiler to effectively utilize a large number of registers, we decided to implement 32 general purpose registers in the hardware.
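The graph-coloring view of register allocation can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: live ranges are modeled as simple intervals of program points, the names `build_interference` and `color` are hypothetical, and the coloring is a plain greedy heuristic rather than the allocator described in [3,4].

```python
def build_interference(live_ranges):
    """Build the interference graph from {symbolic_register: (first, last)}.

    Two symbolic registers interfere (get an edge) when their live intervals
    overlap, i.e. there is a point where both values must be retained.
    """
    names = sorted(live_ranges)
    graph = {name: set() for name in names}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            a_first, a_last = live_ranges[a]
            b_first, b_last = live_ranges[b]
            if a_first <= b_last and b_first <= a_last:
                graph[a].add(b)
                graph[b].add(a)
    return graph


def color(graph, num_registers):
    """Greedily assign a real register (a color) to each node.

    Nodes that cannot be colored are returned separately; a real allocator
    would then decide which values to keep in storage.
    """
    assignment, uncolored = {}, []
    # Visit the most constrained nodes first (ties broken by name).
    for node in sorted(graph, key=lambda n: (-len(graph[n]), n)):
        taken = {assignment[m] for m in graph[node] if m in assignment}
        free = [r for r in range(num_registers) if r not in taken]
        if free:
            assignment[node] = free[0]
        else:
            uncolored.append(node)
    return assignment, uncolored


ranges = {"s1": (0, 3), "s2": (1, 2), "s3": (4, 6), "s4": (5, 7)}
graph = build_interference(ranges)
print(color(graph, 2))
```

In this example four symbolic registers fit in two real registers because `s1`/`s2` and `s3`/`s4` are never live at the same time as the other pair, so no value has to be stored temporarily.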
The PL.8 compiler adopts two strategies which lead to its excellent object code. The first is a strategy which translates in the most straightforward, inefficient (but correct) manner from PL.8 source language to an intermediate language (IL). This translation has as its only objective the production of semantically correct object code. It seeks almost no special cases, so that it is relatively easy to debug. Moreover the intermediate language which is its target is at a very low level, almost the real 801 machine.

The next phase of the compiler develops flow graphs of the program as described in [2], and, using these graphs, performs a series of conventional optimization algorithms, such as:

- common sub-expression elimination,
- moving code out of loops,
- eliminating dead code,
- strength reduction.

The compiler will also accept Pascal programs, producing compatible object code so that PL.8 and Pascal procedures can freely call one another. It will also produce efficient object code for the S/370, thus providing source code portability.

Instructions and Operands

Instruction formats and data representations are areas which saw significant change as the project evolved. This section describes the current version of the architecture. The kind of instruction and operand set requested by the compiler developers turned out, fortunately, to be precisely one which made hardware implementation easier. The overriding theme was regularity. For instance:

- All operands must be aligned on boundaries consistent with their size (i.e. halfwords on halfword boundaries, words on word boundaries). All instructions are fullwords on fullword boundaries. (This results in an increase in program size over two-and-four byte formats, but the larger format allows us to define more powerful instructions resulting in shorter pathlengths.)
Since the 801 was designed for a cache/main store/hard disk hierarchy, and virtual memory addressing, the consequence of larger programs is limited to more disk space and larger working sets (i.e., penalties in cache-hit-ratio and page-fault frequency).

With this alignment constraint the hardware is greatly simplified. Each data or instruction access can cause at most one cache miss or one page fault. The caches will have to access at most one aligned word. Instruction prefetch mechanisms can easily find op codes if they are searching for branches. Instruction and data alignment logic is unnecessary. Instruction Length Count fields (as in the S/370 PSW) are unnecessary and software can always backtrack instructions. Moreover, for data, traces show that misaligned operands rarely appear and when they do are often the result of poor programming style.

Each of these algorithms transforms an IL program into a semantically equivalent, but more efficient, IL program. Thus these procedures can be (and are) called repetitively and in any order. While these procedures are quite sophisticated, since each of them acts on the entire program and on all programs, a bug in one of them is very easily observed.

The power of this approach is not only in the optimizing power of the algorithms but in the fact that they are applied to such a low level IL. Conventional global optimizing compilers perform their transformations at a much higher level of text, primarily because they were designed to run in relatively small size memory. Thus they can often not do much more than convert one program to another which could have been written by a more careful programmer. The PL.8 compiler, on the other hand, applies its optimization algorithms to addressing code, domain checking code, procedure linkage code, etc.
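The idea that each optimization maps an IL program to an equivalent IL program, so that the passes can be applied repeatedly and in any order, can be sketched as follows. Everything here is an assumption made for illustration: the IL is modeled as a list of `(dest, op, a, b)` tuples over symbolic registers named `r1`, `r2`, ..., each assigned once; storage variables use other names; and the two passes are simplified straight-line versions of common sub-expression elimination and dead code elimination, not the PL.8 algorithms.

```python
PURE_OPS = {"add", "sub", "mul"}   # ops with no side effects (assumed set)


def eliminate_common_subexpressions(il):
    """Reuse the first symbolic register that computed each (op, a, b)."""
    seen, rename, out = {}, {}, []
    for dest, op, a, b in il:
        a, b = rename.get(a, a), rename.get(b, b)
        key = (op, a, b)
        if op in PURE_OPS and key in seen:
            rename[dest] = seen[key]       # later uses read the earlier result
            continue
        if op in PURE_OPS:
            seen[key] = dest
        out.append((dest, op, a, b))
    return out


def eliminate_dead_code(il):
    """Drop computations whose results never reach a store."""
    needed, out = set(), []
    for dest, op, a, b in reversed(il):
        if op == "store" or dest in needed:
            out.append((dest, op, a, b))
            needed.update(x for x in (a, b) if isinstance(x, str))
    out.reverse()
    return out


def optimize(il, passes=(eliminate_common_subexpressions, eliminate_dead_code)):
    """Apply the passes repeatedly until the IL stops changing."""
    while True:
        new_il = il
        for run_pass in passes:
            new_il = run_pass(new_il)
        if new_il == il:
            return il
        il = new_il


program = [
    ("r1", "load", "a", None),
    ("r2", "load", "b", None),
    ("r3", "add", "r1", "r2"),
    ("r4", "add", "r1", "r2"),     # same computation as r3
    ("r5", "mul", "r3", "r4"),
    ("r6", "sub", "r1", "r2"),     # result never used
    (None, "store", "x", "r5"),
]
for instruction in optimize(program):
    print(instruction)
```

Loads are deliberately left out of `PURE_OPS` in this sketch, since a store may change the loaded value between two loads of the same variable. Because every pass takes IL and returns IL, reversing the order of the passes still converges to the same program for this input.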
The second compiler strategy which is different from conventional compilers is our approach to register allocation [3,4]. The IL, like that of most compilers, assumes an arbitrarily large number of registers. In fact the result of each different computation in the program is assigned a different (symbolic) register. The job for register allocation is simply to assign real registers to these symbolic registers. Conventional approaches use some subset of the real registers for special purposes (e.g. pointers to the stack, to the code, to the parameter list). The remaining set is assigned locally within a statement, or at best a basic block (e.g. a loop). Between these assignments results which are to be preserved are temporarily stored, and variables are redundantly loaded.

The 801 approach observes that the register assignment problem is equivalent to the graph coloring problem, where each symbolic register is a node and the real registers are different colors. If two symbolic registers have the property that there is at least one point in the program where both their values must be retained, we model that property on the graph as an edge between the two nodes.

- Given four byte instructions, other benefits accrue. Register fields in instructions are made five bits long so that the 801 can name 32 registers. (This aspect of 801 architecture makes it feasible to use the 801 to emulate other architectures which have 16 GPR's, since 16 801 registers are still available for emulator use.) Four byte instructions also allow the target register of every instruction to be named explicitly so that the input operands need not be destroyed. This facility is applied pervasively, as in "Shift Reg A Left by contents of Reg B and Store Result in Reg C". This feature of the architecture simplifies register allocation and eliminates many Move Register instructions.
- There are Compare and Trap instructions defined which allow the S/370 Compare - Branch Condition sequence to be executed in one machine cycle for those cases where the test is for an infrequently-encountered exception condition. These instructions are used to implement the run-time extent checking discussed earlier.
- Arithmetic is 32 bit two's complement. There are special instructions defined to allow MAX, MIN, and decimal add and subtract to be coded efficiently. There are also two instructions defined (Multiply Step and Divide Step) to allow two 32 bit words to be multiplied in 16 cycles (yielding a 64 bit product) and a 64 bit dividend to be divided by a 32 bit divisor in 32 cycles (yielding a 32 bit quotient and a 32 bit remainder).
- The 801 is a true 32 bit architecture, not a 16 bit architecture with extended registers. Addresses are 32 bits long; arithmetic is 32 bit two's complement; logical and shift instructions deal with 32 bit words (and can shift distances up to 32).

A useful way to reduce pathlength (and cache misses) is to define a rich set of immediate fields, but of course it is impossible to encode a general 32 bit constant to fit into an immediate field in a four byte instruction. The 801 defines the following subsets of such constants which meet most requirements:

• A 16 bit immediate field for arithmetic and address calculation (D field) which is interpreted as a two's complement signed integer. (Thus constants in the range ±2^15 can be represented immediately.)
• A 16 bit logical constant. Each logical operation has two immediate forms - upper and lower, so that in at most two instructions (cycles) logical operations can be performed using a 32 bit logical constant.
• An 11 bit encoding of a Mask (i.e. a substring of ones surrounded by zeros or zeros surrounded by ones). Thus for shift, insert, and isolate operations the substring can be defined immediately.
• A 16 bit immediate field for branch target calculation (D-field) which is interpreted as a signed two's complement offset from the address of the current instruction. (Thus a relative branch to and from anywhere within a 32K byte procedure can be specified immediately.)

- The 801 has a rich set of shift and insert instructions. These were developed to make device controller "microcode", emulator "microcode", and systems code very efficient. The functions, all available in one machine cycle, are:

• ring shift a register up to 31 positions (specified in another register or in an immediate field),
• using a mask (in another register or in an immediate field) merge this shifted word with all zeros (i.e. isolate the field) or with any other register (i.e. merge), or with the result of the previous shift (i.e. long shift),
• store this back into any other register or into storage (i.e. move character string).
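The ring shift, mask, isolate and merge functions can be modeled on 32 bit words as below. This is a behavioral sketch under stated assumptions, not the 801 instruction definitions: the function names are invented, bit 0 is taken to be the least significant bit, and the mask is described by a `(lo, hi, invert)` triple because the text does not spell out the actual 11 bit encoding.

```python
WORD = 0xFFFFFFFF   # 32 bit word


def rotate_left(word, n):
    """Ring shift a 32 bit word left by n positions (0..31)."""
    n &= 31
    return ((word << n) | (word >> (32 - n))) & WORD


def make_mask(lo, hi, invert=False):
    """Ones in bit positions lo..hi (bit 0 = least significant, an assumed
    convention), zeros elsewhere; invert gives zeros surrounded by ones."""
    mask = ((1 << (hi - lo + 1)) - 1) << lo
    return (mask ^ WORD) if invert else mask


def isolate(word, shift, mask):
    """Shift, then merge with all zeros: extracts a field."""
    return rotate_left(word, shift) & mask


def merge(word, shift, mask, other):
    """Shift, then take the masked bits from the shifted word and the
    remaining bits from another register: inserts a field."""
    return (rotate_left(word, shift) & mask) | (other & ~mask & WORD)


# Extract bits 16..23 of a word, right-justified.
print(hex(isolate(0x12345678, 16, make_mask(0, 7))))            # 0x34
# Insert the byte 0xAB into bits 8..15 of another word.
print(hex(merge(0xAB, 8, make_mask(8, 15), 0x11223344)))        # 0x1122ab44
```

Field extraction and insertion each reduce to one shift plus one masked combine, which is why a single-cycle shifter followed by a merge network covers so many byte and bit-field manipulations.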
(This last facility, storing the shifted and merged word back into storage, allows misaligned source and target character string moves to execute as fast as two characters/cycle.)

The immediate fields also include:

• A 26 bit immediate field specifying an offset from the address of the current instruction or an absolute address, so that branches between procedures, to supervisor services, or to "microcode subroutines" can be specified without having to establish addressability.

- Load and Store instructions are available in every combination of the following options:

• Load or Store,
• character, halfword, sign-extended halfword, fullword,
• Base + Index, or Base + Displacement effective address calculation. (Usage statistics for S/370 show low use for the full B + X + D form. Thus a three input adder did not seem warranted.)
• Store the effective address back into the base register (i.e. "autoincrement") or not.

Interrupts and I/O

I/O in the 801 prototype is controlled by a set of adapters which attach to the CPU and memory by two buses. The External Bus attaches the adapters to the CPU. It is used by software to send commands and receive status, by means of synchronous Read and Write instructions. Data is transmitted between the adapters and the 801 backing store through the MIO (Memory-I/O) bus. (As described above it is the responsibility of the software to synchronize the caches.)
Rather than support integrated and complex (multilevel) interrupt hardware, the 801 again moves to software functions that can be performed more efficiently by programming. Software on systems that provide, say, eight interrupt levels often finds this number inadequate as a distinguisher of interrupt handlers. Thus a software first level interrupt handler is programmed on top of the hardware, increasing the real time to respond. Moreover the requirement to support eight sets of registers results in these being stored in some fast memory rather than in logic on-chip. This results in a slower machine cycle. If the real time responsiveness of a system is measured realistically it must include not only the time to get to an interrupt handler but the time to process the interrupt, which clearly depends on the length of the machine cycle. Thus in a practical sense the 801 is a good real-time system.

- Branches are available with the following branch target specifications:

• absolute 26 bit address,
• Instruction Address Register + Displacement (signed 16 or 26 bit word offset),
• Register + Register.

Branch and Link forms are defined normally. But conditional branches are defined not only based upon the state of the Condition Register but on the presence or absence of a one in any bit position in any register.
(This allows the Test Under Mask - Branch Condition sequence in S/370 to be executed in one machine cycle (and no storage references) if the bit is already in a register. Again the power of global register allocation makes this more probable.)

Prototype Hardware

A hardware prototype has been built for an early version of the 801 architecture, out of MECL 10K DIP's. It runs at 1.1 cycles per instruction. (This number must be taken as an out-of-cache performance figure because the applications which currently run show hit ratios at close to 100% after the initial cache load.) We do not yet have multiple-user measurements.

Interrupt determination and priority handling is packaged outboard of the CPU chips in a special unit called the External Interrupt Controller (along with the system clocks, timers, and adapter locks). (This packaging decision allows other versions of 801 systems to choose different interrupt strategies without impacting the CPU design.) In this controller there are (logically) two bit vectors. The first, the Interrupt Request Vector (IRV), contains a bit for each device which may wish to interrupt the CPU (plus one each for the clocks, timers, and the CPU itself for simulating external interrupts). These bits are tied by lines to the devices.

The second vector, called the Interrupt Mask Vector (IMV), contains a bit corresponding to each bit in the IRV. The IMV is loaded by software in the CPU.
It dynamically establishes the priority levels of the interrupt requesters. If there is a one in a position in the IRV corresponding to a one in the corresponding position of the IMV, and the 801 CPU is enabled for interrupt, the CPU is interrupted.

On interrupt the CPU becomes disabled and unrelocated, and begins executing the First Level Interrupt Handler (FLIH) in lower memory. The FLIH stores the interrupted state, reads the IRV and determines the requester. Using this position number, it sends a new IMV (reflecting the priority of the requester) and branches to the interrupt handler for that requester, which executes enabled and relocated. Pathlengths for the FLIH are less than 100 instructions (and can be reduced for a subclass of fast-response interrupts), and less than 150 instructions for the dispatcher (when the interrupt handler completes).

The register file is capable of reading out any three and writing back any two registers within a single cycle. Thus the CPU is pipelined as follows:

- The first level of the pipeline decodes the instruction, reads two registers into the ALU, executes the ALU, and either latches the result or, for Load or Store instructions, sends the computed address to the cache. On a store instruction, the data word is also fetched from the register file and sent to the cache.
- The second level of the pipeline sends the latched result through the shifter, sets the condition register bits, and stores the result back into a register.
During this cycle also, if a word has been received from the cache as the result of a load instruction it is loaded into the register. (The hardware monitors register names to bypass the load when the result is being immediately used.)
- The cache is designed so that on a miss the requested word is sent directly to the CPU, thus reducing lockout while the cache line is being filled.

Internal Bus

We have, so far, described a CPU that must have the following (logical) buses to storage:

- a command bus to describe the function requested,
- an address bus,
- a source data bus for Stores,
- a target data bus for Loads.

We observed that other functions might be implemented outboard of the CPU and could attach to the CPU via these same buses (e.g. floating point). Therefore we exposed these buses in an 801 instruction, called Internal Bus Operation. This instruction has operands to name the following:

- the bus unit being requested,
- the command,
- the two operands (B,D, or B,X) which will be added to produce the output on the address bus,
- the source register,
- the target register, if needed.

Performance Comparisons

Figures 1 and 2 show some early performance comparisons. Since the compiler will produce object code for the S/370 as well as the 801, these comparisons are possible for the same source programs and the same compiler. We use the number of cycles in the inner loops, and the number of storage references in the inner loops to approximate dynamic performance.

Figure 1 shows results for an in-memory sort procedure.
Figure 2 shows the results for randomly selected modules from the compiler itself. Note that as the modules get larger the power of global register allocation results in fewer storage references. Note also that, in spite of the fact that the 801 contains no complex instructions, the 801 modules contain fewer instructions and fewer instruction executions. This is because the complex instructions are generally very infrequent whereas the 801 has a more powerful set of primitive instructions.

The Internal Bus Operation instruction also has three flags:

- privileged command or not,
- target register required or not,
- address bus sent back to Base register, or not.

Having defined this generic instruction we gave bus unit names to the instruction and data caches, the external interrupt controller, the timer, and the relocate controller, and assigned the IBO op code to all instructions directed to these units.

Figure 1. Performance Comparisons
Program: Heap Sort programmed in PL.8

                                In Inner Loop:
CPU         Code Size (Bytes)   No. of Instructions   Data Ref's   Cycles   Cycles/Inst.
S/370-168   236                 33                    8            56       1.7
801         240                 28                    6            31       1.1

Figure 2
Programs: Randomly Selected Modules in PL.8 Compiler

                                           Dynamic Comparisons:
Module (in increasing   Code Size (Bytes)   Instructions Executed   Data Storage References
size order)             801/S/370           801/S/370               801/S/370
FIND                    1.02                .91                     .60
SEARCHV                 .93                 .83                     .38
LOAD S                  .83                 .91                     .43
P2 EXTS                 1.00                1.00                    .57
SORT S1                 .86                 .78                     .59
PM ADD1                 .86                 .96                     .63
ELMISS                  .87                 .86                     .69
PM GKV                  .92                 .76                     .46
P5DBG                   .98                 .81                     .52
DESCRPT                 .86                 .75                     .42
ENTADD                  .79                 .76                     .42
Total                   .90                 .80                     .50

References

[1] Patterson, David A. and Séquin, Carlo H., "RISC-I: A Reduced Instruction Set VLSI Computer," Eighth Annual Symposium on Computer Architecture, May, 1981.
[2] Cocke, John and Markstein, Peter W., "Measurement of Program Improvement Algorithms," Information Processing 80, North-Holland Publishing Co., 1980.
[3] Chaitin, Gregory J. et al, "Register Allocation via Coloring," Computer Languages, Vol. 6, pp. 47-57, 1981, Great Britain.
[4] Chaitin, Gregory J., "Register Allocation and Spilling via Coloring," IBM Research Report RC9124, 1981.
[5] Markstein, V., Cocke, J., and Markstein, P., "Optimization of Range Checking," IBM Research Report RC8456, 1980.

Conclusions

While we do not have nearly enough measurements to draw hard conclusions, the 801 group has developed a set of intuitive principles which seem to hold consistently:

- At least in the low-to-mid range of processor complexity, a general purpose, register-oriented instruction set can be at least as good as any special vertical microcode set. Thus there should be only one hard-wired instruction set, and it should be directly available to the compiler.
- A good global register allocator can effectively use a large number of general purpose registers. Therefore all the registers which the CPU can afford to build in hardware should be directly and simultaneously addressable. Stack machines, machines that hide some of the registers to improve CALL performance, multiple-interrupt level machines, all seem to make poorer use of the available registers.
- Protection is far more effectively provided at a level where the source language program is understood.
- It is easy to design and build a fast, cheap CPU, and will be more so as VLSI evolves. The harder problem is to develop software, architecture and hardware which does not keep the CPU idling due to storage access.

Acknowledgments

The seminal idea for the 801 and many subsequent concepts are due to John Cocke. In six years, the list of contributors has grown too large to list here.
The following people have been with the project from beginning until the present and are responsible for most of the design and implementation.

Hardware: Frank Carrubba, manager. Paul Stuckert, Norman Kreitzer, Richard Freitas, Kenneth Case.
Software: Marc Auslander, manager.
Compiler: Martin Hopkins, manager. Richard Goldberg, Peter Oden, Philip Owens, Peter Markstein, Gregory Chaitin.
Control Program: Richard Oehler, manager. Albert Chang.

Joel Birnbaum was the first manager of the project and later a constant supporter. Bill Worley also contributed significantly through the years.