Clustering Stat 430 Fall 2011

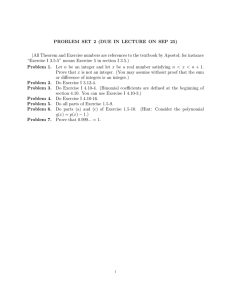

Outline
• Distance Measures
• Linkage
• Hierarchical Clustering
• KMeans

Data set: Letters
• from the UCI repository: Letters Data
• 20,000 instances of letters
• Variables:
 1. lettr   capital letter (26 values from A to Z)
 2. x-box   horizontal position of box (integer)
 3. y-box   vertical position of box (integer)
 4. width   width of box (integer)
 5. high    height of box (integer)
 6. onpix   total # on pixels (integer)
 7. x-bar   mean x of on pixels in box (integer)
 8. y-bar   mean y of on pixels in box (integer)
 9. x2bar   mean x variance (integer)
10. y2bar   mean y variance (integer)
11. xybar   mean x y correlation (integer)
12. x2ybr   mean of x * x * y (integer)
13. xy2br   mean of x * y * y (integer)
14. x-ege   mean edge count left to right (integer)
15. xegvy   correlation of x-ege with y (integer)
16. y-ege   mean edge count bottom to top (integer)
17. yegvx   correlation of y-ege with x (integer)

Data set: Letters
Data Set Information (UCI repository):
Objective: identify each of a large number of black-and-white rectangular pixel displays as one of the 26 capital letters in the English alphabet. The character images were based on 20 different fonts, and each letter within these 20 fonts was randomly distorted to produce a file of 20,000 unique stimuli. Each stimulus was converted into 16 primitive numerical attributes (statistical moments and edge counts), which were then scaled to fit into a range of integer values from 0 through 15. We typically train on the first 16000 items and then use the resulting model to predict the letter category for the remaining 4000. See the article cited above for more details.

Clustering
• part of unsupervised classification (i.e. we do not use or have a dependent variable)
• classification is based on object similarity
• What is similar?

... tools are used to assess the results of numerical methods. Section 5.5 summarizes the chapter and revisits the data analysis strategies used in the examples.
A good companion to the material presented in this chapter is Venables & Ripley (2002), which provides data and code for practical examples of cluster analysis using R.

5.1 Background

Before we can begin finding groups of cases that are similar, we need to decide on a definition of similarity. How is similarity defined? Consider a dataset with three cases and four variables, described in matrix format as

$$
X = \begin{bmatrix} X_1 \\ X_2 \\ X_3 \end{bmatrix}
  = \begin{bmatrix} 7.3 & 7.6 & 7.7 & 8.0 \\ 7.4 & 7.2 & 7.3 & 7.2 \\ 4.1 & 4.6 & 4.6 & 4.8 \end{bmatrix},
$$

which is plotted in Fig. 5.2. The Euclidean distance between two cases (rows of the matrix) is defined as

$$
d_{\rm Euc}(X_i, X_j) = \|X_i - X_j\|, \qquad i, j = 1, \ldots, n,
$$

where $\|X_i\| = \sqrt{X_{i1}^2 + X_{i2}^2 + \ldots + X_{ip}^2}$. For example, the Euclidean distance between cases 1 and 2 in the above data is

$$
\sqrt{(7.3 - 7.4)^2 + (7.6 - 7.2)^2 + (7.7 - 7.3)^2 + (8.0 - 7.2)^2} = 1.0.
$$

For the three cases, the interpoint Euclidean distance matrix is ...

Distances
• three objects, values for one ‘T’, one ‘I’ and one ‘M’

       V1 V2 V3 V4 V5 V6 V7 V8 V9 V10 V11 V12 V13 V14 V15 V16 V17
    1   T  2  8  3  5  1  8 13  0   6   6  10   8   0   8   0   8
    2   I  5 12  3  7  2 10  5  5   4  13   3   9   2   8   4  10
    10  M 11 15 13  9  7 13  2  6   2  12   1   9   8   1   1   8

$$
X = \begin{bmatrix} X_1 \\ X_2 \\ X_3 \end{bmatrix}
  = \begin{bmatrix}
      2 & 8 & 3 & 5 & 1 & 8 & 13 & 0 & 6 & 6 & 10 & 8 & 0 & 8 & 0 & 8 \\
      5 & 12 & 3 & 7 & 2 & 10 & 5 & 5 & 4 & 13 & 3 & 9 & 2 & 8 & 4 & 10 \\
      11 & 15 & 13 & 9 & 7 & 13 & 2 & 6 & 2 & 12 & 1 & 9 & 8 & 1 & 1 & 8
    \end{bmatrix}
$$

    > dist(uci[c(1,2,10),-1])
              1        2
    2  15.81139
    10 26.68333 17.32051

i.e. I is closer to T than to M (by a sliver), M and T are quite far apart

Linkage
• Compute a distance matrix of all objects
• Now we start to connect closest objects
• First step is the same: combine the two closest objects into one cluster
• Next step: combine the two next closest objects or clusters
• How do we define the distance to a cluster?
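As a rough illustration of the first Linkage steps, the sketch below recomputes the Euclidean distances between the T, I and M rows in plain Python and picks the closest pair, which is the pair an agglomerative method would merge first. The slides themselves use R's dist; the names `letters`, `euclidean` and `closest_pair` are illustrative and not from the lecture.

```python
import math
from itertools import combinations

# The 16 numeric attributes of rows 1, 2 and 10 of the letters data,
# copied from the table on the Distances slide.
letters = {
    "T": [2, 8, 3, 5, 1, 8, 13, 0, 6, 6, 10, 8, 0, 8, 0, 8],
    "I": [5, 12, 3, 7, 2, 10, 5, 5, 4, 13, 3, 9, 2, 8, 4, 10],
    "M": [11, 15, 13, 9, 7, 13, 2, 6, 2, 12, 1, 9, 8, 1, 1, 8],
}


def euclidean(a, b):
    """Euclidean distance between two equal-length numeric vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Distance for every unordered pair of objects (the lower triangle of
# the distance matrix).
distances = {
    (p, q): euclidean(letters[p], letters[q])
    for p, q in combinations(letters, 2)
}

# The first agglomeration step merges the two closest objects.
closest_pair = min(distances, key=distances.get)

for pair, d in distances.items():
    print(pair, round(d, 5))
print("closest:", closest_pair)
```

The three distances agree with the R output (15.81139 for T and I, 26.68333 for T and M, 17.32051 for I and M), so T and I would be combined into the first cluster.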
The rule that defines the distance to a cluster is called linkage.

Single Linkage
• Depending on different mechanisms for linkage, we find very different clusters
• Single Linkage: the distance between a cluster and an object or another cluster is the minimal distance between any of their elements.
• “My friend’s friend is my friend”
• This leads to long and stringy clusters

Complete Linkage
• The distance between two clusters V and W is defined as the maximum distance between any of the cluster elements
• This leads to very tight clusters

Average Linkage
• The distance between two clusters V and W is defined as the average distance between any of the cluster elements:

$$
D(V, W) = \frac{1}{|V|} \frac{1}{|W|} \sum_{x \in V,\, y \in W} |x - y|
$$

• Cluster size is a compromise between single and complete linkage

Ward’s Method
• The distance between two clusters V and W is defined as the increase in the error sum of squares (i.e. variance) if the two clusters are merged.

$$
ESS(X) = \sum_{i=1}^{n} |X_i - \bar{X}|^2
$$

$$
D(V, W) = ESS(V \cup W) - (ESS(V) + ESS(W))
$$

• This results in spherical clusters

KMeans
• KMeans is a non-hierarchical clustering approach.
• Randomly choose K centers of the data (seeds).
• Each data record is assigned to the closest center.
• Centers are re-calculated based on members (centroids are means or medians).
• Repeat the previous two steps until cluster assignments no longer change.
• KMeans can deal with quite large data sets
• problematic: K needs to be known
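The KMeans steps above can be sketched in a few lines of plain Python. This is a toy version under stated assumptions, not the implementation used in the course: centroids are means, the data are eight made-up 2-D points, and the `seeds` and `max_iter` parameters are hypothetical conveniences (explicit seeds make the run reproducible).

```python
import math
import random


def euclidean(a, b):
    """Euclidean distance between two equal-length numeric vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest(point, centers):
    """Index of the center closest to `point` (ties go to the lowest index)."""
    return min(range(len(centers)), key=lambda j: euclidean(point, centers[j]))


def kmeans(points, k, seeds=None, max_iter=100):
    """Toy K-means with mean centroids; returns (centers, labels)."""
    # Seeds: K randomly chosen data records unless given explicitly.
    centers = list(seeds) if seeds is not None else random.sample(points, k)
    labels = None
    for _ in range(max_iter):
        # Assign every record to its closest center.
        new_labels = [nearest(p, centers) for p in points]
        if new_labels == labels:  # assignments no longer change
            break
        labels = new_labels
        # Re-calculate each center as the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its old center
                centers[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return centers, labels


# Made-up data: two well-separated groups of four points each.
points = [(1, 1), (1, 2), (2, 1), (2, 2), (8, 8), (8, 9), (9, 8), (9, 9)]
centers, labels = kmeans(points, k=2, seeds=[(1, 1), (9, 9)])
print(centers, labels)
```

With one seed in each group the run stops after the second pass, with centers (1.5, 1.5) and (8.5, 8.5). Calling `kmeans(points, k=2)` without seeds picks them at random, and different seeds can end in different partitions, so in practice several random starts are usually compared.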
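Looking back at the linkage slides, the four cluster-to-cluster distances (single, complete, average, Ward) can be compared side by side on a small made-up example. The function names and the two clusters `V` and `W` are assumptions for illustration; the formulas follow the D(V, W) and ESS definitions given on the slides.

```python
import math
from itertools import product


def euclidean(a, b):
    """Euclidean distance between two equal-length numeric vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def single_linkage(V, W):
    """Minimal distance between any element of V and any element of W."""
    return min(euclidean(x, y) for x, y in product(V, W))


def complete_linkage(V, W):
    """Maximal distance between any element of V and any element of W."""
    return max(euclidean(x, y) for x, y in product(V, W))


def average_linkage(V, W):
    """Average of all |V| * |W| pairwise distances."""
    return sum(euclidean(x, y) for x, y in product(V, W)) / (len(V) * len(W))


def ess(X):
    """Error sum of squares: squared distances of the points to their mean."""
    mean = [sum(c) / len(X) for c in zip(*X)]
    return sum(sum((xi - mi) ** 2 for xi, mi in zip(x, mean)) for x in X)


def ward_distance(V, W):
    """Increase in the error sum of squares if V and W are merged."""
    return ess(V + W) - (ess(V) + ess(W))


# Two made-up clusters of two points each.
V = [(0.0, 0.0), (0.0, 2.0)]
W = [(3.0, 0.0), (3.0, 2.0)]

print("single  ", single_linkage(V, W))
print("complete", complete_linkage(V, W))
print("average ", average_linkage(V, W))
print("ward    ", ward_distance(V, W))
```

For these two clusters the single-linkage distance is 3, the complete-linkage distance is the square root of 13, the average lies between the two, and merging raises the error sum of squares from 4 to 13, giving a Ward distance of 9.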