Bayes in Practice & Random Variables stat 430 Heike Hofmann


Outline

• Bernoulli Experiments

• Discrete Random Variables

• Expected Value

Bayes

Tree Diagrams

Visualization of Total Probability

24

The probability of a nodeCHAPTER

is

given

as

the

product

of

all

B1 P(A| B1)

A B1

probabilities along the edges

P(B )

B2 P(A| B2)

back to the root (Definition

A B2

P(B )

of conditional probability)

1

2

P(Bk)

Bk

P(A| Bk)

cover

A

Bk

The probability of an event

The probability

of each

is the sum

of node

the in the tree ca

tiplying all probabilities from the root to th

probabilities of all final

diagrams).

Summing

up all the involved

probabilities in the l

nodes (leaves)

rule).

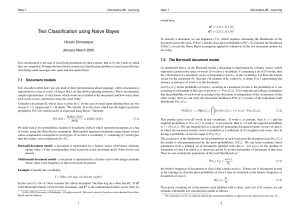

If the set B1 , . . . , Bk is a cover of the sample space Ω

for an event A by (cf. fig.??):
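The tree rule can be sketched in a few lines of Python: multiply along each root-to-leaf path, then add up the leaves that belong to A. The helper name `total_probability` and the three-set cover with its probabilities are made up for illustration; exact `Fraction`s are used so that the check "the P(B_i) sum to 1" is exact.

```python
from fractions import Fraction

def total_probability(priors, likelihoods):
    """P(A) = sum_i P(B_i) * P(A | B_i) for a cover B_1, ..., B_k (hypothetical helper).

    priors[i]      -- P(B_i), edge probability from the root to B_i
    likelihoods[i] -- P(A | B_i), edge probability from B_i to the leaf A ∩ B_i
    """
    if len(priors) != len(likelihoods):
        raise ValueError("need one P(A | B_i) per set B_i in the cover")
    if sum(priors) != 1:
        raise ValueError("the P(B_i) of a cover must sum to 1")
    # probability of each leaf A ∩ B_i: product along its path back to the root
    leaves = [pb * pa_given_b for pb, pa_given_b in zip(priors, likelihoods)]
    # the event A collects all leaves A ∩ B_i
    return sum(leaves)

# made-up cover B_1, B_2, B_3 and conditional probabilities P(A | B_i)
priors = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)]
likelihoods = [Fraction(1, 10), Fraction(1, 5), Fraction(1, 2)]
print(total_probability(priors, likelihoods))  # 1/5
```

The leaves here are 1/20, 1/15 and 1/12, which add up to 12/60 = 1/5.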

Bayes’ Rule

• Bayes’ Theorem: Let $B_1, \ldots, B_k$ be a cover of the sample space Ω. Then

$$P(B_j \mid A) = \frac{P(B_j \cap A)}{P(A)} = \frac{P(A \mid B_j) \cdot P(B_j)}{\sum_{i=1}^{k} P(A \mid B_i) \cdot P(B_i)}$$

for all j and every event A ⊂ Ω with P(A) > 0.
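Bayes’ rule turns the leaves of the tree into posterior probabilities: each joint probability P(A ∩ B_j) is divided by their sum P(A). A minimal sketch, with the hypothetical helper `bayes_posteriors` and a made-up three-set cover:

```python
from fractions import Fraction

def bayes_posteriors(priors, likelihoods):
    """Return [P(B_j | A) for j = 1..k] for a cover B_1, ..., B_k (hypothetical helper).

    P(B_j | A) = P(A | B_j) P(B_j) / sum_i P(A | B_i) P(B_i)
    """
    joint = [pa_b * pb for pa_b, pb in zip(likelihoods, priors)]  # P(A ∩ B_j)
    p_a = sum(joint)  # denominator: law of total probability
    if p_a == 0:
        raise ValueError("Bayes' rule needs P(A) > 0")
    return [pj / p_a for pj in joint]

# made-up cover: P(B_i) and P(A | B_i)
priors = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)]
likelihoods = [Fraction(1, 10), Fraction(1, 5), Fraction(1, 2)]
post = bayes_posteriors(priors, likelihoods)
print([str(x) for x in post])  # ['1/4', '1/3', '5/12']
```

The posteriors again form a probability distribution over the cover (they sum to 1), while B_3, the least likely set a priori, becomes the most likely one once A is observed.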

Example: Forensic Analysis

Setup: DNA is found at a crime scene.

Probability of a (random) DNA match is 1 in 10 million.

The DNA test procedure is sometimes faulty:

false negative: P(test neg | match) = 0.0000001

false positive: P(test pos | no match) = 0.000001

Assume the police have a positive test result for a suspect.

What is the probability that they have found the perpetrator?
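One way to work the example is to plug the slide’s numbers into Bayes’ rule with the cover {match, no match} and the event A = {test positive}. This sketch assumes (not stated on the slide) that before testing the suspect is effectively a random person, so the prior is P(match) = 1 in 10 million, and that a true match means the suspect is the perpetrator.

```python
# numbers from the slide
p_match = 1e-7               # P(match): random DNA match, 1 in 10 million
p_neg_given_match = 1e-7     # false negative: P(test neg | match)
p_pos_given_nomatch = 1e-6   # false positive: P(test pos | no match)

p_pos_given_match = 1 - p_neg_given_match
p_nomatch = 1 - p_match

# law of total probability over the cover {match, no match}
p_pos = p_pos_given_match * p_match + p_pos_given_nomatch * p_nomatch

# Bayes' rule: P(match | test pos)
p_match_given_pos = p_pos_given_match * p_match / p_pos
print(f"P(match | test pos) = {p_match_given_pos:.4f}")  # 0.0909, i.e. about 1/11
```

Even with a very accurate test, only about 1 in 11 positive results points to the right person: false positives (about 1 in a million) are ten times more common than true matches (1 in 10 million).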

Example: Monty Hall Problem

Setup:

- Three doors: one car and two goats are hidden behind them

- Game show contestant has to pick a door (but doesn’t open it yet)

- Host opens one of the other doors to reveal a goat

- Final choice for contestant: stick with the original pick or switch to the other unopened door?

What is the probability of winning the car?
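The tree-diagram approach gives the answer exactly: enumerate where the car is and which door the host opens, multiply along each path, and add up the winning leaves for each strategy. The sketch assumes the standard rules, which the slide only implies: the car is placed uniformly at random, the host always opens an unpicked door with a goat, and he chooses uniformly when both unpicked doors hide goats.

```python
from fractions import Fraction

def monty_hall_exact():
    """Return exact (P(win | stick), P(win | switch)) by summing tree leaves."""
    doors = [0, 1, 2]
    pick = 0  # by symmetry, let the contestant pick door 0
    p_stick = Fraction(0)
    p_switch = Fraction(0)
    for car in doors:
        p_car = Fraction(1, 3)  # assumption: car placed uniformly at random
        # assumption: host opens a door that is neither the pick nor the car
        host_options = [d for d in doors if d != pick and d != car]
        for opened in host_options:
            # leaf probability = product along the path (car, opened door)
            p_leaf = p_car * Fraction(1, len(host_options))
            other = next(d for d in doors if d != pick and d != opened)
            if car == pick:
                p_stick += p_leaf
            if car == other:
                p_switch += p_leaf
    return p_stick, p_switch

stick, switch = monty_hall_exact()
print(stick, switch)  # 1/3 2/3
```

Switching wins whenever the first pick was a goat, which happens with probability 2/3.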

Bernoulli & Binomial

Bernoulli Experiments

• Outcome: success or failure; 0 or 1

• P(success) = p, P(failure) = 1 - p

• A sequence of (independent) repetitions is called a sequence of Bernoulli experiments
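A single Bernoulli experiment can be simulated by comparing a uniform random number with p; repeating it independently gives a sequence of Bernoulli experiments. This is only a simulation sketch: the helper name `bernoulli`, the seed and p = 0.3 are arbitrary choices for illustration.

```python
import random

def bernoulli(p, rng):
    """One Bernoulli experiment: 1 (success) with probability p, else 0 (failure)."""
    return 1 if rng.random() < p else 0

rng = random.Random(430)  # fixed seed so the run is reproducible
p = 0.3
trials = [bernoulli(p, rng) for _ in range(100_000)]  # independent repetitions
print(sum(trials) / len(trials))  # relative frequency of success, close to p
```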

Sequence of Bernoulli Experiments

• k repetitions of a Bernoulli experiment

• Write the sample space as the set of k-digit binary numbers:

$$\Omega_k = \{00\ldots00,\ 00\ldots01,\ 00\ldots10,\ 00\ldots11,\ \ldots,\ 11\ldots00,\ 11\ldots01,\ 11\ldots10,\ 11\ldots11\}$$
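The sample space Ω_k can be listed directly: each outcome is a k-digit binary string (1 = success, 0 = failure), so there are 2^k outcomes. A short sketch using `itertools.product`; the helper name `sample_space` is hypothetical.

```python
from itertools import product

def sample_space(k):
    """Omega_k: all k-digit binary strings, ordered 00...00, 00...01, ..., 11...11."""
    return ["".join(bits) for bits in product("01", repeat=k)]

omega3 = sample_space(3)
print(omega3)       # ['000', '001', '010', '011', '100', '101', '110', '111']
print(len(omega3))  # 8 = 2**3
```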

Probability Assignment in Bernoulli Spaces

• If the experiments are independent: for a sequence s in the sample space $\Omega_k$,

$$P(s) = p^i (1-p)^{k-i}$$

if s has i successes and k − i failures.

(Hint: substitute 0s by 1 − p and 1s by p, then multiply.)

• The number of sequences with exactly i successes is $\binom{k}{i}$.
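The hint translates into code almost literally: replace every 1 by p and every 0 by 1 − p and multiply. The sketch below (hypothetical helper `prob_sequence`, made-up p = 1/4 and k = 4) also checks that the probabilities over Ω_k add up to 1 and that the number of sequences with i successes is C(k, i).

```python
from fractions import Fraction
from itertools import product
from math import comb

def prob_sequence(s, p):
    """P(s) for a 0/1 string s of independent Bernoulli experiments:
    substitute each '1' by p and each '0' by 1 - p, then multiply."""
    result = Fraction(1)
    for digit in s:
        result *= p if digit == "1" else 1 - p
    return result

p, k = Fraction(1, 4), 4
omega = ["".join(bits) for bits in product("01", repeat=k)]  # Omega_k

print(prob_sequence("1010", p))  # p^2 (1-p)^2 = 9/256

# the probabilities of all sequences in Omega_k add up to 1
print(sum(prob_sequence(s, p) for s in omega))  # 1

# number of sequences with exactly i successes equals C(k, i)
counts = [sum(1 for s in omega if s.count("1") == i) for i in range(k + 1)]
print(counts, [comb(k, i) for i in range(k + 1)])  # [1, 4, 6, 4, 1] [1, 4, 6, 4, 1]
```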

Binomial distribution

Let X be the number of successes in n independent Bernoulli experiments with P(success) = p. Then

$$P(X = k) = \binom{n}{k} p^k (1-p)^{n-k}.$$
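The binomial formula is exactly "number of sequences with k successes" times "probability of one such sequence". The sketch below compares it with a brute-force sum over all 0/1 sequences of length n; the helper names and the values n = 5, p = 1/3 are chosen only for illustration.

```python
from fractions import Fraction
from itertools import product
from math import comb

def binomial_pmf(k, n, p):
    """P(X = k) = C(n, k) p^k (1 - p)^(n - k) for the number of successes X."""
    return comb(n, k) * p**k * (1 - p)**(n - k)

def brute_force(k, n, p):
    """Add up P(s) over all 0/1 sequences s of length n with exactly k successes."""
    return sum(
        (p**k * (1 - p)**(n - k) for bits in product((0, 1), repeat=n) if sum(bits) == k),
        Fraction(0),
    )

n, p = 5, Fraction(1, 3)
pmf = [binomial_pmf(k, n, p) for k in range(n + 1)]
print([str(v) for v in pmf])
# ['32/243', '80/243', '80/243', '40/243', '10/243', '1/243']
```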

Random Variables

• Definition: A function X : Ω → ℝ is called a random variable.

• Image of X: im(X) = X(Ω) = the set of all possible values X can take.

• Examples: # heads in 10 throws, # of songs from the 80s in 1 h of LITE 104.1 (or KURE 88.5 FM), winnings in darts
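A random variable is nothing more than a function on the sample space. The first example (# heads) can be sketched with 3 throws instead of 10 to keep the output short; outcomes are written as binary strings with 1 = heads, which is an encoding choice for this illustration.

```python
from itertools import product

# Omega for 3 coin throws, written as binary strings (1 = heads)
omega = ["".join(bits) for bits in product("01", repeat=3)]

def X(s):
    """Random variable X: Omega -> R, the number of heads in the outcome s."""
    return s.count("1")

image = sorted({X(s) for s in omega})  # im(X) = X(Omega)
print(image)  # [0, 1, 2, 3]
```

Eight outcomes are mapped to only four values, so different outcomes (such as 011 and 101) can share the same value of X.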

Discrete R.V.s

• If the image of a random variable is finite (or countably infinite), the random variable is called a discrete random variable.

• Probability mass function (pmf): the function $p_X(x) := P(X = x)$ is called the probability mass function of X.

• Theorem (properties of a pmf): $p_X$ is a probability mass function if and only if it has two main properties:

(i) all values must be between 0 and 1: $0 \le p_X(x) \le 1$ for all $x \in \{x_1, x_2, \ldots\} = im(X)$

(ii) the sum of all values is 1: $\sum_i p_X(x_i) = 1$
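For a finite image, the two properties can be checked mechanically. The sketch stores a pmf as a dict {x_i: p_X(x_i)} (a representation chosen here, not from the slides) and uses exact `Fraction`s so that property (ii) can be tested with `==`; a countably infinite image cannot be checked this way.

```python
from fractions import Fraction

def is_pmf(pmf):
    """Check the two properties of a pmf given as a dict {x_i: p_X(x_i)}:
    (i) 0 <= p_X(x) <= 1 for every x, (ii) the values sum to 1."""
    values = list(pmf.values())
    return all(0 <= v <= 1 for v in values) and sum(values) == 1

# pmf of X = # heads in 3 fair coin throws
heads3 = {0: Fraction(1, 8), 1: Fraction(3, 8), 2: Fraction(3, 8), 3: Fraction(1, 8)}
print(is_pmf(heads3))                                   # True
print(is_pmf({0: Fraction(1, 2), 1: Fraction(1, 3)}))   # False: values sum to 5/6
```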

Expectation

Expected Value

• The expected value of a random variable X is

$$E[X] = \sum_i x_i \cdot p_X(x_i) =: \mu$$

• As we will see, the expected value is the long-term average when we repeat the same experiment over and over.

• For an additional function h, we get:

$$E[h(X)] = \sum_i h(x_i) \cdot p_X(x_i)$$

• With $h(x) = (x - \mu)^2$ this gives the variance:

$$Var[X] = \sum_i (x_i - E[X])^2 \cdot p_X(x_i)$$
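Both formulas are weighted sums over the image of X, so one helper covers E[X], E[h(X)] and the variance. The sketch uses the hypothetical helper `expectation` on a Binomial(4, 1/2) pmf (e.g. # heads in 4 fair coin throws); the results can be checked against the known binomial values E[X] = np = 2 and Var[X] = np(1 − p) = 1.

```python
from fractions import Fraction
from math import comb

def expectation(pmf, h=lambda x: x):
    """E[h(X)] = sum_i h(x_i) * p_X(x_i) for a pmf given as a dict {x_i: p_X(x_i)}."""
    return sum(h(x) * px for x, px in pmf.items())

# X ~ Binomial(n=4, p=1/2), e.g. # heads in 4 fair coin throws
n, p = 4, Fraction(1, 2)
pmf = {k: comb(n, k) * p**k * (1 - p)**(n - k) for k in range(n + 1)}

mu = expectation(pmf)                          # E[X]
second = expectation(pmf, lambda x: x**2)      # E[X^2]
var = expectation(pmf, lambda x: (x - mu)**2)  # Var[X] = E[(X - mu)^2]
print(mu, second, var)  # 2 5 1
```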