Maps and Time Series Stat 579 Heike Hofmann

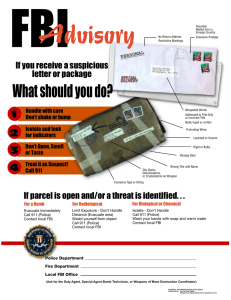

advertisement


Outline

• Melting and Casting

• Maps: polygons, choropleth

• Time series

Warm-up

• Start R and load data ‘fbi’ from http://www.hofroe.net/stat579/crimes-2012.csv

• This data set contains number of crimes by type for each state in the U.S.

• Investigate which states have the highest number of crimes (almost independently of type)

• Pick one state and crime type and plot a time series

getting ready for loops

• Let’s concentrate on the years since 2000

• Pick a state and fit a model (use lm) for the number of Burglaries over time (i.e. lm(Burglary~Year))

• Save the resulting object. Investigate it with your poking and prodding functions.

• Extract the coefficients (intercept and slope) from the model

• Repeat for another state.

• How can we extract coefficients for all states?

Iterations

• Want to run the same block of code multiple times:

    for (i in allstates) {
      onestate <- subset(fbi, state==i & Year >= 2000)
      model <- lm(Burglary~Year, data=onestate)
      print(coef(model))
    }

• The lines between the braces are the block of commands; print(coef(model)) produces the output

• This is called a loop or iteration
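The loop above only prints the coefficients. A minimal sketch of how they could be stored instead is shown below. It uses a small made-up data frame `toy` (two hypothetical states "A" and "B" with invented counts) in place of the fbi data, so the column values and the result table `coefs` are assumptions for illustration only.

```r
# Toy stand-in for the fbi data: two hypothetical states, made-up counts.
toy <- data.frame(
  state    = rep(c("A", "B"), each = 6),
  Year     = rep(2000:2005, times = 2),
  Burglary = c(100 + 5 * (0:5), 300 - 10 * (0:5))
)

allstates <- unique(toy$state)

# Housekeeping before the loop: allocate a place to store the results.
coefs <- data.frame(state = allstates, intercept = NA_real_, slope = NA_real_)

for (i in seq_along(allstates)) {
  onestate <- subset(toy, state == allstates[i] & Year >= 2000)
  model <- lm(Burglary ~ Year, data = onestate)
  # coef(model) returns the intercept first, then the slope for Year.
  coefs[i, c("intercept", "slope")] <- coef(model)
}

coefs
```

Looping over an index rather than over the state names makes it easy to write each result into the matching row of `coefs`.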

Why should we avoid loops?

• speed of for-loops is still an issue

• main reason: lots of error-prone housekeeping chores before and after the ‘meat’
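To illustrate what avoiding the housekeeping can look like, here is a hedged sketch using a split-apply-combine pattern in base R. The data frame `toy` and the helper `fit_one` are made up for this example and are not part of the fbi data or the original slides.

```r
# Toy stand-in for the fbi data: two hypothetical states, made-up counts.
toy <- data.frame(
  state    = rep(c("A", "B"), each = 6),
  Year     = rep(2000:2005, times = 2),
  Burglary = c(100 + 5 * (0:5), 300 - 10 * (0:5))
)

# Hypothetical helper: fit the model for one state's rows, return coefficients.
fit_one <- function(d) coef(lm(Burglary ~ Year, data = d))

# split() cuts the data into one piece per state, lapply() fits each piece,
# and rbind() stacks the coefficient vectors into a matrix.
fits  <- lapply(split(toy, toy$state), fit_one)
coefs <- do.call(rbind, fits)

coefs
```

No result container has to be allocated and no index has to be tracked; the only code that remains is the model fit itself.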

fbi exploration

• Plot a scatterplot of population size against number of violent crimes in 2012. What is your conclusion? How do things change in 2011?

• Plot population against number of burglaries in 2012. What is your conclusion there?

• What should we rather look at?

Reshaping Data

• Two step process:

• melt: get data into a “convenient” shape, i.e. one that is particularly flexible

• cast: cast data into new shape(s) that are better suited for analysis

melt.data.frame(data, id.vars, measure.vars, na.rm = F, ...)

• id.vars: all identifiers (keys) and qualitative variables

• measure.vars: all quantitative variables

[Diagram: the original data has columns key, X1, X2, X3, X4, X5, divided into id.vars and measure.vars; the molten form is “long & skinny”, with the measured columns X1 to X5 stacked below each other next to the key]
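As a small illustration of melting, the sketch below applies `melt()` from the reshape2 package to a made-up wide table. The data frame `wide` and its values are invented for this example; only the column roles (identifiers versus measured counts) mirror the description above.

```r
library(reshape2)

# Made-up wide data: one row per (hypothetical) state, one column per crime type.
wide <- data.frame(
  State      = c("A", "B"),
  Abbr       = c("AA", "BB"),
  Population = c(1000, 2000),
  Burglary   = c(10, 40),
  Robbery    = c(5, 8)
)

# Identifiers stay as columns; the measured columns are stacked into
# a 'variable' column (the former column name) and a 'value' column.
molten <- melt(wide,
               id.vars      = c("State", "Abbr", "Population"),
               measure.vars = c("Burglary", "Robbery"))

molten
```

Two states times two measured variables give four rows in the molten form.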

Casting

• Function cast

dcast(dataset, rows ~ columns, aggregate)

[Diagram: the cast table is laid out by the row variables and the column variables; each cell holds aggregate(data)]

• Data aggregation sometimes is just a transformation

    > fbi.melt <- melt(fbi, id.vars=c("State","Abbr","Population"), measure.vars=4:12)

    > head(fbi.melt)
           State Abbr Population      variable  value
    1    Alabama   AL    4708708 Violent.crime  21179
    2     Alaska   AK     698473 Violent.crime   4421
    3    Arizona   AZ    6595778 Violent.crime  26929
    4   Arkansas   AR    2889450 Violent.crime  14959
    5 California   CA   36961664 Violent.crime 174459
    6   Colorado   CO    5024748 Violent.crime  16976

    > tail(fbi.melt)
                State Abbr Population            variable value
    445       Vermont   VT     621760 Motor.vehicle.theft   448
    446      Virginia   VA    7882590 Motor.vehicle.theft 11419
    447    Washington   WA    6664195 Motor.vehicle.theft 23680
    448 West Virginia   WV    1819777 Motor.vehicle.theft  2741
    449     Wisconsin   WI    5654774 Motor.vehicle.theft  8926
    450       Wyoming   WY     544270 Motor.vehicle.theft   771

    > summary(fbi.melt)
            State          Abbr       Population
     Alabama   :  9   AK     :  9   Min.   :  544270
     Alaska    :  9   AL     :  9   1st Qu.: 1796619
     Arizona   :  9   AR     :  9   Median : 4403094
     Arkansas  :  9   AZ     :  9   Mean   : 6128138
     California:  9   CA     :  9   3rd Qu.: 6664195
     Colorado  :  9   CO     :  9   Max.   :36961664
     (Other)   :396   (Other):396
                                     variable       value
     Violent.crime                       : 50   Min.   :      7
     Murder.and.nonnegligent.manslaughter: 50   1st Qu.:   1536
     Forcible.rape                       : 50   Median :  11056
     Robbery                             : 50   Mean   :  47124
     Aggravated.assault                  : 50   3rd Qu.:  37964
     Property.crime                      : 50   Max.   :1009614
     (Other)                             :150

Incidence rates are now easy to compute:

• fbi.melt$irate <- fbi.melt$value/fbi.melt$Population

Recreate this chart of incidence rates

[Figure: one bar per state, with states on the axis labelled reorder(State, irate), listed from South Carolina to South Dakota. One panel per crime type: Murder.and.nonnegligent.manslaughter, Forcible.rape, Robbery, Motor.vehicle.theft, Aggravated.assault, Violent.crime, Burglary, Larceny.theft, Property.crime. The other axis is labelled count, with ticks from 0 to 4000.]

Then, cast

• Row variables, column variables, and a summary function (sum, mean, max, etc)

• dcast(molten, row ~ col, summary)

• dcast(molten, row1 + row2 ~ col, summary)

• dcast(molten, row ~ . , summary)

• dcast(molten, . ~ col, summary)
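The formula variants listed above can be tried on a tiny molten table. This is a sketch under assumptions: the data frame `molten` below is made up (two hypothetical states, two crime types), `sum` is used as the summary function, and `value.var` is named explicitly so that reshape2 does not have to guess it.

```r
library(reshape2)

# Made-up molten data: one row per (state, crime type) combination.
molten <- data.frame(
  State    = rep(c("A", "B"), times = 2),
  variable = rep(c("Burglary", "Robbery"), each = 2),
  value    = c(10, 40, 5, 8)
)

# row ~ col: one row per state, one column per crime type.
by_state_type <- dcast(molten, State ~ variable, sum, value.var = "value")

# row ~ . : collapse over crime types, one total per state.
by_state <- dcast(molten, State ~ ., sum, value.var = "value")

# . ~ col: collapse over states, one total per crime type.
by_type <- dcast(molten, . ~ variable, sum, value.var = "value")

by_state_type
by_state
by_type
```

The dot on either side of the formula stands for "no variable", so everything on that side is aggregated into a single row or column.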

Casting

• Using dcast:

• find the number of all offenses in 2009

• find the number of offenses by type of crime

• find the number of all offenses by state

What is a map?

Set of points specifying latitude and longitude

[Figure: points plotted with long (-96 to -91) on the x-axis and lat (40.5 to 43.5) on the y-axis]

Polygon: connect dots in correct order

[Figure: the same points connected into an outline; same axes]

What is a map?

Polygon: connect only the correct dots

[Figure: polygons plotted with long (-95 to -85) on the x-axis and lat (30 to 40) on the y-axis]

Grouping

• Use parameter group to connect the “right” dots (need to create grouping sometimes)

qplot(long, lat, geom="point", data=states)

qplot(long, lat, geom="path", data=states, group=group)

qplot(long, lat, geom="polygon", data=states, group=group, fill=region)

qplot(long, lat, geom="polygon", data=states.map, fill=lat, group=group)

[Figures: one map of the US states per call, with long (-120 to -70) on the x-axis and lat (30 to 45) on the y-axis]
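To see what the group parameter does without loading any map data, the sketch below draws two made-up squares. Everything here is an assumption for illustration: the data frame `squares` is invented, and `ggplot()` with explicit geoms is used in place of `qplot()`, which recent ggplot2 releases mark as deprecated.

```r
library(ggplot2)

# Two made-up unit squares; each outline is closed by repeating its first point.
squares <- data.frame(
  long  = c(0, 1, 1, 0, 0,   2, 3, 3, 2, 2),
  lat   = c(0, 0, 1, 1, 0,   0, 0, 1, 1, 0),
  group = rep(c(1, 2), each = 5)
)

# Without a group, all ten points form one path, so a stray segment
# joins the end of the first square to the start of the second.
p_wrong <- ggplot(squares, aes(long, lat)) +
  geom_path()

# With group, each square is drawn as its own polygon.
p_right <- ggplot(squares, aes(long, lat, group = group)) +
  geom_polygon(fill = "grey80", colour = "black")

p_wrong
p_right
```

The same mechanism is what keeps state outlines from being connected to each other in the maps above.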

Practice

• Using the maps package, pull out map data for all US counties

counties <- map_data("county")

• Draw a map of counties (polygons & path geom)

• Colour all counties called “story”

• Advanced: What county names are used often?

Merging Data

• Merging data from different datasets:

    merge(x, y, by = intersect(names(x), names(y)),
          by.x = by, by.y = by, all = FALSE, all.x = all, all.y = all,
          sort = TRUE, suffixes = c(".x",".y"), incomparables = NULL, ...)

e.g.:

    states.fbi <- merge(states, fbi.cast,
                        by.x="", by.y="Abbr")
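A small sketch of merging outline points with one value per region follows. The data frames `outline` and `crime`, their values, and the shared key column `region` are all made up for this example; they are not the actual key columns of the states or fbi data.

```r
# Made-up outline: three points per region, with an 'order' column that
# records the sequence in which the points must be connected.
outline <- data.frame(
  region = rep(c("alabama", "alaska"), each = 3),
  long   = c(0, 1, 0.5,  2, 3, 2.5),
  lat    = c(0, 0, 1,    0, 0, 1),
  order  = 1:6
)

# Made-up values: one row per region.
crime <- data.frame(
  region   = c("alabama", "alaska"),
  Burglary = c(10, 40)
)

# Every outline point picks up the value of its region.
merged <- merge(outline, crime, by = "region")

# merge() may reorder rows, so restore the drawing order before plotting.
merged <- merged[order(merged$order), ]

merged
```

Re-sorting by the order column matters for maps, because polygons are drawn by connecting the rows in sequence.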

[Diagram: a table with columns region and X1 (one row for alabama, ...) and a table with columns region, X2, X3 (several rows for alabama, ...) are combined on region into one table with columns region, X1, X2, X3]

Practice

• Merge the fbi crime data and the map of the States

• Plot choropleth maps of crimes.

• Describe the patterns that you see.

• Advanced: try to cluster the states according to crime rates (use hclust)

Time Series

NASA Meteorological Data

• 24 x 24 grid across Central America (Gridx 1 to 24, Gridy 1 to 24)

• satellite captured data: temperature, near surface temperature (surftemp), pressure, ozone, cloud coverage: low (cloudlow), medium (cloudmid), high (cloudhigh)

• for each location monthly averages for Jan 1995 to Dec 2000

What is a Time Series?

for each location multiple measurements

qplot(time, temperature, geom="point", data=subset(nasa, (x==1) & (y==1)))

connected by a line

qplot(time, temperature, geom="line", data=subset(nasa, (x==1) & (y==1)))

but only connect the right points

qplot(time, temperature, geom="line", data=subset(nasa, (x==1) & (y %in% c(1,15))), group=y)

[Figures: one plot per call, with TimeIndx on the x-axis and ts (about 275 to 305) on the y-axis]

Practice

• For each location, draw a time series for pressure. What do you expect? Are there surprising values? Which are they?

• Plot near surface temperatures for each location. Which locations show the highest range in temperatures? Which locations show the highest overall increase in temperatures? Use ddply to get these summaries.
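A possible shape for the ddply summaries is sketched below on made-up data. The data frame `toy_nasa` is invented (two hypothetical grid locations, four time points each), and using the slope of a linear fit as the measure of "overall increase" is only one assumption among several reasonable choices.

```r
library(plyr)

# Made-up stand-in for the nasa data: two locations, four time points each.
toy_nasa <- data.frame(
  x        = rep(1:2, each = 4),
  y        = 1,
  time     = rep(1:4, times = 2),
  surftemp = c(290, 295, 300, 292,  280, 281, 283, 282)
)

# One row of summaries per location (x, y):
#   temp_range: difference between highest and lowest temperature
#   slope:      trend of temperature over time from a linear fit
per_location <- ddply(toy_nasa, .(x, y), summarise,
                      temp_range = max(surftemp) - min(surftemp),
                      slope      = unname(coef(lm(surftemp ~ time))[2]))

# Locations sorted by range, largest first.
per_location[order(-per_location$temp_range), ]
```

Sorting the result by either column then answers the two questions for the toy data; the same call applied to the real data would have one row per grid cell.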