Modular High-Speed Signaling for Networks in

HPC Systems Using COTS Components

by

Peggy B. Chen

Submitted to the Department of Electrical Engineering and Computer Science

in Partial Fulfillment of the Requirements for the Degrees of

Bachelor of Science in Electrical Engineering and Computer Science

and Master of Engineering in Electrical Engineering and Computer Science

at the Massachusetts Institute of Technology

May 9, 2000

© Copyright 2000 Peggy B. Chen. All rights reserved.

The author hereby grants M.I.T. permission to reproduce

and distribute publicly paper and electronic copies of this

thesis and to grant others the right to do so.

Author

Peggy B. Chen

Department of Electrical Engineering and Computer Science

Certified by

Thomas Knight

Thesis Supervisor, MIT Artificial Intelligence Lab

Accepted by

Arthur C. Smith

Chairman, Department Committee on Graduate Theses

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

JUL 27 2000

LIBRARIES

MODULAR HIGH-SPEED SIGNALING FOR NETWORKS IN

HPC SYSTEMS USING COTS COMPONENTS

by

Peggy B. Chen

Submitted to the

Department of Electrical Engineering and Computer Science

May 9, 2000

in Partial Fulfillment of the Requirements for the Degrees of

Bachelor of Science in Electrical Engineering and Computer Science

and Master of Engineering in Electrical Engineering and Computer Science

ABSTRACT

Increasingly powerful applications demand larger machines to support their large memory and computation requirements. A key component in designing a high performance computing (HPC) system is providing the high-bandwidth, low-latency interprocessor communication network necessary for efficient and reliable multiprocessor operation. The design process begins with an evaluation of technological trends, advancements and practical limitations. The goal is to develop a low-cost architecture that is scalable and can be produced in a short design cycle. The Flexible Integrated Network Interface (FINI) provides the physical-layer and link-layer medium that controls the movement of data between HPC processor nodes. The FINI is designed using commodity-off-the-shelf (COTS) components and high-speed signaling techniques that enable data to be transferred over transmission lines up to 10 meters long at a maximum rate of 5.38 Gbps.

TABLE OF CONTENTS

Chapter 1. Introduction
1.1 Goals
1.2 Scope
1.3 Organization
Chapter 2. High Performance Computing (HPC) Systems
2.1 Re-Engineering an HPC System
2.2 ARIES HPC System
2.2 METRO: Multipath Enhanced Transit Router Organization
2.3 Design Requirements
2.2.1 Scalability
2.2.2 Programmability
2.2.3 Reliability
2.2.4 Efficiency
Chapter 3. The Flexible Integrated Network Interface
3.1 Design Motivations
3.2 Architecture
3.3 Duplicated Architecture
3.4 Design Goals
3.4.1 Technology Trends
3.4.2 Component Choices
3.4.3 Design Trends
3.4.4 FINI Challenges
Chapter 4. High-Speed Interconnect
4.1 High-Speed Signaling Problem
4.2 Transmission Lines
4.2.1 Definition of High-Speed Signal
4.2.2 Transmission Line Models
4.2.3 Impedance Discontinuities
4.2.4 Skin Effects
4.2.5 Termination Techniques
4.3 Differential Signaling
4.3.1 Differential Signal Basics
4.3.1 LVDS
4.4 Transmission Media
4.4.1 Transmitter/Receiver Pair
4.4.2 Cable
4.4.3 Connector
Chapter 5. Component Specification
5.1 LVDS Transmitter and Receiver
5.1.1 DS90C387/DS90CF388
5.1.2 Decision Factors
5.1.3 Functionality of DS90C387/DS90CF388
5.2 Processors
5.2.1 Virtex XCV100-6PQ240C
5.2.2 Decision Factor
5.2.3 Programming the XCV100-6PQ240C
5.2.2 FPGA-1 (Master)
5.2.3 FPGA-2 (Slave)
5.2.4 FPGA-3 (Slave)
5.2.5 FPGA-4 (Slave)
5.3 Memory
5.3.1 MT55L256L32P
5.3.2 Decision Factors
5.4 Clock
5.4.3 Functionality of AD9851
5.4.2 Decision Factors
5.4.3 Programming the AD9851
5.5 Power Supply
5.5.1 LT1374
5.5.1 Decision Factors
5.6 Cables and Connectors
5.6.1 LVDS Cables
5.6.2 Double-Decker Connector
5.6 Test Points
Chapter 6. Board Design
6.1 Schematic Capture
6.1.1 Component Library
6.1.2 Schematic Sheets
6.1.3 Schematic Verification
6.1.4 Bill of Materials
6.2 Board Layout
6.2.1 Footprint Library
6.2.2 Component Placement
6.2.3 PCB Board Stack (Layers)
6.2.4 Routing
6.3 Board Fabrication
6.4 PCB Material and Package Properties
6.5 Board Assembly
Chapter 7. Applications
7.1 Capability Tests
7.1.1 Latency Test
7.1.2 Round Trip Test
7.1.3 Cable Integrity Test
7.2 Network Design
7.2.1 Routers
7.2.1 Multipath, Multistage Networks
Chapter 8. Conclusion
8.1 FINI Architecture
8.1.1 Network Interface Architecture
8.1.2 Basic Router Architecture
8.2 High-Speed Interconnect
8.2.1 Transmitter/Receiver
8.2.2 Cables and Connectors
8.3 FPGAs
8.4 Continual Evaluation
Appendix A. FINI Board Schematics
Appendix B. FINI Bill of Materials (BOM)
Appendix C. FINI Board Layout
Bibliography

TABLE OF TABLES

Table 1. Dielectric Constants of Some Common Materials [Suth99].......................................29

Table 2. Skin-Effect Fequencies for Various Conductors.........................................................35

Table 3 . Pre-emphasis DC Voltage Level with RPRE [National99] .........................................

45

Table 4. Pre-emphasis Needed per Cable Length [National99]................................................46

Table 5. SelectMAP Mode Configuration Code [Virtex99].....................................................

48

Table 6. AD9851 8-Bit Parallel-Load Data/Control Word Functional Assignment [Analog99] ...54

Table 7. Resistor Values for Resistor Divider in Power Supply Circuitry................................56

Table 8. Electrical Characteristics of Cable [Madison99].........................................................58

Table 9. Designator K ey.................................................................................................................63

Table 10. CommercialTransceivers...........................................................................................

5

86

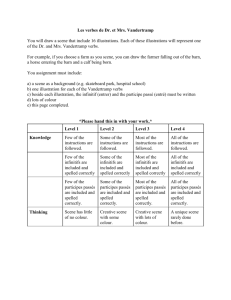

TABLE OF FIGURES

Figure 1. ARIES HPC System Diagram - Scalable Multirack Solution
Figure 2. HPC System Processor Node Diagram
Figure 3. Die Shot of K7 Athlon Processor
Figure 4. FPS and FINI Connection Diagram
Figure 5. Flexible Integrated Network Interface Block Diagram
Figure 6. FINI Block Diagram with Duplicated Architecture
Figure 7. First- and Second-Order Models of Transmission Lines
Figure 8. High-Speed Region on Network Interface
Figure 9. Twisted-Pair Dimensions
Figure 10. Microstrip Dimensions
Figure 11. Skin Effect in AWG24 Copper Versus Frequency
Figure 12. Differential Signals
Figure 13. Differential Skew
Figure 14. Effect of Skew on Receiver Input Waveform
Figure 15. LVDS Point-to-Point Configuration with Termination Resistor
Figure 16. Twisted-Pair Cable
Figure 17. Diagram of Parallel Programming FPGAs in SelectMAP Mode [Xilinx00]
Figure 18. Basic DDS Block Diagram and Signal Flow of AD9851 [Analog99]
Figure 19. High-Speed Signal Probe Setup
Figure 20. Top View of FINI Board
Figure 21. Bottom View of FINI Board
Figure 22. FINI Board Stack Diagram
Figure 23. Split Planes on the Internal +V33 Power Plane
Figure 24. Diagram of Latency Test
Figure 25. Diagram of Round Trip Test
Figure 26. Diagram of Cable Integrity Test
Figure 27. Diagram of HPC System (Processor Nodes, FINI, and Network)
Figure 28. Dilation-1, Radix-2 Router
Figure 29. Dilation-2, Radix-2 Router
Figure 30. 8 x 8 Multipath, Multistage Network
Figure 31. Basic Router Building Block Architecture
Figure 32. FINI Board - All Layers
Figure 33. FINI Board - Layer 1 (1H)
Figure 34. FINI Board - Layer 2 (2V)
Figure 35. FINI Board - Layer 3 (3H)
Figure 36. FINI Board - Layer 4 (4V)
Figure 37. FINI Board - Ground Plane
Figure 38. FINI Board - +3.3V Plane

CHAPTER 1

INTRODUCTION

Increasingly powerful applications demand larger machines to support their large memory and computation requirements. Modern microprocessors have paved the way for large-scale multiprocessor systems, but the challenge remains to use their potential performance effectively, not only for today's range of applications but for future applications as well. One key component in designing such a high performance computing (HPC) system is providing the high-performance, reliable interprocessor communication network necessary for efficient multiprocessor operation.

1.1 GOALS

The goals of an interprocessor network for an HPC system are:

• High bandwidth
• Low latency
• High reliability (signal integrity)
• Scalability
• Reasonable cost
• Practical implementation

High bandwidth, low latency and good physical design are the key properties of a reliable high-speed signaling network in HPC systems. Practicality and cost constraints, important in today's implementation-oriented environment, are addressed through the use of commodity-off-the-shelf (COTS) components. The network topology and protocols must be formulated in a scalable manner. For example, a small, simple crossbar seems easy to replicate, but distributing a large switching function across an array of small crossbars would incur $O(n)$ switching latency and require $O(n^2)$ such switches [DeHon93]. Ease of replication does not translate to scalability.
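The scaling argument above can be made concrete with a small count. The sketch below assumes an idealized model that is not taken from [DeHon93]: an n x n crossbar function built as an (n/k) x (n/k) grid of k x k crossbars, in which a signal travels along its row and then down its column, compared with a radix-k butterfly-style multistage network with n/k switches in each stage. The function names are hypothetical.

```python
def grid_crossbar_cost(n: int, k: int) -> tuple[int, int]:
    """Idealized n x n crossbar built from an (n/k) x (n/k) grid of k x k crossbars.

    Returns (switch_count, worst_case_hops). A signal is assumed to cross
    every block in its row and then the remaining blocks in its column.
    """
    if n % k:
        raise ValueError("n must be a multiple of k")
    blocks = n // k
    return blocks * blocks, 2 * blocks - 1  # O(n^2) switches, O(n) hops


def multistage_cost(n: int, k: int) -> tuple[int, int]:
    """Idealized radix-k multistage network: ceil(log_k n) stages of n/k switches."""
    if n % k:
        raise ValueError("n must be a multiple of k")
    stages, reach = 0, 1
    while reach < n:  # integer loop avoids floating-point error in log_k(n)
        reach *= k
        stages += 1
    return stages * (n // k), stages  # O(n log n) switches, O(log n) hops


if __name__ == "__main__":
    for n in (64, 1024, 4096):
        print(n, grid_crossbar_cost(n, 4), multistage_cost(n, 4))
```

For n = 1024 and k = 4, the grid model needs 65,536 switches and up to 511 hops, while the multistage model needs 1,280 switches and 5 hops, which is the gap between easy replication and true scalability.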

1.2 SCOPE

This work addresses only issues directly related to designing a modular high-speed signaling network for HPC systems using COTS components. Attention is paid to industrial design practices and trends, and to how these techniques can be used to provide an efficient and reliable interface between processing nodes and the network. The design detailed here may not be the ideal solution, but it was the most practical and realistic solution at the time of design, given component availability and cost constraints. It provides a foundation for implementing and testing the higher-level protocols and API issues involved in network research and design. The design also highlights the difficulties and limitations of implementation. Results detailed here may be used as a stepping-stone for future network interface designs in HPC systems.

1.3 ORGANIZATION

Before discussing the strategy used to design the high-speed signaling network, Chapter 2 provides background on HPC systems. Chapter 3 then describes the architectural design of the interprocessor communication network, called the Flexible Integrated Network Interface (FINI). Chapter 4 continues with a discussion of design issues specific to high-speed digital design. Chapter 5 discusses the components used to develop the FINI interface card, and Chapter 6 examines issues related to printed circuit board (PCB) design. Chapter 7 highlights some applications for which the FINI board may be used, and Chapter 8 concludes with an analysis of the FINI design and suggestions for future work.

CHAPTER 2

HIGH PERFORMANCE COMPUTING (HPC) SYSTEMS

High performance computing (HPC) systems are large-scale multiprocessor systems intended for computation- and communication-intensive work. They should have hardware capable of supporting parallel programming constructs, supplemented by a good API. HPC systems should also be able to support thousands of processors, gigabytes of data, a complex interprocessor communication network, and the interactions among software drivers, compilers and the hardware itself. This chapter gives an overview of the ARIES HPC system.

2.1 RE-ENGINEERING AN HPC SYSTEM

The many high performance computing (HPC) platforms already in existence raise the question of whether another one is needed. Evaluation of popular HPC systems reveals many advances in computer architecture technology, but also a variety of deficiencies. Commercially available HPC platforms suffer in varying degrees from poor programmability, inadequate performance and limited scalability.

The ARIES HPC system being designed at the Artificial Intelligence Laboratory at the Massachusetts Institute of Technology is the result of examining computer architecture at all levels of abstraction. Topics of consideration include operating systems and applications, transistor-level chip design, systems engineering and hardware support. With no requirement for backward compatibility, each component of the ARIES HPC system is re-engineered from first principles to maximize the potential of the system. In all cases, current industrial practices are examined. Improvements are made where possible, and many other elements have been completely redesigned to enhance performance.

2.2 ARIES HPC SYSTEM

With multiple racks of interchangeable devices, the ARIES HPC system provides a scalable physical design for high performance parallel computing, as depicted in Figure 1. Each slot can contain either a network interface or a processor node consisting of a RAID array, PIM processor chips and an I/O processor. The swappable devices are connected via a backplane router. The flexible arrangement of HPC system components permits a plurality of high performance parallel computing architectures to be implemented with a single set of hardware components.

[Diagram: a "rack" of interchangeable devices (processor, network, etc.) on a dense router backplane, with 16 more devices on the opposite side and links to other network boxes; exploded views of a network box and of a processor box containing a RAID array, node chips, I/O and routers.]

Figure 1. ARIES HPC System Diagram - Scalable Multirack Solution

The architecture of the processor node is detailed in Figure 2. Each HPC processor node requires a means to communicate with other processors. The Flexible Integrated Network Interface (FINI) card is the building block of the interprocessor communication network. The FINI card serves as the network interface card for each HPC processor node, and it is also the building block from which the backplane router is constructed. The FINI is designed on a stand-alone PCB (see Chapters 3-6), providing the flexibility necessary to implement a scalable HPC system.

[Diagram: processor node with a connection to the backplane, 48 VDC input with local regulators, coolant and heat exchanger, routers, PIM chips (about 16 MB PIM per chip @ 8 procs), I/O and storage chip, monitor processor, 2 GB buffer, RAID ring, and a distributed RAID-5 array (about 60 GB/node).]

Figure 2. HPC System Processor Node Diagram

2.2 METRO: MULTIPATH ENHANCED TRANSIT ROUTER ORGANIZATION

As machine size increases, interprocessor communication latency will increase, as will the probability that some component in the system will fail. For the potential benefits of large-scale multiprocessing to be realized, large-scale multiprocessors need a physical router network and accompanying routing protocol that simultaneously minimize communication latency and maximize fault tolerance.

The Multipath Enhanced Transit Router Organization (METRO) is a physical layer architecture featuring multipath, multistage networks [DeHon93]. Such a network architecture provides increased bandwidth, improved fault tolerance and the potential to decrease latency while maintaining the scalability of the design.

The METRO Routing Protocol (MRP) sits on top of the physical layer and encompasses both the network layer and the data-link layer as described by the ISO OSI Reference Model [DZ83]. The MRP fulfills the role of the data-link layer by controlling the transmission of data packets and the direction of transmission over interconnection lines, and it provides checksum information that signals when a retransmission is necessary. The MRP also provides dynamic self-routing, thereby performing the functions of the network layer. Altogether, the MRP provides a reliable byte-stream connection from end to end through the routing network [BCDEKMP94].
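The checksum-and-retransmit role of the data-link layer can be illustrated with a short sketch. It is not the MRP checksum or packet format, which are not specified here. It assumes a hypothetical 8-bit additive checksum, a link modeled as a Python function that may corrupt the payload, and a checksum that always arrives intact; `send_with_retry` and `flaky_channel` are hypothetical names.

```python
from typing import Callable

Channel = Callable[[bytes], bytes]


def checksum8(payload: bytes) -> int:
    """Hypothetical 8-bit additive checksum (sum of bytes modulo 256)."""
    return sum(payload) & 0xFF


def send_with_retry(payload: bytes, channel: Channel, max_tries: int = 4) -> int:
    """Retransmit until the receiver's recomputed checksum matches.

    Returns the number of attempts used; raises if every attempt fails.
    """
    expected = checksum8(payload)  # computed by the sender, sent with the data
    for attempt in range(1, max_tries + 1):
        received = channel(payload)  # the link may corrupt the data
        if checksum8(received) == expected:
            return attempt  # receiver accepts the packet
        # mismatch: the receiver signals that a retransmission is necessary
    raise RuntimeError("retransmission limit reached")


def flaky_channel(failures: int) -> Channel:
    """Simulated link that flips one bit of the first byte on its first `failures` uses."""
    remaining = [failures]

    def channel(data: bytes) -> bytes:
        if remaining[0] > 0 and data:
            remaining[0] -= 1
            return bytes([data[0] ^ 0x01]) + data[1:]
        return data

    return channel


print(send_with_retry(b"METRO", flaky_channel(2)))  # prints 3
```

An additive checksum is used only to keep the sketch short; it cannot detect errors such as reordered bytes, so a practical link would more likely use a CRC.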

The flexibility to choose parameters permits customized implementations of the METRO network to suit target applications and available technologies. In the ARIES HPC system, the network will be built out of FINI cards. Simple routing and the freedom to optimize for the target technology make METRO an ideal network architecture to meet the needs of our communication-intensive multiprocessor system.

2.3 DESIGN REQUIREMENTS

The basic design goals of the ARIES HPC system are:

• Scalability
• Programmability
• Reliability
• Efficiency

2.2.1 Scalability

A versatile HPC system should scale from a few processors to thousands of processors. The ARIES HPC system supports scalability through a modular design, as depicted in Figure 1. Implemented using multiple racks of interchangeable devices, it can support anywhere from a few to several thousand processors. The network topology will be based on multibutterflies [Upfal89] [LM92] and fat-trees [Lei85]. Multipath, multistage networks are scalable and allow arbitrarily large machines to be constructed using the same basic network architecture, which makes them an ideal choice for HPC systems. The hardware resources required for fat-tree networks grow linearly with the number of processors supported [DeHon93].
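One reason the same architecture scales is that routing in a butterfly-style multistage network can be decided locally, one stage at a time. The sketch below shows destination-tag routing under a simplified model (an assumption; the METRO routing header format is not described here): with radix-r routers, each stage consumes one base-r digit of the destination address, so a larger machine needs more digits and stages but no new router design. `route_digits` is a hypothetical helper.

```python
def route_digits(dest: int, n_endpoints: int, radix: int) -> list[int]:
    """Destination-tag routing in a simplified radix-`radix` multistage network.

    Returns the logical output direction taken at each stage, most
    significant base-`radix` digit of the destination first.
    """
    if not 0 <= dest < n_endpoints:
        raise ValueError("destination out of range")
    span = 1
    while span < n_endpoints:  # span becomes radix ** number_of_stages
        span *= radix
    digits = []
    while span > 1:  # one digit (one routing decision) per stage
        span //= radix
        digits.append((dest // span) % radix)
    return digits


print(route_digits(13, 64, 4))  # [0, 3, 1], since 13 = 0*16 + 3*4 + 1
```

With radix 4, a 64-endpoint network needs three stages and a 4,096-endpoint network needs six, using the same router at every stage. In a dilated multipath network such as METRO, each digit would select a logical direction, and the router could choose among the several equivalent outputs in that direction.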

2.2.2 Programmability

Programmability refers to the ease with which users can program the HPC system to perform the required function. A programming language and compiler that allow programmers to express parallel constructs in a natural manner are necessary. Programmability also requires an easy-to-use user interface to minimize the time programmers spend producing correct code.

2.2.3 Reliability

A reliable HPC system guards against potential failures because it is designed with the philosophy that there should be no single point of failure. Adaptive error-correcting hardware and novel hardware-supported programming models offer secure and reliable computing. The multipath, multistage network topology provides a basis for fault-tolerant operation along with high-bandwidth operation. Because the network provides multiple redundant paths between each pair of processing nodes, traffic can be routed around faulty components. Data may get corrupted, components may fail and external factors may cause the system to malfunction, but protection in the form of backups, checkpoints, and self-detection and correction ensures the reliability of the ARIES HPC system.
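The benefit of redundant paths can be estimated with a simple probability model. The sketch below assumes (loudly, since real multistage networks share routers near the endpoints) that each router fails independently with probability q, that a path fails if any of its routers fails, and that the redundant paths share no routers. The function names are hypothetical.

```python
def path_failure(q_stage: float, stages: int) -> float:
    """Probability a path fails if any of its `stages` routers fails (probability q_stage each)."""
    return 1.0 - (1.0 - q_stage) ** stages


def connection_failure(q_stage: float, stages: int, paths: int) -> float:
    """Probability that every one of `paths` redundant paths fails.

    Assumes router-disjoint paths that fail independently, which is
    optimistic for real multistage networks.
    """
    return path_failure(q_stage, stages) ** paths


for d in (1, 2, 4):
    print(d, connection_failure(0.001, 6, d))
```

Under these assumptions, with q = 0.001 and six stages, a single path fails with probability of about 0.006, while two disjoint paths lower the chance of losing the connection to about 3.6e-5.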

2.2.4 Efficiency

Efficiency within HPC systems is a consideration at multiple levels of abstraction. In a parallel computer, efficiency depends on the ratio of communication time to processing time. High efficiency therefore requires both an efficient processor and a communication network that can handle the communication load. Design of the communication network is described in the remaining chapters of this work.
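A simple model makes this ratio concrete. Assuming communication and computation do not overlap, efficiency is E = T_comp / (T_comp + T_comm) = 1 / (1 + T_comm / T_comp), and the cost of one message is a fixed latency plus its size divided by the link bandwidth. The sketch below uses these two standard formulas; the latency, message size and compute time are assumed values, not FINI measurements, and only the 5.38 Gbps peak rate comes from this thesis.

```python
def message_time(latency_s: float, bandwidth_bps: float, size_bytes: int) -> float:
    """Time to deliver one message: fixed latency plus serialization time."""
    return latency_s + 8 * size_bytes / bandwidth_bps


def parallel_efficiency(t_compute: float, t_comm: float) -> float:
    """Fraction of time spent computing, assuming communication is not overlapped."""
    if t_compute <= 0 or t_comm < 0:
        raise ValueError("t_compute must be positive and t_comm non-negative")
    return t_compute / (t_compute + t_comm)  # equals 1 / (1 + t_comm / t_compute)


# Assumed workload: 10 us of computation per 1 KiB message, 1 us of network
# latency, and the 5.38 Gbps peak link rate quoted in the abstract.
t_comm = message_time(1e-6, 5.38e9, 1024)
print(f"{t_comm * 1e6:.2f} us per message, efficiency {parallel_efficiency(10e-6, t_comm):.2f}")
```

With these assumed numbers each message costs about 2.5 us and efficiency is close to 0.8; halving the latency or raising the bandwidth moves efficiency toward 1 only as far as T_comm shrinks relative to T_comp.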

Figure 3. Die Shot of K7 Athlon Processor

Processor efficiency on the ARIES HPC system is achieved in part by maximizing the number of computations per silicon area. Modern-day processors contain millions of transistors running sequential streams of instructions. Figure 3 shows a die shot of an Athlon processor. Less than a quarter of the chip area is devoted to useful computation, shown in red. The other three-quarters are dedicated to keeping the first quarter busy through techniques such as caching, instruction reordering, register renaming, branch prediction, speculative execution, block fetching and prefetching. In a parallel architecture context where multiple processors are placed on a single die, overall area efficiency is more important than the raw speed of an individual processor. Simple, efficient and cost-effective architectures will yield the greatest computing power. Efficient computing is achieved by using less silicon to perform the same amount of work.


CHAPTER 3

THE FLEXIBLE INTEGRATED

NETWORK INTERFACE

The Flexible Integrated Network Interface (FINI) is the high-speed network interface in the

ARIES HPC system that facilitates communication between the processor nodes. A high

bandwidth, low latency interprocessor communication network is necessary for efficient and

reliable multiprocessor operation. This chapter focuses on the motivations behind the design of

the FINI and describes its architecture and functionality. The conclusion highlights the goals and

challenges of this design.

3.1 DESIGN MOTIVATIONS

Advances in technology have provided engineers with a vast array of building blocks, and the combinations that can achieve any one result are nearly endless; this is the case for the FINI. The focus in designing this interprocessor communication network is on how to achieve a high-speed network through the evaluation of technological trends, advancements and practical limitations. Results from this work should benefit future designers of high-speed signaling networks in HPC systems.

Past research on high-speed network design for large-scale multiprocessing implemented the

network interface in silicon to produce an integrated circuit (IC) chip [DeHon93]. This was a time-consuming, costly (on the order of $10K for 30 chips) and fixed solution. Designing an IC is a

worthy investment if the goal is to mass-produce a commercial product, but it is not economically

feasible for a low volume scenario such as the research project at hand.

The goals associated with designing the FINI are the antithesis of what an IC design entails:

• Short design cycle
• Low cost
• Flexibility.

In a research project, the probability of revisions is extremely high. A low overhead solution

coupled with a short design cycle minimizes the total cost and design time in the long run. The

FINI leverages existing technology by integrating commercial off-the-shelf (COTS) components

on a stand-alone printed circuit board (PCB) to achieve the desired interconnect solution in a

relatively short design cycle. Modifying a PCB is relatively easy compared to redesigning a

completely new IC.

Recent technological advancements have produced powerful programmable logic devices that can

be integrated into a design to provide a more flexible and cost efficient solution. Incorporating

field-programmable gate arrays (FPGA) in this solution provides the flexibility to implement

various functions and perform multiple tests using the same platform.


A high-speed signaling network depends upon the FINI architecture, the signaling techniques

used on the PCB and the chosen COTS components. The remainder of this chapter describes the

architectural design of the FINI while Chapter 4 examines various technical design issues related

to high-speed signaling. Components used on the FINI card are detailed in Chapter 5.

3.2 ARCHITECTURE

The Flexible Integrated Network Interface (FINI) is the physical layer and link layer medium that

controls the movement of data between processor nodes. Its primary function is to transmit data

from one processor node to another and receive data in the reverse direction as well.

[Figure: two HPC Processor Nodes, each connected to its own FINI CARD]

Figure 4. FPS and FINI Connection Diagram

The Flexible Integrated Network Interface is composed of FINI cards implemented on PCBs connected together by high-performance cables. Each processor node on the ARIES HPC system requires a dedicated FINI card to handle its data transmission and reception. A partial diagram of the setup is shown in Figure 4. At the time of writing, the ARIES HPC system is not ready to be tested in conjunction with the FINI. For the purposes of programming and testing the FINI in the meantime, a desktop PC and fast, large SRAM buffers will be used in place of the ARIES HPC system. While this alternative solution will not demonstrate the capabilities of the HPC system, it does provide a means of testing the communication network itself. At this phase in the HPC development process, it is more crucial to understand the design of high-speed networks and the associated signaling techniques, so the focus is placed on maximizing the performance of the FINI card.

The FINI card is responsible for tasks such as bus arbitration and data synchronization. Data

management on board the FINI card is controlled by FPGAs. A parallel port serves as the

interface between the computer and the FINI card. Data to be transmitted between processor

nodes enters the FINI from the parallel port into the Master FPGA (FPGA-1). Data can be stored

in the associated memory until it is ready to be passed to the transmitter and sent to another

processor node through high-performance cables. A FINI that is receiving data from another processor node sees data enter via the receiver. This incoming data is passed into the Slave FPGA (FPGA-2) and can then be stored in the associated memory until it is needed.
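The data path described above can be summarized as a toy behavioral model. The class and method names are hypothetical, and the model captures only the order in which data moves (parallel port, FPGA-1 and its memory, transmitter, cable, receiver, FPGA-2 and its memory); it is a sketch, not a description of the actual FPGA logic, and it ignores timing, bus widths and arbitration.

```python
from collections import deque


class FiniCard:
    """Hypothetical behavioral model of one FINI card's data path."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.master_memory: deque = deque()  # memory attached to FPGA-1
        self.slave_memory: deque = deque()   # memory attached to FPGA-2

    def from_parallel_port(self, word: int) -> None:
        # Host data enters the Master FPGA (FPGA-1) and is buffered.
        self.master_memory.append(word)

    def transmit(self, cable: deque) -> None:
        # FPGA-1 passes buffered words to the transmitter, which drives the cable.
        while self.master_memory:
            cable.append(self.master_memory.popleft())

    def receive(self, cable: deque) -> None:
        # The receiver hands incoming words to the Slave FPGA (FPGA-2) for storage.
        while cable:
            self.slave_memory.append(cable.popleft())


# Usage: card A sends two words to card B over one cable.
card_a, card_b = FiniCard("A"), FiniCard("B")
cable: deque = deque()
for word in (0x1234, 0xABCD):
    card_a.from_parallel_port(word)
card_a.transmit(cable)
card_b.receive(cable)
print([hex(w) for w in card_b.slave_memory])
```

A first-in, first-out queue is used for each memory because the text describes data being held until it is ready to be sent or needed, in arrival order.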

[Figure: blocks labeled Memory, FPGA 1, Transmitter, Receiver, FPGA 2, Memory and Clock]

Figure 5. Flexible Integrated Network Interface Block Diagram


Figure 5 presents the basic block diagram of the FINI architecture. The FINI is powered by 2.5V

and 3.3V power sources and clocked by a 100MHz clock. The Master FPGA (FPGA-1) is

programmed via the parallel port while the Slave FPGA (FPGA-2) is programmed by the Master

FPGA. Chapter 5 describes each component used on the FINI card in detail.

3.3 DUPLICATED ARCHITECTURE

This is the first version of the FINI, and one of the primary concerns is ensuring that all components work correctly and that the design is valid. All projects come with a limited budget, so minimizing costs when possible is desirable. Choosing small component packages when possible reduces the board area consumed. Furthermore, since two FINIs are required to successfully test data transmission and reception, an alternative to producing two FINI boards is to replicate the design on a single PCB. This approach will be taken, and the FINI will be replicated on the back side of the PCB (see Figure 6).

In the duplicated architecture, some components are not replicated because resources from

components on the top side can be shared. One 3.3V power supply is sufficient to support the

duplicated design and is therefore not replicated on the back of the board. The 2.5V power supply

is replicated because the FPGAs run off of this supply and they each consume enough power that

one will not be sufficient. There is only one clock on the board.

For the purposes of programming on-board components, the parallel port is wired to FPGA-1, which serves as the Master FPGA. FPGA-2, FPGA-3 and FPGA-4 are all Slave FPGAs.


[Figure: labeled blocks include the PARALLEL PORT, 2.5V and 3.3V supplies, FPGA-1 through FPGA-4, transmitters XMT-1 and XMT-2, receivers RCV-1 and RCV-2, SRAM, DDS, a 16.6MHz label and two HEADERs]

TDB = Test and Debug Bus

Figure 6. FINI Block Diagram with Duplicated Architecture

3.4 DESIGN GOALS

Engineering is about problem solving. The high-speed signaling network problem in HPC systems, however, has no single fixed answer. Instead, there is an endless number of ways to implement the FINI given the choices in components, advances in technology and design trends. The main purpose behind designing the FINI is to understand and evaluate the technology available on the market today to determine whether it will meet the needs of an HPC system.

3.4.1 Technology Trends

Everything is getting smaller while becoming more powerful. Moore's Law [Moore79] suggests

that the number of devices that can be economically fabricated on a single chip increases

exponentially at a rate of about 50% per year, quadrupling roughly every 3.5 years. Meanwhile, the delay of a simple gate has decreased by 13% a year, halving every 5 years, and chip sizes are increasing by 6% a year. These advances have been made possible by the semiconductor industry scaling gate lengths down from 50 μm in the 1960s to 0.18 μm 40 years later.
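The doubling and halving periods quoted above follow from compound growth. A minimal check, solving (1 + rate)^years = factor for years, gives about 3.4 years for a fourfold increase at 50% per year and about 5.0 years for the gate delay to halve at 13% per year. The function name is hypothetical.

```python
import math


def years_to_scale(annual_rate: float, factor: float) -> float:
    """Years for a quantity changing by `annual_rate` per year to change by `factor`.

    annual_rate is fractional: 0.50 means +50%/year, -0.13 means -13%/year.
    Solves (1 + annual_rate) ** years == factor for years.
    """
    return math.log(factor) / math.log(1.0 + annual_rate)


print(f"devices, +50%/yr, x4:    {years_to_scale(0.50, 4.0):.2f} years")
print(f"gate delay, -13%/yr, /2: {years_to_scale(-0.13, 0.5):.2f} years")
print(f"chip size, +6%/yr, x2:   {years_to_scale(0.06, 2.0):.2f} years")
```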

Advancements in mobile computing and portable devices are driving the miniaturization of ICs

and their packaging while simultaneously providing increased capabilities and consuming less

power. Higher speeds and smaller form factors require tight integration between interconnects

and components. With such improvements in technology, the problems associated with systems-level engineering in high-speed digital systems have become more critical.

3.4.2 Component Choices

The FINI card will be designed on a PCB using COTS components with cables providing the

interconnection medium. Much of FINI's physical performance will therefore depend upon the

capabilities of the chosen components. For instance, the supported bandwidth will be largely

determined by the capabilities of the chosen transmitter and receiver. Because component

availability is often dictated by cost and manufacturing limitations, future versions of the FINI


card should take into consideration alternative choices. Chapter 8 provides some suggestions, but

with more COTS components appearing every day, a thorough industry search is beneficial.

3.4.3 Design Trends

Rapid advancement in VLSI technology and the constant development of new applications keep the digital systems engineering field in a constant state of flux. Due to time constraints and lack of familiarity with new technologies, design methodologies used in industry are often recycled for reuse on future projects. This can lead to a false sense of security. As technology advances, ad hoc approaches that worked in the past become suboptimal and often fail. Because of compatibility problems with existing parts, processes or infrastructure, the result is a product that fails to operate, fails to meet specifications, or is not competitive by industrial standards. Avoiding this pitfall requires an understanding of both the digital and analog aspects of the design.

3.4.4 FINI Challenges

The central design challenge in developing the FINI is avoiding the traps described above. Techniques used for high-speed signaling over long-haul networks are unique and not well documented. Careful selection of components and good implementation of design techniques are vital for the creation of a reliable system.

System-level engineering constrains what an architect can do and is the major determinant of the

cost and performance of the resulting system [DP98]. Each decision along the way will contribute

to the performance and reliability of a system. The next two chapters will discuss the theory

behind the design techniques used and cover the various components used to implement a high-speed signaling network.


CHAPTER 4

HIGH-SPEED INTERCONNECT

The previous chapter introduced the FINI architecture while highlighting some of the challenges

involved. In this chapter, we address the design issues involved with the physical interface linking

two processing nodes together.

4.1 HIGH-SPEED SIGNALING PROBLEM

High-speed cables serve as the interconnect between FINI cards, each associated with an HPC

processing node. The more processing nodes there are in the ARIES HPC system, the farther

apart they may be located from each other. Hence, the FINI cards need to be able to drive signals

traveling over long interconnect lines, up to 10 meters in length, outside the FINI board.

Consequently, the interconnect medium will behave like a transmission line and our signaling


problem becomes a transmission line design problem. A signaling technology that is immune to

noise and that can maintain integrity across such distances is required.

This section reviews topics in transmission line theory and examines signaling technologies that

will be applicable to the design of the interconnect network. The high-speed region on the

network interface occurs both on the FINI board and off the board. The transmission line

phenomenon occurs in both regions, but the primary concern of this work is how the high-speed

cables behave as transmission lines. The signaling technology chosen will be applicable in both

regions. General references are available describing the design of high-speed signals on PCBs

([DP98] [JG93]).

4.2 TRANSMISSION LINES

Under certain conditions, an interconnection ceases to act as a simple pair of wires and behaves

as a transmission line, which has different characteristics. The term "wire" refers to any pair of

conductors used to move electrical energy from one place to another, such as traces with one or

more planes (ground or power), coax cables, twisted pairs, ribbon cables, telephone lines and

power lines. The transmission lines connecting FINI boards occur in the form of twisted-pair

cables. This section describes the conditions under which wires behave as transmission lines,

explains how they are modeled, what the potential side effects are, and how to avoid transmission

line effects.

4.2.1 Definition of High-Speed Signal

The point at which an interconnection ceases to act as a pair of wires and behaves as a

transmission line instead depends upon the length of the interconnection and the highest

frequency component of the signal. A signal is considered high-speed if the line is long enough


such that the signal can change logic levels before the signal can travel to the end of the

conductor. When its edge rate (rise or fall time) is fast enough, the signal can change from one

logic level to the other in less time than it takes for the signal to travel the length of the conductor.

A conservative rule of thumb is that a long interconnection is one whose length is longer than

1/10 of the signal's wavelength [Suth99]. For example, a 1-meter long cable can be treated as a

lumped capacitor at signal frequencies up to 19.8MHz. Useful formulas for such a calculation are

listed below.

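$$\lambda = v \times T = \frac{v}{f}, \qquad v = \frac{c}{\sqrt{\varepsilon_r}}, \qquad f = \frac{v}{\lambda}$$

where λ is the wavelength, v the propagation velocity, T = 1/f the period, c the speed of light and εr the relative dielectric constant. For the 1-meter cable:

$$v = \frac{300\times10^{6}\ \text{m/s}}{\sqrt{2.3}} \approx 198\times10^{6}\ \text{m/s}, \qquad f = \frac{198\times10^{6}\ \text{m/s}}{10\ \text{m}} = 19.8\ \text{MHz}$$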

In the calculations, the distance (the wavelength) is 10 meters because the 1-meter cable length is 1/10th of that wavelength. The relative dielectric constant of the cable (RG-58) is 2.3. Table 1 gives the dielectric constants of some common materials.

Material           Dielectric Constant, εr
Air                1
Cable (RG-58)      2.3
PCB (FR-4)         4
Glass              6
Ceramic            10
Barium titanate    1200

Table 1. Dielectric Constants of Some Common Materials [Suth99]
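The 1/10-wavelength rule and the formulas above can be turned into a small calculator. This is a sketch using the rounded speed of light from the text and dielectric constants from Table 1; the function names are hypothetical. For the RG-58 cable it reproduces the 198×10^6 m/s velocity and the 19.8 MHz limit for a 1-meter run.

```python
import math

C = 3.0e8  # speed of light in vacuum, m/s (rounded as in the text)


def velocity(eps_r: float) -> float:
    """Propagation velocity v = c / sqrt(eps_r), in m/s."""
    return C / math.sqrt(eps_r)


def max_lumped_frequency(length_m: float, eps_r: float, fraction: float = 0.1) -> float:
    """Highest frequency at which length_m is still at most `fraction` of a wavelength."""
    wavelength = length_m / fraction       # 1 m at 1/10 of a wavelength -> 10 m
    return velocity(eps_r) / wavelength    # f = v / lambda


# Dielectric constants from Table 1.
for name, eps_r in [("Air", 1.0), ("Cable (RG-58)", 2.3), ("PCB (FR-4)", 4.0)]:
    f_max = max_lumped_frequency(1.0, eps_r)
    print(f"{name:14s} v = {velocity(eps_r)/1e6:5.1f}e6 m/s, lumped up to {f_max/1e6:4.1f} MHz")
```

A higher dielectric constant slows the signal, which shortens the wavelength and lowers the frequency at which a 1-meter interconnect stops behaving as a lumped capacitor.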


Interconnects that are classified as short-length look like a pair of wires and a capacitor. When the length of the interconnection approaches 1/4 of the wavelength, resonance occurs and it no longer behaves as a lumped capacitor. For this reason, interconnection lengths near 1/4 of the wavelength should be avoided [Suth99].

A more convenient way of determining whether a signal is high-speed is to use its rise time. An interconnection is considered to be at a high frequency when the signal's rise time (tr) is less than twice the flight time (tf), also called the propagation time (tpd), which is the amount of time it takes the signal to reach the end of the interconnect.

$$t_f = \frac{\text{distance}}{\text{velocity}} = \frac{d}{c/\sqrt{\varepsilon_r}} = \frac{1\ \text{m}}{198\times10^{6}\ \text{m/s}} \approx 5.05\ \text{ns}$$

If a signal takes 5.05 nsec to travel one-way down an interconnect, it will take 10.1 nsec to travel

round-trip. This 1-meter cable is considered a transmission line if the rise time is less than 10.1

nsec.
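The rise-time rule can be applied mechanically. The sketch below (hypothetical function names, εr = 2.3 from Table 1) computes the one-way flight time and flags an interconnect as a transmission line when the rise time is shorter than twice that flight time. It reproduces the 5.05 ns figure for 1 meter and shows that the 10-meter cables used between FINI boards have a one-way flight time of about 50 ns.

```python
import math

C = 3.0e8  # speed of light in vacuum, m/s


def flight_time(length_m: float, eps_r: float) -> float:
    """One-way propagation (flight) time t_f = d / (c / sqrt(eps_r)), in seconds."""
    return length_m * math.sqrt(eps_r) / C


def is_transmission_line(rise_time_s: float, length_m: float, eps_r: float) -> bool:
    """Rule from the text: a transmission line if t_r < 2 * t_f."""
    return rise_time_s < 2.0 * flight_time(length_m, eps_r)


for length in (1.0, 10.0):
    t_f = flight_time(length, 2.3)
    print(f"{length:4.0f} m: t_f = {t_f*1e9:5.2f} ns, round trip = {2*t_f*1e9:6.2f} ns")
print(is_transmission_line(2e-9, 1.0, 2.3))   # 2 ns edge on 1 m of cable -> True
print(is_transmission_line(20e-9, 1.0, 2.3))  # 20 ns edge on 1 m of cable -> False
```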

4.2.2 Transmission Line Models

Two models of transmission lines are shown in Figure 7. Transmission lines have four electrical

parameters as shown in the second-order model: resistance along the line, inductance along the

line, conductance shunting the line, and capacitance shunting the line. Although this model is

more accurate, the more common representation is the first-order model because most

transmission line cables and PCB conductors have L and C values that dwarf the R values

[Suth99].


[Figure: ladder models of a transmission line. 2nd-Order Transmission Line: series R and L with shunt G and C in each section. 1st-Order Transmission Line: series L with shunt C.]

Figure 7. First- and Second-Order Models of Transmission Lines

In many cases, resistance is small enough that it can be ignored. Inductance and capacitance per unit length are defined by the geometric shape of the transmission line; they are independent of the actual size and are determined by the ratio of the cross-sectional dimensions. Shunt conductance is almost always negligible because low-loss dielectrics are used.

If the line is short or the rise time of the signal is long, transmission line effects are not significant. In that case, the inductance of the line is negligible and the line can be approximated as a lumped capacitor. The delay is then determined by the impedance of the driver and the capacitance of the line, and it can be decreased by reducing the source resistance of the driver [Bakoglu90].

The inductance becomes important if the line is long, and therefore has a large total inductance, or if the rise times get faster. Transmission line effects then surface, and both the distributed inductance and capacitance must be taken into account.
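For the lumped-capacitor case, the delay's dependence on driver resistance can be illustrated with a single RC stage. This is a sketch under stated assumptions: the driver is modeled as a resistance charging the total line capacitance, the delay is taken at the 50% point of the step response (t = RC·ln 2), and the 100 pF/m capacitance and 0.3 m length are hypothetical values, not FINI measurements.

```python
import math


def lumped_delay(r_source: float, c_per_m: float, length_m: float) -> float:
    """50% step-response delay of a driver resistance into a lumped capacitor.

    V(t) = V_final * (1 - exp(-t / RC)) reaches 50% at t = RC * ln(2).
    Only meaningful when the line is electrically short.
    """
    c_total = c_per_m * length_m
    return math.log(2) * r_source * c_total


C_PER_M = 100e-12   # hypothetical line capacitance, F/m
LENGTH_M = 0.3      # hypothetical short interconnect, m
for r_source in (50.0, 10.0):
    delay_ns = lumped_delay(r_source, C_PER_M, LENGTH_M) * 1e9
    print(f"R = {r_source:4.0f} ohm -> delay = {delay_ns:.2f} ns")
```

Cutting the source resistance by a factor of five cuts this delay by the same factor, which is the effect described above.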


4.2.3 Impedance Discontinuities

Impedance is the hindrance (opposition) to the flow of energy in a transmission line. Impedance

of a line matters when the time that it takes a signal to change levels is short enough that the

transition is completed before the signal has time to reach the far end of the line. The

characteristic impedance of the line is given by the equation:

$$Z_0 = \sqrt{\frac{L}{C}}$$

where L and C are the inductance and capacitance per unit length.
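A short numeric example of the characteristic impedance formula follows. The per-meter inductance and capacitance are hypothetical round numbers. The propagation velocity relation v = 1/√(LC) is the standard lossless-line result and is not stated in the text; it is included to show that the same L and C also fix the signal speed (and hence an effective dielectric constant near the 2.3 listed for cable in Table 1).

```python
import math


def characteristic_impedance(l_per_m: float, c_per_m: float) -> float:
    """Lossless characteristic impedance Z0 = sqrt(L / C), in ohms."""
    return math.sqrt(l_per_m / c_per_m)


def propagation_velocity(l_per_m: float, c_per_m: float) -> float:
    """Lossless propagation velocity v = 1 / sqrt(L * C), in m/s."""
    return 1.0 / math.sqrt(l_per_m * c_per_m)


L_PER_M = 250e-9   # hypothetical inductance per meter, H/m
C_PER_M = 100e-12  # hypothetical capacitance per meter, F/m
z0 = characteristic_impedance(L_PER_M, C_PER_M)
v = propagation_velocity(L_PER_M, C_PER_M)
eps_eff = (3.0e8 / v) ** 2  # effective dielectric constant implied by v
print(f"Z0 = {z0:.1f} ohm, v = {v/1e6:.0f}e6 m/s, eps_eff = {eps_eff:.2f}")
```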

When impedance discontinuities occur, energy from the signal flowing down the line will be

reflected back. This reflection could interfere with the proper operation of circuits connected on

the line. Sources of impedance discontinuities include:

• Trace width changes on a layer,
• Connectors,
• Unmatched loads,
• Open line ends,
• Stubs,
• Changes in dielectric constant,
• Large power plane discontinuities.

To ensure that high-speed signals arrive at their destinations with a minimum amount of distortion, the impedances of all the components in the circuit must be held within a range consistent with the noise margins of the system. The prime area of concern is outlined in Figure 8. Transmission line effects such as reflections and crosstalk occur in high-speed designs when impedance discontinuities occur.


[Figure: two FINI Boards, each with FPGAs, a transmitter (XMT) and a receiver (RCV), joined through connectors by a cable up to 10 meters long; the High-Speed Region is outlined]

Figure 8. High-Speed Region on Network Interface

Transmission line impedance is determined by the geometry of the conductors and the electric

permittivity of the material separating them. Impedance can be determined by the ratio of trace

width to height above ground for printed circuit board traces. For twisted-pair lines, impedance is

dependent upon the ratio of wire diameter to wire separation. Impedance is always inversely

proportional to the square root of electric permittivity. The pertinent impedance equations for

twisted-pairs and microstrips are provided here.

For twisted-pair cables,

$$Z_0\ (\Omega) = \frac{120}{\sqrt{\varepsilon_r}} \ln\!\left(\frac{2s}{d}\right)$$

Figure 9. Twisted-Pair Dimensions

where d is the diameter of the conductor, s is the separation between wire centers and εr is the relative permittivity of the surrounding dielectric, as shown in Figure 9.


For microstrips,

$$Z_0\ (\Omega) = \frac{87}{\sqrt{\varepsilon_r + 1.41}} \ln\!\left(\frac{5.98\,h}{0.8\,w + t}\right)$$

Figure 10. Microstrip Dimensions

where h is the height above ground (in.), w is the trace width (in.), t is the line thickness (in.) and εr is the relative permittivity of the substrate, as shown in Figure 10.
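Both impedance approximations can be evaluated directly. The sketch below implements Z0 = 120/√εr · ln(2s/d) for a twisted pair and Z0 = 87/√(εr + 1.41) · ln(5.98h/(0.8w + t)) for a microstrip. All dimensions are hypothetical examples: the twisted pair uses roughly AWG24-sized conductors at 0.9 mm centers with εr = 2.3, and the microstrip uses the FR-4 value εr = 4 from Table 1. The logarithmic twisted-pair form is an approximation that is most accurate when s is several times d, so the result for a tightly twisted pair should be treated as rough.

```python
import math


def twisted_pair_z0(d: float, s: float, eps_r: float) -> float:
    """Z0 = 120 / sqrt(eps_r) * ln(2s / d), ohms. d and s in the same units.

    Logarithmic approximation; most accurate when s is several times d.
    """
    return 120.0 / math.sqrt(eps_r) * math.log(2.0 * s / d)


def microstrip_z0(h: float, w: float, t: float, eps_r: float) -> float:
    """Z0 = 87 / sqrt(eps_r + 1.41) * ln(5.98 h / (0.8 w + t)), ohms.

    h, w and t must be in the same units (inches in the text).
    """
    return 87.0 / math.sqrt(eps_r + 1.41) * math.log(5.98 * h / (0.8 * w + t))


# Hypothetical twisted pair: 0.51 mm conductors at 0.9 mm centers, eps_r = 2.3.
print(f"twisted pair: {twisted_pair_z0(0.51, 0.9, 2.3):.0f} ohm")
# Hypothetical FR-4 microstrip (inches): h = 0.010, w = 0.016, t = 0.0014.
print(f"microstrip:   {microstrip_z0(0.010, 0.016, 0.0014, 4.0):.0f} ohm")
```

Both functions show the trends stated in the text: impedance rises with conductor separation (or height above ground) and falls as the permittivity increases.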

4.2.4 Skin Effects

At low frequencies, resistance stays constant, but at high frequencies, it grows proportional to the

square root of frequency. When resistance starts increasing, more attenuation (loss) is present in

the wire while maintaining linear phase [JG93]. The series resistance of AWG 24 wires is shown

in Figure 11. At 65 kHz, resistance begins rising as a function of frequency. The increase in

resistance is called the skin effect.


[Figure: log-log plot of series resistance versus frequency (10 Hz to 1 GHz) for AWG24 copper wire]

Figure 11. Skin Effect in AWG24 Copper Versus Frequency

The skin effect frequency is dependent upon the conductor material. Table 2 lists a few

conductors and the frequency at which the skin effect begins to take hold [JG93].

Conductor                          Skin-Effect Frequency
RG-58/U                            21 kHz
AWG24                              65 kHz
AWG30                              260 kHz
0.010 in. width, 2 oz PCB trace    3.5 MHz
0.005 in. width, 2 oz PCB trace    3.5 MHz
0.010 in. width, 1 oz PCB trace    14.0 MHz
0.005 in. width, 1 oz PCB trace    14.0 MHz

Table 2. Skin-Effect Frequencies for Various Conductors
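As a rough cross-check of the round-wire entries in Table 2 (not the method behind the table), the sketch below uses the standard skin-depth formula δ = √(ρ / (π f μ0)) and assumes, as a rule of thumb, that the skin effect takes hold when δ shrinks to the wire radius. The copper resistivity and the nominal AWG diameters are assumed values. The estimates come out near 67 kHz for AWG24 and 270 kHz for AWG30, close to the 65 kHz and 260 kHz listed.

```python
import math

RHO_CU = 1.72e-8         # assumed resistivity of copper, ohm*m
MU_0 = 4e-7 * math.pi    # permeability of free space, H/m


def skin_depth(freq_hz: float) -> float:
    """Skin depth in copper: delta = sqrt(rho / (pi * f * mu0)), in meters."""
    return math.sqrt(RHO_CU / (math.pi * freq_hz * MU_0))


def onset_frequency(radius_m: float) -> float:
    """Frequency at which the skin depth equals the wire radius (rough rule of thumb)."""
    return RHO_CU / (math.pi * MU_0 * radius_m ** 2)


# Assumed nominal bare-conductor diameters, in meters.
WIRE_DIAMETERS = {"AWG24": 0.511e-3, "AWG30": 0.255e-3}
for name, diameter in WIRE_DIAMETERS.items():
    f_on = onset_frequency(diameter / 2)
    print(f"{name}: onset ~ {f_on/1e3:.0f} kHz, skin depth there = {skin_depth(f_on)*1e3:.3f} mm")
```

Because the onset frequency scales with 1/radius², halving the wire diameter (AWG24 to AWG30) raises it by about a factor of four, matching the ratio in Table 2.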


4.2.5 Termination Techniques

A multitude of problems arise when interconnects that behave as transmission lines are not

properly terminated. At the far end of the cable, a fraction of the attenuated signal amplitude

emerges. Additionally, a reflected signal travels back along the cable towards the source.

Reflections cause temporary ringing in the form of voltage oscillations. Larger voltages and

currents radiate larger electric and magnetic fields and transfer more crosstalk energy into

neighboring wires. Terminations are necessary to eliminate reflections and the distortions they would cause in the actual signal.

Common termination schemes include parallel termination and series termination. More

sophisticated termination techniques have been developed which incorporate voltage controlled

output impedance drivers [DeHon93]. Such techniques allow the output impedance to be varied

such that it can be automatically matched to the attached transmission line impedance.
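How well a termination works can be quantified with the voltage reflection coefficient Γ = (ZL − Z0)/(ZL + Z0), a standard result that is not stated in the text. Parallel termination makes ZL equal Z0 at the far end so that Γ = 0; series termination instead adds a source resistor so that the driver's output resistance plus the resistor equals Z0. The 10 Ω driver and the load values below are hypothetical.

```python
import math


def reflection_coefficient(z_load: float, z0: float) -> float:
    """Voltage reflection coefficient at a load: (ZL - Z0) / (ZL + Z0).

    Pass math.inf for an open (unterminated) line end.
    """
    if math.isinf(z_load):
        return 1.0
    return (z_load - z0) / (z_load + z0)


def series_termination(z0: float, r_driver: float) -> float:
    """Series resistor that makes driver output resistance plus resistor equal Z0."""
    if r_driver > z0:
        raise ValueError("driver resistance already exceeds Z0")
    return z0 - r_driver


Z0 = 100.0  # differential line impedance, ohms
for label, z_load in [("matched", 100.0), ("open end", math.inf),
                      ("short", 0.0), ("150 ohm load", 150.0)]:
    print(f"{label:13s} gamma = {reflection_coefficient(z_load, Z0):+.2f}")
print(f"series resistor for a 10 ohm driver on a 50 ohm line: {series_termination(50.0, 10.0):.0f} ohm")
```

An open line end reflects the full incident wave, which is the unterminated case described above.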

4.3 DIFFERENTIAL SIGNALING

When driving signals over a long cable, maintaining signal integrity becomes a challenge because

noise and attenuation effects will most likely corrupt the signal. Differential signals provide a

balanced solution that is capable of dealing with signal degradation over long lines and are

reasonably immune to common mode noise.

4.3.1 Differential Signal Basics

Differential signals are symmetric: as the device switches at output A, the complementary output Ā switches at the same time with the opposite polarity (see Figure 12).

The difference in amplitude between the two signals serves as signal information. If the two


signals are kept under identical conditions, then noise induced on the signals will affect both

signals and the signal information will be unchanged. At high data rates, the use of differential

signals provides increased immunity from noise.
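The noise-cancelling property can be demonstrated numerically. In this sketch the voltage levels and the noise samples are hypothetical, and the noise is assumed to couple identically onto both wires (pure common-mode noise, the ideal case described above). Comparing one wire against a fixed reference misreads several bits, while the difference V(A) − V(Ā) recovers every bit because the common noise subtracts out.

```python
def differential(v_true, v_comp):
    """Differential signal seen by the receiver: V(A) - V(A-bar), per sample."""
    return [a - b for a, b in zip(v_true, v_comp)]


BITS = [1, 0, 1, 1, 0]
V_CM = 1.2      # hypothetical common-mode level, V
SWING = 0.35    # hypothetical differential swing, V
NOISE = [-0.30, 0.25, 0.10, -0.40, 0.20]  # hypothetical noise, identical on both wires

# Complementary outputs: A goes up when A-bar goes down, and vice versa.
v_a = [V_CM + (SWING / 2 if b else -SWING / 2) for b in BITS]
v_a_bar = [V_CM - (SWING / 2 if b else -SWING / 2) for b in BITS]

# The same (common-mode) noise couples onto both wires of the pair.
noisy_a = [v + n for v, n in zip(v_a, NOISE)]
noisy_a_bar = [v + n for v, n in zip(v_a_bar, NOISE)]

single_ended = [1 if v > V_CM else 0 for v in noisy_a]  # one wire vs. a fixed reference
diff = [1 if d > 0 else 0 for d in differential(noisy_a, noisy_a_bar)]
print("sent:        ", BITS)
print("single-ended:", single_ended)
print("differential:", diff)
```

If the noise on the two wires were not identical (for example, because of skew), the cancellation would be incomplete, which is the concern taken up next.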

[Figure: a logic gate driving a "True" output A and a "Complement" output Ā]

Figure 12. Differential Signals

The maximum immunity from noise and EMI occurs when both signals switch simultaneously.

When there is a time shift between true and complementary signals, a differential skew occurs as

shown in Figure 13.

[Figure: waveforms A and Ā offset in time by the differential skew]

Figure 13. Differential Skew


As the signal travels down the transmission line, noise generated by one side of the differential

pair can be effectively cancelled by the other side if the lines are closely coupled as with a twisted

pair or shielded pair. This cancellation depends on the simultaneous switching of the signals at

the driver as well as all along the transmission line where the signal propagates. Therefore, the

transmission media should be designed to minimize skew between differential lines.

Skew also occurs if the two wires in the twisted-pair are not of equal length. When one side

receives the signal early, the total differential signal will look like a sloped staircase in the input

transition region as shown in Figure 14. This can lead to timing uncertainty or jitter at the receiver

output. The overall skew will be a function of the skew characteristics of the transmission lines

and the length of the interconnect. Shorter, matched-length twisted-pairs and simultaneous

switching will minimize skew.
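The skew produced by unequal wire lengths follows from the propagation velocity: a length mismatch ΔL gives a skew of ΔL·√εr / c. The sketch below assumes εr = 2.3 for the cable (Table 1) and compares the result with one LVDS bit time, taken here as the reciprocal of the 672 Mbps per-pair rate given in Section 5.1.1. The mismatch values are hypothetical. A 1 cm mismatch gives about 50 ps.

```python
import math

C = 3.0e8  # speed of light in vacuum, m/s


def length_mismatch_skew(delta_length_m: float, eps_r: float) -> float:
    """Skew (s) between the two wires of a pair whose lengths differ by delta_length_m."""
    return delta_length_m * math.sqrt(eps_r) / C


BIT_TIME = 1 / 672e6  # assumed LVDS bit time at 672 Mbps per pair
for mismatch_mm in (1, 5, 10):
    skew = length_mismatch_skew(mismatch_mm / 1000, 2.3)
    print(f"{mismatch_mm:2d} mm mismatch -> {skew*1e12:5.1f} ps "
          f"({100 * skew / BIT_TIME:.1f}% of a bit time)")
```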

[Figure: receiver input waveform of the differential signal, with a stepped transition caused by skew]

Figure 14. Effect of Skew on Receiver Input Waveform

At the receiving end, the differential receiver compares the two signals to determine their logic

polarity. It ignores any common mode noise that may occur on both sides of the differential pair.

To optimize this noise canceling effect, the coupled noise must stay in phase. When the noise

does not arrive at the receiver simultaneously, noise rejection will not occur and some noise

remains on the signal.


4.3.2 LVDS

Low voltage differential signaling (LVDS) is a data communication standard (ANSI/TIA/EIA-644, IEEE 1596.3) using very low voltage swings over two differential PCB traces or a balanced

cable. It is a means of achieving a high performance solution that consumes minimum power,

generates little noise, is relatively immune to noise and is inexpensive. LVDS has the flexibility

of being implemented in CMOS, GaAs or other applicable technologies while running at 5V,

3.3V or even sub-3V supplies.

The LVDS transmission medium must be terminated to its characteristic differential impedance to

complete the current loop and terminate high-speed signals. The signal will reflect from the end

of the cable and interfere with succeeding signals if the medium is not properly terminated. When

two independent 50 Ω traces are coupled into a 100 Ω differential transmission line, a 100 Ω

terminating resistor is placed across the differential signal lines as close as possible to the receiver

input as shown in Figure 15. This helps to prevent reflections and reduce unwanted

electromagnetic emissions.
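Because an LVDS driver is a current source, the termination resistor also sets the voltage the receiver sees. This sketch assumes a typical loop current of 3.5 mA and a receiver threshold magnitude of 100 mV; neither value is given in this section, and both should be checked against the chosen parts' datasheets. With the 100 Ω resistor from the text the differential swing is 350 mV.

```python
def differential_voltage(i_drive: float, r_term: float) -> float:
    """Voltage developed across the termination by a current-mode LVDS driver."""
    return i_drive * r_term


I_DRIVE = 3.5e-3   # assumed typical LVDS loop current, A
R_TERM = 100.0     # termination resistor from the text, ohms
V_THRESHOLD = 0.1  # assumed receiver threshold magnitude, V

v_od = differential_voltage(I_DRIVE, R_TERM)
print(f"differential swing = {v_od*1e3:.0f} mV, margin = {(v_od - V_THRESHOLD)*1e3:.0f} mV")
```

The margin between the swing and the threshold is what absorbs attenuation and residual noise along the cable.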

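[Figure: an LVDS driver connected to a receiver over a pair of 50 ohm lines, with the termination resistor across the pair at the receiver input]

Figure 15. LVDS Point-to-Point Configuration with Termination Resistor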

4.4 TRANSMISSION MEDIA

To transmit signals from one FINI board to another, the driver or transmitter must send out LVDS

signals which travel through a connector and out onto the cable. At the other end, these signals


enter a connector on the board before reaching the receiver. Design of the transmission media

requires three sets of components: a transmitter/receiver pair, connectors and cables. This section

describes selection criteria used to determine the most appropriate media to attain the desired

results.

4.4.1 Transmitter/Receiver Pair

Transmitters and receivers typically come in pairs with complementary functions. The requirements of these chips are:

• TTL-to-LVDS and LVDS-to-TTL conversion
• 3.3V power supply

Signals on the FINI card are TTL and need to be converted to LVDS before being transmitted across the cable. To minimize power conversion, the maximum power supply allotted is 3.3V. In addition, the transmitter/receiver pair's specifications should be optimized on these features:

• Large number of LVDS output signals
• Capability to minimize skew effects.

The more LVDS output signals provided, the greater the bandwidth of the FINI card. The number

of output signals will determine the cable and connector size necessary. To ensure signal

integrity, the receiver should have enhanced capabilities dealing with skew and noise effects.

Additional serializing techniques allow easy management of shorter on-board signals.

4.4.2 Cable

The requirements of the cable used for transmitting signals are:

• Symmetrical or balanced transmission lines
• Availability in variable lengths

Other factors to consider are:

• Minimal skew within pairs and between pairs of wires
• Ability to match board and cable impedance.

The high-performance cables connecting the FINI cards can be anywhere from 2 to 10 meters in

length. When signaling over a long external cable, the return impedance is too high to be ignored.

In this case, shielded twisted-pair cables are used as the transmission medium to provide a

balanced configuration where the return impedance equals the signal impedance. In a balanced

cable, the signal flows out along one wire and back along the other. Proper matching of

differential signals is achieved using balanced signals.

[Figure: first and second conductors, each in a dielectric jacket, twisted together]

Figure 16. Twisted-Pair Cable

When choosing a cable assembly, it must have a sufficient number of twisted-pairs to support the

bandwidth of the transmitter/receiver chips. The cable specifications will indicate the amount of

skew between differential pairs. Cables available in a variety of lengths are necessary for

the ARIES HPC system.


4.4.3 Connector

Connectors serve as the interface between the electrical and mechanical parts of a design. Their

reliability often determines the overall reliability of the system. The first requirement of the

connector is that it matches the cable and has the required number of pins. The more important

considerations are those concerning signal integrity. The connector represents the least shielded

and highest impedance discontinuity portion of the signal path in the FINI high-speed

interconnect region. Differential signals running through a connector should be placed on

adjacent pins. This will allow their returning signal current paths to overlap and cancel. The

associated traces on the PCB should be kept close together as well.


CHAPTER 5

COMPONENT SPECIFICATION

With an endless supply of COTS components on the market, how does an engineer decide which

one to use in a design? Even a component as seemingly simple as a capacitor comes in a

multitude of variations. The physical performance of the FINI depends greatly on the chosen

components. The critical components used on the FINI are described in the following sections.

5.1 LVDS TRANSMITTER AND RECEIVER

The main function of FINI is to ensure the reliable and accurate transmission and reception of

data. To successfully transmit high-speed digital data over very long distances, a performance

solution that consumes minimal power and is immune to noise is necessary.


5.1.1 DS90C387/DS90CF388

Data transmission and reception on the FINI card are accomplished using a high-speed point-to-point cabled data link chipset from National Semiconductor. The DS90C387/DS90CF388

transmitter/receiver pair was originally designed for data transmission between a host and flat

panel display but its capabilities suit the FINI application well. It converts 48 bits of CMOS/TTL

data into 8 LVDS (Low Voltage Differential Signaling) data streams at a maximum dual pixel

rate of 112 MHz or vice versa. The LVDS bit rate is 672 Mbps per differential pair providing a

total throughput of 5.38Gbps.
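The throughput figures are consistent with each other: 48 input bits per clock at 112 MHz is 5.376 Gbps, and spread over 8 LVDS pairs that is 672 Mbps, or 6 payload bits per pair per clock. This sketch assumes the quoted throughput counts only payload bits; the extra DC Balance bit described in Section 5.1.3 is not included.

```python
PIXEL_CLOCK_HZ = 112e6  # maximum dual pixel rate
INPUT_BITS = 48         # CMOS/TTL input bits per clock
LVDS_PAIRS = 8          # LVDS data streams

total_bps = INPUT_BITS * PIXEL_CLOCK_HZ
per_pair_bps = total_bps / LVDS_PAIRS
bits_per_pair_per_clock = INPUT_BITS // LVDS_PAIRS

print(f"total = {total_bps/1e9:.3f} Gbps, per pair = {per_pair_bps/1e6:.0f} Mbps, "
      f"{bits_per_pair_per_clock} payload bits per pair per clock")
```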

5.1.2 Decision Factors

An LVDS chipset was chosen because LVDS outperforms single-ended solutions: it supports higher data rates, consumes less power and is less susceptible to common-mode noise, all at lower cost. The DS90C387/DS90CF388 also offers greater bandwidth than standard LVDS line drivers and receivers [National97]. With 8 LVDS channels, it provides at least twice the bandwidth available from general-purpose drivers and receivers.
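A toy model makes the noise-immunity argument concrete. A differential receiver decides on V+ minus V-, so noise coupled equally onto both wires of a pair cancels. A single-ended receiver compares one wire against a fixed threshold and has no such protection. The model makes three assumptions:

- The swing and offset values are illustrative, roughly typical LVDS levels, not DS90CF388 specifications.
- The noise amplitude is deliberately exaggerated.
- The noise is perfectly common-mode.

```python
import random

SWING = 0.35   # assumed differential swing in volts (illustrative only)
OFFSET = 1.2   # assumed common-mode offset in volts (illustrative only)


def drive(bit):
    """Return the (V+, V-) wire voltages for one transmitted bit."""
    half = SWING / 2 if bit else -SWING / 2
    return OFFSET + half, OFFSET - half


def receive_differential(v_plus, v_minus):
    """Decide on the sign of V+ - V-; noise common to both wires cancels."""
    return 1 if v_plus - v_minus > 0 else 0


def receive_single_ended(v_plus):
    """Compare one wire against a fixed threshold; common noise is not cancelled."""
    return 1 if v_plus > OFFSET else 0


random.seed(0)
diff_errors = single_errors = 0
for _ in range(1000):
    bit = random.randint(0, 1)
    v_plus, v_minus = drive(bit)
    noise = random.uniform(-1.0, 1.0)   # identical noise coupled onto both wires
    diff_errors += receive_differential(v_plus + noise, v_minus + noise) != bit
    single_errors += receive_single_ended(v_plus + noise) != bit
print("differential errors:", diff_errors, "single-ended errors:", single_errors)
```

Real noise is never perfectly common-mode, which is one reason the text also recommends keeping the two wires of a pair physically close.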

Longer cables increase the potential for differential skew and cable loading effects. The DS90C387 is equipped with a pre-emphasis feature that adds extra current during LVDS logic transitions to reduce cable loading effects. This helps reduce the jitter in the signal seen by the receiver and minimizes problems associated with high-frequency signal attenuation.

Cable lengths will vary from 2 meters to 10 meters. At such lengths, the pair-to-pair skew is potentially quite significant. The DS90CF388 has a built-in deskew capability that can correct long-cable pair-to-pair skews of up to +/-1 LVDS data bit time. This increases the skew tolerance allotted to the cables and decreases the potential bit error rate. Available for less than $10 a chip, this National Semiconductor chipset provides ample capabilities at a low cost.
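The +/-1 bit-time deskew range is easier to judge in nanoseconds. At 672 Mbps per pair, one LVDS bit lasts 1/(672 MHz), about 1.49 ns. The sketch below computes that window and checks a pair-to-pair skew against it. The skew values tried are hypothetical examples, not measured FINI cable data.

```python
LVDS_BIT_RATE = 672e6  # bit/s per differential pair at the 112 MHz pixel rate


def bit_time_ns(bit_rate):
    """Duration of one LVDS bit, in nanoseconds."""
    return 1e9 / bit_rate


def within_deskew(skew_ns, bit_rate=LVDS_BIT_RATE):
    """True if a pair-to-pair skew lies inside the +/-1 bit-time deskew range."""
    return abs(skew_ns) <= bit_time_ns(bit_rate)


print(f"deskew window: +/-{bit_time_ns(LVDS_BIT_RATE):.2f} ns")
for skew in (0.5, 1.2, 2.0):  # hypothetical skews in ns
    print(skew, "ns ->", "correctable" if within_deskew(skew) else "exceeds window")
```

Because the window is one bit time, it widens in absolute terms at lower pixel clocks. With 6 bits per pair per clock, a 56 MHz clock gives 336 Mbps and a window of roughly +/-2.98 ns.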

5.1.3 Functionality of DS90C387/DS90CF388

The transmitter will receive input from the FPGA and send out LVDS signals onto the high

performance cables connecting the two FINI boards. At the other end, the receiver will convert

the LVDS signals back into CMOS/TTL data that is then passed on to another FPGA. For the

FINI card, the transmitters and receivers are configured for dual pixel applications by setting the

DUAL pin on the DS90C387 to Vcc. The DC Balance feature sends an extra bit along with the

pixel and control information to minimize the short- and long-term DC bias on the signal.

Cable loading effects are reduced by using the pre-emphasis feature built into the DS90C387.

Additional current is added during LVDS logic transitions by applying a DC voltage at the PRE

pin (pin 14). The required voltage is dependent upon both the frequency and the cable length. A

higher input voltage increases the magnitude of dynamic current during data transition. A pull-up

resistor from the PRE pin to Vcc sets the DC level; Tables 3 and 4 give the appropriate values. On the FINI board, a potentiometer takes the place of this pull-up resistor so that the PRE voltage can be adjusted.

RPRE          Resulting PRE Voltage   Effects
1 MΩ or NC    0.75 V                  Standard LVDS
50 kΩ         1.0 V
9 kΩ          1.5 V                   50% pre-emphasis
3 kΩ          2.0 V
1 kΩ          2.6 V
100 Ω         Vcc                     100% pre-emphasis

Table 3. Pre-emphasis DC Voltage Level with RPRE [National99]


Frequency   PRE Voltage   Typical Cable Length
112 MHz     1.0 V         2 meters
112 MHz     1.5 V         5 meters
80 MHz      1.0 V         2 meters
80 MHz      1.2 V         7 meters
65 MHz      1.5 V         10 meters
56 MHz      1.0 V         10 meters

Table 4. Pre-emphasis Needed per Cable Length [National99]
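Tables 3 and 4 can be combined into a simple selection procedure. First look up the PRE voltage listed for the operating frequency and cable length, then pick a pull-up from Table 3 that reaches that voltage. The sketch below encodes both tables as given, with these assumptions:

- Using the shortest listed cable at least as long as the actual one is an illustrative choice, not a datasheet procedure.
- Taking the lowest Table 3 voltage that meets the target is likewise an illustrative choice.
- A 3.3 V supply is assumed for the Vcc row.

On the FINI, the potentiometer would then be trimmed around the chosen value.

```python
# Table 4: (pixel clock in MHz, PRE voltage in V, typical cable length in m)
TABLE_4 = [
    (112, 1.0, 2), (112, 1.5, 5),
    (80, 1.0, 2), (80, 1.2, 7),
    (65, 1.5, 10),
    (56, 1.0, 10),
]

VCC = 3.3  # assumed supply voltage, used for the 100-ohm (PRE = Vcc) row

# Table 3: (pull-up from PRE pin to Vcc, resulting PRE voltage in V),
# ordered by increasing voltage.
TABLE_3 = [
    ("1 Mohm or NC", 0.75), ("50 kohm", 1.0), ("9 kohm", 1.5),
    ("3 kohm", 2.0), ("1 kohm", 2.6), ("100 ohm", VCC),
]


def pre_voltage(freq_mhz, cable_m):
    """Table 4 voltage for the shortest listed cable >= cable_m, or None if not covered."""
    rows = [(length, volts) for f, volts, length in TABLE_4
            if f == freq_mhz and length >= cable_m]
    return min(rows)[1] if rows else None


def pull_up_for(target_v):
    """First Table 3 row whose resulting voltage meets or exceeds the target."""
    for label, volts in TABLE_3:
        if volts >= target_v:
            return label, volts
    return None


for freq, length in [(112, 2), (112, 4), (80, 7), (65, 10), (112, 10)]:
    v = pre_voltage(freq, length)
    print(freq, "MHz,", length, "m ->", v, "V",
          pull_up_for(v) if v is not None else "(not covered by Table 4)")
```

The last case shows a gap in Table 4: no 112 MHz entry covers a 10 m cable, which is consistent with the table listing lower clock rates for the longest cables.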

5.2 PROCESSORS

The FINI architecture is designed both to serve as the interconnect solution for HPC systems and to be a platform for evaluating on-board components. Given this dual purpose, the FINI card requires enough flexibility to implement test code and various networking protocols. This can be achieved by using a programmable device as the core processor on board.

5.2.1 Virtex XCV100-6PQ240C

The duplicated architecture of the FINI card employs four Xilinx Virtex FPGAs, the XCV100-6PQ240C, as the core processors on board. These 2.5 V field programmable gate arrays consist of 108,904 gates, 2,700 logic cells and 180 I/O pins [Virtex99]. Initially, the FPGAs will facilitate the testing process by helping determine the signal quality on board. In the long run, implementing the METRO protocol in FPGAs will permit iterations of the networking protocol to achieve maximum throughput and minimum expected latency.

Each XCV100-6PQ240C is associated with either a transmitter or a receiver on the FINI card and controls the data flowing into and out of that chip. Traffic through the FPGAs can originate from either the associated memory chip or the processor node in the FPS context. For the purposes of validating the FINI design, however, random data will be generated by a computer, since the FPS is not available at the time of writing.

5.2.2 Decision Factors

The Virtex family of products from Xilinx Corp. offers full-featured high-end FPGAs designed

and manufactured using the latest technology. FPGAs no longer serve only as glue logic, but are

often integral system components. With four full digital delay locked loops, these FPGAs are

capable of system clock generation, synchronization and distribution. With more embedded

SRAM than previous generations of FPGAs, these chips offer a high-speed flexible design

solution that reduces the overall design cycle time required of an engineer. The capabilities of a

Virtex FPGA make it an ideal choice for the core processors on the FINI card.

Of the various Virtex FPGAs, the XCV100-6PQ240C was chosen due to cost and availability

constraints. Another factor of consideration was the number of I/O pins. The main components

interfacing with the FPGA are the transmitter/receiver and an external SRAM. At component

selection time, 180 pins were determined to be sufficient. This will be further evaluated at the

conclusion of the design.

5.2.3 Programming the XCV100-6PQ240C

Of the many ways the Xilinx XCV100-6PQ240C can be programmed, SelectMAP mode is the fastest and will be used to program the four FPGAs on the FINI card. In SelectMAP mode, an 8-bit bidirectional data bus (D0-D7) is used to write to or read from the FPGA. To configure the FPGA in SelectMAP mode, the configuration mode pins (M2, M1 and M0) need to be set according to Table 5.


Configuration Mode   M2   M1   M0   CCLK Direction   Data Width   Serial Dout   Pre-configuration Pull-ups
SelectMAP Mode       1    1    0    In               8            No            No

Table 5. SelectMAP Mode Configuration Code [Virtex99]

Once the FPGA is powered up, the configuration memory needs to be cleared before configuration may take place. /PROG is held at a logic Low for a minimum of 300 ns (no maximum) to reset the configuration logic and hold the FPGA in the clear-configuration-memory state. While the configuration memory is being cleared, /INIT is held Low; /INIT is not released until clearing is complete. With the configuration memory cleared, the configuration mode is selected and then configuration commences.
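The clearing sequence can be summarized as a host-side routine. This sketch is hypothetical. The `FakeSelectMapPins` class stands in for whatever actually drives the pins, which on the FINI is the PC parallel port for FPGA-1. Only three details come from the text and Table 5: the order of events, the 300 ns minimum /PROG pulse, and the SelectMAP mode code M2 M1 M0 = 1 1 0.

```python
import time

SELECTMAP_MODE = (1, 1, 0)   # M2, M1, M0 for SelectMAP mode (Table 5)
PROG_LOW_MIN_S = 300e-9      # /PROG must stay Low at least 300 ns (no maximum)


class FakeSelectMapPins:
    """Hypothetical stand-in for a real pin driver such as a parallel-port wrapper."""

    def __init__(self, clear_polls=3):
        self.mode = None
        self.prog_n = 1
        self._clear_polls = clear_polls  # polls for which /INIT stays Low after /PROG rises

    def set_mode(self, m2, m1, m0):
        self.mode = (m2, m1, m0)

    def set_prog_n(self, level):
        self.prog_n = level

    def read_init_n(self):
        if self.prog_n == 0:
            return 0                     # held in the clear-configuration-memory state
        if self._clear_polls > 0:
            self._clear_polls -= 1
            return 0                     # configuration memory still being cleared
        return 1                         # clearing finished: /INIT released


def clear_configuration(pins, timeout_s=0.1):
    """Reset configuration logic; return True once /INIT goes High, False on timeout."""
    # Drive (or strap) the mode pins first so they are already valid when
    # the mode is selected after clearing.
    pins.set_mode(*SELECTMAP_MODE)
    pins.set_prog_n(0)                   # assert /PROG Low
    time.sleep(PROG_LOW_MIN_S)           # OS sleeps overshoot; only a minimum is specified
    pins.set_prog_n(1)                   # release /PROG
    deadline = time.monotonic() + timeout_s
    while pins.read_init_n() == 0:       # wait for /INIT to be released
        if time.monotonic() > deadline:
            return False
    return True


pins = FakeSelectMapPins()
print("ready for configuration data:", clear_configuration(pins))
```

A timeout is included because a device that never releases /INIT would otherwise hang the host.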

[Figure 17: FPGA-2, FPGA-3 and FPGA-4 share the D[0:7], CCLK, /WRITE, BUSY, /PROG, DONE and /INIT lines; each device is selected by its own chip select (/CS2, /CS3, /CS4).]

Source: XAPP138 (v2.0)

Figure 17. Diagram of Parallel Programming FPGAs in SelectMAP Mode [Xilinx00]

Virtex FPGAs cannot be daisy-chained in SelectMAP mode, but SelectMAP mode provides an alternative by allowing multiple FPGAs to be connected in a parallel chain (see Figure 17). The data pins (D0-D7), CCLK, /WRITE, BUSY, /PROG, DONE and /INIT are shared in common among all FPGAs. The /CS input is kept separate so that each FPGA may be accessed individually. Ideally, all four FPGAs would be arranged in this fashion and programmed by the computer via the parallel port. Because the parallel port has insufficient pins, however, only FPGA-1 will be programmed this way. The other three FPGAs will be connected in parallel and programmed by FPGA-1 (hence called the Master FPGA) in the manner just described, using three distinct chip select pins.
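In the parallel chain, a byte on the shared bus is accepted only by the device whose own /CS is Low. A small model shows how FPGA-1 could target FPGA-2, FPGA-3 and FPGA-4 in turn over the same D[0:7] lines. The class, function and bitstream contents are hypothetical, and BUSY is ignored here for brevity.

```python
class SlaveFpga:
    """Hypothetical model of one slave FPGA on the shared SelectMAP bus."""

    def __init__(self, name):
        self.name = name
        self.received = bytearray()

    def clock_edge(self, data, cs_n, write_n):
        # Only the device whose own /CS is Low (with /WRITE Low) takes the byte.
        if cs_n == 0 and write_n == 0:
            self.received.append(data)


# D[0:7], CCLK and /WRITE reach every slave; /CS is separate per device.
SLAVES = {name: SlaveFpga(name) for name in ("FPGA-2", "FPGA-3", "FPGA-4")}


def drive_shared_bus(target, data):
    """Put one byte on the shared bus with only the target's /CS asserted Low."""
    for name, fpga in SLAVES.items():
        fpga.clock_edge(data, cs_n=0 if name == target else 1, write_n=0)


# The Master programs each slave in turn; these bitstreams are dummy placeholders.
bitstreams = {"FPGA-2": b"\x12\x34", "FPGA-3": b"\x56", "FPGA-4": b"\x78\x9a"}
for target, stream in bitstreams.items():
    for byte in stream:
        drive_shared_bus(target, byte)

for name, fpga in SLAVES.items():
    print(name, fpga.received.hex())
```

Every slave sees every byte. The separate /CS lines alone decide which bitstream ends up in which FPGA.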

Configuration data is written to the data bus D[0:7], while readback data is read from the data bus. The bus direction is controlled by /WRITE. When /WRITE is Low, data is written into the FPGA over the bus; when /WRITE is High, data is read from the FPGA over the bus. Data flow is controlled by the BUSY signal. When BUSY is Low, the FPGA reads the data bus on the next rising CCLK edge at which both /CS and /WRITE are asserted Low. Data on the bus is ignored if BUSY is High. In that case, the same data must be presented again on the next rising CCLK edge at which BUSY is Low for it to be read. If /CS is not asserted, BUSY is tri-stated. All reads and writes on the data bus for configuration and readback are synchronized to CCLK, which can be generated externally or driven by a free-running oscillator.
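The BUSY rule amounts to a simple handshake: the writer holds the same byte on D[0:7] until a rising CCLK edge at which BUSY is Low, and only then moves on to the next byte. The cycle-level sketch below uses a hypothetical device model whose BUSY pattern is arbitrary. Real BUSY behavior depends on the device and the CCLK frequency.

```python
class SelectMapTarget:
    """Hypothetical device: on each rising CCLK edge it reads the bus only if BUSY is Low."""

    def __init__(self, busy_pattern):
        self.busy_pattern = busy_pattern  # BUSY level on successive edges (repeats)
        self.edge = 0
        self.accepted = bytearray()

    def busy(self):
        return self.busy_pattern[self.edge % len(self.busy_pattern)]

    def rising_edge(self, data, cs_n, write_n):
        """Return True if the byte on the bus was read on this edge."""
        was_busy = self.busy()
        self.edge += 1
        if cs_n == 0 and write_n == 0 and not was_busy:
            self.accepted.append(data)
            return True
        return False                      # byte ignored: writer must present it again


def write_bitstream(target, bitstream):
    """Present each byte until it is accepted; return the number of CCLK edges used."""
    edges = 0
    for byte in bitstream:
        accepted = False
        while not accepted:               # hold the byte while BUSY is High
            accepted = target.rising_edge(byte, cs_n=0, write_n=0)
            edges += 1
    return edges


target = SelectMapTarget(busy_pattern=[0, 0, 1])  # BUSY High on every third edge
edges = write_bitstream(target, bytes(range(6)))
print(target.accepted.hex(), "in", edges, "edges")
# prints: 000102030405 in 8 edges
```

Each BUSY-High edge costs one extra CCLK cycle, but no byte is lost or duplicated.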

5.2.4 FPGA-1 (Master)

The Master FPGA is responsible for programming FPGA-2, FPGA-3 and FPGA-4. The procedure for programming the slave FPGAs is virtually the same as that for programming the Master FPGA. In this case, circuitry within FPGA-1 serves the function of the