Lecture 13: “Confuse/Match” Games (II)

CSE 533: The PCP Theorem and Hardness of Approximation

(Autumn 2005)

Nov. 14, 2005

Lecturer: Ryan O’Donnell

Scribe: Ryan O’Donnell and Sridhar Srinivasan

1 Reminders from Lecture 12

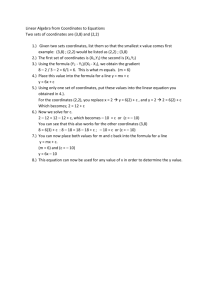

Our goal is to show the following theorem:

Theorem 1.1. Let $s < 1$ be a constant. Suppose $G$ is a “confuse/match”-style game with $\omega(G) \le s$. Then if $k = \mathrm{poly}(1/\epsilon)$, $\omega(G^k) < \epsilon$.

If $G$ is repeated in parallel $\mathrm{poly}(1/\epsilon)$ times, then with overwhelming probability (as a function of $\epsilon$) there will be $\mathrm{poly}(1/\epsilon)$ many “confuse rounds” and $\mathrm{poly}(1/\epsilon)$ many “match rounds”. These rounds will be randomly ordered. Further, it only helps the provers if we fix the questions in some rounds and tell them everything chosen in those rounds. For all of these reasons, it suffices (and indeed is equivalent) to prove the following theorem:

Theorem 1.2. Let $s < 1$ be constant. Let $C = (1/\epsilon)^{39}$, $m = (1/\epsilon)^{11}$, and write $C' = C + m$. Given any 2P1R game $G$ (with the projection property), let $G'$ be the game with $C'$ parallel rounds, in which $C$ confuse rounds of $G$ and $m$ match rounds of $G$ are played, in a random order. Then $\omega(G') \le \epsilon$.

To prove this theorem we will refer to the following two theorems proved in the last class:

Fact 1.3. Let $X$ be a set and $\gamma$ be a probability distribution on $X$. Let $f : X^{C'} \to \{0, 1\}$, $C' \ge 1$, where we think of $X^{C'}$ as having the product probability distribution $\gamma^{C'}$. Let

$$\mu = \Pr_{\vec{q} \leftarrow \gamma^{C'}}[f(\vec{q}) = 1].$$

Suppose we pick $i \in [C']$ at random and then pick $q_i \leftarrow \gamma$ at random. Let

$$\tilde{\mu} = \tilde{\mu}_{i,q_i} = \Pr[f \mid i, q_i].$$

Then

$$\Pr_{i,q_i}\left[\,|\tilde{\mu} - \mu| \ge 1/\sqrt[3]{C'}\,\right] \le 1/\sqrt[3]{C'}.$$
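As a sanity check on the statement of Fact 1.3, the following minimal sketch evaluates both sides exactly on two toy functions. It assumes $X = \{0, 1\}$ with $\gamma$ uniform and a small illustrative dimension $n = 9$ in place of $C'$; the function names are hypothetical and not from the lecture.

```python
from fractions import Fraction
from itertools import product

def deviation_probability(f, n):
    """Return (mu, Pr over (i, q_i) that |mu_tilde - mu| >= n**(-1/3)).

    Assumes X = {0, 1} with gamma uniform, so (i, q_i) is uniform over 2n pairs.
    """
    points = list(product((0, 1), repeat=n))
    mu = Fraction(sum(f(x) for x in points), len(points))
    threshold = n ** (-1 / 3)
    bad = 0
    for i in range(n):
        for b in (0, 1):
            # Condition on coordinate i receiving the question b.
            restricted = [x for x in points if x[i] == b]
            mu_tilde = Fraction(sum(f(x) for x in restricted), len(restricted))
            if abs(mu_tilde - mu) >= threshold:
                bad += 1
    return mu, Fraction(bad, 2 * n)

def majority(x):
    return int(2 * sum(x) > len(x))

def dictator(x):
    return x[0]

n = 9
mu_maj, p_maj = deviation_probability(majority, n)
mu_dic, p_dic = deviation_probability(dictator, n)
print(mu_maj, p_maj, mu_dic, p_dic)
```

For majority, no single fixed question moves the mean by as much as the threshold. For the dictator function, only the two pairs with $i$ equal to the dictator coordinate do, so the deviation probability stays below $1/\sqrt[3]{n}$ in both cases.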

Fact 1.4. Let $R \subseteq [C']$ be any nonempty set, and $P : Q^{C'} \to A^{C'}$. Suppose $i$ and $q_i$ are chosen randomly as in Fact 1.3; then

$$0 \le \mathbf{E}_{i,q_i}[\mathrm{Predictability}_R(P \mid i, q_i)] - \mathrm{Predictability}_R(P) \le 1/C'.$$

Remark 1.5. Note that the above two facts are lemmas about general functions; i.e., they have

nothing to do with 2P1R games.

2 Intuition for the proof

Let $P : Q^{C'} \to A^{C'}$ be the strategy of Prover 2. The key component of our overall proof is codifying the intuition that $P_2$’s strategy is either “mostly serial” or “highly dependent on many coordinates”. (Note that this key theorem still has nothing to do with games; it is just a theorem about the structure of prover strategies.) To prove a rigorous statement along these lines takes some work. Roughly speaking, our key theorem will say something like the following:

High-level version of the key theorem. Suppose we pick a block of coordinates $R \subseteq [C']$ and the questions for that block, $\vec{q}_R$, randomly. Then either:

• It’s very likely (over the choice of $R$, $\vec{q}_R$) that $P$’s answers on the coordinates of $R$ are highly unpredictable (over the choice of the remaining questions). OR,

• [There’s a decent chance that $P$’s answers on $R$ are decently predictable, BUT. . . ] Conditioned on any plausible set of answers in the coordinates of $R$, $P$ is forced into a highly serial strategy on the remaining coordinates $[C'] \setminus R$.

Once we make this statement rigorous, it is not too hard to use it to show that the provers cannot succeed in $G'$ with probability more than $\epsilon$.

3 Finding a “good block size”

Fix once and for all the strategy of Prover 2, $P_2 : Q^{C'} \to A^{C'}$. (We have switched notation, $Q$ instead of $Y$.) Our first task is to determine a “good block size” $r^*$ for the block $R$ discussed in the previous section on intuition. This will be a number between 1 and $m/2$. To find $r^*$, we consider the following “thought experiment” regarding $P_2$:

We imagine filling in up to $m/2$ questions in $Q^{C'}$ at random, one by one. I.e., we pick $i_1, i_2, \ldots, i_{m/2}$ at random from $[C']$ (all distinct) and also $q_{i_1}, q_{i_2}, \ldots, q_{i_{m/2}}$, independently from $\gamma$, $P_2$’s natural distribution on questions (inputs). Given $1 \le r \le m/2$, let $R_r$ denote the (random) set $\{i_1, \ldots, i_r\}$, and write $\vec{q}_{R_r}$ for the associated questions. We now look at the following Predictabilities:

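(1) $\mathrm{Predictability}_{R_1}(P_2 \mid R_1, \vec{q}_{R_1})$

(1') $\mathrm{Predictability}_{R_1}(P_2 \mid R_2, \vec{q}_{R_2})$

(2) $\mathrm{Predictability}_{R_2}(P_2 \mid R_2, \vec{q}_{R_2})$

(2') $\mathrm{Predictability}_{R_2}(P_2 \mid R_3, \vec{q}_{R_3})$

(3) $\mathrm{Predictability}_{R_3}(P_2 \mid R_3, \vec{q}_{R_3})$

···

(m/2) $\mathrm{Predictability}_{R_{m/2}}(P_2 \mid R_{m/2}, \vec{q}_{R_{m/2}})$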

I.e., we see how Predictability of P2 varies as we do the following two things: a) Give a new

random question in a random coordinate (this makes Predictability go up); b) Require prediction

on this new coordinate (this makes Predictability go down).
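The two effects a) and b) can be seen on a toy strategy. The sketch below assumes that Predictability is the collision probability of the answers on $R$ (the sum of squared answer-string probabilities), which is how Section 4 uses it; the precise definition is from Lecture 12 and is not restated here. The strategy `xor_chain`, the binary question set, and the uniform question distribution are all hypothetical choices for illustration.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

def predictability(strategy, n, R, fixed):
    """Collision probability of the answers on R over uniform unfixed questions.

    `fixed` maps a coordinate to its already-revealed question bit.
    """
    free = [i for i in range(n) if i not in fixed]
    counts = Counter()
    for bits in product((0, 1), repeat=len(free)):
        q = [0] * n
        for i, b in fixed.items():
            q[i] = b
        for i, b in zip(free, bits):
            q[i] = b
        answers = strategy(tuple(q))
        counts[tuple(answers[i] for i in R)] += 1
    total = sum(counts.values())
    return sum(Fraction(c, total) ** 2 for c in counts.values())

def xor_chain(q):
    """Toy non-serial strategy: answer i is q[i] XOR q[i+1], cyclically."""
    n = len(q)
    return tuple(q[i] ^ q[(i + 1) % n] for i in range(n))

p1 = predictability(xor_chain, 3, R=[0], fixed={0: 0})             # like (1)
p1_prime = predictability(xor_chain, 3, R=[0], fixed={0: 0, 1: 1})  # like (1')
p2 = predictability(xor_chain, 3, R=[0, 1], fixed={0: 0, 1: 1})     # like (2)
print(p1, p1_prime, p2)
```

Revealing the question in coordinate 1 raises the value from `p1` to `p1_prime`, and then also requiring prediction on coordinate 1 lowers it again to `p2`.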

Consider now the list of expectations of the above quantities, (1), (1'), etc., where the expectation is over the choice of $R_{m/2}$ and $\vec{q}_{R_{m/2}}$.

Lemma 3.1. There exists some “special block size” $1 \le r^* < m/2$ such that in going from the $(r^*)$ expectation to the $(r^* + 1)$ expectation, the expected Predictability goes down by at most $O(1)/m$.

Proof. The expected value of any of the quantities $(r)$ or $(r')$ is a number in the range $[0, 1]$, since Predictabilities are always in this range. In the $r \to r'$ steps, Predictability goes up. However, by Fact 1.4, it goes up by at most $1/(C' - r) \le 1/C$. This is very small, and so the total amount the expected Predictability goes up over all $m/2$ steps is at most $(m/2)(1/C) \ll 1$, using the fact that $C \gg m$. Since all numbers are in the range $[0, 1]$, and the total increase from beginning to end is at most 1, the total decrease, from the $r' \to r + 1$ steps, must be at most 2. Thus there must be at least one step $(r^*)' \to r^* + 1$ where the decrease in the Predictabilities’ expectation is at most $2/(m/2) = 4/m$. (And going from $r^*$ to $r^* + 1$ only makes the decrease smaller.)
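The averaging step in this proof can be illustrated numerically. The sketch below uses made-up expectations (the lists `plain` and `primed` are hypothetical, not derived from any real strategy) and checks that the smallest drop is at most (1 + total rise) divided by the number of steps, which is the same pigeonhole argument at toy scale.

```python
from fractions import Fraction

def best_step(plain, primed):
    """Index r minimising the drop primed[r] - plain[r + 1], and that drop."""
    drops = [primed[r] - plain[r + 1] for r in range(len(plain) - 1)]
    r = min(range(len(drops)), key=drops.__getitem__)
    return r, drops[r]

# Hypothetical expectations: plain[r] plays the role of quantity (r + 1),
# primed[r] of quantity ((r + 1)'), with every rise equal to 1/100.
plain = [Fraction(v, 100) for v in (90, 80, 75, 50, 45, 30, 20, 10)]
up = Fraction(1, 100)
primed = [v + up for v in plain]

r, drop = best_step(plain, primed)
steps = len(plain) - 1
# All values lie in [0, 1], so the drops sum to at most 1 + (total rise).
bound = (1 + steps * up) / steps
print(r, drop, bound)
```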

Thus we have identified a “special block size” $1 \le r^* < m/2$ with the following property:

Corollary 3.2. Let $R \subseteq [C']$ be a random set of cardinality $r^*$ and let $\vec{q}_R$ be random questions for $P_2$ on these coordinates. Further, let $i$ be a random coordinate from $[C'] \setminus R$ and let $q_i$ be a random question for this coordinate. Then

$$\mathbf{E}[\mathrm{Predictability}_R(P_2 \mid \vec{q}_R)] - \mathbf{E}[\mathrm{Predictability}_{R \cup \{i\}}(P_2 \mid \vec{q}_R, q_i)] \le O(1)/m.$$

Notation. In the remainder of the proof, we will often use the following notation:

• $(R, \vec{q}_R)$ will be a “random block”, meaning $R$ is a randomly chosen subset of $[C']$ of size $r^*$, and $\vec{q}_R$ is a random set of questions for those coordinates.

• $\vec{a}$ will denote a string of answers in $A^R$.

• Notation like “$\Pr[\vec{a} \mid (R, \vec{q}_R)]$” will mean “the probability, over the choice of questions to $P_2$ outside of $R$, that $P_2$ will output the string of answers $\vec{a}$ in the positions $R$, given that it gets the questions $\vec{q}_R$ in the coordinates $R$”.

• In particular, we will also use the notation $\Pr[\vec{a} \mid (R, \vec{q}_R), (i, q_i)]$ and $\Pr[\vec{a}, a \mid (R, \vec{q}_R), (i, q_i)]$, where $(i, q_i)$ is a coordinate and a question outside $R$, and $a$ is a single answer associated with the coordinate $i$.

4 The key theorem

In this section we use Corollary 3.2 to prove the key theorem, giving a dichotomy of the possibilities for $P_2$’s strategy.

Definition 4.1. Given $(R, \vec{q}_R)$, we say answer string $\vec{a} \in A^R$ is dead if $\Pr[\vec{a} \mid (R, \vec{q}_R)] \le \epsilon$. Otherwise it is alive. We further say that the whole block $(R, \vec{q}_R)$ is dead if all answer strings $\vec{a} \in A^R$ are dead for it.

Recall that $\epsilon$ is our target value for the repeated game. Define also $\eta = \epsilon^3$. We now make the following slightly tricky definition:

Definition 4.2. We say that the block $(R, \vec{q}_R)$ “$(1 - \eta)$-induces serial strategies” if

• $(R, \vec{q}_R)$ is alive.

• For every associated live answer $\vec{a} \in A^R$, there is a serial strategy

$$S_{\vec{a}} : ([C'] \setminus R) \times Q \to A$$

such that with probability $\ge 1 - \eta$ over the choice of an additional random question $(i, q_i)$, it holds that

$$\Pr[\vec{a}, S_{\vec{a}}(i, q_i) \mid (R, \vec{q}_R), (i, q_i)] \ge (1 - \eta)\Pr[\vec{a} \mid (R, \vec{q}_R), (i, q_i)].$$

In other words, for almost all additional questions, the answer $P_2$ gives in the associated coordinate is essentially forced.

The idea for the key theorem is this. From Corollary 3.2 we know that for a typical $r^*$-block $(R, \vec{q}_R)$, there is almost no loss in Predictability between predicting on $R$ and predicting on $R$ plus one more random coordinate. By the definition of Predictability, this can only happen in one of two ways: either Predictability was negligible to begin with (i.e., almost all answer strings are dead); or Predictability was non-negligible, but every answer string on $R$ forces a mostly serial way of answering most other questions.

Theorem 4.3 (Key Theorem). Given the strategy $P_2$, one of the following two cases holds:

• (Case 1) When $(R, \vec{q}_R)$ is a random $r^*$-block, with probability at least $1 - \epsilon$, $(R, \vec{q}_R)$ is dead.

• (Case 2) [At least an $\epsilon$ fraction of $(R, \vec{q}_R)$ are alive, but. . . ] If $(R, \vec{q}_R)$ is a random live block, then with probability at least $1 - \epsilon$, $(R, \vec{q}_R)$ $(1 - \eta)$-induces serial strategies.

Proof. The proof essentially just involves unpacking the definitions involved in Corollary 3.2 and the phrase “$(1 - \eta)$-induces serial strategies”. The proof is by contradiction. Assuming that neither Case 1 nor Case 2 holds, we get that if you pick the block $(R, \vec{q}_R)$ at random, with probability at least $\epsilon^2$ it is alive and fails to $(1 - \eta)$-induce serial strategies. This means that there is some particular live answer string $\vec{a}$ such that, conditioned on $P_2$ answering $\vec{a}$, at least an $\eta$ fraction of future $(i, q_i)$ pairs are not “$(1 - \eta)$-determined”. This is enough to show that in fact there is a non-negligible loss in expected Predictability in predicting on $r^*$-sized blocks versus $(r^* + 1)$-sized blocks, contradicting Corollary 3.2.

To do the details, we need to overcome a slight technicality: answer strings that are alive (i.e., have probability at least $\epsilon$) for $(R, \vec{q}_R)$ might become significantly less probable once the extra question $(i, q_i)$ is picked. However, by Fact 1.3, this is extremely unlikely. Leaving this detail for later, what we have is the following:

Proposition 4.4. Assuming the statement of the theorem does not hold, let $(R, \vec{q}_R)$ be a random $r^*$-block and let $(i, q_i)$ be an additional random question. Then with probability at least $\eta\epsilon^2/2$, there exists some answer string $\vec{a} \in A^R$ such that:

1. For all $a \in A$, $\Pr[\vec{a}, a \mid (R, \vec{q}_R), (i, q_i)] \le (1 - \eta)\Pr[\vec{a} \mid (R, \vec{q}_R), (i, q_i)]$.

2. $\Pr[\vec{a} \mid (R, \vec{q}_R), (i, q_i)] \ge \epsilon/2$.

We show that this proposition leads to a loss of $\eta^2\epsilon^4/8$ in expected Predictability. From Point 1 above it is easy to conclude that

$$\sum_{a \in A} \Pr[\vec{a}, a \mid (R, \vec{q}_R), (i, q_i)]^2 \le (1 - 2\eta + 2\eta^2)\Pr[\vec{a} \mid (R, \vec{q}_R), (i, q_i)]^2$$

because, subject to Point 1, the left-hand side is maximized if there is one answer contributing a $(1 - \eta)$-fraction of the probability, another contributing an $\eta$-fraction, and the rest contributing 0. Using $2\eta - 2\eta^2 \ge \eta$ and $\Pr[\vec{a} \mid (R, \vec{q}_R), (i, q_i)] \ge \epsilon/2$, we get

$$\Pr[\vec{a} \mid (R, \vec{q}_R), (i, q_i)]^2 - \sum_{a \in A} \Pr[\vec{a}, a \mid (R, \vec{q}_R), (i, q_i)]^2 \ge \eta(\epsilon/2)^2.$$
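The extremal claim behind the factor $1 - 2\eta + 2\eta^2 = (1 - \eta)^2 + \eta^2$ can be spot-checked numerically. The sketch below is only an illustration: it normalises $\Pr[\vec{a} \mid (R, \vec{q}_R), (i, q_i)]$ to 1, picks an arbitrary $\eta = 0.1$ and four answers, and samples random splits in which no answer receives more than a $(1 - \eta)$ share.

```python
import random

def max_sum_of_squares(eta):
    """Sum of squares of the extremal split (1 - eta, eta, 0, ..., 0)."""
    return (1 - eta) ** 2 + eta ** 2

def random_shares(k, eta, rng):
    """Random split of total mass 1 among k answers, every share <= 1 - eta."""
    while True:
        weights = [rng.random() for _ in range(k)]
        total = sum(weights)
        shares = [w / total for w in weights]
        if max(shares) <= 1 - eta:  # rejection step enforces Point 1
            return shares

rng = random.Random(0)
eta = 0.1
worst = max(
    sum(s * s for s in random_shares(4, eta, rng)) for _ in range(10_000)
)
print(worst, max_sum_of_squares(eta))
```

No sampled split exceeds the extremal value, in line with the convexity argument in the text (which needs $\eta \le 1/2$).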

From the definition of Predictability, we conclude that whenever the events of the Proposition occur, at least $\eta(\epsilon/2)^2$ is contributed to

$$\mathbf{E}[\mathrm{Predictability}_R(P_2 \mid \vec{q}_R)] - \mathbf{E}[\mathrm{Predictability}_{R \cup \{i\}}(P_2 \mid \vec{q}_R, q_i)].$$

Thus the total loss is at least $(\eta\epsilon^2/2) \cdot \eta(\epsilon/2)^2 = \epsilon^{10}/8 \gg O(1)/m$ (recall $\eta = \epsilon^3$ and $m = (1/\epsilon)^{11}$), contradicting Corollary 3.2.

To complete the proof we now only need to use Fact 1.3 to overcome the technicality mentioned earlier.

Fix any $(R, \vec{q}_R)$ and any live answer string $\vec{a} \in A^R$, so $\Pr[\vec{a} \mid (R, \vec{q}_R)] \ge \epsilon$. Since $1/\sqrt[3]{C} \ll \epsilon/2$, Fact 1.3 tells us that $\Pr[\vec{a} \mid (R, \vec{q}_R), (i, q_i)] \ge \epsilon/2$ except with probability at most $1/\sqrt[3]{C}$ over the choice of $(i, q_i)$. (We are using $C \le C' - r^*$ here.) Union-bounding over all live $\vec{a}$ (of which there are at most $1/\epsilon$), we get that for a random choice of $(R, \vec{q}_R)$ and $(i, q_i)$, except with probability $(1/\epsilon) \cdot (1/\sqrt[3]{C}) = \epsilon^{12}$, every answer string $\vec{a}$ that is alive for $(R, \vec{q}_R)$ still satisfies $\Pr[\vec{a} \mid (R, \vec{q}_R), (i, q_i)] \ge \epsilon/2$. Since $\epsilon^{12} \ll \eta\epsilon^2/2$, we are done.
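The exponent bookkeeping in this proof is easy to verify with exact arithmetic. The sketch below plugs in an illustrative $\epsilon = 1/100$ and, as an assumption, takes the $O(1)/m$ of Corollary 3.2 to be the $4/m$ obtained in the proof of Lemma 3.1. Under that reading the contradiction needs $\epsilon^{10}/8 > 4\epsilon^{11}$, i.e. $\epsilon < 1/32$.

```python
from fractions import Fraction

eps = Fraction(1, 100)          # illustrative value; any eps < 1/32 works below
C = (1 / eps) ** 39
m = (1 / eps) ** 11
eta = eps ** 3
inv_cbrt_C = eps ** 13          # 1 / C**(1/3), exact because C = eps**(-39)
assert inv_cbrt_C ** 3 * C == 1

# Loss forced by Proposition 4.4 versus the 4/m allowed by Lemma 3.1's proof.
loss = (eta * eps ** 2 / 2) * (eta * (eps / 2) ** 2)
allowed = 4 / m
assert loss == eps ** 10 / 8
assert loss > allowed

# Failure probability of the union bound versus the slack eta * eps^2 / 2.
union_failure = (1 / eps) * inv_cbrt_C
slack = eta * eps ** 2 / 2
assert union_failure == eps ** 12
assert union_failure < slack
```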

5 Bounding the success probability of the provers

We now turn to dealing with 2P1R games; specifically, Theorem 1.2. Fix now also Prover 1’s strategy, $P_1 : X^{C'} \to A^{C'}$.

In the theorem, the questions are picked so that a random $m$ of the $C'$ coordinates are “match rounds” and the rest are “confuse rounds”. We will equivalently imagine the round types and questions to be picked as follows:

Step 1: Pick $R \subseteq [C']$ of size $r^*$ at random. These will be match rounds. Pick also both provers’ questions for these rounds.

Step 2: Pick $m - r^*$ more random coordinates to be match rounds, and also pick the provers’ questions for these rounds. (Note there are at least $m/2$ such rounds, as $r^* \le m/2$.)

Step 3: All other coordinates are “confuse rounds”; pick the provers’ questions for these rounds randomly. Note that the two provers get independent questions in these rounds, since they are confuse rounds.
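The three steps above can be sketched as a sampler for the round types only (the questions themselves are omitted). It relies on the fact that the first $m$ entries of a uniformly random ordering form a uniformly random $m$-subset, so splitting them into a first block of size $r^*$ and the remaining $m - r^*$ does not change the distribution of match rounds. The function name and the small parameters are hypothetical.

```python
import random

def sample_round_types(num_rounds, m, r_star, rng):
    """Split range(num_rounds) into (Step 1 block R, Step 2 match rounds, confuse rounds)."""
    order = list(range(num_rounds))
    rng.shuffle(order)
    block_r = order[:r_star]        # Step 1: the block R of match rounds
    other_match = order[r_star:m]   # Step 2: the remaining m - r_star match rounds
    confuse = order[m:]             # Step 3: everything else is a confuse round
    return block_r, other_match, confuse

rng = random.Random(2005)
block_r, other_match, confuse = sample_round_types(
    num_rounds=60, m=10, r_star=3, rng=rng
)
print(len(block_r), len(other_match), len(confuse))
```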

The proof that the provers succeed with probability at most $\epsilon$ (actually, we will just prove $O(\epsilon)$) now divides into two cases, the cases from the Key Theorem (Theorem 4.3).

Case 1: In this case, after Step 1 is complete, the question block $(R, \vec{q}_R)$ for $P_2$ is dead with probability at least $1 - \epsilon$. We give up the $\epsilon$ here to the provers’ success probability. So assuming it’s dead, no answer string $\vec{a} \in A^R$ has more than $\epsilon$ probability of ultimately being given by $P_2$, over the choice of its remaining questions.

We would like to argue that this is still true after Step 2, when $P_2$ has gotten its remaining match round questions. This follows pretty easily from Fact 1.3. We can’t quite union bound over all answer strings (there may be too many), but we can do the following: Group all possible strings in $A^R$ into “clusters”, where the clusters have total probability between $\epsilon/2$ and $\epsilon$. There are at most $2/\epsilon$ such clusters. By Fact 1.3, for each additional random match round question, the probability a cluster gets more than $1/\sqrt[3]{C}$ more probable is at most $1/\sqrt[3]{C}$. Union-bounding over all remaining match round questions (at most $m$) and all clusters, we get that even after Step 2, except with probability at most $m \cdot (2/\epsilon) \cdot (1/\sqrt[3]{C}) = 2\epsilon$, all clusters (and therefore all answer strings in $A^R$) have probability at most $\epsilon + m/\sqrt[3]{C} \le 2\epsilon$.
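One way to form such clusters is a simple greedy pass, sketched below under the assumption that every string has probability at most $\epsilon$ (the block is dead). Strings heavier than $\epsilon/2$ stand alone, and lighter ones are accumulated until a cluster reaches $\epsilon/2$. A final leftover cluster may be lighter than $\epsilon/2$, a detail the text glosses over; it does not affect the upper bound of $\epsilon$ per cluster. The function name and the toy distribution are hypothetical.

```python
from fractions import Fraction

def cluster(probs, eps):
    """Group probabilities (each <= eps) into clusters of total mass <= eps.

    Every cluster except possibly the last has total mass >= eps / 2.
    """
    heavy = [[p] for p in probs if p > eps / 2]   # heavy enough on their own
    clusters, current, total = [], [], Fraction(0)
    for p in (p for p in probs if p <= eps / 2):
        current.append(p)
        total += p
        if total >= eps / 2:                      # total < eps/2 + eps/2 = eps
            clusters.append(current)
            current, total = [], Fraction(0)
    if current:
        clusters.append(current)                  # light leftover cluster
    return heavy + clusters

eps = Fraction(1, 10)
# Toy answer-string distribution for a dead block: masses sum to 1.
probs = [Fraction(1, 10), Fraction(3, 50)] + [Fraction(1, 100)] * 84
clusters = cluster(probs, eps)
print(len(clusters), max(sum(c) for c in clusters))
```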

Again, we give up the $2\epsilon$ chance of answer strings becoming significantly more likely to the provers’ success probability. So assuming this doesn’t happen, we are through Steps 1 and 2 and we know that for every answer string $\vec{a} \in A^R$ in $P_2$’s $R$ coordinates, the probability $P_2$ will answer with that string, over the choice of its remaining confuse round questions, is at most $2\epsilon$. But now in Step 3, the provers’ questions are chosen independently. So first imagine filling Prover 1’s questions. Then this prover has all of its questions, and so its complete answer string is decided. In particular, its answer string on the coordinates $R$ (call it $\vec{b}$) is decided. Since these coordinates are match coordinates, and since $G$ has the projection property, this means that there is a unique string $\vec{a}$ that Prover 2 must answer on these $R$ coordinates, namely $\pi(\vec{b})$, in order for the provers to win. But we have already argued the probability $P_2$ will answer this string, over the choice of its remaining confuse round questions, is at most $2\epsilon$.

We have therefore shown that the overall success probability of the provers in Case 1 is at most $\epsilon + 2\epsilon + 2\epsilon = O(\epsilon)$.

Case 2: In this case, let us first do Step 1, producing $(R, \vec{q}_R)$ (as well as Prover 1’s questions on $R$). If $(R, \vec{q}_R)$ is dead then the analysis from Case 1 shows that the provers succeed with probability at most $4\epsilon$. Giving up this probability, we can condition on $(R, \vec{q}_R)$ being alive. Since we are in Case 2 of Theorem 4.3, we have that with probability at least $1 - \epsilon$, $(R, \vec{q}_R)$ $(1 - \eta)$-induces serial strategies. We assume this is the case, giving up the remaining $\epsilon$ probability.

We now proceed to analyze the provers’ success probability as follows:

$$\Pr[\text{success}] = \sum_{\vec{a} \in A^R} \Pr[\text{success AND } P_2 \text{ eventually answers } \vec{a} \text{ on } R].$$

We break up the sum above into the cases when $\vec{a}$ is alive and when it is dead. For the dead $\vec{a}$’s, we can again use the analysis from Case 1 to show that in total they contribute at most $4\epsilon$ to the overall sum. Giving up this $4\epsilon$ probability, we proceed to analyze the contribution from live $\vec{a}$’s, each of which we know $(1 - \eta)$-induces a serial strategy $S_{\vec{a}}$. We will show that for each live $\vec{a}$ the probability the provers succeed AND that $P_2$ eventually answers $\vec{a}$ is at most $O(\epsilon^2)$. Thus we conclude that the overall success probability is at most $4\epsilon + \epsilon + 4\epsilon + (1/\epsilon) \cdot O(\epsilon^2) \le O(\epsilon)$, using the fact that there are at most $1/\epsilon$ live answers. This will complete the proof.

So let us fix a live answer $\vec{a}$ and its associated serial strategy $S := S_{\vec{a}}$. By definition of inducing serial strategies, we know that conditioning on $P_2$ answering with $\vec{a}$, the probability that it answers a random additional question $(i, q_i)$ in accordance with $S$ is at least $1 - 2\eta$. Hence the expected fraction of coordinates answered in accordance with $S$ is at least $1 - 2\eta$. Indeed, this is true just of the coordinates in the remaining match rounds. Using Markov’s inequality, we conclude:

Fact 5.1. Conditioned on $P_2$ ultimately answering $\vec{a}$ in the coordinates $R$, except with probability $\epsilon^2$, $P_2$ will answer at least a $1 - 2\eta/\epsilon^2 = 1 - 2\epsilon$ fraction of the coordinates in the Step 2 rounds according to the serial strategy $S$.

Thus

$$\Pr[\text{success AND } P_2 \text{ eventually answers } \vec{a}] \le \epsilon^2 + \Pr[\text{success AND } P_2 \text{ eventually answers } \vec{a} \text{ AND } P_2 \text{ answers} \ge 1 - 2\epsilon \text{ fraction of remaining match coordinates according to } S].$$

But as soon as we know $P_2$ plays a large fraction of coordinates according to a serial strategy, we can upper-bound its success probability. Since succeeding on all coordinates while using a serial strategy on many coordinates is even harder than succeeding on many coordinates while using a serial strategy on all coordinates, the probability on the right, above, is at most

$$\Pr[P_2 \text{ matches on} \ge 1 - 2\epsilon \text{ fraction of the Step 2 match coordinates} \mid P_2 \text{ answers all Step 2 coordinates according to } S]. \qquad (1)$$

But $S$ is a serial strategy, and even with all questions in the Step 1 match rounds fixed, $P_2$ can match in any given Step 2 match round with probability at most $s < 1$. Thus it is expected to match in at most an $s$ fraction of these coordinates, of which there are at least $m/2$. By a Chernoff bound, we easily get that (1) is at most $\exp(-\Omega(\epsilon(m/2))) \ll O(\epsilon^2)$. This completes the proof.
