An Abstract of the Thesis of

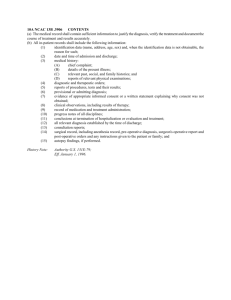
