Cramér-Rao Inequality

advertisement

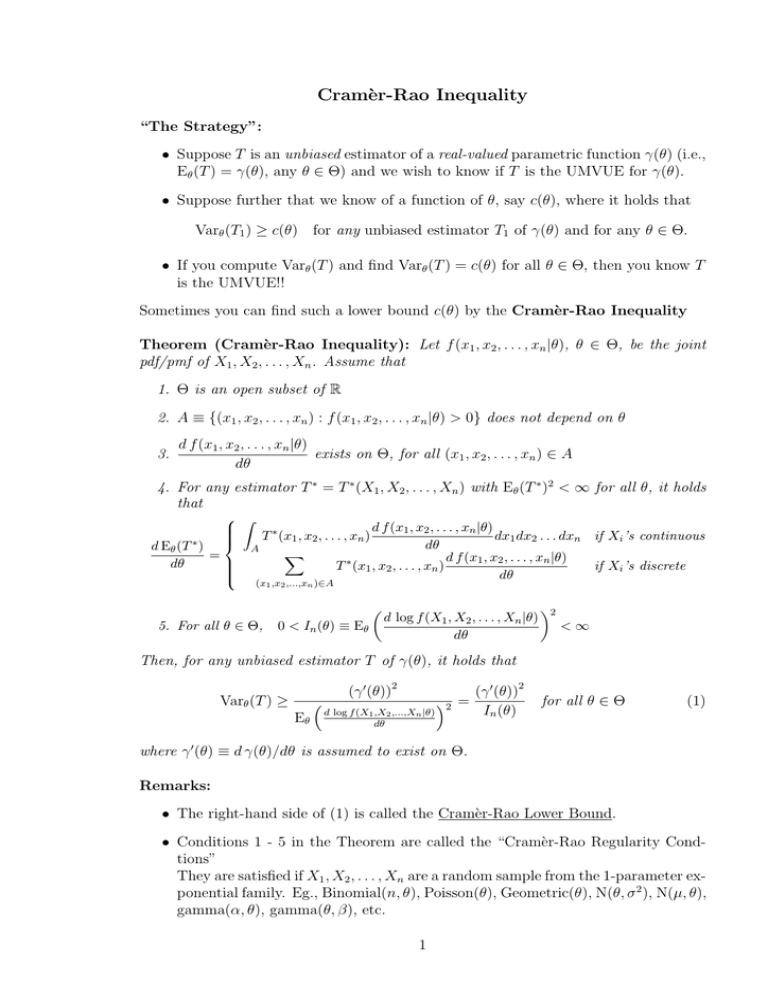

Cramèr-Rao Inequality

“The Strategy”:

• Suppose $T$ is an unbiased estimator of a real-valued parametric function $\gamma(\theta)$ (i.e., $E_\theta(T) = \gamma(\theta)$ for any $\theta \in \Theta$) and we wish to know if $T$ is the UMVUE for $\gamma(\theta)$.

• Suppose further that we know of a function of $\theta$, say $c(\theta)$, for which $\mathrm{Var}_\theta(T_1) \ge c(\theta)$ for any unbiased estimator $T_1$ of $\gamma(\theta)$ and for any $\theta \in \Theta$.

• If you compute $\mathrm{Var}_\theta(T)$ and find $\mathrm{Var}_\theta(T) = c(\theta)$ for all $\theta \in \Theta$, then you know $T$ is the UMVUE!

Sometimes you can find such a lower bound $c(\theta)$ by the Cramér-Rao Inequality.

Theorem (Cramér-Rao Inequality): Let $f(x_1, x_2, \ldots, x_n \mid \theta)$, $\theta \in \Theta$, be the joint pdf/pmf of $X_1, X_2, \ldots, X_n$. Assume that

1. $\Theta$ is an open subset of $\mathbb{R}$

2. $A \equiv \{(x_1, x_2, \ldots, x_n) : f(x_1, x_2, \ldots, x_n \mid \theta) > 0\}$ does not depend on $\theta$

3. $\dfrac{d\, f(x_1, x_2, \ldots, x_n \mid \theta)}{d\theta}$ exists on $\Theta$, for all $(x_1, x_2, \ldots, x_n) \in A$

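4. For any estimator $T^* = T^*(X_1, X_2, \ldots, X_n)$ with $E_\theta[(T^*)^2] < \infty$ for all $\theta$, it holds that

$$
\frac{d\, E_\theta(T^*)}{d\theta} =
\begin{cases}
\displaystyle \int_A T^*(x_1, x_2, \ldots, x_n)\, \frac{d\, f(x_1, x_2, \ldots, x_n \mid \theta)}{d\theta}\, dx_1\, dx_2 \cdots dx_n & \text{if the } X_i\text{'s are continuous} \\[2ex]
\displaystyle \sum_{(x_1, x_2, \ldots, x_n) \in A} T^*(x_1, x_2, \ldots, x_n)\, \frac{d\, f(x_1, x_2, \ldots, x_n \mid \theta)}{d\theta} & \text{if the } X_i\text{'s are discrete}
\end{cases}
$$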

5. For all $\theta \in \Theta$,

$$
0 < I_n(\theta) \equiv E_\theta\!\left[\left(\frac{d \log f(X_1, X_2, \ldots, X_n \mid \theta)}{d\theta}\right)^{2}\right] < \infty
$$

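Then, for any unbiased estimator $T$ of $\gamma(\theta)$, it holds that

$$
\mathrm{Var}_\theta(T) \ge \frac{(\gamma'(\theta))^2}{E_\theta\!\left[\left(\dfrac{d \log f(X_1, X_2, \ldots, X_n \mid \theta)}{d\theta}\right)^{2}\right]} = \frac{(\gamma'(\theta))^2}{I_n(\theta)} \quad \text{for all } \theta \in \Theta \tag{1}
$$

where $\gamma'(\theta) \equiv d\gamma(\theta)/d\theta$ is assumed to exist on $\Theta$.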
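As a small numerical illustration of inequality (1), the sketch below assumes an iid Bernoulli(θ) sample with γ(θ) = θ (so γ'(θ) = 1) and computes everything exactly by enumerating all 2^n outcomes. The model, the values n = 5 and θ = 0.3, and the helper names are choices made for this example only. It compares the bound with the variances of two unbiased estimators: the sample mean and the single observation X1.

```python
from itertools import product

def joint_pmf(xs, theta):
    """Joint pmf of iid Bernoulli(theta) observations xs (a tuple of 0/1)."""
    s = sum(xs)
    return theta ** s * (1 - theta) ** (len(xs) - s)

def score(xs, theta):
    """Derivative in theta of the log joint pmf."""
    s = sum(xs)
    return s / theta - (len(xs) - s) / (1 - theta)

def fisher_information(n, theta):
    """I_n(theta) = E[(score)^2], summed exactly over all 2^n outcomes."""
    return sum(joint_pmf(xs, theta) * score(xs, theta) ** 2
               for xs in product((0, 1), repeat=n))

def variance(estimator, n, theta):
    """Exact variance of estimator(xs) under the joint pmf."""
    outcomes = list(product((0, 1), repeat=n))
    mean = sum(joint_pmf(xs, theta) * estimator(xs) for xs in outcomes)
    return sum(joint_pmf(xs, theta) * (estimator(xs) - mean) ** 2
               for xs in outcomes)

n, theta = 5, 0.3                        # illustrative values (assumed)
crlb = 1.0 / fisher_information(n, theta)  # (gamma'(theta))^2 = 1
var_mean = variance(lambda xs: sum(xs) / len(xs), n, theta)
var_first = variance(lambda xs: xs[0], n, theta)
print(crlb, var_mean, var_first)
```

In this assumed model the sample mean attains the bound while X1 alone does not, which is the pattern the strategy at the top of these notes relies on.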

Remarks:

• The right-hand side of (1) is called the Cramér-Rao Lower Bound.

• Conditions 1-5 in the Theorem are called the “Cramér-Rao Regularity Conditions”. They are satisfied if $X_1, X_2, \ldots, X_n$ are a random sample from the 1-parameter exponential family, e.g., Binomial$(n, \theta)$, Poisson$(\theta)$, Geometric$(\theta)$, $N(\theta, \sigma^2)$, $N(\mu, \theta)$, gamma$(\alpha, \theta)$, gamma$(\theta, \beta)$, etc.


• $I_n(\theta)$ is called the Fisher information number (for a sample of size $n$).

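• If $X_1, X_2, \ldots, X_n$ are iid with common pdf/pmf $f(x \mid \theta)$, then

$$
I_n(\theta) = n I_1(\theta), \quad \text{where } I_1(\theta) = E_\theta\!\left[\left(\frac{d \log f(X_1 \mid \theta)}{d\theta}\right)^{2}\right] \tag{2}
$$

and $I_1(\theta)$ represents the Fisher information for one observation.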

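• If $\dfrac{d^2 f(x_1, x_2, \ldots, x_n \mid \theta)}{d\theta^2}$ exists on $\Theta$, for all $(x_1, x_2, \ldots, x_n) \in A$, then

$$
I_n(\theta) = E_\theta\!\left[\left(\frac{d \log f(X_1, X_2, \ldots, X_n \mid \theta)}{d\theta}\right)^{2}\right] = -E_\theta\!\left[\frac{d^2 \log f(X_1, X_2, \ldots, X_n \mid \theta)}{d\theta^2}\right].
$$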

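If, in addition, $X_1, X_2, \ldots, X_n$ are iid with common pdf/pmf $f(x \mid \theta)$, then we have

$$
I_n(\theta) = n I_1(\theta), \quad \text{where } I_1(\theta) = E_\theta\!\left[\left(\frac{d \log f(X_1 \mid \theta)}{d\theta}\right)^{2}\right] = -E_\theta\!\left[\frac{d^2 \log f(X_1 \mid \theta)}{d\theta^2}\right].
$$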
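To see the remarks above in a concrete case, the following sketch assumes a Poisson(θ) model, for which log f(x|θ) = −θ + x log θ − log x!, so the first derivative in θ is x/θ − 1 and the second is −x/θ². It evaluates both forms of I1(θ) by summing over the pmf, truncated at a hypothetical cutoff `max_x` (the neglected tail is negligible for moderate θ), and then forms the Cramér-Rao Lower Bound for γ(θ) = θ. The values θ = 2.5 and n = 10 are illustrative only.

```python
import math

def poisson_pmf_table(theta, max_x=200):
    """Return [P(X=0), ..., P(X=max_x)] for X ~ Poisson(theta), built recursively."""
    probs = [math.exp(-theta)]
    for x in range(max_x):
        probs.append(probs[-1] * theta / (x + 1))
    return probs

def fisher_info_squared_score(theta, max_x=200):
    """I_1(theta) as E[(d log f / d theta)^2], with score x/theta - 1."""
    return sum(p * (x / theta - 1.0) ** 2
               for x, p in enumerate(poisson_pmf_table(theta, max_x)))

def fisher_info_second_derivative(theta, max_x=200):
    """I_1(theta) as -E[d^2 log f / d theta^2], with second derivative -x/theta^2."""
    return -sum(p * (-x / theta ** 2)
                for x, p in enumerate(poisson_pmf_table(theta, max_x)))

theta, n = 2.5, 10                      # illustrative values (assumed)
i1_squared = fisher_info_squared_score(theta)
i1_second = fisher_info_second_derivative(theta)
crlb = 1.0 / (n * i1_squared)           # (gamma'(theta))^2 / (n * I_1(theta))
var_sample_mean = theta / n             # Var of the mean of n iid Poisson(theta)
print(i1_squared, i1_second, crlb, var_sample_mean)
```

Both forms agree with 1/θ up to truncation and rounding, and the bound θ/n coincides with the variance of the sample mean, so under this assumed model the sample mean is the UMVUE of θ.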

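Proof of Equation (2)/continuous case. Just using definitions, for any sample size $n$,

$$
\begin{aligned}
E_\theta\!\left(\frac{d \log f(X_1, X_2, \ldots, X_n \mid \theta)}{d\theta}\right)
&= \int_A \frac{d \log f(x_1, x_2, \ldots, x_n \mid \theta)}{d\theta}\, f(x_1, x_2, \ldots, x_n \mid \theta)\, dx_1 \cdots dx_n \\
&= \int_A \frac{d\, f(x_1, x_2, \ldots, x_n \mid \theta)}{d\theta}\, \frac{f(x_1, x_2, \ldots, x_n \mid \theta)}{f(x_1, x_2, \ldots, x_n \mid \theta)}\, dx_1 \cdots dx_n && \text{derivative of log} \\
&= \int_A 1 \cdot \frac{d\, f(x_1, x_2, \ldots, x_n \mid \theta)}{d\theta}\, dx_1 \cdots dx_n \\
&= \frac{d\, E_\theta(1)}{d\theta} && \text{by condition (4) of Theorem with } T^* = 1 \\
&= \frac{d}{d\theta} 1 = 0
\end{aligned}
$$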

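so that

$$
\begin{aligned}
I_n(\theta)
&= E_\theta\!\left[\left(\frac{d \log f(X_1, X_2, \ldots, X_n \mid \theta)}{d\theta}\right)^{2}\right] \\
&= \mathrm{Var}_\theta\!\left(\frac{d \log f(X_1, X_2, \ldots, X_n \mid \theta)}{d\theta}\right) && \text{the mean is zero} \\
&= \mathrm{Var}_\theta\!\left(\frac{d}{d\theta} \sum_{i=1}^{n} \log f(X_i \mid \theta)\right) && \text{since } f(x_1, x_2, \ldots, x_n \mid \theta) = \prod_{i=1}^{n} f(x_i \mid \theta) \\
&= \mathrm{Var}_\theta\!\left(\sum_{i=1}^{n} \frac{d \log f(X_i \mid \theta)}{d\theta}\right) \\
&= \sum_{i=1}^{n} \mathrm{Var}_\theta\!\left(\frac{d \log f(X_i \mid \theta)}{d\theta}\right) && \text{sum of independent variables} \\
&= n\, \mathrm{Var}_\theta\!\left(\frac{d \log f(X_1 \mid \theta)}{d\theta}\right) && \text{iid variables} \\
&= n\, E_\theta\!\left[\left(\frac{d \log f(X_1 \mid \theta)}{d\theta}\right)^{2}\right] = n I_1(\theta) && \text{the mean is zero for } n = 1 \text{ as well}
\end{aligned}
$$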
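The two facts used in this proof, that the score has mean zero and that the information adds up over iid observations, can be checked exactly on a small discrete model. The sketch below is only an illustration under assumptions: it takes each observation to be Binomial(M, θ) with a hypothetical M = 2, uses n = 3 and θ = 0.4, and enumerates the full joint support rather than integrating (so it mirrors the discrete analogue of the continuous-case argument).

```python
from itertools import product
from math import comb

M = 2  # hypothetical number of trials per observation

def pmf(x, theta):
    """Binomial(M, theta) pmf for a single observation."""
    return comb(M, x) * theta ** x * (1 - theta) ** (M - x)

def score_one(x, theta):
    """Derivative in theta of log pmf for a single observation."""
    return x / theta - (M - x) / (1 - theta)

def joint_score_moments(n, theta):
    """Return (E[score], E[score^2]) of the joint score of n iid observations."""
    mean = 0.0
    second = 0.0
    for xs in product(range(M + 1), repeat=n):
        prob = 1.0
        for x in xs:
            prob *= pmf(x, theta)          # joint pmf is the product of marginals
        s = sum(score_one(x, theta) for x in xs)  # joint score is the sum of scores
        mean += prob * s
        second += prob * s * s
    return mean, second

theta, n = 0.4, 3                           # illustrative values (assumed)
mean_n, info_n = joint_score_moments(n, theta)
mean_1, info_1 = joint_score_moments(1, theta)
print(mean_n, info_n, n * info_1)
```

Up to floating-point rounding, `mean_n` is zero and `info_n` matches `n * info_1`, in line with Equation (2).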
