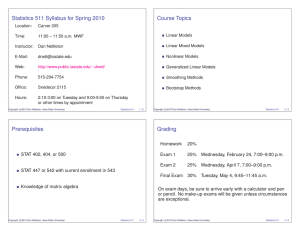


The Gauss-Markov Model

Copyright © 2012 Dan Nettleton (Iowa State University), Statistics 611

Recall that

$$
\begin{aligned}
\mathrm{Cov}(u, v) &= E\big((u - E(u))(v - E(v))\big) \\
&= E(uv) - E(u)E(v), \\
\mathrm{Var}(u) &= \mathrm{Cov}(u, u) \\
&= E\big((u - E(u))^2\big) \\
&= E(u^2) - (E(u))^2 .
\end{aligned}
$$

If $u$ ($m \times 1$) and $v$ ($n \times 1$) are random vectors, then

$$
\mathrm{Cov}(u, v) = [\mathrm{Cov}(u_i, v_j)]_{m \times n}
\quad\text{and}\quad
\mathrm{Var}(u) = [\mathrm{Cov}(u_i, u_j)]_{m \times m} .
$$

It follows that

$$
\begin{aligned}
\mathrm{Cov}(u, v) &= E\big((u - E(u))(v - E(v))'\big) \\
&= E(uv') - E(u)E(v'), \\
\mathrm{Var}(u) &= E\big((u - E(u))(u - E(u))'\big) \\
&= E(uu') - E(u)E(u').
\end{aligned}
$$

(Note that if $Z = [z_{ij}]$, then $E(Z) \equiv [E(z_{ij})]$; i.e., the expected value of a matrix is defined to be the matrix of the expected values of the elements in the original matrix.)

From these basic definitions, it is straightforward to show the following:

If $a$, $b$, $A$, $B$ are fixed and $u$, $v$ are random, then

$$
\mathrm{Cov}(a + Au, b + Bv) = A\,\mathrm{Cov}(u, v)\,B'.
$$

Some common special cases are

$$
\begin{aligned}
\mathrm{Cov}(Au, Bv) &= A\,\mathrm{Cov}(u, v)\,B', \\
\mathrm{Cov}(Au, Bu) &= A\,\mathrm{Cov}(u, u)\,B' = A\,\mathrm{Var}(u)\,B', \\
\mathrm{Var}(Au) &= \mathrm{Cov}(Au, Au) = A\,\mathrm{Cov}(u, u)\,A' = A\,\mathrm{Var}(u)\,A'.
\end{aligned}
$$
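The rule $\mathrm{Cov}(a + Au, b + Bv) = A\,\mathrm{Cov}(u, v)\,B'$ can be checked numerically. The sketch below is only an illustration: it uses NumPy, simulated draws, and a hypothetical helper `sample_cross_cov`. It relies on the fact that the sample cross-covariance obeys the same identity exactly, so no large-sample argument is needed.

```python
import numpy as np

rng = np.random.default_rng(0)

def sample_cross_cov(U, V):
    """Sample cross-covariance of U (m x N) and V (n x N); columns are draws."""
    Uc = U - U.mean(axis=1, keepdims=True)
    Vc = V - V.mean(axis=1, keepdims=True)
    return Uc @ Vc.T / (U.shape[1] - 1)

N = 500                                  # number of simulated draws (arbitrary)
U = rng.normal(size=(3, N))              # draws of u (3 x 1)
V = U[:2] + rng.normal(size=(2, N))      # draws of v (2 x 1), correlated with u
A = rng.normal(size=(4, 3))              # fixed matrices and shift vectors
a = rng.normal(size=(4, 1))
B = rng.normal(size=(5, 2))
b = rng.normal(size=(5, 1))

lhs = sample_cross_cov(a + A @ U, b + B @ V)   # Cov(a + Au, b + Bv)
rhs = A @ sample_cross_cov(U, V) @ B.T         # A Cov(u, v) B'
```

The shifts `a` and `b` drop out when the draws are centered, which is why they do not appear on the right-hand side.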

The Gauss-Markov Model

$$
y = X\beta + \varepsilon,
$$

where $E(\varepsilon) = 0$ and $\mathrm{Var}(\varepsilon) = \sigma^2 I$ for some unknown $\sigma^2$.

"Mean zero, constant variance, uncorrelated errors."

More succinct statement of Gauss-Markov Model:

$$
E(y) = X\beta, \qquad \mathrm{Var}(y) = \sigma^2 I
$$

or

$$
E(y) \in \mathcal{C}(X), \qquad \mathrm{Var}(y) = \sigma^2 I.
$$

Note that the Gauss-Markov Model (GMM) is a special case of the GLM that we have been studying.

Hence, all previous results for the GLM apply to the GMM.

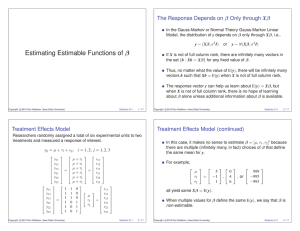

Suppose $c'\beta$ is an estimable function.

Find $\mathrm{Var}(c'\hat\beta)$, the variance of the LSE of $c'\beta$.

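Because $c'\beta$ is estimable, $c' = a'X$ for some vector $a$. Thus

$$
\begin{aligned}
\mathrm{Var}(c'\hat\beta) &= \mathrm{Var}(c'(X'X)^{-}X'y) \\
&= \mathrm{Var}(a'X(X'X)^{-}X'y) \\
&= \mathrm{Var}(a'P_X y) \\
&= a'P_X \mathrm{Var}(y) P_X' a \\
&= a'P_X (\sigma^2 I) P_X a \\
&= \sigma^2 a'P_X P_X a \\
&= \sigma^2 a'P_X a \\
&= \sigma^2 a'X(X'X)^{-}X'a \\
&= \sigma^2 c'(X'X)^{-}c.
\end{aligned}
$$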
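As a rough numerical check of $\mathrm{Var}(c'\hat\beta) = \sigma^2 c'(X'X)^{-}c$, the sketch below uses NumPy with a small rank-deficient design. The design matrix, the vector `a`, and the value `sigma2` are all made up for illustration. It also compares two different generalized inverses of $X'X$, since the result should not depend on which one is used when $c'\beta$ is estimable.

```python
import numpy as np

rng = np.random.default_rng(1)

# Rank-deficient one-way layout: columns are [1, group-1 indicator, group-2 indicator].
X = np.array([[1, 1, 0], [1, 1, 0], [1, 0, 1], [1, 0, 1], [1, 0, 1]], dtype=float)
a = rng.normal(size=5)
c = X.T @ a                      # c' = a'X, so c'beta is estimable
sigma2 = 2.0                     # hypothetical error variance

XtX = X.T @ X
G1 = np.linalg.pinv(XtX)         # Moore-Penrose inverse: one generalized inverse
G2 = np.zeros((3, 3))            # another: invert a full-rank block, pad with zeros
G2[1:, 1:] = np.linalg.inv(XtX[1:, 1:])

P = X @ G1 @ X.T                 # orthogonal projection onto C(X)
var_direct = sigma2 * a @ P @ P.T @ a     # a' P_X Var(y) P_X' a
var_g1 = sigma2 * c @ G1 @ c              # sigma^2 c'(X'X)^- c using G1
var_g2 = sigma2 * c @ G2 @ c              # same formula using G2
```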

Example: Two-treatment ANCOVA

$$
y_{ij} = \mu + \tau_i + \gamma x_{ij} + \varepsilon_{ij}, \qquad i = 1, 2;\ j = 1, \ldots, m,
$$

where

$$
E(\varepsilon_{ij}) = 0 \quad \forall\ i = 1, 2;\ j = 1, \ldots, m,
\qquad
\mathrm{Cov}(\varepsilon_{ij}, \varepsilon_{st}) =
\begin{cases}
0 & \text{if } (i, j) \ne (s, t), \\
\sigma^2 & \text{if } (i, j) = (s, t).
\end{cases}
$$

$x_{ij}$ is the known value of a covariate for treatment $i$ and observation $j$ ($i = 1, 2$; $j = 1, \ldots, m$).

Under what conditions is γ estimable?

γ is estimable ⇐⇒ · · ·?


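$\gamma$ is estimable iff $x_{11}, \ldots, x_{1m}$ are not all equal or $x_{21}, \ldots, x_{2m}$ are not all equal, i.e.,

$$
x = \begin{bmatrix} x_{11} \\ \vdots \\ x_{1m} \\ x_{21} \\ \vdots \\ x_{2m} \end{bmatrix}
\notin \mathcal{C}\left( \begin{bmatrix} 1_{m \times 1} & 0_{m \times 1} \\ 0_{m \times 1} & 1_{m \times 1} \end{bmatrix} \right).
$$

This makes sense intuitively, but to prove the claim formally...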

$$
X = \begin{bmatrix} 1 & 1 & 0 & x_1 \\ 1 & 0 & 1 & x_2 \end{bmatrix},
\quad\text{where}\quad
x_i = \begin{bmatrix} x_{i1} \\ \vdots \\ x_{im} \end{bmatrix}, \quad i = 1, 2,
\qquad
\beta = \begin{bmatrix} \mu \\ \tau_1 \\ \tau_2 \\ \gamma \end{bmatrix}.
$$

If $x = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} \in \mathcal{C}\left( \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \right)$, then

$$
X = \begin{bmatrix} 1 & 1 & 0 & a1 \\ 1 & 0 & 1 & b1 \end{bmatrix}
\quad\text{for some } a, b \in \mathbb{R}.
$$

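$$
z \in \mathcal{N}(X) \iff
\begin{cases}
z_1 + z_2 + a z_4 = 0 \\
z_1 + z_3 + b z_4 = 0
\end{cases}
\quad\Rightarrow\quad
\begin{bmatrix} 0 \\ -a \\ -b \\ 1 \end{bmatrix} \in \mathcal{N}(X).
$$

Now note that

$$
\begin{bmatrix} 0 & 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} 0 \\ -a \\ -b \\ 1 \end{bmatrix} = 1 \ne 0.
$$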

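Because $c'\beta$ is estimable only if $c \in \mathcal{C}(X')$, which requires $c'z = 0$ for every $z \in \mathcal{N}(X)$, it follows that

$$
\begin{bmatrix} 0 & 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} \mu \\ \tau_1 \\ \tau_2 \\ \gamma \end{bmatrix} = \gamma
$$

is not estimable.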

This proves that if $\gamma$ is estimable, then $x \notin \mathcal{C}\left( \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \right)$.

Conversely, if $x \notin \mathcal{C}\left( \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \right)$, then $\exists\ x_{ij} \ne x_{ij'}$ for some $i$ and $j \ne j'$.

Taking the difference of the corresponding rows of $X$ yields

$$
\begin{bmatrix} 0 & 0 & 0 & x_{ij} - x_{ij'} \end{bmatrix}.
$$

Dividing by $x_{ij} - x_{ij'}$ yields

$$
\begin{bmatrix} 0 & 0 & 0 & 1 \end{bmatrix}.
$$

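Thus,

$$
\begin{bmatrix} 0 \\ 0 \\ 0 \\ 1 \end{bmatrix} \in \mathcal{C}(X'),
$$

and

$$
\begin{bmatrix} 0 & 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} \mu \\ \tau_1 \\ \tau_2 \\ \gamma \end{bmatrix} = \gamma
\quad\text{is estimable.}
$$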
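The estimability condition for $\gamma$ can be illustrated numerically. The sketch below assumes NumPy and uses made-up covariate values; `ancova_design` and `is_estimable` are hypothetical helper names. It uses the fact that $c'\beta$ is estimable iff $c \in \mathcal{C}(X')$, which holds exactly when appending $c'$ as an extra row of $X$ leaves the rank unchanged.

```python
import numpy as np

def ancova_design(x1, x2):
    """Design matrix X = [1 1 0 x1; 1 0 1 x2] for the two-treatment ANCOVA."""
    m = len(x1)
    one, zero = np.ones(m), np.zeros(m)
    top = np.column_stack([one, one, zero, x1])
    bottom = np.column_stack([one, zero, one, x2])
    return np.vstack([top, bottom])

def is_estimable(c, X):
    """c'beta is estimable iff c is in C(X'), i.e. appending c' keeps the rank."""
    return np.linalg.matrix_rank(np.vstack([X, c])) == np.linalg.matrix_rank(X)

c_gamma = np.array([0.0, 0.0, 0.0, 1.0])      # picks out gamma

# Covariate constant within each treatment: x lies in C([1 0; 0 1]).
X_const = ancova_design(np.full(3, 2.0), np.full(3, 5.0))
# Covariate varies within treatment 1: x is not in C([1 0; 0 1]).
X_vary = ancova_design(np.array([1.0, 2.0, 4.0]), np.full(3, 5.0))

gamma_estimable_const = is_estimable(c_gamma, X_const)
gamma_estimable_vary = is_estimable(c_gamma, X_vary)
```

Rank comparisons like this depend on the numerical tolerance used by `matrix_rank`, so for badly scaled covariates the check should be treated as a heuristic.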

To find the LSE of $\gamma$, recall $X = \begin{bmatrix} 1 & 1 & 0 & x_1 \\ 1 & 0 & 1 & x_2 \end{bmatrix}$. Thus

$$
X'X = \begin{bmatrix}
2m & m & m & x_{\cdot\cdot} \\
m & m & 0 & x_{1\cdot} \\
m & 0 & m & x_{2\cdot} \\
x_{\cdot\cdot} & x_{1\cdot} & x_{2\cdot} & x'x
\end{bmatrix}.
$$

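When $x$ is not in $\mathcal{C}\left( \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \right)$, $\mathrm{rank}(X) = \mathrm{rank}(X'X) = 3$. Thus, a generalized inverse (GI) of $X'X$ is

$$
\begin{bmatrix} 0 & 0_{1 \times 3} \\ 0_{3 \times 1} & A \end{bmatrix},
\quad\text{where}\quad
A = \begin{bmatrix}
m & 0 & x_{1\cdot} \\
0 & m & x_{2\cdot} \\
x_{1\cdot} & x_{2\cdot} & x'x
\end{bmatrix}^{-1}.
$$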

Because

$$
\begin{bmatrix}
m & 0 & x_{1\cdot} \\
0 & m & x_{2\cdot} \\
x_{1\cdot} & x_{2\cdot} & x'x
\end{bmatrix}
$$

is not so easy to invert, let's consider a different strategy.

To find the LSE of $\gamma$ and its variance in an alternative way, consider

$$
W = \begin{bmatrix}
1 & 0 & x_1 - \bar{x}_{1\cdot} 1 \\
0 & 1 & x_2 - \bar{x}_{2\cdot} 1
\end{bmatrix}.
$$

This matrix arises by dropping the first column of $X$ and applying Gram-Schmidt (GS) orthogonalization to the remaining columns.

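$$
\underbrace{\begin{bmatrix} 1 & 1 & 0 & x_1 \\ 1 & 0 & 1 & x_2 \end{bmatrix}}_{X}
\underbrace{\begin{bmatrix}
0 & 0 & 0 \\
1 & 0 & -\bar{x}_{1\cdot} \\
0 & 1 & -\bar{x}_{2\cdot} \\
0 & 0 & 1
\end{bmatrix}}_{T}
=
\underbrace{\begin{bmatrix}
1 & 0 & x_1 - \bar{x}_{1\cdot} 1 \\
0 & 1 & x_2 - \bar{x}_{2\cdot} 1
\end{bmatrix}}_{W}.
$$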

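$$
\underbrace{\begin{bmatrix}
1 & 0 & x_1 - \bar{x}_{1\cdot} 1 \\
0 & 1 & x_2 - \bar{x}_{2\cdot} 1
\end{bmatrix}}_{W}
\underbrace{\begin{bmatrix}
1 & 1 & 0 & \bar{x}_{1\cdot} \\
1 & 0 & 1 & \bar{x}_{2\cdot} \\
0 & 0 & 0 & 1
\end{bmatrix}}_{S}
=
\underbrace{\begin{bmatrix} 1 & 1 & 0 & x_1 \\ 1 & 0 & 1 & x_2 \end{bmatrix}}_{X}.
$$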

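$$
\begin{aligned}
W'W &= \begin{bmatrix}
1 & 0 & x_1 - \bar{x}_{1\cdot} 1 \\
0 & 1 & x_2 - \bar{x}_{2\cdot} 1
\end{bmatrix}'
\begin{bmatrix}
1 & 0 & x_1 - \bar{x}_{1\cdot} 1 \\
0 & 1 & x_2 - \bar{x}_{2\cdot} 1
\end{bmatrix} \\
&= \begin{bmatrix}
m & 0 & 1'x_1 - \bar{x}_{1\cdot} 1'1 \\
0 & m & 1'x_2 - \bar{x}_{2\cdot} 1'1 \\
x_1'1 - \bar{x}_{1\cdot} 1'1 & x_2'1 - \bar{x}_{2\cdot} 1'1 & \sum_{i=1}^{2} \sum_{j=1}^{m} (x_{ij} - \bar{x}_{i\cdot})^2
\end{bmatrix}.
\end{aligned}
$$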

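Because $1'x_i - \bar{x}_{i\cdot} 1'1 = m\bar{x}_{i\cdot} - m\bar{x}_{i\cdot} = 0$ for $i = 1, 2$, this simplifies to

$$
W'W = \begin{bmatrix}
m & 0 & 0 \\
0 & m & 0 \\
0 & 0 & \sum_{i=1}^{2} \sum_{j=1}^{m} (x_{ij} - \bar{x}_{i\cdot})^2
\end{bmatrix},
\qquad
(W'W)^{-1} = \begin{bmatrix}
\frac{1}{m} & 0 & 0 \\
0 & \frac{1}{m} & 0 \\
0 & 0 & \frac{1}{\sum_{i=1}^{2} \sum_{j=1}^{m} (x_{ij} - \bar{x}_{i\cdot})^2}
\end{bmatrix}.
$$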
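The reparameterization can be sanity-checked on a small example. The sketch below assumes NumPy and uses made-up covariate values with $m = 3$; it builds $X$, $W$, $T$, and $S$ as defined in the slides and confirms that $XT = W$, $WS = X$, and that $W'W$ is diagonal.

```python
import numpy as np

x1 = np.array([1.0, 2.0, 4.0])     # hypothetical covariate values, treatment 1
x2 = np.array([3.0, 5.0, 5.5])     # hypothetical covariate values, treatment 2
m = len(x1)
one, zero = np.ones(m), np.zeros(m)

X = np.vstack([np.column_stack([one, one, zero, x1]),
               np.column_stack([one, zero, one, x2])])
# W: treatment indicators plus the within-treatment centered covariate.
W = np.vstack([np.column_stack([one, zero, x1 - x1.mean()]),
               np.column_stack([zero, one, x2 - x2.mean()])])
T = np.array([[0.0, 0.0, 0.0],
              [1.0, 0.0, -x1.mean()],
              [0.0, 1.0, -x2.mean()],
              [0.0, 0.0, 1.0]])
S = np.array([[1.0, 1.0, 0.0, x1.mean()],
              [1.0, 0.0, 1.0, x2.mean()],
              [0.0, 0.0, 0.0, 1.0]])

# Pooled within-treatment sum of squares of the covariate.
Sxx = np.sum((x1 - x1.mean()) ** 2) + np.sum((x2 - x2.mean()) ** 2)
WtW = W.T @ W
```

Because $W'W$ is diagonal, its inverse is obtained by inverting each diagonal entry, which is what makes this parameterization convenient.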

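$$
W'y = \begin{bmatrix}
1 & 0 & x_1 - \bar{x}_{1\cdot} 1 \\
0 & 1 & x_2 - \bar{x}_{2\cdot} 1
\end{bmatrix}'
\begin{bmatrix} y_1 \\ y_2 \end{bmatrix}
= \begin{bmatrix}
y_{1\cdot} \\
y_{2\cdot} \\
\sum_{i=1}^{2} \sum_{j=1}^{m} y_{ij} (x_{ij} - \bar{x}_{i\cdot})
\end{bmatrix}.
$$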

$$
\hat\alpha = (W'W)^{-} W'y
= \begin{bmatrix}
\bar{y}_{1\cdot} \\
\bar{y}_{2\cdot} \\
\dfrac{\sum_{i=1}^{2} \sum_{j=1}^{m} y_{ij} (x_{ij} - \bar{x}_{i\cdot})}{\sum_{i=1}^{2} \sum_{j=1}^{m} (x_{ij} - \bar{x}_{i\cdot})^2}
\end{bmatrix}.
$$

Recall that

$$
E(y) = X\beta = W\alpha = WS\beta = XT\alpha.
$$

$c'\beta$ estimable $\Rightarrow$ $c'T\alpha$ is estimable with LSE $c'T\hat\alpha$.

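Thus, the LSE of $\gamma$ is

$$
\begin{aligned}
c'T\hat\alpha
&= \begin{bmatrix} 0 & 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix}
0 & 0 & 0 \\
1 & 0 & -\bar{x}_{1\cdot} \\
0 & 1 & -\bar{x}_{2\cdot} \\
0 & 0 & 1
\end{bmatrix}
\begin{bmatrix}
\bar{y}_{1\cdot} \\
\bar{y}_{2\cdot} \\
\dfrac{\sum_{i=1}^{2} \sum_{j=1}^{m} y_{ij} (x_{ij} - \bar{x}_{i\cdot})}{\sum_{i=1}^{2} \sum_{j=1}^{m} (x_{ij} - \bar{x}_{i\cdot})^2}
\end{bmatrix} \\
&= \frac{\sum_{i=1}^{2} \sum_{j=1}^{m} y_{ij} (x_{ij} - \bar{x}_{i\cdot})}{\sum_{i=1}^{2} \sum_{j=1}^{m} (x_{ij} - \bar{x}_{i\cdot})^2}.
\end{aligned}
$$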

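Note that in this case

$$
c'\hat\beta = \begin{bmatrix} 0 & 0 & 1 \end{bmatrix} \hat\alpha = \hat\alpha_3 .
$$

$$
\begin{aligned}
\mathrm{Var}(c'\hat\beta)
&= \mathrm{Var}\left( \begin{bmatrix} 0 & 0 & 1 \end{bmatrix} \hat\alpha \right) \\
&= \sigma^2 \begin{bmatrix} 0 & 0 & 1 \end{bmatrix} (W'W)^{-1} \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix} \\
&= \frac{\sigma^2}{\sum_{i=1}^{2} \sum_{j=1}^{m} (x_{ij} - \bar{x}_{i\cdot})^2}.
\end{aligned}
$$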
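The closed-form LSE of $\gamma$ and its variance can be compared with a generic least-squares fit. The sketch below assumes NumPy, made-up covariates, and arbitrary simulated responses. It relies on the fact that any least-squares solution gives the same value for an estimable function, so the last component of the minimum-norm solution from `np.linalg.lstsq` should match the pooled within-treatment slope.

```python
import numpy as np

rng = np.random.default_rng(2)
x1 = np.array([1.0, 2.0, 4.0])     # hypothetical covariate values, treatment 1
x2 = np.array([3.0, 5.0, 5.5])     # hypothetical covariate values, treatment 2
m = len(x1)
one, zero = np.ones(m), np.zeros(m)
X = np.vstack([np.column_stack([one, one, zero, x1]),
               np.column_stack([one, zero, one, x2])])
y = rng.normal(size=2 * m)         # arbitrary responses, y = (y1', y2')'
y1, y2 = y[:m], y[m:]

# Closed form from the slides: pooled within-treatment slope.
num = np.sum(y1 * (x1 - x1.mean())) + np.sum(y2 * (x2 - x2.mean()))
den = np.sum((x1 - x1.mean()) ** 2) + np.sum((x2 - x2.mean()) ** 2)
gamma_closed = num / den

# Minimum-norm least-squares solution of the rank-deficient problem.
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
gamma_lstsq = beta_hat[3]

# Var(gamma_hat) / sigma^2 computed as c'(X'X)^- c, to compare with 1 / den.
c = np.array([0.0, 0.0, 0.0, 1.0])
var_factor = c @ np.linalg.pinv(X.T @ X) @ c
```

The other components of `beta_hat` are not estimable quantities and depend on which solution the solver returns, so only `beta_hat[3]` is compared.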

Theorem 4.1 (Gauss-Markov Theorem):

Suppose the GMM holds. If $c'\beta$ is estimable, then the LSE $c'\hat\beta$ is the best (minimum variance) linear unbiased estimator (BLUE) of $c'\beta$.

Proof of Theorem 4.1:

$c'\beta$ is estimable $\Rightarrow$ $c' = a'X$ for some vector $a$.

The LSE of $c'\beta$ is

$$
c'\hat\beta = a'X\hat\beta = a'X(X'X)^{-}X'y = a'P_X y.
$$

Now suppose $u + v'y$ is any other linear unbiased estimator of $c'\beta$.

Then

$$
\begin{aligned}
E(u + v'y) = c'\beta \quad \forall\ \beta \in \mathbb{R}^p
&\iff u + v'X\beta = c'\beta \quad \forall\ \beta \in \mathbb{R}^p \\
&\iff u = 0 \ \text{ and } \ v'X = c'.
\end{aligned}
$$

$$
\begin{aligned}
\mathrm{Var}(u + v'y) &= \mathrm{Var}(v'y) \\
&= \mathrm{Var}(v'y - c'\hat\beta + c'\hat\beta) \\
&= \mathrm{Var}(v'y - a'P_X y + c'\hat\beta) \\
&= \mathrm{Var}((v' - a'P_X)y + c'\hat\beta) \\
&= \mathrm{Var}(c'\hat\beta) + \mathrm{Var}((v' - a'P_X)y) + 2\,\mathrm{Cov}((v' - a'P_X)y,\ c'\hat\beta).
\end{aligned}
$$

Now

$$
\begin{aligned}
\mathrm{Cov}((v' - a'P_X)y,\ c'\hat\beta) &= \mathrm{Cov}((v' - a'P_X)y,\ a'P_X y) \\
&= (v' - a'P_X)\,\mathrm{Var}(y)\,P_X a \\
&= \sigma^2 (v' - a'P_X) P_X a \\
&= \sigma^2 (v' - a'P_X) X(X'X)^{-}X'a \\
&= \sigma^2 (v'X - a'P_X X)(X'X)^{-}X'a \\
&= \sigma^2 (v'X - a'X)(X'X)^{-}X'a \\
&= 0 \quad \text{because } v'X = c' = a'X.
\end{aligned}
$$

Therefore, we have

$$
\mathrm{Var}(u + v'y) = \mathrm{Var}(c'\hat\beta) + \mathrm{Var}((v' - a'P_X)y).
$$

It follows that

$$
\mathrm{Var}(c'\hat\beta) \le \mathrm{Var}(u + v'y)
$$

with equality iff

$$
\mathrm{Var}((v' - a'P_X)y) = 0;
$$

i.e., iff

$$
\sigma^2 (v' - a'P_X)(v - P_X a) = \sigma^2 (v - P_X a)'(v - P_X a) = \sigma^2 \|v - P_X a\|^2 = 0;
$$

i.e., iff

$$
v = P_X a;
$$

i.e., iff $u + v'y$ is $a'P_X y = c'\hat\beta$, the LSE of $c'\beta$.
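The inequality in the proof can be illustrated numerically. The sketch below assumes NumPy and a made-up rank-deficient design. It uses the observation that every $v$ with $v'X = c'$ can be written as $P_X a + (I - P_X)w$ for some $w$, because $v - P_X a$ is then orthogonal to $\mathcal{C}(X)$, and that $\mathrm{Var}(v'y)/\sigma^2 = \|v\|^2$ under the GMM.

```python
import numpy as np

rng = np.random.default_rng(3)
X = np.array([[1, 1, 0], [1, 1, 0], [1, 0, 1], [1, 0, 1], [1, 0, 1]], dtype=float)
n = X.shape[0]
P = X @ np.linalg.pinv(X.T @ X) @ X.T    # P_X, projection onto C(X)
a = rng.normal(size=n)
c = X.T @ a                               # estimable: c' = a'X
v_blue = P @ a                            # coefficients of the LSE a'P_X y

variances = []
for _ in range(200):
    w = rng.normal(size=n)
    v = v_blue + (np.eye(n) - P) @ w      # another vector with v'X = c'
    assert np.allclose(v @ X, c)          # v'y is still unbiased for c'beta
    variances.append(v @ v)               # Var(v'y) / sigma^2 = ||v||^2

blue_var = v_blue @ v_blue                # Var(c'beta_hat) / sigma^2
min_competitor_var = min(variances)
```

Each competitor's variance exceeds `blue_var` by $\|(I - P_X)w\|^2$, matching the decomposition $\mathrm{Var}(u + v'y) = \mathrm{Var}(c'\hat\beta) + \mathrm{Var}((v' - a'P_X)y)$.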

Result 4.1:

The BLUE of an estimable $c'\beta$ is uncorrelated with all linear unbiased estimators of zero.

Proof of Result 4.1:

Suppose $u + v'y$ is a linear unbiased estimator of zero. Then

$$
\begin{aligned}
E(u + v'y) = 0 \quad \forall\ \beta \in \mathbb{R}^p
&\iff u + v'X\beta = 0 \quad \forall\ \beta \in \mathbb{R}^p \\
&\iff u = 0 \ \text{ and } \ v'X = 0' \\
&\iff u = 0 \ \text{ and } \ X'v = 0.
\end{aligned}
$$

Thus,

$$
\begin{aligned}
\mathrm{Cov}(c'\hat\beta,\ u + v'y) &= \mathrm{Cov}(c'(X'X)^{-}X'y,\ v'y) \\
&= c'(X'X)^{-}X'\,\mathrm{Var}(y)\,v \\
&= c'(X'X)^{-}X'(\sigma^2 I)v \\
&= \sigma^2 c'(X'X)^{-}X'v \\
&= \sigma^2 c'(X'X)^{-}0 \\
&= 0.
\end{aligned}
$$
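A brief numerical illustration of Result 4.1, assuming NumPy and a made-up design: any $v$ of the form $(I - P_X)w$ satisfies $X'v = 0$, so $v'y$ is a linear unbiased estimator of zero, and its covariance with the BLUE (divided by $\sigma^2$) is the inner product of the two coefficient vectors.

```python
import numpy as np

rng = np.random.default_rng(4)
X = np.array([[1, 1, 0], [1, 1, 0], [1, 0, 1], [1, 0, 1], [1, 0, 1]], dtype=float)
n = X.shape[0]
G = np.linalg.pinv(X.T @ X)               # a generalized inverse of X'X
P = X @ G @ X.T                           # P_X

c = X.T @ rng.normal(size=n)              # estimable c (c is in C(X'))
v = (np.eye(n) - P) @ rng.normal(size=n)  # X'v = 0: v'y is unbiased for zero

blue_coef = X @ G @ c                     # c'beta_hat = blue_coef' y
cov_over_sigma2 = blue_coef @ v           # Cov(c'beta_hat, v'y) / sigma^2
```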

Suppose $c_1'\beta, \ldots, c_q'\beta$ are $q$ estimable functions such that $c_1, \ldots, c_q$ are linearly independent (LI).

Let $C = \begin{bmatrix} c_1' \\ \vdots \\ c_q' \end{bmatrix}$. Note that $\mathrm{rank}(C_{q \times p}) = q$.

$$
C\beta = \begin{bmatrix} c_1'\beta \\ \vdots \\ c_q'\beta \end{bmatrix}
$$

is a vector of estimable functions.

The vector of BLUEs is the vector of LSEs

$$
\begin{bmatrix} c_1'\hat\beta \\ \vdots \\ c_q'\hat\beta \end{bmatrix} = C\hat\beta.
$$

Because $c_1'\beta, \ldots, c_q'\beta$ are estimable, $\exists\ a_i$ such that $X'a_i = c_i$ $\forall\ i = 1, \ldots, q$.

If we let $A = \begin{bmatrix} a_1' \\ \vdots \\ a_q' \end{bmatrix}$, then $AX = C$.

Find $\mathrm{Var}(C\hat\beta)$.

$$
\begin{aligned}
\mathrm{Var}(C\hat\beta) &= \mathrm{Var}(AX\hat\beta) \\
&= \mathrm{Var}(AX(X'X)^{-}X'y) \\
&= \mathrm{Var}(AP_X y) \\
&= \sigma^2 AP_X (AP_X)' \\
&= \sigma^2 AP_X A' \\
&= \sigma^2 AX(X'X)^{-}X'A' \\
&= \sigma^2 C(X'X)^{-}C'.
\end{aligned}
$$

Find rank(Var(Cβ̂)).


$$
\begin{aligned}
q = \mathrm{rank}(C) &= \mathrm{rank}(AX) \\
&= \mathrm{rank}(AP_X X) \le \mathrm{rank}(AP_X) \\
&= \mathrm{rank}(P_X'A') = \mathrm{rank}(AP_X P_X'A') \\
&= \mathrm{rank}(AP_X P_X A') = \mathrm{rank}(AP_X A') \\
&= \mathrm{rank}(AX(X'X)^{-}X'A') \\
&= \mathrm{rank}(C(X'X)^{-}C') \\
&\le \mathrm{rank}(C) = q.
\end{aligned}
$$

Therefore, $\mathrm{rank}(\sigma^2 C(X'X)^{-}C') = q$.

Note that

$$
\mathrm{Var}(C\hat\beta) = \sigma^2 C(X'X)^{-}C'
$$

is a $q \times q$ matrix of rank $q$ and is thus nonsingular.
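The rank claim can be illustrated with a small example. The sketch below assumes NumPy, a made-up one-way layout, and two linearly independent estimable functions chosen for illustration ($\mu + \tau_1$ and $\tau_1 - \tau_2$). It checks that $C(X'X)^{-}C'$ has rank $q$ and strictly positive eigenvalues.

```python
import numpy as np

X = np.array([[1, 1, 0], [1, 1, 0], [1, 0, 1], [1, 0, 1], [1, 0, 1]], dtype=float)
C = np.array([[1.0, 1.0, 0.0],     # mu + tau_1
              [0.0, 1.0, -1.0]])   # tau_1 - tau_2
q = C.shape[0]

# Rows of C are estimable iff stacking them under X leaves the rank unchanged.
rank_X = np.linalg.matrix_rank(X)
rows_estimable = np.linalg.matrix_rank(np.vstack([X, C])) == rank_X

V = C @ np.linalg.pinv(X.T @ X) @ C.T    # Var(C beta_hat) / sigma^2
rank_V = np.linalg.matrix_rank(V)
eigenvalues = np.linalg.eigvalsh(V)
```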

We know that each component of $C\hat\beta$ is the BLUE of the corresponding component of $C\beta$; i.e., $c_i'\hat\beta$ is the BLUE of $c_i'\beta$ $\forall\ i = 1, \ldots, q$.

Likewise, we can show that $C\hat\beta$ is the BLUE of $C\beta$ in the sense that

$$
\mathrm{Var}(s + Ty) - \mathrm{Var}(C\hat\beta)
$$

is nonnegative definite for all unbiased linear estimators $s + Ty$ of $C\beta$.

Nonnegative Definite and Positive Definite Matrices

A symmetric $n \times n$ matrix $A$ is nonnegative definite (NND) if and only if

$$
x'Ax \ge 0 \quad \forall\ x \in \mathbb{R}^n .
$$

A symmetric $n \times n$ matrix $A$ is positive definite (PD) if and only if

$$
x'Ax > 0 \quad \forall\ x \in \mathbb{R}^n \setminus \{0\}.
$$

Proof that Var(s + Ty) − Var(Cβ̂) is NND:

$s + Ty$ is a linear unbiased estimator of $C\beta$

$$
\begin{aligned}
&\iff E(s + Ty) = C\beta \quad \forall\ \beta \in \mathbb{R}^p \\
&\iff s + TX\beta = C\beta \quad \forall\ \beta \in \mathbb{R}^p \\
&\iff s = 0 \ \text{ and } \ TX = C.
\end{aligned}
$$

Thus, we may write any linear unbiased estimator of $C\beta$ as $Ty$, where $TX = C$.

Now $\forall\ w \in \mathbb{R}^q$ consider

$$
\begin{aligned}
w'[\mathrm{Var}(Ty) - \mathrm{Var}(C\hat\beta)]w &= w'\mathrm{Var}(Ty)w - w'\mathrm{Var}(C\hat\beta)w \\
&= \mathrm{Var}(w'Ty) - \mathrm{Var}(w'C\hat\beta) \\
&\ge 0 \quad \text{by the Gauss-Markov Theorem because . . .}
\end{aligned}
$$

(i) $w'C\beta$ is an estimable function:

$$
w'C = w'AX \Rightarrow X'A'w = C'w \Rightarrow C'w \in \mathcal{C}(X').
$$

(ii) $w'Ty$ is a linear unbiased estimator of $w'C\beta$:

$$
E(w'Ty) = w'TX\beta = w'C\beta \quad \forall\ \beta \in \mathbb{R}^p .
$$

(iii) $w'C\hat\beta$ is the LSE of $w'C\beta$ and is thus the BLUE of $w'C\beta$.

We have shown

$$
w'[\mathrm{Var}(Ty) - \mathrm{Var}(C\hat\beta)]w \ge 0 \quad \forall\ w \in \mathbb{R}^q .
$$

Thus,

$$
\mathrm{Var}(Ty) - \mathrm{Var}(C\hat\beta)
$$

is nonnegative definite (NND).
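The NND property can be illustrated numerically. The sketch below assumes NumPy, a made-up design, and randomly generated matrices `A` and `M`. It constructs an unbiased competitor as $T = AP_X + M(I - P_X)$, so that $TX = AX = C$, and inspects the eigenvalues of $[\mathrm{Var}(Ty) - \mathrm{Var}(C\hat\beta)]/\sigma^2 = TT' - C(X'X)^{-}C'$.

```python
import numpy as np

rng = np.random.default_rng(5)
X = np.array([[1, 1, 0], [1, 1, 0], [1, 0, 1], [1, 0, 1], [1, 0, 1]], dtype=float)
n = X.shape[0]
P = X @ np.linalg.pinv(X.T @ X) @ X.T     # P_X

A = rng.normal(size=(2, n))
C = A @ X                                  # rows of C are estimable by construction
M = rng.normal(size=(2, n))
T = A @ P + M @ (np.eye(n) - P)            # TX = A P_X X + M (I - P_X) X = AX = C

var_T = T @ T.T                                 # Var(Ty) / sigma^2
var_blue = C @ np.linalg.pinv(X.T @ X) @ C.T    # Var(C beta_hat) / sigma^2
eigs = np.linalg.eigvalsh(var_T - var_blue)     # should all be >= 0 up to rounding
```

In this construction the difference reduces to $M(I - P_X)M'$, which is a Gram matrix and therefore NND.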

Is it true that

$$
\mathrm{Var}(Ty) - \mathrm{Var}(C\hat\beta)
$$

is positive definite if $Ty$ is a linear unbiased estimator of $C\beta$ that is not the BLUE of $C\beta$?

No. $Ty$ could have some components equal to those of $C\hat\beta$ and others not.

Then

$$
\exists\ w \ne 0 \ \text{ such that } \ w'[\mathrm{Var}(Ty) - \mathrm{Var}(C\hat\beta)]w = 0.
$$

For example, suppose the first row of $T$ is $c_1'(X'X)^{-}X'$ but the second row is not $c_2'(X'X)^{-}X'$.

Then $Ty \ne C\hat\beta$ but

$$
w'[\mathrm{Var}(Ty) - \mathrm{Var}(C\hat\beta)]w = \mathrm{Var}(w'Ty) - \mathrm{Var}(w'C\hat\beta) = 0
$$

for $w' = [1, 0, \ldots, 0]$.
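The counterexample can be made concrete. The sketch below assumes NumPy, a made-up design, and an arbitrary perturbation vector. The first row of $T$ is left as the BLUE row $c_1'(X'X)^{-}X'$, while the second row is perturbed by a nonzero $v$ with $X'v = 0$, so $Ty$ stays unbiased but is no longer $C\hat\beta$.

```python
import numpy as np

X = np.array([[1, 1, 0], [1, 1, 0], [1, 0, 1], [1, 0, 1], [1, 0, 1]], dtype=float)
n = X.shape[0]
G = np.linalg.pinv(X.T @ X)
P = X @ G @ X.T                           # P_X
C = np.array([[1.0, 1.0, 0.0],            # mu + tau_1
              [0.0, 1.0, -1.0]])          # tau_1 - tau_2

T_blue = C @ G @ X.T                      # rows are c_i'(X'X)^- X'
v = (np.eye(n) - P) @ np.arange(1.0, n + 1)   # nonzero vector with X'v = 0
T = T_blue.copy()
T[1] += v                                 # second row is no longer the BLUE row

D = T @ T.T - C @ G @ C.T                 # [Var(Ty) - Var(C beta_hat)] / sigma^2
w = np.array([1.0, 0.0])
quad_form = w @ D @ w                     # zero although w != 0
eigs = np.linalg.eigvalsh(D)
```

Here `D` is NND with a zero eigenvalue, so it is not positive definite even though `T` differs from the BLUE coefficient matrix.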