

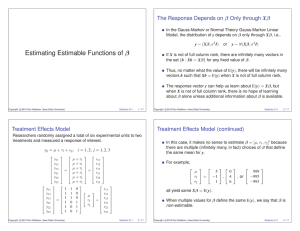

Estimable Functions and Their Least Squares Estimators in Reparameterized Models

Copyright © 2012 Dan Nettleton (Iowa State University), Statistics 611

Once again consider the linear models $y = W\alpha + \varepsilon$ and $y = X\beta + \varepsilon$, where $E(\varepsilon) = 0$ and $\mathcal{C}(W) = \mathcal{C}(X)$. Suppose $W = XT$ and $X = WS$.

We have

$$E(y) = X\beta = W\alpha = WS\beta = XT\alpha.$$

Note the correspondence between $\beta$ and $T\alpha$, and between $\alpha$ and $S\beta$.

Result 3.4: Suppose $c'\beta$ is estimable and $W'W\hat{\alpha} = W'y$. Then $c'T\hat{\alpha}$ is the least squares estimator of $c'\beta$.

Proof of Result 3.4: $W'W\hat{\alpha} = W'y \Rightarrow X'XT\hat{\alpha} = X'y$ by Result 2.9. Because $T\hat{\alpha}$ solves the normal equations (NE) $X'Xb = X'y$, $c'T\hat{\alpha}$ is the LS estimator of $c'\beta$ by definition.

Example: Consider again the case where

$$X = \begin{bmatrix} \mathbf{1}_{n_1} & \mathbf{1}_{n_1} & \mathbf{0}_{n_1} \\ \mathbf{1}_{n_2} & \mathbf{0}_{n_2} & \mathbf{1}_{n_2} \end{bmatrix}, \qquad W = \begin{bmatrix} \mathbf{1}_{n_1} & \mathbf{0}_{n_1} \\ \mathbf{1}_{n_2} & \mathbf{1}_{n_2} \end{bmatrix},$$

$$T = \begin{bmatrix} 1 & 0 \\ 0 & 0 \\ 0 & 1 \end{bmatrix}, \qquad S = \begin{bmatrix} 1 & 1 & 0 \\ 0 & -1 & 1 \end{bmatrix}.$$

Recall that the unique solution to the NE $W'W\hat{\alpha} = W'y$ is

$$\hat{\alpha} = \begin{bmatrix} \bar{y}_{1\cdot} \\ \bar{y}_{2\cdot} - \bar{y}_{1\cdot} \end{bmatrix}.$$

Suppose we denote the components of $\beta$ by $\mu, \tau_1, \tau_2$ so that

$$\beta = \begin{bmatrix} \mu \\ \tau_1 \\ \tau_2 \end{bmatrix} \qquad \text{and} \qquad E(y) = \begin{bmatrix} (\mu + \tau_1)\mathbf{1}_{n_1} \\ (\mu + \tau_2)\mathbf{1}_{n_2} \end{bmatrix}.$$

$\tau_1 - \tau_2 = (\mu + \tau_1) - (\mu + \tau_2)$ is estimable because it is a linear combination (LC) of elements of $E(y)$.

$\tau_1 - \tau_2 = c'\beta$, where $c' = [0, 1, -1]$.

Result 3.4 implies that

$$c'T\hat{\alpha} = [0, 1, -1] \begin{bmatrix} 1 & 0 \\ 0 & 0 \\ 0 & 1 \end{bmatrix} \begin{bmatrix} \bar{y}_{1\cdot} \\ \bar{y}_{2\cdot} - \bar{y}_{1\cdot} \end{bmatrix} = [0, -1] \begin{bmatrix} \bar{y}_{1\cdot} \\ \bar{y}_{2\cdot} - \bar{y}_{1\cdot} \end{bmatrix} = \bar{y}_{1\cdot} - \bar{y}_{2\cdot}$$

is the LSE of $c'\beta = \tau_1 - \tau_2$.
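The example for Result 3.4 can be checked numerically. The following is a minimal sketch, assuming NumPy and simulated data; the group sizes `n1` and `n2`, the random seed, and the variable names are illustrative choices that do not appear in the slides. It builds $X$, $W$, $T$, and $S$ from the example, solves the normal equations for $\hat{\alpha}$, and forms $c'T\hat{\alpha}$.

```python
import numpy as np

rng = np.random.default_rng(0)   # arbitrary seed (assumption)
n1, n2 = 4, 6                    # hypothetical group sizes

# Design matrices and transformation matrices from the example.
X = np.block([
    [np.ones((n1, 1)), np.ones((n1, 1)), np.zeros((n1, 1))],
    [np.ones((n2, 1)), np.zeros((n2, 1)), np.ones((n2, 1))],
])
W = np.block([
    [np.ones((n1, 1)), np.zeros((n1, 1))],
    [np.ones((n2, 1)), np.ones((n2, 1))],
])
T = np.array([[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]])
S = np.array([[1.0, 1.0, 0.0], [0.0, -1.0, 1.0]])

# The reparameterization relations W = XT and X = WS.
assert np.allclose(W, X @ T)
assert np.allclose(X, W @ S)

y = rng.normal(size=n1 + n2)     # simulated response (assumption)
ybar1, ybar2 = y[:n1].mean(), y[n1:].mean()

# W has full column rank, so W'W a = W'y has a unique solution.
alpha_hat = np.linalg.solve(W.T @ W, W.T @ y)

# b = T alpha_hat should solve the normal equations X'X b = X'y.
b = T @ alpha_hat

# LSE of tau_1 - tau_2 = c' beta with c' = [0, 1, -1].
c = np.array([0.0, 1.0, -1.0])
lse = c @ b

print("alpha_hat:", alpha_hat)
print("c'T alpha_hat:", lse, " ybar1 - ybar2:", ybar1 - ybar2)
```

Comparing the two printed quantities on the last line, and `alpha_hat` against the group means, gives a quick check of the closed-form expressions in the example.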
Result 3.5: If $d'\alpha$ is estimable in the model $y = W\alpha + \varepsilon$, then $d'S\beta$ is estimable in the model $y = X\beta + \varepsilon$, and its LSE is $d'\hat{\alpha} = d'S\hat{\beta}$, where $\hat{\alpha}$ and $\hat{\beta}$ are solutions to $W'Wa = W'y$ and $X'Xb = X'y$, respectively.

Proof of Result 3.5: By Result 3.1, $d'\alpha$ is estimable $\iff \exists\, a$ such that $d' = a'W$. Multiplying on the right by $S$ gives: $\exists\, a$ such that $d'S = a'WS = a'X$. Therefore, by Result 3.1, $d'S\beta$ is estimable in the model $y = X\beta + \varepsilon$.

By definition, we know $d'\hat{\alpha}$ is the LS estimate of $d'\alpha$ and $d'S\hat{\beta}$ is the LS estimate of $d'S\beta$.

To see that $d'\hat{\alpha} = d'S\hat{\beta}$, note that Result 3.4 implies

$$\begin{aligned}
d'S\hat{\beta} &= d'ST\hat{\alpha} \\
&= a'WST\hat{\alpha} && (d' = a'W) \\
&= a'XT\hat{\alpha} && (X = WS) \\
&= a'W\hat{\alpha} && (W = XT) \\
&= d'\hat{\alpha}.
\end{aligned}$$

Returning to our example, $\operatorname{rank}(W) = 2 \Rightarrow d'\alpha$ is estimable $\forall\, d \in \mathbb{R}^2$.

For example, $d'\alpha$ is estimable for $d' = [1, 0]$, and the LSE is

$$d'\hat{\alpha} = [1, 0] \begin{bmatrix} \bar{y}_{1\cdot} \\ \bar{y}_{2\cdot} - \bar{y}_{1\cdot} \end{bmatrix} = \bar{y}_{1\cdot}.$$

According to Result 3.5,

$$d'S\beta = [1, 0] \begin{bmatrix} 1 & 1 & 0 \\ 0 & -1 & 1 \end{bmatrix} \begin{bmatrix} \mu \\ \tau_1 \\ \tau_2 \end{bmatrix} = [1, 1, 0] \begin{bmatrix} \mu \\ \tau_1 \\ \tau_2 \end{bmatrix} = \mu + \tau_1$$

is also estimable.

The LSE is

$$d'S\hat{\beta} = [1, 0] \begin{bmatrix} 1 & 1 & 0 \\ 0 & -1 & 1 \end{bmatrix} \begin{bmatrix} 0 \\ \bar{y}_{1\cdot} \\ \bar{y}_{2\cdot} \end{bmatrix} = [1, 1, 0] \begin{bmatrix} 0 \\ \bar{y}_{1\cdot} \\ \bar{y}_{2\cdot} \end{bmatrix} = \bar{y}_{1\cdot}.$$
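Result 3.5 says the estimate $d'S\hat{\beta}$ does not depend on which solution $\hat{\beta}$ of the normal equations is used. The sketch below illustrates this under stated assumptions: NumPy, simulated data, hypothetical group sizes `n1` and `n2`, and illustrative variable names. It compares two different solutions of $X'Xb = X'y$: the minimum-norm solution returned by `np.linalg.lstsq`, and the solution $(0, \bar{y}_{1\cdot}, \bar{y}_{2\cdot})'$ used in the example.

```python
import numpy as np

rng = np.random.default_rng(1)   # arbitrary seed (assumption)
n1, n2 = 5, 3                    # hypothetical group sizes
n = n1 + n2

# Indicator of membership in group 2.
g = np.r_[np.zeros(n1), np.ones(n2)]

# X = [1, group-1 indicator, group-2 indicator]; W = [1, group-2 indicator].
X = np.column_stack([np.ones(n), 1.0 - g, g])
W = np.column_stack([np.ones(n), g])
S = np.array([[1.0, 1.0, 0.0], [0.0, -1.0, 1.0]])

y = rng.normal(size=n)           # simulated response (assumption)
ybar1, ybar2 = y[:n1].mean(), y[n1:].mean()

# Unique solution of W'W a = W'y.
alpha_hat = np.linalg.solve(W.T @ W, W.T @ y)

# X'X is singular, so the NE have many solutions. lstsq returns the
# minimum-norm least squares solution, which is one of them.
beta_mn, *_ = np.linalg.lstsq(X, y, rcond=None)

# The particular solution used in the example.
beta_alt = np.array([0.0, ybar1, ybar2])

d = np.array([1.0, 0.0])         # d' alpha = first component of alpha
est_alpha = d @ alpha_hat
est_mn = d @ S @ beta_mn
est_alt = d @ S @ beta_alt

print("beta_mn: ", beta_mn)
print("beta_alt:", beta_alt)
print("d'alpha_hat, d'S beta_mn, d'S beta_alt:", est_alpha, est_mn, est_alt)
```

The two solution vectors generally differ, while the three printed estimates should agree with each other and with the first group's sample mean.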