Some Chapter 2 Notes on Completely Randomized Designs

advertisement

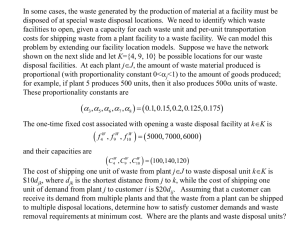

Some Chapter 2 Notes on Completely Randomized Designs Want to compare the weight gains of hogs on 3 diets (A,B,C) 12 hogs available for study Can use table of random digits on p. 624 of Kuehl. Arbitrarily start at Line 6, Column 6. ID Number 1 2 3 4 5 6 7 8 9 10 11 12 Random Number 29 69 24 14 90 78 59 62 39 67 53 73 RANDOMIZE −→ −→−→−→ ID Number 4 3 1 9 11 7 8 10 2 12 6 5 Diet A A A A B B B B C C C C Weight Gain 28 33 30 37 23 28 25 24 31 28 33 28 Response Label y11 y12 y13 y14 y21 y22 y23 y24 y31 y32 y33 y34 Assumptions All observations are independent and y11 , y12 , y13 , y14 ∼ N (µ1 , σ 2 ) y21 , y22 , y23 , y24 ∼ N (µ2 , σ 2 ) y31 , y32 , y33 , y34 ∼ N (µ3 , σ 2 ) yij = µi + eij i = 1, . . . , t (t = 3 in this case) j = 1, . . . , ri (r1 = r2 = r3 = 4 in this case) eij ∼ independent N (0, σ 2 ) eij are experimental errors. If there was no experimental error, the weight gains of all hogs given the same treatment would be exactly the same. If µ1 = 33, what is e13 ? How about e14 ? We want to test H0 : µ1 = µ2 = µ3 versus HA : µi 6= µk for some i, k. If H0 is true, there exists µ such that yij = µ + eij i = 1, . . . , t j = 1, . . . , ri . This is known as the reduced model. The full model is yij + µi + eij i = 1, . . . , t j = 1, . . . , ri . Under the reduced model, all diets are equivalent. Diets don’t affect weight gain. Under the full model, diets matter. Which model seems to fit data the best? (yij = µi + eij i = 1, . . . , t j = 1, . . . , ri ) For the Full Model: We want estimates of the model parameters µ1 , µ2 , µ3 , and σ 2 . Choose estimates of µ1 , µ2 , and µ3 that will make residuals as close to zero as possible. experimental error eij = yij − µi residual êij = yij − µ̂i i i Choose µ̂i so that SSE = ti=1 rj=1 ê2ij = ti=1 rj=1 (yij − µ̂i )2 is as small as possible. Such estimates are called least squares estimates. P P P P Can use calculus or some slightly tricky algebra to show that Pi the least squares estimate of µi is µ̂i = ȳi· = rj=1 yij /ri . What is the least squares estimates of µ2 in the weight gain experiment? Note that SSE = ri t X X 2 (yij − µ̂i ) = i=1 j=1 ri t X X 2 (yij − ȳi· ) = i=1 j=1 t X r (ri − 1)s2i where s2i i=1 i 1 X (yij − ȳi· )2 = ri − 1 j=1 s2i is the sample variance in the ith group. The error variance σ 2 is estimated by M SE = (N = SSE (r1 − 1)s21 + · · · + (rt − 1)s2t = = weighted average of within-group sample variances. N −t (r1 − 1) + · · · + (rt − 1) Pt i=1 ri =total sample size) (yij = µ + eij i = 1, . . . , t j = 1, . . . , ri ) For the Reduced Model: êij = yij − µ̂ We want to estimate µ. Least squares estimate of µ is µ̂ = ȳ·· = SSER = Pt i=1 Pri j=1 (yij − µ̂)2 = Pt SSER = Pt i=1 i=1 Pt i=1 Pri j=1 (yij − µ̂)2 Pri j=1 yij /N . Pri j=1 (yij − ȳ·· )2 = SSTotal SSER ≥ SSEF SSER − SSEF = SST = SSTreatment =sum of squares treatment (SST is reduction in sum of squares error due to including treatment in the model.) SSTotal Pt i=1 Pri j=1 (yij − ȳ·· )2 = SSTreatment + SSError = Pt + Pt i=1 ri (ȳi· − ȳ·· )2 i=1 Pri j=1 (yij − ȳi· )2 Source DF Sum of Squares Mean Square Fo Treatment t−1 Pt SST /(t − 1) MST/MSE Error N −t Pt Pri − ȳi· )2 Total N −1 Pt Pri − ȳ·· )2 i=1 ri (ȳi· i=1 i=1 − ȳ·· )2 j=1 (yij j=1 (yij SSE/(N − t) Fo ∼ F with t − 1 and N − t degrees of freedom when H0 : µ1 = · · · = µt is true.