OPTIMAL STOPPING WITH PRIVATE INFORMATION

advertisement

OPTIMAL STOPPING WITH PRIVATE INFORMATION

THOMAS KRUSE AND PHILIPP STRACK

Abstract. Many economic situations are modeled as stopping problems. Examples are

timing of market entry decisions, search, and irreversible investment. We analyze a principalagent problem where the principal and the agent have dierent preferences over stopping

rules. The agent privately observes a signal that inuences his own and the principal's

payo. Based on his observation the agent decides when to stop. In order to inuence the

agents stopping decision the principal chooses a transfer that conditions only on the time

the agent stopped.

We derive a monotonicity condition such that all cut-o stopping rules can be implemented using such a transfer. The condition generalizes the single-crossing condition from

static mechanism design to optimal stopping problems. We give conditions under which the

transfer is unique and derive a closed form solution based on reected processes. We prove

that there always exists a cut-o rule that is optimal for the principal and, thus, can be

implemented using a posted-price mechanism.

An application of our result leads to a new purely stochastic representation formula for

the value function in optimal stopping problems based on reected processes. Finally, we

apply the model to characterize the set of implementable policies in the context of job search

and irreversible investment.

Dynamic Mechanism Design, Optimal Stopping, Dynamic Implementability,

Posted-Price Mechanism

Keywords:

JEL codes:

D82, D86, C02

Thomas Kruse, Institute for Applied Mathematics, University of Bonn, Endenicher Allee 60, 53113 Bonn,

Germany. Web: http://www.uni-bonn.de/∼tkruse/, Email: tkruse@uni-bonn.de; Philipp Strack, Institute

for Microeconomics & Hausdor Center for Mathematics, University of Bonn, Lennestrasse 43, 53111 Bonn,

Germany. Web: http://www.uni-bonn.de/∼pstrack/, Email: philipp.strack@gmail.com.

We would like to thank Stefan Ankirchner, Dirk Bergemann, Paul Heidhues, Daniel Krähmer, Benny

Moldovanu, Sven Rady, Juuso Toikka and seminar audiences in Yale, Maastricht and Bonn for many helpful

comments and suggestions. All remaining errors are our own.

1

1. Introduction

Many economic situations are modeled as stopping problems. Examples are job search,

timing of market entry decisions, or irreversible investment decisions. In an optimal stopping

problem information arrives over time and a decision maker decides at every point in time

whether to stop or continue. The stopping decision is irreversible and, thus, stopping today

implies forgoing the option to stop later with a potentially larger return.

The literature on optimal stopping assumes that the decision maker observes all relevant

information directly.

There are, however, many economic environments modeled as opti-

mal stopping problems where private information is a crucial feature. Important examples

include the regulation of a rm that decides when to enter a market, or the design of unemployment benets when the worker privately observes his job oers. Consider for example a

pharmaceutical rm that observes the stochastically changing demand for a new product and

needs to decide on whether and when to enter the market for this product. If the rm starts

selling the product, it pays an investment cost and receives the ow returns from selling

the product at all future times. When timing its market entry decision, the rm solves an

irreversible investment problem. As famously shown in the irreversible investment literature,

it is optimal for the rm to delay investment beyond the point where the expected return

of investing becomes positive (for an excellent introduction in the irreversible investment

literature see Dixit and Pindyck 2008). While this investment strategy is optimal for the

rm, it is typically wasteful from a social perspective: As the rm does not internalize the

consumer surplus, it will enter the market too late. A question that naturally arises is how a

regulator can improve the incentives of the rm if the demand is private information of the

rm. The result we derive in this paper implies that the regulator can always implement a

socially ecient market entry decision through a simple posted-price-like-mechanisms that

conditions on when the rm enters.

Our model is a dynamic principal-agent model. The agent privately observes a randomly

changing signal and chooses a stopping rule. The principal observes the stopping decision

of the agent, but not the signal. The principal commits to a transfer in order to inuence

the agent's decision.

Our main result is that under a single-crossing condition all cut-o

rules can be implemented using transfers that condition only on the time the agent stopped.

As the transfer does not depend on private information of the agent it can be implemented

without communication. This feature resembles a posted-price mechanism and makes our

mechanism especially appealing from an applied perspective.

Finally, we show that the

minimal stopping rule that is optimal for the principal is a cut-o rule and thus can be

implemented.

2

We establish our result as follows. First, we derive a dynamic single-crossing condition

that ensures the monotonicity of the continuation gain in the agent's signal at a every point

in time. A consequence of this monotonicity is that the incentive to stop is increasing in the

type. Thus, to implement a cut-o rule it suces to provide incentives to the (marginal) cuto type. Intuitively, taking future transfers as given, the transfer providing incentives to the

marginal type today could be calculated recursively. But as future transfers are endogenous,

this recursive approach is dicult and requires the calculation of a value function at every

point in time.

We circumvent all these problems attached to the recursive approach by

directly constructing transfers using reected stochastic processes.

We dene a reected

process as a Markov process that equals the original process as long as the latter stays below

the cut-o. Once the original process exceeds the cut-o, the reected process follows its

own dynamics and is dened to stay below the cut-o.

We show that every cut-o rule can be implemented through a transfer that equals the

agent's expected payo evaluated at the process reected at the cut-o. To the best of our

knowledge, we are the rst to use reected processes in the context of mechanism design. We

also believe that this paper contributes to the mathematical literature on optimal stopping as

we are able to give a new, completely probabilistic sucient condition for optimal stopping

rules.

The approach of this paper diers from the standard mechanism-design one, since we do

not rely on the revelation principle and direct mechanisms. We do not use direct mechanisms

in order to bypass technical problems that a formalization of the cheap talk protocol between

the principal and the agent would entail: the agent would have to communicate with the

principal constantly and, hence, the space of communication strategies would be very rich.

As optimal communication strategies are not necessarily Markovian, an optimization over

those is a hard problem.

The direct mechanism design approach, nevertheless, has been

successfully used in general discrete-time settings in Bergemann and Välimäki (2010) for

welfare maximization, in Pavan et al. (2012) for revenue maximization, or in continuous

time to solve principal-agent problems in Sannikov (2008) and Williams (2011).

Surprisingly, it turns out that for optimal stopping problems the focus on posted-price

mechanisms is not restrictive from a welfare perspective. We show that a principal-optimal

full information stopping rule (rst best) is always implementable by posted-price mechanisms. Moreover, while direct mechanisms often require communication and a transfer at

every point in time, our posted-price mechanism demands no communication and only a

single transfer at the time of stopping.

The paper proceeds as follows. Section 2 introduces the model. In Section 3 we show that

all cut-o rules are implementable using posted-price mechanisms and derive the transfer.

Furthermore Section 3 establishes that the minimal stopping rule that is optimal for the

3

principal is a cut-o rule. Section 4 presents applications to irreversible investment, search,

and proves that the classical static implementability result is a special case of our dynamic

result. Section 5 concludes.

2. The Model

We rst give an informal presentation of the model before we present all the precise

mathematical assumptions. There is a risk-neutral principal and a risk-neutral agent, who

depending on the stopping rule

τ

and the signal receive

ˆ

X

a payo of

τ

agent: V (τ ) = E

h(t, Xt )dt + g(τ, Xτ )

0

ˆ τ

principal: W (τ ) = E

β(t, Xt )dt + α(τ, Xτ ) .

0

The signal

X

is a Markov process which is only observed by the agent. Depending on his past

observation of the signal the agent can decide at every point in time between stopping and

continuing. In order to inuence the agents decision the principal commits to a transfer

π

π implements the stopping

rule τ if τ is optimal for the agent given he receives the transfer π in addition to his payos

g and h

V (τ ? ) + π(τ ? ) ≥ V (τ ) + π(τ )

for all τ .

that only conditions on the time the agent stopped. The transfer

?

?

The next sections state the precise assumptions of the model.

Evolution of the Private Signal.

2.1.

some xed time horizon

T < ∞.

1

Time is continuous and indexed by

At every point in time

t

t ∈ [0, T ]

for

the agent privately observes a

Xt . The signal is adapted to the ltration F of some ltered probability

space (Ω, P, F = {Ft }t∈[0,T ] ). The ltered probability space supports a one-dimensional F Brownian motion Wt and a F -Poisson random measure N (dt dz) with compensator ν(dz) on

R. We denote by Ñ (dt dz) = N (dt dz) − ν(dz)vt the compensated Poisson random measure.

The time zero signal X0 is distributed according to the cumulative distribution function

F : R → [0, 1]. The signal observed by the agent Xt changes over time and follows the

real valued signal

inhomogeneous Markovian jump diusion dynamics

ˆ

(2.1)

The functions

dXt = µ(t, Xt )dt + σ(t, Xt )dWt +

µ, σ : [0, T ] × R → R

and

γ(t, Xt− , z)Ñ (dtdz).

R

γ : [0, T ] × R × R → R satisfy the

usual assump-

tions listed in Appendix A to ensure that the process exists and is well-behaved .

Under

t,x

these assumptions there exists a path-wise unique solution (Xs )s≥t to (2.1) for every initial

t,x

condition Xt

= x.

1We

show later that discrete time models are a special case where we restrict the set of possible strategies.

4

We assume that jumps preserve the order of signals.

signal

x≤x

0

At every point in time

t

a lower

before a jump has a lower level after a jump

x + γ(t, x, z) ≤ x0 + γ(t, x0 , z)

ν(dz) − a.s.

This implies a comparison result (c.f. Peng and Zhu 2006): The path of a signal starting at

a lower level

times

x ≤ x0

at time

t

is smaller than the path of a signal starting in

at all later

s>t

P − a.s.

0

Xst,x ≤ Xst,x

(2.2)

2.2.

x0

Information, Strategies and Payos of the Agent.

the signal the agent decides when to stop.

Based on his observations of

The agent can only stop at times

T ⊆ [0, T ].

Note that we can embed discrete time in our model by only allowing the agent to stop at a

nite number of points

taking values in

T.

T = {1, 2, . . . , T }.

Denote by

T

the set of

F

adapted stopping rules

A stopping rule is a complete contingent plan which maps every history

(t, (Xs )s≤t ) into a binary stopping decision. The agent is risk-neutral

2

and receives a ow payo h : [0, T ] × R → R and terminal payo g : [0, T ] × R → R. His

value V : T → R of using the stopping rule τ equals

ˆ τ

h(t, Xt )dt + g(τ, Xτ ) .

V (τ ) = E

observed by the agent

0

The problem analyzed in the optimization theory literature is to nd an

ping rule, i.e.

a stopping rule

τ

?

such that for all stopping rules

agent-optimal stop-

τ ∈T

V (τ ? ) ≥ V (τ ) .

h are Lipschitz

assume that g is twice

To ensure that the optimization problem is well posed we assume that

continuous and of polynomial growth in the

dierentiable in the

(2.3)

x

x

variable and once in the

variable. Moreover we

t

g

and

variable. Then the innitesimal

1

(E [g(t + ∆, Xt+∆ )|Xt = x] − g(t, x))

∆&0 ∆

(Lg)(t, x) = lim

is well dened for every

t

and every

x

and we have the identity

1

(Lg)(t, x) = gt (t, x) + µ(t, x)gx (t, x) + σ(t, x)2 gxx (t, x)

2

ˆ

+ g(t, x + γ(t, x, z)) − g(t, x) − gx (t, x)γ(t, x, z)ν(dz).

R

Finally we assume that the payos of the agent satisfy the following single-crossing condition

2Note

extendedito include a ow payo after stopping, i.e. payos of the form

h´ that our model can easily

´T

τ

E 0 h(t, Xt )dt + g(τ, Xτ ) + τ h̃(t, Xt )dt .

5

Denition 1

.

(Dynamic Single-Crossing)

x 7→ h(t, x) + (Lg)(t, x)

The mapping

is non-

increasing.

The dynamic single-crossing condition means that if after every history

Ft

the expected

change in the payo of the agent if he waits an innitesimal short time is decreasing in the

current signal

lim

∆&0

1

∆

Xt = x

ˆ t+∆

E

ˆ

t

h(s, Xs )dt + g(t, Xt ) | Ft

h(s, Xs )ds + g(t + ∆, Xt+∆ ) −

0

0

ˆ t+∆

1

= lim E

h(s, Xs )dt + g(t + ∆, Xt+∆ ) − g(t, Xt ) | Xt = x

∆&0 ∆

t

= h(t, x) + (Lg)(t, x) .

The dynamic single-crossing condition is a condition naturally satised in most optimal

stopping problems. Examples in the economic literature are irreversible investment (Dixit

and Pindyck 2008), learning in one armed bandit models, job and other search problems as

well as American options.

2.3.

Information, Strategies and Payos of the Principal.

Similar to the agent the

W :T →R

β : [0, T ] × R → R and terminal payo α : [0, T ] × R → R

ˆ τ

β(t, Xt )dt + α(τ, Xτ ) .

W (τ ) = E

principal is risk neutral and wants to maximize the functional

of a ow payo

which is the sum

0

α, β

g, h as

The payos

of the principal satisfy the same regularity assumptions as the payos of

the agent

well as the dynamic single-crossing condition. We call a stopping time

principal-optimal

if it maximizes the utility of the principal,

W (τ ? ) ≥ W (τ )

for all

τ?

τ ∈ T.

Note that the principal optimal stopping time (rst best) is the stopping time the principal

would like to use if he observes the signal

X.

The principal however, does not observe the signal

of

X0

signal

and the probability measure

X

P

X.

He only knows the distribution

describing the evolution of

X,

i.e

µ, σ

and

γ.

F

As the

is unobservable the principal has no information about the realized ow payos of

the agent or the agents expectation about future payos. The only information the principal

observes is the realized stopping decision of the agent.

she commits to a transfer scheme

π : [0, T ] → R

In order to inuence this decision

that depends only on the time the agent

stopped. As the transfer only conditions on publicly observable information it requires no

communication between the principal and the agent and can be interpreted as a posted-price.

We dene the set of stopping rules that are implementable through a transfer

6

Denition 2 (Implementability).

E

(2.4)

τ?

τ? ∈ T

ˆ

h(t, Xt )dt + g(τ , Xτ ? ) + π(τ ) ≥ E

?

π

τ

?

0

A transfer

is implemented by the transfer

π

τ ∈T

if for all stopping rules

ˆ

A stopping rule

h(t, Xt )dt + g(τ, Xτ ) + π(τ ) .

0

implements the stopping rule

if he receives the transfer

π

τ?

if the stopping rule

in addition to his payos

h

and

g.

τ?

is optimal for the agent

Implementability generalizes

the notion of optimality for stopping rules, as a stopping rule is optimal if and only if it is

3

implemented by a constant transfer.

3. Implementable Stopping Rules

We introduce the notion of cut-o rules. Cut-o rules are stopping rules such that the

agent stops the rst time the signal

Denition 3 (Cut-O Rule).

function

b : [0, T ] → R

Xt

exceeds a time-dependent threshold

A stopping rule

τ ∈T

b.

is called a cut-o rule if there exists a

such that

τ = inf{t ≥ 0 | Xt ≥ b(t)}.

In this case we write

τ = τb .

If

b

is càdlàg we call

τb

a regular cut-o rule.

4

In Section 3.2 we show that all regular cut-o rules are implementable. The associated

transfer admits an explicit representation in terms of the reected version of

introduce in Section 3.1.

X

which we

Under the assumption, that the agent stops the rst time it is

optimal to stop we show in Section 3.3 that every implementable stopping rule, is a cut-o

rule.

Reected Processes.

b : [0, T ] → R the reected version of X is a

process X̃ which evolves according to the same dynamics as X as long it is strictly below b

but is pushed downwards any time it tries to exceed b.

3.1.

Denition

(X̃t )0≤t≤T

For a given barrier

.

(Reected Process)

on

(Ω, P, F)

Let

b : [0, T ] → R

is called a reected version of

be a càdlàg function.

X

with barrier

b

A processes

if it satises the

conditions

1)

X̃

is constrained to stay below

X

Xt = X̃t

2) Until

b:

For every

t ∈ [0, T ]

X̃t ≤ b(t) a.s.

0 ≤ t < τb we have

we have

hits the barrier both processes coincide: For every

a.s.

3The

denition of implementability does not require a regularity assumption on the transfer π ensuring

that the resulting optimization problem of the agent is well posed, as all transfers that lead to an ill-posed

optimization problem implement no stopping rule and thus are irrelevant.

4A function b : [0, T ] → R is called càdlàg if it is right-continuous and has left-limits.

7

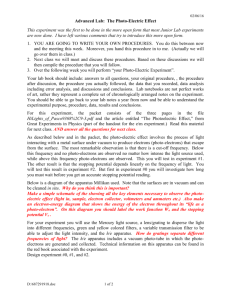

Random Walk X t , Reflected RW X t

18

17

16

15

14

13

12

11

10

9

8

7

6

5

4

3

2

1

0

10

20

Brownian Motion X t , Reflected BM X t

30

40

18

17

16

15

14

13

12

11

10

9

8

7

6

5

4

3

2

1

t 0

10

20

30

Figure 3.1. Realization of a random walk (left) and a Brownian motion

(right) starting in

3)

X̃

X0 = 8

reected at the constant barrier

X : For every t ∈ [0, T ] we have X̃t ≤ Xt

barrier, X̃ is at b: X̃τb = b(τb ) a.s.

is always smaller than

4) When

X

hits the

Moreover, we assume that for every stopping rule τ ∈

τ,η

variable η there exists a process (X̃t )τ ≤t≤T such that

5)

6)

7)

b(t) = 10.

T

and every

Fτ

a.s.

measurable random

X̃ τ,η starts at time τ in η : P[X̃ττ,η = η] = 1.

X̃ has the strong Markov Property: We have X̃tτ,X̃τ = X̃t a.s. for every t ∈ [τ, T ].

0

A higher value x ≥ x of X̃ at time t leads to higher values of X̃ at all later times

0

s ≥ t: X̃st,x ≤ X̃st,x a.s.

The next Proposition ensures the existence of a reected version of

X

for a wide range of

settings.

Proposition 4. Let b be a càdlàg function.

a) If X has no jumps (i.e. γ = 0), then there exists a version of X reected at b.

b) If X is non decreasing and b non increasing, then a version of X reected at b

is given by X̃sτ,η = min{Xsτ,η , b(s)}.

Proposition 4 shows that reected versions of the process exist for very large classes of environments, especially Diusion processes. One assumption of Proposition 4 will be satised

in all the examples we discuss later.

3.2.

All Cut-O Rules are implementable.

In this section we prove that every cut-o

rule is implementable. For a given cut-o rule we dene the transfer and explicitly verify

that it implements the cut-o rule. The transfer equals the expected future change in payo

evaluated at the process reected at the cut-o.

8

40

t

Theorem 5. Let b : [0, T ] → R such that there exists a version X̃ of X reected at b. Then

τb is implementable by the transfer

ˆ T

t,b(t)

t,b(t)

(3.1)

π(t) = E

h(s, X̃s ) + (Lg)(s, X̃s )ds .

t

Proof.

Let

(Lg)(t, x).

π

be given by Equation (3.1). To shorten notation we dene

E

ˆ

τ

ˆ

h(t, Xt )dt + g(τ, Xτ ) + π(τ ) ≤ E

X

g

is a Feller process and the generator of

E

ˆ

τ

z(s, Xs )ds + π(τ ) ≤ E

ˆ

First, we prove that the stopping

ˆ

E 1{τb <τ }

0

= E 1{τb <τ }

τb

z(s, Xs )ds + π(τb ) .

0

0

min{τ, τb }

τ

performs at least as well as

τ.

We readily

z(s, Xs )ds + π(τ )

ˆ

ˆ

τb

ˆ

τ

τb

T

z(s, X̃sτ,b(τ ) )ds

z(s, Xs )ds +

z(s, Xs )ds +

0

.

τ

The comparison principle for the reected process

for every time

h(t, Xt )dt + g(τb , Xτb )ds + π(τb ) .

is well dened we can apply Dynkin's formula

to get that (3.2) is equivalent to

compute

τb

0

0

As

is non-increasing. We need to show

τ ∈T

for all stopping times

(3.2)

x 7→ z(t, x)

By the single-crossing condition

z(t, x) = h(t, x) +

X̃ τb ,b(τb )

with the original process

X

yields

s ≥ τb

after the process hit the barrier that the reected process is not greater

τb ,b(τb )

than the original process Xs ≥ X̃s

. As the reected process X̃ is Markovian it follows

τ ,b(τb )

τb ,b(τb )

τ,X̃τ b

that X̃s

= X̃s

for all times s ≥ τ > τb . By the comparison principle for reected

s ≥ τ > τb

the reected process started in (τ, b(τ )) is not

τb ,b(τb )

τb ,b(τb )

τ,b(τ )

smaller than the reected process started in (τ, X̃τ

), i.e. X̃s

≥ X̃sτ,X̃τ

. These

processes we have that for all times

two inequalities combined with the monotonicity of

ˆ

ˆ

τ

ˆ

z(s, X̃sτb ,b(τb ) )ds

τ

τb

ˆ

T

z(s, X̃sτ,b(τ ) )ds

yield that for all

τ

z(s, Xs )ds ≤

τb

z

9

T

z(s, X̃sτb ,b(τb ) )ds .

≤

τ

τb < τ

τb < τ the stopping rule τb

the stopping rule τ

ˆ τ

E 1{τb <τ }

z(s, Xs )ds + π(τ )

Hence conditional on

than

0

ˆ

≤ E 1{τb <τ }

= E 1{τb <τ }

ˆ

ˆ

τb

gives the agent a higher expected payo

τ

z(s, X̃sτb ,b(τb ) )ds

z(s, Xs )ds +

τb

T

z(s, X̃sτb ,b(τb ) )ds

+

τ

τb

0

ˆ

z(s, Xs )ds + π(τb )

.

0

Consequently it is never optimal to continue after

τb .

The agent is at least as well of if he

min{τ, τb } instead of τ

τ

E

z(s, Xs )ds + π(τ )

0

ˆ τ

ˆ τ

= E 1{τb <τ }

z(s, Xs )ds + π(τ ) + E 1{τb ≥τ }

z(s, Xs )ds + π(τ )

0

0

ˆ τ ∧τb

z(s, Xs )ds + π(τ ∧ τb ) .

≤E

uses the stopping rule

ˆ

0

Thus it suces to consider stopping rules

E

ˆ

τ

τ ≤ τb .

We have

z(s, Xs )ds + π(τ )

0

=E

ˆ

ˆ

τ

z(s, X̃sτ,b(τ ) )ds

z(s, Xs )ds +

0

ˆ

τb

τ

T

z(s, X̃sτ,b(τ ) )ds

+

.

τb

τ,b(τ )

for all s ≥ τ.

X̃sτ,Xτ ≤ X̃s

By the minimality property of reected processes the original process X and the reected

τ,X

τ,Xτ

coincide for all times before they hit the barrier, i.e. Xs = X̃s τ for all s < τb .

process X̃

τb ,b(τb )

Moreover, the Markov property of the reected process yields X̃s

= X̃sτ,Xτ for s ≥ τb .

From the comparison principle for reected processes follows

10

The monotonicity of

E

ˆ

τ

z

implies

ˆ

z(s, Xs )ds + π(τ ) = E

0

ˆ

τ

=E

ˆ

0

z(s, X̃sτ,Xτ )ds

ˆ

τ

ˆ

ˆ

τb

z(s, X̃sτ,Xτ )ds

ˆ

τ

z(s, X̃sτ,b(τ ) )ds

τb

ˆ

τb

z(s, Xsτ,Xτ )ds

T

z(s, X̃sτb ,b(τb ) )ds

+

τb

τ

τb

T

+

τ

z(s, Xs )ds +

ˆ

τ

z(s, Xs )ds +

0

=E

T

z(s, Xs )ds +

ˆ

τ

ˆ

τ

0

=E

z(s, X̃sτ,b(τ ) )ds

z(s, Xs )ds +

0

≤E

T

h(t, Xt )dt + g(τb , Xτb ) + π(τb ) .

0

3.3.

All Implementable Stopping Rules are Cut-o Rules.

a xed time-t signal

payo if

For a xed transfer

π

and

π

x = Xt the agent's continuation value w (t, x) is the increase in expected

the agent uses the optimal continuation strategy instead of stopping at time t

ˆ τ

t,x

t,x

π

h(s, Xs )ds + g(τ, Xτ ) + π(τ ) − g(t, x) − π(t).

w (t, x) = sup E

τ ∈Tt,T

t

The continuation value satises the following properties.

5

Lemma 6. The mapping x 7→ wπ (t, x) is non-increasing and Lipschitz continuous.

By denition the continuation value is non-negative. If the continuation value is positive

it is never optimal for the agent to stop. If the continuation value equals zero it is optimal

to stop immediately but potentially there exists another strategy that stops later and is

also optimal. Clearly if the agent is indierent strategies that randomize over stopping and

continuing are also optimal.

Denition 7 (Minimal Stopping Rule). We call a stopping time τ

the transfer

π

which is implemented by

minimal if and only if the agent stops the rst time it is optimal to stop, i.e.

τ = inf{t ≥ 0 | wπ (t, Xt ) = 0}.

The restriction to minimal stopping rules allows us to excludes non-generic cases.

next proposition shows only cut-o rules can be implemented using a transfer

π

The

if we assume

that the agent stops the rst time it is optimal for him.

5The

monotonicity of x 7→ wπ (t, x) and the resulting form of the continuation region is shown in Jacka and

Lynn (1992) for the case that X is a Itô diusion.

11

Proposition 8. Let the stopping rule τ be implemented by the transfer π and minimal then

τ is a cut-o rule.

Proof. We x a point in time t ∈ [0, T ] and introduce the stopping region Dt = {x ∈

R|wπ (t, x) = 0}. Let x ∈ Dt and x0 ≥ x. Then Lemma 6 implies that the continuation value

wπ (t, x0 )

at

x0

is smaller than the continuation value

stopping region

Dt

as well. This implies that

Dt = [b(t), ∞)

at

x

and hence

x0

is in the

is an interval which is unbounded on the

π

x → w (t, x) is continuous and hence Dt is closed. Therefore

some b(t) ∈ R. This implies that τ is a cut-o rule with barrier b

right. By Lemma 6 the function

we have

Dt

wπ (t, x)

for

τ = inf{t ≥ 0 | Xt ∈ Dt } = inf{t ≥ 0 | Xt ≥ b(t)}.

3.4.

A Principal-Optimal Policy is Implementable.

principal-optimal policy is implementable.

payo of the principal

W (τ ) = E

ˆ

In this section we prove that a

A principal-optimal policy that maximizes the

τ

β(t, Xt )dt + α(τ, Xτ ) .

0

Recall that we made no assumptions on the payos

a, b

of the principal, apart from dier-

entiability and the single-crossing assumption.

Theorem 9. There exists a principal-optimal stopping rule that is implementable.

Proof.

Assume that the preferences of the principal and the agent are perfectly aligned

V ≡ W.

It follows from Proposition 8 that the minimal stopping rule implemented by the

transfer of zero

π ≡0

is a cut-o rule. As the preferences of the agent and the principal

are the same it follows that the minimal principal-optimal stopping rule is a cut-o rule. By

Theorem 5 all cut-o rules can be implemented and it follows that the minimal principal-

optimal stopping rule is implementable.

3.5.

Uniqueness of The Payment.

payment implementing a cut-o rule

τb

In this section we derive conditions such that the

is uniquely determined up to a constant.

Proposition 10. Let X be a regular continuous diusion (γ = 0 and σ bounded away from

zero) and the barrier b be continuously dierentiable, then there exists up to an additive

constant at most one continuously dierentiable transfer π : [0, T ] → R implementing a

cut-o rule τb .

Proof.

Let

π

denote a continuously dierentiable transfer implementing the stopping rule

We assume that

π(T ) = 0

and show that

π

τb .

is uniquely determined. The value function is

12

dened by

v (t, x) = sup E

π

(3.3)

ˆ

τ ∈Tt,T

Since

g, h

and

π

τ

h(s, Xst,x )ds

+

g(τ, Xτt,x )

+ π(τ ) .

t

v π is continuously dierentiable in t

x < b(t). Moreover, since τb is an optimal

are suciently smooth it follows that

and twice continuously dierentiable in

x

for all

stopping time in (3.3) the value function is also given by

v (t, x) = E

π

ˆ

τb

h(s, Xst,x )ds

g(τb , Xτt,x

)

b

+

t

In particular, at every point

x < b(t)

+ π(τb ) .

below the barrier it is optimal to continue, which

implies that

Lv π (t, x) + h(t, x) = 0.

(3.4)

On the barrier it is optimal to stop, which yields

v π (t, b(t)) = g(t, b(t)) + π(t)

(3.5)

for all

t ∈ [0, T ].

Since the agent has to stop at time

T

we have for all

x∈R

v π (T, x) = g(T, x).

(3.6)

Moreover the smooth pasting principle implies that

∂x v π (t, b(t)) = ∂x g(t, b(t)).

(3.7)

Observe that by Ladyzhenskaja et al. (1968; Theorem 5.3 in Chapter 4) there exists a

unique solution to Equations (3.4),(3.6) and (3.7). As these three equations do not depend

on the transfer

π

the value function does not depend on

dierentiable transfers

by Equation (3.5)

π

π

with

π(T ) = 0

π

neither. So for all continuously

the associated value functions coincide

is uniquely determined by

π

π(t) = v (t, b(t)) − g(t, b(t)).

v π = v.

But

4. Applications and Examples

4.1.

Irreversible Investment and Regulation.

In this section we present an application

of our result to the classical irreversible investment literature. A pharmaceutical rm observes demand for a new product

X.

The demand

Xt

is a geometric Brownian motion with

drift

dXt = Xt µdt + Xt σdWt ,

0 ≤ µ < r and σ > 0. If the rm starts selling the product at time t it pays continuous

investment cost c : R+ → R+ and receives the ow returns from selling the product. The

where

13

total expected payo of the rm equals

ˆ

V (τ ) = E −c(τ ) +

∞

−rt

e

τ

−rτ Xτ

Xt dt = E −c(τ ) + e

.

r−µ

When timing its market entry decision the rm solves an irreversible investment problem. As

famously shown in the irreversible investment literature (see for example Dixit and Pindyck

(2008)) it is optimal for the rm to delay investment beyond the point where the net present

value

Xt

−c(t) + e−rt r−µ

of investing becomes positive. While this behavior is optimal for the

rm it is wasteful from a social perspective. Assume that the consumers discount at the same

rate have an additional gain

Γ>0

society equals

W (τ ) = V (τ ) + E

ˆ

from the pharmaceutical such that the overall benet of

∞

−rt

e

τ

−rτ (1 + Γ)Xτ

ΓXt dt = E −c(τ ) + e

.

r−µ

As the rm does not internalize the benet of the consumers it will enter the market too

late.

Proposition 11. Let

τ V = arg supτ V (τ ) be the optimal stopping time of the rm and

τ W = arg supτ W (τ ) be the socially optimal stopping time, then we have that

τW < τV .

A question that naturally arises is how a regulator could improve the incentives of the rm

if demand is private information of the rm. By Theorem 1 we know that using a simple

posted-price mechanisms the regulator can always implement the socially ecient market

π.

V (τ ) = E [g(τ, Xτ )] .The

entry decision. In the next paragraph we will explicitly calculate the associated transfer

x

−c(t), the rm faces the maximization problem

e−rt r−µ

g(t, x) =

generator Lg is given

Dene

by

(Lg)(t, x) = −c0 (t) − re−rt

Clearly

(Lg)(t, x)

is decreasing in

x

x

µx

+ e−rt

= −c0 (t) − e−rt x .

r−µ

r−µ

and thus the dynamic single-crossing condition is satis-

ed. The transfer is given by

π(t) = E

ˆ

T

(Lg)(s, X̃st,b(t) )ds

=E

t

ˆ

14

0

−rs

−c (s) − e

t

= (c(T ) − c(t)) − φb (t, b(t)) ,

T

X̃st,b(t) ds

where

φ

φb (t, x) = E

h´

T

t

e−rs X̃st,x ds

i

is the expectation of the reected process

X̃ .The function

is the unique solution to the partial dierential equation

This allows

4.2.

φx (t, x) = 0 for all x = b(t)

x2 σ 2

φt (t, x) + φx (t, x)µx + φxx (t, x)

= e−rt x for all x < b(t) .

2

one to easily calculate φ numerically.

Constant Processes and the Relation to Static Mechanism Design.

In this sec-

tion we show that for the special case of constant processes our setting reduces to the classical

static mechanism design setting analyzed among others by Mirrlees (1971) and Guesnerie

and Laont (1984). We will explicitly construct the reected version

X̃

of the process

X.

In

this context the set of cut-o rules is equivalent to the set of monotone allocations and thus

we recover the famous result that every monotone allocation is implementable.

Consider the case without ow transfers

zero, i.e.

Xt = X0

for all

t ∈ [0, T ].

h ≡ 0.

Let the signal

X

be constant after time

As the signal is constant after time zero the private

information is one dimensional. Consequently the outcome of any (deterministic) stopping

X0 . Henceforth we dene τ̂ : R → [0, T ] as a function

of the initial signal τ̂ (x) = E [τ |X0 = x]. As the outcome τ̂ (X0 ) depends only on the initial

signal X0 we can dene a transfer π̂ : R → R that only conditions on X0 by π̂(x) = π(τ (x)) .

rule

τ

depends only on the initial signal

Implementability reduces to the following well known denition of static Implementability

(Guesnerie and Laont (1984), Denition 1)

Denition 12 (Static Implementability). An allocation rule τ : R → [0, T ] is implementable

if there exists a transfer

π̂ : R → R

such that for all

x, x0 ∈ X

g(τ̂ (x), x) + π̂(x) ≥ g(τ̂ (x0 ), x) + π̂(x0 ) .

As famously shown by Mirrlees (1971) and Guesnerie and Laont (1984) any monotone

decreasing allocation rule is implementable (Guesnerie and Laont (1984), Theorem 2).

Furthermore the transfers implementing

ˆ

π̂(x) =

x

with some constant

x

τ̂

6

are of the from

∂

g(τ̂ (z), z)dz − g(τ̂ (x), x) + c ,

∂x

c ∈ R.

In the following paragraph we show how our dynamic single-crossing condition, cut-o

rules and the transfer simplify to the results well-known from static mechanism design. The

6Both

Mirrlees and Guesnerie & Laont analyze a more general setting as they do not assume that the agent

is risk-neutral or put dierently that his utility is linear in the transfer.

15

generator

Lg

equals

1

E [g(t + ∆, Xt+∆ ) − g(t, x) | Xt = x]

∆&0 ∆

g(t + ∆, x) − g(t, x)

∂

= lim

= g(t, x) .

∆&0

∆

∂t

(Lg)(t, x) = lim

Thus the dynamic single-crossing condition (1) simplies to

t ∈ [0, T ]

and all

x ∈ R.

As the process

X

b

barrier

X

a version of the process

b.

x

for all

is constant, for every barrier there exists a

decreasing barrier that induces the same stopping time.

we restrict attention to decreasing barriers

∂

g(t, x) decreasing in

∂t

Hence without loss of generality

It is easy to check that for every decreasing

reected at

b

is given by

X̃st,x = min{x, b(t)} .

From the fact that the reected version of the process

t,b(t)

X̃s

started on the barrier at time

t stays on the barrier at all future times s > t follows that it is deterministic and given

t,b(t)

X̃s

= b(s). Hence the transfer implementing the stopping time τb equals

ˆ T

ˆ T

ˆ T

∂

∂

t,b(t)

(Lg)(s, X̃s )ds = E

π(t) = E

g(s, b(s))ds =

g(s, b(s))ds .

∂t

∂t

t

t

t

Finally we show that the transfer

π(τ (x))

Proposition 13. Let

π(τ (x))

Proof.

for all

τ : [x, x] → [0, T ]

x ∈ [x, x].

Recall that

π̂

is given by

ˆ

equals the transfer

π̂(x)

up to a constant.

be measurable and non-increasing then

x

∂x g(τ̂ (z), z)dz − g(τ̂ (x), x) + c ,

π̂(x) =

x

for some constant

c ∈ R.

We x

ˆ

x ∈ [x, x]

and compute

x

π̂(x) − c =

∂x g(τ̂ (z), z) − ∂x g(τ̂ (x), z)dz − g(τ̂ (x), x)

x

ˆ

x

ˆ

τ (z)

∂t ∂x g(s, z)dsdz − g(τ̂ (x), x).

=

x

τ (x)

16

by

π̂(x) =

Then Fubini's Theorem implies

ˆ

τ (x)

ˆ

b(s)

∂x ∂t g(s, z)dzds − g(τ (x), x)

π̂(x) − c =

τ (x)

ˆ

x

τ (x)

∂t g(s, b(s)) − ∂t g(s, x)ds − g(τ (x), x)

=

τ (x)

ˆ

τ (x)

∂t g(s, b(s))ds − g(τ (x), x).

=

Choosing

4.3.

c=

τ (x)

´T

τ (x)

∂t g(s, b(s))ds + g(τ (x), x)

yields

π̂(x) = π(τ (x)).

Monotone Increasing Processes and Decreasing Cut-O.

In this section we

restrict attention to increasing processes and stopping times with decreasing cut-o

easy to check that for every decreasing barrier

b

a version of the process

X

b.

reected at

It is

b

is

given by

X̃st,x = min{x, b(t)} .

From the fact that the reected version of the process

t,b(t)

X̃s

started on the barrier at time

t stays on the barrier at all future times s > t follows that it is deterministic and given

t,b(t)

X̃s

= b(s). Hence the transfer implementing the stopping time τb equals

ˆ T

ˆ T

ˆ T

t,b(t)

(4.1)π(t) = E

(Lg)(s, b(s))ds =

(Lg)(s, b(s))ds .

(Lg)(s, X̃s )ds = E

t

t

4.4.

Search with Recall.

recall.

t

In the next section we apply our result to (job) search with

Oers arrive according to a Poisson process

(wi )i∈N are

G : R → R. Xts,t is

Oers

by

(Nt )t∈[0,T ]

with constant rate

λ > 0.

identically and independently distributed according to the distribution

the best oer the agent got in the past, where we assume that the agent

started with an oer of

x∈R

at time

s

Xts,x = max{wi | i ≤ Nt − 1} ∨ z .

We assume that the agent receives a nal payo

The generator of

g

equals

ˆ

(Lg)(t, x) = −rx + λ

g(t, x) = e−rt x

and a ow payo of zero.

∞

(1 − G(z))dz .

x

G(z) ≤ 1 the generator is monotone decreasing in x and thus the single-crossing condition

is satised. By denition the process X is monotone increasing and by equation (4.1) the

transfer that implements a monotone cut-o b is given by

ˆ T

ˆ T

ˆ ∞

π(t) =

(Lg)(s, b(s))ds =

−rb(s) + λ

(1 − G(z))dzds .

As

t

t

b(s)

17

4.5.

Application to Optimal Stopping.

As an immediate consequence of Theorem (5)

we establish a link between reected processes and optimal stopping rules. If a stopping rule

τ?

is implemented by a constant transfer, then

sup E

(4.2)

τ ∈T

ˆ

τ

τ?

is optimal in the stopping problem

h(t, Xt )dt + g(τ, Xτ ) .

0

This observation leads to the following purely probabilistic sucient condition for optimal

stopping rules.

Corollary 14. Let b : [0, T ] → R be a barrier such that the reected process satises

E

ˆ

T

h(s, X̃st,b(t) )

+

(Lg)(s, X̃st,b(t) )ds

=0

t

for all t ∈ [0, T ]. Then the associated cut-o rule τb solves the optimal stopping problem

(4.2).

Conclusion

We have shown under weak assumptions that in optimal stopping settings a stopping rule

that is optimal for the principal can be implemented by a simple posted-price mechanism.

The new approach we introduced is a forward construction of transfers. The transfer is the

expected payo evaluated at the process reected at the time-dependent cut-o one wants

to implement.

It is an interesting question for future research whether a similar approach can be used in

environments where non cut-o rules are of interest. Two such situations arise naturally.

First, with multiple agents, the socially-ecient cut-o depends not only on time but also

on the signals of the other agents. We think that a generalization of the method developed

in this paper can be used to implement the principal-optimal behavior in this multi-agent

settings. As the cut-o depends on the other agents' signals, one needs to dene processes

reected at stochastic barriers. If one denes payments as expected payos evaluated at the

process reected at the stochastic cut-o, we conjecture that our implementability result

(Theorem 5) holds also for those generalized stochastic cut-os.

The payment, however,

will depend on the other agents' signals and, thus, the mechanism will require constant

communication between the principal and the agents.

A second, more challenging, problem is revenue maximization. For special cases the revenue maximizing policy depends only on the initial and the current signal. In those cases

one might be able to use the method developed here for revenue maximization by oering

at time zero a menu of posted-price mechanisms.

Those extensions illustrate that posted-price transfers without communication are often

insucient in more general dynamic setups. We hope, however, that due to its simplicity

18

and the explicit characterization of transfers, our result will prove useful to introduce private

information into the wide range of economic situations that are modeled as single-agent

optimal stopping problems.

Appendix

Appendix A.

thatµ, σ and

γ

We impose the following assumptions on the coecients in (2.1). We assume

are Lipschitz continuous, i.e. there exist positive constants

L

and

L(z)

such

that

|µ(t, x) − µ(t, y)| + |σ(t, x) − σ(t, y)| ≤ L|x − y|

|γ(t, x, z) − γ(t, y, z)| ≤ L(z)|x − y|

for all

t ∈ [0, T ] and x, y ∈ R.

Moreover, we assume that these functions are of linear growth,

K

i.e. there exist positive constants

and

K(z)

such that

|µ(t, x)| + |σ(t, x)| ≤ K(1 + |x|)

|γ(t, x, z)| ≤ K(z)(1 + |x|)

for all

t ∈ [0, T ]

and

x ∈ R.

Furthermore the constants

ˆ

K(z)

satisfy

p ≥ 2.

Proof of Lemma 6.

Let us rst show monotonicity of

the continuation value satises

w (t, x) = sup E

π

ˆ

τ ∈Tt,T

for all

and

K(z)p + L(z)p ν(dz) < ∞

R

for all

L(z)

(t, x) ∈ [0, T ] × R.

τ

h(s, Xst,x )

+

Lg(s, Xst,x )ds

By Dynkin's formula

+ π(τ ) − π(t)

t

The dynamic single-crossing condition and Equation (2.2) imply

that the ow payos given that

payos if it started in

x 7→ wπ (t, x).

X

started in a higher level

x0 ≥ x

are smaller than the ow

x

0

0

h(s, Xst,x ) + Lg(s, Xst,x ) ≤ h(s, Xst,x ) + Lg(s, Xst,x )

s ≥ t. Hence, we

x, y ∈ R. We have

for all

and

have

wπ (t, x0 ) ≤ wπ (t, x).

For the Lipschitz continuity x

t ∈ [0, T ]

|wπ (t, x) − wπ (t, y)|

ˆ τ

t,y t,x

t,y t,x

≤ sup E

h(s, Xs ) − h(s, Xs ) ds + g(τ, Xτ ) − g(τ, Xτ ) + |g(t, x) − g(t, y)| .

τ ∈Tt,T

t

19

Let

C

denote the Lipschitz constants of

h

g,

and

i.e.

|h(t, x) − h(t, y)| + |g(t, x) − g(t, y)| ≤ C|x − y|

Then we have

|wπ (t, x) − wπ (t, y)| ≤ 2C E

"

#

t,x

sup Xs − Xst,y + |x − y| .

s∈[t,T ]

C̃

By Theorem 3.2 in Kunita there exists a constant

C̃|x − y|,

such that

E sups∈[t,T ] |Xst,x − Xst,y | ≤

which yields the claim.

Appendix B.

Proof of Proposition 4.

For case b) it is straightforward to verify that

X̃sτ,η = min{Xsτ,η , b(s)}

satises all the conditions from the denition of a reected process.

For case a) we show

that the solution to a reected stochastic dierential equation (RSDE) is a reected version

of

X.

A solution to a RSDE with initial condition

satises the equation

ˆ

X̃s = η +

ˆ

s

Moreover,

X̃

is a pair of processes

(X̃, l)

σ(r, X̃r− )dWr − ls ,

t

is constrained to stay below the barrier

X̃s ≤ b(s)

3.4).

Existence of

(X̃, l)

l is non´T

b(s) −

t

and the process

decreasing and minimal in the sense that it only increases on the barrier, i.e.

X̃s dls = 0.

which

s

µ(r, X̃r )dr +

t

(t, η)

follows from Slominski and Wojciechowski (2010; Theorem

Conditions (3.1), (3.1) and (3.1) are satised by denition.

Furthermore pathwise

uniqueness holds, which implies condition (3.1). Note that the solution to the unreected

SDE (2.1) solves the RSDE for

s < τb .

As the solution to the RSDE is unique (3.1) follows.

It remains to verify conditions (3.1) and (3.1). For

ξ1 , ξ2 ∈ R we write (X̃ i , li ) = (X̃ 0,ξi , l0,ξi ),

= 1, 2) and introduce the process ∆s = X̃s1 − X̃s2 . Applying the Meyer-Itô formula Protter

2

(2005; Theorem 71, Chapter 4) to the function x 7→ max(0, x) yields

ˆ s

2

2

max(0, ∆s ) = max(0, ∆0 ) + 2

1{∆r >0} ∆r d∆cr

0

ˆ s

X

+

1{∆r >0} dh∆icr +

max(0, ∆r )2 − max(0, ∆r− )2 ,

(i

0

where

σ

∆c

denotes the continuous part of

implies

ˆ

ˆ

s

1{∆r >0} dh∆icr

0

0<r≤s

∆.

For the last integral the Lipschitz continuity of

ˆ

s

1{∆r >0} (σ(r, X̃r1 )

=

0

−

20

σ(r, X̃r2 ))2 dr

s

max(0, ∆r )2 dr.

≤K

0

The rst integral decomposes into the following terms, which we will consider successively.

By the Lipschitz continuity of

ˆ

b

we have

1{∆r >0} ∆r (b(r, X̃r1 )

2

ˆ

s

−

b(r, X̃r2 ))dr

s

max(0, ∆r )2 dr.

≤ 2K

0

0

Moreover, it follows from the Lipschitz continuity of

ˆ

σ

that the process

s

1{∆r >0} ∆r (σ(r, X̃r1 ) − σ(r, X̃r2 ))dWr

s 7→ 2

0

0.

is a martingale starting in

Hence, it vanishes in expectation. Next, we have

ˆ

s

1{∆r >0} ∆r dlr1,c ≤ 0

−2

0

ˆ

and

ˆ

s

1{∆r >0} ∆r dlr2,c

2

=2

0

0

s

1{X̃r1 >b(r)} ∆r dlr2,c = 0.

Putting everything together, we obtain

E[2

ˆ

ˆ

s

1{∆r >0} ∆r d∆cr ]

≤ 2K

0

s

E[max(0, ∆r )2 ]dr.

0

r ∈ (0, s] such that max(0, ∆r )2 >

max(0, ∆r− )2 . This implies ∆r > 0 and ∆r > ∆r− . Since X̃ i jumps if and only if li jumps

2

2

1

1

2

2

2

1

(i = 1, 2), this is equivalent to X̃r > X̃r and lr − lr− > lr − lr− . We have lr − lr− > 0,

1

2

2

because l is non-decreasing. Hence, l jumps at r , which implies that X̃r = b(r). Thus, we

1

obtain the contradiction X̃r > b(r). This implies

ˆ s

2

2

E[max(0, ∆s ) ] ≤ max(0, ∆0 ) + 3K E[max(0, ∆r )2 ]dr.

Considering the jump terms, assume that there exists

0

Then Gronwall's Lemma yields

For

t>0

yields for

E[max(0, ∆s ) ] ≤ C max(0, ∆0 )2

2

C > 0.

conditional to Ft

for some constant

performing the same computations with the expectation taken

ξ1 , ξ2 ∈ L2 (Ft )

E[max(0, X̃s1 − X̃s2 )2 |Ft ] ≤ C max(0, ξ1 − ξ2 )2

for all

s ∈ [t, T ].

If

ξ1 ≤ ξ2

this directly yields (3.1).

argument.

21

Claim (3.1) follows by the same

References

Bergemann, D. and J. Välimäki (2010): The Dynamic Pivot Mechanism,

rica, 78, 771789.

Dixit, R. and R. Pindyck (2008):

Investment under Uncertainty,

Economet-

Princeton university

press.

Guesnerie, R. and J. Laffont (1984): A Complete Solution to a Class of Principal-

Agent Problems with an Application to the Control of a Self-Managed Firm,

Public Economics, 25, 329369.

Jacka, S. and J. Lynn (1992):

Journal of

Finite-Horizon Optimal Stopping, Obstacle Problems

and the Shape of the Continuation Region,

Stochastics: An International Journal of

Probability and Stochastic Processes, 39, 2542.

Ladyzhenskaja, O., V. Solonnikov, and N. Ural'ceva (1968):

Linear and Quasi-

Linear Equations of Parabolic Type, Translations of Mathematical Monographs, American

Mathematical Society.

Mirrlees, J. (1971): An Exploration in the Theory of Optimum Income Taxation,

Review of Economic Studies, 175208.

The

Pavan, A., I. Segal, and J. Toikka (2012): Dynamic Mechanism Design, .

Peng, S. and X. Zhu (2006): Necessary and sucient condition for comparison theorem of

1-dimensional stochastic dierential equations,

Stochastic processes and their applications,

116, 370380.

Protter, P. (2005):

Stochastic Integration and Dierential Equations,

Applications of

Mathematics, Springer.

Sannikov, Y. (2008):

A continuous-time version of the principal-agent problem,

Review of Economic Studies, 75, 957984.

The

Slominski, L. and T. Wojciechowski (2010): Stochastic Dierential Equations with

Jump Reection at Time-Dependent Barriers,

tions, 120, 1701 1721.

Stochastic Processes and their Applica-

Williams, N. (2011): Persistent private information,

22

Econometrica, 79, 12331275.