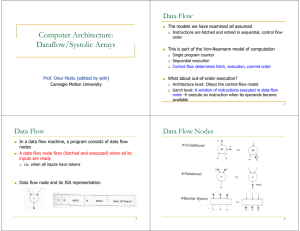

Dataflow Machine Architecture

ARTHUR H. VEEN

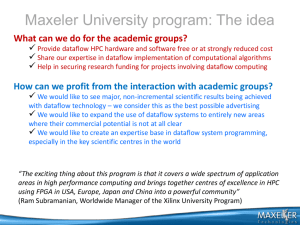
