by Jason T. Richards B.A., Psychology (1997)

advertisement

Three-dimensional Spatial Learning in a Virtual Space Station Node

by

Jason T. Richards

B.A., Psychology (1997)

B.S., Mathematics (1998)

University of the Pacific

Submitted to the Department of Aeronautics and Astronautics

in Partial Fulfillment of the Requirements for the Degree of

Master of Science in Aeronautics and Astronautics

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

September 2000

© 2000 Massachusetts Institute of Technology

All Rights Reserved

Signature of Author______________________________________________________________

Department of Aeronautics and Astronautics

August 22, 2000

Certified by____________________________________________________________________

Charles M. Oman

Director, Man-Vehicle Laboratory

Thesis Supervisor

Accepted by___________________________________________________________________

Professor Nesbitt W. Hagood

Chair, Departmental Graduate Office

Department of Aeronautics and Astronautics

2

Three-dimensional Spatial Learning in a Virtual Space Station Node

by

Jason T. Richards

Submitted to the Department of Aeronautics and Astronautics on

August 21, 2000 in Partial Fulfillment of the Requirements for the

Degree of Master of Science in Aeronautics and Astronautics

ABSTRACT

Astronauts find it difficult to recognize their orientation while facing any of the viewing directions in 6ported space station node modules. Our previous experiments tested the spatial memory of human

subjects in 1-G in an analogous cubic virtual environment and showed that humans are able to learn to

orient when instructed to imagine different body orientations while facing in two different directions. Can

subjects do the task when facing in all 6 directions? Does training help? Does spatial memory depend on

the direction of remembered targets relative to the body? Does performance depend on the subject’s

ability to rotate himself mentally and use imagery? How long is ability retained after training? 3D spatial

learning was studied in two virtual cubic chambers, in which a picture of an animal was drawn on each

wall. Through trial-by-trial exposures to a virtual chamber, subjects (n=24) had to memorize the spatial

relationships among the 6 pictures around them and learn to predict the direction to a specific picture if

they were facing any wall in any roll orientation. After learning in one chamber, the procedure was

repeated in a second. Before being tested, subjects received computer-based instructions and practice.

Half of subjects were taught to remember logical picture groupings (strategy), while the remaining

(control) subjects were free to do the task as they saw fit. Subjects’ retention of configurational

knowledge (both chambers) and spatial ability (second chamber only, without feedback) were re-tested 1,

7, and 30 days after initial training. Response time (RT) and percent correct (% correct) learning curves

were measured on all four days, while configurational knowledge was tested on the last three. All subjects

ultimately learned to do the task within 36 trials in either test environment, but performed faster in the

second environment than in the first (especially the strategy-trained group). The strategy group showed

superior % correct and RT for above/behind targets and generally better configurational knowledge.

Retention of configurational knowledge and spatial ability for both groups was good over 30 days. The

subjects who reported using mental imagery (n=8) had higher scores on figure rotation tests and % correct

for left/right targets. Performances by the control group on the experimental tasks were significantly

correlated with those on conventional tests of field independence and 2/3D figure rotation ability.

Strategy training helped those who had poorer mental rotation skills, and those who could not use mental

imagery.

Supported by NASA Cooperative Agreement NCC9-58 with the National Space Biomedical Research Insitute, USA

Thesis Supervisor: Charles M. Oman

Title: Director of the Man-Vehicle Laboratory

3

4

Acknowledgements

–

To Charles “Chuck” M. Oman, PhD, thesis supervisor, and Director of the Man-Vehicle Laboratory,

for giving me the opportunity to come to MIT and be a part of such a prestigious academic

environment. The patience and confidence with which you guided me through the process made it

much easier to handle than it would have been otherwise. I cannot imagine having a better match for

an advisor. Remember to root for my buddy Reichert and the Royals when they’re in town!

–

To Alan Natapoff, PhD, for all of your generosity of knowledge, spirit, and wisdom in supporting me

throughout this project. You lifted my spirits when I was frustrated and helped me maintain

perspective when the goal seemed too far away to see. Your expert advice has been and always will

be of great encouragement and value to me. It was an absolute joy for me to have been blessed with

such charitable mentoring. Once again, thank you. (p.s. “You hang in”… and save my seat!)

–

To Andy Beall, PhD, now at UC-Santa Barbara: The man who made the transition from

undergraduate school in California to graduate school at MIT as smooth as possible. I don’t know if

it’s possible to ask more questions than I asked you in the fall of 1998. But, you were always

incredibly patient, and you always had an answer that worked. Only a Californian could have done all

this without ever being in a bad mood! See you in sunny, SoCal.

–

To Dr. Wayne Shebilske (formerly of Texas A&M, now at Wright State University) for playing a

major role in conceiving the original node experiment described in Sect.2.3.

–

To Hilda Gutierrez, distinguished MVL UROPer, for doing much of the preliminary programming

and modeling for the original node experiment before I arrived as a first-year graduate student.

Thanks for being so compassionate when times were rough.

–

To Rex “Rex-Dog” Wu, former MVLer and roommate for 3 months: I now know how you felt last

year at this time. Thanks for the positive support and companionship, and the hilarious memories of

watching the Terrapins of Maryland play basketball (and lose) against Kathy Sienko’s Kentucky

Wildcats!

–

To Joe Saleh, for the help you so selflessly offered when I was most overwhelmed by the mystique of

MIT. I might have never made through that first semester without your study sessions.

–

To my mother and father, Kathy and Larry Richards, for getting me here. It never would have been

possible without the loving support of you two amazing individuals throughout the years… special

thanks to the notorious and often-used “Bank of Parents” – don’t worry, jobs are on the way. Thank

you so much. I love you dearly!

–

To Ms. Melanie Heather Wright, for making the last 3 months of my life in Boston utterly blissful.

Your heart and spirit are what every man needs. Words will never be able to describe how fortunate I

feel about the intersection of our paths. Mahalo, Honey Girl!

–

To Professors Dava Newman and Larry Young for their guidance, encouragement, and generous

hosting of MVL outings.

The present research was supported by NASA Cooperative Agreement NCC9-58 with the National Space

Biomedical Research Insitute, USA.

5

6

Table of Contents

ABSTRACT

3

ACKNOWLEDGEMENTS

5

TABLE OF CONTENTS

7

LIST OF FIGURES

10

LIST OF TABLES

11

CHAPTER 1: INTRODUCTION

13

1.1 Sense of Static Orientation in 0-G

13

1.2 Problems Related to Space Station Structures

14

1.3 Importance of Spatial Memory in Emergency Situations

15

CHAPTER 2: BACKGROUND

19

2.1 Spatial Memory and Navigation in 1-G

19

2.2 Previous Visual Orientation and Spatial Memory Training

2.2.1 Virtual Environment Training for 1-G Applications

2.2.2 Spatial Orientation Countermeasures for 0-G Applications

21

21

21

2.3 Space Station Node Experiment 1

22

2.4 Space Station Node Experiment 2: The Present Experiment

23

CHAPTER 3: METHOD

25

3.1 Subjects

25

3.2 Materials and Apparatus

3.2.1 Virtual Environment Generator

3.2.2 Virtual Cubic Chamber and Object Arrays

25

25

27

3.3 Procedure

3.3.1 Experiment Timeline

3.3.2 Spatial Learning Trials

3.3.3 Paper-and-Pencil Spatial Ability Tests

3.3.4 Strategy Training and Control Training

3.3.5 Retention Tests

3.3.6 Exit Interviews

289

29

31

33

33

34

35

7

3.4 Experiment Design and Data Analysis

CHAPTER 4: RESULTS

36

38

4.1 Evidence of Learning

38

4.2 Transfer of Learning from the First Array to the Second

38

4.3 Effect of Strategy Training

42

4.4 Effect of Using Mental Imagery

46

4.5 Retention Testing

4.5.1 Spatial Ability Test

4.5.2 Effect of Layoff on Spatial Ability

4.5.3 Configurational Knowledge Test

50

50

51

54

4.6 Predictors of Task Performance

58

4.7 Exit Interview Responses

59

4.8 Relative Target Direction

62

4.9 Array Presentation Order

66

CHAPTER 5: DISCUSSION

69

CHAPTER 6: CONCLUSIONS

72

REFERENCES

77

APPENDIX A: PYTHON/VRUT CODE

79

A.1 Training Day Script for Control Group

79

A.2 Training Day Script for Strategy Group

99

A.3 Script for the Configurational Knowledge Retention Test

104

APPENDIX B: SUBJECT HISTORY QUESTIONNAIRE

121

APPENDIX C: PAPER AND PENCIL TESTS

124

APPENDIX D: INSTRUCTION SLIDE SHOWS

125

D.1. Strategy Group Instructions

125

D.2. Control Group Instructions

134

8

APPENDIX E: TRIAL SEQUENCE AND COUNTERBALANCING

137

APPENDIX F: SCORING CONVENTION FOR CONFIGURATION TEST

139

APPENDIX G: SUBJECT CONSENT FORM

145

9

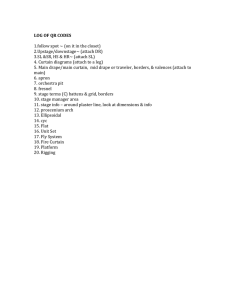

List of Figures

FIGURE 1.1. MIR SPACE STATION SCHEMATIC

FIGURE 1.2. INTERNATIONAL SPACE STATION SCHEMATIC

17

18

FIGURE 3.1. HEAD-MOUNTED DISPLAY SYSTEM

FIGURE 3.2. INTERSENSE IS600-MARK 2 TRACKING SYSTEM

FIGURE 3.3A. WIDE-ANGLE VIEW OF THE INTERIORS OF THE THREE OBJECT ARRAYS,

AS SEEN FROM THE INITIAL, OR “BASELINE,” SIMULATED ORIENTATION

FIGURE 3.3B. SUBJECT WEARING HMD AND PERFORMING EXPERIMENTAL TASK

FIGURE 3.4. EXPERIMENT TIMELINE

FIGURE 3.5. SCHEMATIC OF 3D SPATIAL LEARNING EXPERIMENT TIMELINE FOR

EACH TRIAL.

26

27

FIGURE 4.1. MEAN PERFORMANCE FOR ALL TARGETS, BY SET, WITHIN TRAINING

GROUP ON THE TRAINING DAY

FIGURE 4.2. MEAN PERFORMANCE FOR LEFT/RIGHT TARGETS, BY SET, WITHIN

TRAINING GROUP ON THE TRAINING DAY

FIGURE 4.3. MEAN PERFORMANCE FOR ABOVE/BEHIND TARGETS, BY SET, WITHIN

TRAINING GROUP ON THE TRAINING DAY

FIGURE 4.4. MEAN % CORRECT, BY SET, WITHIN IMAGERY GROUP ACROSS TRAINING

GROUP ON THE TRAINING DAY

FIGURE 4.5. MEAN % CORRECT FOR NON-IMAGERY SUBJECTS, BY SET, WITHIN

TRAINING GROUP

FIGURE 4.6. MEAN PERFORMANCE FOR ALL TARGETS, BY SET, OVER DAYS WITHIN

TRAINING GROUP DATA FOR TRAINING DAY (SETS 4-6) USING SECOND ARRAY

FIGURE 4.7. MEAN PERFORMANCE FOR LEFT/RIGHT TARGETS, BY SET, OVER DAYS

WITHIN TRAINING GROUP DATA FOR TRAINING DAY (SETS 4-6) USING SECOND

ARRAY

FIGURE 4.8. MEAN PERFORMANCE FOR ABOVE/BEHIND TARGETS, BY SET, OVER DAYS

WITHIN TRAINING GROUP DATA FOR TRAINING DAY (SETS 4-6) USING SECOND

ARRAY

FIGURE 4.9. MEAN PERFORMANCE FOR THE STRATEGY GROUP BY RELATIVE-TARGET

DIRECTION OVER DAYS

FIGURE 4.10. MEAN PERFORMANCE FOR THE CONTROL GROUP BY RELATIVE-TARGET

DIRECTION OVER DAYS

FIGURE 4.11. MEAN RT FOR RELATIVE-TARGET-DIRECTION GROUPS WITHIN ARRAY

PRESENTATION ORDER ACROSS TRAINING, BY SET, OVER DAYS

28

28

30

32

43

44

45

47

49

52

53

54

64

65

68

FIGURE E.1. COMBINATIONS, ORDER, AND NOTATION CONVENTION USED FOR TRIALS 138

FIGURE F.1. OBJECT-POSITION CODE IN THE BASELINE ORIENTATION

140

10

List of Tables

TABLE 1-1. GROUP SCORES ON PAPER AND PENCIL TESTS

TABLE 4-1. SIGNIFICANT TRANSFER IN MEAN RT AND % CORRECT BY TRAINING, TARGET

GROUP, AND SET

TABLE 4-2. TRENDS IN PERFORMANCE BY TRAINING, ARRAY PRESENTATION ORDER,

AND SET ON THE TRAINING DAY FOR ABOVE/BEHIND TARGETS

TABLE 4-3. TRENDS IN PERFORMANCE MEANS BY TRAINING, ARRAY PRESENTATION

ORDER, AND SET ON THE TRAINING DAY FOR LEFT/RIGHT TARGETS

TABLE 4-4. TRAINING EFFECTS ON PERFORMANCE BY RELATIVE TARGET DIRECTION,

ARRAY, AND SET ON THE TRAINING DAY

TABLE 4-5. EFFECT OF CLAIMED USE OF MENTAL IMAGERY ON % CORRECT BY SET

TABLE 4-6. EFFECT OF STRATEGY TRAINING ON % CORRECT BY SET AMONG THE

NON-IMAGERY GROUP

TABLE 4-7. CONFIGURATION KNOWLEDGE FOR TRAINING GROUPS BY DAY AND

OBJECT ARRAY

TABLE 4-8. CONFIGURATION TEST MEASUREMENTS FOR THE FIRST ARRAY BY SUBJECT

AND DAY

TABLE 4-9. CONFIGURATION TEST MEASUREMENTS FOR THE SECOND ARRAY BY

SUBJECT AND DAY

TABLE 4-10. SPEARMAN CORRELATION COEFFICIENTS FOR PAPER AND PENCIL TESTS

TABLE 4-11. SUMMARY OF TRAINING DAY EXIT INTERVIEW RESPONSES FOR TRAINING

GROUPS

TABLE 4-12. SUMMARY OF RETENTION DAY EXIT INTERVIEW RESPONSES FOR

TRAINING GROUPS

TABLE 4-13. EFFECT OF RELATIVE-TARGET DIRECTION ON STEADY-STATE PERFORMANCE

BY DAY

TABLE 4-14. ORDER EFFECT ON RT PERFORMANCE, BY RETENTION DAY AND SET, WITHIN

RELATIVE-TARGET DIRECTION

TABLE F-1. UNIQUE RANK-ORDER SCORES

TABLE F-2. DOUBLE COMBINATIONS

34

40

41

41

42

48

50

56

57

58

59

60

61

66

67

141

143

11

12

Chapter 1: INTRODUCTION

Human space flight has been a reality for almost 40 years now. In the beginning, the concern with

space travel was focused mostly on the ability of the human body to withstand exertion of high G-loads

during launch and re-entry. Thanks to the efforts of a multitude of talented engineers, many short-duration

shuttle missions have been implemented with a high rate of success. Humans have also been successfully

sent into orbit to live on space stations like Skylab and Russia’s MIR for up to a year without fatal harm.

Several problems related to spatial orientation and spatial memory have been identified during these

longer missions that have yet to be solved.1

1.1 Sense of Static Orientation in 0-G

Here on Earth, knowing where we are and where we need to go is almost second nature for many

of us. If we are sitting in our living room at home, most of us can point to places of interest in our

communities and describe how to get there with relative ease. However, astronauts have found it much

more difficult to locate major landmarks while working in microgravity. Why is it so much easier to

orient on Earth than in orbital flight? In daily life on Earth, “up” and “down” are defined for us by

gravity, and we always see people and objects upright relative to us. Information from our otolith organs

and other gravireceptors also provide us with salient cues regarding the static orientation of our bodies

relative to the gravitational vertical. Astronauts flying on Skylab (Cooper, 1976) and Shuttle (Oman et al,

1984) missions, however, said that their sense of static orientation is unstable in orbital flight due to the

absence of an intrinsic gravitational “down.” When floating with their feet toward the space station (or

shuttle) “floor” (whose identity is transferred visually from 1-G simulations), crewmembers rarely seem

to have a problem with their sense of self-orientation.

When they work upside down (relative to the learned upright orientation in 1-G training

modules), however, or upright while viewing another crewmember who is working upside down,

1

Some sections of this introduction have been adapted from a research proposal submitted to the National Space

Biomedical Research Institute 16, June, 2000 by Oman, et al.

13

astronauts frequently experience a sudden change in direction of the perceived vertical of the module,

called a “visual reorientation illusion” (VRI) (Oman et al., 1986). In this compelling illusion, the

surrounding walls, “floor,” and “ceiling” seem to exchange subjective identities. VRIs are much like

figure reversal illusions (e.g., the Necker cube), except it is one’s own subjective orientation that changes.

The sudden change in perceived orientation (unaccompanied by normal vestibular motion cues) can

trigger space sickness, cause reaching errors, and make it difficult to recognize important landmarks.

VRIs occur because the surface below one’s feet is always a floor on Earth, and because other people

(and some objects) usually appear in a visually upright orientation relative to the observer. Unweighting

of gravireceptors and headward fluid shift also contribute to the problem, making some astronauts feel as

if they are continuously upside down (“inversion illusion”) (Gazenko, 1964; Matsnev et al 1983; Oman et

al, 1986; Lackner, 1992).

Individual differences in susceptibility to VRIs have been found between crewmembers on the

Neurolab Shuttle mission to whom scenes of spacecraft interiors were presented at various tilt angles

using a virtual reality display (Oman, et al., 2000). VRIs are experienced on Earth, but usually only occur

about the gravitational upright, such as when one exits an unfamiliar building and finds he or she is facing

an unexpected direction. These illusions have, however, been induced experimentally about the

gravitational horizontal in 1-G through the use of real and virtual tumbling rooms (Howard and

Childerson, 1994; Oman and Skwersky, 1997).

1.2 Problems Related to Space Station Structures

US astronauts living on the Russian MIR space station have reported spatial orientation

difficulties stemming from the complex architecture of the structure. MIR is comprised of 6 different

modules connected at right angles from one another at a central hub called “the node” (see Figure 1.1).

An astronaut floating inside the node is surrounded by six different portals, each leading to one of the

modules. A brown “floor,” a blue “ceiling” and tan walls mark the rectangular interior of, and define a

visual vertical for each module. One of the main problems with this configuration is that the different

14

modules’ visual axes are not co-aligned. Two of the modules’ visual axes are actually rotated 180 degrees

from one another: For the sake of analogy, imagine walking from a normal room in your house into one

with all the furniture bolted upside down to the ceiling! This makes it hard for astronauts to mentally

visualize other parts of the station, despite claiming to know the entire physical arrangement. Without the

ability to visualize effectively, crewmembers develop route knowledge via declarative rules that link

important landmarks, much as we do on Earth (Anderson, 1982). With practice, the procedures associated

with these rules eventually become nearly automatic. For example, one crewmember recalled: “I learned

that to go into Priroda, I needed to leave the base block upright, go through the hatch to my left, and then

immediately roll upside down so that Priroda would be right side up.”

Navigating is especially difficult when one must pass through the node. The International Space

Station (ISS) will have up to six nodes with up to six modules connected to each of them in the same

orthogonal manner as the one on MIR. Learning and remembering spatial relationships both between

modules and between nodes in such a complex arrangement will probably be quite difficult. Recently

implemented NASA human factors standards (e.g., 3000/8.4.3), however, require only that internal visual

verticals be consistent within a module. Fortunately, the SM, FGB, USLab, JEM, and COF all have

parallel axes and are connect at a common node module (see Figure 1.2). Although travelling through

arrangements like this will probably not be as difficult as on MIR, US nodes and modules have a square

interior cross section and similarly colored equipment and stowage drawers on all 4 surrounding surfaces.

This increases visual directional ambiguity and fails to alleviate susceptibility to the previously described

disorienting illusions. Meanwhile, the increased number of nodes and modules in the ISS structure adds

complexity to crewmembers’ spatial orientation tasks.

1.3 Importance of Spatial Memory in Emergency Situations

Maintaining spatial memory is important especially in emergency situations when crew have to

make spatial judgments in darkness, or with smoke-obscured cabins. The majority of Shuttle, MIR, and

Skylab crewmembers claim to depend heavily on visual cues for orientation and consider these cues very

15

important when confusion occurs. Spatial orientation difficulties encountered in the node on MIR,

especially by Shuttle visitors, prompted Russian cosmonauts to place red arrows made of Velcro on the

walls pointing toward the Shuttle adapter hatch, creating their own global directional markings. A

location coding system has been recommended by long-duration crewmembers and used in FGB and

Node 1 modules on ISS (Novak, personal communication). Coding systems in use now, however, are not

spatially consistent between modules. These visual aids are helpful in normal working conditions, but

become virtually useless when visibility is compromised.

What happens when crewmembers are unable to see helpful visual cues? A fire on MIR in 1997,

filled the modules with smoke and reduced visibility to dangerously low levels (Burroughs, 1998).

Although crewmembers did not have trouble finding escape routes, the experience alerted NASA to the

potential hazard that might be posed by spatial orientation difficulties during operational crises aboard

space stations.

16

Figure 1.1. MIR Space Station Schematic

17

Figure 1.2. International Space Station Schematic2

2

ISS assembly schedule and planned configuration are subject to change.

18

Chapter 2: BACKGROUND

The following sections provide a brief outline of previous research regarding spatial memory on

Earth and mention related navigation research. Previous visual orientation and spatial memory

countermeasures for disorientation and motion sickness problems experienced by astronauts are also

discussed.3

2.1 Spatial Memory and Navigation in 1-G

On Earth, humans are able to keep track of their orientation and location in environments by a

process of spatial updating (Pick & Reiser, 1982). Proprioceptive cues allow humans to perform this

process reliably and without difficulty when actual physical movement is allowed (Ivanenko, Grasso,

Israel, & Berthoz, 1997; Loomis, Da Silva, Fujita, & Fukusima, 1992; Loomis, Klatzky, Golledge,

Ciccinelli, Pellegrino, & Fry, 1993; Mittelstaedt & Glasauer, 1991; Rieser, 1989; and Rieser, Guth, & Hill

1986). By contrast, spatial updating is considerably less reliable and error-prone when imagined

movement relative to an environment is required (Klatzky, Loomis, Beall, Chance, & Golledge, 1998;

Farrell & Robertson, 1998). The frame of reference used during spatial updating needs to be considered as

well. One can imagine oneself moving relative to the environment (viewer-based), or vice versa (objectbased). Most studies have shown that a viewer-based approach is advantageous for imagined spatial

updating of small-scale environments involving rotations (Wraga, Creem, & Proffitt, 1999; Simons &

Wang, 1998).

Rather than studying the process of learning, the majority of studies on imagined spatial updating

have considered the situation in which novel configurations are encountered, or once asymptotic

performance has been reached. When people encounter a novel environment, they first learn to identify

salient landmarks, and with experience, to associate them with specific actions between connecting routes

(Siegel & White, 1975). The sequence of landmarks and actions is eventually learned as route knowledge,

3

Several sections of this chapter were adapted from a research proposal submitted to the National Space Biomedical

Research Institute 16, June, 2000 by Oman, et al.

19

which at first is based on declarative rules (Anderson, 1982) such as “Turn right at the church,” but

becomes automatic with practice. With more experience, people develop survey (configurational)

knowledge of an environment, which is characterized by an ability to take shortcuts or to describe an

environment as it appears from different viewpoints. The idea that landmark, route, and survey

knowledge are developed in stages is widely accepted (McDonald and Pellegrino, 1993), but many

believe they develop concurrently rather than sequentially. Route and survey knowledge of an

environment can be acquired through direct experience (“primary knowledge”) or learned by studying

maps (“secondary knowledge”). Although both techniques are often used, the former is detailed and

nearly automatic, while the latter is thought to involve mental rotation of a cognitive map, which makes it

harder to retrieve. Survey knowledge can involve processes of mental imagery and mental rotation (e.g.,

Reiser, 1989) that may activate the same brain structures as in direct visual perception (e.g., Kosslyn et

al., 1993). Mental imagery can also affect the subjective sense of orientation relative to gravity via topdown processing (Mast, Kosslyn, & Berthoz, 1999). Spatial information has also been shown, however,

to be processed and organized according to hierarchies and categories: Sadalla et al. (1980) showed that

humans employ spatial “reference points” as organizing loci for adjacent places in an environment.

Franklin and Tversky (1990) have argued that people keep track of object arrangements according to a

“spatial framework” in which body axes are used to establish referent directions for categorizing object

locations, and that they transform the model appropriately when imagining the environment from new

points of view. While imagining relative body orientations, subjects who learned relative object locations

via narrative descriptions had better spatial memory for objects aligned with asymmetric (e.g.,

front/behind) body axes than for those aligned with symmetric (left/right) body axes (Franklin and

Tversky, 1990; Bryant and Tversky, 1999; Bryant et al., 1992). Bryant et al. (2000) conducted a similar

study in which subjects learned the spatial relationships among objects located to all six sides of their

bodies via direct observation rather than narratives. They found evidence that subjects employ the spatial

framework model when locating objects from memory, but when locating objects from observation a

20

“physical transformation” model is applicable which predicts longer retrieval times for longer distances

from front. They noted that the mental transformations required to imagine an environment from a new

perspective in daily life usually only involve rotations about a single (gravitational) axis.

2.2 Previous Visual Orientation and Spatial Memory Training

2.2.1 Virtual Environment Training for 1-G Applications

Spatial orientation training on Earth is preferably accomplished via direct observation of the

environment. Virtual environments (VEs), however, have emerged as potential tools for teaching spatial

tasks (e.g., Regian, Shebilske, & Monk, 1992), especially when the actual environment is inaccessible

(e.g., space station). Does spatial learning in a VE transfer to the actual environment? Wilson et al. (1997)

showed that subjects were able to acquire spatial information of a real multistory building by exploring a

to-scale desktop computer simulation of the same building. Experience in an equivalent virtual setting has

been found to produce better configurational knowledge of the actual environment than does a descriptive

narrative or map study (Koh, 1997; Witmer et al., 1996). Bliss et al. (1995) showed that civilian

firefighters could follow a route through a building to perform a rescue and exit via a different route after

training in a VE model of that building.

Relevant to the concerns of this thesis, preliminary results (Witmer et al., 1996) suggest that VE

training can also enhance the performance of shipboard firefighters under low visibility conditions.

2.2.2 Spatial Orientation Countermeasures for 0-G Applications

The need for preflight visual orientation practice for astronauts was realized after Apollo and

Sklylab. Countermeasures for spatial orientation difficulties in microgravity currently include experiential

training in real and virtual mockups and parabolic flight. Crews routinely rehearse Extra Vehicular

Activity (EVA) in neutral buoyancy facilities but have recently (since 1993) added virtual reality

techniques. The SAFER training program in the Virtual Reality Development Center at Johnson Space

Center provides Shuttle and MIR crewmembers with mission-specific experience in highly detailed

virtual mockups, including a view of the earth. The latter cannot be simulated in water tanks.

21

Crewmembers say the practice they get visually orienting to the virtual mockups of Shuttle Payload Bay

or the MIR Station is extremely valuable (J. Hoffman, personal communication to C. Oman). This

facility, however, has not yet been used for Intra Vehicular Activity (IVA) training.

Parker, Reschke, and Harm were the first to develop formal preflight orientation training using

the DOME, a simulator that projected visual scenes on a quarter of the interior surface of a 12-ft. sphere.

The DOME was part of a Preflight Adaptation Training (PAT) research effort whose main goal was to

mitigate visuo-vestibular conflicts and to develop a laptop computer-based method for reporting

subjective self-orientation. A group of astronauts also used the DOME to practice moving about a

Spacelab interior while in gravitationally unfamiliar simulated body orientations. The effectiveness of this

training on orientation performance was not formally evaluated. Parker and Harm (1993) conducted a

retrospective study that suggests that PAT-trained astronauts may have had reduced incidence of space

sickness.

2.3 Space Station Node Experiment 1

How well can humans imagine and orient in three dimensions in situations where they are free to

turn completely upside down? Our first series of experiments (Oman et al., 2000; Shebilske et al., 2000)

studied how quickly and well subjects inside a 6 walled node chamber or a virtual equivalent could learn

to predict the relative direction of a target object while imagining a different roll orientation (including

upside down), and/or viewing direction. This involved training subjects to locate target objects from

imagined body orientations relative to a previously seen environment. Subjects were able to achieve

relatively high accuracy within 20 exposures from a given viewpoint, regardless of roll orientation. They

learned to orient from a second viewpoint with equal or greater ease. Subjects were believed to have used

a combination of learned memorization strategies and practice with visual imagery from the first

viewpoint, and transferred that knowledge to the second. Task performance measures correlated

significantly with scores on conventional paper-and-pencil tests of field independence and 2/3D figure

rotation ability. Despite limitations in field of view, resolution, tracking delays and other factors, head-

22

mounted virtual reality displays were shown to be as effective as a real environment for this type of

spatial memory training. Changing the physical orientation of the subjects with respect to gravity had

only minor effects on performance in either the real or the virtual environment. However, we recognized

several significant limitations of this first investigation: 1) Because of time constraints, subjects were

tested facing only two of the six walls of the chamber. It remained to be demonstrated that subjects could

maintain their orientation and spatial memory when facing any of the six walls. 2) Subjects were given

no specific advice as to how to remember the location of the target objects. 3) Subjects were not tested in

a novel second environment, to see whether they had “learned how to learn”. 4) Disoriented astronauts

arguably have to infer their orientation based on the relative direction of remembered targets, whereas in

these experiments, our subjects were required to do the reverse: they were told their orientation, and then

had to predict the direction of remembered targets.

2.4 Space Station Node Experiment 2: The Present Experiment

For astronauts entering a 6-sided space station node, spatial orientation tasks are “forward” in

nature – that is, their orientation must be quickly inferred from the visual surround. We wanted to

demonstrate the effectiveness of virtual reality as a display medium for teaching generic strategies for

memorizing and visualizing 3D spatial arrangements in any possible imagined body orientation. With

regard to this goal, the following questions are of interest: Can subjects learn to locate target pictures

while facing any of the six node surfaces? Does learning in one environment accelerate learning in a

second environment (i.e. does one “learn how to learn”)? How long is spatial memory retained? Does

strategy training help? Do certain memorization strategies correlate significantly with paper-and-pencil

test scores and/or task performance? Does 2&3D mental rotation ability correlate significantly with task

performance? Is spatial memory better for particular target locations relative to imagined body orientation

than others? If this experiment is successful, it could lead directly to a cost-effective countermeasure for

in-flight spatial disorientationin the form of preflight spatial orientation training with a virtual reality

display. By allowing astronauts to practice effective spatial memory strategies in multiple virtual

23

environments, both generic and/or mission-specific, they could develop a generalizable approach to 3D

spatial orientation in microgravity.

The objective of the current experiment is to study whether humans can learn to correctly identify

target directions, regardless of relative body orientation, while facing any of the six surfaces in a virtual

cubic chamber. Subjects were trained in a 3D array of objects on a forward task in which they must infer

their relative orientation and then quickly locate a target object relative to their body. The task was then

repeated with a different array of objects to see if experience in the first array helped subjects learn faster

in the second. Subjects returned 1, 7, and 30 days afterward to see how long configurational knowledge

and spatial memory task ability was retained. Performance of one subject group who received computerbased strategy training was compared with that of a control group who were free to use whatever

strategies they wanted to perform the task, and with the results of conventional paper-and-pencil test

assessments of visual dependence and 2/3D figure mental rotation ability.

24

Chapter 3: METHOD

3.1 Subjects

Twenty-seven students (24 MIT engineering students, 2 Harvard law students, and 1 spouse of an

engineering student: ages 18-47) were recruited who had no history of visual, vestibular, or auditory

disease. Subjects were paid $10 per hour for participation, and those for whom participation ran over the

hour were compensated in a pro-rated manner. Data for three subjects were discarded because they did

not finish all three days of testing: One did not finish because she got a headache and felt slightly

disoriented after the training day; two simply did not return for testing. The protocol was reviewed and

approved by the MIT Committee on the Use of Humans as Experimental Subjects (COUHES).

3.2 Materials and Apparatus

3.2.1 Virtual Environment Generator

The experiment was conducted using a Virtual Environment Generator (VEG) system comprised

of three subsystems: a 3D graphics computer, a head-mounted display (HMD), and a head tracker.

Stereoscopic visual scenes were rendered by a graphics-accelerator-equipped workstation (two

Pentium II 400MHz processors, two Intergraph GLZ-13 graphics accelerator cards, Gateway PC) using

Python/OpenGL/VRUT software (see Appendix A for program code used).

The HMD was a color-stereo Kaiser Proview-80 consisting of an eyepiece with small LCD

displays, one for each eye, with an adjustable interpupillary distance, nominally 6 cm (see Figure 3.1).

The workstation rendered the graphics images in RGB mode at the 640 (horizontal) by 480 (vertical)

resolution of the HMD. This RGB signal was sent to an electrical box where it was converted to NTSC

format before being sent to each eye of the HMD. The field of view of each eye was 65° (horizontal) X

50° (vertical) with 100% binocular overlap. Each display was refreshed in full color at 60 Hz in fieldsequential mode (60 Hz for each of red, green, and blue fields). The average graphics update rate for each

eye was approximately 30 Hz, but the update rate varied between 29 Hz and 31 Hz depending on the

complexity of the scene. The angular resolution of the Kaiser HMD was about 6 arc minutes.

25

Figure 3.1. Head-Mounted Display system

The third subsystem was an acoustical-intertial head tracking system (IS-600 Mark 2, Intersense,

Inc., Burlington, MA) and is shown in Figure 3.2. The tracker measured head orientation with a

miniature, solid-state inertial measurement unit (IMU or InertiaCube) that senses angular rate of rotation

and linear acceleration along three perpendicular axes. (The linear position tracking capability of the IS600 Mark 2 was not needed or used in this experiment.) The angular rates were integrated to obtain the

orientation (yaw, pitch, and roll) of the IMU with an angular accuracy of 0.25º RMS for pitch and roll and

0.5o RMS for yaw. The IMU had an angular resolution about all axes of 0.10º RMS. The IMU was

mounted on the top of HMD while continually communicating the 3-degree of freedom orientation of the

HMD to the workstation over an RS-232 serial line at up to 115,200 baud. This information was used to

update the user’s binocular imagery to coincide with the direction in which he or she was facing in the

virtual environment. The tracker software incorporated a feature that predicted angular motion 10 ms into

the future in order to compensate for graphics rendering delays and to minimize simulator lag.

26

Figure 3.2. Intersense IS600-Mark 2 Tracking System

3.2.2 Virtual Cubic Chamber and Object Arrays

The environment was a virtual cubic chamber that had simulated dimensions of 1.22 m3 (or 4 ft.3)

with a black interior. Subjects sat erect at all times. All simulated orientations inside the virtual chamber

were such that the subject’s line of sight was centered with the surface ahead and 10 cm away from the

surface behind. During successive portions of the training and experiments, subjects saw three object

arrays as shown in Figure 3.3: (1) Practice array, (2) Array A, and (3) Array B. Each “object” consisted of

four identical pictures of a familiar animal (except for those in the practice array, as shown in Figure 3.3)

rotated by multiples of 90 degrees and symmetrically clustered so the aggregate could be easily

recognized from any orientation. The symmetry of the objects prevented any one object from providing

pointer cues to the other five. The spatial relationships between objects were constant. The walls and

objects were rotated together by computer; subjects were asked to interpret this as a self-orientation

change.

27

Practice Array

Array A

Array B

Behind:

Figure 3.3a. Wide-angle view of the interiors of the three object arrays, as seen from the initial, or

“baseline,” simulated orientation. Arrays were named based on the fact that one contained all animals

(A) and the other included a butterfly (B) with all animals otherwise. Animals in Array A included a frog

(above), a bluebird (below), a snake (left), a deer (right), an elephant (ahead), and a rooster (behind).

Animals in Array B included a turtle (above), a lion (below), a parrot (left), a trout (right), a butterfly

(ahead), and a giraffe (behind). The practice array contained pictures of a star (above), a pound sign

(below), hearts (left), diamonds (right), spades (ahead), and clubs (behind).

Figure 3.3b. Subject Wearing HMD and Performing Experimental Task

28

3.3 Procedure

3.3.1 Experiment Timeline

Before the experiment session, subjects completed a medical history questionnaire (see Appendix

B), three different paper-and-pencil tests related to visual field dependence and mental rotation ability

(Sect 3.3.3 and Appendix C), and on the basis of their performance on these tests, assigned to the

“strategy training” or “control training” group, as detailed in Sect 3.3.4 below. At the start of the first test

session, subjects were trained using a computer-aided procedure, (Sect 3.3.4 and Appendix D). At the end

of instructions, both subject groups were allowed 5 trials in the practice array to get used to the procedure.

Each subject returned 1, 7, and 30 days later for skill retention testing, described in Section 3.3.5,

consisting of a test of their memory of the configurations of the test environments, and how well they

could still accomplish the spatial task. At the end of each session, subjects were interviewed, as described

in Sect. 3.3.6. Figure 3.4 shows a graphical overview of subject activities with cross-references to

relevant sections.

29

Figure 3.4. Experiment Timeline

30

3.3.2 Spatial Learning Trials

The basic experimental task required the subjects to memorize the location of objects around

them. The “strategy training” subjects (Sect. 3.3.4) were given suggestions on how to learn to do this,

while the “control training” group was not. In each trial, they were shown the chamber in a different

orientation with only two objects (ahead and below their body) in view. By recognizing the objects, they

had to infer their orientation, and then predict the direction to a third specific target relative to their body

from this inferred orientation as quickly as possible while in darkness. Response time (RT) and indicated

target direction were measured during this phase of each trial using a 4-button keyboard. (Targets were

never presented ahead or below the subject). Next, to allow the subject to learn the environment and

recognize indication errors over successive trials, the subject was shown all objects as they would appear

from the tested orientation. The subject was instructed to look around, find the correct location of the

target, and then use the brief time remaining before the next trial to study/memorize the configuration of

the object array.

The successive views seen by the subject during each trial are shown schematically in Figure 3.5.

First, a picture of the target object was shown. Next, the orientation to be inferred was specified by

showing two surfaces ahead and below. Subjects could indicate relative target direction in body axes as

soon as these surfaces appeared using “above”, “behind”, “left”, or “right” buttons on the keyboard, but

they had up to seven more seconds after they disappeared if needed. On the first trial, the subject was

completely naïve but, in subsequent trials, usually learned the relative location of the objects to one

another via a trial-by-trial learning process. Pilot experiments showed that the task in any possible

orientation was too difficult to do without prior exposure involving simpler transformations in the object

arrays. Therefore, we had subjects do 12 “training” trials, which were meant solely to allow subjects time

to learn at least a few of the target relationships before their ability to learn was tested in any orientation.

The first 4 trials were in a baseline configuration without mental rotations; i.e. the same pictures were

presented ahead and below. For the next eight trials, the subject faced a second surface in various roll

31

orientations. Finally, the subject performed 24 trials facing all six surfaces in various roll orientations.

Presentation order was pseudorandomized but balanced by surface and relative target direction (see

Appendix E), since Bryant and Tversky’s experiments suggested the latter was important (as reviewed in

Sect. 2.1).

When we oriented the subject, we revealed two picture locations instead of just one as in our first

experiment.4 This increased the probability of correctly predicting target direction from 1/5 to 1/4 chance,

which made our % correct measure slightly more quantized and less powerful statistically than it was in

the previous design. Since we could not reliably determine which combinations of surface and relative

target direction were most difficult, we were concerned about how well sets of 4 trials would be balanced

for perceived difficulty. We averaged data across sets of 8 trials in the present experiment instead of just

4 in order to compensate for these properties.

Following a short break, the subject repeated an identical sequence of 36 trials in a second,

different environment. The order of presentation of the environments was reversed for half the subjects to

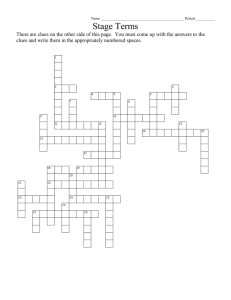

Figure 3.5. Schematic of 3D Spatial Learning Experiment timeline for each trial. Subject was shown

target picture, pictures ahead and below, then during “Memory” phase had 7 more seconds to indicate

relative target direction in body axes. Finally, during “Study” phase, all 6 pictures are shown. Subject

found target and then studied array for remainder of time available.

4

In our first experiment, subjects imagined entering the node through a specified hatch (the picture shown to them)

in a particular body orientation, defined by a clock hand.

32

control for intrinsic differences in “learnability” of the object arrays.

3.3.3 Paper-and-Pencil Spatial Ability Tests

In our earlier experiments (Sect.2.3), a correlation between performance on three conventional

tests of visual field dependence (Group Embedded Figures Test (GEFT) (Witkin et al., 1971), and two

and three dimensional mental rotation ability (Card Rotations and Cube Comparisons Tests; Eckstrom et

al., 1976; Appendix C) was found. In the GEFT, the subject is asked to identify perceptually a target

geometric figure embedded within an irrelevant stimulus content. In the Card Rotation tests, a drawing of

a card cut into an irregular shape is depicted. To its right are eight other drawings of the same card,

sometimes merely rotated and sometimes turned over to its other side. Subjects must indicate for each

drawing whether the card has been rotated (i.e., it is the same as the original) or turned over (i.e., it is

different from the original). In the Cube Comparison test, subjects are presented with two drawings of a

cube. Then, assuming no cube can have two faces that are alike, the subject must indicate whether the two

drawings could possibly be of the same cube or could not be of the same cube. We expected that these

tests might also be predictive of performance in the present experiments, and provide a useful metric for

balancing strategy and control training groups.

3.3.4 Strategy Training and Control Training

Based on experience piloting previous experiments, we hypothesized subjects might perform

better if a) they were instructed to remember the targets from a “baseline” orientation, b) to memorize

opposite pairs of objects, and c) to remember the relative orientation of object-pairs by memorizing at

least one object “triad”. This approach was incorporated into a (Microsoft Powerpoint presentation

manager based) set of computer-based written instructions, shown in detail in Appendix D.1. Half the

subjects used this “strategy training” presentation. The other half used a “control training” presentation

(see Appendix D.2), which did not introduce the “baseline orientation”, nor the logical grouping of

objects using “pairs” and “triads” concepts, but as in the strategy training group instructions, encouraged

the subjects to use mental imagery, and instructed them how to report their responses. The training

procedure typically took 15 minutes to complete. Subjects were assigned to the strategy or control group

33

based on their scores on the Card rotation and Cube comparison tests, so that the two groups were

approximately balanced (see Table 3.1). The strategy group received 5 more orientation demonstrations

than the controls.

Table 1-1 Group Scores on Paper and Pencil tests

Training

Card Rotation

Test

Cube Comparison

GEFT

Median

Range

Median

Range

Median

Range

Strategy

121.5

82.0

24.0

34.0

18.5

16.0

Control

114.5

110.0

25.0

36.0

21.0

13.0

*Note: Some scores were discarded as mentioned in Sect. 3.1.

3.3.5 Retention Tests

Subjects returned 1, 7, and 30 days later (retention days) for retesting. We first wanted to know

how well they could reconstruct the relative orientation of the objects in the array. So during each

“retention” test session, we first tested the accuracy of their configurational knowledge of both previously

learned environments, in the order in which these environments were learned. Subjects were asked to

“pick-and-place” the target objects from a palette onto the surfaces of the virtual chamber to reconstruct

the appearance of that chamber in the baseline orientation. They were free to take as much time as they

needed to place the objects in the order they chose, and then to revise their choices.

Configuration tests were scored using a convention that classified each response in terms of its

“geometric distance” from the correct response (i.e. the size of its error; see Appendix F). Responses that

required geometrically more complex paths from the correct response were considered more erroneous

and given higher (i.e. worse) scores. For example, a perfect reconstruction would be given a score of 0. A

deviation by single rotation was given a better (i.e. lower) score than an inversion or two rotations, and

any single transformation (rotation or inversion) was given a better score than any combination of itself

with another. A double rotation was given a better score than a double inversion. Responses that

contained more complex transformations (e.g., exchange of adjacent surfaces) beyond combinations of

34

simple rotations and inversions were classified as “other”. Any rotation (roll) about the z-axis received a

better score than any yaw (y-axis), and any yaw scored better than any simple pitch (x-axis). Similarly,

simple inversions through the z, y, and x (roll, yaw, and pitch) axes were considered more geometrically

different from the baseline orientation than inversions through axes earlier in that list.

Once their configurational knowledge was tested, their spatial ability for the second array was

then retested using a procedure similar to that employed in the original spatial learning trials. Subjects,

however, were not shown the object configuration between trials. Thus, we showed subjects the correct

configuration of the second array before this test began in order to prevent poor memory of the

configuration from confounding our measurement of retained spatial ability. We did not show subjects the

correct configuration of the first array because we wanted to see how long their configurational

knowledge would last when no feedback or practice was given.

On each retention day, each subject twice completed a block of 24 pseudorandomly ordered trials

(6 sets) identical to those encountered on the training day (i.e. 48 trials during which all six surfaces were

faced twice in each roll orientation). Subjects completed 48 trials instead of just 24 because we thought

spatial ability might continue to improve with extra practice, despite not ever getting to view the full

object array between trials.

3.3.6 Exit Interviews

After the tasks for each day were completed, subjects were asked multiple-part, open-ended

questions regarding the learning strategies they used and the relative difficulty experienced between

environments (training day) and between days (retention days). The investigator interviewed subjects

separately on the training day and on each of the retention days.

Training Day Questions:

(1) “Do you think your ability to do the spatial memory tasks improved in the second

training environment relative to the first?”

35

(2) “What strategy (or strategies) did you use in the first environment? Did you use these

same strategies in the second environment? If not, what was different about the strategies

you used in the second environment?”

(3) “Were you ever able to mentally visualize the pictures around you without the help of

any rules? I.e. Could you ‘see’ the pictures in your ‘mind’s eye’?”

Retention Day Questions:

(1) “What was the relative difficulty of today’s experience compared to that of the previous

day? What was harder/easier?”

(2) “How do you think the time away affected your ability to perform the tasks?”

(3) “Did you use the same strategies as you did on previous days?”

3.4 Experiment Design and Data Analysis

For the spatial learning trials, dependent variables were percent correct (% correct) indications

and response time (RT) during the memory phase of each trial. Independent variables set, environment,

and day were varied within subject, and training (strategy vs. control) was varied between subject (order

was counterbalanced). On the training day, we divided sets into the first (sets 1-3) and second (sets 4-6)

arrays seen.5 To assess effects, measures were collapsed across array presentation order within training

group. For the retention session configuration tests, median score and mean time to completion were

calculated, by array, across presentation order within training group.

The quantized % correct measure was inappropriate for statistical tests requiring normally

distributed data, so we used nonparametric Friedman ANOVA and Kruskal-Wallis tests to assess

differences within and contrasts between groups in % correct performance, respectively. Stronger training

effects might have been observed if we had shown only one picture, but showing only one symmetrical

object does not provide adequate orientation information for the “forward” task we used. Learning trends

36

were analyzed via the Page test (Conover, 1999), which detects increasing or decreasing monotonic trends

for group data across sets. Configuration test scores were compared within and between group via the

aforementioned nonparametric tests.

On the other hand, we used the stronger statistical tests (repeated measures ANOVA, paired and

two independent sample t-tests) to evaluate improvement within and contrasts between group in RT

because those set means were approximately normally distributed.

Statistical analysis was performed using conventional packages (Systat v9.0, SPSS, Inc.;

StatXact-4, Cytel, Inc.).

5

We were mainly interested in performance in the last 24 trials, and therefore, we omitted the 12 training trials from

analyses.

37

Chapter 4: RESULTS

4.1 Evidence of Learning

Figures 4.1 - 4.3 show the mean % correct and RT, respectively, by set and target group, within

array, for both strategy and control groups on the training day. The 12 trials (4 upright and 8 facing a

second surface) before testing in each environment (i.e. before set 1 and between sets 3 and 4) were

essentially training in the new array and were not included in the analyses.

When all targets were taken together, a Friedman test revealed a significant difference in %

correct performance between sets in both the first and second arrays for the control group (first array: χ2 =

9.80, df = 2, exact p < .01; second array: χ2 = 6.78, df = 2, exact p < .05). [Note: The StatXact software

computes many tests exactly rather than by the more usual asymptotic approximation by the chi-squared

distribution. When the indication “exact” is noted below, it refers to this property of the StatXact

calculation.] Although no significant improvement was found in % correct between sets in either array for

the strategy group (p = .05 criterion), the Page test, whose characteristic statistic is denoted “pa(x)”

below, revealed a significant increasing trend across sets in the first array for this group [pa(x) = 1.83, df

= 2, exact p < .05]. A repeated measures ANOVA on RT performance revealed significant main effects of

array and set [F(1,21) = 16.87, p < .01 and F(2,42) = 6.35, p < .01, respectively]. Training, array

presentation order, and interaction effects were not significant.

Most subjects learned to do the task within 36 trials in either test array. The control group showed

learning in % correct in both arrays, while the strategy group showed it in the first array only. Both

groups showed learning in RT in both environments.

4.2 Transfer of Learning from the First Array to the Second

As we chose to define it, transfer of learning, or “learning how to learn,” required that learning

occur faster (i.e. faster rise or fall time) in the second environment, but not necessarily that a higher level

of asymptotic performance be achieved (since one array might be intrinsically more difficult to learn than

the other, despite our intention to make them similar). One indication of transfer of learning, then, is that

38

subjects perform better, by set, in the earlier sets when training in the second environment than they did in

the first. Table 4.1 indicates with asterisks the sets in which performance significantly improved from the

first object array to the second array.

We contrasted performance between array, by first (sets 1 vs. 4), second (set 2 vs. 5) and third

(set 3 vs. 6) sets. When all targets were taken together, paired t-tests revealed that RT performance was

better in the second array in the first and second sets for the strategy group [first RT t(11) = 3.1, p < .01

and second RT t(11) = 2.7, p < .05], but only in the second set for the control group [t(11) = 3.67, p <

.01]. Significant differences in % correct performance between arrays could not be demonstrated in any

set for either training group when all targets were taken together.

When relative-target-direction groups were taken separately (see section 4.8), a Friedman test

revealed that % correct performance for above/behind targets in the second set of the second array was

better than in the first for the strategy group (χ2 = 6.0, df = 1, exact p = .0313). Paired t-tests revealed that

RT for above/behind targets in the first and second sets was significantly shorter in the second array than

in the first for the strategy group [first set: RT t(11) = 2.9, p < .05 and second set: RT t(11) = 3.0, p < .05],

but this effect appeared only in the second set for the control group [t(11) = 2.3, p < .05]. No significant

transfer in performance for left/right targets was seen in either measure for either training group.

We also tested for improving trends that continued across the change in array, suggesting that

learning transferred from the first array to the second. When all targets were taken together, Page tests

across all six sets within training groups revealed significant improving trends in both % correct and RT

performance for both training groups [control % correct: pa(x) = 2.6, exact p < .01 and RT: pa(x) = -4.6,

exact p < .0001; strategy % correct: pa(x) = 2.3, exact p < .01 and RT: pa(x) = -3.5, exact p < .001].

When relative-target-direction groups and array-presentation-order subgroups were taken

separately, interesting differences emerged. Table 4.2 and 4.3 summarize set means and significance of

trends found on the training day by subgroup. Shaded regions correspond to sets across which significant

trends were found. Page tests revealed that three out of four groups had significant trends of

39

improvement in both % correct and RT for above/behind targets – no significant trends in either measure

for above/behind targets were seen for the control group who saw array order 2 (Array A first). Only the

strategy group who saw Array A first had significant trends of improvement that continued across the

change in array in both % correct and RT for left/right targets.

In summary, both training groups showed significant transfer in RT performance for

above/behind targets, but not for left/right targets. The transfer, however, occurred in earlier sets and in

more sets for the strategy group than for the control. Corresponding transfer in % correct was found for

above/behind targets in the second set for the strategy group. The strategy-trained subjects who saw Array

A first had the most compelling transfer: they were the only ones to show significant learning trends in

both % correct and RT for left/right targets that continued across the change in environment. This is a

sign of the effects that training was intended to produce.

Table 4-1 Significant Transfer in Mean RT and % Correct by Training, Target Group, and Set

Target Group

Measure

Training

Group

Above/Behind

All

First

Second

Third

First

Second

Third

Set

Set

Set

Set

Set

Set

Strategy

*

*

*

*

Mean RT

Control

*

Strategy

*

*

% Correct

Control

* : Performance was significantly (p < .05) better in the second array than in the first.

40

Table 4-2 Trends in Performance by Training, Array Presentation Order, and Set on the Training Day for

Above/Behind Targets

Percent Correct

Mean Response Time (sec)

Training Array

Order

First Array

Second Array

First Array

Second Array

1

2

3

4

5

6

1

2

3

4

5

6

54%

64%

83%

71%

76%

88%

5.88

5.84

5.11

4.79

4.41

4.11

3.51

3.84

3.10

2.86

2.61

3.18

2.29

2.40

1

pa(x) = 2.4

Exact p < .005

Control

65%

70%

87%

65%

2

pa(x) = -3.4

Exact p < .0005

73%

77%

4.63

4.12

3.53

--

-79%

83%

91%

1

93%

100%

98%

4.80

4.07

Pa(x) = 2.4

Exact p < .005

Strategy

80%

83%

100%

2

95%

3.88

pa(x) = -2.1

Exact p < .05

100%

100%

3.76

4.66

Pa(x) = 1.9

Exact p < .005

3.91

2.43

pa(x) = -2.2

Exact p < .05

Table 4-3 Trends in Performance Means by Training, Array Presentation Order, and Set on the Training

Day for Left/Right Targets

Training Array

Percent Correct

Mean Reaction Time (sec)

Order

First Environment

Second

First

Second

1

2

3

4

5

6

1

2

3

4

5

6

39%

57%

71%

64%

50%

64%

5.65

6.50

5.65

6.43

6.04

5.59

1

pa(x) = 1.7

exact p < .05

--

pa(x) = -1.9

exact p < .05

--

Control

40%

50%

45%

40%

55%

55%

6.33

5.34

2

43%

43%

50%

Strategy

46%

43%

46%

6.89

6.84

6.56

45%

55%

4.32

4.20

6.37

6.67

6.23

6.59

7.15

--

-45%

2

4.49

pa(x) = -3.0

exact p < .001

-1

5.08

55%

pa(x) = 2.2

exact p < .01

75%

85%

7.55

7.12

6.68

pa(x) = -1.8

Exact p < .05

6.95

--

41

4.3 Effect of Strategy Training6

A Kruskal-Wallis test across array presentation order revealed that the strategy group had

significantly better % correct performance for above/behind targets than the control group in the first and

second sets of the second array (set 4 % correct: χ2 = 5.3, exact p < .05; set 5 % correct: χ2 = 9.1, exact p

< .01). A corresponding training effect was found in RT performance in the second set of the second array

(χ2 = 7.0, exact p < .01). The control group had better RT performance for left/right targets than the

strategy group in the last set of both arrays (set 3: χ2 = 4.1, exact p < .05; set 6: χ2 = 4.3, exact p < .05).

As shown in Table 4.4, strategy training helped for above/behind targets in the early sets of both

test environments. Strategy training, however, hindered RT performance for left/right targets in the last

set of both environments.

Table 4-4 Training Effects on Performance by Relative Target Direction, Array, and Set on the Training

Day

Relative-Target Direction

Measure

Array

Above/behind

Left/right

First Set Second Set Third Set First Set Second Set Third Set

C

First

Mean RT

S

Second

C

First

% Correct

Second

S

S

S = Stragety-trained subjects had significantly better performance than control.

C = Control subjects had significantly better performance than strategy-trained.

6

There was 1 degree of freedom for all statistical results reported in this section.

42

% Correct for All Targets on the Training Day

100

First Array

90

Second Array

80

% Correct

70

60

50

40

30

Training Group

20

Control

Strategy

10

0

0

1

2

3

4

SET

5

6

7

Mean RT for All Targets on the Training Day

Mean Response Time (sec)

10

9

8

First Array

Second Array

7

6

5

4

3

Training Group

2

Control

Strategy

1

0

0

1

2

3

4

5

6

7

Set

Figure 4.1. Mean Performance for All Targets, by Set, within Training Group on the Training Day Upper

panel: % correct, lower panel: RT; Error bars are +/- 1 SEM.

43

% Correct for Left/Right Targets on the Training Day

100

90

Second Array

First Array

80

% Correct

70

60

50

40

30

Training Group

20

Control

Strategy

10

0

0

1

2

3

4

SET

5

6

7

Mean RT for Left/Right Targets on the Training Day

Mean Response Time (sec)

10

9

First Array

Second Array

8

7

6

5

4

3

Training Group

2

Control

Strategy

1

0

0

1

2

3

4

5

6

7

Set

Figure 4.2. Mean Performance for Left/Right Targets, by Set, within Training Group on the

Training Day Upper panel: % correct, lower panel: RT; Error bars are +/- 1 SEM.

44

% Correct for Above/Behind Targets on the Training Day

First Array

Second Array

100

90

80

% Correct

70

60

50

40

30

Training Group

20

Control

Strategy

10

0

0

1

2

3

4

SET

5

6

7

Mean RT for Above/Behind Targets on the Training Day

Mean Response Time (sec)

10

9

8

First Array

Second Array

7

6

5

4

3

Training Group

2

Control

Strategy

1

0

0

1

2

3

4

5

6

7

Set

Figure 4.3. Mean Performance for Above/Behind Targets, by Set, within Training Group on the Training

Day Upper panel: % correct, lower panel: RT; Error bars are +/- 1 SEM.

45

4.4 Effect of Using Mental Imagery7

Whether or not subjects used mental imagery apparently influenced the subjects performance,

and their response to training: In response to Question 3 of the Exit Interview, four subjects from each

training group (n = 8) reported using mental imagery in at least one of the environments on the training

day. We referred to this group as the imagery group. The remaining subjects (n = 16) claimed to use

mental rules instead or that they found it too hard to use mental imagery. We called this group the nonimagery group. Figure 4.4 shows mean % correct for left/right targets, by set, within imagery group

across training groups. Figure 4.5 shows mean % correct for above/behind targets, by set, within training

group, among non-imagery subjects. Table 4.5 summarizes training effects found among non-imagery

subjects.

Kruskal-Wallis tests revealed that the imagery group had significantly higher scores on all three

paper-and-pencil tests than the non-imagery [Card rotations: χ2 = 5.7, p(Monte Carlo estimate) < .05;

Cube comparisons: χ2 = 8.2, p(Monte Carlo estimate) < .005; GEFT: χ2 = 4.0, p(Monte Carlo estimate) <

.05]. When relative-target-direction groups were taken separately, the imagery group had significantly

higher % correct performance for left/right targets than the non-imagery group in the last set of the first

array and in all three sets of the second array (set 3: χ2 = 4.0, exact p < .05; set 4: χ2 = 4.5, exact p < .05;

set 5: χ2 = 5.6, exact p < .05; set 6: χ2 = 8.7, exact p < .005). No significant differences in performance for

above/behind targets between imagery groups were found. When all targets were taken together, the

imagery group had significantly better % correct performance in all three sets of the second array [set 4:

χ2 = 5.2, p(Monte Carlo estimate) < .05; set 5: χ2 = 5.4, p(Monte Carlo estimate) < .05; set 5: χ2 = 9.0,

p(Monte Carlo estimate) < .005].

7

There was 1 degree of freedom for all statistical results reported in this section.

46

% Correct for Above/Behind Targets for Imagery Groups

First Array

Second Array

100

90

80

% Correct

70

60

50

40

30

Group

20

Non-Imagery

Imagery

10

0

0

1

2

3

4

5

6

7

Set

% Correct for Left/Right Targets for Imagery Groups

First Array

Second Array

100

90

80

% Correct

70

60

50

40

30

Group

20

Non-Imagery

Imagery

10

0

0

1

2

3

4

5

6

7

Set

Figure 4.4. Mean % Correct, by Set, within Imagery Group across Training Group on the Training Day

Upper panel: above/behind targets, lower panel: left/right targets; error bars are +/- 1 SEM.

47

Table 4-5 Effect of Claimed Use of Mental Imagery on % Correct by Set

Target Group

Array

First

1

Left/Right

All

2

Second

3

4

5

6

*

*

*

*

*

*

*

* : The imagery group had significantly [p < .05] better % correct performance than the non-imagery group.

We conducted a post-hoc analysis of the effect of training among imagery groups. Among nonimagery subjects, those who received strategy training had better % correct performance than the control

subjects in the first and second sets of the second array (Kruskal-Wallis, set 4: χ2 = 9.1, exact p < .01; set

5: χ2 = 4.8, exact p < .05). Similarly, when relative-target-direction groups were taken separately, those

who received strategy training had better % correct performance for above/behind targets than the control

subjects in the first and second sets of both arrays (Kruskal-Wallis, set 1: χ2 = 4.5, exact p < .05; set 2: χ2

= 4.5, exact p < .05; set 4: χ2 = 5.1, exact p < .05; and set 5: χ2 = 8.4, exact p < .01). We found no effect

of training among non-imagery subjects for left/right targets.

48

% Correct for Above/Behind Targets for Non-Imagery Subjects

First Array

Second Array

100

90

80

% Correct

70

60

50

40

30

Training Group

20

Control

Strategy

10

0

0

1

2

3

4

5

6

7

Set

% Correct for Left/Right Targets for Non-Imagery Subjects

100

90

80

First Array

Second Array

% Correct

70

60

50

40

30

Training

20

Control

Strategy

10

0

0

1

2

3

4

5

6

7

Set

Figure 4.5. Mean % Correct for Non-imagery subjects, by Set, within Training Group Upper panel:

above/behind targets, lower panel: left/right targets; error bars are +/- 1 SEM.

49

Table 4-6 Effect of Strategy Training on % Correct by Set among the Non-imagery Group

Target Group

Array

Above/Behind

Set 1

First

Set 2

*

*

All

Set 3

Set 4

Second

Set 5

*

*

*

*

Set 6

* = The strategy group had significantly [p < .05] better % correct performance than the control group

among the non-imagery subjects.

4.5 Retention Testing

4.5.1 Spatial Ability Test

Figures 4.6 – 4.8 show % correct and RT performance for the second array, by set, over days

within training groups. Friedman ANOVA tests by day and set yielded a significant difference in %

correct between days in set 3 and 6 for the control group (set 3: χ2 = 7.6, df = 3, exact p < .05 and set 6: χ2

= 8.4, df = 2, exact p < .05). Similarly, a significant difference in % correct was revealed between days in

set 1 and 6 for the strategy group (set 1: χ2 = 9.7, df = 3, exact p < .05 and set 6: χ2 = 8.9, df = 2, exact p <

.01). Results for RT performance were analyzed via repeated measures ANOVA by training, array

presentation order, retention day, and set. Significant main effects of set and array presentation order were

found [set: F(5,105) = 8.38, p < .001; array presentation order: F(1,21) = 7.20, p < .05]. The overall

improvement in RT performance for above/behind targets between day 1 and 30 was significant [F(3,21)

= 3.09, p < .05].

Page tests across all sets (7-24) of the retention days within training group revealed that both

groups had a significant trend of improvement in % correct across all eighteen sets of retention testing

[control group: pa(x) = 3.3, p(Monte-Carlo estimate) < .0001 and strategy group: pa(x) = 3.3, p(MonteCarlo estimate) < .0005]. Similarly, the strategy group had a corresponding significant trend of