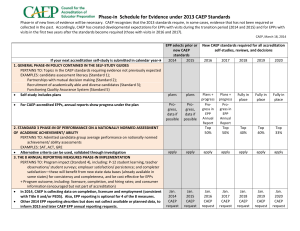

Guide to CAEP Accreditation: February 2014 The Continuous Improvement

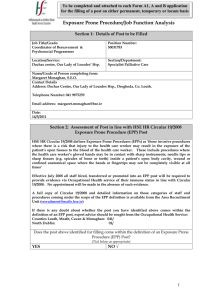

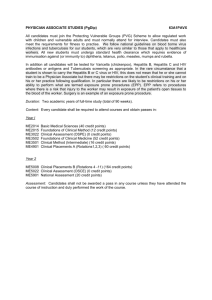
