18.303 Problem Set 2 Solutions

Problem 1: (5+5+5+5+5+(3+3+3) points)


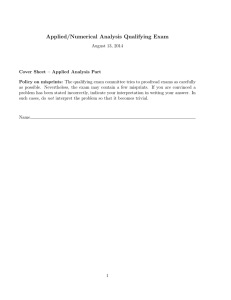

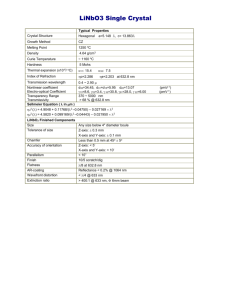

(a) As in class, let $u'([m+0.5]\Delta x) \approx u'_{m+0.5} = \frac{u_{m+1} - u_m}{\Delta x}$. Define $c_{m+0.5} = c([m+0.5]\Delta x)$. Now we want to take the derivative of $c_{m+0.5}\, u'_{m+0.5}$ in order to approximate $\hat{A}u$ at $m$ by a center difference:

$$\hat{A}u \Big|_{m\Delta x} \approx \frac{c_{m+0.5}\,\frac{u_{m+1}-u_m}{\Delta x} - c_{m-0.5}\,\frac{u_m-u_{m-1}}{\Delta x}}{\Delta x}.$$

There are, of course, other ways to solve this problem that are also second-order accurate.

(b) As in class, we write

$$u_{m\pm 1} = u \pm u'\,\Delta x + \frac{u''}{2}\Delta x^2 \pm \frac{u'''}{6}\Delta x^3 + \frac{u''''}{24}\Delta x^4 + \cdots,$$

where $u$ and its derivatives are evaluated at $m\Delta x$. Similarly, write

$$c_{m\pm 0.5} = c \pm \frac{c'}{2}\Delta x + \frac{c''}{8}\Delta x^2 + \cdots,$$

expanding $c$ around $m\Delta x$. As suggested, let's plug in the terms of $c$ one by one.

• The first term, $c = c(m\Delta x)$, is a constant, so we can pull it out of the expression above. What remains is simply the center-difference approximation for $u''$ from class, which we already showed is second-order accurate. So, this term gives us $c\,u'' + O(\Delta x^2)$. [The notation $O(\Delta x^2)$ denotes a function that is asymptotically proportional to $\Delta x^2$ (or smaller) as $\Delta x \to 0$.]

• The second term, $\pm\frac{c'}{2}\Delta x$, when we plug it in above gives

$$\frac{c'\,\Delta x}{2}\cdot\frac{\frac{u_{m+1}-u_m}{\Delta x} + \frac{u_m-u_{m-1}}{\Delta x}}{\Delta x} = c'\,\frac{u_{m+1}-u_{m-1}}{2\Delta x} = c'u' + O(\Delta x^2),$$

using the fact from class that $\frac{u_{m+1}-u_{m-1}}{2\Delta x}$ is a second-order accurate center-difference approximation for $u'(m\Delta x)$.

• The third term, $\frac{c''}{8}\Delta x^2$, is again a constant that can be pulled out, giving

$$\frac{c''}{8}\Delta x^2\cdot\frac{\frac{u_{m+1}-u_m}{\Delta x} - \frac{u_m-u_{m-1}}{\Delta x}}{\Delta x} = \frac{c''}{8}\Delta x^2\left[u'' + O(\Delta x^2)\right] = O(\Delta x^2),$$

where we have again used the result for the center-difference approximation of $u''$ from class, and noted that the product of two $O(\Delta x^2)$ terms is $O(\Delta x^4)$ and is therefore asymptotically negligible as $\Delta x \to 0$.

• Thus, combining the above three terms, we have $c\,u'' + c'u' + O(\Delta x^2) = (cu')' + O(\Delta x^2)$ as desired. (The remaining terms in the Taylor expansion of $c$ are higher-order in $\Delta x$.)
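The second-order accuracy derived in part (b) can be spot-checked numerically. The following is only a minimal sketch, not the posted notebook: the helper name `cdiff`, the particular values of `dx`, and the use of Julia 1.x syntax are assumptions made for illustration, and the test functions $c = e^{3x}$, $u = \sin(x)$ at $x = 1$ are taken to match the convergence test used in this problem. If the error scales as $\Delta x^2$, halving $\Delta x$ should reduce it by a factor close to 4.

```julia
# Center-difference approximation of (c u')' from part (a), evaluated at one point x.
# c and u are passed as functions; dx is the grid spacing. (`cdiff` is a hypothetical name.)
function cdiff(c, u, x, dx)
    flux_right = c(x + dx/2) * (u(x + dx) - u(x)) / dx   # c_{m+0.5} * u'_{m+0.5}
    flux_left  = c(x - dx/2) * (u(x) - u(x - dx)) / dx   # c_{m-0.5} * u'_{m-0.5}
    return (flux_right - flux_left) / dx
end

c(x) = exp(3x)
u(x) = sin(x)
# Exact (c u')' = c' u' + c u'' for these choices of c and u.
exact(x) = 3exp(3x) * cos(x) - exp(3x) * sin(x)

x0 = 1.0
dxs = [0.01, 0.005, 0.0025]          # each step halves dx (assumed values)
errs = [abs(cdiff(c, u, x0, dx) - exact(x0)) for dx in dxs]
ratios = errs[1:end-1] ./ errs[2:end]  # expected to be close to 4 for O(dx^2) error
println(ratios)
```

The spacings are kept moderately large on purpose: for much smaller $\Delta x$, roundoff error starts to dominate and the ratio no longer reflects the truncation error.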
(c) The resulting convergence plot is shown in Figure 1, and demonstrates that the error decreases as $\Delta x^2$ until $\Delta x$ becomes small, at which point roundoff errors take over (and the error actually increases). The IJulia notebook that created this plot is posted on the 18.303 website.

Figure 1: Convergence of center-difference approximation for $(cu')'$, with $c = e^{3x}$ and $u = \sin(x)$, at $x = 1$. The error decreases as $\Delta x^2$ until $\Delta x$ becomes small, at which point roundoff errors take over. (Log-log plot of |error| in derivative versus $\Delta x$, with a $\Delta x^2$ reference line.)

(d) In order to approximate $\hat{A}u$, we did three things: compute $u'$ by a center difference as in class, multiply by $c_{m+0.5}$ at each point $m+0.5$, then compute the derivative by another center difference. The first and last steps are exactly the same center-difference steps as in class, so they correspond as in class to multiplying by $D$ and $-D^T$, respectively, where $D$ is the $(M+1)\times M$ matrix

$$D = \frac{1}{\Delta x}\begin{pmatrix} 1 & & & \\ -1 & 1 & & \\ & -1 & \ddots & \\ & & \ddots & 1 \\ & & & -1 \end{pmatrix}.$$

The middle step, multiplying the $(M+1)$-component vector $u'$ by $c_{m+0.5}$ at each point, is just multiplication by a diagonal $(M+1)\times(M+1)$ matrix

$$C = \begin{pmatrix} c_{0.5} & & & \\ & c_{1.5} & & \\ & & \ddots & \\ & & & c_{M+0.5} \end{pmatrix}.$$

Putting these steps together in sequence, from right to left, means that

$$A = -D^T C D.$$

(e) For any $x$,

$$x^*Ax = -x^*D^TCDx = -(Dx)^*C(Dx) = -y^*Cy = -\sum_{m=1}^{M+1} c_{m-0.5}\,|y_m|^2 \le 0,$$

where $y = Dx$ (using $c(x) > 0 \implies c_{m-0.5} > 0$). We also obtain $x^*Ax = 0$ if and only if $y = Dx = 0$ (since any nonzero component of $y$ would contribute a positive term to the sum), and this is true if and only if $x = 0$ since $D$ is full column-rank as shown in class. Hence $A$ is negative-definite.

(f) In Julia, the `diagm(c)` command will create a diagonal matrix from a vector `c`.
The function

    diff1(M) = [ [1.0 zeros(1,M-1)]; diagm(ones(M-1),1) - eye(M) ]

will allow you to create the $(M+1)\times M$ matrix $D$ from class via `D = diff1(M)` for any given value of $M$. Using these two commands, construct the matrix $A$ from part (d) for $M = 100$ and $L = 1$ and $c(x) = e^{3x}$ via

    L = 1
    M = 100
    D = diff1(M)
    dx = L / (M+1)
    x = dx*0.5:dx:L # sequence of x values from 0.5*dx to <= L in steps of dx
    C = ....something from c(x)...
    A = -D' * C * D / dx^2

You can now get the eigenvalues and eigenvectors by `λ, U = eig(A)`, where `λ` is an array of eigenvalues and `U` is a matrix whose columns are the corresponding eigenvectors (notice that all the λ are $< 0$ since $A$ is negative-definite).

(i) The plot is shown in Figure 2. The eigenfunctions look vaguely "sine-like" (they have the same number of oscillations as $\sin(n\pi x/L)$ for $n = 1, 2, 3, 4$) but are "squeezed" to the left-hand side.

Figure 2: Smallest-$|\lambda|$ eigenfunctions of $\hat{A} = \frac{d}{dx}\left[c(x)\frac{d}{dx}\right]$ for $c(x) = e^{3x}$. (Plot of the first, second, third, and fourth eigenfunctions versus $x$ on $[0, 1]$.)

(ii) We find that the dot product is $\approx 4.3\times 10^{-16}$, which is zero up to roundoff errors (your exact value may differ, but should be of the same order of magnitude).

(iii) In the posted IJulia notebook for the solutions, we show a plot of $|\lambda_{2M} - \lambda_M|$ as a function of $M$ on a log-log scale, and verify that it indeed decreases $\sim 1/M^2$. You can also just look at the numbers instead of plotting, and we find that this difference decreases by a factor of $\approx 3.95$ from $M = 100$ to $M = 200$ and by a factor of $\approx 3.98$ from $M = 200$ to $M = 400$, almost exactly the expected factor of 4. (For fun, in the solutions I went to $M = 1600$, but you only needed to go to $M = 800$.)

Problem 2: (10+5 points)

Here, we consider inner products $\langle u, v\rangle$ on some vector space of complex-valued functions and the corresponding adjoint $\hat{A}^*$ of linear operators $\hat{A}$, where the adjoint is defined, as in class, by whatever satisfies $\langle u, \hat{A}v\rangle = \langle \hat{A}^*u, v\rangle$ for all $u$ and $v$.
Usually, $\hat{A}^*$ is obtained from $\hat{A}$ by some kind of integration by parts. In particular, suppose $V$ consists of functions $u(x)$ on $x \in [0, L]$ with the ("Robin") boundary conditions $u(0) = 0$ and $u'(L) = u(L)/L$ as in pset 1, and define the inner product $\langle u, v\rangle = \int_0^L \overline{u(x)}\,v(x)\,dx$ as in class.

(a) Here, we consider the boundary conditions $u(0) = 0$ and $u'(L) = \pm u(L)/L$ for both signs, with $\hat{A} = \frac{d^2}{dx^2}$. We get

$$\langle u, \hat{A}v\rangle = \int_0^L \bar{u}\,v'' = \bar{u}\,v'\Big|_0^L - \int_0^L \bar{u}'\,v' = \left(\bar{u}\,v' - \bar{u}'\,v\right)\Big|_0^L + \int_0^L \bar{u}''\,v = \langle \hat{A}u, v\rangle \pm \underbrace{\left[\overline{u(L)}\,v(L) - \overline{u(L)}\,v(L)\right]}_{=\,0}/L - 0,$$

where we have applied the boundary conditions in the last step. Hence $\hat{A} = \hat{A}^*$.

For definiteness, as in class we look at $\langle u, \hat{A}u\rangle$ and integrate by parts only once, from the middle of the above derivation, obtaining (after applying the boundary conditions):

$$\langle u, \hat{A}u\rangle = \pm\frac{|u(L)|^2}{L} - \int_0^L |u'(x)|^2\,dx.$$

The easy case is the minus sign, and this is all you are required to show. In this case the expression is obviously $\le 0$, and $= 0$ only if $u' = 0 \implies u = \text{constant} \implies u = 0$ as in class (since $u(0) = 0$), so $\hat{A} < 0$ (negative definite) for this boundary condition.

For the $+$ sign, which you are not required to prove, we could apply the Cauchy-Schwarz inequality $|\langle u, v\rangle|^2 \le \langle u, u\rangle\langle v, v\rangle$ to show that $|\langle u', 1\rangle| = \left|\int_0^L u'\right| = |u(L)| \le \sqrt{\langle u', u'\rangle\langle 1, 1\rangle}$. Therefore, since $\langle 1, 1\rangle = L$, we obtain:

$$\langle u', u'\rangle = \int_0^L |u'(x)|^2\,dx \ge \frac{|u(L)|^2}{L},$$

which means that $\langle u, \hat{A}u\rangle \le 0$ from above, and hence $\hat{A}$ is negative semidefinite.

(b) The code to compute the integral is posted in the solution notebook online, and we indeed find that $\langle u_1, u_2\rangle \approx 0$ up to roundoff errors.
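The once-integrated-by-parts identity for $\langle u, \hat{A}u\rangle$ in part (a) can be sanity-checked numerically for the minus-sign boundary condition. This is a rough sketch under stated assumptions, separate from the posted solution notebook: the quadrature helper `midpoint`, the number of subintervals, and the real-valued test function $u(x) = x - \frac{2}{3L}x^2$ are all hypothetical choices made for illustration. The test function was picked only because it satisfies $u(0) = 0$ and $u'(L) = -u(L)/L$. The code is written for Julia 1.x.

```julia
# Midpoint-rule quadrature of f on [a, b] with n subintervals (hypothetical helper).
function midpoint(f, a, b, n)
    h = (b - a) / n
    return h * sum(f(a + (k - 0.5) * h) for k in 1:n)
end

L = 1.0
# Assumed test function for the minus-sign condition: u(0) = 0 and u'(L) = -u(L)/L.
u(x)   = x - 2x^2 / (3L)
du(x)  = 1 - 4x / (3L)      # u'
d2u(x) = -4 / (3L)          # u'' (constant for this quadratic u)

# Left side: <u, Âu> = ∫ u u'' dx (u is real, so no conjugate is needed).
lhs = midpoint(x -> u(x) * d2u(x), 0.0, L, 1000)
# Right side: -|u(L)|^2 / L - ∫ |u'|^2 dx, the minus-sign case of the identity.
rhs = -u(L)^2 / L - midpoint(x -> du(x)^2, 0.0, L, 1000)
println((lhs, rhs))
```

Both quantities should agree up to quadrature error and be negative, consistent with $\hat{A}$ being negative definite for this boundary condition; for this particular $u$ with $L = 1$, a hand calculation gives $-10/27$ for each side.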