18.303 Problem Set 3 Solutions


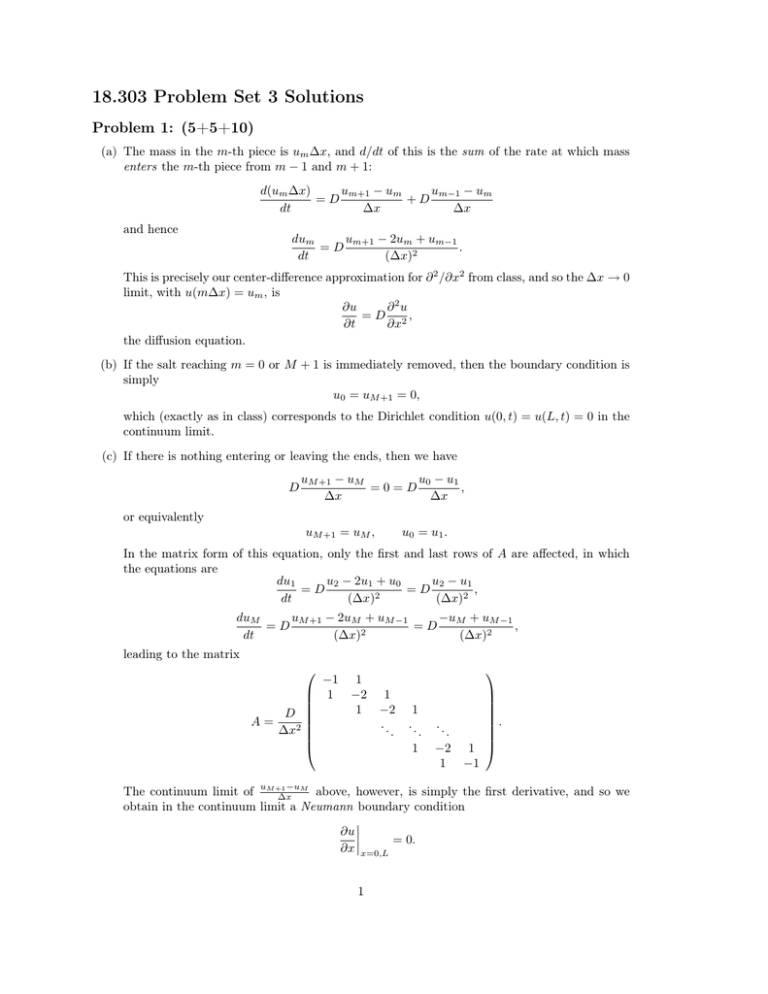

Problem 1: (5+5+10)

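(a) The mass in the $m$-th piece is $u_m \Delta x$, and $d/dt$ of this is the sum of the rates at which mass enters the $m$-th piece from pieces $m-1$ and $m+1$:

$$\frac{d(u_m \Delta x)}{dt} = D\,\frac{u_{m+1} - u_m}{\Delta x} + D\,\frac{u_{m-1} - u_m}{\Delta x},$$

and hence

$$\frac{du_m}{dt} = D\,\frac{u_{m+1} - 2u_m + u_{m-1}}{(\Delta x)^2}.$$

This is precisely our center-difference approximation for $\partial^2/\partial x^2$ from class, and so the $\Delta x \to 0$ limit, with $u(m\Delta x) = u_m$, is

$$\frac{\partial u}{\partial t} = D\,\frac{\partial^2 u}{\partial x^2},$$

the diffusion equation.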
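As a brief worked check of that limit (a sketch, assuming $u$ is smooth enough to Taylor-expand to fourth order), expanding $u_{m\pm1} = u(x \pm \Delta x)$ about $x = m\Delta x$ gives

$$u(x \pm \Delta x) = u \pm \Delta x\,u' + \frac{(\Delta x)^2}{2}u'' \pm \frac{(\Delta x)^3}{6}u''' + \frac{(\Delta x)^4}{24}u'''' + \cdots,$$

so the odd terms cancel in the sum and

$$\frac{u_{m+1} - 2u_m + u_{m-1}}{(\Delta x)^2} = u'' + \frac{(\Delta x)^2}{12}u'''' + \cdots \;\longrightarrow\; \frac{\partial^2 u}{\partial x^2} \quad \text{as } \Delta x \to 0.$$

The leading error is proportional to $(\Delta x)^2$, so the discrete model approaches the diffusion equation with second-order accuracy.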

(b) If the salt reaching $m = 0$ or $m = M+1$ is immediately removed, then the boundary condition is simply

$$u_0 = u_{M+1} = 0,$$

which (exactly as in class) corresponds to the Dirichlet condition $u(0,t) = u(L,t) = 0$ in the continuum limit.

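(c) If there is nothing entering or leaving the ends, then we have

$$D\,\frac{u_{M+1} - u_M}{\Delta x} = 0 = D\,\frac{u_0 - u_1}{\Delta x},$$

or equivalently

$$u_{M+1} = u_M, \qquad u_0 = u_1.$$

In the matrix form of this equation, only the first and last rows of $A$ are affected, in which the equations are

$$\frac{du_1}{dt} = D\,\frac{u_2 - 2u_1 + u_0}{(\Delta x)^2} = D\,\frac{u_2 - u_1}{(\Delta x)^2},$$

$$\frac{du_M}{dt} = D\,\frac{u_{M+1} - 2u_M + u_{M-1}}{(\Delta x)^2} = D\,\frac{-u_M + u_{M-1}}{(\Delta x)^2},$$

leading to the matrix

$$A = \frac{D}{\Delta x^2}\begin{pmatrix} -1 & 1 & & & \\ 1 & -2 & 1 & & \\ & \ddots & \ddots & \ddots & \\ & & 1 & -2 & 1 \\ & & & 1 & -1 \end{pmatrix}.$$

The continuum limit of $\frac{u_{M+1} - u_M}{\Delta x}$ above, however, is simply the first derivative, and so we obtain in the continuum limit a Neumann boundary condition

$$\left.\frac{\partial u}{\partial x}\right|_{x=0,L} = 0.$$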
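A minimal Matlab/Octave sketch of the no-flux matrix from part (c) may make its structure concrete. The values of `M`, `D`, and `dx` below are hypothetical choices for illustration, not taken from the problem statement. The sketch checks two consequences of the zero-flux ends: every column of $A$ sums to zero (so the total mass $\sum_m u_m \Delta x$ is conserved), and the constant vector lies in the null space of $A$.

```matlab
% Hypothetical parameters for illustration only.
M  = 6;          % number of pieces
D  = 1;          % diffusion coefficient
dx = 1/M;        % piece width

% Tridiagonal second-difference matrix with rows (1, -2, 1) ...
e = ones(M,1);
A = diag(-2*e) + diag(e(1:M-1),1) + diag(e(1:M-1),-1);
% ... modified in the first and last rows for the no-flux ends.
A(1,1) = -1;
A(M,M) = -1;
A = (D/dx^2) * A;

% Column sums vanish: d/dt of the total mass is zero.
disp(norm(sum(A,1)))
% The constant vector is in the null space: a uniform state is steady.
disp(norm(A*e))
```

With the Dirichlet conditions of part (b) the corner entries would stay at $-2$, the column sums of the first and last columns would be negative, and mass would drain out through the ends.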


Problem 2: (10+10)

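(a) To show that we have orthogonal modes with real frequencies, using the result from class for general hyperbolic equations, we just need to show that $\hat{A}$ is self-adjoint and negative-definite. In particular, for $\hat{A} = \frac{1}{\rho}\nabla\cdot C\nabla$, as in class we want an inner product that cancels the $1/\rho$ factor:

$$\langle u, v\rangle = \int_\Omega \rho\,\bar{u}v.$$

Using the same integration-by-parts rule as in class, $\int_\Omega f\,\nabla\cdot\mathbf{g} = \oint_{\partial\Omega} f\,\mathbf{g}\cdot d\mathbf{s} - \int_\Omega \mathbf{g}\cdot\nabla f$, we then find (with $f = \bar{u}$, $\mathbf{g} = C\nabla v$ in the first line and $f = v$, $\mathbf{g} = C\nabla\bar{u}$ in the third):

$$\begin{aligned}
\langle u, \hat{A}v\rangle &= \int_\Omega \rho\,\bar{u}\,\frac{1}{\rho}\nabla\cdot(C\nabla v) = \oint_{\partial\Omega} \bar{u}\,C\nabla v\cdot d\mathbf{s} - \int_\Omega C\nabla v\cdot\nabla\bar{u} \\
&= -\int_\Omega (C\nabla v)^T\nabla\bar{u} = -\int_\Omega (\nabla v)^T C^T\nabla\bar{u} = -\int_\Omega (\nabla v)^T C\,\nabla\bar{u} \\
&= -\int_\Omega \nabla v\cdot C\nabla\bar{u} = -\oint_{\partial\Omega} v\,C\nabla\bar{u}\cdot d\mathbf{s} + \int_\Omega v\,\nabla\cdot C\nabla\bar{u} \\
&= \int_\Omega \rho\,\overline{\frac{1}{\rho}\nabla\cdot C\nabla u}\;v = \langle \hat{A}u, v\rangle,
\end{aligned}$$

hence $\hat{A}$ is self-adjoint. Here, in the second line we have used the fact that a dot product of two vectors is really $\mathbf{g}\cdot\mathbf{h} = \mathbf{g}^T\mathbf{h}$ in linear-algebra notation, which allows us to use the fact that $C^T = C = \bar{C}$. The boundary terms vanish because $u$ and $v$ are zero at the boundaries.

Furthermore, negative-definiteness is obtained by inspection of the second line:

$$\langle u, \hat{A}u\rangle = -\int_\Omega (\nabla u)^* C(\nabla u),$$

where $^*$ is the conjugate-transpose. $C$ is given to be positive-definite, so $(\nabla u)^* C(\nabla u) > 0$ for $\nabla u \neq 0$ by definition, but $\nabla u = 0 \iff u = \text{constant} \iff u = 0$ by the boundary conditions, and hence $\langle u, \hat{A}u\rangle < 0$ for $u \neq 0$ and $\hat{A}$ is negative-definite as desired.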
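For reference, the step from these two properties to the stated conclusion can be sketched as follows, assuming the hyperbolic equation from class has the form $\partial^2 u/\partial t^2 = \hat{A}u$ (the equation itself is not restated in this solution). For an eigenfunction $\hat{A}u = \lambda u$ with $u \neq 0$,

$$\lambda\langle u, u\rangle = \langle u, \hat{A}u\rangle < 0 \;\Longrightarrow\; \lambda < 0, \qquad \lambda = -\omega^2 \text{ with } \omega = \sqrt{-\lambda} \text{ real},$$

and for two eigenfunctions with distinct eigenvalues $\lambda_1 \neq \lambda_2$ (both real),

$$\lambda_2\langle u_1, u_2\rangle = \langle u_1, \hat{A}u_2\rangle = \langle \hat{A}u_1, u_2\rangle = \lambda_1\langle u_1, u_2\rangle \;\Longrightarrow\; \langle u_1, u_2\rangle = 0.$$

So the modes oscillate as $e^{\pm i\omega t}$ with real $\omega$ and are orthogonal under the $\rho$-weighted inner product.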

(b) For example, the Dirichlet boundary condition $\mathbf{u}|_{\partial\Omega} = 0$ is sufficient. (Other possibilities include periodic boundary conditions, if $\Omega$ is a parallelogram, or Neumann-like boundary conditions $\mathbf{n}\cdot\nabla\mathbf{u}|_{\partial\Omega} = 0$ and $\nabla\cdot\mathbf{u}|_{\partial\Omega} = 0$, i.e. that the normal derivative of each component of $\mathbf{u}$ vanishes at the boundary along with the divergence of $\mathbf{u}$.)

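To see this, consider (written for real-valued $\mathbf{u}$ and $\mathbf{v}$; for complex fields, conjugate $\mathbf{u}$ throughout)

$$\langle \mathbf{u}, \hat{A}\mathbf{v}\rangle = \int_\Omega \left[ u_x \nabla^2 v_x + u_y \nabla^2 v_y + \mathbf{u}\cdot\nabla(\nabla\cdot\mathbf{v}) \right].$$

The first two $\nabla^2$ terms are self-adjoint with Dirichlet boundaries by exactly the proof from class. The third term proceeds analogously, using the same $\int_\Omega f\,\nabla\cdot\mathbf{g} = \oint_{\partial\Omega} f\,\mathbf{g}\cdot d\mathbf{s} - \int_\Omega \mathbf{g}\cdot\nabla f$ rule, first with $\mathbf{g} = \mathbf{u}$, $f = \nabla\cdot\mathbf{v}$ and then with $f = \nabla\cdot\mathbf{u}$, $\mathbf{g} = \mathbf{v}$:

$$\begin{aligned}
\int_\Omega \mathbf{u}\cdot\nabla(\nabla\cdot\mathbf{v}) &= \oint_{\partial\Omega} (\nabla\cdot\mathbf{v})\,\mathbf{u}\cdot d\mathbf{s} - \int_\Omega (\nabla\cdot\mathbf{v})(\nabla\cdot\mathbf{u}) \\
&= -\oint_{\partial\Omega} (\nabla\cdot\mathbf{u})\,\mathbf{v}\cdot d\mathbf{s} + \int_\Omega \nabla(\nabla\cdot\mathbf{u})\cdot\mathbf{v},
\end{aligned}$$

where we have integrated by parts twice. The boundary integral in the first line is dropped in the second because $\mathbf{u} = 0$ on $\partial\Omega$, and the remaining boundary integral vanishes because $\mathbf{v} = 0$ there, hence $\langle \mathbf{u}, \nabla(\nabla\cdot\mathbf{v})\rangle = \langle \nabla(\nabla\cdot\mathbf{u}), \mathbf{v}\rangle$ as desired.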


Problem 3: (10+10)

(a) I used the following code. Note that $M$ is a $5 \times 5$ matrix, but $K$ is a $6 \times 6$ matrix (since there are 6 springs for 5 masses). All of the eigenvalues [diag(S)] are indeed real, and the eigenvectors are orthogonal as verified by the fact that $V^* M V$ has zero off-diagonal elements (up to roundoff errors $\approx 10^{-15}$):

    Ddx = diff1(5);              % first-difference matrix (course helper)
    M = diag([1 2 3 4 5]);       % masses
    K = diag([6 5 4 3 2 1]);     % spring constants
    A = -inv(M) * Ddx' * K * Ddx;
    [V,S] = eig(A);
    diag(S)
    ans =
      -12.59560
       -4.25113
       -1.86565
       -0.78303
       -0.18793

    V' * M * V
    ans =
       1.0955e+00   8.5167e-16   2.1469e-16   1.0274e-16  -2.7274e-17
       8.4354e-16   2.0799e+00  -2.1447e-15  -1.3782e-15   4.4169e-16
       2.1948e-16  -2.1329e-15   2.8931e+00  -5.0491e-16   2.8168e-16
       1.0228e-16  -1.2613e-15  -5.1825e-16   3.4751e+00   1.3080e-15
      -3.0413e-17   3.8557e-16   3.6597e-16   1.2537e-15   3.9021e+00

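(b) For $A = V\Lambda V^{-1}$, real $\Lambda = \Lambda^*$, $W = (VV^*)^{-1} = V^{-*}V^{-1}$ [where $V^{-*}$ denotes $(V^*)^{-1} = (V^{-1})^*$], and $\langle x, y\rangle_W = x^* W y$, we have:

$$\begin{aligned}
\langle x, Ay\rangle_W &= x^* V^{-*}\,\underbrace{V^{-1}V}_{=I}\,\Lambda V^{-1} y = (V^{-1}x)^* \Lambda^* V^{-1} y \\
&= (\Lambda V^{-1}x)^* V^{-1} y = (\Lambda V^{-1}x)^*\,\underbrace{V^* V^{-*}}_{=I}\,V^{-1} y \\
&= (V\Lambda V^{-1}x)^* V^{-*}V^{-1} y = \langle Ax, y\rangle_W,
\end{aligned}$$

so $A$ is self-adjoint.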

However, if we compute this in Matlab for the matrices above, using W = inv(V*V'), we do not get $W = M$. In fact, $W$ is not even a diagonal matrix. The reason is that the choice of eigenvectors $V$, and hence the choice of $W = (VV^*)^{-1}$, is not unique: even if the eigenvalues have multiplicity 1 (as they do here), we can multiply the eigenvectors by any scale factor we want, which corresponds to transforming $V \to V\Sigma$ for some diagonal scaling matrix $\Sigma$, and hence transforming $W \to (V\Sigma\Sigma^* V^*)^{-1}$. Matlab, in particular, defaults to normalizing its eigenvectors $v$ so that $v^* v = 1$, whereas to get $W = M$ we would need to normalize them so that $v^* M v = 1$.
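The renormalization described here can be sketched in Matlab/Octave as follows. The course helper `diff1` is not listed in this solution, so the one-line definition below is an assumption: an $(n+1)\times n$ first-difference matrix with Dirichlet ends and unit spacing. It is at least consistent with the numbers above, since it gives trace(A) $= -(11 + 9/2 + 7/3 + 5/4 + 3/5) \approx -19.6833$, which agrees with the sum of the printed eigenvalues. The names `W0`, `s`, and `Vn` are introduced only for this illustration.

```matlab
% Assumed stand-in for the course helper diff1(n): (n+1)-by-n
% first-difference matrix with Dirichlet ends (unit spacing).
diff1 = @(n) [ [1, zeros(1,n-1)]; diag(ones(n-1,1),1) - eye(n) ];

Ddx = diff1(5);
M = diag([1 2 3 4 5]);        % masses
K = diag([6 5 4 3 2 1]);      % spring constants
A = -(M \ (Ddx' * K * Ddx));  % same A as above, without forming inv(M)
[V,S] = eig(A);

% Default normalization (v'*v = 1): W0 differs from M.
W0 = inv(V*V');

% Rescale each eigenvector so that v'*M*v = 1, i.e. V -> V*Sigma.
s  = sqrt(diag(V' * M * V));  % M-norm of each column of V
Vn = V * diag(1 ./ s);
W  = inv(Vn*Vn');

disp(norm(W0 - M))            % not small
disp(norm(W  - M))            % roundoff-sized
```

The reason this works: once the columns are $M$-orthonormal, $V^* M V = I$, and solving for $M$ gives $M = V^{-*}V^{-1} = (VV^*)^{-1}$.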
