Electronic Journal of Probability

Vol. 10 (2005), Paper no. 45, pages 1468-1495.

Journal URL

http://www.math.washington.edu/∼ejpecp/

The Steepest Descent Method for Forward-Backward SDEs

1

Jakša Cvitanić and Jianfeng Zhang

Caltech, M/C 228-77, 1200 E. California Blvd.

Pasadena, CA 91125.

Ph: (626) 395-1784. E-mail: cvitanic@hss.caltech.edu

and

Department of Mathematics, USC

3620 S Vermont Ave, KAP 108, Los Angeles, CA 90089-1113.

Ph: (213) 740-9805. E-mail: jianfenz@usc.edu.

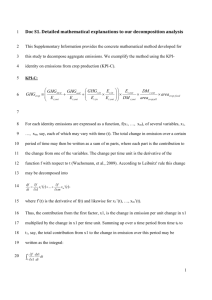

Abstract. This paper aims to open a door to Monte-Carlo methods for numerically solving Forward-Backward SDEs, without computing over all Cartesian grids

as usually done in the literature. We transform the FBSDE to a control problem

and propose the steepest descent method to solve the latter one. We show that the

original (coupled) FBSDE can be approximated by decoupled FBSDEs, which further boils down to computing a sequence of conditional expectations. The rate of

convergence is obtained, and the key to its proof is a new well-posedness result for

FBSDEs. However, the approximating decoupled FBSDEs are non-Markovian. Some

Markovian type of modification is needed in order to make the algorithm efficiently

implementable.

Keywords: Forward-Backward SDEs, quasilinear PDEs, stochastic control, steepest descent method, Monte-Carlo method, rate of convergence.

2000 Mathematics Subject Classification. Primary: 60H35; Secondary: 60H10, 65C05,

35K55

Submitted to EJP on July 26, 2005. Final version accepted on December 1, 2005.

1

Research supported in part by NSF grant DMS 04-03575.


1 Introduction

Since the seminal work of Pardoux-Peng [19], there have been numerous publications on Backward Stochastic Differential Equations (BSDEs) and Forward-Backward SDEs (FBSDEs). We refer the reader to the book of Ma-Yong [17] and the references therein for details on the subject. In particular, FBSDEs of the following type have been studied extensively:

$$
\begin{cases}
X_t = x + \displaystyle\int_0^t b(s,X_s,Y_s,Z_s)\,ds + \int_0^t \sigma(s,X_s,Y_s)\,dW_s;\\[4pt]
Y_t = g(X_T) + \displaystyle\int_t^T f(s,X_s,Y_s,Z_s)\,ds - \int_t^T Z_s\,dW_s;
\end{cases}
\tag{1.1}
$$

where W is a standard Brownian Motion, T > 0 is a deterministic terminal time,

and b, σ, f, g are deterministic functions. Here for notational simplicity we assume

all processes are 1-dimensional. It is well known that FBSDE (1.1) is related to the

following parabolic PDE on $[0,T]\times\mathbb{R}$ (see, e.g., [13], [20], and [7])

$$
\begin{cases}
u_t + \frac12\sigma^2(t,x,u)\,u_{xx} + b(t,x,u,\sigma(t,x,u)u_x)\,u_x + f(t,x,u,\sigma(t,x,u)u_x) = 0;\\
u(T,x) = g(x);
\end{cases}
\tag{1.2}
$$

in the sense that (if a smooth solution u exists)

$$
Y_t = u(t,X_t),\qquad Z_t = u_x(t,X_t)\,\sigma(t,X_t,u(t,X_t)).
\tag{1.3}
$$

Due to the importance of BSDEs in applications, numerical methods for them have received strong attention in recent years. Bally [1] proposed an algorithm using a random time discretization. Based on a new notion of $L^2$-regularity, Zhang [21] obtained the rate of convergence for deterministic time discretization and transformed the problem to computing a sequence of conditional expectations. In the Markovian setting, significant progress has been made in computing the conditional expectations. The following methods are of particular interest: the quantization method (see, e.g., Bally-Pagès-Printems [2]), the Malliavin calculus approach (see Bouchard-Touzi [4]), the linear regression method or the Longstaff-Schwartz algorithm (see Gobet-Lemor-Warin [10]), and the Picard iteration approach (see Bender-Denk [3]). These methods work well in reasonably high dimensions. There are also many publications on numerical methods for non-Markovian BSDEs (see, e.g., [5], [6], [12], [15], [24]), but in general these methods do not work when the dimension is high.

Numerical approximations for FBSDEs, however, are much more difficult. To our knowledge, there are only very few works in the literature. The first one was Douglas-Ma-Protter [9], based on the four step scheme. Their main idea is to numerically solve the PDE (1.2). Milstein-Tretyakov [16] and Makarov [14] also proposed some numerical schemes for (1.2). Recently Delarue-Menozzi [8] proposed a probabilistic algorithm. Note that all these methods essentially need to discretize the space over regular Cartesian grids, and thus are not practical in high dimensions.

In this paper we aim to open a door to truly Monte-Carlo methods for FBSDEs,

without computing over all Cartesian grids. Our main idea is to transform the FBSDE

to a stochastic control problem and propose the steepest descent method to solve the

latter one. We show that the original (coupled) FBSDE can be approximated by

solving a certain number of decoupled FBSDEs. We then discretize the approximating

decoupled FBSDEs in time and thus the problem boils down to computing a sequence

of conditional expectations. The rate of convergence is obtained.

We note that the idea of approximating with a corresponding stochastic control problem is somewhat similar to the approximating solvability of FBSDEs in Ma-Yong [18] and the near-optimal control in Zhou [25]. However, in those works the original problem may have no exact solution and the authors try to find a so-called approximating solution. In our case the exact solution exists and we want to approximate it with numerically computable terms. More importantly, in those works one only cares about the existence of the approximating solutions, while here for practical reasons we need an explicit construction of the approximations as well as the rate of convergence.

The key to the proof is a new well-posedness result for FBSDEs. In order to

obtain the rate of convergence of our approximations, we need the well-posedness of

some adjoint FBSDEs, which are linear but with random coefficients. It turns out

that all the existing methods in the literature do not work in our case.

At this point we should point out that, unfortunately, our approximating decoupled FBSDEs are non-Markovian (that is, the coefficients are random), and thus we

cannot apply directly the existing methods for Markovian BSDEs. In order to make

our algorithm efficiently implementable, some further modification of Markovian type

is needed.

Although in the long term we aim to solve high dimensional FBSDEs, as a first

attempt and for technical reasons (in order to apply Theorem 1.2 below), in this

paper we assume all the processes are one dimensional. We also assume that b = 0

and f is independent of Z. That is, we will study the following FBSDE:

$$
\begin{cases}
X_t = x + \displaystyle\int_0^t \sigma(s,X_s,Y_s)\,dW_s;\\[4pt]
Y_t = g(X_T) + \displaystyle\int_t^T f(s,X_s,Y_s)\,ds - \int_t^T Z_s\,dW_s.
\end{cases}
\tag{1.4}
$$

In this case, PDE (1.2) becomes

$$
\begin{cases}
u_t + \frac12\sigma^2(t,x,u)\,u_{xx} + f(t,x,u) = 0;\\
u(T,x) = g(x);
\end{cases}
\tag{1.5}
$$

Moreover, in order to simplify the presentation and to focus on the main idea, throughout the paper we assume

Assumption 1.1 All the coefficients σ, f, g are bounded, smooth enough with bounded

derivatives, and σ is uniformly nondegenerate.

Under Assumption 1.1, it is well known that PDE (1.5) has a unique solution u

which is bounded and smooth with bounded derivatives (see [11]), that FBSDE (1.4)

has a unique solution (X, Y, Z), and that (1.3) holds true (see [13]). Unless otherwise

specified, throughout the paper we use (X, Y, Z) and u to denote these solutions, and

C, c > 0 to denote generic constants depending only on T , the upper bounds of the

derivatives of the coefficients, and the uniform nondegeneracy of σ. We allow C, c to

vary from line to line.

Finally, we cite a well-posedness result from Zhang [23] (or [22] for a weaker result)

which will play an important role in our proofs.

Theorem 1.2 Consider the following FBSDE

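$$
\begin{cases}
X_t = x + \displaystyle\int_0^t b(\omega,s,X_s,Y_s,Z_s)\,ds + \int_0^t \sigma(\omega,s,X_s,Y_s)\,dW_s;\\[4pt]
Y_t = g(\omega,X_T) + \displaystyle\int_t^T f(\omega,s,X_s,Y_s,Z_s)\,ds - \int_t^T Z_s\,dW_s;
\end{cases}
\tag{1.6}
$$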

Assume that b, σ, f, g are uniformly Lipschitz continuous with respect to (x, y, z); that

there exists a constant c > 0 such that

$$
\sigma_y b_z \le -c\,|b_y + \sigma_x b_z + \sigma_y f_z|;
\tag{1.7}
$$

and that

$$
I_0^2 \triangleq E\Big\{x^2 + |g(\omega,0)|^2 + \int_0^T \big[|b|^2+|\sigma|^2+|f|^2\big](\omega,t,0,0,0)\,dt\Big\} < \infty.
$$

Then FBSDE (1.6) has a unique solution (X, Y, Z) such that

$$
E\Big\{\sup_{0\le t\le T}\big[|X_t|^2+|Y_t|^2\big] + \int_0^T |Z_t|^2\,dt\Big\} \le C I_0^2,
$$

where C is a constant depending only on T, c and the Lipschitz constants of the coefficients.

The rest of the paper is organized as follows. In the next section we transform FBSDE (1.4) to a stochastic control problem and propose the steepest descent method;

in §3 we discretize the decoupled FBSDEs introduced in §2; and in §4 we transform

the discrete FBSDEs to a sequence of conditional expectations.

2 The Steepest Descent Method

Let $(\Omega,\mathcal{F},P)$ be a complete probability space, $W$ a standard Brownian motion, $T>0$ a fixed terminal time, and $\mathbf{F}\triangleq\{\mathcal{F}_t\}_{0\le t\le T}$ the filtration generated by $W$ and augmented by the $P$-null sets. Let $L^2(\mathbf{F})$ denote the space of square integrable $\mathbf{F}$-adapted processes. From now on we always assume that Assumption 1.1 is in force.

2.1 The Control Problem

In order to numerically solve (1.4), we first formulate a related stochastic control problem. Given $y_0\in\mathbb{R}$ and $z^0\in L^2(\mathbf{F})$, consider the following 2-dimensional (forward) SDE with random coefficients ($z^0$ being considered as a coefficient):

$$
\begin{cases}
X^0_t = x + \displaystyle\int_0^t \sigma(s,X^0_s,Y^0_s)\,dW_s;\\[4pt]
Y^0_t = y_0 - \displaystyle\int_0^t f(s,X^0_s,Y^0_s)\,ds + \int_0^t z^0_s\,dW_s;
\end{cases}
\tag{2.1}
$$

and denote

$$
V(y_0,z^0) \triangleq \frac12 E\big\{|Y^0_T - g(X^0_T)|^2\big\}.
\tag{2.2}
$$

Our first result is

Theorem 2.1 We have

$$
E\Big\{\sup_{0\le t\le T}\big[|X_t-X^0_t|^2+|Y_t-Y^0_t|^2\big] + \int_0^T |Z_t-z^0_t|^2\,dt\Big\} \le C\,V(y_0,z^0).
$$

Proof. The idea is similar to the four step scheme (see [13]).

Step 1. Denote

$$
\Delta Y_t \triangleq Y^0_t - u(t,X^0_t);\qquad \Delta Z_t \triangleq z^0_t - u_x(t,X^0_t)\,\sigma(t,X^0_t,Y^0_t).
$$

Recalling (1.5) we have

$$
\begin{aligned}
d(\Delta Y_t) &= z^0_t\,dW_t - f(t,X^0_t,Y^0_t)\,dt - u_x(t,X^0_t)\sigma(t,X^0_t,Y^0_t)\,dW_t\\
&\quad - \Big[u_t(t,X^0_t) + \tfrac12 u_{xx}(t,X^0_t)\sigma^2(t,X^0_t,Y^0_t)\Big]dt\\
&= \Delta Z_t\,dW_t - \Big[\tfrac12 u_{xx}(t,X^0_t)\sigma^2(t,X^0_t,Y^0_t) + f(t,X^0_t,Y^0_t)\Big]dt\\
&\quad + \Big[\tfrac12 u_{xx}(t,X^0_t)\sigma^2(t,X^0_t,u(t,X^0_t)) + f(t,X^0_t,u(t,X^0_t))\Big]dt\\
&= \Delta Z_t\,dW_t - \alpha_t\,\Delta Y_t\,dt,
\end{aligned}
$$

where

$$
\alpha_t \triangleq \frac{1}{2\Delta Y_t}\,u_{xx}(t,X^0_t)\big[\sigma^2(t,X^0_t,Y^0_t) - \sigma^2(t,X^0_t,u(t,X^0_t))\big] + \frac{1}{\Delta Y_t}\big[f(t,X^0_t,Y^0_t) - f(t,X^0_t,u(t,X^0_t))\big]
$$

is bounded. Note that $\Delta Y_T = Y^0_T - g(X^0_T)$. By standard arguments one can easily get

$$
E\Big\{\sup_{0\le t\le T}|\Delta Y_t|^2 + \int_0^T|\Delta Z_t|^2\,dt\Big\} \le C\,E\{|\Delta Y_T|^2\} = C\,V(y_0,z^0).
\tag{2.3}
$$

Step 2. Denote $\Delta X_t \triangleq X_t - X^0_t$. We show that

$$
E\Big\{\sup_{0\le t\le T}|\Delta X_t|^2\Big\} \le C\,V(y_0,z^0).
\tag{2.4}
$$

In fact,

$$
d(\Delta X_t) = \big[\sigma(t,X_t,u(t,X_t)) - \sigma(t,X^0_t,Y^0_t)\big]\,dW_t.
$$

Note that

$$
u(t,X_t) - Y^0_t = u(t,X_t) - u(t,X^0_t) - \Delta Y_t.
$$

One has

$$
d(\Delta X_t) = \big[\alpha^1_t\,\Delta X_t + \alpha^2_t\,\Delta Y_t\big]\,dW_t,
$$

where $\alpha^i_t$ are defined in an obvious way and are uniformly bounded. Note that $\Delta X_0 = 0$. Then by standard arguments we get

$$
E\Big\{\sup_{0\le t\le T}|\Delta X_t|^2\Big\} \le C\,E\Big\{\int_0^T|\Delta Y_t|^2\,dt\Big\},
$$

which, together with (2.3), implies (2.4).

Step 3. We now prove the theorem. Recalling (1.3), we have

$$
\begin{aligned}
&E\Big\{\sup_{0\le t\le T}|Y_t-Y^0_t|^2 + \int_0^T|Z_t-z^0_t|^2\,dt\Big\}\\
&= E\Big\{\sup_{0\le t\le T}|u(t,X_t)-u(t,X^0_t)-\Delta Y_t|^2\\
&\qquad + \int_0^T\Big|u_x(t,X_t)\sigma(t,X_t,u(t,X_t)) - u_x(t,X^0_t)\sigma(t,X^0_t,u(t,X^0_t))\\
&\qquad\qquad + u_x(t,X^0_t)\sigma(t,X^0_t,u(t,X^0_t)) - u_x(t,X^0_t)\sigma(t,X^0_t,Y^0_t) - \Delta Z_t\Big|^2dt\Big\}\\
&\le C\,E\Big\{\sup_{0\le t\le T}\big[|\Delta X_t|^2+|\Delta Y_t|^2\big] + \int_0^T\big[|\Delta X_t|^2+|\Delta Y_t|^2+|\Delta Z_t|^2\big]dt\Big\}\\
&\le C\,V(y_0,z^0),
\end{aligned}
$$

which, together with (2.4), ends the proof.

2.2 The Steepest Descent Direction

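Our idea is to modify $(y_0,z^0)$ along the steepest descent direction so as to decrease $V$ as fast as possible. First we need to find the Fréchet derivative of $V$ along some direction $(\Delta y,\Delta z)$, where $\Delta y\in\mathbb{R}$, $\Delta z\in L^2(\mathbf{F})$. For $\delta\ge 0$, denote

$$
y_0^\delta \triangleq y_0 + \delta\,\Delta y;\qquad z^{0,\delta}_t \triangleq z^0_t + \delta\,\Delta z_t;
$$

and let $X^{0,\delta}, Y^{0,\delta}$ be the solution to (2.1) corresponding to $(y_0^\delta, z^{0,\delta})$. Denote:

$$
\begin{aligned}
\nabla X^0_t &\triangleq \int_0^t\big[\sigma^0_x\nabla X^0_s + \sigma^0_y\nabla Y^0_s\big]dW_s;\\
\nabla Y^0_t &\triangleq \Delta y - \int_0^t\big[f^0_x\nabla X^0_s + f^0_y\nabla Y^0_s\big]ds + \int_0^t\Delta z_s\,dW_s;\\
\nabla V(y_0,z^0) &\triangleq E\Big\{\big[Y^0_T - g(X^0_T)\big]\big[\nabla Y^0_T - g'(X^0_T)\nabla X^0_T\big]\Big\};
\end{aligned}
$$

where $\varphi^0_s \triangleq \varphi(s,X^0_s,Y^0_s)$ for any function $\varphi$. By standard arguments, one can easily show that

$$
\begin{aligned}
&\lim_{\delta\to0}\frac1\delta\big[X^{0,\delta}_t - X^0_t\big] = \nabla X^0_t;\qquad \lim_{\delta\to0}\frac1\delta\big[Y^{0,\delta}_t - Y^0_t\big] = \nabla Y^0_t;\\
&\lim_{\delta\to0}\frac1\delta\big[V(y_0^\delta,z^{0,\delta}) - V(y_0,z^0)\big] = \nabla V(y_0,z^0);
\end{aligned}
$$

where the two limits in the first line are in the $L^2(\mathbf{F})$ sense.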

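To investigate $\nabla V(y_0,z^0)$ further, we define some adjoint processes. Consider $(X^0,Y^0)$ as random coefficients and let $(\bar Y^0,\tilde Y^0,\bar Z^0,\tilde Z^0)$ be the solution to the following 2-dimensional BSDE:

$$
\begin{cases}
\bar Y^0_t = \big[Y^0_T - g(X^0_T)\big] - \displaystyle\int_t^T\big[f^0_y\bar Y^0_s + \sigma^0_y\tilde Z^0_s\big]ds - \int_t^T\bar Z^0_s\,dW_s;\\[4pt]
\tilde Y^0_t = g'(X^0_T)\big[Y^0_T - g(X^0_T)\big] + \displaystyle\int_t^T\big[f^0_x\bar Y^0_s + \sigma^0_x\tilde Z^0_s\big]ds - \int_t^T\tilde Z^0_s\,dW_s.
\end{cases}
\tag{2.5}
$$

We note that (2.5) depends only on $(y_0,z^0)$, but not on $(\Delta y,\Delta z)$.

Lemma 2.2 For any $(\Delta y,\Delta z)$, we have

$$
\nabla V(y_0,z^0) = E\Big\{\bar Y^0_0\,\Delta y + \int_0^T\bar Z^0_t\,\Delta z_t\,dt\Big\}.
$$

Proof. Note that

$$
\nabla V(y_0,z^0) = E\Big\{\bar Y^0_T\nabla Y^0_T - \tilde Y^0_T\nabla X^0_T\Big\}.
$$

Applying Itô's formula one can easily check that

$$
d\big(\bar Y^0_t\nabla Y^0_t - \tilde Y^0_t\nabla X^0_t\big) = \bar Z^0_t\,\Delta z_t\,dt + (\cdots)\,dW_t.
$$

Then

$$
\nabla V(y_0,z^0) = E\Big\{\bar Y^0_0\nabla Y^0_0 - \tilde Y^0_0\nabla X^0_0 + \int_0^T\bar Z^0_t\,\Delta z_t\,dt\Big\} = E\Big\{\bar Y^0_0\,\Delta y + \int_0^T\bar Z^0_t\,\Delta z_t\,dt\Big\}.
$$

That proves the lemma.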

Recall that our goal is to decrease $V(y_0,z^0)$. Very naturally one would like to choose the following steepest descent direction:

$$
\Delta y \triangleq -\bar Y^0_0;\qquad \Delta z_t \triangleq -\bar Z^0_t.
\tag{2.6}
$$

Then

$$
\nabla V(y_0,z^0) = -E\Big\{|\bar Y^0_0|^2 + \int_0^T|\bar Z^0_t|^2\,dt\Big\},
\tag{2.7}
$$

which depends only on $(y_0,z^0)$ (not on $(\Delta y,\Delta z)$).

Note that if ∇V (y0 , z 0 ) = 0, then we gain nothing on decreasing V (y0 , z 0 ). Fortunately this is not the case.

Lemma 2.3 Assume (2.6). Then ∇V (y0 , z 0 ) ≤ −cV (y0 , z 0 ).

Proof. Rewrite (2.5) as

$$
\begin{cases}
\bar Y^0_t = \bar Y^0_0 + \displaystyle\int_0^t\big[f^0_y\bar Y^0_s + \sigma^0_y\tilde Z^0_s\big]ds + \int_0^t\bar Z^0_s\,dW_s;\\[4pt]
\tilde Y^0_t = g'(X^0_T)\,\bar Y^0_T + \displaystyle\int_t^T\big[f^0_x\bar Y^0_s + \sigma^0_x\tilde Z^0_s\big]ds - \int_t^T\tilde Z^0_s\,dW_s.
\end{cases}
\tag{2.8}
$$

One may consider (2.8) as an FBSDE with solution triple $(\bar Y^0,\tilde Y^0,\tilde Z^0)$, where $\bar Y^0$ is the forward component and $(\tilde Y^0,\tilde Z^0)$ are the backward components. Then $(\bar Y^0_0,\bar Z^0_t)$ are considered as (random) coefficients of the FBSDE. One can easily check that FBSDE (2.8) satisfies condition (1.7) (with both sides equal to 0). Applying Theorem 1.2 we get

$$
E\Big\{\sup_{0\le t\le T}\big[|\bar Y^0_t|^2+|\tilde Y^0_t|^2\big] + \int_0^T|\tilde Z^0_t|^2\,dt\Big\} \le C I_0^2 = C\,E\Big\{|\bar Y^0_0|^2 + \int_0^T|\bar Z^0_t|^2\,dt\Big\}.
$$

In particular,

$$
V(y_0,z^0) = \frac12E\{|\bar Y^0_T|^2\} \le C\,E\Big\{|\bar Y^0_0|^2 + \int_0^T|\bar Z^0_t|^2\,dt\Big\},
\tag{2.9}
$$

which, combined with (2.7), implies the lemma.

2.3 Iterative Modifications

We now fix a desired error level $\varepsilon$ and pick a pair $(y_0,z^0)$. If we are extremely lucky and $V(y_0,z^0)\le\varepsilon^2$, then we may use $(X^0,Y^0,z^0)$ defined by (2.1) as an approximation of $(X,Y,Z)$. In other cases we want to modify $(y_0,z^0)$. From now on we assume

$$
V(y_0,z^0) > \varepsilon^2;\qquad E\{|Y^0_T - g(X^0_T)|^4\} \le K_0^4;
\tag{2.10}
$$

where $K_0\ge1$ is a constant. We note that one can always assume the existence of $K_0$ by letting, for example, $y_0=0$, $z^0_t=0$.

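Lemma 2.4 Assume (2.10). There exist constants $C_0,c_0,c_1>0$, which are independent of $K_0$ and $\varepsilon$, such that

$$
\Delta V(y_0,z^0) \triangleq V(y_1,z^1) - V(y_0,z^0) \le -\frac{c_0\,\varepsilon}{K_0^2}\,V(y_0,z^0),
\tag{2.11}
$$

and

$$
E\{|Y^1_T - g(X^1_T)|^4\} \le K_1^4 \triangleq K_0^4 + 2C_0\,\varepsilon K_0^2,
\tag{2.12}
$$

where, by denoting $\lambda\triangleq\dfrac{c_1\varepsilon}{K_0^2}$,

$$
y_1 \triangleq y_0 - \lambda\bar Y^0_0;\qquad z^1_t \triangleq z^0_t - \lambda\bar Z^0_t;
\tag{2.13}
$$

and, for $0\le\theta\le1$,

$$
\begin{cases}
X^\theta_t = x + \displaystyle\int_0^t\sigma(s,X^\theta_s,Y^\theta_s)\,dW_s;\\[4pt]
Y^\theta_t = y_0 - \theta\lambda\bar Y^0_0 - \displaystyle\int_0^t f(s,X^\theta_s,Y^\theta_s)\,ds + \int_0^t\big[z^0_s - \theta\lambda\bar Z^0_s\big]dW_s;
\end{cases}
\tag{2.14}
$$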

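Proof. We proceed in four steps.

Step 1. For $0\le\theta\le1$, denote

$$
\begin{aligned}
\bar Y^\theta_t &= \big[Y^\theta_T - g(X^\theta_T)\big] - \int_t^T\big[f^\theta_y\bar Y^\theta_s + \sigma^\theta_y\tilde Z^\theta_s\big]ds - \int_t^T\bar Z^\theta_s\,dW_s;\\
\tilde Y^\theta_t &= g'(X^\theta_T)\big[Y^\theta_T - g(X^\theta_T)\big] + \int_t^T\big[f^\theta_x\bar Y^\theta_s + \sigma^\theta_x\tilde Z^\theta_s\big]ds - \int_t^T\tilde Z^\theta_s\,dW_s;\\
\nabla X^\theta_t &= \int_0^t\big[\sigma^\theta_x\nabla X^\theta_s + \sigma^\theta_y\nabla Y^\theta_s\big]dW_s;\\
\nabla Y^\theta_t &= -\bar Y^0_0 - \int_0^t\big[f^\theta_x\nabla X^\theta_s + f^\theta_y\nabla Y^\theta_s\big]ds - \int_0^t\bar Z^0_s\,dW_s;
\end{aligned}
$$

where $\varphi^\theta_t \triangleq \varphi(t,X^\theta_t,Y^\theta_t)$ for any function $\varphi$. Then

$$
\begin{aligned}
\Delta V(y_0,z^0) &= \frac12E\Big\{\big[Y^1_T - g(X^1_T)\big]^2 - \big[Y^0_T - g(X^0_T)\big]^2\Big\}\\
&= \lambda\int_0^1E\Big\{\big[Y^\theta_T - g(X^\theta_T)\big]\big[\nabla Y^\theta_T - g'(X^\theta_T)\nabla X^\theta_T\big]\Big\}d\theta.
\end{aligned}
$$

Following the proof of Lemma 2.2, we have

$$
\Delta V(y_0,z^0) = -\lambda\int_0^1E\Big\{\bar Y^\theta_0\bar Y^0_0 + \int_0^T\bar Z^\theta_t\bar Z^0_t\,dt\Big\}d\theta.
\tag{2.15}
$$

Step 2. First, one can easily show that

$$
E\Big\{\sup_{0\le t\le T}\big[|\bar Y^0_t|^4+|\tilde Y^0_t|^4\big] + \Big(\int_0^T\big[|\bar Z^0_t|^2+|\tilde Z^0_t|^2\big]dt\Big)^2\Big\} \le C K_0^4.
\tag{2.16}
$$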

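Denote

$$
\Delta X^\theta_t \triangleq X^\theta_t - X^0_t;\qquad \Delta Y^\theta_t \triangleq Y^\theta_t - Y^0_t.
$$

Then

$$
\begin{aligned}
\Delta X^\theta_t &= \int_0^t\big[\alpha^{1,\theta}_s\Delta X^\theta_s + \beta^{1,\theta}_s\Delta Y^\theta_s\big]dW_s;\\
\Delta Y^\theta_t &= -\theta\lambda\bar Y^0_0 - \int_0^t\big[\alpha^{2,\theta}_s\Delta X^\theta_s + \beta^{2,\theta}_s\Delta Y^\theta_s\big]ds - \theta\lambda\int_0^t\bar Z^0_s\,dW_s;
\end{aligned}
$$

where $\alpha^{i,\theta},\beta^{i,\theta}$ are defined in an obvious way and are bounded. Thus, by (2.16),

$$
E\Big\{\sup_{0\le t\le T}\big[|\Delta X^\theta_t|^4+|\Delta Y^\theta_t|^4\big]\Big\} \le C\theta^4\lambda^4E\Big\{|\bar Y^0_0|^4 + \Big(\int_0^T|\bar Z^0_t|^2\,dt\Big)^2\Big\} \le C K_0^4\lambda^4.
\tag{2.17}
$$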

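Denote

$$
\alpha^\theta_T \triangleq \frac{1}{\Delta X^\theta_T}\big[g(X^\theta_T) - g(X^0_T)\big],
$$

which is bounded. For any constants $a,b>0$ and $0<\lambda<1$, applying Young's Inequality we have

$$
\begin{aligned}
(a+b)^4 &= a^4 + 4(\lambda^{\frac14}a)^3(\lambda^{-\frac34}b) + 6(\lambda^{\frac14}a)^2(\lambda^{-\frac14}b)^2 + 4(\lambda^{\frac14}a)(\lambda^{-\frac1{12}}b)^3 + b^4\\
&\le [1+C\lambda]a^4 + C\big[\lambda^{-3}+\lambda^{-1}+\lambda^{-\frac13}+1\big]b^4 \le [1+C\lambda]a^4 + C\lambda^{-3}b^4.
\end{aligned}
$$

Noting that the value of $\lambda$ we will choose is less than 1, we have

$$
\begin{aligned}
E\big\{|Y^\theta_T - g(X^\theta_T)|^4\big\} &= E\big\{|Y^0_T - g(X^0_T) + \Delta Y^\theta_T - \alpha^\theta_T\Delta X^\theta_T|^4\big\}\\
&\le [1+C\lambda]E\big\{|Y^0_T - g(X^0_T)|^4\big\} + C\lambda^{-3}E\big\{|\Delta Y^\theta_T|^4 + |\Delta X^\theta_T|^4\big\}\\
&\le [1+C\lambda]K_0^4.
\end{aligned}
\tag{2.18}
$$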

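Step 3. Denote

$$
\Delta\bar Y^\theta_t \triangleq \bar Y^\theta_t - \bar Y^0_t;\quad \Delta\tilde Y^\theta_t \triangleq \tilde Y^\theta_t - \tilde Y^0_t;\quad \Delta\bar Z^\theta_t \triangleq \bar Z^\theta_t - \bar Z^0_t;\quad \Delta\tilde Z^\theta_t \triangleq \tilde Z^\theta_t - \tilde Z^0_t.
$$

Then

$$
\begin{aligned}
\Delta\bar Y^\theta_t &= \big[\Delta Y^\theta_T - \alpha^\theta_T\Delta X^\theta_T\big] - \int_t^T\big[f^\theta_y\Delta\bar Y^\theta_s + \sigma^\theta_y\Delta\tilde Z^\theta_s\big]ds - \int_t^T\Delta\bar Z^\theta_s\,dW_s\\
&\quad - \int_t^T\big[\bar Y^0_s\Delta f^\theta_y + \tilde Z^0_s\Delta\sigma^\theta_y\big]ds;\\
\Delta\tilde Y^\theta_t &= g'(X^\theta_T)\big[\Delta Y^\theta_T - \alpha^\theta_T\Delta X^\theta_T\big] + \int_t^T\big[f^\theta_x\Delta\bar Y^\theta_s + \sigma^\theta_x\Delta\tilde Z^\theta_s\big]ds - \int_t^T\Delta\tilde Z^\theta_s\,dW_s\\
&\quad + \big[Y^0_T - g(X^0_T)\big]\Delta g'(\theta) + \int_t^T\big[\bar Y^0_s\Delta f^\theta_x + \tilde Z^0_s\Delta\sigma^\theta_x\big]ds,
\end{aligned}
$$

where

$$
\Delta f^\theta_y = \Delta f_y(\theta) \triangleq f_y(t,X^\theta_t,Y^\theta_t) - f_y(t,X^0_t,Y^0_t);
$$

and all other terms are defined in a similar way. By standard arguments one has

$$
\begin{aligned}
&E\Big\{\sup_{0\le t\le T}\big[|\Delta\bar Y^\theta_t|^2+|\Delta\tilde Y^\theta_t|^2\big] + \int_0^T\big[|\Delta\bar Z^\theta_t|^2+|\Delta\tilde Z^\theta_t|^2\big]dt\Big\}\\
&\le C\,E\Big\{|\Delta Y^\theta_T|^2 + |\Delta X^\theta_T|^2 + |Y^0_T - g(X^0_T)|^2|\Delta g'(\theta)|^2\\
&\qquad + \int_0^T\Big[|\bar Y^0_t|^2\big[|\Delta f^\theta_x|^2+|\Delta f^\theta_y|^2\big] + |\tilde Z^0_t|^2\big[|\Delta\sigma^\theta_x|^2+|\Delta\sigma^\theta_y|^2\big]\Big]dt\Big\}\\
&\le C\,E\Big\{|\Delta Y^\theta_T|^2 + |\Delta X^\theta_T|^2 + |Y^0_T - g(X^0_T)|^2|\Delta X^\theta_T|^2 + \int_0^T\big[|\bar Y^0_t|^2+|\tilde Z^0_t|^2\big]\big[|\Delta X^\theta_t|^2+|\Delta Y^\theta_t|^2\big]dt\Big\}\\
&\le C\,E^{\frac12}\Big\{\sup_{0\le t\le T}\big[|\Delta X^\theta_t|^4+|\Delta Y^\theta_t|^4\big]\Big\}\times E^{\frac12}\Big\{1 + |Y^0_T - g(X^0_T)|^4 + \Big(\int_0^T\big[|\bar Y^0_t|^2+|\tilde Z^0_t|^2\big]dt\Big)^2\Big\}\\
&\le C K_0^2\lambda^2\big[1+K_0^2\big] \le C K_0^4\lambda^2,
\end{aligned}
$$

thanks to (2.17), (2.10), and (2.16). In particular,

$$
E\Big\{|\Delta\bar Y^\theta_0|^2 + \int_0^T|\Delta\bar Z^\theta_t|^2\,dt\Big\} \le C K_0^4\lambda^2.
\tag{2.19}
$$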

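Step 4. Note that

$$
\begin{aligned}
&\Big|E\Big\{\bar Y^\theta_0\bar Y^0_0 + \int_0^T\bar Z^\theta_t\bar Z^0_t\,dt\Big\} - E\Big\{|\bar Y^0_0|^2 + \int_0^T|\bar Z^0_t|^2\,dt\Big\}\Big|\\
&\le E\Big\{|\Delta\bar Y^\theta_0\,\bar Y^0_0| + \int_0^T|\Delta\bar Z^\theta_t\,\bar Z^0_t|\,dt\Big\}\\
&\le C\,E\Big\{|\Delta\bar Y^\theta_0|^2 + \int_0^T|\Delta\bar Z^\theta_t|^2\,dt\Big\} + \frac12E\Big\{|\bar Y^0_0|^2 + \int_0^T|\bar Z^0_t|^2\,dt\Big\}\\
&\le C K_0^4\lambda^2 + \frac12E\Big\{|\bar Y^0_0|^2 + \int_0^T|\bar Z^0_t|^2\,dt\Big\}.
\end{aligned}
$$

Then, by (2.9) we have

$$
E\Big\{\bar Y^\theta_0\bar Y^0_0 + \int_0^T\bar Z^\theta_t\bar Z^0_t\,dt\Big\} \ge \frac12E\Big\{|\bar Y^0_0|^2 + \int_0^T|\bar Z^0_t|^2\,dt\Big\} - C K_0^4\lambda^2 \ge c\,V(y_0,z^0) - C K_0^4\lambda^2.
$$

Choose $c_1\triangleq\sqrt{\dfrac{c}{2C}}$ for the constants $c, C$ as above, and $\lambda\triangleq\dfrac{c_1\varepsilon}{K_0^2}$. Then by (2.10) we get

$$
E\Big\{\bar Y^\theta_0\bar Y^0_0 + \int_0^T\bar Z^\theta_t\bar Z^0_t\,dt\Big\} \ge c\,V(y_0,z^0) - \frac c2\varepsilon^2 \ge \frac c2V(y_0,z^0).
\tag{2.20}
$$

Then (2.11) follows directly from (2.15).

Finally, plugging $\lambda$ into (2.18) and letting $\theta=1$, we get (2.12) for some $C_0$.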

Now we are ready to approximate FBSDE (1.4) iteratively. Set

$$
y_0 \triangleq 0,\qquad z^0_t \triangleq 0,\qquad K_0 \triangleq E^{\frac14}\{|Y^0_T - g(X^0_T)|^4\}.
\tag{2.21}
$$

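For $k = 0, 1, \cdots$, let $(X^k, Y^k, \bar Y^k, \tilde Y^k, \bar Z^k, \tilde Z^k)$ be the solution to the following FBSDE:

$$
\begin{cases}
X^k_t = x + \displaystyle\int_0^t\sigma(s,X^k_s,Y^k_s)\,dW_s;\\[4pt]
Y^k_t = y_k - \displaystyle\int_0^t f(s,X^k_s,Y^k_s)\,ds + \int_0^t z^k_s\,dW_s;\\[4pt]
\bar Y^k_t = \big[Y^k_T - g(X^k_T)\big] - \displaystyle\int_t^T\big[f^k_y\bar Y^k_s + \sigma^k_y\tilde Z^k_s\big]ds - \int_t^T\bar Z^k_s\,dW_s;\\[4pt]
\tilde Y^k_t = g'(X^k_T)\big[Y^k_T - g(X^k_T)\big] + \displaystyle\int_t^T\big[f^k_x\bar Y^k_s + \sigma^k_x\tilde Z^k_s\big]ds - \int_t^T\tilde Z^k_s\,dW_s.
\end{cases}
\tag{2.22}
$$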

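We note that (2.22) is decoupled, with forward components $(X^k, Y^k)$ and backward components $(\bar Y^k, \tilde Y^k, \bar Z^k, \tilde Z^k)$. Denote

$$
\lambda_k \triangleq \frac{c_1\varepsilon}{K_k^2},\qquad y_{k+1} \triangleq y_k - \lambda_k\bar Y^k_0,\qquad z^{k+1}_t \triangleq z^k_t - \lambda_k\bar Z^k_t,\qquad K_{k+1}^4 \triangleq K_k^4 + 2C_0\,\varepsilon K_k^2,
\tag{2.23}
$$

where $c_1, C_0$ are the constants in Lemma 2.4.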
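As an illustration only, the scalar part of the recursion (2.23) can be sketched in a few lines of Python. The paper does not give numerical values for $c_1$ and $C_0$, so the arguments `c1` and `C0` below are hypothetical placeholders, and the function name `step_schedule` is ours. The sketch computes the step sizes $\lambda_k$ and the bounds $K_k$; it does not solve the decoupled FBSDE (2.22).

```python
def step_schedule(K0, eps, c1, C0, num_steps):
    """Return a list of (lambda_k, K_k) pairs following the recursion (2.23).

    c1 and C0 stand for the constants of Lemma 2.4; their values are not
    given in the text, so any numbers passed here are assumptions.
    """
    schedule = []
    K = K0
    for _ in range(num_steps):
        lam = c1 * eps / K**2                      # lambda_k = c1 * eps / K_k^2
        schedule.append((lam, K))
        # K_{k+1}^4 = K_k^4 + 2 * C0 * eps * K_k^2
        K = (K**4 + 2.0 * C0 * eps * K**2) ** 0.25
    return schedule
```

Since $K_{k+1}^4 \le (K_k^2 + C_0\varepsilon)^2$, the bounds grow at most linearly in $k$ after squaring, so the step sizes $\lambda_k$ shrink slowly.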

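Theorem 2.5 There exist constants $C_1, C_2$ and $N \le C_1\varepsilon^{-C_2}$ such that

$$
V(y_N,z^N) \le \varepsilon^2.
$$

Proof. Assume $V(y_k,z^k) > \varepsilon^2$ for $k = 0,\cdots,N-1$. Note that $K_{k+1}^4 \le (K_k^2 + C_0\varepsilon)^2$. Then $K_{k+1}^2 \le K_k^2 + C_0\varepsilon$, which implies that

$$
K_k^2 \le K_0^2 + C_0k\varepsilon.
$$

Thus by Lemma 2.4 we have

$$
V(y_{k+1},z^{k+1}) \le \Big[1 - \frac{c_0\,\varepsilon}{K_0^2 + C_0k\varepsilon}\Big]V(y_k,z^k).
$$

Note that $\log(1-x)\le -x$ for $x\in[0,1)$. For $\varepsilon$ small enough, we get

$$
\begin{aligned}
\log V(y_N,z^N) &\le \log V(0,0) + \sum_{k=0}^{N-1}\log\Big(1 - \frac{c_0\,\varepsilon}{K_0^2 + C_0k\varepsilon}\Big)\\
&\le C - c\sum_{k=0}^{N-1}\frac{1}{k+\varepsilon^{-1}} \le C - c\int_0^{N-1}\frac{dx}{x+\varepsilon^{-1}}\\
&= C - c\big[\log(N-1+\varepsilon^{-1}) - \log(\varepsilon^{-1})\big] = C - c\log(1+\varepsilon(N-1)).
\end{aligned}
$$

For $c, C$ as above, choose $N$ to be the smallest integer such that

$$
N \ge 1 + \varepsilon^{-1}\big[e^{\frac Cc}\varepsilon^{-\frac2c} - 1\big].
$$

We get

$$
\log V(y_N,z^N) \le C - c\Big[\frac Cc - \frac2c\log(\varepsilon)\Big] = \log(\varepsilon^2),
$$

which obviously proves the theorem.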
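The choice of $N$ in the proof can be checked numerically. The following Python sketch evaluates the smallest admissible $N$ and the bound $C - c\log(1+\varepsilon(N-1))$. The constants `c` and `C` are the generic constants of the proof, whose values are not specified in the text, so the numbers used in any call are purely hypothetical; the function names are ours.

```python
import math

def iterations_needed(eps, c, C):
    """Smallest integer N with N >= 1 + (exp(C/c) * eps**(-2/c) - 1) / eps."""
    bound = 1.0 + (math.exp(C / c) * eps ** (-2.0 / c) - 1.0) / eps
    return math.ceil(bound)

def log_V_bound(N, eps, c, C):
    """Upper bound C - c*log(1 + eps*(N - 1)) on log V(y_N, z^N) from the proof."""
    return C - c * math.log(1.0 + eps * (N - 1))
```

For any admissible input, `log_V_bound(iterations_needed(eps, c, C), eps, c, C)` should not exceed `math.log(eps**2)`, which is the content of the last display of the proof. The resulting $N$ grows polynomially in $\varepsilon^{-1}$, with exponent $1 + 2/c$.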

3 Time Discretization

We now investigate the time discretization of FBSDEs (2.22). Fix $n$ and denote

$$
t_i \triangleq \frac inT;\qquad \Delta t \triangleq \frac Tn;\qquad i = 0,\cdots,n.
$$

3.1 Discretization of the FSDEs

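Given $y_0\in\mathbb{R}$ and $z^0\in L^2(\mathbf{F})$, denote

$$
\begin{aligned}
&X^{n,0}_{t_0} \triangleq x;\qquad Y^{n,0}_{t_0} \triangleq y_0;\\
&X^{n,0}_t \triangleq X^{n,0}_{t_i} + \sigma(t_i,X^{n,0}_{t_i},Y^{n,0}_{t_i})[W_t - W_{t_i}], && t\in(t_i,t_{i+1}];\\
&Y^{n,0}_t \triangleq Y^{n,0}_{t_i} - f(t_i,X^{n,0}_{t_i},Y^{n,0}_{t_i})[t-t_i] + \int_{t_i}^t z^0_s\,dW_s, && t\in(t_i,t_{i+1}].
\end{aligned}
\tag{3.1}
$$

Note that we do not discretize $z^0$ here. For notational simplicity, we denote

$$
X^{n,0}_i \triangleq X^{n,0}_{t_i};\qquad Y^{n,0}_i \triangleq Y^{n,0}_{t_i};\qquad i = 0,\cdots,n.
$$

Define

$$
V_n(y_0,z^0) \triangleq \frac12E\{|Y^{n,0}_n - g(X^{n,0}_n)|^2\}.
\tag{3.2}
$$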
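To make (3.1) and (3.2) concrete, here is a minimal Monte-Carlo sketch in plain Python. It makes one simplifying assumption that the paper does not make: the control $z^0$ is taken in feedback form `z(t, X, Y)` and frozen at $t_i$ on each interval $(t_i,t_{i+1}]$, so that $\int_{t_i}^{t_{i+1}} z^0_s\,dW_s$ is replaced by `z(t_i, X_i, Y_i) * dW`. The function name `estimate_Vn` and all arguments are illustrative.

```python
import math
import random

def estimate_Vn(x, y0, z, sigma, f, g, T, n, n_paths, seed=0):
    """Monte-Carlo estimate of V_n(y0, z) = 0.5 * E|Y_n - g(X_n)|^2 for scheme (3.1).

    Assumption (not from the paper): z is a feedback function z(t, X, Y),
    held constant on each time step.
    """
    rng = random.Random(seed)
    dt = T / n
    total = 0.0
    for _ in range(n_paths):
        X, Y = x, y0
        for i in range(n):
            t = i * dt
            dW = rng.gauss(0.0, math.sqrt(dt))   # Brownian increment over one step
            zi = z(t, X, Y)
            # Tuple assignment: both updates use the values at time t_i.
            X, Y = X + sigma(t, X, Y) * dW, Y - f(t, X, Y) * dt + zi * dW
        total += 0.5 * (Y - g(X)) ** 2
    return total / n_paths
```

As a sanity check, with $\sigma\equiv1$, $f\equiv0$, $g(x)=x$, $y_0=x$ and $z\equiv1$ the two components coincide along every path, so the estimate is zero; this corresponds to the exact solution $Y_t = X_t$, $Z_t = 1$ of (1.4) for these coefficients.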

First we have

Theorem 3.1 Denote

$$
I^{n,0} \triangleq E\Big\{\max_{0\le i\le n}\big[|X_{t_i} - X^{n,0}_i|^2 + |Y_{t_i} - Y^{n,0}_i|^2\big] + \int_0^T|Z_t - z^0_t|^2\,dt\Big\}.
\tag{3.3}
$$

Then

$$
I^{n,0} \le C\,V_n(y_0,z^0) + \frac Cn.
\tag{3.4}
$$

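We note that (see, e.g., Zhang [21]),

$$
\begin{aligned}
&\max_{0\le i\le n-1}E\Big\{\sup_{t_i\le t\le t_{i+1}}\big[|X_t - X_{t_i}|^2 + |Y_t - Y_{t_i}|^2\big]\Big\} \le \frac Cn;\\
&E\Big\{\max_{0\le i\le n-1}\sup_{t_i\le t\le t_{i+1}}\big[|X_t - X_{t_i}|^2 + |Y_t - Y_{t_i}|^2\big]\Big\} \le \frac{C\log n}{n};\\
&E\Big\{\sum_{i=0}^{n-1}\int_{t_i}^{t_{i+1}}\Big|Z_t - \frac{1}{\Delta t}E_i\Big\{\int_{t_i}^{t_{i+1}}Z_s\,ds\Big\}\Big|^2dt\Big\} \le \frac Cn;
\end{aligned}
$$

where $E_i\{\cdot\} \triangleq E\{\cdot\,|\,\mathcal{F}_{t_i}\}$. Then one can easily show the following estimates:

Corollary 3.2 We have

$$
\begin{aligned}
&\max_{0\le i\le n-1}E\Big\{\sup_{t_i\le t\le t_{i+1}}\big[|X_t - X^{n,0}_i|^2 + |Y_t - Y^{n,0}_i|^2\big]\Big\} \le C\,V_n(y_0,z^0) + \frac Cn;\\
&E\Big\{\max_{0\le i\le n-1}\sup_{t_i\le t\le t_{i+1}}\big[|X_t - X^{n,0}_i|^2 + |Y_t - Y^{n,0}_i|^2\big]\Big\} \le C\,V_n(y_0,z^0) + \frac{C\log n}{n};\\
&E\Big\{\sum_{i=0}^{n-1}\int_{t_i}^{t_{i+1}}\Big|Z_t - \frac{1}{\Delta t}E_i\Big\{\int_{t_i}^{t_{i+1}}z^0_s\,ds\Big\}\Big|^2dt\Big\} \le C\,V_n(y_0,z^0) + \frac Cn.
\end{aligned}
$$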

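Proof of Theorem 3.1. Recall (2.1). For $i = 0,\cdots,n$, denote

$$
\Delta X_i \triangleq X^0_{t_i} - X^{n,0}_i;\qquad \Delta Y_i \triangleq Y^0_{t_i} - Y^{n,0}_i.
$$

Then

$$
\begin{aligned}
&\Delta X_0 = 0;\qquad \Delta Y_0 = 0;\\
&\Delta X_{i+1} = \Delta X_i + \int_{t_i}^{t_{i+1}}\Big[[\alpha^1_i\Delta X_i + \beta^1_i\Delta Y_i] + [\sigma(t,X^0_t,Y^0_t) - \sigma(t_i,X^0_{t_i},Y^0_{t_i})]\Big]dW_t;\\
&\Delta Y_{i+1} = \Delta Y_i - \int_{t_i}^{t_{i+1}}\Big[[\alpha^2_i\Delta X_i + \beta^2_i\Delta Y_i] + [f(t,X^0_t,Y^0_t) - f(t_i,X^0_{t_i},Y^0_{t_i})]\Big]dt;
\end{aligned}
$$

where $\alpha^j_i,\beta^j_i\in\mathcal{F}_{t_i}$ are defined in an obvious way and are uniformly bounded. Then

$$
\begin{aligned}
E\{|\Delta X_{i+1}|^2\} &= E\Big\{|\Delta X_i|^2 + \int_{t_i}^{t_{i+1}}\Big[[\alpha^1_i\Delta X_i + \beta^1_i\Delta Y_i] + [\sigma(t,X^0_t,Y^0_t) - \sigma(t_i,X^0_{t_i},Y^0_{t_i})]\Big]^2dt\Big\}\\
&\le E\Big\{|\Delta X_i|^2 + \frac Cn\big[|\Delta X_i|^2 + |\Delta Y_i|^2\big] + C\int_{t_i}^{t_{i+1}}\big[|X^0_t - X^0_{t_i}|^2 + |Y^0_t - Y^0_{t_i}|^2\big]dt\Big\};
\end{aligned}
$$

and, similarly,

$$
E\{|\Delta Y_{i+1}|^2\} \le E\Big\{|\Delta Y_i|^2 + \frac Cn\big[|\Delta X_i|^2 + |\Delta Y_i|^2\big] + C\int_{t_i}^{t_{i+1}}\big[|X^0_t - X^0_{t_i}|^2 + |Y^0_t - Y^0_{t_i}|^2\big]dt\Big\}.
$$

Denote

$$
A_i \triangleq E\{|\Delta X_i|^2 + |\Delta Y_i|^2\}.
$$

Then $A_0 = 0$, and

$$
A_{i+1} \le \Big[1 + \frac Cn\Big]A_i + C\,E\Big\{\int_{t_i}^{t_{i+1}}\big[|X^0_t - X^0_{t_i}|^2 + |Y^0_t - Y^0_{t_i}|^2\big]dt\Big\}.
$$

By the discrete Gronwall inequality we get

$$
\begin{aligned}
\max_{0\le i\le n}A_i &\le C\sum_{i=0}^{n-1}E\Big\{\int_{t_i}^{t_{i+1}}\big[|X^0_t - X^0_{t_i}|^2 + |Y^0_t - Y^0_{t_i}|^2\big]dt\Big\}\\
&\le C\sum_{i=0}^{n-1}E\Big\{\int_{t_i}^{t_{i+1}}\Big[\int_{t_i}^t|\sigma(s,X^0_s,Y^0_s)|^2ds + \Big|\int_{t_i}^tf(s,X^0_s,Y^0_s)\,ds\Big|^2 + \int_{t_i}^t|z^0_s|^2ds\Big]dt\Big\}\\
&\le C\sum_{i=0}^{n-1}E\Big\{|\Delta t|^2 + |\Delta t|^3 + \Delta t\int_{t_i}^{t_{i+1}}|z^0_t|^2dt\Big\}\\
&\le \frac Cn + \frac CnE\Big\{\int_0^T|z^0_t|^2dt\Big\}.
\end{aligned}
\tag{3.5}
$$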
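The discrete Gronwall step used above can be illustrated numerically. The sketch below iterates the worst case of the recursion, $A_{i+1} = (1 + C/n)A_i + b_i$ with $A_0 = 0$, where `b` is a hypothetical list standing in for the forcing terms $C\,E\{\int_{t_i}^{t_{i+1}}[\cdots]dt\}$. Unrolling the recursion gives $A_k = \sum_{j<k}(1+C/n)^{k-1-j}b_j \le e^C\sum_j b_j$, which is the bound behind the first inequality of (3.5). The function name is ours.

```python
import math

def gronwall_iterate(C, n, b):
    """Iterate A_{i+1} = (1 + C/n) * A_i + b[i] from A_0 = 0 and return [A_0, ..., A_n]."""
    A = [0.0]
    for i in range(n):
        A.append((1.0 + C / n) * A[-1] + b[i])
    return A
```

For nonnegative `b`, `max(gronwall_iterate(C, n, b))` stays below `math.exp(C) * sum(b)`, so a forcing of total size $O(1/n)$ yields $\max_i A_i = O(1/n)$.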

Next, note that

$$
\begin{aligned}
\Delta X_i &= \sum_{j=0}^{i-1}\int_{t_j}^{t_{j+1}}\Big[[\alpha^1_j\Delta X_j + \beta^1_j\Delta Y_j] + [\sigma(t,X^0_t,Y^0_t) - \sigma(t_j,X^0_{t_j},Y^0_{t_j})]\Big]dW_t;\\
\Delta Y_i &= -\sum_{j=0}^{i-1}\int_{t_j}^{t_{j+1}}\Big[[\alpha^2_j\Delta X_j + \beta^2_j\Delta Y_j] + [f(t,X^0_t,Y^0_t) - f(t_j,X^0_{t_j},Y^0_{t_j})]\Big]dt.
\end{aligned}
$$

Applying the Burkholder-Davis-Gundy Inequality and by (3.5) we get

$$
E\Big\{\max_{0\le i\le n}\big[|\Delta X_i|^2 + |\Delta Y_i|^2\big]\Big\} \le \frac Cn + \frac CnE\Big\{\int_0^T|z^0_t|^2dt\Big\},
$$

which, together with Theorem 2.1, implies that

$$
I^{n,0} \le C\,V(y_0,z^0) + \frac Cn + \frac CnE\Big\{\int_0^T|z^0_t|^2dt\Big\}.
$$

Finally, note that

$$
V(y_0,z^0) \le C\,V_n(y_0,z^0) + C\,E\{|\Delta X_n|^2 + |\Delta Y_n|^2\} = C\,V_n(y_0,z^0) + C A_n.
$$

We get

$$
I^{n,0} \le C\,V_n(y_0,z^0) + \frac Cn + \frac CnE\Big\{\int_0^T|z^0_t|^2dt\Big\}.
$$

Moreover, noting that $Z_t = u_x(t,X_t)\sigma(t,X_t,Y_t)$ is bounded, we have

$$
E\Big\{\int_0^T|z^0_t|^2dt\Big\} \le C\,E\Big\{\int_0^T|Z_t - z^0_t|^2dt\Big\} + C\,E\Big\{\int_0^T|Z_t|^2dt\Big\} \le C\,E\Big\{\int_0^T|Z_t - z^0_t|^2dt\Big\} + C.
$$

Thus

$$
I^{n,0} \le C\,V_n(y_0,z^0) + \frac Cn + \frac CnE\Big\{\int_0^T|Z_t - z^0_t|^2dt\Big\}.
$$

Choosing $n\ge 2C$ for $C$ as above, by (3.3) we prove (3.4) immediately.

3.2 Discretization of the BSDEs

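Define the adjoint processes (or say, discretize BSDE (2.5)) as follows.

$$
\begin{aligned}
&\bar Y^{n,0}_n \triangleq Y^{n,0}_n - g(X^{n,0}_n);\qquad \tilde Y^{n,0}_n \triangleq g'(X^{n,0}_n)\big[Y^{n,0}_n - g(X^{n,0}_n)\big];\\
&\bar Y^{n,0}_{i-1} = \bar Y^{n,0}_i - f^{n,0}_{y,i-1}\bar Y^{n,0}_{i-1}\Delta t - \sigma^{n,0}_{y,i-1}\int_{t_{i-1}}^{t_i}\tilde Z^{n,0}_t\,dt - \int_{t_{i-1}}^{t_i}\bar Z^{n,0}_t\,dW_t;\\
&\tilde Y^{n,0}_{i-1} = \tilde Y^{n,0}_i + f^{n,0}_{x,i-1}\bar Y^{n,0}_{i-1}\Delta t + \sigma^{n,0}_{x,i-1}\int_{t_{i-1}}^{t_i}\tilde Z^{n,0}_t\,dt - \int_{t_{i-1}}^{t_i}\tilde Z^{n,0}_t\,dW_t;
\end{aligned}
\tag{3.6}
$$

where $\varphi^{n,0}_i \triangleq \varphi(t_i,X^{n,0}_i,Y^{n,0}_i)$ for any function $\varphi$. We note again that $\bar Z^{n,0},\tilde Z^{n,0}$ are not discretized. Denote $\Delta W_{i+1} \triangleq W_{t_{i+1}} - W_{t_i}$, $i = 0,\cdots,n-1$. Following the direction $(\Delta y,\Delta z)$, by (3.1) we have the following gradients:

$$
\begin{aligned}
&\nabla X^{n,0}_0 = 0,\qquad \nabla Y^{n,0}_0 = \Delta y;\\
&\nabla X^{n,0}_{i+1} = \nabla X^{n,0}_i + \big[\sigma^{n,0}_{x,i}\nabla X^{n,0}_i + \sigma^{n,0}_{y,i}\nabla Y^{n,0}_i\big]\Delta W_{i+1};\\
&\nabla Y^{n,0}_{i+1} = \nabla Y^{n,0}_i - \big[f^{n,0}_{x,i}\nabla X^{n,0}_i + f^{n,0}_{y,i}\nabla Y^{n,0}_i\big]\Delta t + \int_{t_i}^{t_{i+1}}\Delta z_t\,dW_t;\\
&\nabla V_n(y_0,z^0) = E\Big\{\big[Y^{n,0}_n - g(X^{n,0}_n)\big]\big[\nabla Y^{n,0}_n - g'(X^{n,0}_n)\nabla X^{n,0}_n\big]\Big\}.
\end{aligned}
$$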

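Then

$$
\begin{aligned}
\nabla V_n(y_0,z^0) &= E\Big\{\bar Y^{n,0}_n\nabla Y^{n,0}_n - \tilde Y^{n,0}_n\nabla X^{n,0}_n\Big\}\\
&= E\Big\{\Big[\bar Y^{n,0}_{n-1} + f^{n,0}_{y,n-1}\bar Y^{n,0}_{n-1}\Delta t + \sigma^{n,0}_{y,n-1}\int_{t_{n-1}}^{t_n}\tilde Z^{n,0}_t\,dt + \int_{t_{n-1}}^{t_n}\bar Z^{n,0}_t\,dW_t\Big]\\
&\qquad\quad\times\Big[\nabla Y^{n,0}_{n-1} - \big[f^{n,0}_{x,n-1}\nabla X^{n,0}_{n-1} + f^{n,0}_{y,n-1}\nabla Y^{n,0}_{n-1}\big]\Delta t + \int_{t_{n-1}}^{t_n}\Delta z_t\,dW_t\Big]\\
&\qquad - \Big[\tilde Y^{n,0}_{n-1} - f^{n,0}_{x,n-1}\bar Y^{n,0}_{n-1}\Delta t - \sigma^{n,0}_{x,n-1}\int_{t_{n-1}}^{t_n}\tilde Z^{n,0}_t\,dt + \int_{t_{n-1}}^{t_n}\tilde Z^{n,0}_t\,dW_t\Big]\\
&\qquad\quad\times\Big[\nabla X^{n,0}_{n-1} + \big[\sigma^{n,0}_{x,n-1}\nabla X^{n,0}_{n-1} + \sigma^{n,0}_{y,n-1}\nabla Y^{n,0}_{n-1}\big]\Delta W_n\Big]\Big\}\\
&= E\Big\{\bar Y^{n,0}_{n-1}\nabla Y^{n,0}_{n-1} - \tilde Y^{n,0}_{n-1}\nabla X^{n,0}_{n-1} + \int_{t_{n-1}}^{t_n}\bar Z^{n,0}_t\Delta z_t\,dt + I^{n,0}_n\Big\},
\end{aligned}
$$

where

$$
\begin{aligned}
I^{n,0}_i &\triangleq \sigma^{n,0}_{y,i-1}\int_{t_{i-1}}^{t_i}\tilde Z^{n,0}_t\,dt\int_{t_{i-1}}^{t_i}\Delta z_t\,dW_t\\
&\quad + \sigma^{n,0}_{x,i-1}\big[\sigma^{n,0}_{x,i-1}\nabla X^{n,0}_{i-1} + \sigma^{n,0}_{y,i-1}\nabla Y^{n,0}_{i-1}\big]\Delta W_i\int_{t_{i-1}}^{t_i}\tilde Z^{n,0}_t\,dt\\
&\quad - \sigma^{n,0}_{y,i-1}\big[f^{n,0}_{x,i-1}\nabla X^{n,0}_{i-1} + f^{n,0}_{y,i-1}\nabla Y^{n,0}_{i-1}\big]\Delta t\int_{t_{i-1}}^{t_i}\tilde Z^{n,0}_t\,dt\\
&\quad - f^{n,0}_{y,i-1}\bar Y^{n,0}_{i-1}\big[f^{n,0}_{x,i-1}\nabla X^{n,0}_{i-1} + f^{n,0}_{y,i-1}\nabla Y^{n,0}_{i-1}\big]|\Delta t|^2.
\end{aligned}
\tag{3.7}
$$

Repeating the same arguments and by induction we get

$$
\nabla V_n(y_0,z^0) = E\Big\{\bar Y^{n,0}_0\Delta y + \int_0^T\bar Z^{n,0}_t\Delta z_t\,dt + \sum_{i=1}^nI^{n,0}_i\Big\}.
\tag{3.8}
$$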

From now on, we choose the following "almost" steepest descent direction:

$$
\Delta y \triangleq -\bar Y^{n,0}_0;\qquad \int_{t_{i-1}}^{t_i}\Delta z_t\,dW_t \triangleq E_{i-1}\{\bar Y^{n,0}_i\} - \bar Y^{n,0}_i.
\tag{3.9}
$$

We note that ∆z is well defined here. Then we have

Lemma 3.3 Assume (3.9). Then for $n$ large, we have

$$
\nabla V_n(y_0,z^0) \le -c\,V_n(y_0,z^0).
$$

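Proof. We proceed in several steps.

Step 1. We show that

$$
E\Big\{\max_{0\le i\le n}\big[|\bar Y^{n,0}_i|^2 + |\tilde Y^{n,0}_i|^2\big] + \int_0^T\big[|\bar Z^{n,0}_t|^2 + |\tilde Z^{n,0}_t|^2\big]dt\Big\} \le C\,V_n(y_0,z^0).
\tag{3.10}
$$

In fact, for any $i$,

$$
\begin{aligned}
&E\Big\{|\bar Y^{n,0}_{i-1}|^2 + |\tilde Y^{n,0}_{i-1}|^2 + \int_{t_{i-1}}^{t_i}\big[|\bar Z^{n,0}_t|^2 + |\tilde Z^{n,0}_t|^2\big]dt\Big\}\\
&= E\Big\{\Big|\bar Y^{n,0}_i - f^{n,0}_{y,i-1}\bar Y^{n,0}_{i-1}\Delta t - \sigma^{n,0}_{y,i-1}\int_{t_{i-1}}^{t_i}\tilde Z^{n,0}_t\,dt\Big|^2 + \Big|\tilde Y^{n,0}_i + f^{n,0}_{x,i-1}\bar Y^{n,0}_{i-1}\Delta t + \sigma^{n,0}_{x,i-1}\int_{t_{i-1}}^{t_i}\tilde Z^{n,0}_t\,dt\Big|^2\Big\}\\
&\le \Big[1 + \frac Cn\Big]E\big\{|\bar Y^{n,0}_i|^2 + |\tilde Y^{n,0}_i|^2\big\} + \frac CnE\{|\bar Y^{n,0}_{i-1}|^2\} + \frac12E\Big\{\int_{t_{i-1}}^{t_i}\big[|\bar Z^{n,0}_t|^2 + |\tilde Z^{n,0}_t|^2\big]dt\Big\}.
\end{aligned}
$$

Then

$$
E\Big\{|\bar Y^{n,0}_{i-1}|^2 + |\tilde Y^{n,0}_{i-1}|^2 + \frac12\int_{t_{i-1}}^{t_i}\big[|\bar Z^{n,0}_t|^2 + |\tilde Z^{n,0}_t|^2\big]dt\Big\} \le \Big[1 + \frac Cn\Big]E\big\{|\bar Y^{n,0}_i|^2 + |\tilde Y^{n,0}_i|^2\big\}.
$$

By standard arguments we get

$$
\max_{0\le i\le n}E\big\{|\bar Y^{n,0}_i|^2 + |\tilde Y^{n,0}_i|^2\big\} + E\Big\{\int_0^T\big[|\bar Z^{n,0}_t|^2 + |\tilde Z^{n,0}_t|^2\big]dt\Big\} \le C\,E\big\{|\bar Y^{n,0}_n|^2 + |\tilde Y^{n,0}_n|^2\big\} \le C\,V_n(y_0,z^0).
$$

Then (3.10) follows from the Burkholder-Davis-Gundy Inequality.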

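Step 2. We show that

$$
V_n(y_0,z^0) \le C\,E\Big\{|\bar Y^{n,0}_0|^2 + \int_0^T|\bar Z^{n,0}_t|^2\,dt\Big\}.
\tag{3.11}
$$

In fact, for $t\in(t_i,t_{i+1}]$, let

$$
\begin{aligned}
\bar Y^{n,0}_t &\triangleq \bar Y^{n,0}_i + f^{n,0}_{y,i}\bar Y^{n,0}_i[t-t_i] + \sigma^{n,0}_{y,i}\int_{t_i}^t\tilde Z^{n,0}_s\,ds + \int_{t_i}^t\bar Z^{n,0}_s\,dW_s;\\
\tilde Y^{n,0}_t &\triangleq \tilde Y^{n,0}_i - f^{n,0}_{x,i}\bar Y^{n,0}_i[t-t_i] - \sigma^{n,0}_{x,i}\int_{t_i}^t\tilde Z^{n,0}_s\,ds + \int_{t_i}^t\tilde Z^{n,0}_s\,dW_s.
\end{aligned}
$$

Denote $\pi(t) \triangleq t_i$ for $t\in[t_i,t_{i+1})$. Then one can write them as

$$
\begin{aligned}
\bar Y^{n,0}_t &= \bar Y^{n,0}_0 + \int_0^t\big[f^{n,0}_y(\pi(s))\bar Y^{n,0}_s + \sigma^{n,0}_y(\pi(s))\tilde Z^{n,0}_s\big]ds + \int_0^t\bar Z^{n,0}_s\,dW_s\\
&\quad + \int_0^tf^{n,0}_y(\pi(s))\big[\bar Y^{n,0}_{\pi(s)} - \bar Y^{n,0}_s\big]ds;\\
\tilde Y^{n,0}_t &= g'(X^{n,0}_n)\bar Y^{n,0}_T + \int_t^T\big[f^{n,0}_x(\pi(s))\bar Y^{n,0}_s + \sigma^{n,0}_x(\pi(s))\tilde Z^{n,0}_s\big]ds - \int_t^T\tilde Z^{n,0}_s\,dW_s\\
&\quad + \int_t^Tf^{n,0}_x(\pi(s))\big[\bar Y^{n,0}_{\pi(s)} - \bar Y^{n,0}_s\big]ds.
\end{aligned}
$$

Applying Theorem 1.2, we get

$$
\begin{aligned}
V_n(y_0,z^0) &= \frac12E\{|\bar Y^{n,0}_n|^2\}\\
&\le C\,E\Big\{|\bar Y^{n,0}_0|^2 + \int_0^T|\bar Z^{n,0}_t|^2\,dt + \int_0^T|\bar Y^{n,0}_t - \bar Y^{n,0}_{\pi(t)}|^2\,dt\Big\}\\
&= C\,E\Big\{|\bar Y^{n,0}_0|^2 + \int_0^T|\bar Z^{n,0}_t|^2\,dt + \sum_{i=0}^{n-1}\int_{t_i}^{t_{i+1}}|\bar Y^{n,0}_t - \bar Y^{n,0}_{t_i}|^2\,dt\Big\}\\
&\le C\,E\Big\{|\bar Y^{n,0}_0|^2 + \int_0^T|\bar Z^{n,0}_t|^2\,dt\Big\} + C\Delta t\sum_{i=0}^{n-1}E\Big\{|\bar Y^{n,0}_i|^2|\Delta t|^2 + \Delta t\int_{t_i}^{t_{i+1}}|\tilde Z^{n,0}_t|^2\,dt + \int_{t_i}^{t_{i+1}}|\bar Z^{n,0}_t|^2\,dt\Big\}\\
&\le C\,E\Big\{|\bar Y^{n,0}_0|^2 + \int_0^T|\bar Z^{n,0}_t|^2\,dt\Big\} + \frac CnV_n(y_0,z^0),
\end{aligned}
$$

thanks to (3.10). Choosing $n\ge 2C$, we get (3.11) immediately.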

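Step 3. By (3.9) we have

$$
\begin{aligned}
E\Big\{\int_0^T|\Delta z_t + \bar Z^{n,0}_t|^2\,dt\Big\} &= \sum_{i=0}^{n-1}E\Big\{\Big|\int_{t_i}^{t_{i+1}}\Delta z_t\,dW_t + \int_{t_i}^{t_{i+1}}\bar Z^{n,0}_t\,dW_t\Big|^2\Big\}\\
&= \sum_{i=0}^{n-1}E\Big\{\Big|\bar Y^{n,0}_i + f^{n,0}_{y,i}\bar Y^{n,0}_i\Delta t + \sigma^{n,0}_{y,i}E_i\Big\{\int_{t_i}^{t_{i+1}}\tilde Z^{n,0}_t\,dt\Big\} - \bar Y^{n,0}_{i+1} + \int_{t_i}^{t_{i+1}}\bar Z^{n,0}_t\,dW_t\Big|^2\Big\}\\
&= \sum_{i=0}^{n-1}E\Big\{|\sigma^{n,0}_{y,i}|^2\Big|\int_{t_i}^{t_{i+1}}\tilde Z^{n,0}_t\,dt - E_i\Big\{\int_{t_i}^{t_{i+1}}\tilde Z^{n,0}_t\,dt\Big\}\Big|^2\Big\}\\
&\le C\Delta t\sum_{i=0}^{n-1}E\Big\{\int_{t_i}^{t_{i+1}}|\tilde Z^{n,0}_t|^2\,dt\Big\} \le \frac CnV_n(y_0,z^0),
\end{aligned}
\tag{3.12}
$$

where we used (3.10) for the last inequality. Then,

$$
\begin{aligned}
&\Big|E\Big\{\bar Y^{n,0}_0\Delta y + \int_0^T\bar Z^{n,0}_t\Delta z_t\,dt\Big\} + E\Big\{|\bar Y^{n,0}_0|^2 + \int_0^T|\bar Z^{n,0}_t|^2\,dt\Big\}\Big|\\
&= \Big|E\Big\{\int_0^T\bar Z^{n,0}_t\big[\Delta z_t + \bar Z^{n,0}_t\big]dt\Big\}\Big|\\
&\le C\,E^{\frac12}\Big\{\int_0^T|\bar Z^{n,0}_t|^2\,dt\Big\}E^{\frac12}\Big\{\int_0^T|\Delta z_t + \bar Z^{n,0}_t|^2\,dt\Big\}\\
&\le C\sqrt{V_n(y_0,z^0)}\sqrt{\frac CnV_n(y_0,z^0)} = \frac{C}{\sqrt n}V_n(y_0,z^0).
\end{aligned}
$$

Assume $n$ is large. By (3.11) we get

$$
E\Big\{\bar Y^{n,0}_0\Delta y + \int_0^T\bar Z^{n,0}_t\Delta z_t\,dt\Big\} \le -\frac12E\Big\{|\bar Y^{n,0}_0|^2 + \int_0^T|\bar Z^{n,0}_t|^2\,dt\Big\} \le -c\,V_n(y_0,z^0).
\tag{3.13}
$$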

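Step 4. It remains to estimate $I^{n,0}_i$. First, by standard arguments and recalling (3.9), (3.12), and (3.10), we have

$$
\begin{aligned}
E\Big\{\max_{0\le i\le n}\big[|\nabla X^{n,0}_i|^2 + |\nabla Y^{n,0}_i|^2\big]\Big\} &\le C\,E\Big\{|\Delta y|^2 + \int_0^T|\Delta z_t|^2\,dt\Big\}\\
&\le C\,E\Big\{|\bar Y^{n,0}_0|^2 + \int_0^T\big[|\Delta z_t + \bar Z^{n,0}_t|^2 + |\bar Z^{n,0}_t|^2\big]dt\Big\} \le C\,V_n(y_0,z^0).
\end{aligned}
\tag{3.14}
$$

Then

$$
\begin{aligned}
\Big|\sum_{i=1}^nE\{I^{n,0}_i\}\Big| &\le \frac{C}{\sqrt n}\sum_{i=0}^{n-1}E\Big\{\int_{t_i}^{t_{i+1}}|\tilde Z^{n,0}_t|^2\,dt + \int_{t_i}^{t_{i+1}}|\Delta z_t|^2\,dt\\
&\qquad\qquad + \big[|\nabla X^{n,0}_i|^2 + |\nabla Y^{n,0}_i|^2\big]\big[E_i\{|\Delta W_{i+1}|^2\} + |\Delta t|^2\big] + |\bar Y^{n,0}_i|^2\Delta t\Big\}\\
&\le \frac{C}{\sqrt n}E\Big\{\int_0^T\big[|\tilde Z^{n,0}_t|^2 + |\bar Z^{n,0}_t|^2 + |\Delta z_t + \bar Z^{n,0}_t|^2\big]dt\Big\}\\
&\quad + \frac{C}{\sqrt n}\max_{0\le i\le n}E\big\{|\nabla X^{n,0}_i|^2 + |\nabla Y^{n,0}_i|^2 + |\bar Y^{n,0}_i|^2\big\}\\
&\le \frac{C}{\sqrt n}V_n(y_0,z^0).
\end{aligned}
\tag{3.15}
$$

Recall (3.8). Combining the above inequality with (3.13) we prove the lemma for large $n$.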

3.3 Iterative Modifications

We now fix a desired error level $\varepsilon$. In light of Theorem 3.1, we set $n = \varepsilon^{-2}$. So it suffices to find $(y,z)$ such that $V_n(y,z)\le\varepsilon^2$. As in §2.3, we assume

$$
V_n(y_0,z^0) > \varepsilon^2;\qquad E\big\{|Y^{n,0}_n - g(X^{n,0}_n)|^4\big\} \le K_0^4.
\tag{3.16}
$$

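Lemma 3.4 Assume (3.16). There exist constants $C_0, c_0, c_1 > 0$, which are independent of $K_0$ and $\varepsilon$, such that

$$
\Delta V_n(y_0, z^0) \triangleq V_n(y_1, z^1) - V_n(y_0, z^0) \le -\frac{c_0\varepsilon}{K_0^2} V_n(y_0, z^0),
\tag{3.17}
$$

and

$$
E\{|Y_n^{n,1} - g(X_n^{n,1})|^4\} \le K_1^4 \triangleq K_0^4 + 2C_0\varepsilon K_0^2,
\tag{3.18}
$$

where, recalling (3.9) and denoting $\lambda \triangleq \frac{c_1\varepsilon}{K_0^2}$,

$$
y_1 \triangleq y_0 + \lambda\Delta y; \qquad z_t^1 \triangleq z_t^0 + \lambda\Delta z_t;
\tag{3.19}
$$

and, for $0 \le \theta \le 1$,

$$
\begin{aligned}
X_0^{n,\theta} &\triangleq x; \qquad Y_0^{n,\theta} \triangleq y_0 + \theta\lambda\Delta y; \\
X_{i+1}^{n,\theta} &\triangleq X_i^{n,\theta} + \sigma(t_i, X_i^{n,\theta}, Y_i^{n,\theta})\Delta W_{i+1}; \\
Y_{i+1}^{n,\theta} &\triangleq Y_i^{n,\theta} - f(t_i, X_i^{n,\theta}, Y_i^{n,\theta})\Delta t + \int_{t_i}^{t_{i+1}} [z_t^0 + \theta\lambda\Delta z_t]\,dW_t.
\end{aligned}
\tag{3.20}
$$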

Proof. We shall follow the proof for Lemma 2.4.

Step 1. For 0 ≤ θ ≤ 1, denote

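$$
\begin{aligned}
\bar Y_n^{n,\theta} &\triangleq Y_n^{n,\theta} - g(X_n^{n,\theta}); \qquad \tilde Y_n^{n,\theta} \triangleq g'(X_n^{n,\theta})[Y_n^{n,\theta} - g(X_n^{n,\theta})]; \\
\bar Y_{i-1}^{n,\theta} &= \bar Y_i^{n,\theta} - f_{y,i-1}^{n,\theta}\bar Y_{i-1}^{n,\theta}\Delta t - \sigma_{y,i-1}^{n,\theta}\int_{t_{i-1}}^{t_i} \tilde Z_t^{n,\theta}\,dt - \int_{t_{i-1}}^{t_i} \bar Z_t^{n,\theta}\,dW_t; \\
\tilde Y_{i-1}^{n,\theta} &= \tilde Y_i^{n,\theta} + f_{x,i-1}^{n,\theta}\bar Y_{i-1}^{n,\theta}\Delta t + \sigma_{x,i-1}^{n,\theta}\int_{t_{i-1}}^{t_i} \tilde Z_t^{n,\theta}\,dt - \int_{t_{i-1}}^{t_i} \tilde Z_t^{n,\theta}\,dW_t,
\end{aligned}
$$

and

$$
\begin{aligned}
\nabla X_0^{n,\theta} &= 0, \qquad \nabla Y_0^{n,\theta} = \Delta y; \\
\nabla X_{i+1}^{n,\theta} &= \nabla X_i^{n,\theta} + \big[\sigma_{x,i}^{n,\theta}\nabla X_i^{n,\theta} + \sigma_{y,i}^{n,\theta}\nabla Y_i^{n,\theta}\big]\Delta W_{i+1}; \\
\nabla Y_{i+1}^{n,\theta} &= \nabla Y_i^{n,\theta} - \big[f_{x,i}^{n,\theta}\nabla X_i^{n,\theta} + f_{y,i}^{n,\theta}\nabla Y_i^{n,\theta}\big]\Delta t + \int_{t_i}^{t_{i+1}} \Delta z_t\,dW_t;
\end{aligned}
$$

where $\varphi_i^{n,\theta} \triangleq \varphi(t_i, X_i^{n,\theta}, Y_i^{n,\theta})$ for any function $\varphi$. Then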

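$$
\begin{aligned}
\Delta V_n(y_0, z^0) &= \frac12 E\Big\{[Y_n^{n,1} - g(X_n^{n,1})]^2 - [Y_n^{n,0} - g(X_n^{n,0})]^2\Big\} \\
&= \lambda\int_0^1 E\Big\{[Y_n^{n,\theta} - g(X_n^{n,\theta})][\nabla Y_n^{n,\theta} - g'(X_n^{n,\theta})\nabla X_n^{n,\theta}]\Big\}\,d\theta.
\end{aligned}
$$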

By (3.8) we have

$$
\Delta V_n(y_0, z^0) = \lambda\int_0^1 E\Big\{\bar Y_0^{n,\theta}\Delta y + \int_0^T \bar Z_t^{n,\theta}\Delta z_t\,dt + \sum_{i=1}^{n} I_i^{n,\theta}\Big\}\,d\theta;
\tag{3.21}
$$

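where

$$
\begin{aligned}
I_i^{n,\theta} \triangleq{}& \sigma_{y,i-1}^{n,\theta}\int_{t_{i-1}}^{t_i} \tilde Z_t^{n,\theta}\,dt\int_{t_{i-1}}^{t_i} \Delta z_t\,dW_t \\
&+ \sigma_{x,i-1}^{n,\theta}\big[\sigma_{x,i-1}^{n,\theta}\nabla X_{i-1}^{n,\theta} + \sigma_{y,i-1}^{n,\theta}\nabla Y_{i-1}^{n,\theta}\big]\Delta W_i\int_{t_{i-1}}^{t_i} \tilde Z_t^{n,\theta}\,dt \\
&- \sigma_{y,i-1}^{n,\theta}\big[f_{x,i-1}^{n,\theta}\nabla X_{i-1}^{n,\theta} + f_{y,i-1}^{n,\theta}\nabla Y_{i-1}^{n,\theta}\big]\Delta t\int_{t_{i-1}}^{t_i} \tilde Z_t^{n,\theta}\,dt \\
&- f_{y,i-1}^{n,\theta}\bar Y_{i-1}^{n,\theta}\big[f_{x,i-1}^{n,\theta}\nabla X_{i-1}^{n,\theta} + f_{y,i-1}^{n,\theta}\nabla Y_{i-1}^{n,\theta}\big]|\Delta t|^2.
\end{aligned}
\tag{3.22}
$$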
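To see where the remainder $I_i^{n,\theta}$ comes from, the following is a formal sketch of the discrete product rule behind (3.21) and (3.22), using only the recursions of Step 1. The shorthand $\delta(\cdot)$ for one-step increments and the symbol $A_i$ are introduced here for illustration only; superscripts $(n,\theta)$ and the coefficient index $i-1$ are suppressed, and all integrals are over $[t_{i-1}, t_i]$.

```latex
\begin{aligned}
\delta\bar Y &= f_y\bar Y_{i-1}\Delta t + \sigma_y\!\int\tilde Z_t\,dt + \int\bar Z_t\,dW_t, &
\delta\tilde Y &= -f_x\bar Y_{i-1}\Delta t - \sigma_x\!\int\tilde Z_t\,dt + \int\tilde Z_t\,dW_t,\\
\delta\nabla Y &= -[f_x\nabla X_{i-1} + f_y\nabla Y_{i-1}]\Delta t + \int\Delta z_t\,dW_t, &
\delta\nabla X &= [\sigma_x\nabla X_{i-1} + \sigma_y\nabla Y_{i-1}]\Delta W_i .
\end{aligned}

% Product rule for A_i := \bar Y_i\nabla Y_i - \tilde Y_i\nabla X_i
A_i - A_{i-1}
 = \bar Y_{i-1}\,\delta\nabla Y + \nabla Y_{i-1}\,\delta\bar Y + \delta\bar Y\,\delta\nabla Y
 - \tilde Y_{i-1}\,\delta\nabla X - \nabla X_{i-1}\,\delta\tilde Y - \delta\tilde Y\,\delta\nabla X .

% After taking expectations, the first-order drift terms cancel, the martingale
% cross terms vanish, and E{ \int\tilde Z dW \cdot \Delta W_i } cancels the
% \sigma-weighted E{ \int\tilde Z dt } terms, leaving
E\{A_i - A_{i-1}\} = E\Big\{\int_{t_{i-1}}^{t_i}\bar Z_t\,\Delta z_t\,dt + I_i\Big\}.
```

Summing over $i = 1, \dots, n$ with $A_0 = \bar Y_0^{n,\theta}\Delta y$ (since $\nabla X_0^{n,\theta} = 0$) and $A_n = [Y_n^{n,\theta} - g(X_n^{n,\theta})][\nabla Y_n^{n,\theta} - g'(X_n^{n,\theta})\nabla X_n^{n,\theta}]$ recovers the integrand in (3.21).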

Step 2. First, similarly to (3.10) and (3.12) one can show that

$$
E\Big\{\max_{0\le i\le n} [|\bar Y_i^{n,0}|^4 + |\tilde Y_i^{n,0}|^4] + \Big(\int_0^T [|\bar Z_t^{n,0}|^2 + |\tilde Z_t^{n,0}|^2 + |\Delta z_t|^2]\,dt\Big)^2\Big\} \le CK_0^4.
\tag{3.23}
$$

Denote

$$
\Delta X_i^{n,\theta} \triangleq X_i^{n,\theta} - X_i^{n,0}; \qquad \Delta Y_i^{n,\theta} \triangleq Y_i^{n,\theta} - Y_i^{n,0}.
$$

Then

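$$
\begin{aligned}
\Delta X_0^{n,\theta} &= 0; \qquad \Delta Y_0^{n,\theta} = \theta\lambda\Delta y; \\
\Delta X_{i+1}^{n,\theta} &= \Delta X_i^{n,\theta} + [\alpha_i^{1,\theta}\Delta X_i^{n,\theta} + \beta_i^{1,\theta}\Delta Y_i^{n,\theta}]\Delta W_{i+1}; \\
\Delta Y_{i+1}^{n,\theta} &= \Delta Y_i^{n,\theta} - [\alpha_i^{2,\theta}\Delta X_i^{n,\theta} + \beta_i^{2,\theta}\Delta Y_i^{n,\theta}]\Delta t + \theta\lambda\int_{t_i}^{t_{i+1}} \Delta z_t\,dW_t;
\end{aligned}
$$

where $\alpha_i^{j,\theta}, \beta_i^{j,\theta}$ are defined in an obvious way and are bounded. Thus, by (3.23),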

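$$
E\Big\{\max_{0\le i\le n} [|\Delta X_i^{n,\theta}|^4 + |\Delta Y_i^{n,\theta}|^4]\Big\} \le C\theta^4\lambda^4 E\Big\{|\Delta y|^4 + \Big(\int_0^T |\Delta z_t|^2\,dt\Big)^2\Big\} \le CK_0^4\lambda^4.
\tag{3.24}
$$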

Therefore, similarly to (2.18) one can show that

$$
E\Big\{|Y_n^{n,\theta} - g(X_n^{n,\theta})|^4\Big\} \le [1 + C\lambda]K_0^4.
\tag{3.25}
$$

Step 3. Denote

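$$
\begin{aligned}
\Delta\bar Y_i^{n,\theta} &\triangleq \bar Y_i^{n,\theta} - \bar Y_i^{n,0}; &\qquad \Delta\bar Z_t^{n,\theta} &\triangleq \bar Z_t^{n,\theta} - \bar Z_t^{n,0}; \\
\Delta\tilde Y_i^{n,\theta} &\triangleq \tilde Y_i^{n,\theta} - \tilde Y_i^{n,0}; &\qquad \Delta\tilde Z_t^{n,\theta} &\triangleq \tilde Z_t^{n,\theta} - \tilde Z_t^{n,0}.
\end{aligned}
$$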

Then

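$$
\begin{aligned}
\Delta\bar Y_n^{n,\theta} &= \Delta Y_n^{n,\theta} - \alpha_n^{n,\theta}\Delta X_n^{n,\theta}; \\
\Delta\tilde Y_n^{n,\theta} &= g'(X_n^{n,\theta})[\Delta Y_n^{n,\theta} - \alpha_n^{n,\theta}\Delta X_n^{n,\theta}] + [Y_n^{n,0} - g(X_n^{n,0})]\Delta g'(n, \theta); \\
\Delta\bar Y_{i-1}^{n,\theta} &= \Delta\bar Y_i^{n,\theta} - f_{y,i-1}^{n,\theta}\Delta\bar Y_{i-1}^{n,\theta}\Delta t - \sigma_{y,i-1}^{n,\theta}\int_{t_{i-1}}^{t_i} \Delta\tilde Z_t^{n,\theta}\,dt - \int_{t_{i-1}}^{t_i} \Delta\bar Z_t^{n,\theta}\,dW_t \\
&\quad - \bar Y_{i-1}^{n,0}\Delta f_{y,i-1}^{n,\theta}\Delta t - \int_{t_{i-1}}^{t_i} \tilde Z_t^{n,0}\,dt\,\Delta\sigma_{y,i-1}^{n,\theta}; \\
\Delta\tilde Y_{i-1}^{n,\theta} &= \Delta\tilde Y_i^{n,\theta} + f_{x,i-1}^{n,\theta}\Delta\bar Y_{i-1}^{n,\theta}\Delta t + \sigma_{x,i-1}^{n,\theta}\int_{t_{i-1}}^{t_i} \Delta\tilde Z_t^{n,\theta}\,dt - \int_{t_{i-1}}^{t_i} \Delta\tilde Z_t^{n,\theta}\,dW_t \\
&\quad + \bar Y_{i-1}^{n,0}\Delta f_{x,i-1}^{n,\theta}\Delta t + \int_{t_{i-1}}^{t_i} \tilde Z_t^{n,0}\,dt\,\Delta\sigma_{x,i-1}^{n,\theta},
\end{aligned}
$$

where

$$
\alpha_n^{n,\theta} \triangleq \frac{g(X_n^{n,\theta}) - g(X_n^{n,0})}{\Delta X_n^{n,\theta}}; \qquad
\Delta\varphi_i^{n,\theta} \triangleq \varphi(t_i, X_i^{n,\theta}, Y_i^{n,\theta}) - \varphi(t_i, X_i^{n,0}, Y_i^{n,0});
$$

and all other terms are defined in a similar way. By standard arguments one has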

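$$
\begin{aligned}
&E\Big\{\max_{0\le i\le n} [|\Delta\bar Y_i^{n,\theta}|^2 + |\Delta\tilde Y_i^{n,\theta}|^2] + \int_0^T [|\Delta\bar Z_t^{n,\theta}|^2 + |\Delta\tilde Z_t^{n,\theta}|^2]\,dt\Big\} \\
&\le CE\Big\{|\Delta Y_n^{n,\theta}|^2 + |\Delta X_n^{n,\theta}|^2 + |Y_n^{n,0} - g(X_n^{n,0})|^2|\Delta g'(n, \theta)|^2 \\
&\qquad + \sum_{i=0}^{n-1}\Big[|\bar Y_i^{n,0}|^2[|\Delta f_{x,i}^{n,\theta}|^2 + |\Delta f_{y,i}^{n,\theta}|^2]\Delta t + \int_{t_i}^{t_{i+1}} |\tilde Z_t^{n,0}|^2\,dt\,[|\Delta\sigma_{x,i}^{n,\theta}|^2 + |\Delta\sigma_{y,i}^{n,\theta}|^2]\Big]\Big\} \\
&\le CE\Big\{|\Delta Y_n^{n,\theta}|^2 + |\Delta X_n^{n,\theta}|^2 + |Y_n^{n,0} - g(X_n^{n,0})|^2|\Delta X_n^{n,\theta}|^2 \\
&\qquad + \sum_{i=0}^{n-1}\Big[|\bar Y_i^{n,0}|^2\Delta t + \int_{t_i}^{t_{i+1}} |\tilde Z_t^{n,0}|^2\,dt\Big][|\Delta X_i^{n,\theta}|^2 + |\Delta Y_i^{n,\theta}|^2]\Big\} \\
&\le CE^{\frac12}\Big\{\max_{0\le i\le n} [|\Delta X_i^{n,\theta}|^4 + |\Delta Y_i^{n,\theta}|^4]\Big\} \\
&\qquad \times E^{\frac12}\Big\{1 + |Y_n^{n,0} - g(X_n^{n,0})|^4 + \max_{0\le i\le n} |\bar Y_i^{n,0}|^4 + \Big(\int_0^T |\tilde Z_t^{n,0}|^2\,dt\Big)^2\Big\} \\
&\le CK_0^2\lambda^2[1 + K_0^2] \le CK_0^4\lambda^2,
\end{aligned}
$$

thanks to (3.24), (3.16), and (3.23). In particular,

$$
E\Big\{|\Delta\bar Y_0^{n,\theta}|^2 + \int_0^T |\Delta\bar Z_t^{n,\theta}|^2\,dt\Big\} \le CK_0^4\lambda^2.
\tag{3.26}
$$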

Step 4. Recall (3.12). Note that

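$$
\begin{aligned}
&\Big|E\Big\{\bar Y_0^{n,\theta}\Delta y + \int_0^T \bar Z_t^{n,\theta}\Delta z_t\,dt\Big\} + E\Big\{|\bar Y_0^{n,0}|^2 + \int_0^T |\bar Z_t^{n,0}|^2\,dt\Big\}\Big| \\
&\le E\Big\{|\Delta\bar Y_0^{n,\theta}\bar Y_0^{n,0}| + \int_0^T \Big[|\Delta\bar Z_t^{n,\theta}||\bar Z_t^{n,0}| + (|\Delta\bar Z_t^{n,\theta}| + |\bar Z_t^{n,0}|)|\Delta z_t + \bar Z_t^{n,0}|\Big]\,dt\Big\} \\
&\le CE\Big\{|\Delta\bar Y_0^{n,\theta}|^2 + \int_0^T [|\Delta\bar Z_t^{n,\theta}|^2 + |\Delta z_t + \bar Z_t^{n,0}|^2]\,dt\Big\} + \frac12 E\Big\{|\bar Y_0^{n,0}|^2 + \int_0^T |\bar Z_t^{n,0}|^2\,dt\Big\} \\
&\le CK_0^4\lambda^2 + \frac{C}{n} V_n(y_0, z^0) + \frac12 E\Big\{|\bar Y_0^{n,0}|^2 + \int_0^T |\bar Z_t^{n,0}|^2\,dt\Big\}.
\end{aligned}
$$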

Then

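$$
E\Big\{\bar Y_0^{n,\theta}\Delta y + \int_0^T \bar Z_t^{n,\theta}\Delta z_t\,dt\Big\} \le -\frac12 E\Big\{|\bar Y_0^{n,0}|^2 + \int_0^T |\bar Z_t^{n,0}|^2\,dt\Big\} + CK_0^4\lambda^2 + \frac{C}{n} V_n(y_0, z^0).
$$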

Choose n large and by (3.11) we get

$$
E\Big\{\bar Y_0^{n,\theta}\Delta y + \int_0^T \bar Z_t^{n,\theta}\Delta z_t\,dt\Big\} \le -cV_n(y_0, z^0) + CK_0^4\lambda^2.
\tag{3.27}
$$

Moreover, similarly to (3.14) and (3.15) we have

$$
E\Big\{\max_{0\le i\le n} [|\nabla X_i^{n,\theta}|^2 + |\nabla Y_i^{n,\theta}|^2]\Big\} \le CV_n(y_0, z^0); \qquad
\Big|\sum_{i=1}^{n} E\{I_i^{n,\theta}\}\Big| \le \frac{C}{\sqrt n} V_n(y_0, z^0).
$$

Then by (3.27) and choosing n large, we get

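$$
E\Big\{\bar Y_0^{n,\theta}\Delta y + \int_0^T \bar Z_t^{n,\theta}\Delta z_t\,dt + \sum_{i=1}^{n} I_i^{n,\theta}\Big\} \le -cV_n(y_0, z^0) + CK_0^4\lambda^2.
$$

Choose $c_1 \triangleq \sqrt{\frac{c}{2C}}$ for the constants $c, C$ as above, and $\lambda \triangleq \frac{c_1\varepsilon}{K_0^2}$. Then by (3.8) and (3.16), we have

$$
\Delta V_n(y_0, z^0) \le \lambda\Big[-\frac{c}{2} V_n(y_0, z^0)\Big] = -\frac{c_0\varepsilon}{K_0^2} V_n(y_0, z^0).
$$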

Finally, plug λ into (3.25) and let θ = 1 to get (3.18) for some C0 .
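For the reader's convenience, here is a short worked version of the step-size choice above, assuming only the last displayed bound, the first condition in (3.16), and the stated values of $c_1$ and $\lambda$. The explicit value of $c_0$ at the end is one admissible choice read off from this computation; the proof only needs that some such constant exists.

```latex
CK_0^4\lambda^2
  = CK_0^4\,\frac{c_1^2\varepsilon^2}{K_0^4}
  = C c_1^2\varepsilon^2
  = \frac{c}{2}\,\varepsilon^2
  < \frac{c}{2}\,V_n(y_0, z^0)
  \qquad\text{(since } V_n(y_0, z^0) > \varepsilon^2\text{)},

\text{so}\quad
-cV_n(y_0, z^0) + CK_0^4\lambda^2 \le -\frac{c}{2}\,V_n(y_0, z^0),
\qquad
\Delta V_n(y_0, z^0) \le -\lambda\,\frac{c}{2}\,V_n(y_0, z^0)
  = -\frac{c\,c_1}{2}\,\frac{\varepsilon}{K_0^2}\,V_n(y_0, z^0),
\qquad c_0 = \frac{c\,c_1}{2}.
```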

We now iteratively modify the approximations. Set

$$
y_0 \triangleq 0, \qquad z^0 \triangleq 0, \qquad K_0 \triangleq E^{\frac14}\{|Y_n^{n,0} - g(X_n^{n,0})|^4\}.
\tag{3.28}
$$

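For $k = 0, 1, \cdots$, define $(X^{n,k}, Y^{n,k}, \bar Y^{n,k}, \tilde Y^{n,k}, \bar Z^{n,k}, \tilde Z^{n,k})$ as follows:

$$
\begin{aligned}
X_0^{n,k} &\triangleq x; \qquad Y_0^{n,k} \triangleq y_k; \\
X_{i+1}^{n,k} &\triangleq X_i^{n,k} + \sigma(t_i, X_i^{n,k}, Y_i^{n,k})\Delta W_{i+1}; \\
Y_{i+1}^{n,k} &\triangleq Y_i^{n,k} - f(t_i, X_i^{n,k}, Y_i^{n,k})\Delta t + \int_{t_i}^{t_{i+1}} z_t^k\,dW_t;
\end{aligned}
\tag{3.29}
$$

and

$$
\begin{aligned}
\bar Y_n^{n,k} &\triangleq Y_n^{n,k} - g(X_n^{n,k}); \qquad \tilde Y_n^{n,k} \triangleq g'(X_n^{n,k})[Y_n^{n,k} - g(X_n^{n,k})]; \\
\bar Y_{i-1}^{n,k} &= \bar Y_i^{n,k} - f_{y,i-1}^{n,k}\bar Y_{i-1}^{n,k}\Delta t - \sigma_{y,i-1}^{n,k}\int_{t_{i-1}}^{t_i} \tilde Z_t^{n,k}\,dt - \int_{t_{i-1}}^{t_i} \bar Z_t^{n,k}\,dW_t; \\
\tilde Y_{i-1}^{n,k} &= \tilde Y_i^{n,k} + f_{x,i-1}^{n,k}\bar Y_{i-1}^{n,k}\Delta t + \sigma_{x,i-1}^{n,k}\int_{t_{i-1}}^{t_i} \tilde Z_t^{n,k}\,dt - \int_{t_{i-1}}^{t_i} \tilde Z_t^{n,k}\,dW_t.
\end{aligned}
\tag{3.30}
$$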

Denote

$$
\Delta y_k \triangleq -\bar Y_0^{n,k}; \qquad \int_{t_{i-1}}^{t_i} \Delta z_t^k\,dW_t \triangleq E_{i-1}\{\bar Y_i^{n,k}\} - \bar Y_i^{n,k};
\tag{3.31}
$$

and

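$$
\lambda_k \triangleq \frac{c_1\varepsilon}{K_k^2}; \qquad y_{k+1} \triangleq y_k + \lambda_k\Delta y_k; \qquad z_t^{k+1} \triangleq z_t^k + \lambda_k\Delta z_t^k; \qquad K_{k+1}^4 \triangleq K_k^4 + 2C_0\varepsilon K_k^2,
\tag{3.32}
$$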

where c1 , C0 are the constants in Lemma 3.4. Then following exactly the same arguments as in Theorem 2.5, we can prove

Theorem 3.5 Set $n = \varepsilon^{-2}$. There exist constants $C_1, C_2$ and $N \le C_1\varepsilon^{-C_2}$ such that

$$
V_n(y_N, z^N) \le \varepsilon^2.
$$
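The recursion (3.32) for the step sizes can be sketched in a few lines of Python. This is only an illustration: the function name `step_size_schedule` is hypothetical, and the constants `c1` and `C0` of Lemma 3.4 are not given explicitly in the text, so the caller must supply placeholder values.

```python
def step_size_schedule(K0, eps, c1, C0, num_steps):
    """Return [(lambda_k, K_k)] for k = 0..num_steps-1, following (3.32).

    c1 and C0 stand for the constants of Lemma 3.4; their numerical
    values are NOT specified in the paper and are assumptions here.
    """
    schedule = []
    K4 = K0 ** 4                        # track K_k^4, the quantity (3.32) updates
    for _ in range(num_steps):
        K2 = K4 ** 0.5                  # K_k^2
        lam = c1 * eps / K2             # lambda_k = c1 * eps / K_k^2
        schedule.append((lam, K4 ** 0.25))
        K4 = K4 + 2.0 * C0 * eps * K2   # K_{k+1}^4 = K_k^4 + 2 C0 eps K_k^2
    return schedule
```

Since $K_{k+1}^4 = K_k^4 + 2C_0\varepsilon K_k^2 \le (K_k^2 + C_0\varepsilon)^2$, the quantity $K_k^2$ grows at most linearly in $k$, so the step sizes $\lambda_k$ decrease slowly rather than collapsing.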

4 Further Simplification

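We now transform (3.30) into conditional expectations. First,

$$
z_i^{n,k} \triangleq \frac{1}{\Delta t} E_i\Big\{\int_{t_i}^{t_{i+1}} z_t^k\,dt\Big\} = \frac{1}{\Delta t} E_i\{Y_{i+1}^{n,k}\Delta W_{i+1}\}.
$$

Second, denote

$$
M_i^{n,k} \triangleq \exp\Big(\sigma_{x,i-1}^{n,k}\Delta W_i - \frac12 |\sigma_{x,i-1}^{n,k}|^2\Delta t\Big).
$$

Then

$$
\begin{aligned}
\tilde Y_{i-1}^{n,k} &= E_{i-1}\Big\{M_i^{n,k}\tilde Y_i^{n,k}\Big\} + f_{x,i-1}^{n,k}\bar Y_{i-1}^{n,k}\Delta t; \\
\sigma_{x,i-1}^{n,k}\bar Y_{i-1}^{n,k} + \sigma_{y,i-1}^{n,k}\tilde Y_{i-1}^{n,k} &= \sigma_{x,i-1}^{n,k} E_{i-1}\{\bar Y_i^{n,k}\} + \sigma_{y,i-1}^{n,k} E_{i-1}\{\tilde Y_i^{n,k}\} \\
&\quad + [\sigma_{y,i-1}^{n,k} f_{x,i-1}^{n,k} - \sigma_{x,i-1}^{n,k} f_{y,i-1}^{n,k}]\bar Y_{i-1}^{n,k}\Delta t.
\end{aligned}
\tag{4.1}
$$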

Thus

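$$
\begin{aligned}
\bar Y_{i-1}^{n,k} &= \frac{1}{1 + f_{y,i-1}^{n,k}\Delta t}\Big[E_{i-1}\{\bar Y_i^{n,k}\} - \frac{\sigma_{y,i-1}^{n,k}}{\sigma_{x,i-1}^{n,k}} E_{i-1}\{\tilde Y_i^{n,k}[M_i^{n,k} - 1]\}\Big]; \\
\tilde Y_{i-1}^{n,k} &= E_{i-1}\{M_i^{n,k}\tilde Y_i^{n,k}\} + f_{x,i-1}^{n,k}\bar Y_{i-1}^{n,k}\Delta t.
\end{aligned}
\tag{4.2}
$$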
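The passage from (4.1) to (4.2) is a one-line elimination. A sketch, with superscripts $(n,k)$ and the coefficient index $i-1$ suppressed, and assuming $\sigma_x \ne 0$ and $1 + f_y\Delta t \ne 0$ (the latter is an assumption here; it holds for small $\Delta t$ when $f_y$ is bounded):

```latex
% Substitute the first line of (4.1) into the left side of the second line:
\sigma_x\bar Y_{i-1} + \sigma_y\big[E_{i-1}\{M_i\tilde Y_i\} + f_x\bar Y_{i-1}\Delta t\big]
  = \sigma_x E_{i-1}\{\bar Y_i\} + \sigma_y E_{i-1}\{\tilde Y_i\}
    + [\sigma_y f_x - \sigma_x f_y]\bar Y_{i-1}\Delta t .

% The \sigma_y f_x \bar Y_{i-1}\Delta t terms cancel; collect \bar Y_{i-1}:
\sigma_x\,(1 + f_y\Delta t)\,\bar Y_{i-1}
  = \sigma_x E_{i-1}\{\bar Y_i\} - \sigma_y E_{i-1}\{\tilde Y_i[M_i - 1]\} .

% Divide by \sigma_x (1 + f_y\Delta t) to obtain the first line of (4.2).
```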

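When $\sigma_{x,i-1}^{n,k} = 0$, by solving (3.30) directly, we see that (4.2) becomes

$$
\begin{aligned}
\bar Y_{i-1}^{n,k} &= \frac{1}{1 + f_{y,i-1}^{n,k}\Delta t}\Big[E_{i-1}\{\bar Y_i^{n,k}\} - \sigma_{y,i-1}^{n,k} E_{i-1}\{\tilde Y_i^{n,k}\Delta W_i\}\Big]; \\
\tilde Y_{i-1}^{n,k} &= E_{i-1}\{\tilde Y_i^{n,k}\} + f_{x,i-1}^{n,k}\bar Y_{i-1}^{n,k}\Delta t.
\end{aligned}
\tag{4.3}
$$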
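To make (4.2) and (4.3) concrete, here is a minimal Python sketch of one backward step $i \to i-1$. It is an illustration under strong assumptions, not the authors' implementation: the function name `backward_step` is hypothetical, and the conditional expectations $E_{i-1}\{\cdot\}$ are replaced by plain averages over samples that are assumed to share the same information at time $t_{i-1}$ (so the coefficients `fx`, `fy`, `sx`, `sy` are fixed numbers).

```python
import math

def backward_step(ybar_next, ytil_next, dW, dt, fx, fy, sx, sy):
    """One step i -> i-1 of (4.2) (sx != 0) or (4.3) (sx == 0).

    ybar_next, ytil_next, dW: equal-length lists of samples of
    bar Y_i, tilde Y_i and Delta W_i. fx, fy, sx, sy: values of
    f_x, f_y, sigma_x, sigma_y frozen at step i-1.
    Sample means stand in for E_{i-1}{.} (an assumption of this sketch).
    """
    m = len(dW)

    def mean(vals):
        return sum(vals) / m

    e_ybar = mean(ybar_next)
    if sx != 0.0:
        # M_i = exp(sigma_x dW_i - 0.5 sigma_x^2 dt)
        M = [math.exp(sx * w - 0.5 * sx * sx * dt) for w in dW]
        corr = (sy / sx) * mean([yt * (mi - 1.0) for yt, mi in zip(ytil_next, M)])
        e_ytil = mean([yt * mi for yt, mi in zip(ytil_next, M)])
    else:
        corr = sy * mean([yt * w for yt, w in zip(ytil_next, dW)])
        e_ytil = mean(ytil_next)
    ybar_prev = (e_ybar - corr) / (1.0 + fy * dt)
    ytil_prev = e_ytil + fx * ybar_prev * dt
    return ybar_prev, ytil_prev
```

As $\sigma_x \to 0$ one has $(M_i - 1)/\sigma_x \to \Delta W_i$ and $M_i \to 1$, so the first branch tends to the second, which matches the remark that (4.2) becomes (4.3).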

Now fix $\varepsilon$ and, in light of (3.4), set $n = \varepsilon^{-2}$. Let $c_1, C_0$ be the constants in Lemma 3.4. We have the following algorithm.

First, set

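$$
\begin{aligned}
X_0^{n,0} &\triangleq x; \qquad Y_0^{n,0} \triangleq 0; \\
X_{i+1}^{n,0} &\triangleq X_i^{n,0} + \sigma(t_i, X_i^{n,0}, Y_i^{n,0})\Delta W_{i+1}; \\
Y_{i+1}^{n,0} &\triangleq Y_i^{n,0} - f(t_i, X_i^{n,0}, Y_i^{n,0})\Delta t;
\end{aligned}
$$

and

$$
z_i^{n,0} \triangleq 0; \qquad K_0 \triangleq E^{\frac14}\Big\{|Y_n^{n,0} - g(X_n^{n,0})|^4\Big\}.
$$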
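The initialization above can be sketched as a plain Monte-Carlo simulation. This is a minimal illustration using only the Python standard library; the function name `simulate_initial_pass`, the number of paths, and the seed are hypothetical choices, and the expectation defining $K_0$ is replaced by a sample average.

```python
import math
import random

def simulate_initial_pass(x, T, n, sigma, f, g, num_paths, seed=0):
    """Simulate the k = 0 scheme (Y_0 = 0, z = 0) and estimate K_0.

    sigma(t, x, y), f(t, x, y) and g(x) are user-supplied callables.
    Returns the terminal pairs (X_n, Y_n) and the sample estimate of
    K_0 = E^{1/4}{ |Y_n - g(X_n)|^4 }.
    """
    rng = random.Random(seed)
    dt = T / n
    terminal = []
    fourth_moment = 0.0
    for _ in range(num_paths):
        X, Y = x, 0.0
        for i in range(n):
            t = i * dt
            dW = rng.gauss(0.0, math.sqrt(dt))
            # Both updates use the values at time t_i (explicit Euler step).
            X, Y = X + sigma(t, X, Y) * dW, Y - f(t, X, Y) * dt
        terminal.append((X, Y))
        fourth_moment += (Y - g(X)) ** 4
    K0 = (fourth_moment / num_paths) ** 0.25
    return terminal, K0
```

For example, with $\sigma \equiv 0$, $f \equiv 1$, $g(x) = x$, $x = 1$ and $T = 1$, every path ends at $(X_n, Y_n) = (1, -1)$, so the estimate of $K_0$ is $2$ up to rounding.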

For $k = 0, 1, \cdots$, if $E\{|Y_n^{n,k} - g(X_n^{n,k})|^2\} \le \varepsilon^2$, we quit the loop and by Theorems 3.1, 3.4, and Corollary 3.2, we have

$$
E\Big\{\max_{0\le i\le n} [|X_{t_i} - X_i^{n,k}|^2 + |Y_{t_i} - Y_i^{n,k}|^2] + \sum_{i=0}^{n-1}\int_{t_i}^{t_{i+1}} |Z_t - z_i^{n,k}|^2\,dt\Big\} \le C\varepsilon^2.
$$

Otherwise, we proceed with the loop as follows:

Step 1. Define $(\bar Y_n^{n,k}, \tilde Y_n^{n,k})$ by the first line of (3.30); and for $i = n, \cdots, 1$, define $(\bar Y_{i-1}^{n,k}, \tilde Y_{i-1}^{n,k})$ by (4.2) or (4.3).

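Step 2. Let $\lambda_k \triangleq \frac{c_1\varepsilon}{K_k^2}$, $K_{k+1}^4 \triangleq K_k^4 + 2C_0\varepsilon K_k^2$. Define $(X^{n,k+1}, Y^{n,k+1}, z^{n,k+1})$ by

$$
\begin{aligned}
X_0^{n,k+1} &\triangleq x; \qquad Y_0^{n,k+1} \triangleq Y_0^{n,k} - \lambda_k\bar Y_0^{n,k}; \\
X_{i+1}^{n,k+1} &\triangleq X_i^{n,k+1} + \sigma(t_i, X_i^{n,k+1}, Y_i^{n,k+1})\Delta W_{i+1}; \\
Y_{i+1}^{n,k+1} &\triangleq Y_i^{n,k+1} - f(t_i, X_i^{n,k+1}, Y_i^{n,k+1})\Delta t \\
&\quad + \Big[Y_{i+1}^{n,k} - Y_i^{n,k} + f(t_i, X_i^{n,k}, Y_i^{n,k})\Delta t\Big] + \lambda_k\Big[E_i\{\bar Y_{i+1}^{n,k}\} - \bar Y_{i+1}^{n,k}\Big];
\end{aligned}
\tag{4.4}
$$

and

$$
z_i^{n,k+1} \triangleq \frac{1}{\Delta t} E_i\Big\{Y_{i+1}^{n,k+1}\Delta W_{i+1}\Big\}.
\tag{4.5}
$$

We note that in the last line of (4.4), the two bracketed terms stand for $\int_{t_i}^{t_{i+1}} z_t^k\,dW_t$ and $\int_{t_i}^{t_{i+1}} \Delta z_t^k\,dW_t$, respectively.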

By Theorem 3.5, the above loop should stop after at most $C_1\varepsilon^{-C_2}$ steps.

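We note that in the above algorithm the only costly terms are the conditional expectations:

$$
E_i\{\bar Y_{i+1}^{n,k}\}, \quad E_i\{\tilde Y_{i+1}^{n,k}\}, \quad E_i\{\Delta W_{i+1} Y_{i+1}^{n,k}\}, \quad E_i\{M_{i+1}^{n,k}\tilde Y_{i+1}^{n,k}\} \ \text{or}\ E_i\{\Delta W_{i+1}\tilde Y_{i+1}^{n,k}\}.
\tag{4.6}
$$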

By induction, one can easily show that

$$
Y_i^{n,k} = u_i^{n,k}(X_0^{n,k}, \cdots, X_i^{n,k}),
$$

for some deterministic function $u_i^{n,k}$. Similar properties hold true for $(\bar Y_i^{n,k}, \tilde Y_i^{n,k})$. However, they are not Markovian in the sense that one cannot write $Y_i^{n,k}, \bar Y_i^{n,k}, \tilde Y_i^{n,k}$ as functions of $X_i^{n,k}$ only. In order to use Monte-Carlo methods to compute the conditional expectations in (4.6) efficiently, some Markovian type modification of our algorithm is needed.
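To illustrate what such a Markovian approximation could look like in practice, the sketch below estimates a conditional expectation such as $E_i\{\bar Y_{i+1}^{n,k}\}$ by least-squares regression on the current state $X_i^{n,k}$ alone, in the spirit of regression-based Monte-Carlo methods such as [10]. This is not the modification the paper has in mind (none is specified here): the function names are hypothetical, the linear basis $(1, x)$ is an arbitrary choice, and, because the quantities in (4.6) are path dependent, projecting onto functions of $X_i^{n,k}$ only introduces an additional error that is not analyzed in this paper.

```python
def fit_linear(xs, ys):
    """Least-squares fit ys ~ a + b * xs; returns (a, b)."""
    m = len(xs)
    mx = sum(xs) / m
    my = sum(ys) / m
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx if sxx > 0.0 else 0.0
    return my - b * mx, b

def regress_conditional_expectation(x_i, target_next):
    """Approximate E_i{target_{i+1}} pathwise by a linear function of X_i.

    x_i: samples of X_i (one per simulated path).
    target_next: samples of the quantity at step i+1 on the same paths,
    e.g. bar Y_{i+1} or Delta W_{i+1} * Y_{i+1}.
    """
    a, b = fit_linear(x_i, target_next)
    return [a + b * x for x in x_i]
```

A richer basis (polynomials, indicator functions) or additional state variables could be substituted for the linear fit without changing the structure of the sketch.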

Acknowledgement. We are very grateful to the anonymous referee for his/her

careful reading of the manuscript and many very helpful suggestions.

References

[1] V. Bally, Approximation scheme for solutions of BSDE, Backward stochastic

differential equations (Paris, 1995–1996), 177–191, Pitman Res. Notes Math.

Ser., 364, Longman, Harlow, 1997.

[2] V. Bally, G. Pagès, and J. Printems, A quantization tree method for pricing and

hedging multidimensional American options, Math. Finance, 15 (2005), no. 1,

119–168.

[3] C. Bender and R. Denk, Forward Simulation of Backward SDEs, preprint.

[4] B. Bouchard and N. Touzi, Discrete-time approximation and Monte-Carlo simulation of backward stochastic differential equations, Stochastic Process. Appl.,

111 (2004), no. 2, 175–206.

[5] P. Briand, B. Delyon, and J. Mémin, Donsker-type theorem for BSDEs, Electron.

Comm. Probab., 6 (2001), 1–14 (electronic).


[6] D. Chevance, Numerical methods for backward stochastic differential equations,

Numerical methods in finance, 232–244, Publ. Newton Inst., Cambridge Univ.

Press, Cambridge, 1997.

[7] F. Delarue, On the existence and uniqueness of solutions to FBSDEs in a nondegenerate case, Stochastic Process. Appl., 99 (2002), no. 2, 209–286.

[8] F. Delarue and S. Menozzi, A forward-backward stochastic algorithm for quasilinear PDEs, Ann. Appl. Probab., to appear.

[9] J. Douglas, Jr., J. Ma, and P. Protter, Numerical methods for forward-backward
stochastic differential equations, Ann. Appl. Probab., 6 (1996), 940–968.

[10] E. Gobet, J. Lemor, and X. Warin, A regression-based Monte-Carlo method to

solve backward stochastic differential equations, Ann. Appl. Probab., 15 (2005),

no. 3, 2172–2202.

[11] O. Ladyzhenskaya, V. Solonnikov, and N. Ural’ceva, Linear and quasilinear equations of parabolic type, Translations of Mathematical Monographs, Vol. 23, American Mathematical Society, Providence, R.I., 1967.

[12] J. Ma, P. Protter, J. San Martı́n, and S. Torres, Numerical method for backward

stochastic differential equations, Ann. Appl. Probab. 12 (2002), no. 1, 302–316.

[13] J. Ma, P. Protter, and J. Yong, Solving forward-backward stochastic differential equations explicitly - a four step scheme, Probab. Theory Relat. Fields., 98

(1994), 339-359.

[14] R. Makarov, Numerical solution of quasilinear parabolic equations and backward

stochastic differential equations, Russian J. Numer. Anal. Math. Modelling, 18

(2003), no. 5, 397–412.

[15] J. Mémin, S. Peng, and M. Xu, Convergence of solutions of discrete reflected

BSDEs and simulations, preprint.

[16] G. Milstein and M. Tretyakov, Numerical algorithms for semilinear parabolic

equations with small parameter based on approximation of stochastic equations,

Math. Comp., 69 (2000), no. 229, 237–267.

[17] J. Ma and J. Yong, Forward-Backward Stochastic Differential Equations and

Their Applications, Lecture Notes in Math., 1702, Springer, 1999.

[18] J. Ma and J. Yong, Approximate solvability of forward-backward stochastic differential equations, Appl. Math. Optim., 45 (2002), no. 1, 1–22.


[19] E. Pardoux and S. Peng, Adapted solutions of backward stochastic equations,
Systems and Control Letters, 14 (1990), 55–61.

[20] E. Pardoux and S. Tang, Forward-backward stochastic differential equations and

quasilinear parabolic PDEs, Probab. Theory Related Fields, 114 (1999), no. 2,

123–150.

[21] J. Zhang, A numerical scheme for BSDEs, Ann. Appl. Probab., 14 (2004), no.

1, 459–488.

[22] J. Zhang, The well-posedness of FBSDEs, Discrete and Continuous Dynamical

Systems (B), to appear.

[23] J. Zhang, The well-posedness of FBSDEs (II), submitted.

[24] Y. Zhang and W. Zheng, Discretizing a backward stochastic differential equation,

Int. J. Math. Math. Sci., 32 (2002), no. 2, 103–116.

[25] X. Zhou, Stochastic near-optimal controls: necessary and sufficient conditions for

near-optimality, SIAM J. Control Optim., 36 (1998), no. 3, 929–947 (electronic).
