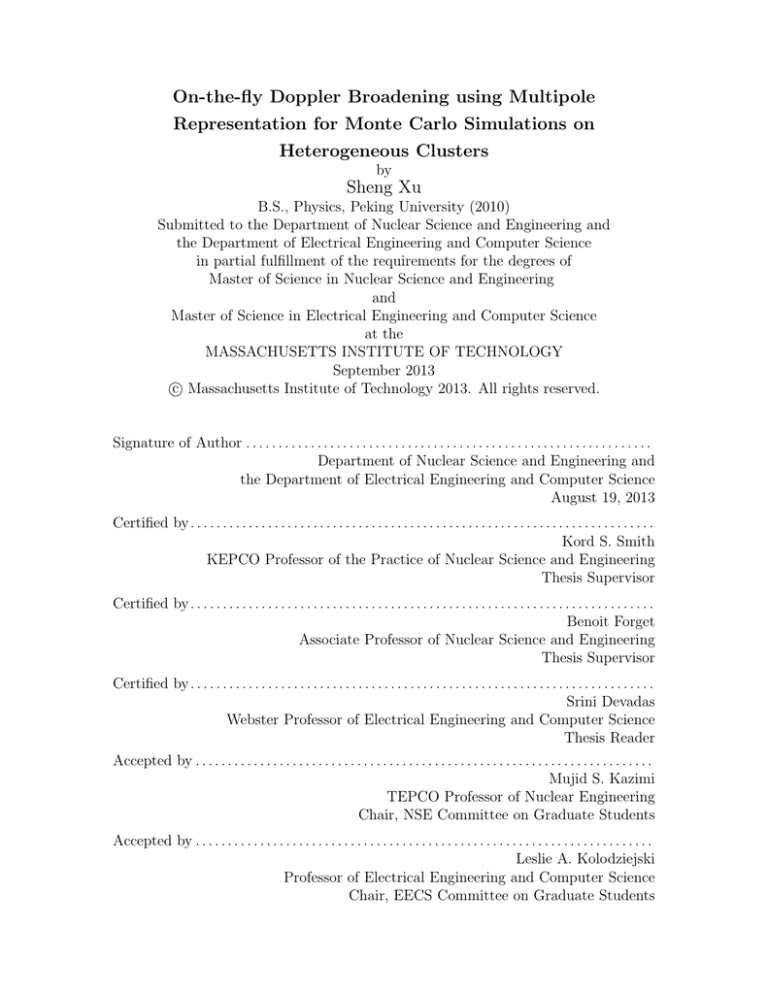

On-the-fly Doppler Broadening using Multipole

Representation for Monte Carlo Simulations on

Heterogeneous Clusters

by

Sheng Xu

B.S., Physics, Peking University (2010)

Submitted to the Department of Nuclear Science and Engineering and

the Department of Electrical Engineering and Computer Science

in partial fulfillment of the requirements for the degrees of

Master of Science in Nuclear Science and Engineering

and

Master of Science in Electrical Engineering and Computer Science

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

September 2013

c Massachusetts Institute of Technology 2013. All rights reserved.

Signature of Author . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Department of Nuclear Science and Engineering and

the Department of Electrical Engineering and Computer Science

August 19, 2013

Certified by . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Kord S. Smith

KEPCO Professor of the Practice of Nuclear Science and Engineering

Thesis Supervisor

Certified by . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Benoit Forget

Associate Professor of Nuclear Science and Engineering

Thesis Supervisor

Certified by . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Srini Devadas

Webster Professor of Electrical Engineering and Computer Science

Thesis Reader

Accepted by . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Mujid S. Kazimi

TEPCO Professor of Nuclear Engineering

Chair, NSE Committee on Graduate Students

Accepted by . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Leslie A. Kolodziejski

Professor of Electrical Engineering and Computer Science

Chair, EECS Committee on Graduate Students

2

On-the-fly Doppler Broadening using Multipole

Representation for Monte Carlo Simulations on

Heterogeneous Clusters

by

Sheng Xu

Submitted to the Department of Nuclear Science and Engineering and

the Department of Electrical Engineering and Computer Science

on August 19, 2013, in partial fulfillment of the

requirements for the degrees of

Master of Science in Nuclear Science and Engineering

and

Master of Science in Electrical Engineering and Computer Science

Abstract

In order to use Monte Carlo methods for reactor simulations beyond benchmark

activities, the traditional way of preparing and using nuclear cross sections needs to

be changed, since large datasets of cross sections at many temperatures are required

to account for Doppler effects, which can impose an unacceptably high overhead in

computer memory. In this thesis, a novel approach, based on the multipole representation, is proposed to reduce the memory footprint for the cross sections with little

loss of efficiency.

The multipole representation transforms resonance parameters into a set of poles

only some of which exhibit resonant behavior. A strategy is introduced to preprocess

the majority of the poles so that their contributions to the cross section over a small

energy interval can be approximated with a low-order polynomial, while only a small

number of poles are left to be broadened on the fly. This new approach can reduce

the memory footprint of the cross sections by one to two orders over comparable

techniques. In addition, it can provide accurate cross sections with an efficiency

comparable to current methods: depending on the machines used, the speed of the

new approach ranges from being faster than the latter, to being less than 50% slower.

Moreover, it has better scalability features than the latter.

The significant reduction in memory footprint makes it possible to deploy the

Monte Carlo code for realistic reactor simulations on heterogeneous clusters with

GPUs in order to utilize their massively parallel capability. In the thesis, a CUDA

version of this new approach is implemented for a slowing down problem to examine

its potential performance on GPUs. Through some extensive optimization efforts, the

CUDA version can achieve around 22 times speedup compared to the corresponding

serial CPU version.

Thesis Supervisor: Kord S. Smith

Title: KEPCO Professor of the Practice of Nuclear Science and Engineering

Thesis Supervisor: Benoit Forget

Title: Associate Professor of Nuclear Science and Engineering

3

4

Acknowledgments

I would firstly like to thank my supervisors, Prof. Kord Smith and Prof. Benoit

Forget, for their invaluable guidance and insights throughout this project, and for the

support and freedom they gave me to pursue the research topic that I am interested

in.

I would also like to thank Prof. Srini Devadas for being my thesis reader. His

expertise in computer architecture, especially in GPU, has helped me tremendously

during this project. I wish I could have more time to learn from him.

I am grateful to Luiz Leal of ORNL for introducing us to the multipole representation, and Roger Blomquist for his help in getting the WHOPPER code. This work

was supported by the Office of Advanced Scientific Computing Research, Office of

Science, U.S. Department of Energy, under Contract DE-AC02-06CH11357.

Due to the nature of this project, I have also received help from many other people

of both departments and I would like to thank all of them, among whom are: Dr.

Paul Romano, Dr. Koroush Shirvan, Bryan Herman, Jeremy Roberts, Nick Horelik,

Nathan Gilbson and Will Boyd of NSE, and Prof. Charles Leiserson, Prof. Nir Shavit,

Haogang Chen and Ilia Lebedev of EECS.

Furthermore, I want to express my gratitude to Prof. Mujid Kazimi, for his

guidance during my first year and a half here at MIT, and for his continuous patience,

understanding and support over my entire three years here.

In addition, I would like to thank Clare Egan and Heather Barry of NSE and

Janet Fischer of EECS, for the many administrative processes that they have helped

me through from the application to the completion of the dual degrees. I also wish

to give my thanks to all other people at MIT who have provided direct or indirect

support to my studies over the last three years.

Lastly, I would like to thank my family and friends for their love and care to

me. Special thanks go to my wife, Hengchen Dai, for her constant love, support and

5

encouragement, which helped me go through each and every hard time during the

years that we have been together.

6

Contents

Contents

7

List of Figures

11

List of Tables

13

1 Introduction

15

1.1

Motivation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

15

1.2

Objectives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

17

1.3

Thesis organization . . . . . . . . . . . . . . . . . . . . . . . . . . . .

18

2 Background and Review

2.1

2.2

19

Existing methods for Doppler broadening . . . . . . . . . . . . . . . .

19

2.1.1

Cullen’s method . . . . . . . . . . . . . . . . . . . . . . . . . .

20

2.1.2

Regression model . . . . . . . . . . . . . . . . . . . . . . . . .

23

2.1.3

Explicit temperature treatment method . . . . . . . . . . . . .

25

2.1.4

Other methods . . . . . . . . . . . . . . . . . . . . . . . . . .

27

General purpose computing on GPU . . . . . . . . . . . . . . . . . .

28

2.2.1

GPU architecture . . . . . . . . . . . . . . . . . . . . . . . . .

29

2.2.2

CUDA Programming Model . . . . . . . . . . . . . . . . . . .

32

2.2.3

GPU performance pitfalls . . . . . . . . . . . . . . . . . . . .

34

2.2.4

Floating point precision support . . . . . . . . . . . . . . . . .

35

7

3 Approximate Multipole Method

3.1

3.2

3.3

3.4

37

Multipole representation . . . . . . . . . . . . . . . . . . . . . . . . .

37

3.1.1

Theory of multipole representation . . . . . . . . . . . . . . .

37

3.1.2

Doppler broadening . . . . . . . . . . . . . . . . . . . . . . . .

40

3.1.3

Characteristics of poles . . . . . . . . . . . . . . . . . . . . . .

41

3.1.4

Previous efforts on reducing poles to broaden . . . . . . . . .

42

Approximate multipole method . . . . . . . . . . . . . . . . . . . . .

44

3.2.1

Properties of Faddeeva function and the implications . . . . .

44

3.2.2

Overlapping energy domains strategy . . . . . . . . . . . . . .

47

Outer and inner window size . . . . . . . . . . . . . . . . . . . . . . .

49

3.3.1

Outer window size . . . . . . . . . . . . . . . . . . . . . . . .

51

3.3.2

Inner window size . . . . . . . . . . . . . . . . . . . . . . . . .

56

Implementation of Faddeeva function . . . . . . . . . . . . . . . . . .

60

4 Implementation and Performance Analysis on CPU

65

4.1

Implementation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

65

4.2

Test setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

68

4.2.1

Table lookup . . . . . . . . . . . . . . . . . . . . . . . . . . .

69

4.2.2

Cullen’s method . . . . . . . . . . . . . . . . . . . . . . . . . .

70

4.2.3

Approximate multipole method . . . . . . . . . . . . . . . . .

70

Test one . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

71

4.3.1

Serial performance . . . . . . . . . . . . . . . . . . . . . . . .

72

4.3.2

Revisit inner window size

. . . . . . . . . . . . . . . . . . . .

74

4.3.3

Parallel performance . . . . . . . . . . . . . . . . . . . . . . .

74

4.4

Test two . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

77

4.5

Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

80

4.3

8

5 Implementation and Performance Analysis on GPU

5.1

5.2

5.3

83

Test setup and initial implementation . . . . . . . . . . . . . . . . . .

83

5.1.1

Implementation . . . . . . . . . . . . . . . . . . . . . . . . . .

84

5.1.2

Hardware specification . . . . . . . . . . . . . . . . . . . . . .

86

5.1.3

Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

86

Optimization efforts . . . . . . . . . . . . . . . . . . . . . . . . . . . .

88

5.2.1

Profiling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

88

5.2.2

Floating point precision . . . . . . . . . . . . . . . . . . . . .

90

5.2.3

Global memory efficiency . . . . . . . . . . . . . . . . . . . . .

92

5.2.4

Shared memory and register usage

. . . . . . . . . . . . . . .

95

Results and discussion . . . . . . . . . . . . . . . . . . . . . . . . . .

96

6 Summary and Future Work

99

6.1

Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

6.2

Future work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

A Whopper Input Files

99

103

A.1 U238 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

A.2 U235 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

A.3 Gd155 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

References

107

9

10

List of Figures

1-1 U238 capture cross section at 6.67 eV resonance for different temperatures.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

16

2-1 Evolution of GPU and CPU throughput [1]. . . . . . . . . . . . . . .

29

2-2 Architecture of an Nvidia GF100 card (Courtesy of Nvidia). . . . . .

30

2-3 GPU memory hierarchy (Courtesy of Nvidia). . . . . . . . . . . . . .

31

2-4 CUDA thread, block and grid hierarchy [1].

33

. . . . . . . . . . . . . .

3-1 Poles distribution for U238. Black and red dots represent the poles

with l = 0 and with positive and negative real parts, respectively, and

green dots represent the poles with l > 0. . . . . . . . . . . . . . . . .

42

3-2 Relative error of U238 total cross section at 3000K broadening only

principal poles compared with NJOY data. . . . . . . . . . . . . . . .

46

3-3 Relative error of U235 total cross section at 3000 K broadening only

principal poles against NJOY data. . . . . . . . . . . . . . . . . . . .

3-4 Faddeeva function in the upper half plane of complex domain

47

. . . .

48

3-5 Demonstration of overlapping window. . . . . . . . . . . . . . . . . .

49

3-6 Relative error of U238 total cross section at 3000 K calculated with

constant outer window size of twice of average resonance spacing. . .

11

53

3-7 Relative error of background cross section approximation for U238 total

cross section at 3000 K with constant outer window size of twice of

average resonance spacing. . . . . . . . . . . . . . . . . . . . . . . . .

54

3-8 Relative error of U238 total cross section at 3000 K calculated with

outer window size from Eq. 3.22. . . . . . . . . . . . . . . . . . . . .

56

3-9 Relative error of background cross section approximation for U238 total

cross section at 3000 K with outer window size from Eq. 3.22. . . . .

57

3-10 Relative error of U235 total cross section at 3000 K calculated with

outer window size from Eq. 3.22. . . . . . . . . . . . . . . . . . . . .

58

3-11 Demo for the six-point bivariate interpolation scheme. . . . . . . . . .

61

3-12 Relative error of modified W against scipy.special.wofz. . . . . . . . .

63

3-13 Relative error of modified QUICKW against scipy.special.wofz. . . . .

64

4-1 Strong scaling study of table lookup and approximate multipole methods for 300 nuclides. The straight line represents perfect scalability. .

75

4-2 Strong scaling study of table lookup and approximate multipole methods for 3000 nuclides. The straight line represents perfect scalability.

76

4-3 Weak scaling study of table lookup and approximate multipole methods with 300 nuclides per thread. . . . . . . . . . . . . . . . . . . . .

77

4-4 OpenMP scalability of table lookup and multipole methods for neutron

slowing down. The straight line represents perfect scalability. . . . . .

12

80

List of Tables

2.1

Latency and throughput of different types of memory in GPU [2]. . .

3.1

Information related to outer window size for U238 and U235 total cross

section at different temperatures . . . . . . . . . . . . . . . . . . . . .

3.2

32

59

Number of terms needed for background cross section and the corresponding storage size for various inner window size (multiple of average

resonance spacing). . . . . . . . . . . . . . . . . . . . . . . . . . . . .

60

4.1

Resonance Information of U235, U238 and Gd155 . . . . . . . . . . .

68

4.2

Performance results of the serial version of different methods for test

one. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4.3

Runtime breakdown of approximate multipole method with modified

QUICKW. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4.4

73

Performance and storage size of background information with varying

inner window size.

4.5

72

. . . . . . . . . . . . . . . . . . . . . . . . . . . .

74

kef f (with standard deviation) and the average runtime per neutron

history for both table lookup and approximate multipole methods. . .

79

5.1

GPU specifications. . . . . . . . . . . . . . . . . . . . . . . . . . . . .

87

5.2

Performance statistics of the initial implementation on Quadro 4000

from Nvidia visual profiler nvvp . . . . . . . . . . . . . . . . . . . . .

13

89

5.3

kef f (with standard deviation) and the average runtime per neutron

history for different cases. . . . . . . . . . . . . . . . . . . . . . . . .

92

5.4

Speedup of GPU vs. serial CPU version on both GPU cards. . . . . .

96

5.5

Performance statistics of the optimized single precision version on Quadro

4000 from nvvp. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

14

97

Chapter 1

Introduction

1.1

Motivation

Most Monte Carlo neutron transport codes rely on point-wise neutron cross section

data, which provides an efficient way to evaluate cross sections by linear interpolation with a pre-defined level of accuracy. Due to the effect of thermal motion

from target nucleus, or Doppler effect, cross sections usually vary with temperature,

which is particularly significant for the resonance capture and fission cross section,

as demonstrated in Fig. 1-1. This temperature dependence must be taken into consideration for realistic, detailed Monte Carlo reactor simulations, especially if Monte

Carlo method is to be used for the multi-physics simulations with thermal-hydraulic

feedback impacting temperatures and material densities.

The traditional way of dealing with this problem is to generate cross sections for

each nuclide at specific reference temperatures by Doppler broadening the corresponding 0 K cross sections with nuclear data processing codes such as NJOY [3], and then

to use linear interpolation between temperature points to get the cross sections for the

desired temperature. However, this method can require a prohibitively large amount

of cross section data to cover a large temperature range. For example, Trumbull [4]

15

0K

300 K

3000 K

4

Cross section (barn)

10

3

10

2

10

1

10

6

6.2

6.4

6.6

6.8

7

7.2

7.4

E (eV)

Figure 1-1: U238 capture cross section at 6.67 eV resonance for different temperatures.

studied the issue and suggested that using a temperature grid of 5 − 10 K spacing

for the cross sections would provide the level of accuracy needed. Considering that

the temperature range for an operating reactor (including the accident scenario) is

between 300 and 3000 K, and the data size of cross sections at a single temperature

for 300 nuclides of interest is approximately 1 GB, this means that around 250 − 500

GB of data would need to be stored and accessed, which usually exceeds the size of

the main memory of most single computer nodes and thus degrades the performance

tremendously.

On the other hand, the exceedingly high power density with faster transistors

limits the continuing increase in the speed of the central processor unit (CPU). Consequently, more and more cores have to be placed on a single processor to maintain

the performance increases through wider parallelism. One direct result is that each

processor core will have limited access to fast memory (or cache) which can cause a

large disparity between processor power and available memory. This is especially true

for the graphics processing unit (GPU), which is at the leading edge of this trend of

embracing wider parallelism (although for different reasons, which will be discussed in

16

the next chapter). Therefore, reducing the memory footprint of a program can play a

significant role in increasing its efficiency with new trends of computer architectures.

As a result, to enable the Monte Carlo reactor simulation beyond the benchmark

activities and to prepare it for the new computer architectures, the memory footprint of the temperature-dependent cross section data must be reduced. This can be

done through evaluating the cross section on the fly, but classic Doppler broadening

methods are usually too expensive, since they were all developed for preprocessing

purposes. A few recent efforts on this [5, 6] have showed some improvements, but

either the memory footprint is still too high for massively parallel architectures, or

the efficiency is significantly degraded relative to current methods. Details on these

methods will be presented in the next chapter.

In this thesis, a new method of evaluating the cross section on the fly is proposed

based on the multipole representation developed in [7], to both reduce the memory

footprint of cross section data and to preserve the computational efficiency. In particular, the energy range above 1 eV in the resolved resonance region will be the focus

of this thesis, since the cross sections in this range dominate the storage.

1.2

Objectives

The main goal of this work is to develop a method that can dramatically reduce the

memory footprint for the temperature-dependent nuclear cross section data so that

the Monte Carlo method can be used for realistic reactor simulations. At the same

time, the efficiency must be maintained at least comparable to the current method.

In addition, this new method will be implemented on the GPU to utilize its massively

parallel capability.

17

1.3

Thesis organization

The remaining parts of the thesis are organized as follows:

Chapter 2 reviews the existing methods for Doppler broadening, especially those

that are developed to be used on the fly. It also provides some background information

for general purpose computing on GPU.

Chapter 3 describes the theory of the multipole representation and subsequently

the formulation of the new method, the approximate multipole method [8]. The

underlying strategy used for this new method will be discussed, as well as some

important parameters and functions that can impact the performance or memory

footprint thereof.

Chapter 4 presents the implementation of the approximate multipole method on

CPU and its performance comparison against the current method for both the serial

and parallel version.

Chapter 5 presents the implementation and performance analysis of the approximate multipole method on GPU. Specifically, the performance bottlenecks and optimization efforts in the GPU implementation are discussed.

Chapter 6 summarizes the work in this thesis, followed by a discussion of possible

future work and directions.

18

Chapter 2

Background and Review

This chapter first reviews the existing methods for Doppler broadening, with a focus

on recent efforts for broadening on the fly. The general purpose computing on GPU

is then reviewed, mainly on the architectural aspect of GPU, the programming model

and performance issues related to GPU.

2.1

Existing methods for Doppler broadening

Doppler broadening of nuclear cross sections has long been an important problem in

nuclear reactor analysis and methods. The traditional methods are mainly for the

purpose of preprocessing and generating cross section libraries. Recently the concept

of doing the Doppler broadening on the fly has gained popularity due to the prohibitively large storage size of temperature-dependent cross section data needed by

Monte Carlo simulation with coupled thermal-hydraulic feedback, and a few methods in this category have been developed. In this section, both types of Doppler

broadening methods are presented, with a focus on the latter type.

19

2.1.1

Cullen’s method

The well-known Doppler broadening method developed by Cullen [9] uses a detailed

integration of the integral equation defining the effective cross section due to the

relative motion of target nucleus. For the ideal gas model, where the target motion

is isotropic and its velocity obeys the Maxwell-Boltzmann distribution, the effective

Doppler-broadened cross section at temperature T takes the following form

√ Z∞

α

2

2

dV σ 0 (V )V 2 [e−α(V −v) − eα(V +v) ],

σ̄(v) = √ 2

πv 0

(2.1)

M

, with kB being the Boltzmann’s constant and M the mass of the

2kB T

target nucleus, v is the incident neutron velocity, V is the relative velocity between

where α =

the neutron and the target nucleus, and σ 0 (v) represents the 0 K cross section for

neutron with velocity v.

As Cullen’s method will be used as a reference method in Chapter 4, it is helpful

to present the algorithm for evaluating Eq. 2.1 here. To begin, Eq. 2.1 can be broken

into two parts:

σ̄(v) = σ ∗ (v) − σ ∗ (−v),

(2.2)

√ Z∞

α

2

dV σ 0 (V )V 2 e−α(V −v) .

σ (v) = √ 2

πv 0

(2.3)

where

∗

The exponential term in Eq. 2.3 limits the significant part of the integral to the range

4

4

v−√ <V <v+√ ,

α

α

20

(2.4)

while for σ ∗ (v), the range of significance becomes

4

0<V < √ .

α

(2.5)

The numerical evaluation of Eq. 2.3 developed in [9] assumes that the 0 K cross

sections can be represented by a piecewise linear function of energy with acceptable

accuracy, which is just the form of NJOY PENDF files [3]. By defining the reduced

√

√

variables x = αV and y = αv, the 0 K cross sections can be expressed as

σ 0 (x) = σi0 + si (x2 − x2i ),

(2.6)

0

− σi0 )/(xi+1 − xi ) for the i-th energy interval. As a result, Eq.

with slope si = (σi+1

2.3 becomes

N Z xi+1

1 X

2

σ (y) = √ 2

σ 0 (x)x2 e−(x−y) dx

πy i=0 xi

(2.7)

σ ∗ (y) =

(2.8)

∗

X

[Ai (σi0 − si x2i ) + Bi si ],

i

where N denotes the number of energy intervals that fall in the significance range as

determined by Eq. 2.4 and 2.5, and

1

H2 +

y2

1

= 2 H4 +

y

Ai =

Bi

2

H1 + H0 ,

y

4

H3 + 6H2 + 4yH1 + y 2 H0 ,

y

where Hn is shorthand for Hn (xi − y, xi+1 − y), defined by

1 Z b n −z2

Hn (a, b) = √

z e dz.

π a

21

(2.9)

To compute Hn (a, b), one can write it in the form

Hn (a, b) = Fn (a) − Fn (b),

(2.10)

1 Z ∞ n −z2

Fn (x) = √

z e dz.

π x

(2.11)

where Fn (x) is defined by

and satisfies a recursive relation

1

erfc(x),

2

1

2

F1 (x) = √ e−a

2 π

n−1

Fn−2 (x) + xn−1 F1 (x),

Fn (x) =

2

F0 (x) =

(2.12)

(2.13)

(2.14)

with erfc(x) being the complementary error function

2 Z ∞ −z2

e dz.

erfc(x) = √

π a

(2.15)

If a and b are very close with each other, the difference in Eq. 2.10 may loose

significance. To avoid this, one can use a different method based on direct Taylor

expansion (see [10]). However, with the use of double precision floating point numbers,

this problem did not show up during the course of this thesis work.

Traditionally Cullen’s method is used in NJOY to Doppler broaden the 0 K cross

section and generate cross section libraries for reference temperatures. Any cross

section needed are then evaluated with this library by linear interpolation, which will

be denoted “table lookup” method henceforth in this thesis. As will be shown in

Chapter 4 (and also in other places such as [5]), Cullen’s method is not very practical

to broaden the cross section on they fly since it requires an unacceptable amount of

22

computation time, mainly due to the cost of evaluating complementary error functions

for the many energy points that fall in the range of significance, especially at high

energy.

2.1.2

Regression model

In [5], Yesilyurt et al. developed a new regression model to perform on-the-fly Doppler

broadening based on series expansion of the multi-level Adler-Adler formalism with

temperature dependence. Take the total cross section as an example (similar analysis

applies to other types of cross section), its expression in Adler-Adler formalism is

σt (E, ξR ) = 4πλ̄2 sin2 φ0

√ X 2

[(GR cos2φ0 + HR sin2φ0 )ψ(x, ξR )

+ πλ̄2 E{

R ΓR,t

+ (HR cos2φ0 − GR sin2φ0 )χ(x, ξR )]

+ A1 +

A2 A3 A4

+ 2 + 3 + B1 E + B2 E 2 }

E

E

E

(2.16)

where

ψ(x, ξR ) =

χ(x, ξR ) =

λ̄ =

x =

ξR =

ξR Z ∞ exp[− 14 (x − y)2 ξR2 ]dy

√

2 π ∞

1 + y2

ξR Z ∞ exp[− 14 (x − y)2 ξR2 ]ydy

√

2 π ∞

1 + y2

√

1

1

2mn awri

= √ , k0 =

,

k

h̄ awri + 1

k0 E

2(E − ER )

,

Γ

s T

awri

ΓT

,

4kB ER T

(2.17)

(2.18)

(2.19)

(2.20)

(2.21)

and the other symbols are: GR , symmetric total parameter; HR , asymmetric total

parameter; Ai and Bi , coefficients of the total background correction; φ0 , phase shift;

ER , energy of resonance R; ΓT , total resonance width; mn , neutron mass; and awri,

23

the mass ratio between the nuclide and the neutron. Since the only temperature

dependence of Eq. 2.24 is in ξR , and ξR only appears in ψ(x, ξR ) and χ(x, ξR ), as a

result, by writing ψ(x, ξR ) and χ(x, ξR ) as ψR (T ) and χR (T ) and expanding them in

terms of T ,

ψR (T ) =

X

aR,i fi (T ),

(2.22)

bR,i hi (T ),

(2.23)

i

χR (T ) =

X

i

one can arrive at an expression for the total cross section in terms of series expansion

of T

σt (E, T ) = AR +

X

a00i fi (T ) +

i

X

b00i hi (T ),

(2.24)

i

where a00i and b00i are parameters specific for a given energy and nuclide.

Through analyzing the asymptotic expansions of both ψ(x, ξR ) and χ(x, ξR ), augmented by numerical investigation [5], a final form for σt (E, T ),

6

X

ai

+

bi T i/2 + c,

σt (E, T ) =

i/2

i=1

i=1 T

6

X

(2.25)

where ai , bi and c are parameters unique to energy, reaction type and nuclide, was

found to give good accuracy for a number of nuclides examined over the temperature

ranges of 77 − 3200K.

As shown in [11], for production MCNP code, the overhead in performance to

incorporate this regression model method over the traditional table lookup method

is only 10% − 20%. However, since for each 0 K energy point of each cross section

type of any nuclide, there are 13 parameters needed, which suggests that the total

size of data for this method is around 13 times of that of standard 0 K cross sections,

or about 13 GB. Although reduced significantly from the table lookup method, this

24

size is still taxing for many hardware systems, such as GPUs.

2.1.3

Explicit temperature treatment method

In essence, the explicit temperature treatment method is not a method for Doppler

broadening, since there is no broadening of any cross section at any point during

the process. However, due to the fact that it does solve the problem of temperature

dependence of cross sections in a clever way, it is also included in this category.

As described in [6], the whole method is based on a concept similar to that of

Woodcock delta-tracking method [12], so essentially it is a type of rejection sampling

method. Specifically, for incident neutron energy E at ambient temperature T , a

majorant cross section for a nuclide n is defined as

Σmaj,n (E) = gn (E, T, awrin )

Σ0tot,n (E 0 ),

max √

√

E 0 ∈[( E−4/λn (T ))2 ,( E+4/λn (T ))2 ]

(2.26)

where gn (E, T, awrin ) is a correction factor for the temperature-initiated increase in

potential scattering cross section of the form

√

1

e−λn (T ) E

√ ,

gn (E, T, awrin ) = [1 +

]erf[λn (T ) E] − √

2λn (T )2 E

πλn (T ) E

2

s

λn (T ) =

awrin

,

kB T

(2.27)

(2.28)

0

(E)

Σ0tot,n (E) = Nn σtot,n

(2.29)

0

and Nn and σtot,n

are the number density and the 0 K total microscopic cross section

of nuclide n. A majorant cross section for a material region with n different nuclides

and a maximum temperature Tm is then defined as

Σmaj (E) =

X

Σmaj,n (E)

n

25

(2.30)

The neutron transport process can subsequently be simulated with the tracking

scheme shown in Algorithm 1.

Algorithm 1 Tracking scheme for explicit temperature treatment method

while true do

starting at position ~r, sample a path length ~l based on the majorant cross section

at ~r, Σmaj (E, ~r)

get a temporary collision point, ~r0 = ~r + ~l

sample target nuclide at position ~r0 , with probability for nuclide n as

Σmaj,n (E, ~r0 )

Pn =

Σmaj (E, ~r0 )

sample the target velocity from the Maxwellian distribution with temperature

Tm (~r0 ), get the energy E 0 corresponding to the relative velocity between neutron

and the target nucleus

Σ0tot,n (E 0 )

:

rejection sampling with criterion ξ <

Σmaj,n (E, ~r0 )

if rejected then

continue

else

set the collision point: ~r ← ~r0

sample the reaction type with energy E 0 and the 0 K microscopic cross

section

continue

end if

end while

Combining the definition of majorant cross section and the tracking scheme, it is

clear that the material majorant cross section is defined such that every single nucleus

in this material is assigned with the maximum possible microscopic cross section (if

the high energy Maxwellian tail can be ignored), and the rejection sampling is then

performed based on the real microscopic cross section from Maxwell distribution.

The dataset needed for this method are the 0 K cross sections and the majorant

microscopic cross sections for the material temperatures of interest, and thus the

storage requirement is on the order of a few GB. However, due to the use of delta

tracking and rejection sampling, the efficiency can be impacted. In fact, as reported

in [13], the runtime of explicit temperature treatment method is 2 − 4 times slower

than the standard table lookup method for a few test cases.

26

2.1.4

Other methods

There are also some other methods that have been developed for Doppler broadening.

They will be discussed briefly below.

The psi-chi method [14] is a single level resonance representation that introduces

a few substantial approximations to Eq. 2.1, including using the single-level BreitWigner formula for the 0 K cross section, omitting the second exponential term,

approximating the exponent in the first exponential term with Taylor series expansion and extending the lower limit of the integration to −∞. The final form of the

Doppler-broadened cross section uses the functions ψ(x, ξR ) and χ(x, ξR ) as defined

in Eq. 2.17 and 2.18. Due to the extensive approximations used, psi-chi method is

not very accurate for cross section evaluation, especially for low energies. Besides,

the single-level Breit-Wigner formalism is now considered obsolete as the resonance

representation for major nuclides.

The Fast-Doppler-Broadening method developed in [15] uses a two-point GaussLegendre quadrature for the integration in Eq. 2.7 when xi+1 − xi > 1.0, and uses

Eq. 2.8 otherwise. This method was found to be 2 − 3 times faster than Cullen’s

method, thus still not efficient enough for on-the-fly Doppler broadening.

Another method that was proposed during the course of this thesis makes use

of the fact that the velocity of target nucleus is usually much smaller than that

of incident neutron velocity. Consequently , the energy corresponding to the relative

velocity between neutron and target nucleus can be approximated as a function of vT µ,

where vT is the velocity of the target nucleus and µ is the cosine between the neutron

velocity and target velocity. It can be proved that vT µ obeys Gaussian distribution,

as a result, the effective Doppler-broadened cross section at temperature T can be

expressed as a convolution of the 0 K cross section and a Gaussian distribution

σ̄(E) =

Z ∞

−∞

σ 0 [Erel (u)]G(u, T )du

27

(2.31)

where u = vT µ and G(u, T ) represents a Gaussian distribution for temperature T .

With the similar energy cut-off strategy as used in Cullen’s method, and by using a

tabulated cumulative distribution function (cdf) for Gaussian distribution, the above

integration can be evaluated numerically and requires much fewer operations than the

Cullen’s method for each energy grid point. However, the inherent inaccuracy for low

energy ranges and the still high computational time makes it unattractive, especially

when compared to the approximate multipole method that will be discussed in the

next chapter, as a result, it was abandoned.

2.2

General purpose computing on GPU

GPUs started as fixed-function hardware dedicated to handle the manipulation and

display of 2D graphics in order to offload the computationally complex graphical calculations from the CPU. Due to the inherent parallel nature of displaying computer

graphics, over the years, more and more parallel processors are added to a single

GPU card and thus make it massively parallel and of tremendously high floating

point operations throughput. Nowadays, GPUs are capable of performing trillions

of floating point operations per second (Teraflops) from a single GPU card, much

higher than that of the high-end CPUs (see Fig. 2-1). In order to utilize this huge

computational power in other areas of computing, some efforts have been made to

transfer GPUs into fully-programmable processors capable of general-purpose computing, among which is NVidia’s CUDA (Compute Unified Device Architecture)[16],

a general-purpose parallel computing architecture. Ever since then, many scientific

applications have been accelerated with GPUs, including some cases for Monte Carlo

neutron transport such as [17, 18, 19, 20]. In the remaining part of this section, the

architectural aspects of GPUs and the CUDA programming model, as well as some

of the performance considerations for GPUs, are briefly reviewed.

28

Figure 2-1: Evolution of GPU and CPU throughput [1].

2.2.1

GPU architecture

GPU architecture is very different from conventional multi-core CPU design, since

GPUs are fundamentally geared towards high-throughput (versus low-latency) computing. As a parallel architecture, it is designed to process large quantities of concurrent, fine-grained tasks.

Fig. 2-2 illustrates the architecture of an NVidia GPU. A typical high performance

GPU usually contains a number of streaming multiprocessors (SMs), each of which

comprises tens of homogeneous processing cores. SMs use single instruction multiple

thread (SIMT) and simultaneous multithreading (SMT) techniques to map threads

of execution onto these cores.

SIMT techniques are architecturally efficient in that one hardware unit for instructionissue can service many data paths and different threads execute in lock step fashion.

However, to avoid the scalability issues of signal propagation delay and underutilization that may happen with a single instruction stream for the entire SM [21], GPUs

29

Figure 2-2: Architecture of an Nvidia GF100 card (Courtesy of Nvidia).

typically implement fixed-size SIMT groupings of threads called warps, and the width

of a warp is usually 32. Distinct warps are not run in lock step and may diverge.

Using SMT techniques, each SM maintains and schedules the execution contexts of

many warps. This style of SMT enables GPUs to hide latency by switching amongst

warp contexts when architectural, data, and control hazards would normally introduce stalls. This leads to a more efficient utilization of the available physical resource,

and the maximal instruction throughput occurs when the number of thread contexts

is much greater than the aggregate number of SIMT lanes per SM.

Communication between threads is achieved by reading and writing data to various shared memory spaces. As shown in Fig. 2-3, GPUs have three levels of explicitly

managed storage that vary in terms of visibility and latency: per-thread registers,

30

shared memory local to a collection of warps running on the same SM, and a large

global (device) memory in off-chip DRAM that is accessible by all threads.

Figure 2-3: GPU memory hierarchy (Courtesy of Nvidia).

Unlike traditional CPU architecture, GPUs do not implement data caches for

the purpose of maintaining the programs working set in nearby, low-latency storage.

Rather, the cumulative register file comprises the bulk of on-chip storage, and a

much smaller cache hierarchy often exists for the primary purpose of smoothing over

irregular memory access patterns. In addition, there are also two special types of readonly memory on GPUs: constant and texture memory. Both are part of the global

memory, but each of them has a corresponding cache that can facilitate the memory

access. In general, constant memory is most efficient when all threads in a warp

access the same memory location, while texture memory is good for memory access

with spatial locality. Since different types of memory have very different latency and

31

bandwidth, as shown in Table 2.1, a proper use of the memory hierarchy is essential

in achieving a good performance for GPU programs.

Table 2.1: Latency and throughput of different types of memory in GPU [2].

Registers

Shared

Global

Constant

memory

memory

memory

Latency

(unit: ∼ 1

∼5

∼ 500

∼

5 with

clock cycle)

caching

Bandwidth

Extremely

High

Modest

High

high

2.2.2

CUDA Programming Model

CUDA is both a hardware architecture and a software platform to expose that hardware at a high level for general purpose computing. CUDA gives developers access

to the virtual instruction set and memory of the parallel computational elements in

GPUs. This platform is accessible through extensions to industry-standard programming languages, including C/C++ and FORTRAN, and CUDA C is used in current

work.

A typical CUDA C program consists of two parts of code: host code and device

code. The host code is similar to any common C program, except that it needs to

take care of memory allocation on GPU, data transfer between CPU and GPU and

offload the computation to GPU. Device code, as suggested by the name, are the code

that executes on the device (GPU). The device code is started with a kernel launch

from the host code, through which a kernel function is executed by a collection of

logical threads on the GPU. These logical threads are mapped onto hardware threads

by a scheduling runtime, either in software or hardware.

In CUDA, all logical threads are organized into a two-level hierarchy, block and

grid, for efficient thread management. Specifically, a block contains a number of

cooperative threads that will be assigned to the same SM, and all the blocks of a

32

kernel form a grid, as shown in Fig. 2-4. During a kernel launch, both block and

grid dimensions have to be specified. The thread execution can then be specialized

by its identifier in the hierarchy, allowing threads to determine which portion of the

problem to operate on.

Figure 2-4: CUDA thread, block and grid hierarchy [1].

The threads within a block share the same local shared memory, since they are

assigned on the same SM, and some synchronization instructions exist to ensure

memory coherence for this per-block shared memory. On the other hand, global

memory, which can be accessed by all the threads within a grid, or threads for a

kernel, is only guaranteed to be consistent at the boundaries between sequential kernel

invocations.

33

2.2.3

GPU performance pitfalls

Although GPUs can deliver very high throughput on parallel computations, they

require large amounts of fine-grained concurrency to do so. In addition, due to the fact

that GPUs have long been a special-purpose processor for graphics, the underlying

hardware can penalize algorithms having irregular and dynamic behavior not typical

of computations related to graphics. In fact, GPU architecture is particularly sensitive

to load imbalance among processing elements. Here, two major performance pitfalls

relevant to current work are discussed: variable memory access cost from SIMT access

patterns and thread divergence.

Non-uniform memory access

Due to the SIMT nature of the GPU programming model, for each load/store instruction executed by a warp in one lock step, there are probably a few accesses to

different memory locations. Different types of physical memory may respond differently to such collective accesses, but they all share one thing in common, that is, each

of them are optimized for some specific access patterns. A mismatched access pattern

often leads to significant underutilization of the physical resource that can be as high

as an order of magnitude.

For global memory, the individual accesses for each thread within a warp can be

combined/bundled together by the memory subsystem into a single memory transaction, if every reference falls within the same contiguous global memory segment. This

is called “memory coalescing”. A a result, if each of the thread references a memory

location of a distinct segment, then many transactions have to be made, which is very

wasteful of the memory bandwidth.

For local shared memory, performance is highest when no threads within the same

warp access different words in a same memory bank. Otherwise the memory accesses

(to the same bank) will be serialized by the hardware, called “bank conflicts”. Bank

34

conflicts is a serious performance hurdle for shared memory and needs to be avoid to

achieve good performance.

Thread divergence

In the GPU programming model, logical threads are grouped into warps of execution.

A single program counter is shared by all threads within the warp. Warps, not threads,

are free to pursue independent paths through the kernel program.

To provide the illusion of individualized control flow, the execution model must

transparently handle branch divergence. This situation occurs when a conditional

branch instruction, like “If...Else...”, would redirect a subset of threads down the “If”

path, leaving the others to continue the “Else” path. Because threads within the

warp proceed in lock step fashion, the warp must necessarily execute both halves of

the branch, making some of the threads idle where appropriate. This mechanism

can lead to an inherently unfair scheduling of logical threads. In the worst case,

only one logical thread may be active while all others threads perform no work. The

GPU’s relatively large SIMT width exacerbates the problem and branch divergence

can impose an order of magnitude slowdown in overall computing throughput.

2.2.4

Floating point precision support

For the early generation of GPU cards, there was only support for single precision

floating numbers and corresponding arithmetic, since accuracy is not a big issue for

image processing and graphics display. However, with the advent of general purpose

GPU computing for scientific purposes, the need for support of double precision floating point arithmetic becomes imperative. As a result, since the introduction of GT200

card and CUDA Computability 1.3, double precision arithmetic has been supported

on most Nvidia high performance GPU cards.

For Nvidia GPUs earlier than Computability 2.0, each SM has only one special

35

function unit (SFU) that can perform double precision computation, while there are

eight units for single precision computation. As a result, on these GPU cards, the

theoretical peak performance of double precision computation is only one eighth of

that of single precision computation. Since CUDA Computability 2.0, more SFUs

that support double precision computation are added to GPUs and nowadays the

theoretical peak performance of double precision computation is usually a half of

that of single precision on a normal Nvidia high performance GPU card.

36

Chapter 3

Approximate Multipole Method

This chapter starts with the description of multipole representation. The formulation of the approximate multipole method for on-the-fly Doppler broadening is then

presented, along with the overlapping energy domains strategy. A systematic study

of a few important parameters in the proposed strategy then follows. The chapter

concludes with a discussion about an important function in the multipole method.

3.1

3.1.1

Multipole representation

Theory of multipole representation

In resonance theory, the reaction cross section for any incident channel c and exit

channel c0 can be expressed in terms of collision matrix Ucc0

σcc0 = πλ̄2 gc |δcc0 − Ucc0 |2 ,

37

(3.1)

where gc and δcc0 are the statistical spin factor and the Kronecker delta, respectively,

and λ̄ is as defined in 2.19. Similarly, the total cross section can be derived as

σt =

X

σcc0 = 2πλ̄2 gc (1 − ReUcc0 ).

(3.2)

c0

The collision matrix can be described by R-matrix representation, which has four

practical formalisms, i.e., single-level Breit-Wigner (SLBW), multilevel Breit-Wigner

(MLBW), Adler-Adler, and Reich-Moore. Because of the rigor of the Reich-Moore

formalism in representing the energy behavior of the cross section, it is used extensively for major actinides in the current ENDF/B format. In Reich-Moore formalism,

the collision matrix can be represented by the transmission probability[22]

Ucc0 = e−i(φc +φc0 ) (δcc0 − 2ρcc0 ),

(3.3)

where ρcc0 is the transmission probability from channel c to c0 , and φc and φc0 are the

hard-sphere phase shift of channel c and c0 , respectively.

Due to the physical condition that the collision matrix must be single-valued and

meromorphic1 in momentum space, the collision matrix and thus the transmission

probability can be rigorously represented by rational functions with simple poles in

√

E domain [7]. This is a generalization of the rationale suggested by de Saussure

and Perez [23] for the s-wave resonances, and lays the foundation for the multipole

representation as described in [7]. In this representation, the neutron-neutron and the

generic neutron to channel c transmission probabilities of the Reich-Moore formalism

for all N resonances with angular momentum number l can be written as

ρnn

√

2M

Pn2M −1 ( E) X

Rnλ

√

√

=

=

2M

P ( E)

E

λ=1 pλ −

1

(3.4)

A meromorphic function is a function that is well behaved except at isolated points. In contrast,

a holomorphic function is a well-behaved function in the whole domain.

38

√

2M

X

|Pc2M −1 ( E)|2

2Rcλ

√

√

|ρnc | =

=

|P 2M ( E)|2

E

λ=1 pλ −

2

(3.5)

where ρnn and ρnc are the transmission probabilities, P represents a holomorphic

function, pλ ’s are the poles of the complex function while Rnλ and Rcλ are the corresponding residues for the transmission probability, and M = (l + 1)N . These two

equations suggest that a resonance with angular momentum number l corresponds

to 2(l + 1) poles. Finding the poles is complicated since they are the roots of a

high order complex polynomial with roots that are often quite close to each other

in momentum space. A code package, WHOPPER, was developed by Argonne National Laboratory to find all the complex poles, making use of good initial guesses

and quadruple precision [7]. Once all poles and residues have been obtained, the 0 K

neutron cross-sections can be computed by substituting Eq. 3.3 - 3.5 into Eq. 3.1 3.2, which yields

(j)∗

where pλ

(x)

N 2(l+1)

X

−iRl,J,λ,j

1 XX

Re[ (j)∗ √ ]

σx (E) =

E l,J λ=1 j=1

pλ − E

(3.6)

(t)

N 2(l+1)

X

−iRl,J,λ,j

1 XX

−2iφl

σt (E) = σp (E) +

Re[e

√ ]

(j)∗

E l,J λ=1 j=1

pλ − E

(3.7)

(x)

is the complex conjugate of the j-th pole of resonance λ, and Rl,J,λ,j and

(t)

Rl,J,λ,j are the residues for reaction type x and total cross section, respectively, and

the potential cross-section σp (E) is given by

σp (E) =

X

4πλ̄2 gJ sin2 φl

(3.8)

l,J

with φl being the the phaseshift. In this form, the cross-sections can be computed by

summations over angular momentum of the channel (l), channel spin (J), number of

resonances (N ) and number of poles associated to a given resonance(2(l + 1)).

39

3.1.2

Doppler broadening

By casting the expression for cross section into the form of Lorentzian-like terms

as shown in Eq. 3.6 and 3.7, the Doppler broadened cross section can be derived in

analytical forms consisting of well-known functions, as demonstrated in [7]. Specially,

with the use of Solbrig kernel [24],

√ √

S( E, E 0 ) =

√

√

√

√

√

( E− E 0 )2

( E+ E 0 )2

E0

[−

]

[−

]

2

2

∆

∆

m

m

√ {e

−e

}

∆m πE

(3.9)

s

kB T

is the Doppler width in momentum space, and kB is the Boltzawri

mann constant, Eq. 3.6 and 3.7 can be Doppler broadened to take the following

where ∆m =

form

(x)

√

(j)∗

√ (x)

iRl,J,λ,j

E p

N 2(l+1)

X Re[ πRl,J,λ,j W(z0 ) + √π C( ∆m , ∆λm )]

1 XX

σx (E, T ) =

E l,J λ=1 j=1

∆m

σt (E, T ) = σp (E) +

1

E

N 2(l+1)

XX

X

√ (t)

Re{e−2iφl [ πRl,J,λ,j W(z0 ) +

(3.10)

√

(j)∗

iRl,J,λ,j

E pλ

√

C(

,

)]}

π

∆m ∆m

(t)

∆m

l,J λ=1 j=1

(3.11)

where

√

z0 =

(j)∗

E − pλ

,

∆m

(3.12)

W(z) is the Faddeeva function, defined as

2

i Z ∞ e−t dt

W(z) =

,

π −∞ z − t

40

(3.13)

and the quantity related to C can be regarded as the low energy correction term,

with the full expression

√

√

2

(j)∗

(j)∗

−2i pλ − ∆E2 Z ∞

E pλ

e−t −2 Et/∆m

,

)= √

e m

C(

dt (j)∗

.

∆m ∆m

π∆m ∆m

0

[pλ /∆m ]2 − t2

(3.14)

Since this correction term is generally negligible for energy above the thermal region

[25], and only energy above 1 eV are of interest in this thesis, this correction term

will be ignored from now on. As a result, the above equations become

√

N 2(l+1)

X

π

1 XX

(t)

Re[Rl,J,λ,j W(z0 )],

σx (E) =

E l,J λ=1 j=1 ∆m

√

N 2(l+1)

X

1 XX

π

(t)

σt (E) = σp (E) +

Re[e−2iφl Rl,J,λ,j W(z0 )],

E l,J λ=1 j=1 ∆m

(3.15)

(3.16)

and Doppler broadening a cross section at a given energy E is thus reduced to a

summation over all poles, each with a separate Faddeeva function evaluation.

3.1.3

Characteristics of poles

As mentioned in 3.1.1, each resonance with angular momentum l corresponds to in

total 2(l + 1) poles. Among them, two are called “s-wavelike” poles, and their real

part are of the opposite sign but have the same value, which is close to the square

root of the resonance energy, while their imaginary part are very small; the other 2l

poles behave like l conjugate pairs featuring large imaginary part with a characteristic

1

magnitude of

, where a is the channel radius and k0 is defined in Eq. 2.19. Fig.

k0 a

3-1 shows the pole distribution of U238, which has both l = 0 and l = 1 resonances,

to demonstrate the relative magnitude of different type of poles.

In ENDF/B resonance parameters, for most major nuclides, there are usually a

number of artificial resonances (called “external resonances”) outside of the resolved

resonance regions. Since they are general s-wave resonances, each of them also cor41

600

400

Im(p)

200

0

−200

−400

−600

−200

−150

−100

−50

0

Re(p)

50

100

150

200

Figure 3-1: Poles distribution for U238. Black and red dots represent the poles with

l = 0 and with positive and negative real parts, respectively, and green dots represent

the poles with l > 0.

responds to two poles, the same as an ordinary s-wave resonance. Besides, for the

external resonances that are above the upper bound of resolved resonance region, the

value of poles also obey the same rule as that for the ordinary s-wave, while for those

that have negative resonance energies, the two poles are of opposite sign and the absolute value of the real part is very small and that of the imaginary part is relatively

large, as demonstrated by the black and red dots (almost) along the imaginary axis

in Fig. 3-1.

3.1.4

Previous efforts on reducing poles to broaden

To reduce the number of poles to be broadened when evaluating cross sections for

elevated temperatures, an approach was proposed to replace half of the first type of

42

poles with a few pseudo-poles[25]. Specifically, it was noticed that the first type of

poles with negative real part have very smooth contributions to the resolved resonance

energy range, therefore, the summation of the contribution from these poles are also

smooth and can be approximated by a few smooth functions. Moreover, it turned

out that rational functions that are of the same form as the pole representation (Eq.

3.6 and 3.7) works very well as the fitting function. Each of these rational functions

effectively defines a “pseudo-pole”. It was found that only three of such pseudopoles are necessary to achieve a good accuracy for the ENDF/B-VI U238 evaluation

[25]. In addition, the contribution from the second type of poles were found to be

temperature independent due to their exceedingly large Doppler widths, therefore

they do not need to be broadened for elevated temperatures. As a result, only half

of the first type of poles, which is the same as the number of resonances (including

the external resonances), plus three pseudo-poles, are left to be broadened, which

effectively reduces the number of poles to be broadened.

For the new ENDF/B-VII U238 evaluational, there are 3343 resonances in total.

A preliminary study shows that around 10 pseudo-poles are needed to approximate

the contributions from the first type of poles with negative real part. Therefore, a

total of around 3353 poles need to be broadened.

In general, this is a good strategy in increasing the computational efficiency of

Doppler broadening process. However, the number of poles to be broadened is still

too large to be practical for performing Doppler broadening on the fly. Therefore, a

new strategy is proposed during the course of this thesis to further reduce the number

of poles to be broadened and will be discussed in the following sections.

43

3.2

3.2.1

Approximate multipole method

Properties of Faddeeva function and the implications

From the definition of Faddeeva function in Eq. 3.13, it can be approximated as a

Gauss-Hermite quadrature

M

aj

i X

,

W(z) ≈

π j=1 z − tj

(3.17)

where aj and tj are the Gauss-Hermite weights and nodes, respectively. As demonstrated in [26], the following number of quadrature points are sufficient to ensure a

relative error less than 10−5 :

M =

6,

3.9 < |z| < 6.9

4, 6.9 < |z| < 20.0

(3.18)

2, 20.0 < |z| < 100

1,

|z| ≥ 100 .

The nodes and weights for Gauss-Hermite quadrature of degree one are 0 and

√

respectively. Therefore, if |

√

π,

(j)∗

E−pλ

∆m

| ≥ 100, then

√

√

(j)∗

π

E − pλ

W[

]

∆m

∆m

√

≈

=

√

i

π · ∆m

· √

∆m π E − p(j)∗

λ

−i

√ .

(j)∗

pλ − E

π

By comparing to the 0 K expression in Eq. 3.6, this suggests that from a practical

point of view, the Doppler broadening effect is only significant to within a range of ∼

100 Doppler width away from a pole in the complex momentum domain [25]. In other

words, to calculate the cross section of any energy point for a certain temperature,

one can first sum up the contribution at 0 K from all those poles that are 100 Doppler

44

widths away from this energy, since this part is independent of temperature; and then

broaden the other neighboring poles for the desired temperature similar to Eq. 3.15

or Eq. 3.16. Taking the cross section evaluation of U238 at 1 KeV at 3000 K as an

example, 100 Doppler widths corresponds to about three in the momentum domain,

within which there are only around 470 poles. Compared to the total number to be

evaluated from 3.1.4, it is clear that this strategy can effectively reduce the number

of poles to be broadened on the fly.

As mentioned in 3.1.3, the second type of poles usually have very large imaginary

parts that are much greater than the Doppler width, therefore, they can be treated

as temperature independent. As to the first type of poles, the ones with negative real

parts in general can be treated as temperature independent too2 , since cross sections

are always computed in the complex domain with positive real parts. In addition,

those poles from negative resonances can also be treated as temperature independent,

since they usually have large imaginary parts. As a result, one is now left with only

those first type of poles with positive real part from positive resonances, which are

denoted as “principal poles” henceforth.

Fig. 3-2 and 3-3 show the relative errors of total cross section of U238 and U235

at 3000 K calculated with broadening only the principal poles against the NJOY

data. All results are based on the ENDF/B-VII resonance parameters. In general,

the agreement between the multipole representation and NJOY is very good, except

for some local minimum points (e.g. very low absolute cross section values) in U238

which have little practical importance. From now on, the cross sections calculated by

broadening all principal poles will be used as the reference multipole representation

cross section, unless otherwise noted.

An attractive property of the principal poles is that the input arguments to the

2

Strictly speaking, this property does not hold for poles that are very close to the origin and

when evaluating cross sections for very low energy, but this energy range is not of interest in current

work.

45

Reference

Calculated

RelErr 105

104

103

102

101

100

10-1

10-2

10-3

10-4

10-5

10-6

10-7

10-8

10-9

Cross section (barn)

102

101

100

10-1

10-2

10-3

Relative Error

10

3

101

104

102

103

E (eV)

Figure 3-2: Relative error of U238 total cross section at 3000K broadening only

principal poles compared with NJOY data.

Faddeeva function from these poles always fall in the upper half plane of the complex

domain, where the Faddeeva function is well-behaved, as shown in Fig. 3-4. In this

region, due to the presence of the exponential term, the Faddeeva function decreases

rapidly with increasing |z|. This property indicates that everything else being the

same, faraway poles in general have a much smaller contribution than the nearby

poles to a given energy point. Therefore, even though some poles may not lie outside

of the 100 Doppler width range, since their contribution is so small and the variation

in temperature is even smaller, these poles may also be treated as temperature independent. This can further reduce the number of poles to be broadened. However,

since the relative importance in contribution from different poles also depends on the

value of their residue, this effect has to be studied on a nuclide by nuclide basis, which

will be discussed later.

46

1

RelErr 10

Reference

Calculated

100

10-1

10-2

10-3

10-4

101

10-5

Relative Error

Cross section (barn)

102

10-6

10-7

100

10-8

101

102

103

E (eV)

Figure 3-3: Relative error of U235 total cross section at 3000 K broadening only

principal poles against NJOY data.

3.2.2

Overlapping energy domains strategy

Since the imaginary part of principal poles are very small, the value of the real

part is the major deciding factor of whether a pole is close to or far away from an

energy point to be evaluated and thus whether it is significant in terms of Doppler

broadening effect. As a result, for any given energy E at which the cross section needs

to be evaluated, the principal poles that are close to it in momentum space should be

consecutive in energy domain, therefore, if the principal poles are sorted by their real

part, then the poles that are close to E can be specified with a start and end index.

This property makes the storage of information very convenient.

As a result, one can divide the resolved resonance region of any nuclide into many

equal-sized small energy intervals (so that a direct index fetch instead of a binary

47

0.99

0.88

10

0.77

Im(x)

8

0.66

0.55

6

0.44

4

0.33

0.22

2

0.11

0

−10

−5

5

0

10

0.00

Re(x)

(a) Real part

0.540

10

0.405

0.270

8

Im(x)

0.135

6

0.000

−0.135

4

−0.270

−0.405

2

−0.540

0

−10

−5

5

0

10

Re(x)

(b) Imaginary part

Figure 3-4: Faddeeva function in the upper half plane of complex domain

search can be used). For each interval, there are only a certain number of local poles

(including those inside the interval) that have broadening effect on this interval, and

these poles can be recorded with their indices. For the other poles, their contribution

to this interval can be pre-calculated. Due to the fact that the Faddeeva function is

smooth away from origin, the accumulated contribution will also be smooth and can

be estimated with a low order polynomial. Since the potential part of the total cross

section is very smooth as well, it can also be included in the background cross section.

48

Therefore, when evaluating the cross section, one needs only to broaden those local

poles, and to add the background cross section approximated from the polynomials.

This can be expressed as

√

1 X π

(x)

σx (E) = px (E) +

Re[Rl,J,λ,j W(z0 )]

E pλ ∈Ω ∆m

√

1 X π

(t)

Re[e−2iφl Rl,J,λ,j W(z0 )]

σt (E) = pt (E) +

E pλ ∈Ω ∆m

(3.19)

(3.20)

where px (E) and pt (E) represent the polynomial approximation of background cross

section, and pλ ∈ Ω represent the poles that need to be broadened on the fly.

To ensure the smoothness and temperature independence of the background cross

section in the energy interval (inner window), the poles that are within a certain

distance away from both edges of the energy interval also have to be broadened on

the fly. To this end, an overlapping energy domains strategy is used, i.e., outside of

the inner window, an equal size window (outer window) in energy is chosen and the

poles within both the inner and outer windows need to be broadened on the fly, as

demonstrated in Fig. 3-5. The size of the outer and inner window affects the number

of poles to be broadened on the fly, as well as the storage size and accuracy, which

will be studied in later parts of the thesis.

p(E)

outer

window

inner

window

outer

window

Figure 3-5: Demonstration of overlapping window.

3.3

Outer and inner window size

In the current overlapping energy domains strategy, to evaluate the cross section for

a given energy, one needs to broaden a number of poles lying inside the inner and

49

outer windows, as well as to calculate the background cross section. It is anticipated

that the major time component for this method will be the broadening of poles, so

the number of poles is an important metric to be monitored.

In general, the outer window mainly determines the number of poles to be evaluated, therefore, a smaller outer window is always preferred, provided the accuracy

criterion is met. As to the inner window size, although it also affects the number of

poles to be broadened, this is usually less significant compared to the outer window

size. Instead, its major impact is on the storage size of the supplemental information

other than poles and residues. As mentioned before, for each inner window, two indices of the poles to be broadened need to be stored, in addition to the polynomial

coefficients. The number of inner windows depends both on the length of resolved

resonance region and the inner window size. Since the resolved resonance region is

fixed for each nuclide, the larger the inner window, the fewer inner windows there are,

and thus less data to be stored. However, as the inner window gets larger, a higher

order polynomial may be necessary to ensure a certain accuracy for the background

cross section, which may also increase the storage size. As a result, there is a tradeoff

between performance and storage size for inner window size, which adds complexity.

As a starting point, the inner window size is set to be the average spacing of the

resonances for each nuclide (e.g. 5.98 eV for U238 and 0.70 eV for U235). There

are two advantages for this choice: first, there is in general only one pole lying in

the inner window, which poses a very minimal performance overhead; second, the

number of inner window is bounded with the number of resonances of each nuclide,

which is at most a few thousand in current ENDF/B resonance data, even though

the resolved resonance regions of different nuclides can span from a few hundred eV

to a few MeV. For the background cross section, a second-order polynomial is used

for the approximation, unless otherwise noted. To account for the 1/v effect of cross

sections for low energy, a smaller inner window size, currently 0.2 eV, is used for

50

energies below 10 eV.

In this study of inner and outer window size, two nuclides, U238 and U235, are

used, due both to their importance in nuclear reactor simulation and to the large

number of resonances they have that poses a challenge to the current method.

To quantify the accuracy, a set of cross sections for these two nuclides with the

same energy grid as that from NJOY (with the same temperature) are prepared as

the reference, by broadening all the principal poles for the desired temperatures and

accumulating the 0 K contributions from all other poles. For each nuclide, the cross

sections are evaluated for a fixed set of equal-lethargy energy points (∆ξ = 0.0001)

between 1 eV and the upper bound of the resolved resonance of this nuclide using the

overlapping energy domains strategy with the specific inner and outer window size.

The root mean square (RMS) of the relative cross section error with respect to the

reference, the RMS of the relative error weighted by the absolute difference in cross

section, and the maximum relative error are used together as the error quantification.

Assuming the total

points evaluated is N , then they can

s Pnumber of crossssection

PN

N

2

2

i=1 (RelErri )

i=1 AbsErri (RelErri )

,

and max RelErri ,

be expressed as

i=0,1,...,N

N

N

respectively. The second metric is introduced mainly to put less emphasis on cross

sections that are very small, since a low level of relative error is not usually necessary

for these cross sections in real application.

3.3.1

Outer window size

From the analysis of 3.2.1, the Doppler effect of a resonance (with pole p at energy

ER ) to an energy point E0 depends mainly on the magnitude of the corresponding

input argument to Faddeeva function, which in this case is

√

E0 − p∗

∆m

√

≈

E0 −

q

√

ER

kT

awri

51

∝

∝

√

∆ E

√

T

∆E

√

,

ET

(3.21)

This suggests that, everything else being the same, for a given nuclide, the outer

√

window size (in energy domain) should increase proportional to ET to ensure that

all those poles with significant Doppler effect are taken into account. However, as

pointed out before, as the outer window size increases, the contribution from the poles

outside the window becomes very small, and the varying contribution due to Doppler

broadening may become negligible. Therefore, the exact functional dependence on

energy or temperature may be of a different form. In the remaining part of this

section, the energy effect alone will first be studied, followed by the temperature

effect.

Energy Dependence

Since the cross section at an energy point is mostly affected by nearby resonances,

the average spacing of resonances also seems to be a good choice as the characteristic

length of the outer window. To get an idea of the minimum size of the outer window,

a constant of twice of the average resonance spacing is used to evaluate the total cross

section of U238 at 3000 K for the whole resolved resonance region. Fig. 3-6 shows the

relative error of the calculated total cross section with the overlapping energy domains

strategy compared with the corresponding reference cross section. It is clear that the

outer window size is large enough to achieve a very good accuracy for energies up to

1 KeV, but the error increases significantly for higher energy, which indicates that a

larger outer window size is needed for this energy range.

To confirm that the large error at high energy indeed comes from the Doppler

effect of poles that should have been included in the outer window, the relative error

of the background cross section approximation is also analyzed. The approximation is

52

10