CSE 431/531: Analysis of Algorithms

Lectures 9-13: Divide-and-Conquer

Lecturer: Shi Li

Department of Computer Science and Engineering, University at Buffalo

Spring 2016, MoWeFr 3:00-3:50pm, Knox 110

Outline

1. Divide-and-Conquer
2. Solving Recurrences
3. Counting Inversions
4. Polynomial Multiplication
5. Strassen’s Algorithm for Matrix Multiplication
6. Quicksort
     Randomized Quicksort
     Average-Case Analysis
7. Lower Bound for Comparison-Based Sorting Algorithms
8. Selection Problem

Divide-and-Conquer

Divide: Divide the instance into many smaller instances

Conquer: Solve each of the smaller instances recursively and separately

Combine: Combine the solutions to the smaller instances to obtain a solution for the original big instance

merge-sort(A, n)

1  if n = 1 then
2      return A
3  else
4      B ← merge-sort(A[1..⌊n/2⌋], ⌊n/2⌋)
5      C ← merge-sort(A[⌊n/2⌋+1..n], ⌈n/2⌉)
6      return merge(B, C, ⌊n/2⌋, ⌈n/2⌉)

Divide: trivial

Conquer: lines 4, 5

Combine: line 6

Merge Two Sorted Arrays

First array:  3 8 12 20 32 48

Second array: 5 7 9 25 29

The smaller of the two front elements is moved to the output at each step:

3
3 5
3 5 7
3 5 7 8
3 5 7 8 9 12 20 25 29
3 5 7 8 9 12 20 25 29 32 48

merge(B, C, n1, n2)

A ← []; i ← 1; j ← 1
while i ≤ n1 and j ≤ n2
    if B[i] ≤ C[j] then
        append B[i] to A; i ← i + 1
    else
        append C[j] to A; j ← j + 1
if i ≤ n1 then append B[i..n1] to A
if j ≤ n2 then append C[j..n2] to A
return A
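The merge-sort and merge pseudocode can be sketched in Python as follows. This is a minimal illustration, not the lecture's own code: it uses 0-based lists, takes lengths from the lists instead of passing n, n1 and n2, and also accepts an empty list, which the pseudocode does not consider.

```python
def merge(b, c):
    """Merge two sorted lists into one sorted list in O(len(b) + len(c)) time."""
    a = []
    i = j = 0
    while i < len(b) and j < len(c):
        if b[i] <= c[j]:
            a.append(b[i])
            i += 1
        else:
            a.append(c[j])
            j += 1
    a.extend(b[i:])  # at most one of these two tails is non-empty
    a.extend(c[j:])
    return a


def merge_sort(a):
    """Return a sorted copy of a."""
    if len(a) <= 1:                 # base case (also covers the empty list)
        return list(a)
    mid = len(a) // 2               # floor(n/2), as in the pseudocode
    b = merge_sort(a[:mid])         # conquer: left half
    c = merge_sort(a[mid:])         # conquer: right half
    return merge(b, c)              # combine


print(merge([3, 8, 12, 20, 32, 48], [5, 7, 9, 25, 29]))
```

The slicing copies each half, so this version uses O(n) extra space per level; that keeps it close to the pseudocode, at the cost of some constant-factor overhead.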

Recurrence for Running Time for Merge-Sort

T(n): running time for sorting an array of size n

$$T(n) = \begin{cases} c & \text{if } n = 1 \\ T(\lfloor n/2 \rfloor) + T(\lceil n/2 \rceil) + cn & \text{if } n \ge 2 \end{cases}$$

Ignoring floors and ceilings:

$$T(n) = \begin{cases} c & \text{if } n = 1 \\ 2T(n/2) + cn & \text{if } n \ge 2 \end{cases}$$

In asymptotic notation:

$T(n) = 2T(n/2) + O(n)$

Methods for Solving Recurrences

The recursion-tree method

The substitution method

The master method

Recursion-Tree Method

$T(n) = 2T(n/2) + O(n)$

Recursion tree (subproblem sizes at each level):

level 0: n
level 1: n/2  n/2
level 2: n/4  n/4  n/4  n/4
level 3: n/8  n/8  n/8  n/8  n/8  n/8  n/8  n/8
...

Each level takes running time $O(n)$

There are $O(\lg n)$ levels

Running time = $O(n \lg n)$

Recursion-Tree Method

$T(n) = 4T(n/2) + O(n)$

Recursion tree (every node has 4 children of half its size):

level 0: 1 node of size n
level 1: 4 nodes of size n/2
level 2: 16 nodes of size n/4
level 3: 64 nodes of size n/8
...

Total running time at level i? $\frac{n}{2^i} \times 4^i = 2^i n$

Total number of levels? $\log_2 n + 1$ (levels $i = 0, 1, \ldots, \log_2 n$)

Total running time?

$$\sum_{i=0}^{\log_2 n} 2^i n = n\left(2^{\log_2 n + 1} - 1\right) = n(2n - 1) = O(n^2).$$
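The level-by-level sum can be checked numerically. The sketch below makes assumptions that are not in the slides: the O(n) term is taken to be exactly n, the base case is T(1) = 1, and n is a power of 2. The names `t` and `level_sum` are illustrative only.

```python
def t(n):
    """T(n) = 4 T(n/2) + n with T(1) = 1, for n a power of 2 (assumed constants)."""
    if n == 1:
        return 1
    return 4 * t(n // 2) + n


def level_sum(n):
    """Sum over levels i = 0..log2(n) of (4^i nodes) * (n / 2^i work per node)."""
    levels = n.bit_length() - 1          # log2(n) when n is a power of 2
    return sum((4 ** i) * (n // 2 ** i) for i in range(levels + 1))


for k in range(6):
    n = 2 ** k
    # the three values agree: recurrence, recursion-tree sum, closed form n(2n - 1)
    print(n, t(n), level_sum(n), n * (2 * n - 1))
```

Under these assumptions the recurrence, the recursion-tree sum and the closed form n(2n − 1) coincide exactly, which matches the O(n²) bound.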

Substitution Method

1. Guess the form of the solution

2. Use mathematical induction to find the constants

Ex: $T(n) = 2T(n/2) + O(n)$

Ex: $T(n) = 4T(n/2) + O(n^3)$

Ex: $T(n) = T(n/5) + T(7n/10) + O(n)$
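As an illustration of the two steps, here is one way the first example could be worked out. The constant $d$ (hiding the $O(n)$ term) and the constant $c$ in the guess are names introduced here, and floors and ceilings are ignored; this is a sketch, not necessarily the derivation given in lecture.

```latex
% Recurrence with an explicit constant: T(n) \le 2T(n/2) + dn for n \ge 2.
% Step 1 (guess): T(n) \le c\, n \lg n for some constant c.
% Step 2 (induction): assume T(m) \le c\, m \lg m for all 2 \le m < n. Then
\begin{align*}
T(n) &\le 2\,T(n/2) + dn \\
     &\le 2 \cdot c \cdot \frac{n}{2} \lg\frac{n}{2} + dn \\
     &= c\,n(\lg n - 1) + dn \\
     &= c\,n \lg n - (c - d)\,n \\
     &\le c\,n \lg n \qquad \text{whenever } c \ge d.
\end{align*}
% Base case: \lg 1 = 0, so start the induction at small n \ge 2
% and choose c large enough that T(n) \le c\, n \lg n holds there.
```

The induction step fixes the constraint $c \ge d$; the base case may force $c$ to be larger still, which is fine because only the existence of some constant matters.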

Master Theorem

$a \ge 1$, $b > 1$, $c \ge 0$: constants

$T(n) = aT(n/b) + O(n^c)$

Then,

$$T(n) = \begin{cases} O(n^{\log_b a}) & \text{if } c < \log_b a \\ O(n^c \lg n) & \text{if } c = \log_b a \\ O(n^c) & \text{if } c > \log_b a \end{cases}$$

Ex: $T(n) = 4T(n/2) + O(n^2)$. Case 2. $T(n) = O(n^2 \lg n)$

Ex: $T(n) = 3T(n/2) + O(n)$. Case 1. $T(n) = O(n^{\log_2 3})$

Ex: $T(n) = T(n/2) + O(1)$. Case 2. $T(n) = O(\lg n)$

Ex: $T(n) = 2T(n/2) + O(n^2)$. Case 3. $T(n) = O(n^2)$
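The case analysis is mechanical enough to sketch as a small helper. The function name `master_theorem` and its return format are assumptions made for this illustration; the comparison of c with log_b a uses a floating-point tolerance, so it is only reliable for well-separated or exactly representable exponents.

```python
import math


def master_theorem(a, b, c):
    """Classify T(n) = a T(n/b) + O(n^c).

    Returns (case, exponent, has_log_factor), meaning
    T(n) = O(n^exponent * lg n) if has_log_factor else O(n^exponent).
    """
    if a < 1 or b <= 1 or c < 0:
        raise ValueError("need a >= 1, b > 1, c >= 0")
    critical = math.log(a, b)                      # log_b a
    if math.isclose(c, critical, abs_tol=1e-12):   # tolerance-based equality test
        return 2, c, True                          # all levels cost the same
    if c < critical:
        return 1, critical, False                  # bottom level dominates
    return 3, c, False                             # top level dominates


# The four examples from the slide: (a, b, c)
for params in [(4, 2, 2), (3, 2, 1), (1, 2, 0), (2, 2, 2)]:
    print(params, master_theorem(*params))
```

For the four examples this reports cases 2, 1, 2 and 3, in agreement with the bounds listed above.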

Recursion Tree for Master Theorem

Each node at level i is a subproblem of size $n/b^i$ and costs $(n/b^i)^c$:

level 0: 1 node, each costing $n^c$; level total $n^c$
level 1: $a$ nodes, each costing $(n/b)^c$; level total $\frac{a}{b^c} n^c$
level 2: $a^2$ nodes, each costing $(n/b^2)^c$; level total $\left(\frac{a}{b^c}\right)^2 n^c$
level 3: $a^3$ nodes, each costing $(n/b^3)^c$; level total $\left(\frac{a}{b^c}\right)^3 n^c$
...

$c < \log_b a$: bottom-level dominates: $\left(\frac{a}{b^c}\right)^{\log_b n} n^c = n^{\log_b a}$

$c = \log_b a$: all levels are the same: $n^c \log_b n = O(n^c \lg n)$

$c > \log_b a$: top-level dominates: $O(n^c)$
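The three cases can be read off from a single geometric series. The ratio name $r$ is introduced here for illustration, and the $O(n^c)$ term is treated as exactly $n^c$ (constant factors dropped), so this is a sketch of the argument, not a full proof.

```latex
\begin{align*}
T(n) &\approx \sum_{i=0}^{\log_b n} \left(\frac{a}{b^c}\right)^{i} n^c
      = n^c \sum_{i=0}^{\log_b n} r^{i}, \qquad r = \frac{a}{b^c}. \\[4pt]
r > 1 \;(c < \log_b a):\quad
  & \sum_{i=0}^{\log_b n} r^i = \Theta\!\left(r^{\log_b n}\right),
    \quad n^c \cdot r^{\log_b n} = a^{\log_b n} = n^{\log_b a}. \\
r = 1 \;(c = \log_b a):\quad
  & \sum_{i=0}^{\log_b n} r^i = \log_b n + 1,
    \quad T(n) = O(n^c \lg n). \\
r < 1 \;(c > \log_b a):\quad
  & \sum_{i=0}^{\log_b n} r^i \le \frac{1}{1 - r} = O(1),
    \quad T(n) = O(n^c).
\end{align*}
```

The identity $a^{\log_b n} = n^{\log_b a}$ used in the first case follows by taking $\log_b$ of both sides.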

Def. Given an array A of n integers, an inversion of A is a pair $(i, j)$ of indices such that $i < j$ and $a_i > a_j$.

Counting Inversions

Input: a sequence $a_1, a_2, \ldots, a_n$ of n distinct numbers

Output: number of inversions

Example:

Input:  10 8 15 9 12

Sorted: 8 9 10 12 15

4 inversions (for convenience, using numbers, not indices): (10, 8), (10, 9), (15, 9), (15, 12)

Naive Algorithm for Counting Inversions

count-inversions(A, n)

c ← 0
for every i ← 1 to n − 1
    for every j ← i + 1 to n
        if A[i] > A[j] then c ← c + 1
return c

Divide-and-Conquer

A: split into a left half B and a right half C

Recursion: inversions(A) = inversions(B) + inversions(C) + m

$m = \left|\{(i, j) : 1 \le i \le \lfloor n/2 \rfloor,\ \lfloor n/2 \rfloor < j \le n,\ A[i] > A[j]\}\right|$

Computing m in $O(n^2)$ time: $T(n) = 2T(n/2) + O(n^2)$, so $T(n) = O(n^2)$: bad

Computing m in $O(n)$ time: $T(n) = 2T(n/2) + O(n)$, so $T(n) = O(n \lg n)$: good

Counting Inversions between B and C

Count pairs i, j such that B[i] > C[j]:

B: 3 8 12 20 32 48

C: 5 7 9 25 29

Merge B and C. Each time an element of B is appended to the output, add the number of elements of C already appended:

output so far                     total
3                                 0
3 5 7 8                           2
3 5 7 8 9 12                      5
3 5 7 8 9 12 20                   8
3 5 7 8 9 12 20 25 29 32          13
3 5 7 8 9 12 20 25 29 32 48       18

Count Inversions between B and C

inversions-between(B, C, n1, n2)

count ← 0
A ← []; i ← 1; j ← 1
while i ≤ n1 or j ≤ n2
    if j > n2 or (i ≤ n1 and B[i] ≤ C[j]) then
        append B[i] to A; i ← i + 1
        count ← count + (j − 1)
    else
        append C[j] to A; j ← j + 1
return (A, count)

Count Inversions in A

inversions(A, n)

1  if n = 1 then
2      return (A, 0)
3  else
4      (B, m1) ← inversions(A[1..⌊n/2⌋], ⌊n/2⌋)
5      (C, m2) ← inversions(A[⌊n/2⌋+1..n], ⌈n/2⌉)
6      (A, m3) ← inversions-between(B, C, ⌊n/2⌋, ⌈n/2⌉)
7      return (A, m1 + m2 + m3)

Divide: trivial

Conquer: lines 4, 5

Combine: lines 6, 7
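The two procedures translate fairly directly into Python. This is a minimal sketch with 0-based lists: `count += j` plays the role of `count + (j − 1)` in the 1-based pseudocode, and lengths come from the lists instead of being passed as n1 and n2.

```python
def inversions_between(b, c):
    """Merge sorted lists b and c; count pairs (x in b, y in c) with x > y."""
    merged, count = [], 0
    i = j = 0
    while i < len(b) or j < len(c):
        if j == len(c) or (i < len(b) and b[i] <= c[j]):
            merged.append(b[i])
            i += 1
            count += j          # the j elements of c already merged are smaller
        else:
            merged.append(c[j])
            j += 1
    return merged, count


def inversions(a):
    """Return (sorted copy of a, number of inversions in a)."""
    if len(a) <= 1:
        return list(a), 0
    mid = len(a) // 2
    b, m1 = inversions(a[:mid])            # inversions inside the left half
    c, m2 = inversions(a[mid:])            # inversions inside the right half
    merged, m3 = inversions_between(b, c)  # inversions across the halves
    return merged, m1 + m2 + m3


print(inversions([10, 8, 15, 9, 12]))
```

On the example from the definition, the count is 4, and on the B and C arrays from the walkthrough `inversions_between` returns a count of 18.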

Polynomial Multiplication

Input: two polynomials of degree n − 1

Output: product of two polynomials

Example:

$$\begin{aligned}
& (3x^3 + 2x^2 - 5x + 4) \times (2x^3 - 3x^2 + 6x - 5) \\
&= 6x^6 - 9x^5 + 18x^4 - 15x^3 \\
&\quad + 4x^5 - 6x^4 + 12x^3 - 10x^2 \\
&\quad - 10x^4 + 15x^3 - 30x^2 + 25x \\
&\quad + 8x^3 - 12x^2 + 24x - 20 \\
&= 6x^6 - 5x^5 + 2x^4 + 20x^3 - 52x^2 + 49x - 20
\end{aligned}$$

Input: (4, −5, 2, 3), (−5, 6, −3, 2)

Output: (−20, 49, −52, 20, 2, −5, 6)

Naive Algorithm

polynomial-multiplication(A, B, n)

let C[k] = 0 for every k = 0, 1, 2, ..., 2n − 2
for i ← 0 to n − 1
    for j ← 0 to n − 1
        C[i + j] ← C[i + j] + A[i] × B[j]
return C

Running time: $O(n^2)$
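A direct Python rendering of the naive algorithm, as a small sketch. It assumes both coefficient lists have the same length n ≥ 1 and store the constant term first, matching the input/output example above.

```python
def polynomial_multiplication(a, b):
    """Multiply two polynomials given as coefficient lists (constant term first).

    Assumes len(a) == len(b) == n >= 1; runs in O(n^2) time.
    """
    n = len(a)
    c = [0] * (2 * n - 1)           # the product has degree 2n - 2
    for i in range(n):
        for j in range(n):
            c[i + j] += a[i] * b[j]
    return c


print(polynomial_multiplication([4, -5, 2, 3], [-5, 6, -3, 2]))
# prints [-20, 49, -52, 20, 2, -5, 6]
```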

Divide-and-Conquer for Polynomial Multiplication

$p(x) = 3x^3 + 2x^2 - 5x + 4 = (3x + 2)x^2 + (-5x + 4)$

$q(x) = 2x^3 - 3x^2 + 6x - 5 = (2x - 3)x^2 + (6x - 5)$

$p(x)$: degree of $n - 1$ (assume n is even)

$p(x) = p_H(x)\,x^{n/2} + p_L(x)$,

$p_H(x), p_L(x)$: polynomials of degree $n/2 - 1$.

$$\begin{aligned}
pq &= \left(p_H x^{n/2} + p_L\right)\left(q_H x^{n/2} + q_L\right) \\
   &= p_H q_H x^n + \left(p_H q_L + p_L q_H\right) x^{n/2} + p_L q_L
\end{aligned}$$

multiply(p, q) = multiply(pH, qH) × x^n
               + (multiply(pH, qL) + multiply(pL, qH)) × x^(n/2)
               + multiply(pL, qL)

Recurrence: $T(n) = 4T(n/2) + O(n)$

$T(n) = O(n^2)$

Reduce Number from 4 to 3

$$\begin{aligned}
pq &= \left(p_H x^{n/2} + p_L\right)\left(q_H x^{n/2} + q_L\right) \\
   &= p_H q_H x^n + \left(p_H q_L + p_L q_H\right) x^{n/2} + p_L q_L
\end{aligned}$$

$p_H q_L + p_L q_H = (p_H + p_L)(q_H + q_L) - p_H q_H - p_L q_L$

Divide-and-Conquer for Polynomial Multiplication

rH = multiply(pH, qH)

rL = multiply(pL, qL)

multiply(p, q) = rH × x^n
               + (multiply(pH + pL, qH + qL) − rH − rL) × x^(n/2)
               + rL

Recurrence: $T(n) = 3T(n/2) + O(n)$

$T(n) = O(n^{\log_2 3}) = O(n^{1.585})$

multiply(A, B, n)

// assume n is a power of 2
if n = 1 then return (A[0]B[0])
AL ← A[0 .. n/2 − 1], AH ← A[n/2 .. n − 1]
BL ← B[0 .. n/2 − 1], BH ← B[n/2 .. n − 1]
CL ← multiply(AL, BL, n/2)
CH ← multiply(AH, BH, n/2)
CM ← multiply(AL + AH, BL + BH, n/2)
for i ← 0 to 2n − 2
    C[i] ← 0
for i ← 0 to n − 2
    C[i] ← C[i] + CL[i]
    C[i + n] ← C[i + n] + CH[i]
    C[i + n/2] ← C[i + n/2] + CM[i] − CL[i] − CH[i]
return C
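The three-multiplication scheme can be sketched in Python as below. The assumptions are the same as in the pseudocode (equal-length coefficient lists, length a power of 2, constant term first); the variable names are chosen for this illustration.

```python
def multiply(a, b):
    """Multiply polynomials a and b using three recursive half-size products.

    a, b: coefficient lists of equal length n, n a power of 2, constant term first.
    Returns the 2n - 1 coefficients of the product.
    """
    n = len(a)
    if n == 1:
        return [a[0] * b[0]]
    h = n // 2
    a_lo, a_hi = a[:h], a[h:]
    b_lo, b_hi = b[:h], b[h:]
    c_lo = multiply(a_lo, b_lo)                       # pL * qL
    c_hi = multiply(a_hi, b_hi)                       # pH * qH
    c_mid = multiply([x + y for x, y in zip(a_lo, a_hi)],
                     [x + y for x, y in zip(b_lo, b_hi)])  # (pL + pH)(qL + qH)
    c = [0] * (2 * n - 1)
    for i in range(n - 1):                            # each product has n - 1 coefficients
        c[i] += c_lo[i]
        c[i + n] += c_hi[i]
        c[i + h] += c_mid[i] - c_lo[i] - c_hi[i]      # middle term pH*qL + pL*qH
    return c


print(multiply([4, -5, 2, 3], [-5, 6, -3, 2]))
```

A practical implementation would likely fall back to the quadratic method for small n, since the recursion overhead dominates there; that cutoff is omitted here to stay close to the pseudocode.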

Strassen’s Algorithm for Matrix Multiplication

Matrix Multiplication

Input: two n × n matrices A and B

Output: C = AB

Naive Algorithm: matrix-multiplication(A, B, n)

for i ← 1 to n
    for j ← 1 to n
        C[i, j] ← 0
        for k ← 1 to n
            C[i, j] ← C[i, j] + A[i, k] × B[k, j]
return C

Running time: $O(n^3)$

Try to Use Divide-and-Conquer

Split A and B into four n/2 × n/2 blocks each:

$$A = \begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix}, \qquad B = \begin{pmatrix} B_{11} & B_{12} \\ B_{21} & B_{22} \end{pmatrix}$$

$$C = \begin{pmatrix} A_{11}B_{11} + A_{12}B_{21} & A_{11}B_{12} + A_{12}B_{22} \\ A_{21}B_{11} + A_{22}B_{21} & A_{21}B_{12} + A_{22}B_{22} \end{pmatrix}$$

matrix-multiplication(A, B) recursively calls matrix-multiplication(A11, B11), matrix-multiplication(A12, B21), ···

Recurrence for running time: $T(n) = 8T(n/2) + O(n^2)$

$T(n) = O(n^3)$

Strassen’s Algorithm

$T(n) = 8T(n/2) + O(n^2)$

Strassen’s Algorithm: improve the number of multiplications from 8 to 7!

New recurrence: $T(n) = 7T(n/2) + O(n^2)$

New running time? $T(n) = O(n^{\log_2 7}) = O(n^{2.808})$

Strassen’s Algorithm: Reduce 8 to 7

$M_1 := (A_{11} + A_{22})(B_{11} + B_{22})$
$M_2 := (A_{21} + A_{22})B_{11}$
$M_3 := A_{11}(B_{12} - B_{22})$
$M_4 := A_{22}(B_{21} - B_{11})$
$M_5 := (A_{11} + A_{12})B_{22}$
$M_6 := (A_{21} - A_{11})(B_{11} + B_{12})$
$M_7 := (A_{12} - A_{22})(B_{21} + B_{22})$

$$C = \begin{pmatrix} C_{11} & C_{12} \\ C_{21} & C_{22} \end{pmatrix}$$

$C_{11} = M_1 + M_4 - M_5 + M_7$
$C_{12} = M_3 + M_5$
$C_{21} = M_2 + M_4$
$C_{22} = M_1 - M_2 + M_3 + M_6$

Best known running time (as of these Spring 2016 lectures): $O(n^{2.373})$
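The seven products and four combinations can be sketched in pure Python on lists of lists. The helper names (`mat_add`, `mat_sub`, `split`, `strassen`) are illustrative, n is assumed to be a power of 2, and no cutoff to the naive algorithm is used, so this is meant to show the structure of the recursion, not to be fast.

```python
def mat_add(x, y):
    return [[p + q for p, q in zip(r, s)] for r, s in zip(x, y)]


def mat_sub(x, y):
    return [[p - q for p, q in zip(r, s)] for r, s in zip(x, y)]


def split(m):
    """Split a square matrix into its four n/2 x n/2 blocks (11, 12, 21, 22)."""
    h = len(m) // 2
    return ([r[:h] for r in m[:h]], [r[h:] for r in m[:h]],
            [r[:h] for r in m[h:]], [r[h:] for r in m[h:]])


def strassen(a, b):
    """Multiply two n x n matrices, n a power of 2, with 7 recursive products."""
    n = len(a)
    if n == 1:
        return [[a[0][0] * b[0][0]]]
    a11, a12, a21, a22 = split(a)
    b11, b12, b21, b22 = split(b)
    m1 = strassen(mat_add(a11, a22), mat_add(b11, b22))
    m2 = strassen(mat_add(a21, a22), b11)
    m3 = strassen(a11, mat_sub(b12, b22))
    m4 = strassen(a22, mat_sub(b21, b11))
    m5 = strassen(mat_add(a11, a12), b22)
    m6 = strassen(mat_sub(a21, a11), mat_add(b11, b12))
    m7 = strassen(mat_sub(a12, a22), mat_add(b21, b22))
    c11 = mat_add(mat_sub(mat_add(m1, m4), m5), m7)
    c12 = mat_add(m3, m5)
    c21 = mat_add(m2, m4)
    c22 = mat_add(mat_add(mat_sub(m1, m2), m3), m6)
    # stitch the four blocks back into one matrix
    top = [r + s for r, s in zip(c11, c12)]
    bottom = [r + s for r, s in zip(c21, c22)]
    return top + bottom


print(strassen([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
# prints [[19, 22], [43, 50]]
```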

Quicksort Example

Assumption: we can choose the median of an array in linear time.

29 82 75 64 38 45 94 69 25 76 15 92 37 17 85

After partitioning around the median, 64:

29 38 45 25 15 37 17 64 82 75 94 92 69 76 85

After partitioning the left part around its median, 29:

25 15 17 29 38 45 37 64 82 75 94 92 69 76 85

Quicksort

quicksort(A, n)

if n ≤ 1 return A
x ← median of A                                        // how?
AL ← elements in A that are less than x                // Divide
AR ← elements in A that are greater than x             // Divide
BL ← quicksort(AL, AL.size)                            // Conquer
BR ← quicksort(AR, AR.size)                            // Conquer
return BL concatenated with x concatenated with BR     // Combine

Recurrence: $T(n) = 2T(n/2) + O(n)$

Running time = $O(n \lg n)$

Quicksort in Practice

quicksort(A, n)

if n ≤ 1 return A
x ← pivot of A
AL ← elements in A that are less than x                // Divide
AR ← elements in A that are greater than x             // Divide
BL ← quicksort(AL, AL.size)                            // Conquer
BR ← quicksort(AR, AR.size)                            // Conquer
return BL concatenated with x concatenated with BR     // Combine

Ways to choose the pivot:

(a) first element of A: worst case running time = $\Theta(n^2)$

(b) middle element of A: worst case running time = $\Theta(n^2)$

Any deterministic way of choosing the pivot by position, as in (a) and (b), has worst-case running time $\Theta(n^2)$

Idea: use a random pivot

Randomized Algorithm Model

Assumption: we can choose an integer uniformly at random from {a, a + 1, ..., b}

Can computers really produce random numbers?

No! Computer programs are deterministic!

In practice: use a pseudo-random generator, a deterministic algorithm returning numbers that “look” random

In theory: assume the answer is yes.
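As a small illustration of the "in practice" point, the sketch below uses Python's standard `random` module (an assumption of this example, not something the slides prescribe). Two generators created with the same seed return the same "random" integers, which shows that the generator is a deterministic algorithm.

```python
import random

# Two pseudo-random generators started from the same (arbitrary) seed.
gen1 = random.Random(431)
gen2 = random.Random(431)

# randint(a, b) returns an integer in {a, a + 1, ..., b}, both ends included.
seq1 = [gen1.randint(1, 6) for _ in range(10)]
seq2 = [gen2.randint(1, 6) for _ in range(10)]

print(seq1 == seq2)   # prints "True": same seed, same sequence
```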

Randomized Quick-Sort

quicksort(A, n)

    if n ≤ 1 return A
    x ← random element of A
    AL ← elements in A that are less than x          // Divide
    AR ← elements in A that are greater than x       // Divide
    BL ← quicksort(AL, AL.size)                      // Conquer
    BR ← quicksort(AR, AR.size)                      // Conquer
    return the concatenation of BL, x, and BR        // Combine

T(n) = expected running time of sorting n elements

The pivot is the i-th smallest element with probability 1/n, so

$T(n) = \frac{1}{n} \sum_{i=1}^{n} \bigl( T(i-1) + T(n-i) \bigr) + O(n)$

T(n) = O(n lg n)
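The pseudocode above can be sketched in Python as follows. This is a minimal list-based version under the same assumption as the slides (distinct elements, so nothing equal to the pivot is lost); the function name and the optional `rng` parameter are hypothetical.

```python
import random


def randomized_quicksort(a, rng=random):
    """Return a sorted copy of a, choosing each pivot uniformly at random.

    Assumes the values in a are distinct, as in the pseudocode.
    """
    if len(a) <= 1:
        return list(a)
    x = rng.choice(a)                          # random pivot
    left = [v for v in a if v < x]             # Divide
    right = [v for v in a if v > x]            # Divide
    return (randomized_quicksort(left, rng)    # Conquer
            + [x]
            + randomized_quicksort(right, rng))  # Combine
```

Passing a seeded `random.Random` instance as `rng` makes a run reproducible, which can help when debugging.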

Quicksort: Average-Case Analysis

Deterministic ways to choose the pivot:

(a) first element of A: worst-case running time = Θ(n²)

(b) middle element of A: worst-case running time = Θ(n²)

What if the input array is already randomly permuted, i.e., all n! permutations are equally likely?

O(n lg n) expected time: this is equivalent to choosing the pivot randomly

The average-case running time of quicksort using (a) or (b) is O(n lg n)

Practical Issue

Deterministic ways to choose the pivot:

(a) first element of A: bad in practice (already sorted inputs are common and trigger the Θ(n²) worst case)

(b) middle element of A: good in practice

The average-case running time of quicksort using (a) or (b) is O(n lg n)

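Sort “in-Place”

Quicksort can be implemented as an “in-place” algorithm; standard merge sort cannot, because the merge step writes its output into a separate array:

    sorted inputs:  3 8 12 20 32 48   and   5 7 9 25 29
    merged output:  3 5 7 8 9 12 20 25 29 32 48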

Sort “in-Place”

[Figure: step-by-step in-place partition of the array
29 82 75 64 38 45 94 69 25 76 15 92 37 17 85.
The pivot 64 is first swapped to the front, giving
64 82 75 29 38 45 94 69 25 76 15 92 37 17 85.
Pointers i (starting at the left end) and j (starting at the right end) then move toward each other, swapping the pivot with each out-of-place element they find, until i and j meet at the pivot's final position.]

Sort “in-Place”

partition(A, ℓ, r)

    p ← random integer between ℓ and r
    swap A[p] and A[ℓ]
    i ← ℓ, j ← r
    while i < j do
        while i < j and A[i] ≤ A[j] do j ← j − 1
        swap A[i] and A[j]
        while i < j and A[i] ≤ A[j] do i ← i + 1
        swap A[i] and A[j]
    return i

quicksort(A, ℓ, r)

    if ℓ ≥ r return
    p ← partition(A, ℓ, r)
    quicksort(A, ℓ, p − 1)
    quicksort(A, p + 1, r)
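The two procedures above translate almost line by line into Python. The sketch below uses 0-based indices instead of the 1-based indices of the slides; the names `quicksort_in_place`, `lo`, `hi` and the optional `rng` parameter are choices of this example.

```python
import random


def partition(a, lo, hi, rng=random):
    """Partition a[lo..hi] in place around a random pivot; return its index."""
    p = rng.randint(lo, hi)            # random integer between lo and hi
    a[p], a[lo] = a[lo], a[p]          # move the pivot to the front
    i, j = lo, hi
    while i < j:
        # The pivot is at index i: skip elements on the right that are not smaller.
        while i < j and a[i] <= a[j]:
            j -= 1
        a[i], a[j] = a[j], a[i]        # the pivot moves to index j
        # The pivot is at index j: skip elements on the left that are not larger.
        while i < j and a[i] <= a[j]:
            i += 1
        a[i], a[j] = a[j], a[i]        # the pivot moves back to index i
    return i


def quicksort_in_place(a, lo=0, hi=None, rng=random):
    """Sort a[lo..hi] in place (the whole list by default)."""
    if hi is None:
        hi = len(a) - 1
    if lo >= hi:
        return
    p = partition(a, lo, hi, rng)
    quicksort_in_place(a, lo, p - 1, rng)
    quicksort_in_place(a, p + 1, hi, rng)


data = [29, 82, 75, 64, 38, 45, 94, 69, 25, 76, 15, 92, 37, 17, 85]
quicksort_in_place(data)
print(data)
```

Apart from the recursion stack, the only extra storage is a constant number of index variables, which is what "in-place" refers to here.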

Outline

1

Divide-and-Conquer

2

Solving Recurrences

3

Counting Inversions

4

Polynomial Multiplication

5

Strassen’s Algorithm for Matrix Multiplication

6

Quicksort

Randomized Quicksort

Average-Case Analysis

7

Lower Bound for Comparison-Based Sorting Algorithms

8

Selection Problem

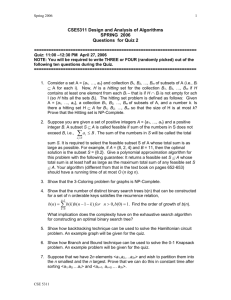

Comparison-Based Sorting Algorithms

Q: Can we do better than O(n log n) for sorting?

A: No, for comparison-based sorting algorithms.

Bob has one number x in his hand, x ∈ {1, 2, 3, ..., N}.

You can ask Bob “yes/no” questions about x.

Q: How many questions do you need to ask, in the worst case, in order to get the value of x?

A: ⌈log₂ N⌉.

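Comparison-Based Sorting Algorithms

[Figure: decision tree for N = 4. The root asks “x ≤ 2?”. On yes, the next question is “x = 1?”, with leaves 1 and 2. On no, the next question is “x = 3?”, with leaves 3 and 4.]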
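One strategy that meets the ⌈log₂ N⌉ bound is binary search: every question halves the set of remaining candidates. The sketch below is an illustration with hypothetical names; Bob is modelled as a function `answer(t)` that returns whether x ≤ t.

```python
def find_x(N, answer):
    """Find x in {1, ..., N} using questions of the form "is x <= t?".

    answer(t) must return True exactly when x <= t.
    Returns (x, number_of_questions_asked).
    """
    lo, hi = 1, N                  # x is known to lie in {lo, ..., hi}
    questions = 0
    while lo < hi:
        mid = (lo + hi) // 2
        questions += 1
        if answer(mid):            # "yes": x is in {lo, ..., mid}
            hi = mid
        else:                      # "no": x is in {mid + 1, ..., hi}
            lo = mid + 1
    return lo, questions


secret = 3
x, asked = find_x(4, lambda t: secret <= t)
print(x, asked)                    # prints "3 2"
```

For N = 4 this asks exactly 2 = ⌈log₂ 4⌉ questions, matching the depth of the decision tree in the figure.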

Comparison-Based Sorting Algorithms

Bob has a permutation π over {1, 2, 3, ..., n} in his hand.

You can ask Bob “yes/no” questions about π.

Q: How many questions do you need to ask, in the worst case, in order to get the permutation π?

A: ⌈log₂ n!⌉ = Θ(n lg n), since there are n! possible permutations

Comparison-Based Sorting Algorithms

Bob has a permutation π over {1, 2, 3, ..., n} in his hand.

You can ask Bob questions of the form “does i appear before j in π?”

Q: How many questions do you need to ask, in the worst case, in order to get the permutation π?

A: At least ⌈log₂ n!⌉ = Θ(n lg n), because these questions are a special case of “yes/no” questions. Every comparison made by a sorting algorithm is such a question, so comparison-based sorting needs Ω(n lg n) comparisons.
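The estimate log₂ n! = Θ(n lg n) used above can be checked with two elementary bounds. This short derivation is added for completeness and is not taken from the slides.

```latex
\begin{align*}
\log_2 n! &= \sum_{k=1}^{n} \log_2 k \;\le\; n \log_2 n, \\
\log_2 n! &\ge \sum_{k=\lceil n/2 \rceil}^{n} \log_2 k
          \;\ge\; \frac{n}{2} \log_2 \frac{n}{2}.
\end{align*}
```

The second line keeps only the at least n/2 largest terms of the sum, each of which is at least log₂(n/2). Both bounds are of order n lg n, so log₂ n! = Θ(n lg n).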

Selection Problem

Input: a set A of n (distinct) numbers, and 1 ≤ i ≤ n

Output: the i-th smallest number in A

Sorting solves the problem in time O(n lg n).

Our goal: O(n) running time

Recall: Randomized Quick-Sort

quicksort(A, n)

    if n ≤ 1 return A
    x ← random element of A
    AL ← elements in A that are less than x          // Divide
    AR ← elements in A that are greater than x       // Divide
    BL ← quicksort(AL, AL.size)                      // Conquer
    BR ← quicksort(AR, AR.size)                      // Conquer
    return the concatenation of BL, x, and BR        // Combine

Randomized Algorithm for Selection

select(A, n, i)

    if n = 1 return A[1]
    x ← random element of A
    AL ← elements in A that are less than x
    AR ← elements in A that are greater than x
    if i ≤ AL.size then
        return select(AL, AL.size, i)
    else if i = AL.size + 1 then
        return x
    else
        return select(AR, AR.size, i − AL.size − 1)
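A direct Python rendering of this pseudocode might look as follows. It is a sketch that assumes distinct numbers, as in the problem statement; the name `randomized_select`, the input check and the `rng` parameter are additions of this example.

```python
import random


def randomized_select(a, i, rng=random):
    """Return the i-th smallest (1-indexed) of the distinct numbers in a."""
    if not 1 <= i <= len(a):
        raise ValueError("i must satisfy 1 <= i <= len(a)")
    if len(a) == 1:
        return a[0]
    x = rng.choice(a)                          # random pivot
    left = [v for v in a if v < x]
    right = [v for v in a if v > x]
    if i <= len(left):                         # answer is among the smaller elements
        return randomized_select(left, i, rng)
    if i == len(left) + 1:                     # the pivot itself is the answer
        return x
    # Skip the len(left) smaller elements and the pivot.
    return randomized_select(right, i - len(left) - 1, rng)


print(randomized_select([29, 82, 75, 64, 38], 2))   # prints "38"
```

Unlike quicksort, only one of the two sides is explored after each partition, which is why the expected running time drops from O(n lg n) to O(n).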

Deterministic Algorithm for Selection

select(A, n, i)

    if n is small enough, run a simple algorithm (e.g., sort A) and return its answer
    partition A into groups of size 3
    for each group, pick the median of the group
    call select to find the median x of the ⌈n/3⌉ picked medians
    AL ← numbers in A that are less than x
    AR ← numbers in A that are greater than x
    if i ≤ AL.size then
        return select(AL, AL.size, i)
    else if i = AL.size + 1 then
        return x
    else
        return select(AR, AR.size, i − AL.size − 1)
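The same procedure can be sketched in Python with the group size as a parameter. The function name, the default `group_size=5`, and the cutoff `2 * group_size` for "small enough" are assumptions of this example; the numbers are assumed distinct.

```python
def deterministic_select(a, i, group_size=5):
    """Return the i-th smallest (1-indexed) of the distinct numbers in a.

    The pivot is the median of the group medians, so no randomness is used.
    """
    if not 1 <= i <= len(a):
        raise ValueError("i must satisfy 1 <= i <= len(a)")
    # Small instance: the "simple algorithm" here is sorting.
    if len(a) <= 2 * group_size:
        return sorted(a)[i - 1]
    # Partition a into groups (the last group may be smaller).
    groups = [a[k:k + group_size] for k in range(0, len(a), group_size)]
    # Pick the (lower) median of every group.
    medians = [sorted(g)[(len(g) - 1) // 2] for g in groups]
    # Recursively find the median x of the picked medians.
    x = deterministic_select(medians, (len(medians) + 1) // 2, group_size)
    left = [v for v in a if v < x]
    right = [v for v in a if v > x]
    if i <= len(left):
        return deterministic_select(left, i, group_size)
    if i == len(left) + 1:
        return x
    return deterministic_select(right, i - len(left) - 1, group_size)
```

Calling it with `group_size=3` reproduces the pseudocode above. The result is the same for either group size; only the running-time guarantee differs.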

Running Time

Groups of size 3: T(n) ≤ T(n/3) + T(2n/3) + O(n)

T(n) = O(n lg n) (not good!)

Groups of size 5 instead of 3: T(n) ≤ T(n/5) + T(7n/10) + O(n)

T(n) = O(n) (good!)
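The linear bound for groups of size 5 can be verified by induction. The sketch below writes the O(n) term as cn for a constant c, which is notation introduced here, and guesses T(n) ≤ 10cn.

```latex
\begin{align*}
T(n) &\le T\!\left(\tfrac{n}{5}\right) + T\!\left(\tfrac{7n}{10}\right) + cn \\
     &\le 10c \cdot \tfrac{n}{5} + 10c \cdot \tfrac{7n}{10} + cn \\
     &= 2cn + 7cn + cn = 10cn.
\end{align*}
```

The argument works because the two subproblem sizes sum to n/5 + 7n/10 = 9n/10, a constant fraction below n. With groups of size 3 the sizes sum to n/3 + 2n/3 = n, so the work does not shrink from one level of recursion to the next and the recurrence only gives O(n lg n).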