Document 10557255

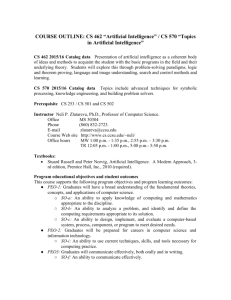

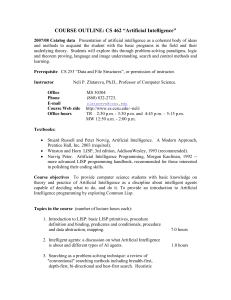
